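Contents

- I. Building the Dataset

- 1. Understanding Dataset and DataLoader

- 2. torch.utils.data.Dataset

- 3. torch.utils.data.DataLoader

- 4. Code Walkthrough

- 5. Complete Code

- 6. Visualization

- II. Building the Model

- III. Defining the Loss Function and Optimizer

- IV. Training

- References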

I. Building the Dataset

1. Understanding Dataset and DataLoader

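PyTorch provides a convenient data-loading mechanism: combining a Dataset with a DataLoader yields a data iterator. During training, this iterator produces one batch at a time, and preprocessing or data augmentation can be applied to each sample as it is loaded.

- To define a custom way of reading data, subclass torch.utils.data.Dataset and wrap an instance of it in torch.utils.data.DataLoader.
- torch.utils.data.Dataset represents the dataset itself; by overriding its methods in a subclass you can implement any kind of data reading and preprocessing.
- torch.utils.data.DataLoader wraps a Dataset object and turns it into an iterator that yields the data, using either a single process or multiple worker processes.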

2. torch.utils.data.Dataset

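torch.utils.data.Dataset is the base class for custom datasets. To define your own dataset, subclass it and override the two methods below. Strictly speaking only __getitem__() is mandatory, but __len__() is required by the default samplers that DataLoader uses, so in practice both are implemented.

- __len__(): returns the size of the dataset.
- __getitem__(): returns the sample at a given index. Per-sample preprocessing can also be done here.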
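To make the two methods concrete, here is a minimal sketch of a map-style dataset. SquaresDataset is a hypothetical toy class (not part of this post's code) that builds each sample on the fly inside __getitem__:

```python
import torch
from torch.utils.data import Dataset


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: sample i is the pair (i, i**2) as float tensors."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        # Number of samples; DataLoader's default samplers rely on this
        return self.n

    def __getitem__(self, idx):
        # Any per-sample preprocessing would go here
        if not 0 <= idx < self.n:
            raise IndexError(idx)
        x = torch.tensor(float(idx))
        return x, x ** 2


ds = SquaresDataset(5)
print(len(ds))  # 5
print(ds[3])    # (tensor(3.), tensor(9.))
```

DataLoader only relies on these two methods: it uses __len__ to know which indices to sample and calls __getitem__ once per index.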

3. torch.utils.data.DataLoader

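- DataLoader wraps a Dataset object (or an object of a custom dataset class) in an iterator;
- this iterator yields the contents of the Dataset batch by batch;
- it also supports multi-process loading, shuffling, different sampling strategies, and collating individual samples into batches (collate_fn).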
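A small sketch of batching, shuffling and collating, using the built-in TensorDataset on hypothetical toy tensors instead of NYUv2 images. list_collate is an illustrative collate_fn written for this example, not a library function:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Ten hypothetical samples: 3-dim feature vectors with integer labels
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
labels = torch.arange(10)
dataset = TensorDataset(features, labels)

# The default collate_fn stacks the samples of a batch along a new first dimension
loader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0)
for xb, yb in loader:
    print(xb.shape, yb.shape)
# torch.Size([4, 3]) torch.Size([4])
# torch.Size([4, 3]) torch.Size([4])
# torch.Size([2, 3]) torch.Size([2])   (the last batch is smaller unless drop_last=True)


# A custom collate_fn controls how samples are merged into a batch;
# this one keeps the features as a Python list instead of stacking them
def list_collate(batch):
    xs, ys = zip(*batch)
    return list(xs), torch.stack(ys)


list_loader = DataLoader(dataset, batch_size=4, collate_fn=list_collate)
xs, ys = next(iter(list_loader))
print(type(xs), len(xs), ys)  # <class 'list'> 4 tensor([0, 1, 2, 3])
```

With the default collate_fn every sample in a batch must have the same shape, so images and labels need a common size before they reach a DataLoader that uses it.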

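4. Code Walkthrough

- The custom dataset class is NYUv2_Dataset, which inherits from torch.utils.data.Dataset.

```python
import cv2
from torch.utils.data import Dataset


class NYUv2_Dataset(Dataset):
    def __init__(self, data_root, img_transforms):
        # data_root: path of the split file (e.g. train.txt) that lists the image names
        self.data_root = data_root
        self.img_transforms = img_transforms
        data_list, label_list = read_image_path(root=self.data_root)
        self.data_list = data_list
        self.label_list = label_list

    def __len__(self):
        return len(self.data_list)

    def __getitem__(self, item):
        img = self.data_list[item]
        label = self.label_list[item]
        # cv2.imread returns BGR; convert to RGB to match the ImageNet-pretrained VGG19
        img = cv2.cvtColor(cv2.imread(img), cv2.COLOR_BGR2RGB)
        label = cv2.imread(label)
        img, label = self.img_transforms(img, label)
        return img, label
```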
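__getitem__ is also where data augmentation would go. For segmentation, any geometric transform must be applied identically to the image and its label, otherwise pixels no longer line up with their classes. A minimal sketch with a hypothetical joint_random_hflip helper (not part of the original code), which could be called on the NumPy arrays before self.img_transforms:

```python
import numpy as np


def joint_random_hflip(img, label, p=0.5, rng=None):
    """Hypothetical helper: flip an (H, W, C) image and its label map horizontally together.

    A single random decision is used for both, so every pixel keeps its class.
    """
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < p:
        # Axis 1 is the width axis for both (H, W, C) and (H, W) arrays.
        # .copy() is needed because torch.from_numpy rejects the negative strides of a reversed view.
        img = img[:, ::-1].copy()
        label = label[:, ::-1].copy()
    return img, label


img = np.arange(12, dtype=np.uint8).reshape(2, 2, 3)
label = np.array([[0, 1], [2, 3]])
f_img, f_label = joint_random_hflip(img, label, p=1.0)
print(f_label.tolist())  # [[1, 0], [3, 2]]
```

Photometric changes such as brightness or contrast jitter, by contrast, should be applied to the image only, never to the label.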

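- NYUv2_Dataset calls read_image_path(). Its input is the path of a split file (train.txt or val.txt, one image name per line); its output is the list of image paths and the list of label paths.

```python
import numpy as np


# Build the image and label file paths listed in a split file
def read_image_path(root=r"C:\Users\22476\Desktop\VOC_format_dataset\ImageSet\train.txt"):
    # Paths of the original images are returned as data, paths of the label images as label
    image = np.loadtxt(root, dtype=str, ndmin=1)  # ndmin=1 keeps a one-line file as a 1-D array
    n = len(image)
    data, label = [None] * n, [None] * n
    for i, fname in enumerate(image):
        data[i] = r"C:\Users\22476\Desktop\VOC_format_dataset\JPEGImages\%s.jpg" % fname
        label[i] = r"C:\Users\22476\Desktop\VOC_format_dataset\Segmentation_40\%s.png" % fname
    return data, label
```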
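The image and label folders above are hard-coded absolute Windows paths. A sketch of a more portable variant, assuming the same folder layout (`<root>/ImageSet/train.txt`, `<root>/JPEGImages/<name>.jpg`, `<root>/Segmentation_40/<name>.png`); read_image_path_from_split is a hypothetical name, not from the original post:

```python
import tempfile
from pathlib import Path


def read_image_path_from_split(split_file):
    """Hypothetical variant of read_image_path that derives the folders from the split file.

    Assumes the layout <root>/ImageSet/<split>.txt, <root>/JPEGImages, <root>/Segmentation_40.
    """
    split_file = Path(split_file)
    root = split_file.parent.parent  # <root>/ImageSet/train.txt -> <root>
    names = [line.strip() for line in split_file.read_text().splitlines() if line.strip()]
    data = [str(root / "JPEGImages" / f"{name}.jpg") for name in names]
    label = [str(root / "Segmentation_40" / f"{name}.png") for name in names]
    return data, label


# Usage on a throwaway directory with a two-line split file
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "ImageSet").mkdir()
    (Path(tmp) / "ImageSet" / "train.txt").write_text("0001\n0002\n")
    data, label = read_image_path_from_split(Path(tmp) / "ImageSet" / "train.txt")
    print([Path(p).name for p in data])  # ['0001.jpg', '0002.jpg']
```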

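- NYUv2_Dataset also uses img_transforms(), which converts the original image and the label to tensors and turns the color-coded label image into a 2-D map in which each pixel value is a class index.

```python
import numpy as np
import torch
from torchvision import transforms


def image2label(image, colormap):
    # Convert the label image into a map in which each pixel holds a class index
    # Lookup table indexed by the color encoded as a base-256 number: (R*256 + G)*256 + B
    cm2lbl = np.zeros(256 ** 3, dtype=np.int64)
    for i, cm in enumerate(colormap):
        cm2lbl[(cm[0] * 256 + cm[1]) * 256 + cm[2]] = i
    # Convert one image (cv2 loads channels as BGR; irrelevant for NYUv2 because R = G = B)
    image = np.array(image, dtype="int64")
    ix = (image[:, :, 0] * 256 + image[:, :, 1]) * 256 + image[:, :, 2]
    image2 = cm2lbl[ix]
    return image2


def img_transforms(data, label):
    # Turn the label image into a 2-D class-index map
    # Return the original image and the class labels as tensors
    data_tfs = transforms.Compose([
        transforms.ToTensor(),  # HWC uint8 in [0, 255] -> CHW float in [0, 1]
    ])
    data = data_tfs(data)
    # torch.from_numpy shares memory with the array: in-place changes to one show up in the other
    label = torch.from_numpy(image2label(label, colormap))
    return data, label
```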
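Why the lookup key is built as (R*256 + G)*256 + B: treating the three channels as digits of a base-256 number gives every color its own key, while a plain weighted sum such as R*256 + G*256 + B is not unique. The sketch below shows the collision and also a memory-light lookup with np.searchsorted instead of a 256³-entry table; encode_rgb and summed_key are illustrative names, and the lookup assumes every pixel color appears in colormap:

```python
import numpy as np


def encode_rgb(r, g, b):
    # Base-256 encoding: unique for every (R, G, B) color
    return (r * 256 + g) * 256 + b


def summed_key(r, g, b):
    # Plain weighted sum: NOT unique, different colors can share a key
    return r * 256 + g * 256 + b


print(encode_rgb(1, 0, 0), encode_rgb(0, 1, 0))  # 65536 256
print(summed_key(1, 0, 0), summed_key(0, 1, 0))  # 256 256 (collision)

# NYUv2-style gray labels: class i is stored as (i, i, i)
colormap = [[i, i, i] for i in range(41)]
gray = np.array([[0, 5], [40, 5]], dtype=np.int64)
label_img = np.stack([gray, gray, gray], axis=-1)  # (H, W, 3)

keys = np.array([encode_rgb(*c) for c in colormap])  # one key per class, in class order
order = np.argsort(keys)
enc = encode_rgb(label_img[..., 0], label_img[..., 1], label_img[..., 2])
class_map = order[np.searchsorted(keys[order], enc)]  # assumes all colors are in colormap
print(class_map.tolist())  # [[0, 5], [40, 5]]
```

For gray labels like these the result simply equals one channel of the label image, so reading the label with a single channel would give the same class map.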

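5. Complete Code

The complete code for building the NYUv2 dataset is shown below. colormap lists the pixel values (i.e. the classes) used in the NYUv2 segmentation labels: class i is stored as the gray value (i, i, i), for 41 classes (0 to 40). The code reads the data, preprocesses it, and creates the DataLoaders.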

```python
import cv2
import numpy as np
import torch
import torch.utils.data as Data
from torch.utils.data import Dataset
from torchvision import transforms

# Pixel values of the NYUv2 segmentation labels: class i is stored as (i, i, i), 41 classes (0-40)
colormap = [[i, i, i] for i in range(41)]

# Select the compute device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def image2label(image, colormap):
    # Convert the label image into a map in which each pixel holds a class index
    # Lookup table indexed by the color encoded as (R*256 + G)*256 + B
    cm2lbl = np.zeros(256 ** 3, dtype=np.int64)
    for i, cm in enumerate(colormap):
        cm2lbl[(cm[0] * 256 + cm[1]) * 256 + cm[2]] = i
    # Convert one image (cv2 loads channels as BGR; irrelevant here because R = G = B)
    image = np.array(image, dtype="int64")
    ix = (image[:, :, 0] * 256 + image[:, :, 1]) * 256 + image[:, :, 2]
    return cm2lbl[ix]


# Build the image and label file paths listed in a split file
def read_image_path(root=r"C:\Users\22476\Desktop\VOC_format_dataset\ImageSet\train.txt"):
    # Paths of the original images are returned as data, paths of the label images as label
    image = np.loadtxt(root, dtype=str, ndmin=1)
    n = len(image)
    data, label = [None] * n, [None] * n
    for i, fname in enumerate(image):
        data[i] = r"C:\Users\22476\Desktop\VOC_format_dataset\JPEGImages\%s.jpg" % fname
        label[i] = r"C:\Users\22476\Desktop\VOC_format_dataset\Segmentation_40\%s.png" % fname
    return data, label


# Transform one (image, label) pair
def img_transforms(data, label):
    # The input image only goes through ToTensor (no brightness/contrast/saturation/hue jitter);
    # the label image is converted into a 2-D class-index map.
    # Returns the original image and the class labels as tensors.
    data_tfs = transforms.Compose([
        transforms.ToTensor(),
    ])
    data = data_tfs(data)
    # torch.from_numpy shares memory with the array: in-place changes to one show up in the other
    label = torch.from_numpy(image2label(label, colormap))
    return data, label


class NYUv2_Dataset(Dataset):
    def __init__(self, data_root, img_transforms):
        self.data_root = data_root
        self.img_transforms = img_transforms
        data_list, label_list = read_image_path(root=self.data_root)
        self.data_list = data_list
        self.label_list = label_list

    def __len__(self):
        return len(self.data_list)

    def __getitem__(self, item):
        img = self.data_list[item]
        label = self.label_list[item]
        # cv2.imread returns BGR; convert to RGB to match the ImageNet-pretrained VGG19
        img = cv2.cvtColor(cv2.imread(img), cv2.COLOR_BGR2RGB)
        label = cv2.imread(label)
        img, label = self.img_transforms(img, label)
        return img, label


nyuv2_train = NYUv2_Dataset(r"C:\Users\22476\Desktop\VOC_format_dataset\ImageSet\train.txt", img_transforms)
nyuv2_val = NYUv2_Dataset(r"C:\Users\22476\Desktop\VOC_format_dataset\ImageSet\val.txt", img_transforms)

# Create the data loaders; each batch holds 2 images
train_loader = Data.DataLoader(nyuv2_train, batch_size=2, shuffle=True, num_workers=0, pin_memory=True)
# The validation order does not affect the validation loss, so no shuffling is needed
val_loader = Data.DataLoader(nyuv2_val, batch_size=2, shuffle=False, num_workers=0, pin_memory=True)
```

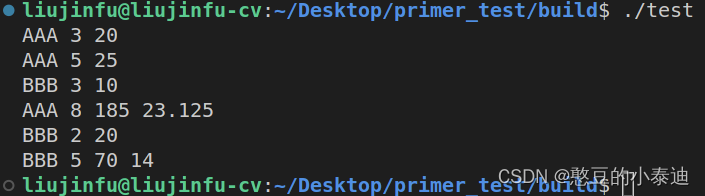

6. Visualization

Visualize one batch of data to check that the preprocessing is correct.

```python
import matplotlib.pyplot as plt

# Take one batch from the training loader and check the sample dimensions
b_x, b_y = next(iter(train_loader))
# Print the sizes and data types of the training images and labels
print("b_x:", b_x.shape, b_x.dtype)  # expected (batch, 3, H, W)
print("b_y:", b_y.shape, b_y.dtype)  # expected (batch, H, W)

# Visualize one sample of the batch to check that preprocessing is correct
b_x_numpy = b_x.numpy().transpose(0, 2, 3, 1)  # NCHW -> NHWC for plt.imshow
plt.imshow(b_x_numpy[1])
plt.show()

b_y_numpy = b_y.numpy()
plt.imshow(b_y_numpy[1])
plt.show()
```
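By default plt.imshow rescales each label map to its own minimum and maximum class, so the same class can appear in different colors from one image to the next. A hypothetical colorize helper with a fixed palette (an assumption, not part of the original code) keeps the colors consistent; it could be used as plt.imshow(colorize(b_y_numpy[1])):

```python
import numpy as np


def colorize(label_map, num_classes=41, seed=0):
    """Hypothetical helper: map an (H, W) array of class indices to an (H, W, 3) uint8 RGB image.

    A fixed random palette (seeded) gives each class the same color in every image.
    """
    rng = np.random.default_rng(seed)
    palette = rng.integers(0, 256, size=(num_classes, 3)).astype(np.uint8)
    palette[0] = 0  # draw class 0 in black
    return palette[label_map]  # fancy indexing: one palette row per pixel


label_map = np.array([[0, 3], [3, 40]])
rgb = colorize(label_map)
print(rgb.shape)  # (2, 2, 3)
```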

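II. Building the Model

This post uses an ImageNet-pretrained VGG19 as the backbone and builds the upsampling part of the network by hand; together they form the complete semantic segmentation network.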

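```python
from torchvision.models import vgg19

high, width = 320, 480  # input height and width used for summary()

# Use a pretrained VGG19 network as the backbone
# (torchvision >= 0.13 deprecates pretrained=True in favor of weights=VGG19_Weights.IMAGENET1K_V1)
model_vgg19 = vgg19(pretrained=True)
# Drop VGG19's trailing AdaptiveAvgPool2d and Linear layers; keep only the convolutional part
base_model = model_vgg19.features
base_model = base_model.to(device)  # .to(device) instead of .cuda() so the code also runs on CPU
# summary(base_model, input_size=(3, high, width))
```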

```python
import torch.nn as nn
from torchsummary import summary  # third-party package "torchsummary"
from torchvision.models import vgg19


# Define the FCN semantic segmentation network
class FCN8s(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        self.num_classes = num_classes
        model_vgg19 = vgg19(pretrained=True)
        # Drop VGG19's trailing AdaptiveAvgPool2d and Linear layers
        self.base_model = model_vgg19.features
        # Decoder layers: each transposed convolution doubles the spatial size of the feature map
        self.relu = nn.ReLU(inplace=True)
        self.deconv1 = nn.ConvTranspose2d(512, 512, kernel_size=3, stride=2, padding=1, dilation=1, output_padding=1)
        self.bn1 = nn.BatchNorm2d(512)
        self.deconv2 = nn.ConvTranspose2d(512, 256, kernel_size=3, stride=2, padding=1, output_padding=1)
        self.bn2 = nn.BatchNorm2d(256)
        self.deconv3 = nn.ConvTranspose2d(256, 128, kernel_size=3, stride=2, padding=1, output_padding=1)
        self.bn3 = nn.BatchNorm2d(128)
        self.deconv4 = nn.ConvTranspose2d(128, 64, kernel_size=3, stride=2, padding=1, output_padding=1)
        self.bn4 = nn.BatchNorm2d(64)
        self.deconv5 = nn.ConvTranspose2d(64, 32, kernel_size=3, stride=2, padding=1, output_padding=1)
        self.bn5 = nn.BatchNorm2d(32)
        self.classifier = nn.Conv2d(32, num_classes, kernel_size=1)
        # Indices of the MaxPool2d layers in vgg19().features
        self.layers = {"4": "maxpool_1", "9": "maxpool_2",
                       "18": "maxpool_3", "27": "maxpool_4",
                       "36": "maxpool_5"}

    def forward(self, x):
        output = {}
        for name, layer in self.base_model.named_children():
            # Pass the input through the VGG19 layers one by one
            x = layer(x)
            # Keep the outputs of the pooling layers listed in self.layers
            if name in self.layers:
                output[self.layers[name]] = x
        x5 = output["maxpool_5"]  # size = (N, 512, H/32, W/32)
        x4 = output["maxpool_4"]  # size = (N, 512, H/16, W/16)
        x3 = output["maxpool_3"]  # size = (N, 256, H/8, W/8)
        # Transposed convolutions progressively enlarge the features back to the input size
        score = self.relu(self.deconv1(x5))     # size = (N, 512, H/16, W/16)
        score = self.bn1(score + x4)            # element-wise sum with the pool4 features
        score = self.relu(self.deconv2(score))  # size = (N, 256, H/8, W/8)
        score = self.bn2(score + x3)            # element-wise sum with the pool3 features
        score = self.bn3(self.relu(self.deconv3(score)))  # size = (N, 128, H/4, W/4)
        score = self.bn4(self.relu(self.deconv4(score)))  # size = (N, 64, H/2, W/2)
        score = self.bn5(self.relu(self.deconv5(score)))  # size = (N, 32, H, W)
        score = self.classifier(score)
        return score  # size = (N, num_classes, H, W)


fcn8s = FCN8s(41).to(device)
# torchsummary's summary() defaults to device="cuda"; pass the actual device type so it also runs on CPU
summary(fcn8s, input_size=(3, high, width), device=device.type)
```
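The element-wise sums with x4 and x3 only work if every upsampled map has exactly the size of the skip feature it is added to, and the final output must match the label size. The sketch below applies the output-size formula from the nn.ConvTranspose2d documentation; check_fcn_input is an illustrative helper, and it assumes the floor behavior of VGG19's 2x2, stride-2 max pooling:

```python
def conv_transpose_out(size, kernel_size=3, stride=2, padding=1, output_padding=1, dilation=1):
    # Output-size formula of nn.ConvTranspose2d for one spatial dimension
    return (size - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1


def check_fcn_input(h, w):
    """Illustrative check that the FCN8s skip connections line up for an h x w input."""
    for size in (h, w):
        x5, x4, x3 = size // 32, size // 16, size // 8  # maxpool_5 / maxpool_4 / maxpool_3
        up = conv_transpose_out(x5)                     # deconv1, added to x4
        if up != x4 or conv_transpose_out(up) != x3:    # deconv2, added to x3
            return False
        out = x3
        for _ in range(3):                              # deconv3, deconv4, deconv5
            out = conv_transpose_out(out)
        if out != size:                                 # prediction must match the label size
            return False
    return True


print(conv_transpose_out(10))     # 20: with these settings each deconv doubles the size
print(check_fcn_input(320, 480))  # True
print(check_fcn_input(330, 480))  # False
```

With kernel_size=3, stride=2, padding=1 and output_padding=1 the formula reduces to 2 × input size, so the check passes exactly when height and width are multiples of 32, as 320 and 480 are.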

The model structure, as printed by summary(), is shown in the table below:

| Layer (type) | Output Shape | Param # |
|---|---|---|
| Conv2d-1 | [-1, 64, 320, 480] | 1,792 |
| ReLU-2 | [-1, 64, 320, 480] | 0 |
| Conv2d-3 | [-1, 64, 320, 480] | 36,928 |
| ReLU-4 | [-1, 64, 320, 480] | 0 |
| MaxPool2d-5 | [-1, 64, 160, 240] | 0 |
| Conv2d-6 | [-1, 128, 160, 240] | 73,856 |
| ReLU-7 | [-1, 128, 160, 240] | 0 |
| Conv2d-8 | [-1, 128, 160, 240] | 147,584 |
| ReLU-9 | [-1, 128, 160, 240] | 0 |
| MaxPool2d-10 | [-1, 128, 80, 120] | 0 |
| Conv2d-11 | [-1, 256, 80, 120] | 295,168 |
| ReLU-12 | [-1, 256, 80, 120] | 0 |
| Conv2d-13 | [-1, 256, 80, 120] | 590,080 |
| ReLU-14 | [-1, 256, 80, 120] | 0 |
| Conv2d-15 | [-1, 256, 80, 120] | 590,080 |
| ReLU-16 | [-1, 256, 80, 120] | 0 |
| Conv2d-17 | [-1, 256, 80, 120] | 590,080 |
| ReLU-18 | [-1, 256, 80, 120] | 0 |
| MaxPool2d-19 | [-1, 256, 40, 60] | 0 |
| Conv2d-20 | [-1, 512, 40, 60] | 1,180,160 |
| ReLU-21 | [-1, 512, 40, 60] | 0 |
| Conv2d-22 | [-1, 512, 40, 60] | 2,359,808 |
| ReLU-23 | [-1, 512, 40, 60] | 0 |
| Conv2d-24 | [-1, 512, 40, 60] | 2,359,808 |
| ReLU-25 | [-1, 512, 40, 60] | 0 |
| Conv2d-26 | [-1, 512, 40, 60] | 2,359,808 |
| ReLU-27 | [-1, 512, 40, 60] | 0 |
| MaxPool2d-28 | [-1, 512, 20, 30] | 0 |
| Conv2d-29 | [-1, 512, 20, 30] | 2,359,808 |
| ReLU-30 | [-1, 512, 20, 30] | 0 |
| Conv2d-31 | [-1, 512, 20, 30] | 2,359,808 |
| ReLU-32 | [-1, 512, 20, 30] | 0 |
| Conv2d-33 | [-1, 512, 20, 30] | 2,359,808 |
| ReLU-34 | [-1, 512, 20, 30] | 0 |
| Conv2d-35 | [-1, 512, 20, 30] | 2,359,808 |
| ReLU-36 | [-1, 512, 20, 30] | 0 |
| MaxPool2d-37 | [-1, 512, 10, 15] | 0 |
| ConvTranspose2d-38 | [-1, 512, 20, 30] | 2,359,808 |
| ReLU-39 | [-1, 512, 20, 30] | 0 |
| BatchNorm2d-40 | [-1, 512, 20, 30] | 1,024 |
| ConvTranspose2d-41 | [-1, 256, 40, 60] | 1,179,904 |
| ReLU-42 | [-1, 256, 40, 60] | 0 |
| BatchNorm2d-43 | [-1, 256, 40, 60] | 512 |
| ConvTranspose2d-44 | [-1, 128, 80, 120] | 295,040 |
| ReLU-45 | [-1, 128, 80, 120] | 0 |
| BatchNorm2d-46 | [-1, 128, 80, 120] | 256 |
| ConvTranspose2d-47 | [-1, 64, 160, 240] | 73,792 |
| ReLU-48 | [-1, 64, 160, 240] | 0 |
| BatchNorm2d-49 | [-1, 64, 160, 240] | 128 |
| ConvTranspose2d-50 | [-1, 32, 320, 480] | 18,464 |
| ReLU-51 | [-1, 32, 320, 480] | 0 |
| BatchNorm2d-52 | [-1, 32, 320, 480] | 64 |
| Conv2d-53 | [-1, 41, 320, 480] | 1,353 |
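The Param # column of the upsampling part can be reproduced by hand: a convolution or transposed convolution with bias has in × out × k × k weights plus one bias per output channel, and BatchNorm2d learns a scale and a shift per channel (its running statistics are buffers, not parameters). conv_params and bn_params are illustrative helper names:

```python
def conv_params(in_ch, out_ch, k):
    # Conv2d / ConvTranspose2d with bias: one k x k kernel per (in, out) pair + one bias per output channel
    return in_ch * out_ch * k * k + out_ch


def bn_params(ch):
    # BatchNorm2d: learnable weight (scale) and bias (shift) per channel
    return 2 * ch


print(conv_params(512, 512, 3))  # 2359808 (ConvTranspose2d-38)
print(conv_params(512, 256, 3))  # 1179904 (ConvTranspose2d-41)
print(conv_params(32, 41, 1))    # 1353 (Conv2d-53)
print(bn_params(512))            # 1024 (BatchNorm2d-40)

# Total trainable parameters added by the decoder on top of the VGG19 features
decoder = (conv_params(512, 512, 3) + conv_params(512, 256, 3) + conv_params(256, 128, 3)
           + conv_params(128, 64, 3) + conv_params(64, 32, 3) + conv_params(32, 41, 1)
           + bn_params(512) + bn_params(256) + bn_params(128) + bn_params(64) + bn_params(32))
print(decoder)  # 3930345
```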

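III. Defining the Loss Function and Optimizer

```python
import torch.nn as nn
import torch.optim as optim

# Define the loss function and the optimizer
LR = 0.0003
# NLLLoss expects log-probabilities, so the model output is passed through F.log_softmax during training
criterion = nn.NLLLoss()
optimizer = optim.Adam(fcn8s.parameters(), lr=LR, weight_decay=1e-4)
```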
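nn.NLLLoss applied to F.log_softmax(out, dim=1) computes the same value as nn.CrossEntropyLoss applied to the raw logits, so either form could be used. For segmentation the input has shape (N, C, H, W) and the target holds one int64 class index per pixel with shape (N, H, W), which is why the training loop casts the labels with .long(). A quick check on random tensors (sizes chosen arbitrarily):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
N, C, H, W = 2, 41, 4, 6
logits = torch.randn(N, C, H, W)         # raw model output, shape (N, C, H, W)
target = torch.randint(0, C, (N, H, W))  # class index per pixel, dtype int64

nll = nn.NLLLoss()(F.log_softmax(logits, dim=1), target)
ce = nn.CrossEntropyLoss()(logits, target)
print(torch.allclose(nll, ce))  # True
```

With the default reduction="mean", both losses are averaged over all N × H × W pixels.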

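IV. Training

```python
import torch

# train_model() is defined below; it must be defined before this call is executed
# Train the model for num_epochs passes over the whole training set
fcn8s, train_process = train_model(
    fcn8s, criterion, optimizer, train_loader,
    val_loader, num_epochs=200)
# Save the trained fcn8s model (the whole module; saving fcn8s.state_dict() is the more portable option)
torch.save(fcn8s, "fcnNYUv2.pt")
```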

```python
import copy
import time

import pandas as pd
import torch
import torch.nn.functional as F


def train_model(model, criterion, optimizer, traindataloader, valdataloader, num_epochs=25):
    """
    :param model: network model
    :param criterion: loss function
    :param optimizer: optimizer
    :param traindataloader: DataLoader of the training set
    :param valdataloader: DataLoader of the validation set
    :param num_epochs: number of training epochs
    Uses the global `device` defined together with the dataset.
    """
    best_model_wts = copy.deepcopy(model.state_dict())
    best_loss = 1e10
    train_loss_all = []
    val_loss_all = []
    since = time.time()
    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)
        train_loss = 0.0
        train_num = 0
        val_loss = 0.0
        val_num = 0
        # Each epoch has a training phase and a validation phase
        model.train()  # set the model to training mode
        for step, (b_x, b_y) in enumerate(traindataloader):
            optimizer.zero_grad()
            b_x = b_x.float().to(device)
            b_y = b_y.long().to(device)
            out = F.log_softmax(model(b_x), dim=1)
            loss = criterion(out, b_y)  # loss value
            loss.backward()
            optimizer.step()
            train_loss += loss.item() * len(b_y)
            train_num += len(b_y)
        # Average training loss of this epoch
        train_loss_all.append(train_loss / train_num)
        print('{} Train loss: {:.4f}'.format(epoch, train_loss_all[-1]))

        # Loss on the validation set after this epoch's training
        model.eval()  # set the model to evaluation mode
        with torch.no_grad():  # no gradients are needed for validation
            for step, (b_x, b_y) in enumerate(valdataloader):
                b_x = b_x.float().to(device)
                b_y = b_y.long().to(device)
                out = F.log_softmax(model(b_x), dim=1)
                loss = criterion(out, b_y)
                val_loss += loss.item() * len(b_y)
                val_num += len(b_y)
        # Average validation loss of this epoch
        val_loss_all.append(val_loss / val_num)
        print('{} Val loss: {:.4f}'.format(epoch, val_loss_all[-1]))
        # Keep the weights with the lowest validation loss
        if val_loss_all[-1] < best_loss:
            best_loss = val_loss_all[-1]
            best_model_wts = copy.deepcopy(model.state_dict())
        # Time elapsed since training started
        time_use = time.time() - since
        print("Train and val complete in {:.0f}m {:.0f}s".format(time_use // 60, time_use % 60))
    train_process = pd.DataFrame(
        data={"epoch": range(num_epochs),
              "train_loss_all": train_loss_all,
              "val_loss_all": val_loss_all})
    # Load and return the best model
    model.load_state_dict(best_model_wts)
    return model, train_process
```
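train_model only tracks the loss. Segmentation quality is usually also reported as pixel accuracy and mean IoU, both of which follow from a confusion matrix. A NumPy sketch with hypothetical helper names; in the validation loop, pred could be torch.argmax(out, 1).cpu().numpy() and target b_y.cpu().numpy(), with the matrices summed over all batches before computing the scores. Skipping classes absent from both prediction and target is one common mIoU convention, not the only one:

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    # Rows: ground-truth class, columns: predicted class
    idx = target.reshape(-1) * num_classes + pred.reshape(-1)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def pixel_accuracy_and_miou(pred, target, num_classes):
    cm = confusion_matrix(pred, target, num_classes)
    tp = np.diag(cm)
    pixel_acc = tp.sum() / cm.sum()
    # IoU per class = TP / (TP + FP + FN); classes absent from both pred and target are skipped
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    valid = union > 0
    miou = (tp[valid] / union[valid]).mean()
    return pixel_acc, miou


pred = np.array([[0, 1], [1, 1]])
target = np.array([[0, 1], [0, 1]])
acc, miou = pixel_accuracy_and_miou(pred, target, num_classes=3)
print(acc)  # 0.75
```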

References

PyTorch dataset construction: torch.utils.data.Dataset() and torch.utils.data.DataLoader()

PyTorch Notes 05: Custom data loading with torch.utils.data.Dataset and DataLoader
