With single-node Hadoop deployed, let's try out how MapReduce analyzes and processes data.

Word Count

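Word Count does what the name says: it counts words. It is the most classic MapReduce job. Given a text file, it tallies how many times each distinct word appears.

Hadoop ships with many classic MapReduce example programs, and Word Count is one of them.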
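To make the idea concrete before running the real job, here is a minimal pure-Python sketch of the map, shuffle, and reduce steps. It only imitates the data flow and is not Hadoop's implementation. The function names are made up for illustration, and splitting on whitespace is an assumption about how words are tokenized. The sample lines are the same four lines used in the demo file in this post.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every whitespace-separated token."""
    return [(word, 1) for word in line.split()]


def shuffle(pairs):
    """Group the emitted values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word, counts):
    """Sum the counts collected for one word."""
    return word, sum(counts)


def word_count(lines):
    pairs = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(pairs)
    # Process keys in sorted order so the result is sorted by word.
    return [reduce_phase(word, grouped[word]) for word in sorted(grouped)]


if __name__ == "__main__":
    demo = ["I LOVE GG", "I LIKE YY", "I LOVE UU", "I LIKE RR"]
    for word, total in word_count(demo):
        print(f"{word}\t{total}")
```

The map step emits 12 pairs for the four lines, and the reduce step collapses them into 7 distinct words.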

Prepare the demo file input.txt

# cat input.txt

I LOVE GG

I LIKE YY

I LOVE UU

I LIKE RR

Copy input.txt into HDFS

# hdfs dfs -put input.txt /test

# hdfs dfs -ls /test

Found 4 items

drwxr-xr-x - yunwei supergroup 0 2023-02-24 17:14 /test/a

-rw-r--r-- 2 yunwei supergroup 51 2023-02-24 17:25 /test/b.txt

-rw-r--r-- 2 yunwei supergroup 51 2023-02-24 17:21 /test/hello-hadoop.txt

-rw-r--r-- 2 yunwei supergroup 40 2023-02-27 15:59 /test/input.txt

List the MapReduce jars that ship with Hadoop

# ll $HADOOP_HOME/share/hadoop/mapreduce/

total 4876

-rw-rw-r-- 1 yunwei yunwei 526732 Oct 3 2016 hadoop-mapreduce-client-app-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 686773 Oct 3 2016 hadoop-mapreduce-client-common-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 1535776 Oct 3 2016 hadoop-mapreduce-client-core-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 259326 Oct 3 2016 hadoop-mapreduce-client-hs-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 27489 Oct 3 2016 hadoop-mapreduce-client-hs-plugins-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 61309 Oct 3 2016 hadoop-mapreduce-client-jobclient-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 1514166 Oct 3 2016 hadoop-mapreduce-client-jobclient-2.6.5-tests.jar

-rw-rw-r-- 1 yunwei yunwei 67762 Oct 3 2016 hadoop-mapreduce-client-shuffle-2.6.5.jar

-rw-rw-r-- 1 yunwei yunwei 292710 Oct 3 2016 hadoop-mapreduce-examples-2.6.5.jar

drwxrwxr-x 2 yunwei yunwei 4096 Oct 3 2016 lib

drwxrwxr-x 2 yunwei yunwei 30 Oct 3 2016 lib-examples

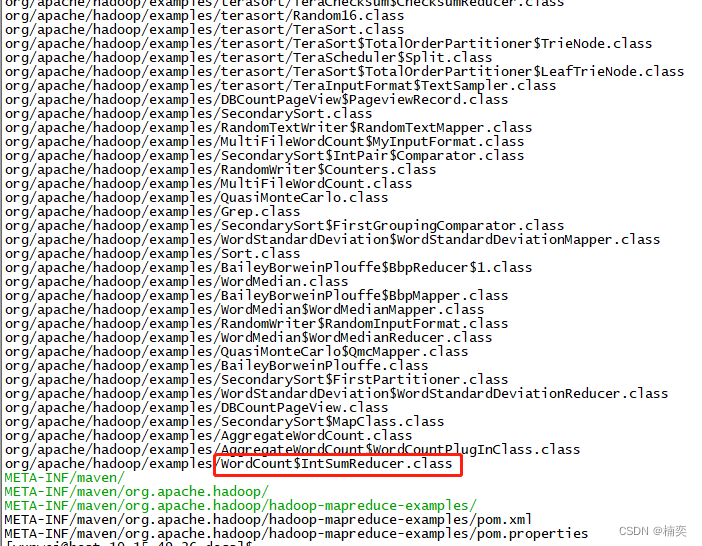

drwxrwxr-x 2 yunwei yunwei 4096 Oct 3 2016 sources

vi hadoop-mapreduce-examples-2.6.5.jar

Run the jar with the hadoop command

# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar WordCount input.txt output

Unknown program 'WordCount' chosen.

Valid program names are:

aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.

aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.

bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.

dbcount: An example job that count the pageview counts from a database.

distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.

grep: A map/reduce program that counts the matches of a regex in the input.

join: A job that effects a join over sorted, equally partitioned datasets

multifilewc: A job that counts words from several files.

pentomino: A map/reduce tile laying program to find solutions to pentomino problems.

pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.

randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.

randomwriter: A map/reduce program that writes 10GB of random data per node.

secondarysort: An example defining a secondary sort to the reduce.

sort: A map/reduce program that sorts the data written by the random writer.

sudoku: A sudoku solver.

teragen: Generate data for the terasort

terasort: Run the terasort

teravalidate: Checking results of terasort

wordcount: A map/reduce program that counts the words in the input files.

wordmean: A map/reduce program that counts the average length of the words in the input files.

wordmedian: A map/reduce program that counts the median length of the words in the input files.

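wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.

The run fails. The examples jar does contain a WordCount class, but passing the class name has no effect; the program has to be selected by one of the lowercase names listed above. After switching to wordcount, the run fails again, this time because the input file does not exist in HDFS: the relative path input.txt is resolved to /user/yunwei/input.txt, as the error below shows. The file has to be uploaded to HDFS and referenced by its HDFS path.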
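Judging from the error message, the program name seems to be matched exactly and case-sensitively against the registered names. The sketch below imitates that behaviour in Python. It is not Hadoop's code: the `PROGRAMS` table and `choose_program` are hypothetical names, and only three of the program names from the list are included.

```python
# Hypothetical stand-in for the name -> program table of the examples jar.
# Only a few of the valid program names are included here.
PROGRAMS = {
    "grep": "counts the matches of a regex in the input",
    "pi": "estimates Pi using a quasi-Monte Carlo method",
    "wordcount": "counts the words in the input files",
}


def choose_program(name):
    """Look up a program by its exact, case-sensitive name."""
    if name not in PROGRAMS:
        raise KeyError(f"Unknown program '{name}' chosen.")
    return PROGRAMS[name]


if __name__ == "__main__":
    print(choose_program("wordcount"))
    try:
        choose_program("WordCount")
    except KeyError as err:
        print(err.args[0])
```

With an exact-match table like this, "WordCount" and "wordcount" are simply different keys, which would explain why the class name was rejected.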

# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar wordcount input.txt output

23/02/27 15:58:58 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

23/02/27 15:58:58 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

23/02/27 15:58:58 INFO mapreduce.JobSubmitter: Cleaning up the staging area file:/tmp/hadoop-yunwei/mapred/staging/yunwei293247600/.staging/job_local293247600_0001

org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://10.15.49.26:8020/user/yunwei/input.txt

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.singleThreadedListStatus(FileInputFormat.java:321)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:264)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits(FileInputFormat.java:385)

at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:302)

at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:319)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:197)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1297)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1294)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1692)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1294)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1315)

at org.apache.hadoop.examples.WordCount.main(WordCount.java:87)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.ProgramDriver$ProgramDescription.invoke(ProgramDriver.java:71)

at org.apache.hadoop.util.ProgramDriver.run(ProgramDriver.java:144)

at org.apache.hadoop.examples.ExampleDriver.main(ExampleDriver.java:74)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

# hdfs dfs -put input.txt /test

# hdfs dfs -ls /test

Found 4 items

drwxr-xr-x - yunwei supergroup 0 2023-02-24 17:14 /test/a

-rw-r--r-- 2 yunwei supergroup 51 2023-02-24 17:25 /test/b.txt

-rw-r--r-- 2 yunwei supergroup 51 2023-02-24 17:21 /test/hello-hadoop.txt

-rw-r--r-- 2 yunwei supergroup 40 2023-02-27 15:59 /test/input.txt

# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar wordcount /test/input.txt output

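What the command means:

hadoop jar runs a MapReduce job from a jar file; it is followed by the path to the examples jar.

wordcount selects the Word Count program inside the examples jar. It takes two arguments: the first is the input file, and the second is the name of the output directory (a directory, because the result consists of several files).

After the job finishes there should be an output directory containing two files: _SUCCESS and part-r-00000.

If the command gives the output as a full HDFS path such as /test/output, the results are written to that path rather than to the default location (here /user/yunwei/output).

_SUCCESS is just an empty marker file indicating that the job succeeded, while part-r-00000 holds the actual results.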
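The path behaviour seen in this post can be sketched in a few lines of Python. This is only a model of what the logs show, not Hadoop's resolution code: `resolve_hdfs_path` is a hypothetical helper, and it assumes the user's HDFS home directory is /user/<user>, which matches the /user/yunwei paths in the error message and the job log.

```python
import posixpath


def resolve_hdfs_path(path, user):
    """Model how the runs in this post resolved paths.

    Relative paths land under the user's home directory (assumed to be
    /user/<user>); absolute paths are kept as given.
    """
    home = posixpath.join("/user", user)
    # posixpath.join discards `home` when `path` is already absolute.
    return posixpath.normpath(posixpath.join(home, path))


if __name__ == "__main__":
    print(resolve_hdfs_path("input.txt", "yunwei"))     # the path that was not found
    print(resolve_hdfs_path("output", "yunwei"))        # default output location
    print(resolve_hdfs_path("/test/output", "yunwei"))  # explicit output location
```

The same rule accounts for both observations: the relative input.txt was looked up under /user/yunwei, and the relative output directory was created there.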

Run the job against input.txt in the HDFS directory

# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar wordcount /test/input.txt output

23/02/27 16:00:13 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

23/02/27 16:00:13 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

23/02/27 16:00:13 INFO input.FileInputFormat: Total input paths to process : 1

23/02/27 16:00:13 INFO mapreduce.JobSubmitter: number of splits:1

23/02/27 16:00:13 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local1519269801_0001

23/02/27 16:00:13 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

23/02/27 16:00:13 INFO mapreduce.Job: Running job: job_local1519269801_0001

23/02/27 16:00:13 INFO mapred.LocalJobRunner: OutputCommitter set in config null

23/02/27 16:00:13 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

23/02/27 16:00:13 INFO mapred.LocalJobRunner: Waiting for map tasks

23/02/27 16:00:13 INFO mapred.LocalJobRunner: Starting task: attempt_local1519269801_0001_m_000000_0

23/02/27 16:00:13 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

23/02/27 16:00:13 INFO mapred.MapTask: Processing split: hdfs://10.15.49.26:8020/test/input.txt:0+40

23/02/27 16:00:14 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

23/02/27 16:00:14 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

23/02/27 16:00:14 INFO mapred.MapTask: soft limit at 83886080

23/02/27 16:00:14 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

23/02/27 16:00:14 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

23/02/27 16:00:14 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

23/02/27 16:00:14 INFO mapred.LocalJobRunner:

23/02/27 16:00:14 INFO mapred.MapTask: Starting flush of map output

23/02/27 16:00:14 INFO mapred.MapTask: Spilling map output

23/02/27 16:00:14 INFO mapred.MapTask: bufstart = 0; bufend = 88; bufvoid = 104857600

23/02/27 16:00:14 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214352(104857408); length = 45/6553600

23/02/27 16:00:14 INFO mapred.MapTask: Finished spill 0

23/02/27 16:00:14 INFO mapred.Task: Task:attempt_local1519269801_0001_m_000000_0 is done. And is in the process of committing

23/02/27 16:00:14 INFO mapred.LocalJobRunner: map

23/02/27 16:00:14 INFO mapred.Task: Task 'attempt_local1519269801_0001_m_000000_0' done.

23/02/27 16:00:14 INFO mapred.LocalJobRunner: Finishing task: attempt_local1519269801_0001_m_000000_0

23/02/27 16:00:14 INFO mapred.LocalJobRunner: map task executor complete.

23/02/27 16:00:14 INFO mapred.LocalJobRunner: Waiting for reduce tasks

23/02/27 16:00:14 INFO mapred.LocalJobRunner: Starting task: attempt_local1519269801_0001_r_000000_0

23/02/27 16:00:14 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

23/02/27 16:00:14 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@5cd45799

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=334338464, maxSingleShuffleLimit=83584616, mergeThreshold=220663392, ioSortFactor=10, memToMemMergeOutputsThreshold=10

23/02/27 16:00:14 INFO reduce.EventFetcher: attempt_local1519269801_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

23/02/27 16:00:14 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local1519269801_0001_m_000000_0 decomp: 68 len: 72 to MEMORY

23/02/27 16:00:14 INFO reduce.InMemoryMapOutput: Read 68 bytes from map-output for attempt_local1519269801_0001_m_000000_0

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 68, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->68

23/02/27 16:00:14 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

23/02/27 16:00:14 INFO mapred.LocalJobRunner: 1 / 1 copied.

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

23/02/27 16:00:14 INFO mapred.Merger: Merging 1 sorted segments

23/02/27 16:00:14 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 63 bytes

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: Merged 1 segments, 68 bytes to disk to satisfy reduce memory limit

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: Merging 1 files, 72 bytes from disk

23/02/27 16:00:14 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

23/02/27 16:00:14 INFO mapred.Merger: Merging 1 sorted segments

23/02/27 16:00:14 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 63 bytes

23/02/27 16:00:14 INFO mapred.LocalJobRunner: 1 / 1 copied.

23/02/27 16:00:14 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

23/02/27 16:00:14 INFO mapred.Task: Task:attempt_local1519269801_0001_r_000000_0 is done. And is in the process of committing

23/02/27 16:00:14 INFO mapred.LocalJobRunner: 1 / 1 copied.

23/02/27 16:00:14 INFO mapred.Task: Task attempt_local1519269801_0001_r_000000_0 is allowed to commit now

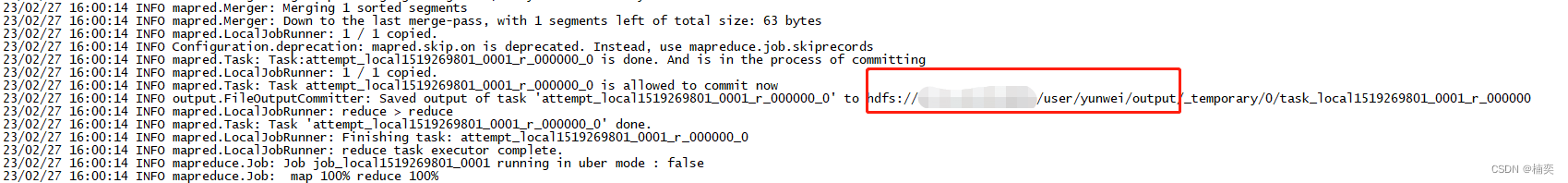

23/02/27 16:00:14 INFO output.FileOutputCommitter: Saved output of task 'attempt_local1519269801_0001_r_000000_0' to hdfs://xx.xx.xx.xx:xx/user/yunwei/output/_temporary/0/task_local1519269801_0001_r_000000

23/02/27 16:00:14 INFO mapred.LocalJobRunner: reduce > reduce

23/02/27 16:00:14 INFO mapred.Task: Task 'attempt_local1519269801_0001_r_000000_0' done.

23/02/27 16:00:14 INFO mapred.LocalJobRunner: Finishing task: attempt_local1519269801_0001_r_000000_0

23/02/27 16:00:14 INFO mapred.LocalJobRunner: reduce task executor complete.

23/02/27 16:00:14 INFO mapreduce.Job: Job job_local1519269801_0001 running in uber mode : false

23/02/27 16:00:14 INFO mapreduce.Job: map 100% reduce 100%

23/02/27 16:00:14 INFO mapreduce.Job: Job job_local1519269801_0001 completed successfully

23/02/27 16:00:14 INFO mapreduce.Job: Counters: 38

File System Counters

FILE: Number of bytes read=585924

FILE: Number of bytes written=1105104

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=80

HDFS: Number of bytes written=38

HDFS: Number of read operations=13

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

Map-Reduce Framework

Map input records=4

Map output records=12

Map output bytes=88

Map output materialized bytes=72

Input split bytes=103

Combine input records=12

Combine output records=7

Reduce input groups=7

Reduce shuffle bytes=72

Reduce input records=7

Reduce output records=7

Spilled Records=14

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=0

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=716177408

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=40

File Output Format Counters

Bytes Written=38

Check the result. The log above shows where the output was written.

# hdfs dfs -lsr /user/yunwei

lsr: DEPRECATED: Please use 'ls -R' instead.

drwxr-xr-x - yunwei supergroup 0 2023-02-27 16:00 /user/yunwei/output

-rw-r--r-- 2 yunwei supergroup 0 2023-02-27 16:00 /user/yunwei/output/_SUCCESS

-rw-r--r-- 2 yunwei supergroup 38 2023-02-27 16:00 /user/yunwei/output/part-r-00000

# hdfs dfs -cat /user/yunwei/output/part-r-00000

GG 1

I 4

LIKE 2

LOVE 2

RR 1

UU 1

YY 1
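As a sanity check, the result file can be parsed and compared with the counters printed in the job log. The sketch below is a hedged example: `parse_wordcount_output` is a hypothetical helper, and the sample string assumes each line is a word and a count separated by a tab and terminated by a newline.

```python
def parse_wordcount_output(text):
    """Parse 'word<whitespace>count' lines into a dict of word -> count."""
    counts = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        word, count = line.split()
        counts[word] = int(count)
    return counts


if __name__ == "__main__":
    # Contents of part-r-00000 as shown above, assuming tab separators.
    part = "GG\t1\nI\t4\nLIKE\t2\nLOVE\t2\nRR\t1\nUU\t1\nYY\t1\n"
    counts = parse_wordcount_output(part)
    print(len(counts))           # compare with "Reduce output records"
    print(sum(counts.values()))  # compare with "Map output records"
    print(len(part.encode()))    # compare with "Bytes Written"
```

Under those assumptions the three values come out as 7, 12, and 38, in line with the Reduce output records, Map output records, and Bytes Written counters in the log.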