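Kubernetes Service

- The Service concept

- Service types

- Service demo

- Environment setup

- ClusterIP (in-cluster access)

- Iptables

- IPVS

- Endpoint

- NodePort (exposing an application externally)

- LoadBalancer (exposing an application externally, for public clouds)

- Ingress

- Setting up the Ingress environment

- Demo environment setup

- Configuring the HTTP proxy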

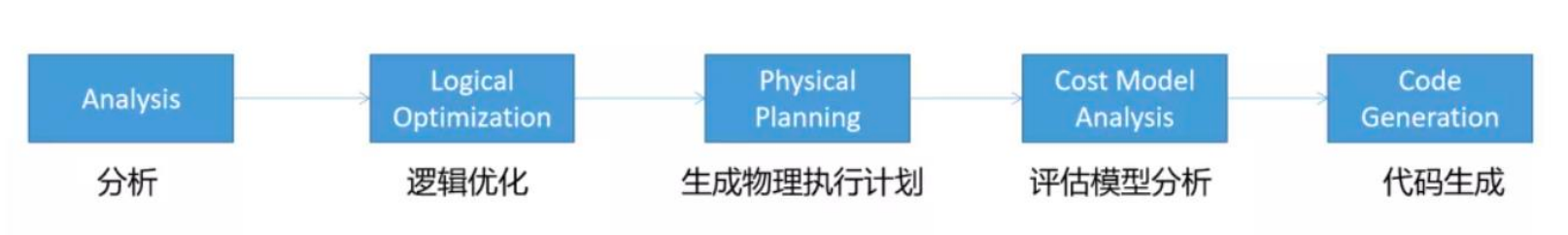

The Service concept

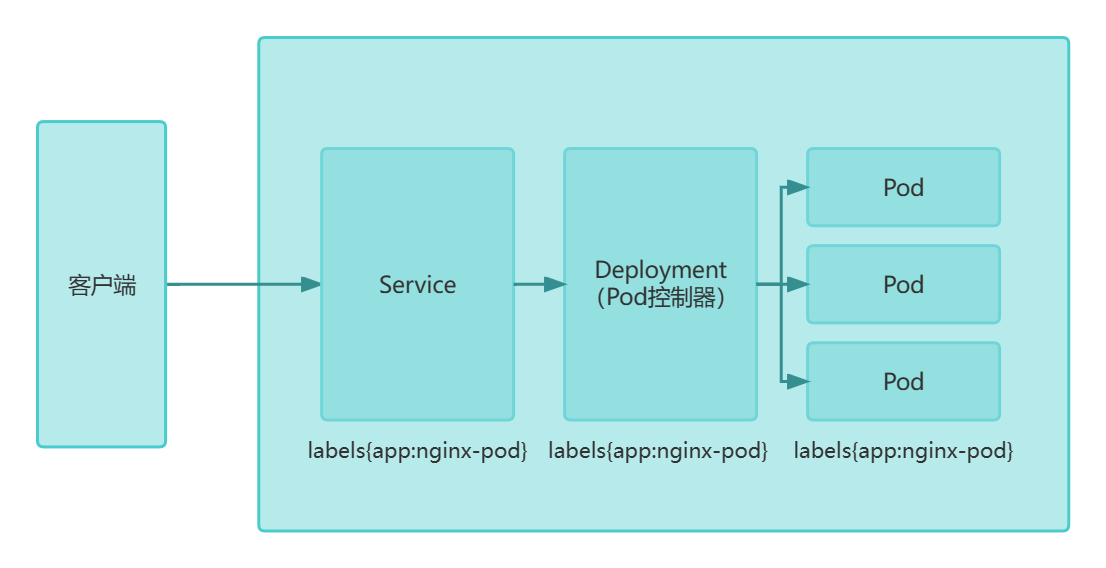

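Kubernetes Pods have a lifecycle: they can be created and destroyed, and once a Pod is destroyed it is gone for good. Each Pod gets its own IP address, but when a Pod is destroyed and recreated, that IP address changes. This is why we access a group of Pods through a Kubernetes Service: as long as the Service itself is not deleted, its entry IP stays fixed no matter how the Pods behind it change.

A Service gives Pods a fixed, unified access entry point together with load balancing, and relies on the cluster DNS for service discovery, which solves the problem of how clients discover and reach containerized applications.

A Service in Kubernetes is a logical concept. It defines a logical set of Pods and a policy for accessing them, and the Service is associated with its Pods through Labels. The Service acts as a bridge: it gives clients a fixed IP address, and traffic sent to that address is redirected to the matching backends.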

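Service types

- ClusterIP: the default. Kubernetes automatically assigns the Service a virtual IP that is reachable only from inside the cluster. One Service may map to multiple Endpoints (Pods); clients access the Cluster IP, and iptables rules forward the traffic to the real servers, which provides load balancing;

- NodePort: exposes the Service externally on a specified port of every Node; a request to NodeIP:NodePort on any Node is routed to the Service;

- LoadBalancer: builds on NodePort by asking the cloud provider to create an external load balancer that forwards requests to NodeIP:NodePort; this mode works only on cloud platforms;

- ExternalName: maps the Service to a specified domain name through a DNS CNAME record (set with spec.externalName).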
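ExternalName is the only type in this list that the article does not demonstrate later, so here is a minimal sketch of what such a manifest could look like. The Service name `svc-externalname`, the file name, and the domain `db.example.com` are placeholders chosen for illustration; `bubble-dev` is the namespace used in this article's demo.

```shell
# Write a minimal ExternalName Service manifest (names are illustrative).
cat > svc-externalname.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: svc-externalname          # hypothetical Service name
  namespace: bubble-dev
spec:
  type: ExternalName
  externalName: db.example.com    # hypothetical external domain
EOF
```

After `kubectl apply -f svc-externalname.yaml`, a DNS lookup of `svc-externalname` from a Pod in the same namespace should return a CNAME pointing at the external domain. No selector or ports are needed, because no traffic is proxied.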

Service demo

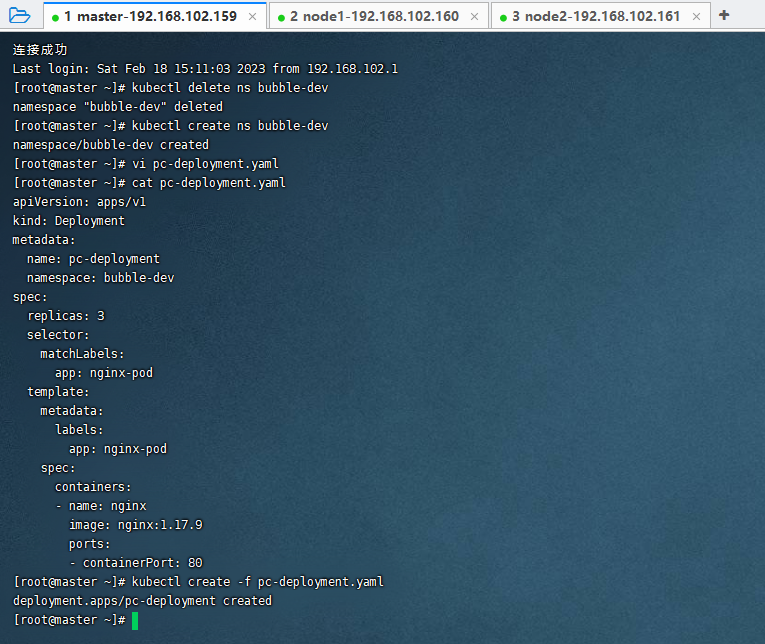

Environment setup

Start three Pod replicas in the same Namespace

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pc-deployment
  namespace: bubble-dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.9
        ports:
        - containerPort: 80

kubectl delete ns bubble-dev

kubectl create ns bubble-dev

vi pc-deployment.yaml

cat pc-deployment.yaml

kubectl create -f pc-deployment.yaml

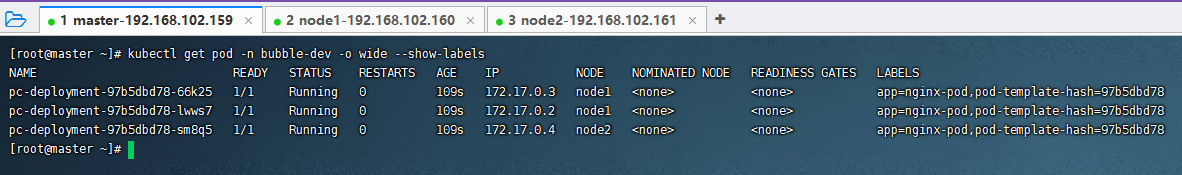

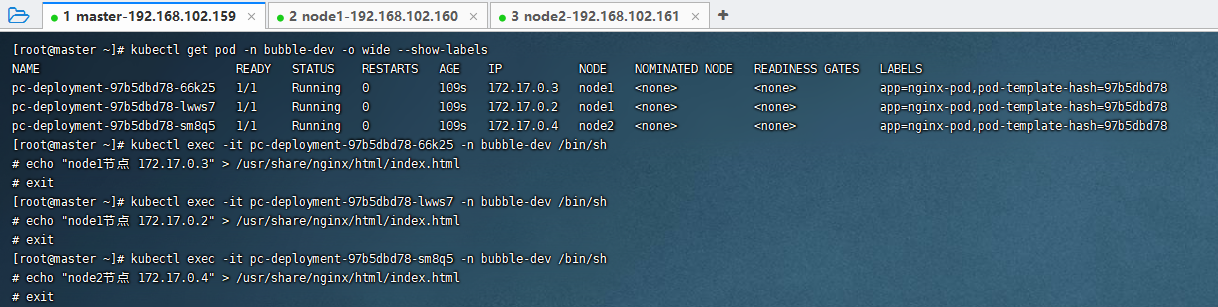

Modify the Nginx container in each Pod

kubectl get pod -n bubble-dev -o wide --show-labels

To make the test results easy to tell apart, change the index.html page of each Nginx instance to show the Pod's own IP address

kubectl exec -it pc-deployment-97b5dbd78-66k25 -n bubble-dev /bin/sh

echo "node1节点 172.17.0.3" > /usr/share/nginx/html/index.html

exit

kubectl exec -it pc-deployment-97b5dbd78-lwws7 -n bubble-dev /bin/sh

echo "node1节点 172.17.0.2" > /usr/share/nginx/html/index.html

exit

kubectl exec -it pc-deployment-97b5dbd78-sm8q5 -n bubble-dev /bin/sh

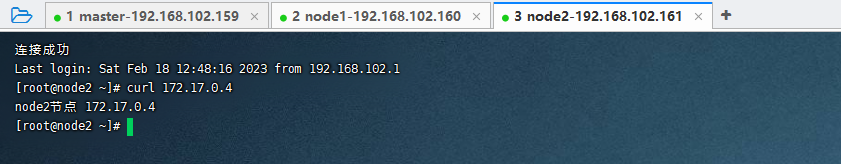

echo "node2节点 172.17.0.4" > /usr/share/nginx/html/index.html

exit

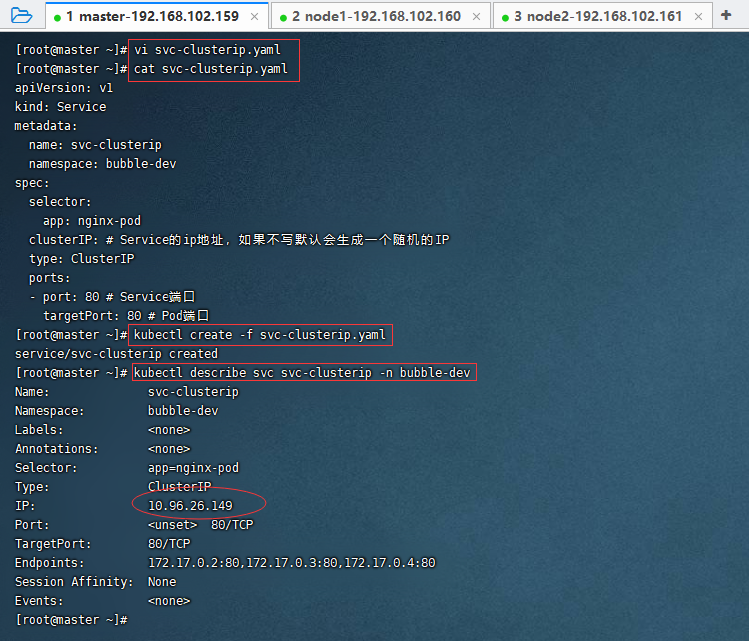

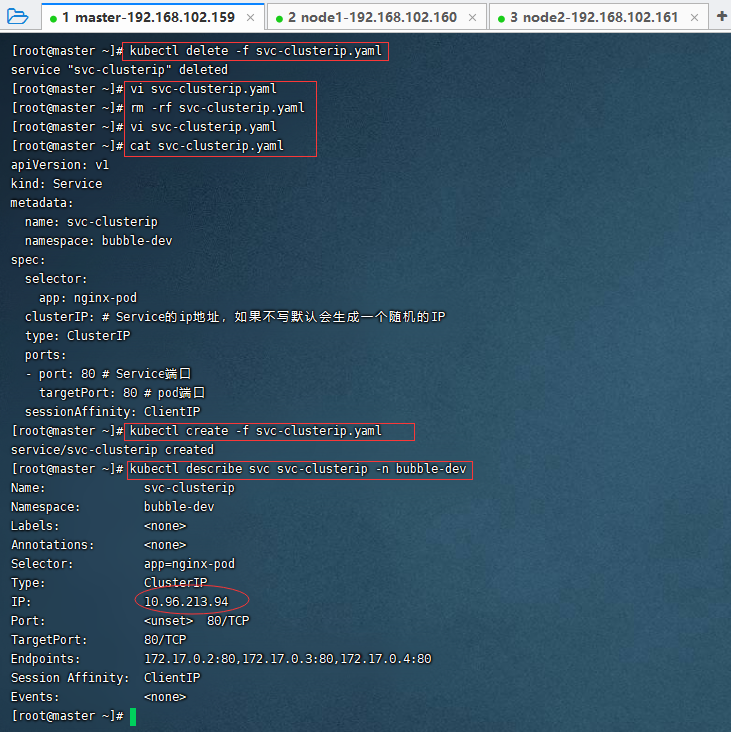

ClusterIP (in-cluster access)

apiVersion: v1
kind: Service
metadata:
  name: svc-clusterip
  namespace: bubble-dev
spec:
  selector:
    app: nginx-pod
  clusterIP:  # Service IP address; if left empty, one is allocated automatically
  type: ClusterIP
  ports:
  - port: 80        # Service port
    targetPort: 80  # Pod port

vi svc-clusterip.yaml

cat svc-clusterip.yaml

kubectl create -f svc-clusterip.yaml

kubectl describe svc svc-clusterip -n bubble-dev

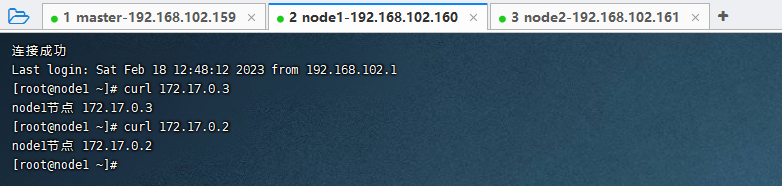

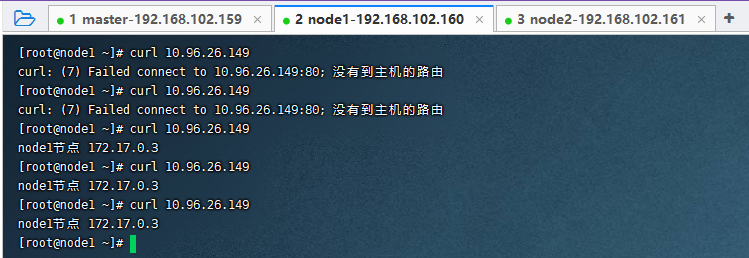

Run on node1

curl 10.96.26.149

Request the Service IP once per second

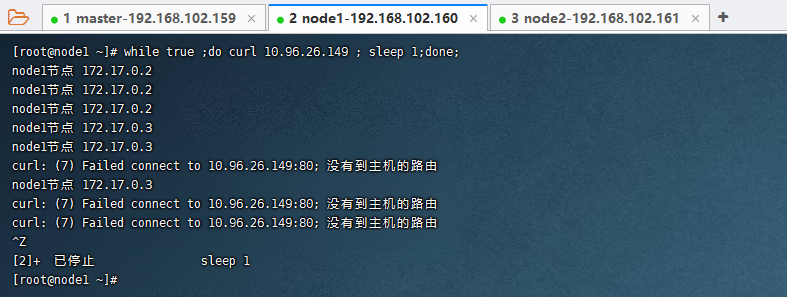

while true ;do curl 10.96.26.149 ; sleep 1;done;
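To judge how requests are spread across the three Pods, it can help to count the responses rather than read them off the terminal. The helper below is a small sketch; `tally_responses` is a name made up for this example, not part of any tool.

```shell
# Count how many times each distinct response line appears on stdin,
# most frequent first.
tally_responses() {
  sort | uniq -c | sort -rn
}
```

For example, `for i in $(seq 1 30); do curl -s 10.96.26.149; done | tally_responses` prints one line per Pod with the number of requests it answered. With the default policy the counts should be roughly even.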

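Distribution policy: by default, requests are spread across the backend Pods at random (iptables mode) or round-robin (IPVS mode). Setting sessionAffinity: ClientIP switches to client-IP affinity, so that all requests from the same client are forwarded to one fixed Pod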

apiVersion: v1
kind: Service
metadata:
  name: svc-clusterip
  namespace: bubble-dev
spec:
  selector:
    app: nginx-pod
  clusterIP:  # Service IP address; if left empty, one is allocated automatically
  type: ClusterIP
  ports:
  - port: 80        # Service port
    targetPort: 80  # Pod port
  sessionAffinity: ClientIP

kubectl delete -f svc-clusterip.yaml

rm -rf svc-clusterip.yaml

vi svc-clusterip.yaml

cat svc-clusterip.yaml

kubectl create -f svc-clusterip.yaml

kubectl describe svc svc-clusterip -n bubble-dev

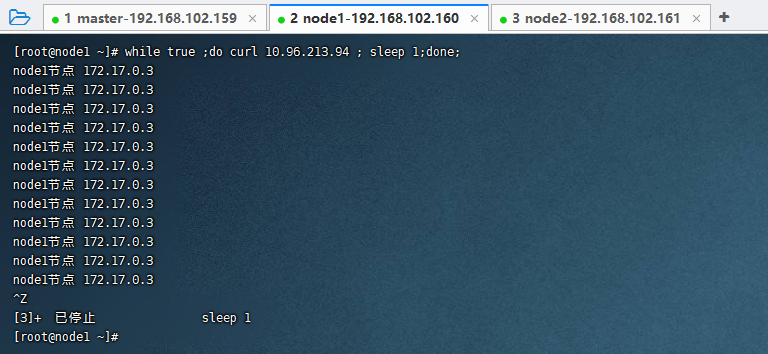

Request the Service IP once per second

while true ;do curl 10.96.213.94 ; sleep 1;done;

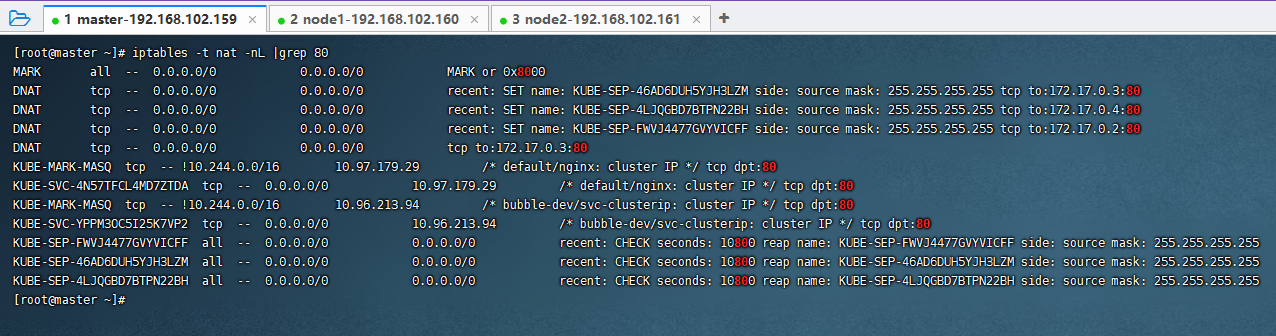

Iptables

View the iptables rules

iptables -t nat -nL |grep 80

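iptables uses NAT to forward traffic addressed to the virtual IP to the endpoints; the port exposed in the containers here is 80. When kube-proxy handles Services through iptables, it must install a large number of iptables rules on the host. On a host with many Pods, constantly refreshing those rules consumes a lot of CPU.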

IPVS

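A Service in IPVS mode lets a Kubernetes cluster scale to a much larger number of Pods. Traffic between Pods and Services is handled by iptables or IPVS. IPVS does not fully replace iptables: IPVS performs the load balancing, but kube-proxy in IPVS mode still relies on iptables for packet filtering and SNAT (masquerading).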
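The article does not show how IPVS mode is switched on, so the following is only a sketch of the relevant kube-proxy configuration fragment. It assumes a kubeadm-style cluster, where this configuration typically lives in the `kube-proxy` ConfigMap in the `kube-system` namespace, and it assumes the IPVS kernel modules are loaded on every node. The file name is illustrative.

```shell
# Write a KubeProxyConfiguration fragment that selects IPVS mode.
cat > kube-proxy-ipvs.yaml <<'EOF'
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  scheduler: "rr"   # round-robin scheduling
EOF
```

Once kube-proxy has been restarted with this mode, running `ipvsadm -Ln` on a node (if the `ipvsadm` tool is installed) lists each Service IP as a virtual server with the Pod IPs as its real servers.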

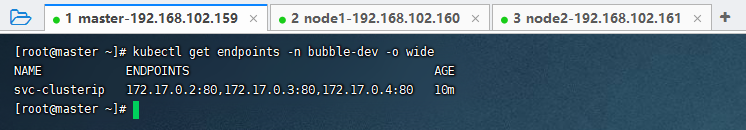

Endpoint

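Endpoints is a Kubernetes resource object, stored in etcd, that records the access addresses of all Pods backing a Service. A Service is backed by a group of Pods, and those Pods are exposed through Endpoints: the set of endpoints that actually implement the service.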

kubectl get endpoints -n bubble-dev -o wide

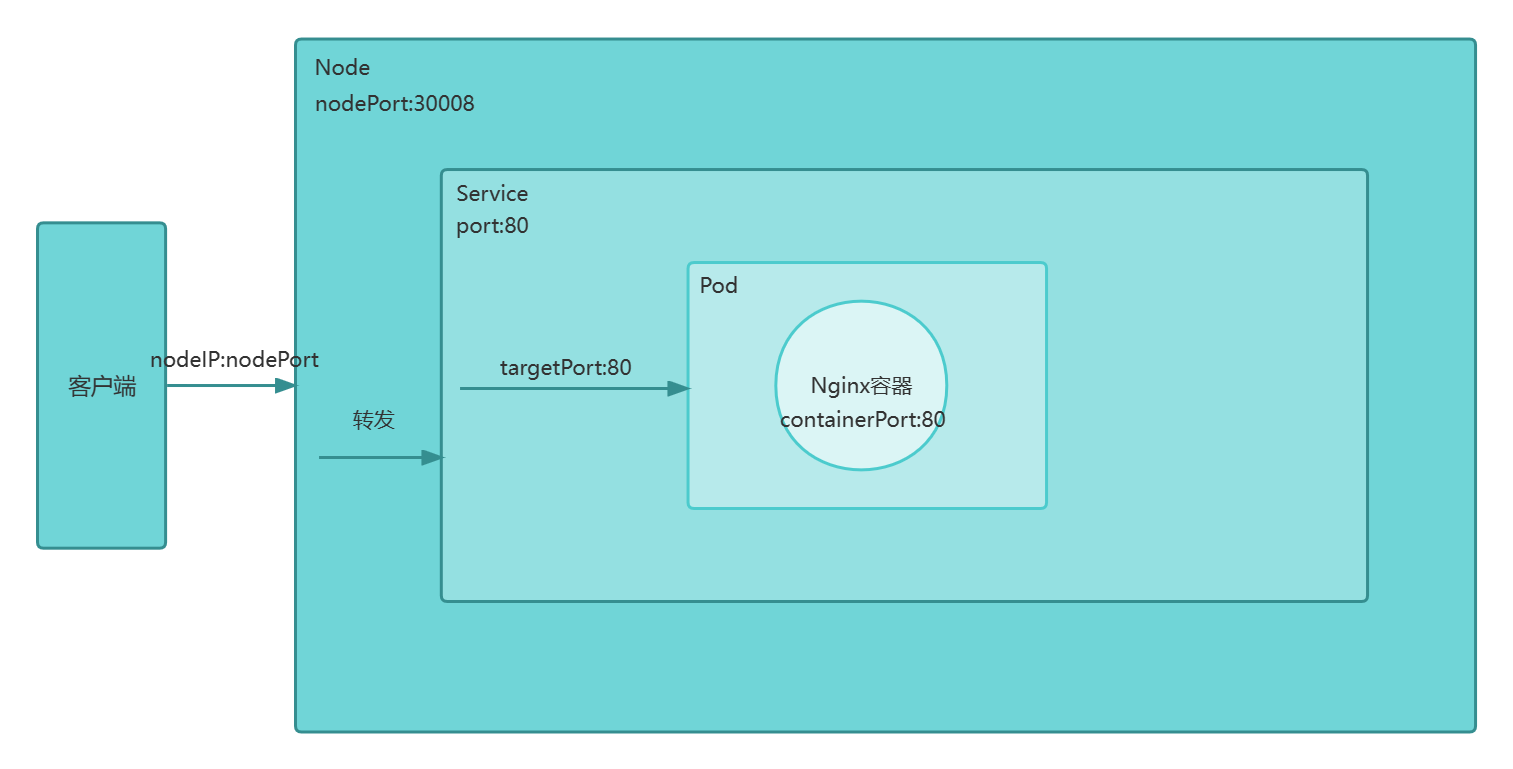

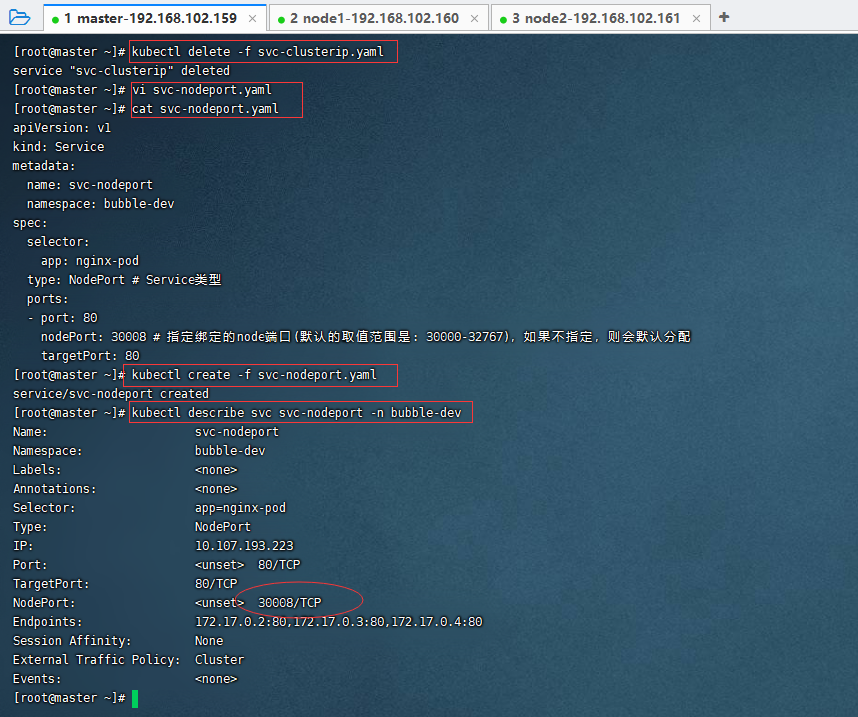

NodePort (exposing an application externally)

Opens a port on every node to expose the service, so it can be reached from outside the cluster at NodeIP:NodePort.

apiVersion: v1
kind: Service
metadata:
  name: svc-nodeport
  namespace: bubble-dev
spec:
  selector:
    app: nginx-pod
  type: NodePort  # Service type
  ports:
  - port: 80
    nodePort: 30008  # Node port to bind (default range: 30000-32767); allocated automatically if omitted
    targetPort: 80

kubectl delete -f svc-clusterip.yaml

vi svc-nodeport.yaml

cat svc-nodeport.yaml

kubectl create -f svc-nodeport.yaml

kubectl describe svc svc-nodeport -n bubble-dev

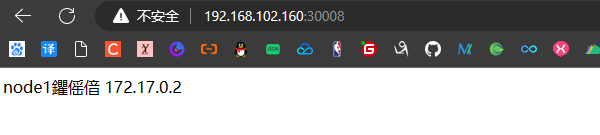

Access it from outside the cluster using NodeIP:NodePort: http://192.168.102.160:30008/

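LoadBalancer (exposing an application externally, for public clouds)

Like NodePort, this opens a port on every node to expose the service. In addition, Kubernetes asks the underlying cloud platform for a load balancer and registers every NodeIP:NodePort as a backend.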
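The article gives no manifest for this type, so here is a minimal sketch that reuses the `app: nginx-pod` selector from the earlier demo. The Service name `svc-loadbalancer` and the file name are made up for illustration.

```shell
# Write a minimal LoadBalancer Service manifest (names are illustrative).
cat > svc-loadbalancer.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: svc-loadbalancer   # hypothetical Service name
  namespace: bubble-dev
spec:
  type: LoadBalancer
  selector:
    app: nginx-pod
  ports:
  - port: 80
    targetPort: 80
EOF
```

After `kubectl apply -f svc-loadbalancer.yaml`, the EXTERNAL-IP column of `kubectl get svc svc-loadbalancer -n bubble-dev` is filled in once the cloud platform has provisioned the load balancer. On a cluster without a cloud provider integration it stays at `<pending>`.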

Ingress

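NodePort does not scale well as the number of services grows: a cluster with hundreds or thousands of services would have to manage hundreds or thousands of NodePorts, which is very unfriendly. Like Service and Deployment, Ingress is a Kubernetes resource type. It lets you reach applications inside the cluster by domain name, and Ingress resources are namespace-scoped.

Ingress documentation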

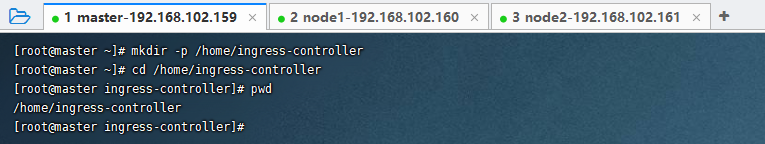

Setting up the Ingress environment

mkdir -p /home/ingress-controller

cd /home/ingress-controller

Download the files

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

If the download fails, fetch the files through a proxy or use the copies pasted below

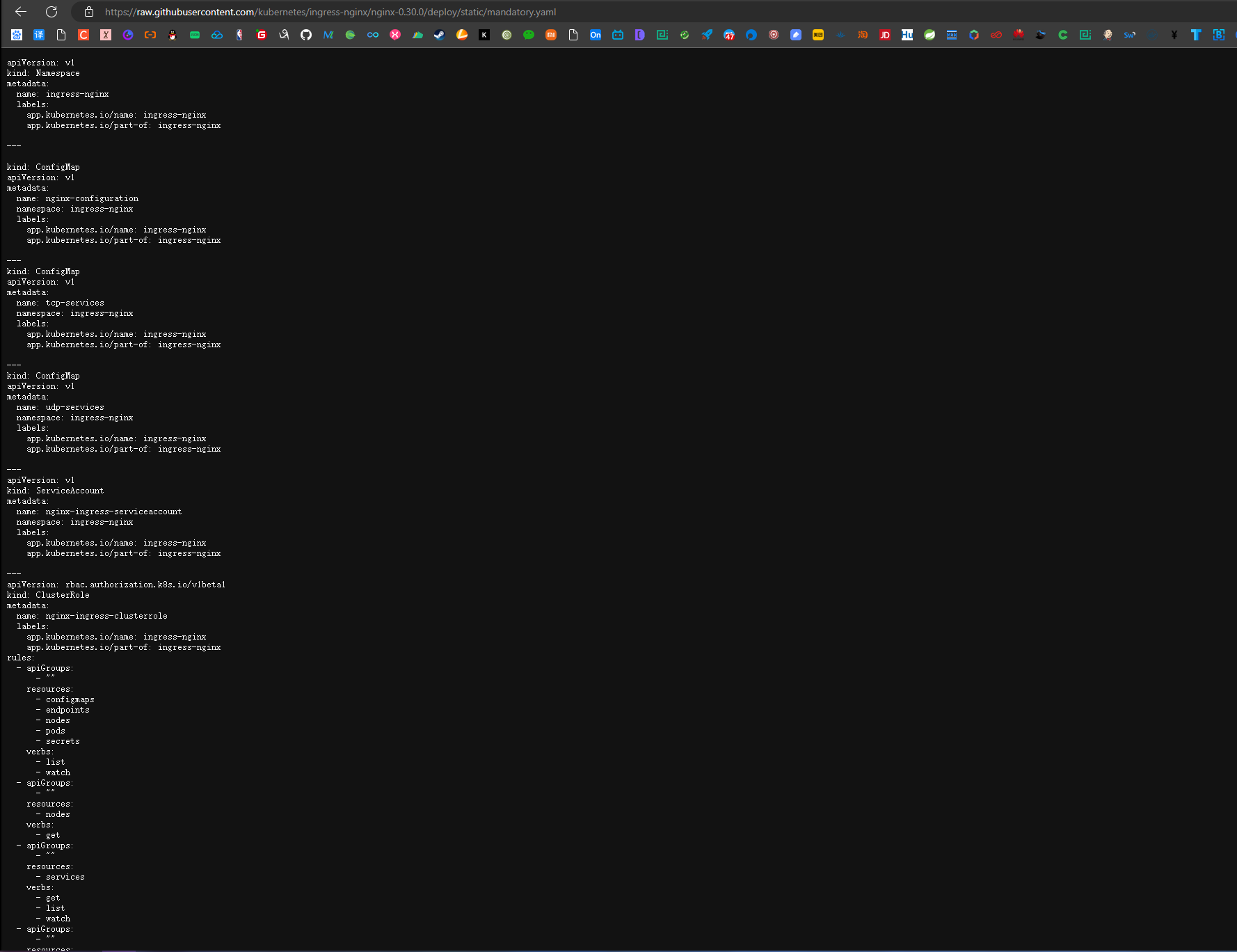

mandatory.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown

---

apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container

service-nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

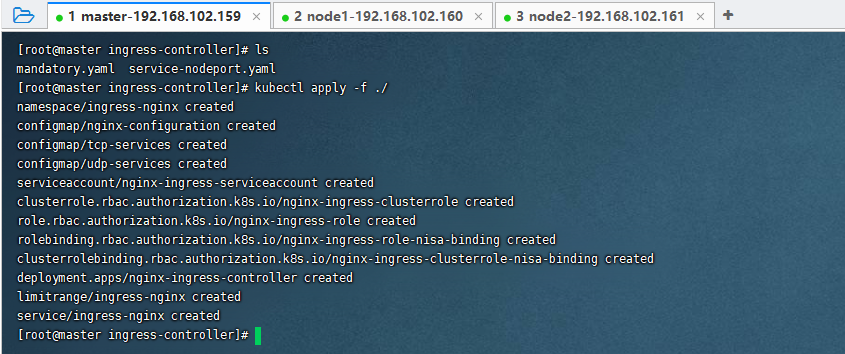

Apply the YAML files

kubectl apply -f ./

If the apply fails, delete the namespace and try again

kubectl delete ns ingress-nginx

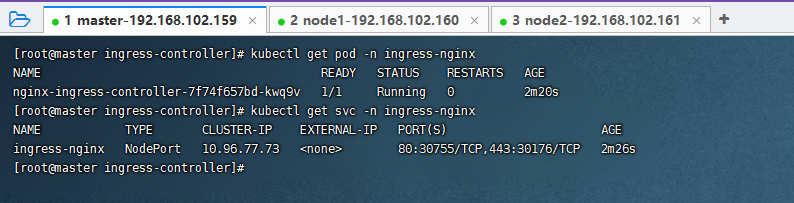

Check the status

kubectl get pod -n ingress-nginx

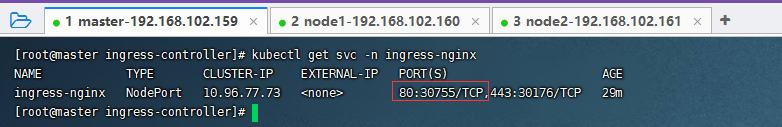

kubectl get svc -n ingress-nginx

Here 30755 is the NodePort for HTTP and 30176 is the NodePort for HTTPS.

Demo environment setup

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: bubble-dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nx-pod
  template:
    metadata:
      labels:
        app: nx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.9
        ports:
        - containerPort: 80

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
  namespace: bubble-dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tc-pod
  template:
    metadata:
      labels:
        app: tc-pod
    spec:
      containers:
      - name: tomcat
        image: tomcat:9
        ports:
        - containerPort: 8080

---

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: bubble-dev
spec:
  selector:
    app: nx-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80

---

apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  namespace: bubble-dev
spec:
  selector:
    app: tc-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 8080
    targetPort: 8080

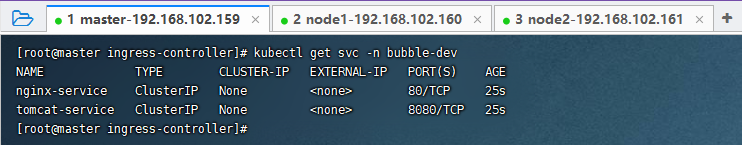

kubectl create ns bubble-dev

vi ingress.yaml

cat ingress.yaml

kubectl create -f ingress.yaml

kubectl get svc -n bubble-dev

Configuring the HTTP proxy

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-http
  namespace: bubble-dev
spec:
  rules:
  - host: nginx.bubble.com
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-service
          servicePort: 80
  - host: tomcat.bubble.com
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat-service
          servicePort: 8080

vi ingress-http.yaml

cat ingress-http.yaml

kubectl create -f ingress-http.yaml
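The `extensions/v1beta1` Ingress API used in the manifest above was removed in Kubernetes 1.22. For readers on a newer cluster, the sketch below shows how the same two rules might be written against `networking.k8s.io/v1`. It assumes a newer ingress-nginx release that serves this API (the 0.30.0 controller installed earlier predates it) and an IngressClass named `nginx`; both the class name and the file name are assumptions, not something taken from this article's environment.

```shell
# Write the same host-based rules using the networking.k8s.io/v1 schema.
cat > ingress-http-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-http
  namespace: bubble-dev
spec:
  ingressClassName: nginx   # assumed IngressClass name
  rules:
  - host: nginx.bubble.com
    http:
      paths:
      - path: /
        pathType: Prefix    # required in the v1 API
        backend:
          service:
            name: nginx-service
            port:
              number: 80
  - host: tomcat.bubble.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat-service
            port:
              number: 8080
EOF
```

The main differences are the required `pathType` field and the nested `backend.service` block, which replaces `serviceName` and `servicePort`.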

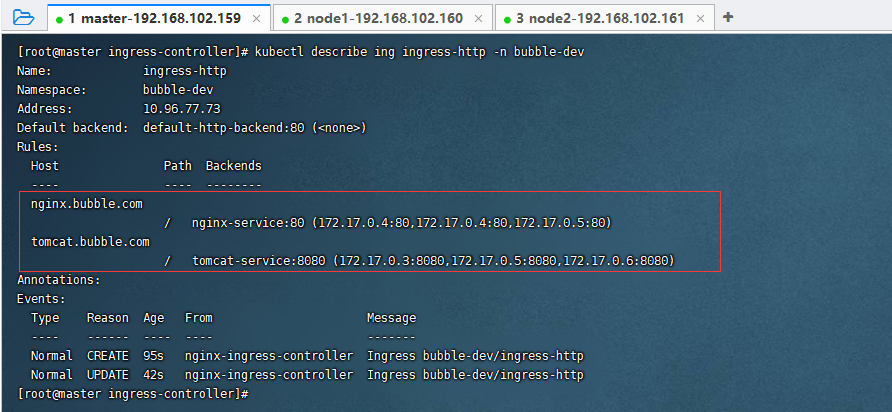

kubectl describe ing ingress-http -n bubble-dev

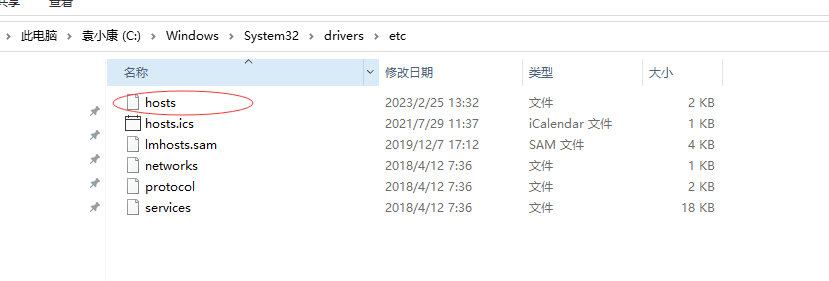

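To see the result, add the following entries to the hosts file on your computer (on Windows it is located in the directory below)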

C:\Windows\System32\drivers\etc

192.168.102.160 nginx.bubble.com

192.168.102.160 tomcat.bubble.com

kubectl get svc -n ingress-nginx

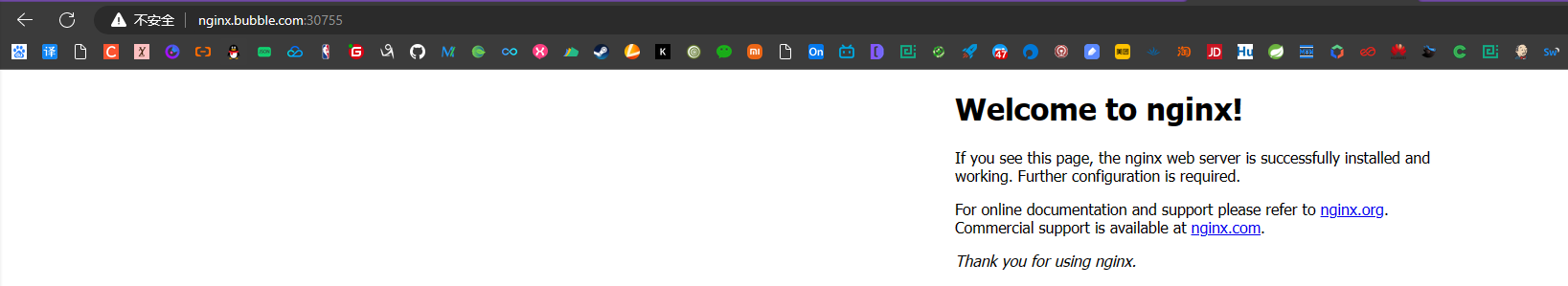

Then visit each URL:

http://nginx.bubble.com:30755/

http://tomcat.bubble.com:30755/
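The same check can be made from a terminal without editing the hosts file, because the Ingress controller routes on the HTTP Host header. The helper below is a sketch; `check_ingress` is a made-up name, and the defaults for `NODE_IP` and `HTTP_PORT` are simply the node address and HTTP NodePort shown in this article, so they will differ on another cluster.

```shell
# Print the HTTP status code the Ingress controller returns for a virtual host.
# NODE_IP and HTTP_PORT can be overridden through the environment.
check_ingress() {
  host="$1"
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Host: ${host}" \
    "http://${NODE_IP:-192.168.102.160}:${HTTP_PORT:-30755}/"
}
```

Calling `check_ingress nginx.bubble.com` and `check_ingress tomcat.bubble.com` should each print a status code from the matching backend, while a host name with no Ingress rule would normally be answered by the controller's default backend with 404.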