- Case 1: keepalived

- Case 2: keepalived + LVS cluster

1. Case 1: keepalived

1.1 Environment

A first look at keepalived: building a high-availability cluster of web servers.

Server1: 192.168.26.144

Server2: 192.168.26.169

VIP: 192.168.26.190

1.2 server1

Install keepalived (which creates the /etc/keepalived directory) and edit the configuration file:

# yum -y install keepalived

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

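router_id 1 # Identifier of this node within the group; it only needs to differ between nodes

}

#vrrp_script chk_nginx { # Health check

# script "/etc/keepalived/ck_ng.sh" # Check script

# interval 2 # Check interval, in seconds

# weight -5 # Lower the priority by 5

# fall 3 # After three consecutive failures

# }

vrrp_instance VI_1 { # VI_1: the instance name is the same on both nodes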

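state MASTER # MASTER or BACKUP state

interface ens32 # Interface to monitor

mcast_src_ip 192.168.26.144 # Source IP of the heartbeat packets

virtual_router_id 55 # Virtual router ID; must match on master and backup

priority 100 # Priority

advert_int 1 # Heartbeat interval, in seconds

authentication { # Password authentication (1-8 characters)

auth_type PASS

auth_pass 123456

}

virtual_ipaddress { # VIP

192.168.26.190/24

}

# track_script { # Reference the check script

# chk_nginx

# }

}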
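To see why the commented-out `weight -5` matters, it helps to work through the priority arithmetic. The following is a minimal sketch, assuming the usual keepalived behaviour that a failing tracked script with a negative weight lowers the node's priority by that amount. `effective_priority` is a hypothetical helper written for this illustration; it is not part of keepalived.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_FAILED
# Hypothetical helper: models only the negative-weight case used in this
# configuration. SCRIPT_FAILED is 1 when the tracked script is failing.
effective_priority() {
  local base=$1 weight=$2 failed=$3
  if [ "$failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
    # A failing script with a negative weight lowers the priority.
    echo $((base + weight))
  else
    echo "$base"
  fi
}
```

With the values used here, `effective_priority 100 -5 1` prints 95. That is below the priority of 99 given to the backup in section 1.3, so the backup would win the election once the check has failed.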

# scp -r /etc/keepalived/keepalived.conf 192.168.26.169:/etc/keepalived/

Copy the file to server2 with scp.

# systemctl enable keepalived.service

Enable keepalived at boot.

Install Nginx:

rpm -ivh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

yum -y install nginx

systemctl enable nginx.service

systemctl start nginx.service

vim /usr/share/nginx/html/index.html

systemctl start keepalived.service

1.3 server2

The BACKUP server's configuration needs a few changes:

# yum -y install keepalived

# vi /etc/keepalived/keepalived.conf

Change router_id 1 to router_id 2

Change state MASTER to state BACKUP

Change mcast_src_ip 192.168.26.144 to the backup server's actual IP: mcast_src_ip 192.168.26.169

Change priority 100 to priority 99

# systemctl enable keepalived.service
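The four changes listed above can also be applied mechanically instead of by hand. The following is a rough sketch, assuming the file was copied unchanged from server1. `make_backup_conf` is a hypothetical helper name; it reads the master configuration on standard input and writes the backup variant to standard output.

```shell
#!/bin/bash
# make_backup_conf BACKUP_IP
# Hypothetical helper: rewrites the four master-specific settings.
# Matching on the first field keeps virtual_router_id untouched.
make_backup_conf() {
  awk -v ip="$1" '
    $1 == "router_id"    { sub(/router_id[ \t]+1/, "router_id 2") }
    $1 == "state"        { sub(/MASTER/, "BACKUP") }
    $1 == "mcast_src_ip" { sub(/mcast_src_ip[ \t]+[0-9.]+/, "mcast_src_ip " ip) }
    $1 == "priority"     { sub(/priority[ \t]+100/, "priority 99") }
    { print }
  '
}
```

A possible invocation, with illustrative file names, is `make_backup_conf 192.168.26.169 < keepalived.conf.master > keepalived.conf.backup`. Write to a separate output file rather than redirecting onto the input.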

Install Nginx:

rpm -ivh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

yum -y install nginx

systemctl enable nginx.service

systemctl start nginx.service

vim /usr/share/nginx/html/index.html

systemctl start keepalived.service

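1.4 Testing access from a client

Access the VIP: http://192.168.26.190

Unplug the master's network cable.

Access the VIP http://192.168.26.190 again and observe that the page is now served by the backup.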
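The switchover is easier to watch from a terminal than from a browser. The sketch below polls the VIP once per interval and prints the first line of each response. It assumes `curl` is installed on the client and that each server's index.html starts with a line identifying that server. `fetch_page` and `poll_vip` are hypothetical helper names.

```shell
#!/bin/bash
# Fetch one page; fails (non-zero exit) if the VIP does not answer in 2 seconds.
fetch_page() {
  curl -s --max-time 2 "$1"
}

# poll_vip URL COUNT [INTERVAL_SECONDS]
# Prints "<n> OK <first line of page>" or "<n> FAIL" for each attempt.
poll_vip() {
  local url=$1 count=$2 interval=${3:-1}
  local i=1 body
  while [ "$i" -le "$count" ]; do
    if body=$(fetch_page "$url"); then
      printf '%s OK %s\n' "$i" "$(printf '%s\n' "$body" | head -n 1)"
    else
      printf '%s FAIL\n' "$i"
    fi
    sleep "$interval"
    i=$((i + 1))
  done
}
```

Running `poll_vip http://192.168.26.190 30` while unplugging the master's cable should show the responses change from server1's page to server2's, possibly with a few FAIL lines during the failover.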

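1.5 The problem of keepalived being unaware of the web service state

In the experiment above, if the nginx service on the master fails, users who access the VIP will still get errors.

The reason is that keepalived monitors the state of the interface IP; it cannot monitor the state of the nginx service.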

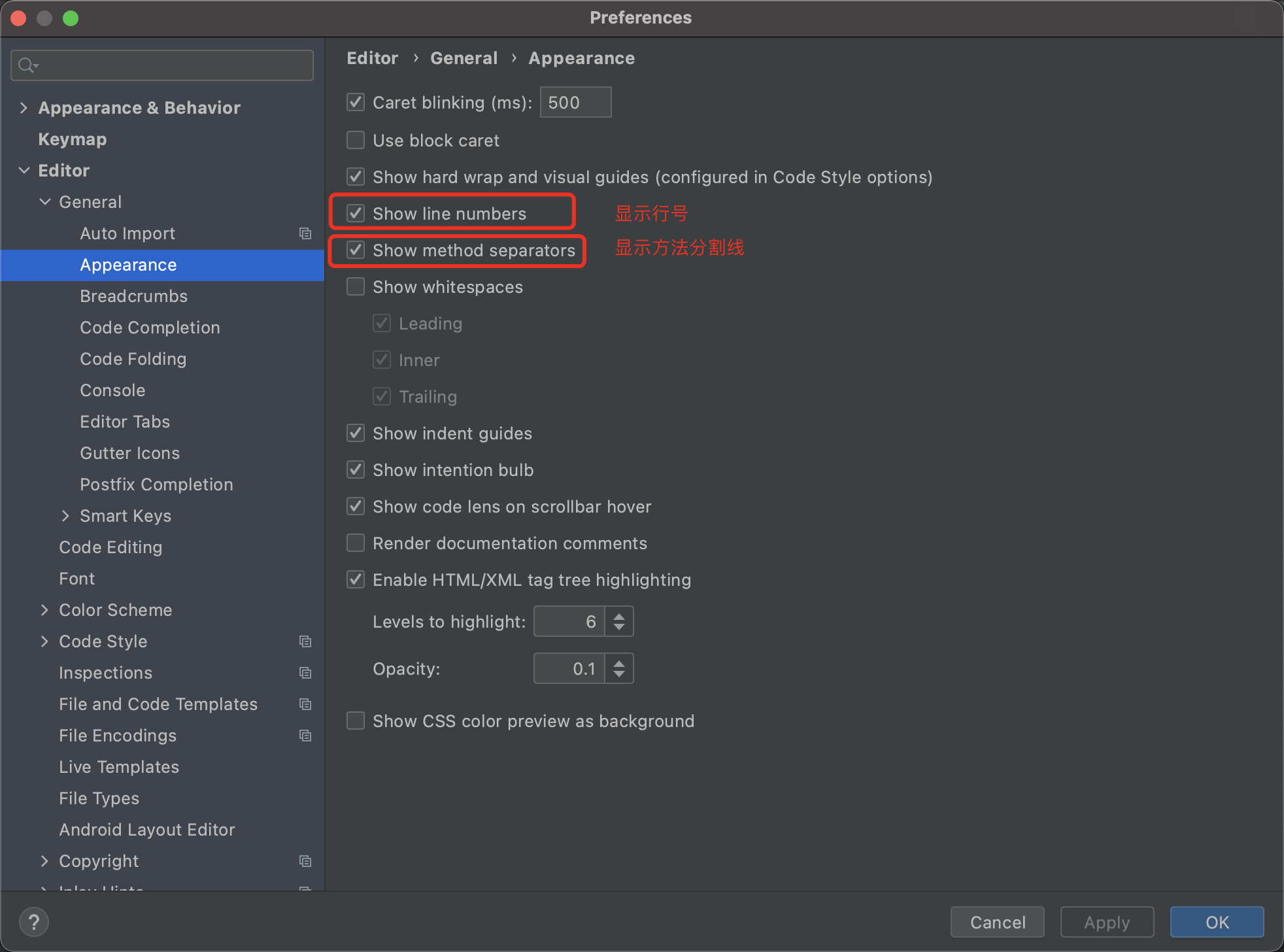

Write the monitoring script:

--server1: add the Nginx monitoring script:

# vim /etc/keepalived/ck_ng.sh

#!/bin/bash

# Check whether an nginx process exists

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# Try to start nginx once, wait 5 seconds, then check again

service nginx start

sleep 5

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# If the restart did not succeed, stop keepalived to trigger a master/backup failover

service keepalived stop

fi

fi

# chmod +x /etc/keepalived/ck_ng.sh

--server2: add the same Nginx monitoring script:

# vim /etc/keepalived/ck_ng.sh

#!/bin/bash

# Check whether an nginx process exists

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# Try to start nginx once, wait 5 seconds, then check again

service nginx start

sleep 5

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# If the restart did not succeed, stop keepalived to trigger a master/backup failover

service keepalived stop

fi

fi

# chmod +x /etc/keepalived/ck_ng.sh

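To enable the monitoring script, remove the comment markers from the vrrp_script and track_script blocks in the configuration file on both servers, then restart keepalived.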
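keepalived judges a vrrp_script by its exit code: 0 counts as success and anything else as failure. ck_ng.sh normally exits 0 and forces the failover by stopping keepalived itself, so the `weight -5` setting plays little role with it. As an alternative sketch (not the method used above), a check can simply report the nginx state through its exit code and leave the priority adjustment to keepalived. `count_procs` and `check_process` are hypothetical helper names.

```shell
#!/bin/bash
# Count processes with the given command name (same ps call as ck_ng.sh).
count_procs() {
  ps -C "$1" --no-heading | wc -l
}

# Return 0 if at least one such process exists, non-zero otherwise.
check_process() {
  [ "$(count_procs "$1")" -gt 0 ]
}

# As a check script, the final line would be:
#   check_process nginx
```

With a script of this shape, three consecutive failures (`fall 3`) would lower the master's priority by 5, and the VIP would move without keepalived being stopped.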

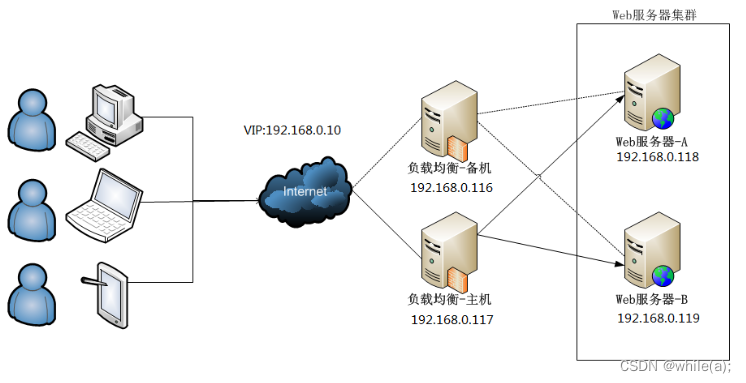

2. Case 2: keepalived + LVS cluster

2.1 Environment

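keepalived + LVS cluster

192.168.0.116 26.144 dr1 load balancer (master)

192.168.0.117 26.169 dr2 load balancer (backup)

192.168.0.118 26.165 rs1 web1

192.168.0.119 26.166 rs2 web2

The second column gives the last two octets of the 192.168.26.x addresses that the keepalived configuration in this case uses.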

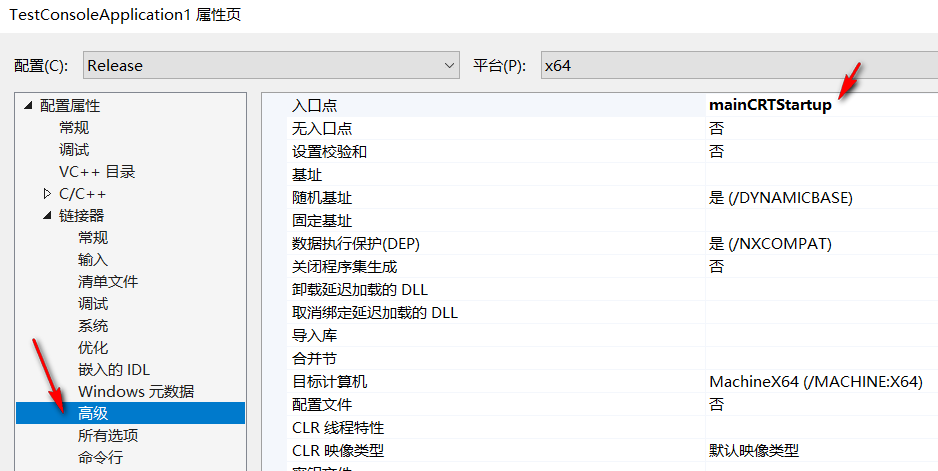

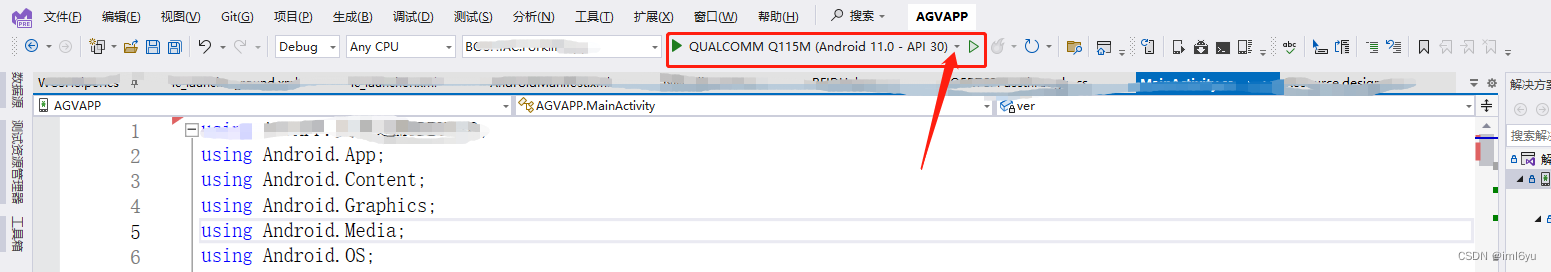

2.2 Install and configure Keepalived on the master:

# yum install keepalived ipvsadm -y

ipvsadm is only installed; it is not started.

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id Director1 # Must differ between the two nodes

}

vrrp_instance VI_1 {

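state MASTER # The other machine is BACKUP

interface ens33 # Heartbeat interface

virtual_router_id 51 # Virtual router ID; must match on master and backup

priority 150 # Priority

advert_int 1 # Advertisement interval, in seconds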

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.26.190/24 dev ens33 # VIP and the interface it is bound to

}

}

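virtual_server 192.168.26.190 80 { # LVS configuration for the VIP

delay_loop 3 # Polling interval: check real_server state every 3 seconds

lb_algo rr # LVS scheduling algorithm (round robin)

lb_kind DR # LVS forwarding mode (direct routing)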

protocol TCP

real_server 192.168.26.165 80 {

weight 1

TCP_CHECK {

connect_timeout 3 # TCP health check: connection timeout, in seconds

}

}

real_server 192.168.26.166 80 {

weight 1

TCP_CHECK {

connect_timeout 3

}

}

}
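It can help to relate the virtual_server block to the LVS rules it stands for. The sketch below only prints the `ipvsadm` commands that would be roughly equivalent to that block (round robin, direct routing, weight 1); it does not run them, and keepalived programs the kernel itself rather than calling these commands. `print_ipvs_rules` is a hypothetical helper name.

```shell
#!/bin/bash
# print_ipvs_rules VIP PORT REAL_SERVER...
# Prints (does not execute) ipvsadm commands for a TCP virtual service:
#   -A add service, -t TCP address, -s scheduler,
#   -a add real server, -r real server, -g direct routing, -w weight.
print_ipvs_rules() {
  local vip=$1 port=$2 rs
  shift 2
  echo "ipvsadm -A -t ${vip}:${port} -s rr"
  for rs in "$@"; do
    echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g -w 1"
  done
}
```

Calling `print_ipvs_rules 192.168.26.190 80 192.168.26.165 192.168.26.166` prints one service line and two real-server lines, which should correspond to what `ipvsadm -L` reports once keepalived is running.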

2.3 Install keepalived on the backup:

# yum install keepalived ipvsadm -y

ipvsadm is only installed; it is not started.

Copy keepalived.conf from the master to the backup:

# scp /etc/keepalived/keepalived.conf 192.168.26.169:/etc/keepalived/

After copying, change these settings in the configuration file on the backup:

router_id Director2

state BACKUP

priority 100

2.4 Start the service on the master and the backup:

# systemctl enable keepalived

# systemctl start keepalived

# reboot
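Once keepalived is running on both directors, it is worth confirming which node currently holds the VIP. The sketch below is a small filter over the one-line output of `ip -o -4 addr show`, where the fourth field is the address with its prefix length. `holds_vip` is a hypothetical helper name.

```shell
#!/bin/bash
# holds_vip VIP
# Reads `ip -o -4 addr show` output on stdin; exits 0 if VIP is present.
holds_vip() {
  awk -v vip="$1" '
    { split($4, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }
  '
}

# Example use on a director:
#   ip -o -4 addr show | holds_vip 192.168.26.190 && echo "this node holds the VIP"
```

Under normal conditions the check should succeed only on the master, and only on the backup after a failover.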

2.5 Web server configuration

web1 and web2 are configured identically.

Install a test web site:

yum install -y httpd && systemctl start httpd && systemctl enable httpd

netstat -antp | grep httpd

# elinks 127.0.0.1

Customize the web home page on each server so that the load-balancing result is easy to observe.

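Configure the virtual address:

#cp /etc/sysconfig/network-scripts/{ifcfg-lo,ifcfg-lo:0}

#vim /etc/sysconfig/network-scripts/ifcfg-lo:0

DEVICE=lo:0

IPADDR=192.168.26.190

NETMASK=255.255.255.255

ONBOOT=yes

Comment out the other lines.

Configure the route:

#vim /etc/rc.local

/sbin/route add -host 192.168.26.190 dev lo:0

On both real servers (RS), add a host route: route add -host 192.168.26.190 dev lo:0

This ensures that when a request's destination IP is $VIP, the outgoing reply packets also carry $VIP as their source address.

Configure ARP: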

# vim /etc/sysctl.conf

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_ignore = 1

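net.ipv4.conf.lo.arp_announce = 2

arp_ignore = 1 makes a real server reply to an ARP request only when the requested IP is configured on the interface that received the request, so it does not answer ARP for the VIP held on lo. arp_announce = 2 makes it use the best local address of the outgoing interface, rather than the VIP, as the source of its own ARP requests.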

# reboot
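The six ARP settings follow one pattern across the `all`, `default`, and `lo` scopes, so they can be generated rather than typed. This is a minimal sketch; `arp_sysctl_lines` is a hypothetical helper name.

```shell
#!/bin/bash
# Print the arp_ignore / arp_announce settings for each scope,
# in the same order as in the listing above.
arp_sysctl_lines() {
  local scope
  for scope in all default lo; do
    echo "net.ipv4.conf.${scope}.arp_ignore = 1"
    echo "net.ipv4.conf.${scope}.arp_announce = 2"
  done
}

# Example use on a real server (as root):
#   arp_sysctl_lines >> /etc/sysctl.conf
#   sysctl -p    # load /etc/sysctl.conf into the running kernel
```

Loading the file with `sysctl -p` applies the settings to the running kernel, which may make a reboot unnecessary for this particular step.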

2.6 Testing:

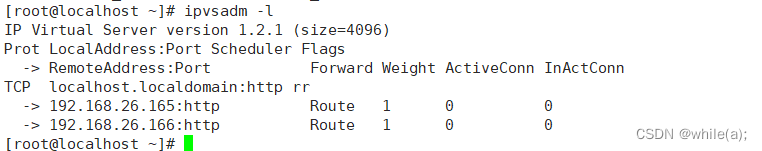

1) Inspect the LVS routing entries. On the master, run: # ipvsadm -L

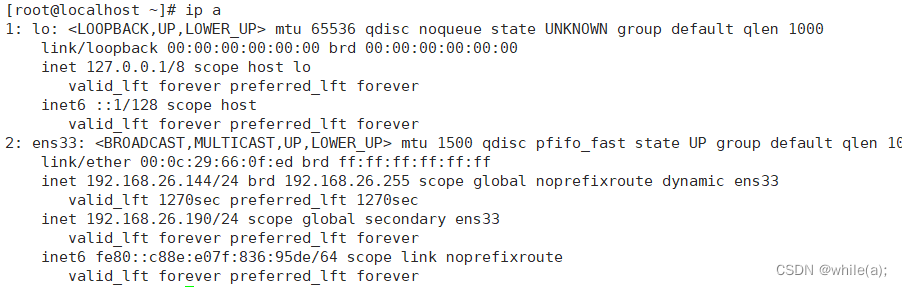

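2) Check which machine holds the VIP. On the master, run: # ip a

3) Access the VIP from a client browser

4) Stop the keepalived service on the master, then access the VIP again

On the master: # systemctl stop keepalived.service

5) Stop the web service on web1, then access the VIP again

On web1: # systemctl stop httpd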
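To know what to expect in steps 3 and 5, the round-robin scheduler (`lb_algo rr`) can be simulated. This is a simplified sketch, assuming equal weights and one new connection per request; a browser that reuses connections may keep hitting the same server. `rr_schedule` is a hypothetical helper name.

```shell
#!/bin/bash
# rr_schedule COUNT SERVER...
# Prints which server would handle each of COUNT successive connections.
rr_schedule() {
  local n=$1 i=0
  shift
  while [ "$i" -lt "$n" ]; do
    echo "$1"
    # Rotate the server list: move the first server to the end.
    set -- "$@" "$1"
    shift
    i=$((i + 1))
  done
}
```

`rr_schedule 4 web1 web2` prints web1, web2, web1, web2 on separate lines. After web1 is stopped in step 5, the health check should remove it from the pool, which corresponds to `rr_schedule 4 web2` printing web2 four times.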