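The code was adapted from PP-HumanSeg-opencv-onnxrun-main. It runs, but it is still quite messy, so for now here is a brief record of the workflow:

- yolov5 environment setup

- yolov5 network structure

- Exporting yolov5 to ONNX: convert error, `--grid`

- Extra: operating on ONNX models directly

- Extra: retraining yolov5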

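Result: console output of the row dump in the code below. Each candidate that passes the objectness threshold prints 85 values: 0-3 are the box center x, center y, width and height in 640×640 input pixels, 4 is the objectness, and 5-84 are the 80 class scores. The log contains four such rows.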

```
0 -- 113.976
1 -- 383.632
2 -- 140.986
3 -- 297.704
4 -- 0.87444
5 -- 0.993708
6 -- 0.000249922
7 -- 0.00041762
8 -- 0.000336945
9 -- 0.000183225
10 -- 0.000236392
11 -- 0.000252217
12 -- 0.000286639
13 -- 0.000230312
14 -- 0.000145376
15 -- 0.000180185
16 -- 0.000151902
17 -- 0.000155926
18 -- 0.000561118
19 -- 0.000221789
20 -- 0.000266582
21 -- 0.000435024
22 -- 0.000301957
23 -- 0.000156939
24 -- 0.000179291
25 -- 0.000255942
26 -- 0.000148356
27 -- 0.000186324
28 -- 0.00023368
29 -- 0.00123373
30 -- 0.000373274
31 -- 0.00248933
32 -- 0.000756234
33 -- 0.000238359
34 -- 0.000234783
35 -- 0.000333279
36 -- 0.000290543
37 -- 0.000204235
38 -- 0.000202298
39 -- 0.000454634
40 -- 0.000459939
41 -- 0.000307143
42 -- 0.000316918
43 -- 0.000477612
44 -- 0.000311136
45 -- 0.000257641
46 -- 0.000291973
47 -- 0.000221223
48 -- 0.000214607
49 -- 0.000198811
50 -- 0.000251591
51 -- 0.000255287
52 -- 0.000200838
53 -- 0.000250727
54 -- 0.000186563
55 -- 0.000201792
56 -- 0.000209004
57 -- 0.000218391
58 -- 0.000272989
59 -- 0.000251412
60 -- 0.000250071
61 -- 0.000756621
62 -- 0.000512242
63 -- 0.000280768
64 -- 0.000362903
65 -- 0.000526428
66 -- 0.000219613
67 -- 0.000243217
68 -- 0.000364214
69 -- 0.000135869
70 -- 0.000272751
71 -- 0.000153422
72 -- 0.000350058
73 -- 0.000167906
74 -- 0.000253022
75 -- 0.00013271
76 -- 0.000194073
77 -- 0.00031364
78 -- 0.000282824
79 -- 0.000165254
80 -- 0.000147492
81 -- 0.000219882
82 -- 0.000352472
83 -- 0.00016591
84 -- 0.000219762
(aaa:36911): Gtk-WARNING **: 21:41:29.113: Unable to locate theme engine in module_path: "adwaita",

0 -- 224.609
1 -- 375.408
2 -- 97.513
3 -- 271.702
4 -- 0.876093
5 -- 0.953948
6 -- 0.000538558
7 -- 0.00474876
8 -- 0.000694662
9 -- 0.000413418
10 -- 0.00379217
11 -- 0.00127783
12 -- 0.00398019
13 -- 0.000409782
14 -- 0.000208467
15 -- 0.000252515
16 -- 0.000275165
17 -- 0.000377715
18 -- 0.00103733
19 -- 0.000248075
20 -- 0.000384241
21 -- 0.000660151
22 -- 0.000630319
23 -- 0.000220686
24 -- 0.000251234
25 -- 0.00030458
26 -- 0.000180662
27 -- 0.000222683
28 -- 0.000281215
29 -- 0.00271425
30 -- 0.000729769
31 -- 0.013637
32 -- 0.000787467
33 -- 0.000634134
34 -- 0.000276208
35 -- 0.00032416
36 -- 0.00035271
37 -- 0.000233561
38 -- 0.000242144
39 -- 0.000500679
40 -- 0.000482202
41 -- 0.000408322
42 -- 0.000535399
43 -- 0.000550061
44 -- 0.000435442
45 -- 0.000299573
46 -- 0.000428587
47 -- 0.000256538
48 -- 0.000239044
49 -- 0.000241041
50 -- 0.000334561
51 -- 0.000299394
52 -- 0.000211567
53 -- 0.000269055
54 -- 0.000210404
55 -- 0.00021863
56 -- 0.000231981
57 -- 0.000238657
58 -- 0.000286818
59 -- 0.000255346
60 -- 0.00029242
61 -- 0.0012323
62 -- 0.000676692
63 -- 0.00039801
64 -- 0.000477135
65 -- 0.000792891
66 -- 0.000286341
67 -- 0.000348002
68 -- 0.000536263
69 -- 0.000176817
70 -- 0.000303745
71 -- 0.000202596
72 -- 0.00048095
73 -- 0.000222802
74 -- 0.000344068
75 -- 0.000235379
76 -- 0.000235349
77 -- 0.000523388
78 -- 0.000439644
79 -- 0.000218719
80 -- 0.000209481
81 -- 0.000268012
82 -- 0.000414878
83 -- 0.000200689
84 -- 0.000240803

0 -- 224.071
1 -- 374.789
2 -- 98.1175
3 -- 270.575
4 -- 0.875973
5 -- 0.958642
6 -- 0.000498533
7 -- 0.00610068
8 -- 0.000723332
9 -- 0.00037539
10 -- 0.00140604
11 -- 0.000572115
12 -- 0.00359043
13 -- 0.000359237
14 -- 0.000208646
15 -- 0.000284642
16 -- 0.000263721
17 -- 0.000354379
18 -- 0.000932723
19 -- 0.000252038
20 -- 0.000384927
21 -- 0.000629812
22 -- 0.000542492
23 -- 0.000220001
24 -- 0.00026685
25 -- 0.00031507
26 -- 0.000189811
27 -- 0.000228554
28 -- 0.000284404
29 -- 0.00209638
30 -- 0.000678867
31 -- 0.00786635
32 -- 0.000743687
33 -- 0.000642598
34 -- 0.000291318
35 -- 0.000312328
36 -- 0.000366569
37 -- 0.000236213
38 -- 0.000247508
39 -- 0.000485033
40 -- 0.000455916
41 -- 0.000428438
42 -- 0.00050813
43 -- 0.000516742
44 -- 0.000414431
45 -- 0.00028041
46 -- 0.000399381
47 -- 0.000241667
48 -- 0.000232786
49 -- 0.000225753
50 -- 0.000307888
51 -- 0.000315517
52 -- 0.000210494
53 -- 0.000268251
54 -- 0.000218302
55 -- 0.000203103
56 -- 0.00023222
57 -- 0.000231177
58 -- 0.000268996
59 -- 0.000277966
60 -- 0.00028941
61 -- 0.00117946
62 -- 0.000705361
63 -- 0.000389308
64 -- 0.000481099
65 -- 0.000689566
66 -- 0.000304729
67 -- 0.00037083
68 -- 0.000513971
69 -- 0.000180334
70 -- 0.000300795
71 -- 0.000200182
72 -- 0.000429869
73 -- 0.000226945
74 -- 0.000321835
75 -- 0.000218093
76 -- 0.000252604
77 -- 0.000460982
78 -- 0.000396043
79 -- 0.000221908
80 -- 0.000228763
81 -- 0.000256807
82 -- 0.000423104
83 -- 0.000196427
84 -- 0.000239581

0 -- 586.69
1 -- 379.493
2 -- 104.643
3 -- 279.054
4 -- 0.868859
5 -- 0.987435
6 -- 0.000355512
7 -- 0.00129452
8 -- 0.000545174
9 -- 0.00024873
10 -- 0.000355482
11 -- 0.000315189
12 -- 0.00093025
13 -- 0.000336021
14 -- 0.000175387
15 -- 0.000271171
16 -- 0.000208765
17 -- 0.000219077
18 -- 0.000818074
19 -- 0.000277936
20 -- 0.000341684
21 -- 0.000608295
22 -- 0.000439823
23 -- 0.00022161
24 -- 0.000257909
25 -- 0.000391006
26 -- 0.000211388
27 -- 0.00024271
28 -- 0.000289857
29 -- 0.00133273
30 -- 0.000453919
31 -- 0.0022139
32 -- 0.000918448
33 -- 0.000335097
34 -- 0.000290811
35 -- 0.000400156
36 -- 0.000362962
37 -- 0.000270098
38 -- 0.000275284
39 -- 0.000576645
40 -- 0.000526071
41 -- 0.000414044
42 -- 0.000414848
43 -- 0.000615329
44 -- 0.00037384
45 -- 0.00032717
46 -- 0.000423133
47 -- 0.000284851
48 -- 0.000276983
49 -- 0.000260025
50 -- 0.00032714
51 -- 0.000324607
52 -- 0.000254303
53 -- 0.000293702
54 -- 0.000264376
55 -- 0.000263631
56 -- 0.000260115
57 -- 0.000269145
58 -- 0.000347644
59 -- 0.000326723
60 -- 0.000309974
61 -- 0.00117621
62 -- 0.000716358
63 -- 0.000375539
64 -- 0.000435114
65 -- 0.000769645
66 -- 0.000286758
67 -- 0.000340611
68 -- 0.000475556
69 -- 0.000173241
70 -- 0.000347823
71 -- 0.000204802
72 -- 0.000439256
73 -- 0.000219136
74 -- 0.000330031
75 -- 0.000153482
76 -- 0.000243932
77 -- 0.000446111
78 -- 0.000341684
79 -- 0.000207216
80 -- 0.000213176
81 -- 0.000275582
82 -- 0.000530362
83 -- 0.000198811
84 -- 0.000284791
```
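Each dumped row can be turned into a single detection the way YOLOv5's own `non_max_suppression` scores candidates: confidence = objectness × best class score, class = argmax over indices 5-84, and the box converted from center/size to corners. A minimal sketch under those assumptions (`Detection` and `decode_row` are hypothetical names, not from the post), checked against the first row of the log above:

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical result type for one decoded YOLOv5 candidate.
struct Detection {
    float x1, y1, x2, y2;  // box corners, same pixel space as cx/cy/w/h
    float score;           // objectness * best class score
    int class_id;          // index of the best class (0-based, after the 5 box/obj values)
};

// Decode one row laid out as [cx, cy, w, h, objectness, class scores...].
Detection decode_row(const std::vector<float>& row) {
    assert(row.size() > 5);  // needs at least one class score
    auto first_cls = row.begin() + 5;
    auto best = std::max_element(first_cls, row.end());
    Detection d;
    d.class_id = static_cast<int>(best - first_cls);
    d.score = row[4] * *best;          // conf = obj_conf * cls_conf
    d.x1 = row[0] - 0.5f * row[2];     // center/size -> corners
    d.y1 = row[1] - 0.5f * row[3];
    d.x2 = row[0] + 0.5f * row[2];
    d.y2 = row[1] + 0.5f * row[3];
    return d;
}
```

For that first row, class 0 wins with a score of about 0.869 (0.87444 × 0.993708); if best.onnx keeps the 80 COCO classes, class 0 is `person`.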

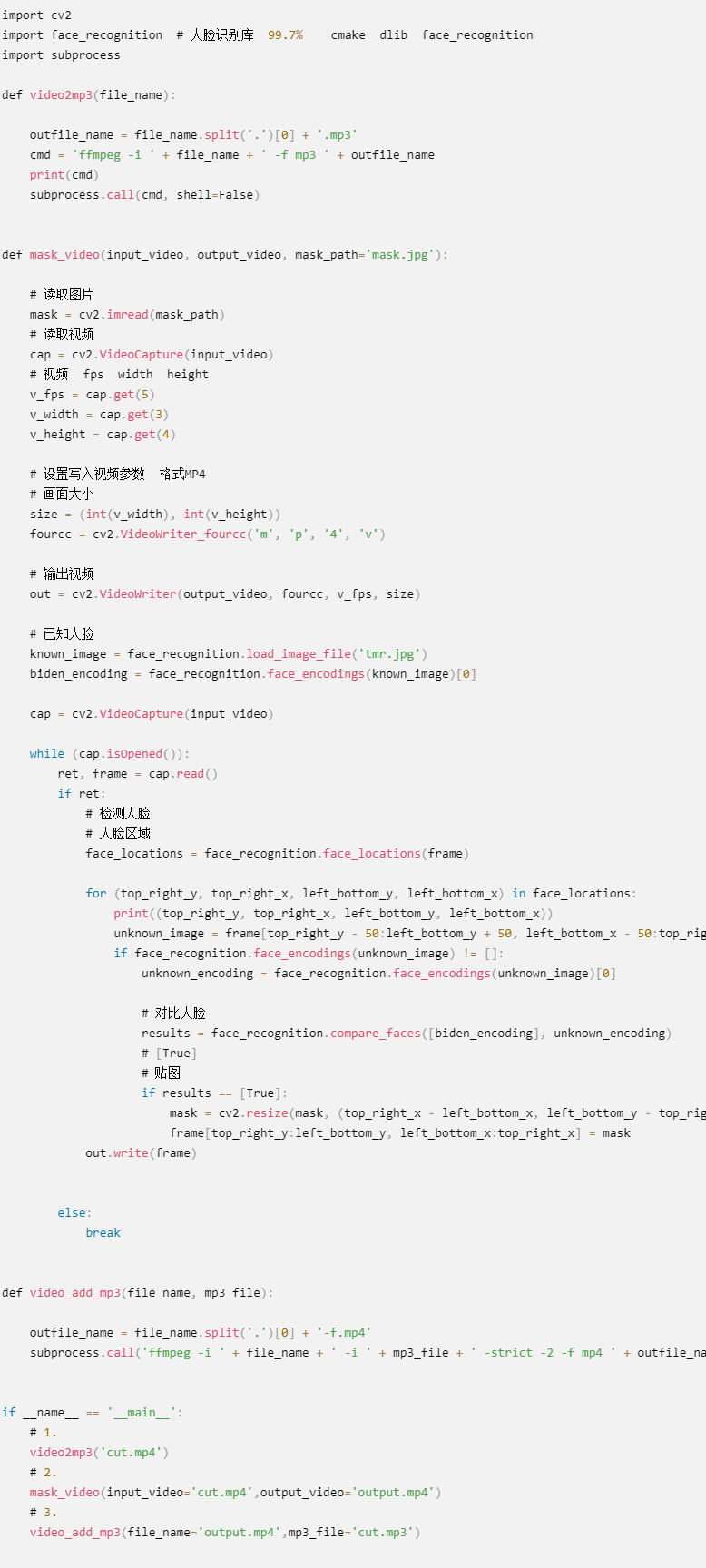

code

//#include <iostream>

//

//int main() {

// std::cout << "Hello, World!" << std::endl;

// return 0;

//}

//PP-HumanSeg-opencv-onnxrun-main

#define _CRT_SECURE_NO_WARNINGS

#include <iostream>

#include <fstream>

#include <string>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <onnxruntime_cxx_api.h>

//#include <cuda_provider_factory.h> ///如果使用cuda加速,需要取消注释

#include <tensorrt_provider_factory.h> ///如果使用cuda加速,需要取消注释

#include <chrono>

using namespace cv;

using namespace std;

using namespace Ort;

class pphuman_seg

{

public:

pphuman_seg();

Mat inference(Mat cv_image);

private:

void preprocess(Mat srcimg);

void normalize_(Mat srcimg);

int inpWidth;

int inpHeight;

vector<float> input_image_;

const float conf_threshold = 0.5;

Env env = Env(ORT_LOGGING_LEVEL_ERROR, "pphuman-seg");

Ort::Session *ort_session = nullptr;

SessionOptions sessionOptions = SessionOptions();

vector<char*> input_names;

vector<char*> output_names;

vector<vector<int64_t>> input_node_dims; // >=1 outputs

vector<vector<int64_t>> output_node_dims; // >=1 outputs

};

pphuman_seg::pphuman_seg()

{

//string model_path = "../model_float32.onnx";

string model_path = "/home/pdd/Documents/yolov5-5.0/best.onnx";

//string model_path = "/home/pdd/CLionProjects/yolov5-v6.1-opencv-onnxrun-main/opencv/yolov5s.onnx";

//std::wstring widestr = std::wstring(model_path.begin(), model_path.end()); windows写法

OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0); ///如果使用cuda加速,需要取消注释

//SessionOptionsAppendExecutionProvider_CUDA

sessionOptions.SetGraphOptimizationLevel(ORT_ENABLE_BASIC);

//ort_session = new Session(env, widestr.c_str(), sessionOptions); windows写法

ort_session = new Session(env, model_path.c_str(), sessionOptions); //linux写法

size_t numInputNodes = ort_session->GetInputCount();

size_t numOutputNodes = ort_session->GetOutputCount();

AllocatorWithDefaultOptions allocator;

for (int i = 0; i < numInputNodes; i++)

{

input_names.push_back(ort_session->GetInputName(i, allocator));

Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);

auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();

auto input_dims = input_tensor_info.GetShape();

input_node_dims.push_back(input_dims);

}

for (int i = 0; i < numOutputNodes; i++)

{

output_names.push_back(ort_session->GetOutputName(i, allocator));

Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);

auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();

auto output_dims = output_tensor_info.GetShape();

output_node_dims.push_back(output_dims);

}

this->inpHeight = input_node_dims[0][2];

this->inpWidth = input_node_dims[0][3];

}

void pphuman_seg::preprocess(Mat srcimg)

{

Mat dstimg;

resize(srcimg, dstimg, Size(this->inpWidth, this->inpHeight), INTER_LINEAR);

int row = dstimg.rows;

int col = dstimg.cols;

this->input_image_.resize(row * col * dstimg.channels());

for (int c = 0; c < 3; c++)

{

for (int i = 0; i < row; i++)

{

for (int j = 0; j < col; j++)

{

float pix = dstimg.ptr<uchar>(i)[j * 3 + c];// uchar* data = image.ptr<uchar>(i); https://blog.csdn.net/xyu66/article/details/79929871 https://blog.csdn.net/HWWH520/article/details/124941723

this->input_image_[c * row * col + i * col + j] = pix/ 255.0; //(pix / 255.0 - 0.5) / 0.5;// todo (pix / 255.0 - 0.5) / 0.5;

}

}

}

}

void pphuman_seg::normalize_(Mat img)// cv::Mat blob= blobFromImage(srcimg,1/255.0,cv::Size(640,640), cv::Scalar(0, 0, 0), true, false);

{

// img.convertTo(img, CV_32F);

int row = img.rows;

int col = img.cols;

this->input_image_.resize(row * col * img.channels());

for (int c = 0; c < 3; c++)

{

for (int i = 0; i < row; i++)

{

for (int j = 0; j < col; j++)

{

float pix = img.ptr<uchar>(i)[j * 3 + 2 - c];// float pix = img.ptr<uchar>(i)[j * 3 + 2 - c];

this->input_image_[c * row * col + i * col + j] = pix / 255.0;

}

}

}

}

Mat pphuman_seg::inference(Mat srcimg)

{

Mat show(srcimg);

Mat shrink;

Size dsize = Size(640, 640);

resize(show, shrink, dsize, 0, 0, INTER_AREA);//https://blog.csdn.net/JiangTao2333/article/details/122591317

// string kWinName = "123";

// namedWindow(kWinName, WINDOW_NORMAL);

// imshow(kWinName, shrink);

// waitKey(0);

// destroyAllWindows();

this->preprocess(srcimg);//this->normalize_(srcimg);//

array<int64_t, 4> input_shape_{1, 3, this->inpHeight, this->inpWidth};

auto allocator_info = MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);

Value input_tensor_ = Value::CreateTensor<float>(allocator_info, input_image_.data(), input_image_.size(), input_shape_.data(), input_shape_.size());

vector<Value> ort_outputs = ort_session->Run(RunOptions{ nullptr }, input_names.data(), &input_tensor_, 1, output_names.data(), output_names.size()); // 开始推理

// post process.

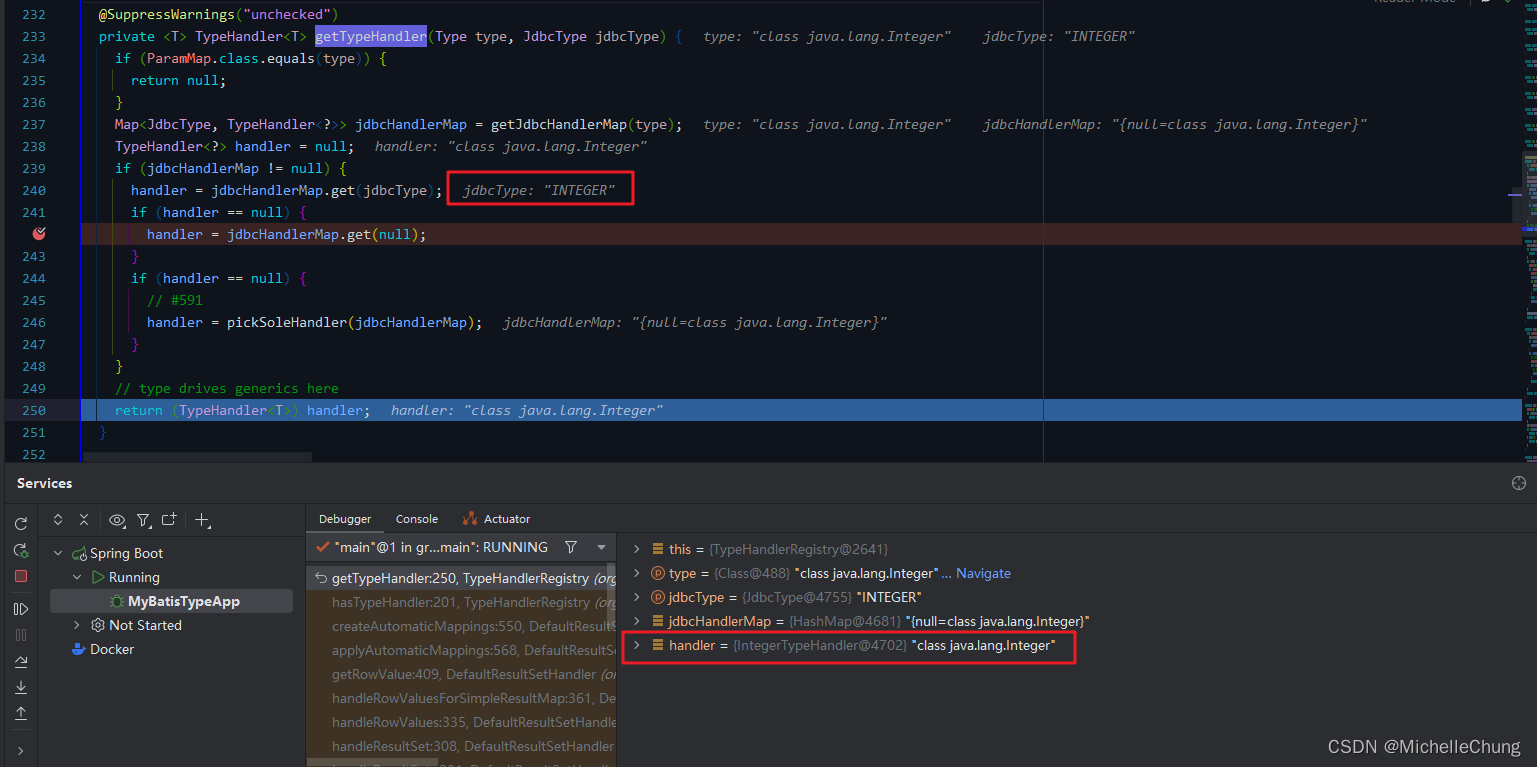

Value &mask_pred = ort_outputs.at(0);

const int out_h = this->output_node_dims[0][1];

const int out_w = this->output_node_dims[0][2];

float *mask_ptr = mask_pred.GetTensorMutableData<float>();

Mat segmentation_map;

Mat mask_out(out_h, out_w, CV_32FC2, mask_ptr);// mask_ptr 拷贝初始化

Mat M_out(out_h, out_w, CV_32FC1, mask_ptr);// mask_ptr 拷贝初始化

int numOfRow = M_out.cols;

//訪问像素

for (int row = 0; row < M_out.rows; row++)

{

float *data = M_out.ptr<float>(row);//获得该行的地址 //cv::Vec3b *data = mask_out.ptr<cv::Vec3b>(row);

if(data[4]>0.2){

//訪问该行元素

for (int col = 0; col < numOfRow; col++)

{ //data[col][0] = 0; data[col][2] = 255;

std::cout << col << " -- "<< data[col] << std::endl;

}

// int xmin = int(data[0]);

// int ymin = int(data[1]);

float ratiow =1, ratioh =1;

float w = data[2]; ///w

float h = data[3]; ///h

float xmin = (data[0] - 0.5 * w)*ratiow;

float ymin = (data[1] - 0.5 * h)*ratioh;

float xmax = (data[0] + 0.5 * w)*ratiow;

float ymax = (data[1] + 0.5 * h)*ratioh;

rectangle(shrink, Point(int(xmin), int(ymin)), Point(int(xmax), int(ymax)), Scalar(0, 0, 255), 2);

// string kWinName = "123";

// namedWindow(kWinName, WINDOW_NORMAL);

// imshow(kWinName, shrink);

// waitKey(0);

//destroyAllWindows();

}else{

cout<< "omit" << data[4]<<endl;

}

}

return shrink;

// resize(mask_out, segmentation_map, Size(srcimg.cols, srcimg.rows));

// Mat dstimg = srcimg.clone();

//

// for (int h = 0; h < srcimg.rows; h++)

// {

// for (int w = 0; w < srcimg.cols; w++)

// {

// float pix = segmentation_map.ptr<float>(h)[w * 2];

// if (pix > this->conf_threshold)//

// {

// float b = (float)srcimg.at<Vec3b>(h, w)[0];

// dstimg.at<Vec3b>(h, w)[0] = uchar(b * 0.5 + 1);

// float g = (float)srcimg.at<Vec3b>(h, w)[1];

// dstimg.at<Vec3b>(h, w)[1] = uchar(g * 0.5 + 1);

// float r = (float)srcimg.at<Vec3b>(h, w)[2];

// dstimg.at<Vec3b>(h, w)[2] = uchar(r * 0.5 + 1);

// }

// }

// }

//

// for (int h = 0; h < srcimg.rows; h++)

// {

// for (int w = 0; w < srcimg.cols; w++)

// {

// float pix = segmentation_map.ptr<float>(h)[w * 2 + 1];

// if (pix > this->conf_threshold)

// {

// float b = (float)dstimg.at<Vec3b>(h, w)[0];

// dstimg.at<Vec3b>(h, w)[0] = uchar(b * 0.5 + 1);

// float g = (float)dstimg.at<Vec3b>(h, w)[1] ;//

// dstimg.at<Vec3b>(h, w)[1] = uchar(g * 0.5 + 1);

// float r = (float)dstimg.at<Vec3b>(h, w)[2]+ 255.0;

// dstimg.at<Vec3b>(h, w)[2] = uchar(r * 0.5 + 1);

// }

// }

// }

// return dstimg;

}

int main()

{

//return 0;

const int use_video = 1;

pphuman_seg mynet;

if (use_video)

{

//cv::VideoCapture video_capture("/home/pdd/Downloads/davide_quatela--breathing_barcelona.mp4"); ///也可以是视频文件

cv::VideoCapture video_capture(0); ///也可以是视频文件

if (!video_capture.isOpened())

{

std::cout << "Can not open video " << endl;

return -1;

}

cv::Mat frame;

while (video_capture.read(frame))

{

//std::chrono::time_point<std::chrono::steady_clock>

std::chrono::time_point<std::chrono::high_resolution_clock> start,end;

std::chrono::duration<float> duration;

start = std::chrono::high_resolution_clock::now();

Mat dstimg = mynet.inference(frame);

end = std::chrono::high_resolution_clock::now();

duration = end -start;

float ms = duration.count() *1000.0f ;

std::cout<< "Time took" << ms << "ms"<<std::endl;

string kWinName = "Deep learning ONNXRuntime with pphuman seg";

namedWindow(kWinName, WINDOW_NORMAL);

imshow(kWinName, dstimg);

waitKey(1);

}

destroyAllWindows();

}

else

{

string imgpath = "/home/pdd/Documents/yolov5-5.0/data/images/a.jpg";

Mat srcimg = imread(imgpath);

Mat dstimg = mynet.inference(srcimg);

namedWindow("srcimg", WINDOW_NORMAL);

imshow("srcimg", srcimg);

static const string kWinName = "Deep learning ONNXRuntime with pphuman seg";

namedWindow(kWinName, WINDOW_NORMAL);

imshow(kWinName, dstimg);

waitKey(0);

destroyAllWindows();

}

}
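The `inference()` code draws every candidate whose objectness exceeds 0.2 and applies no non-maximum suppression, so one object usually ends up with several overlapping boxes. Below is a minimal, class-agnostic greedy NMS sketch in plain C++ (`Box`, `iou` and `nms` are hypothetical names, not from the post). YOLOv5's own `non_max_suppression` additionally offsets boxes per class so different classes do not suppress each other; this sketch ignores that. If the OpenCV dnn module is available, `cv::dnn::NMSBoxes` is an existing alternative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical box type: corners in pixels plus a confidence score.
struct Box {
    float x1, y1, x2, y2;
    float score;
};

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
    float ix = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    float iy = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    float inter = ix * iy;
    float uni = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: take the highest-scoring remaining box, suppress every other box whose
// IoU with it exceeds iou_threshold, repeat. Returns kept indices, best first.
std::vector<std::size_t> nms(const std::vector<Box>& boxes, float iou_threshold) {
    assert(iou_threshold >= 0.0f && iou_threshold <= 1.0f);
    std::vector<std::size_t> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](std::size_t i, std::size_t j) { return boxes[i].score > boxes[j].score; });
    std::vector<bool> suppressed(boxes.size(), false);
    std::vector<std::size_t> keep;
    for (std::size_t a = 0; a < order.size(); ++a) {
        std::size_t i = order[a];
        if (suppressed[i]) continue;
        keep.push_back(i);
        for (std::size_t b = a + 1; b < order.size(); ++b) {
            std::size_t j = order[b];
            if (!suppressed[j] && iou(boxes[i], boxes[j]) > iou_threshold) suppressed[j] = true;
        }
    }
    return keep;
}
```

The rows from `inference()` would be converted to `Box` (corners plus objectness × class score) and passed through `nms`, for example with 0.45, the default `--iou-thres` of YOLOv5's `detect.py`, before drawing.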

CG

```cpp
Mat M_out(out_h, out_w, CV_32FC1, mask_ptr); // wraps mask_ptr, no copy
int numOfRow = M_out.cols;                   // number of elements per row
// Access the pixels
for (int row = 0; row < M_out.rows; row++)
{
    float *data = M_out.ptr<float>(row); // address of this row (3-channel 8-bit: cv::Vec3b *data = mask_out.ptr<cv::Vec3b>(row);)
    // Access the elements of this row
    for (int col = 0; col < numOfRow; col++)
    {
        std::cout << col << " -- " << data[col] << std::endl;
    }
}
```

```cpp
// Two-channel view of the same buffer, no copy. Only valid if the output really holds
// 2 values per element (as in the PP-HumanSeg mask); for an [N, 85] YOLOv5 output it
// reads past the end of the buffer.
Mat mask_out(out_h, out_w, CV_32FC2, mask_ptr);
//Mat mask_out(out_h, out_w, CV_32FC1, mask_ptr);
// Number of elements per row
int numOfRow = mask_out.cols;
// Access the pixels
for (int row = 0; row < mask_out.rows; row++)
{
    cv::Vec2f *data = mask_out.ptr<cv::Vec2f>(row); // address of this row
    // Access the elements of this row
    for (int col = 0; col < numOfRow; col++)
    {
        std::cout << data[col] << std::endl;
    }
}
```

- yolov5-face post-processing: https://mp.weixin.qq.com/s?__biz=MzU0NjgzMDIxMQ==&mid=2247593379&idx=2&sn=7cd3b7517336ccd2a208b92a5221cac9&chksm=fb548f4fcc2306597be4438aa6dc60c5b69746520b4ca26b63a3e0bc85c5cd3cf19cfdd077f8&scene=27

- Post-processing code: https://github.com/shaoshengsong/DeepSORT/blob/master/detector/YOLOv5/src/YOLOv5Detector.cpp

- Warning during the ONNX export:

  ```
  TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
    if self.grid[i].shape[2:4] != x[i].shape[2:4]:
  ```

  The `# wh` line in `Detect.forward` (`models/yolo.py`) was changed as follows (original kept as a comment):

  ```python
  # y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i]  # wh
  y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * torch.tensor(self.anchor_grid[i].tolist()).float()  # wh
  ```

- Error when loading the exported model with ONNX Runtime:

  ```
  terminate called after throwing an instance of 'Ort::Exception'
    what(): Load model from /home/pdd/Documents/yolov5-5.0/weights/yolov5s.onnx failed:Node (Mul_925) Op (Mul) [ShapeInferenceError] Incompatible dimensions
  ```
