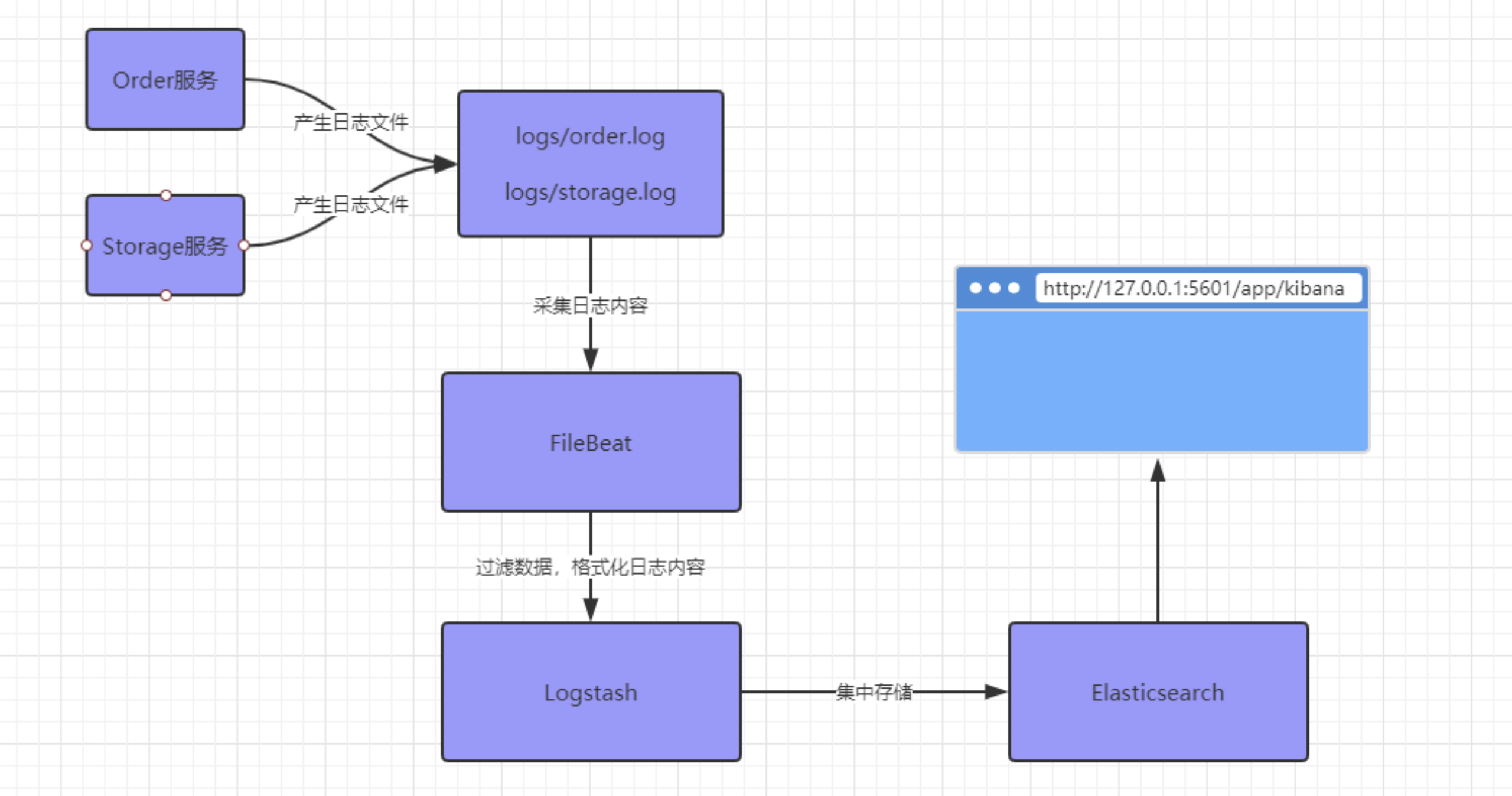

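1. Background

Logging in our projects is currently rather disorganized, so we recommend collecting and displaying the logs with the widely used ELK stack (Elasticsearch, Logstash, Kibana), with Filebeat shipping the log files.

2. Download, configuration and startup

Download Filebeat, Logstash, Elasticsearch and Kibana from the Elastic website (the "Download Elastic Products | Elastic" page). All four must be the same version. I tested with the 7.14 macOS builds; production uses the 7.14 Linux builds.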

2.1 Filebeat:

```shell
tar -zxvf filebeat-7.14.0-darwin-x86_64.tar.gz
cd filebeat-7.14.0-darwin-x86_64
```

Configuration (`filebeat.yml`):

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /Users/admin/IdeaProjects/maijia-tebu/log/info/*.log
    - /Users/admin/IdeaProjects/taosj-aliexpress-data/log/*/*.log
  multiline.pattern: '^[0-9][0-9][0-9][0-9]'
  multiline.negate: true
  multiline.match: after

# Only one output may be enabled: comment out the default output.elasticsearch section
output.logstash:
  hosts: ["localhost:5044"]
```

```shell
# root: the user that runs Filebeat
sudo chown root:root /Users/admin/IdeaProjects/maijia-tebu/log/info
sudo chown root filebeat.yml
# Test the configuration
./filebeat test config -e
sudo ./filebeat -e
```

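Configuration notes:

- `paths`: several globs can be listed. They are not expanded recursively: Filebeat only picks up `.log` files at the last directory level of each glob (for example, `log/*/*.log` matches files exactly one directory below `log/`).
- `multiline`: a line that does not start with four digits (the year of the timestamp) is appended to the preceding line that does, so an error message and its stack trace are shipped as a single event.
- `output.logstash`: the Logstash address.
- `sudo chown admin:admin /Users/admin/IdeaProjects/maijia-tebu/log/info`: set ownership of the log directory; `admin` is the user that runs Filebeat.
- `sudo chown root filebeat.yml`: set ownership of the configuration file (Filebeat's permission check requires the file to be owned by root or by the user running Filebeat).
- `./filebeat test config -e`: test the configuration.
- `sudo ./filebeat -e`: start Filebeat.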
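
To make the `multiline` settings concrete, here is a small Java sketch of how `negate: true` plus `match: after` groups lines: every line that does not match `^[0-9][0-9][0-9][0-9]` is glued onto the event started by the most recent matching line. It is an illustration under simplifying assumptions, not Filebeat's implementation: it ignores Filebeat's `multiline.max_lines` and `multiline.timeout` limits, and the class name `MultilineSketch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

class MultilineSketch {
    // Same regex as multiline.pattern in filebeat.yml
    static final Pattern START = Pattern.compile("^[0-9][0-9][0-9][0-9]");

    // negate: true + match: after -> non-matching lines are appended to the previous event
    static List<String> group(List<String> lines) {
        List<String> events = new ArrayList<>();
        StringBuilder current = null;
        for (String line : lines) {
            boolean startsEvent = START.matcher(line).find();
            if (startsEvent || current == null) {
                // A line starting with the year opens a new event; flush the previous one
                if (current != null) {
                    events.add(current.toString());
                }
                current = new StringBuilder(line);
            } else {
                // e.g. "java.lang.IllegalStateException: ..." or "\tat com.demo.A.run(...)"
                current.append('\n').append(line);
            }
        }
        if (current != null) {
            events.add(current.toString());
        }
        return events;
    }
}
```

Feeding it an `ERROR` line, two stack-trace lines and a following `INFO` line yields two events: the error with its stack trace, and the `INFO` line on its own.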

2.2 Logstash:

```shell
tar -zxvf logstash-7.14.0-darwin-x86_64.tar.gz
cd logstash-7.14.0
```

Configuration:

```shell
vi ./config/logstash-test.conf
```

```
input {
  beats {
    port => 5044
  }
}

filter {
  dissect {
    mapping => {
      # Must match the log output pattern used in the projects
      "message" => "%{log_time}|%{ip}|%{serverName}|%{profile}|%{level}|%{logger}|%{thread}|%{traceId}|%{msg}"
    }
  }
  date {
    match => ["log_time", "yyyy-MM-dd HH:mm:ss.SSS"]
    target => "@timestamp"
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    # Index name format: one index per day
    index => "logs-%{+YYYY.MM.dd}"
  }
}
```

Start Logstash:

```shell
sudo ./bin/logstash -f config/logstash-test.conf
```
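
The `dissect` and `date` filters above can be approximated in plain Java, which is a quick way to check that a log line splits the way the mapping expects. This sketch assumes the nine `|`-separated fields of the mapping and, like dissect, leaves any further `|` inside the last field (`msg`). It also illustrates one detail: the date filter reads `log_time` in the Logstash host's time zone by default, while `%{+YYYY.MM.dd}` in the index name is formatted from `@timestamp` in UTC, so on a UTC+8 host a line logged shortly after midnight lands in the previous day's index. `DissectSketch` and its methods are hypothetical names.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.Map;

class DissectSketch {
    // Field names in the same order as the dissect mapping
    static final String[] FIELDS = {
        "log_time", "ip", "serverName", "profile", "level", "logger", "thread", "traceId", "msg"
    };
    static final DateTimeFormatter LOG_TIME = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");
    static final DateTimeFormatter INDEX_DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    // Split into at most 9 parts, so any '|' inside msg stays part of msg
    static Map<String, String> dissect(String message) {
        String[] parts = message.split("\\|", FIELDS.length);
        if (parts.length != FIELDS.length) {
            throw new IllegalArgumentException("line does not match the mapping: " + message);
        }
        Map<String, String> event = new LinkedHashMap<>();
        for (int i = 0; i < FIELDS.length; i++) {
            event.put(FIELDS[i], parts[i]);
        }
        return event;
    }

    // log_time is read in the host zone; the index day is taken from the UTC instant
    static String indexName(String logTime, ZoneId hostZone) {
        LocalDateTime local = LocalDateTime.parse(logTime, LOG_TIME);
        return "logs-" + local.atZone(hostZone).withZoneSameInstant(ZoneOffset.UTC).format(INDEX_DAY);
    }
}
```

For example, `indexName("2021-08-10 07:30:00.000", ZoneId.of("Asia/Shanghai"))` gives `logs-2021.08.09`, while a 09:30 line from the same day gives `logs-2021.08.10`.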

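2.3 Elasticsearch:

```shell
tar -zxvf elasticsearch-7.14.0-darwin-x86_64.tar.gz
cd elasticsearch-7.14.0
# Elasticsearch refuses to start as root, so run it as a normal user (no sudo)
./bin/elasticsearch
```

Check that the service is up:

```shell
curl http://127.0.0.1:9200
```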

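2.4 Kibana:

```shell
tar -zxvf kibana-7.14.0-darwin-x86_64.tar.gz
cd kibana-7.14.0-darwin-x86_64
```

Configuration: if Elasticsearch and Kibana run on different servers, add `elasticsearch.hosts: ["http://ip:port"]` to `config/kibana.yml` (Kibana 7.x uses `elasticsearch.hosts`; the older `elasticsearch.url` setting is no longer accepted).

```shell
sudo ./bin/kibana
```

Check: open http://127.0.0.1:5601 in a browser.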

3. Create a shared jar

Create a new jar project containing the following utility classes.

3.1 Snowflake
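
The class below packs each ID into one `long`: 41 bits of milliseconds since `twepoch` (about 69 years of range), 5 bits of data center ID, 5 bits of worker ID and a 12-bit sequence per millisecond. This standalone sketch composes and decodes an ID with the same shifts (12, 17, 22) and the same default epoch, which is handy for reading the generation time or worker out of a `traceId` found in Kibana. `SnowflakeLayout` is a hypothetical helper, not part of the jar.

```java
class SnowflakeLayout {
    static final long TWEPOCH = 1288834974657L; // default epoch of the Snowflake class
    static final long SEQUENCE_BITS = 12L;
    static final long WORKER_BITS = 5L;
    static final long DATACENTER_BITS = 5L;
    static final long WORKER_SHIFT = SEQUENCE_BITS;                          // 12
    static final long DATACENTER_SHIFT = SEQUENCE_BITS + WORKER_BITS;        // 17
    static final long TIMESTAMP_SHIFT = DATACENTER_SHIFT + DATACENTER_BITS; // 22

    // Same bit arithmetic as Snowflake.nextId()
    static long compose(long timestampMs, long dataCenterId, long workerId, long sequence) {
        return ((timestampMs - TWEPOCH) << TIMESTAMP_SHIFT)
                | (dataCenterId << DATACENTER_SHIFT)
                | (workerId << WORKER_SHIFT)
                | sequence;
    }

    static long workerId(long id) {
        return (id >> WORKER_SHIFT) & ~(-1L << WORKER_BITS);
    }

    static long dataCenterId(long id) {
        return (id >> DATACENTER_SHIFT) & ~(-1L << DATACENTER_BITS);
    }

    static long sequence(long id) {
        return id & ~(-1L << SEQUENCE_BITS);
    }

    // Milliseconds since the Unix epoch at which the ID was generated
    static long timestampMs(long id) {
        return (id >> TIMESTAMP_SHIFT) + TWEPOCH;
    }
}
```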

```java
import com.baomidou.mybatisplus.core.toolkit.SystemClock;

import java.io.Serializable;
import java.util.Date;

public class Snowflake implements Serializable {

    private static final long serialVersionUID = 1L;

    private final long twepoch;
    private final long workerIdBits = 5L;
    private final long dataCenterIdBits = 5L;
    // Maximum worker ID and data center ID: 0~31, 32 values each
    @SuppressWarnings({"PointlessBitwiseExpression", "FieldCanBeLocal"})
    private final long maxWorkerId = -1L ^ (-1L << workerIdBits);
    @SuppressWarnings({"PointlessBitwiseExpression", "FieldCanBeLocal"})
    private final long maxDataCenterId = -1L ^ (-1L << dataCenterIdBits);
    // Sequence number: 12 bits
    private final long sequenceBits = 12L;
    // The worker ID is shifted left by 12 bits
    private final long workerIdShift = sequenceBits;
    // The data center ID is shifted left by 17 bits
    private final long dataCenterIdShift = sequenceBits + workerIdBits;
    // The millisecond timestamp is shifted left by 22 bits
    private final long timestampLeftShift = sequenceBits + workerIdBits + dataCenterIdBits;
    @SuppressWarnings({"PointlessBitwiseExpression", "FieldCanBeLocal"})
    private final long sequenceMask = -1L ^ (-1L << sequenceBits); // 4095

    private final long workerId;
    private final long dataCenterId;
    private final boolean useSystemClock;
    private long sequence = 0L;
    private long lastTimestamp = -1L;

    /**
     * @param workerId     worker (terminal) ID
     * @param dataCenterId data center ID
     */
    public Snowflake(long workerId, long dataCenterId) {
        this(workerId, dataCenterId, false);
    }

    /**
     * @param workerId         worker (terminal) ID
     * @param dataCenterId     data center ID
     * @param isUseSystemClock whether to read the current timestamp via {@link SystemClock}
     */
    public Snowflake(long workerId, long dataCenterId, boolean isUseSystemClock) {
        this(null, workerId, dataCenterId, isUseSystemClock);
    }

    /**
     * @param epochDate        start of the time range (null means the default epoch); changing it later can
     *                         produce duplicate IDs, so if you must change it, change workerId and
     *                         dataCenterId as well, and do so with care
     * @param workerId         worker node ID
     * @param dataCenterId     data center ID
     * @param isUseSystemClock whether to read the current timestamp via {@link SystemClock}
     * @since 5.1.3
     */
    public Snowflake(Date epochDate, long workerId, long dataCenterId, boolean isUseSystemClock) {
        if (null != epochDate) {
            this.twepoch = epochDate.getTime();
        } else {
            // Thu, 04 Nov 2010 01:42:54 GMT
            this.twepoch = 1288834974657L;
        }
        if (workerId > maxWorkerId || workerId < 0) {
            throw new IllegalArgumentException(String.format("worker Id can't be greater than %d or less than 0", maxWorkerId));
        }
        if (dataCenterId > maxDataCenterId || dataCenterId < 0) {
            throw new IllegalArgumentException(String.format("datacenter Id can't be greater than %d or less than 0", maxDataCenterId));
        }
        this.workerId = workerId;
        this.dataCenterId = dataCenterId;
        this.useSystemClock = isUseSystemClock;
    }

    /**
     * Extract the worker ID from a Snowflake ID.
     *
     * @param id ID generated by the Snowflake algorithm
     * @return ID of the worker that generated it
     */
    public long getWorkerId(long id) {
        return id >> workerIdShift & ~(-1L << workerIdBits);
    }

    /**
     * Extract the data center ID from a Snowflake ID.
     *
     * @param id ID generated by the Snowflake algorithm
     * @return ID of the data center
     */
    public long getDataCenterId(long id) {
        return id >> dataCenterIdShift & ~(-1L << dataCenterIdBits);
    }

    /**
     * Extract the generation time from a Snowflake ID.
     *
     * @param id ID generated by the Snowflake algorithm
     * @return generation time in milliseconds since the Unix epoch
     */
    public long getGenerateDateTime(long id) {
        return (id >> timestampLeftShift & ~(-1L << 41L)) + twepoch;
    }

    /**
     * Next ID.
     *
     * @return ID
     */
    public synchronized long nextId() {
        long timestamp = genTime();
        if (timestamp < lastTimestamp) {
            if (lastTimestamp - timestamp < 2000) {
                // Tolerate a clock rollback of up to 2 seconds to absorb NTP adjustments
                timestamp = lastTimestamp;
            } else {
                // The server clock moved backwards by more than that: fail
                throw new IllegalStateException(String.format("Clock moved backwards. Refusing to generate id for %dms", lastTimestamp - timestamp));
            }
        }
        if (timestamp == lastTimestamp) {
            sequence = (sequence + 1) & sequenceMask;
            if (sequence == 0) {
                // Sequence exhausted within this millisecond: wait for the next one
                timestamp = tilNextMillis(lastTimestamp);
            }
        } else {
            sequence = 0L;
        }
        lastTimestamp = timestamp;
        return ((timestamp - twepoch) << timestampLeftShift) | (dataCenterId << dataCenterIdShift) | (workerId << workerIdShift) | sequence;
    }

    /**
     * Next ID as a string.
     *
     * @return ID as a string
     */
    public String nextIdStr() {
        return Long.toString(nextId());
    }

    // ------------------------------------------------------------------ Private methods

    /**
     * Spin until the clock reaches the next millisecond.
     *
     * @param lastTimestamp last recorded timestamp
     * @return the next timestamp
     */
    private long tilNextMillis(long lastTimestamp) {
        long timestamp = genTime();
        // Loop until the operating system timestamp changes
        while (timestamp == lastTimestamp) {
            timestamp = genTime();
        }
        if (timestamp < lastTimestamp) {
            // The new timestamp is smaller than the last one: the system clock moved backwards
            throw new IllegalStateException(
                    String.format("Clock moved backwards. Refusing to generate id for %dms", lastTimestamp - timestamp));
        }
        return timestamp;
    }

    /**
     * Current timestamp in milliseconds.
     */
    private long genTime() {
        return this.useSystemClock ? SystemClock.now() : System.currentTimeMillis();
    }
}
```

3.2 TraceIdAdvice
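
This advice runs in two roles. When the current thread already has a `traceId` in its MDC (for example, a web request tagged by `TraceIdInterceptor`), it copies the ID into the Dubbo attachments so the next RPC call carries it; when the MDC is empty but the incoming RPC carries a `traceId`, it adopts that ID for the provider's log lines. The sketch models this with two plain `ThreadLocal` maps as hypothetical stand-ins for slf4j's `MDC` and Dubbo's `RpcContext` attachments, so it runs without either library. It also removes an adopted ID after the call, on the assumption that provider threads are pooled and reused.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical stand-ins: MDC ~ slf4j MDC, ATTACHMENTS ~ Dubbo RpcContext attachments
class TraceIdPropagationSketch {
    static final ThreadLocal<Map<String, String>> MDC = ThreadLocal.withInitial(HashMap::new);
    static final ThreadLocal<Map<String, String>> ATTACHMENTS = ThreadLocal.withInitial(HashMap::new);

    static <T> T around(Supplier<T> invocation) {
        String tid = MDC.get().get("traceId");
        boolean adopted = false;
        if (tid != null) {
            // Caller side: forward this thread's traceId with the next RPC call
            ATTACHMENTS.get().put("traceId", tid);
        } else {
            String rpcTid = ATTACHMENTS.get().get("traceId");
            if (rpcTid != null) {
                // Provider side: log under the caller's traceId
                MDC.get().put("traceId", rpcTid);
                adopted = true;
            }
        }
        try {
            return invocation.get();
        } finally {
            if (adopted) {
                MDC.get().remove("traceId"); // do not leak into the next call on this thread
            }
        }
    }
}
```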

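```java
import com.alibaba.dubbo.rpc.RpcContext;
import org.aopalliance.intercept.MethodInterceptor;
import org.aopalliance.intercept.MethodInvocation;
import org.slf4j.MDC;

/**
 * Description: traceId aspect. It forwards the thread's traceId to Dubbo calls, and on the
 * provider side copies the caller's traceId into the MDC.
 */
public class TraceIdAdvice implements MethodInterceptor {

    @Override
    public Object invoke(MethodInvocation invocation) throws Throwable {
        String tid = MDC.get("traceId");
        boolean putByThisCall = false;
        if (tid != null) {
            // This thread already has a traceId: pass it on with the next RPC call
            RpcContext.getContext().setAttachment("traceId", tid);
        } else {
            String rpcTid = RpcContext.getContext().getAttachment("traceId");
            if (rpcTid != null) {
                // Invoked over Dubbo: use the caller's traceId for this thread's log lines
                MDC.put("traceId", rpcTid);
                putByThisCall = true;
            }
        }
        try {
            return invocation.proceed();
        } finally {
            // Dubbo threads are pooled: remove a traceId added here so the next request does not inherit it
            if (putByThisCall) {
                MDC.remove("traceId");
            }
        }
    }
}
```

3.3 TraceIdAdviceConfig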

```java
import org.springframework.aop.aspectj.AspectJExpressionPointcutAdvisor;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Description: dynamic configuration of the traceId aspect (the pointcut comes from the application config)
 */
@Configuration
public class TraceIdAdviceConfig {

    // e.g. traceId.pointcut.property=execution(public * com.taosj.tebu.service..*.*(..))
    @Value("${traceId.pointcut.property}")
    private String pointcut;

    @Bean
    public AspectJExpressionPointcutAdvisor configurableAdvisor() {
        AspectJExpressionPointcutAdvisor advisor = new AspectJExpressionPointcutAdvisor();
        advisor.setExpression(pointcut);
        advisor.setAdvice(new TraceIdAdvice());
        return advisor;
    }
}
```

3.4 IPConverterConfig
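
`getLocalHostLANAddress()` in the class below looks only at `eth0` and, among its non-loopback addresses, prefers a site-local (private-range) address; if `eth0` has no usable address, it falls back to `InetAddress.getLocalHost()`. The selection rule is easier to check as a pure function over a candidate list, sketched here with the hypothetical names `LanAddressSketch.pick` and `ip`. The sample addresses are illustrative; passing an IP literal to `InetAddress.getByName` parses it without a DNS lookup.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.List;

class LanAddressSketch {
    // Same preference order as getLocalHostLANAddress(), minus the interface enumeration
    static InetAddress pick(List<InetAddress> candidates, InetAddress fallback) {
        InetAddress candidate = null;
        for (InetAddress addr : candidates) {
            if (addr.isLoopbackAddress()) {
                continue; // skip 127.0.0.1 and ::1
            }
            if (addr.isSiteLocalAddress()) {
                return addr; // 10/8, 172.16/12 or 192.168/16: take it immediately
            }
            if (candidate == null) {
                candidate = addr; // remember the first other non-loopback address
            }
        }
        return candidate != null ? candidate : fallback;
    }

    // Helper for examples: parse an IP literal without checked exceptions
    static InetAddress ip(String literal) {
        try {
            return InetAddress.getByName(literal);
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException(literal, e);
        }
    }
}
```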

```java
import ch.qos.logback.classic.pattern.ClassicConverter;
import ch.qos.logback.classic.spi.ILoggingEvent;

import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.UnknownHostException;
import java.util.Enumeration;

/**
 * Description: logback converter that prints the host IP (%ip)
 */
public class IPConverterConfig extends ClassicConverter {

    // Resolved once and cached
    private volatile String url;

    @Override
    public String convert(ILoggingEvent iLoggingEvent) {
        if (url == null) {
            InetAddress address = getLocalHostLANAddress();
            if (address != null) {
                url = address.getHostAddress();
            }
        }
        return url;
    }

    // The correct way to get the IP: prefer a site-local address
    private static InetAddress getLocalHostLANAddress() {
        try {
            InetAddress candidateAddress = null;
            // Iterate over all network interfaces
            for (Enumeration<NetworkInterface> ifaces = NetworkInterface.getNetworkInterfaces(); ifaces.hasMoreElements(); ) {
                NetworkInterface iface = ifaces.nextElement();
                // On the servers, eth0 carries the correct IP
                if (!"eth0".equals(iface.getName())) {
                    continue;
                }
                // Iterate over the addresses of this interface
                for (Enumeration<InetAddress> inetAddrs = iface.getInetAddresses(); inetAddrs.hasMoreElements(); ) {
                    InetAddress inetAddr = inetAddrs.nextElement();
                    if (inetAddr.isLoopbackAddress()) {
                        continue; // skip loopback addresses
                    }
                    if (inetAddr.isSiteLocalAddress()) {
                        // A site-local address is the one we want
                        return inetAddr;
                    } else if (candidateAddress == null) {
                        // No site-local address found yet: remember this one as a candidate
                        candidateAddress = inetAddr;
                    }
                }
            }
            if (candidateAddress != null) {
                return candidateAddress;
            }
            // No non-loopback address on eth0 (e.g. on macOS): fall back to the JDK's choice
            InetAddress jdkSuppliedAddress = InetAddress.getLocalHost();
            if (jdkSuppliedAddress == null) {
                throw new UnknownHostException("The JDK InetAddress.getLocalHost() method unexpectedly returned null.");
            }
            return jdkSuppliedAddress;
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }
}
```

3.5 TraceIdInterceptor
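
The interceptor and the advice both store the ID in the MDC, which logback keeps per thread in a `ThreadLocal`, while servlet containers and Dubbo serve requests from thread pools. An ID that is put but never removed therefore shows up in the log lines of the next request handled by the same thread. This minimal demo uses a plain `ThreadLocal` as a stand-in for the MDC and a single-thread executor as a stand-in for the container's pool; `ThreadReuseSketch` is a hypothetical name.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ThreadReuseSketch {
    // Stand-in for the MDC: one value per thread
    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    // Runs two "requests" on the same pooled thread and returns what the second one sees
    static String secondRequestSees(boolean cleanUp) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Runnable first = () -> {
                TRACE_ID.set("1001"); // like preHandle: tag the request
                try {
                    // the handler would run here, and might throw
                } finally {
                    if (cleanUp) {
                        TRACE_ID.remove(); // like afterCompletion: always clear
                    }
                }
            };
            pool.submit(first).get();
            // The second request never sets a traceId of its own
            Callable<String> second = TRACE_ID::get;
            return pool.submit(second).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```

Without cleanup the second request logs the stale `1001`; with cleanup it sees `null`.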

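```java
import com.alibaba.dubbo.rpc.RpcContext;
import org.slf4j.MDC;
import org.springframework.stereotype.Component;
import org.springframework.web.servlet.HandlerInterceptor;

import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

/**
 * Description: assigns a traceId to each HTTP request for tracing the call chain
 */
@Component
public class TraceIdInterceptor implements HandlerInterceptor {

    /*@Autowired
    RedisHelper redisHelper;*/

    // workerId = 1 and dataCenterId = 0 are hard-coded: instances sharing these values can generate
    // the same ID in the same millisecond, so give each instance its own values in production
    private final Snowflake snowflake = new Snowflake(1, 0);

    @Override
    public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) {
        String tid = snowflake.nextIdStr();
        MDC.put("traceId", tid);
        RpcContext.getContext().setAttachment("traceId", tid);
        return true;
    }

    @Override
    public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, Exception ex) {
        // Clear here rather than in postHandle: postHandle is skipped when the handler throws,
        // while afterCompletion always runs once preHandle has returned true
        MDC.remove("traceId");
    }

    // Generate an import batch number
    /*public String getTraceId() {
        String dateString = DateUtils.formatYYYYMMDD(new Date());
        Long incr = redisHelper.incr("taosj-common-traceId" + dateString, 24);
        if (incr == 0) {
            incr = redisHelper.incr("taosj-common-traceId" + dateString, 24);
        }
        DecimalFormat df = new DecimalFormat("000000");
        return dateString + df.format(incr);
    }*/
}
```

3.6 WebAppConfigurer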

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.Configuration;

import org.springframework.web.servlet.config.annotation.InterceptorRegistry;

import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

/**

* 类 描 述:TODO

*/

@Configuration

public class WebAppConfigurer implements WebMvcConfigurer {

@Autowired

private TraceIdInterceptor traceIdInterceptor;

/**

* 注册拦截器

*/

@Override

public void addInterceptors(InterceptorRegistry registry) {

registry.addInterceptor(traceIdInterceptor).addPathPatterns("/**");

//.excludePathPatterns("/admin/login")

//.excludePathPatterns("/admin/getLogin");

// - /**: 匹配所有路径

// - /admin/**:匹配 /admin/ 下的所有路径

// - /admin/*:只匹配 /admin/login,不匹配 /secure/login/tologin ("/*"只匹配一级子目录,"/**"匹配所有子目录)

}

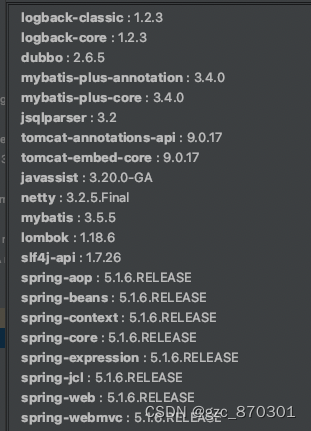

}3.7 jar pom

```xml
<!-- No versions are given: they must come from a parent POM or a dependencyManagement section -->
<dependencies>
    <dependency>
        <groupId>org.springframework</groupId>
        <artifactId>spring-context</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework</groupId>
        <artifactId>spring-webmvc</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>dubbo</artifactId>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
    </dependency>
    <dependency>
        <groupId>com.baomidou</groupId>
        <artifactId>mybatis-plus-core</artifactId>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
    </dependency>
    <dependency>
        <groupId>org.apache.tomcat.embed</groupId>
        <artifactId>tomcat-embed-core</artifactId>
    </dependency>
    <!-- Needed at runtime to parse the AspectJ pointcut expression in TraceIdAdviceConfig -->
    <dependency>
        <groupId>org.aspectj</groupId>
        <artifactId>aspectjweaver</artifactId>
    </dependency>
</dependencies>
```

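4. Using the jar

1. pom file: add the jar as a dependency.
2. Startup class: add the package of the classes from step 3 to component scanning.
3. Configuration file: add the pointcut for the aspect, using your own project's package, for example: `traceId.pointcut.property=execution(public * com.taosj.tebu.service..*.*(..))`
4. logback-spring file: register the ip converter and change the log output patterns: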

```xml
<property resource="application.properties"/>
<springProperty scope="context" name="serverName" source="spring.application.name"/>
<conversionRule conversionWord="ip" converterClass="com.taosj.elk.config.IPConverterConfig"/>
<property name="pattern" value="%date{yyyy-MM-dd HH:mm:ss} | %ip | ${serverName} | ${profile} | %highlight(%-5level) | %boldYellow(%thread) | %boldGreen(%logger) | %X{traceId} | %msg%n"/>
<property name="pattern_log" value="%d{yyyy-MM-dd HH:mm:ss.SSS}|%ip|${serverName}|${profile}|%level|%logger|%thread|%X{traceId}|%m%n"/>
```

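Only the file that is currently being written should be collected, so delete the existing old logs and change the name pattern of the rolled-over files so that they no longer end in `.log` (otherwise Filebeat's `*.log` glob matches every rolled-over file as well). For example, change

`<fileNamePattern>${dir}/info/info.%d{yyyy-MM-dd}.%i.log</fileNamePattern>` to

`<fileNamePattern>${dir}/info/info.log.%d{yyyy-MM-dd}.%i</fileNamePattern>`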
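
The effect of the renaming can be checked against the `*.log` glob used in the Filebeat input. This sketch uses Java's `PathMatcher` glob syntax, assumed here to behave like Filebeat's glob matching for these simple file names; `RolledFileGlobSketch` and the sample file names are illustrative.

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

class RolledFileGlobSketch {
    // Last path segment of the Filebeat input path .../log/info/*.log
    static final PathMatcher MATCHER = FileSystems.getDefault().getPathMatcher("glob:*.log");

    // true if a file with this name would be harvested
    static boolean collected(String fileName) {
        return MATCHER.matches(Paths.get(fileName));
    }
}
```

With the old pattern, both `info.log` and a rolled file such as `info.2021-08-10.0.log` match; with the new pattern, the rolled file `info.log.2021-08-10.0` no longer does.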

5. Example logback-spring.xml for a Spring Boot project

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <springProfile name="dev">
        <property name="dir" value="log"/>
    </springProfile>
    <springProfile name="test">
        <property name="dir" value="/data/project/taosj-tebu-web/code/logs"/>
    </springProfile>
    <springProfile name="pre">
        <property name="dir" value="/data/project/taosj-tebu-web/code/logs"/>
    </springProfile>
    <springProfile name="prod">
        <property name="dir" value="/data/project/taosj-tebu-web/code/logs"/>
    </springProfile>

    <property resource="application.properties"/>
    <springProperty scope="context" name="serverName" source="spring.application.name"/>
    <conversionRule conversionWord="ip" converterClass="com.taosj.elk.config.IPConverterConfig"/>
    <property name="pattern" value="%date{yyyy-MM-dd HH:mm:ss} | %ip | ${serverName} | ${profile} | %highlight(%-5level) | %boldYellow(%thread) | %boldGreen(%logger) | %X{traceId} | %msg%n"/>
    <property name="pattern_log" value="%d{yyyy-MM-dd HH:mm:ss.SSS}|%ip|${serverName}|${profile}|%level|%logger|%thread|%X{traceId}|%m%n"/>

    <appender name="INFO_LOG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${dir}/info/info.log</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${dir}/info/info.log.%d{yyyy-MM-dd}.%i</fileNamePattern>
            <maxFileSize>100MB</maxFileSize>
            <maxHistory>10</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <!-- Project-specific filter; 20000 is logback's INFO level value -->
        <filter class="com.taosj.bizlogback.LoggerFilter">
            <param name="levelMin" value="20000"/>
            <param name="levelMax" value="20000"/>
        </filter>
        <encoder>
            <pattern>${pattern_log}</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="DEBUG_LOG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${dir}/debug/debug.log</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${dir}/debug/debug.log.%d{yyyy-MM-dd}.%i</fileNamePattern>
            <maxFileSize>100MB</maxFileSize>
            <maxHistory>10</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <!-- 10000 is logback's DEBUG level value -->
        <filter class="com.taosj.bizlogback.LoggerFilter">
            <param name="levelMin" value="10000"/>
            <param name="levelMax" value="10000"/>
        </filter>
        <encoder>
            <pattern>${pattern_log}</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="ERROR_LOG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${dir}/error/error.log</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${dir}/error/error.log.%d{yyyy-MM-dd}.%i</fileNamePattern>
            <maxFileSize>100MB</maxFileSize>
            <maxHistory>10</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <!-- 40000 is logback's ERROR level value -->
        <filter class="com.taosj.bizlogback.LoggerFilter">
            <param name="levelMin" value="40000"/>
            <param name="levelMax" value="40000"/>
        </filter>
        <encoder>
            <pattern>${pattern_log}</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="BIZ_LOG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${dir}/biz/biz.log</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${dir}/biz/biz.log.%d{yyyy-MM-dd}.%i</fileNamePattern>
            <maxFileSize>100MB</maxFileSize>
            <maxHistory>10</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <filter class="com.taosj.bizlogback.LoggerFilter">
            <param name="levelMin" value="50000"/>
            <param name="levelMax" value="50000"/>
        </filter>
        <encoder>
            <pattern>${pattern_log}</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>${pattern}</pattern>
        </encoder>
    </appender>

    <springProfile name="dev">
        <root level="info">
            <appender-ref ref="INFO_LOG"/>
            <appender-ref ref="ERROR_LOG"/>
            <appender-ref ref="DEBUG_LOG"/>
            <appender-ref ref="BIZ_LOG"/>
            <appender-ref ref="console"/>
        </root>
    </springProfile>

    <springProfile name="prod">
        <root level="info">
            <appender-ref ref="INFO_LOG"/>
            <appender-ref ref="ERROR_LOG"/>
            <appender-ref ref="DEBUG_LOG"/>
            <appender-ref ref="BIZ_LOG"/>
            <appender-ref ref="console"/>
        </root>
    </springProfile>

    <springProfile name="test">
        <root level="info">
            <appender-ref ref="INFO_LOG"/>
            <appender-ref ref="ERROR_LOG"/>
            <appender-ref ref="DEBUG_LOG"/>
            <appender-ref ref="BIZ_LOG"/>
            <appender-ref ref="console"/>
        </root>
    </springProfile>

    <springProfile name="pre">
        <root level="info">
            <appender-ref ref="INFO_LOG"/>
            <appender-ref ref="ERROR_LOG"/>
            <appender-ref ref="DEBUG_LOG"/>
            <appender-ref ref="BIZ_LOG"/>
            <appender-ref ref="console"/>
        </root>
    </springProfile>
</configuration>
```

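7. Deployment tips:

7.1 Starting Filebeat

`nohup ./filebeat &`: Filebeat still stops when the shell is closed.

`nohup ./filebeat & disown`: start it this way instead.

7.2 Logstash address that Filebeat pushes to:

`116.62.126.104 35044` (see the filebeat.yml configuration file)

7.3 ip

The ip field is the address of the eth0 network interface; if there is no usable address on eth0 (for example on macOS), the converter falls back to `InetAddress.getLocalHost()`.

7.4 Minor issue

In Spring MVC projects that use log4j, fields such as serverName, ip and profile cannot be injected right from startup, so the startup log lines lack them; lines logged while handling API calls do include them. Spring Boot projects that use logback do not have this problem.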