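Preparation:

Install Scrapy first. See the CSDN blog post "Scrapy入门_win10安装scrapy" (Getting started with Scrapy: installing Scrapy on Windows 10).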

Create a Scrapy project named mySpider03:

```
scrapy startproject mySpider03
```

Change into the spiders directory:

```
cd mySpider03/mySpider03/spiders
```

Create a spider named heima whose crawl domain is bbs.itheima.com:

```
scrapy genspider heima bbs.itheima.com
```

Write the spider

items.py:

```python
import scrapy


class heimaItem(scrapy.Item):
    title = scrapy.Field()  # thread title
    url = scrapy.Field()    # thread link
```

heima.py:

```python
import scrapy
from scrapy.selector import Selector

from mySpider03.items import heimaItem


class HeimaSpider(scrapy.Spider):
    name = 'heima'
    allowed_domains = ['bbs.itheima.com']
    start_urls = ['http://bbs.itheima.com/forum-425-1.html']

    def parse(self, response):
        print('response.url: ', response.url)
        selector = Selector(response)
        # Thread entries are <th> cells with class "new forumtit" or "common forumtit"
        node_list = selector.xpath("//th[@class='new forumtit'] | //th[@class='common forumtit']")
        for node in node_list:
            # Thread title
            title = node.xpath('./a[1]/text()')[0].extract()
            # Thread link
            url = node.xpath('./a[1]/@href')[0].extract()
            # Create a heimaItem instance
            item = heimaItem()
            item['title'] = title
            item['url'] = url
            yield item
```

pipelines.py:

```python
from itemadapter import ItemAdapter  # added by the project template; not used below
from pymongo import MongoClient


class heimaPipeline:
    def open_spider(self, spider):
        # MongoDB connection settings
        self.MONGO_URI = 'mongodb://localhost:27017/'
        self.DB_NAME = 'heima'  # database name
        self.COLLECTION_NAME = 'heimaNews'  # collection name
        self.client = MongoClient(self.MONGO_URI)
        self.db = self.client[self.DB_NAME]
        self.collection = self.db[self.COLLECTION_NAME]
        # Empty the collection if it already holds data from a previous run
        self.collection.delete_many({})
        print('Crawl started')

    def process_item(self, item, spider):
        title = item['title']
        url = item['url']
        # Convert the item into a plain dict
        item_dict = {
            'title': title,
            'url': url,
        }
        # Insert the document
        self.collection.insert_one(item_dict)
        return item

    def close_spider(self, spider):
        print('Crawl finished; all documents in the collection:')
        cursor = self.collection.find()
        for document in cursor:
            print(document)
        self.client.close()
```
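heimaPipeline hardcodes the MongoDB URI, database name and collection name. A common Scrapy pattern is to build the pipeline in a from_crawler classmethod and read such values from settings.py. The sketch below assumes three custom settings, MONGO_URI, MONGO_DB and MONGO_COLLECTION, and a class name HeimaMongoPipeline; all four names are hypothetical, and this is an alternative rather than the approach used in this post.

```python
class HeimaMongoPipeline:
    """Hypothetical variant of heimaPipeline that reads its connection details from settings."""

    def __init__(self, mongo_uri, db_name, collection_name):
        self.mongo_uri = mongo_uri
        self.db_name = db_name
        self.collection_name = collection_name
        self.client = None
        self.collection = None

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy uses this to build the pipeline. MONGO_URI, MONGO_DB and MONGO_COLLECTION
        # are hypothetical custom settings; the defaults are the values heimaPipeline hardcodes.
        settings = crawler.settings
        return cls(
            settings.get('MONGO_URI', 'mongodb://localhost:27017/'),
            settings.get('MONGO_DB', 'heima'),
            settings.get('MONGO_COLLECTION', 'heimaNews'),
        )

    def open_spider(self, spider):
        # Imported here so the class can be imported and unit-tested without pymongo installed
        from pymongo import MongoClient

        self.client = MongoClient(self.mongo_uri)
        self.collection = self.client[self.db_name][self.collection_name]
        self.collection.delete_many({})  # start from an empty collection, like heimaPipeline

    def process_item(self, item, spider):
        # dict(item) makes a copy, so the _id that insert_one adds is not written back onto the item
        self.collection.insert_one(dict(item))
        return item

    def close_spider(self, spider):
        self.client.close()
```

To try it, point ITEM_PIPELINES at mySpider03.pipelines.HeimaMongoPipeline and optionally add, for example, MONGO_DB = 'heima' to settings.py.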

In settings.py, uncomment ITEM_PIPELINES and change it to:

```python
ITEM_PIPELINES = {
    'mySpider03.pipelines.heimaPipeline': 300,
}
```
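The value 300 sets the pipeline's order: Scrapy passes every item through the enabled pipelines from the lowest value to the highest, and values are customarily kept in the 0-1000 range. The plain-Python sketch below only mimics that ordering; DedupPipeline is a hypothetical second entry.

```python
ITEM_PIPELINES = {
    'mySpider03.pipelines.heimaPipeline': 300,
    'mySpider03.pipelines.DedupPipeline': 200,  # hypothetical extra pipeline
}

# Lower values run first, mirroring how Scrapy orders the enabled item pipelines
run_order = [path for path, order in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])]
print(run_order)
```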

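Create run.py:

```python
from scrapy import cmdline

# Same as typing the command in a terminal; LOG_ENABLED=False turns off Scrapy's log output
cmdline.execute("scrapy crawl heima -s LOG_ENABLED=False".split())
```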
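cmdline.execute takes an argv-style list, which is why run.py splits the command string. `str.split()` breaks on every run of whitespace, so a quoted setting value containing spaces would be torn apart; `shlex.split` from the standard library respects quotes. The USER_AGENT value below is only an illustrative example.

```python
import shlex

cmd = "scrapy crawl heima -s LOG_ENABLED=False"
# For this command both approaches produce the same argv list
assert cmd.split() == shlex.split(cmd)

# A quoted value containing a space (illustrative only) shows where they differ
quoted = 'scrapy crawl heima -s "USER_AGENT=my spider"'
print(quoted.split())       # the quoted value is split into two broken pieces
print(shlex.split(quoted))  # the quoted value stays one argument, quotes removed
```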

From mySpider03/mySpider03/spiders, run run.py. This crawls the first page, 'http://bbs.itheima.com/forum-425-1.html', and saves its contents to the database.

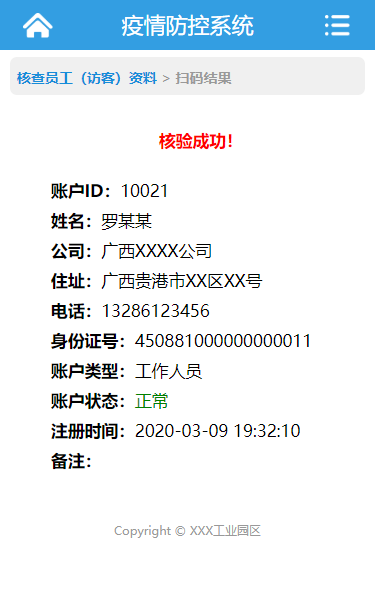

结果如下图:

爬取到了50条数据。

Crawling all pages

Method 1: crawl all pages by following the URL of the next page.

Add the following to the end of the parse method in heima.py:

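```python
        # Get the link to the next page ("下一页" is the forum's "next page" label)
        if '下一页' in response.text:
            next_url = selector.xpath("//a[@class='nxt']/@href").extract()[0]
            # urljoin makes a relative href absolute and leaves an absolute one unchanged
            yield scrapy.Request(response.urljoin(next_url), callback=self.parse)
```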

即heima.py:

import scrapy

from scrapy.selector import Selector

from mySpider03.items import heimaItem

class HeimaSpider(scrapy.Spider):

name = 'heima'

allowed_domains = ['bbs.itheima.com']

start_urls = ['http://bbs.itheima.com/forum-425-1.html']

def parse(self, response):

print('response.url: ', response.url)

selector = Selector(response)

node_list = selector.xpath("//th[@class='new forumtit'] | //th[@class='common forumtit']")

for node in node_list:

# 文章标题

title = node.xpath('./a[1]/text()')[0].extract()

# 文章链接

url = node.xpath('./a[1]/@href')[0].extract()

# 创建heimaItem类

item = heimaItem()

item['title'] = title

item['url'] = url

yield item

# 获取下一页的链接

if '下一页' in response.text:

next_url = selector.xpath("//a[@class='nxt']/@href").extract()[0]

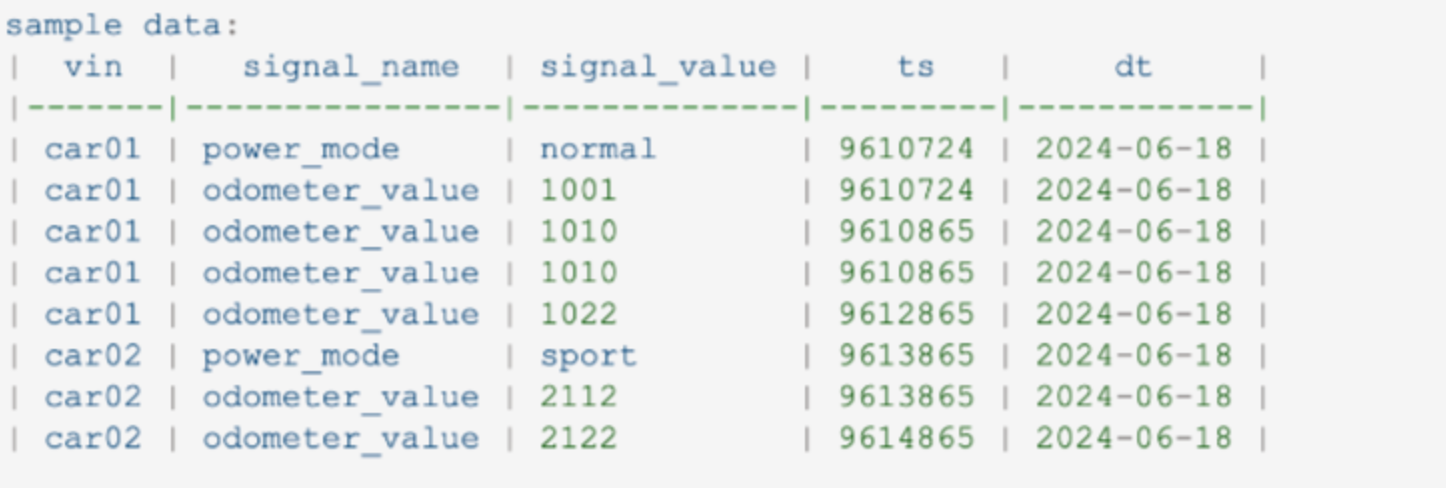

yield scrapy.Request(next_url, callback=self.parse)爬取结果:

70 pages were crawled, 3,466 items in total.

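To view the data in the database, enter the following commands in cmd (the first one starts the MongoDB shell; the rest are typed inside it):

```
> mongosh               # start the MongoDB shell
> show dbs              # list all databases
> use heima             # switch to the heima database
> db.stats()            # show statistics for the current database
> db.heimaNews.find()   # list documents in heimaNews (shown 20 at a time; type it for more)
```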

Method 2: extract URLs with CrawlSpider

Create a spider from the crawl template, named heimaCrawl, with bbs.itheima.com as its crawl domain:

```
scrapy genspider -t crawl heimaCrawl bbs.itheima.com
```

2.1 Extracting links with XPath in rules

Edit heimaCrawl.py:

```python
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from mySpider03.items import heimaItem


class HeimacrawlSpider(CrawlSpider):
    name = 'heimaCrawl'
    allowed_domains = ['bbs.itheima.com']
    start_urls = ['http://bbs.itheima.com/forum-425-1.html']

    rules = (
        # Follow only the "next page" link (class "nxt") and parse every page it leads to
        Rule(LinkExtractor(restrict_xpaths=r'//a[@class="nxt"]'), callback='parse_item', follow=True),
    )

    # Handle the start page. Without this override, only pages reached through links
    # matching the rules are parsed, and the start page's own threads are skipped.
    def parse_start_url(self, response):
        # Process the start page with parse_item
        return self.parse_item(response)

    def parse_item(self, response):
        print('CrawlSpider response.url: ', response.url)
        node_list = response.xpath("//th[@class='new forumtit'] | //th[@class='common forumtit']")
        for node in node_list:
            # Thread title
            title = node.xpath('./a[1]/text()')[0].extract()
            # Thread link
            url = node.xpath('./a[1]/@href')[0].extract()
            # Create a heimaItem instance
            item = heimaItem()
            item['title'] = title
            item['url'] = url
            yield item
```

Change run.py to start heimaCrawl:

```python
from scrapy import cmdline

# heimaCrawl
cmdline.execute("scrapy crawl heimaCrawl -s LOG_ENABLED=False".split())
```

Crawl result:

All 70 pages were crawled, 3,466 items in total.

2.2 Extracting links with a regular expression in rules

In heimaCrawl.py, change rules to:

```python
    rules = (
        Rule(LinkExtractor(allow=r'forum-425-\d+\.html'), callback='parse_item', follow=True),
    )
```
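With allow, a link is kept when the regular expression is found anywhere in its absolute URL (search semantics, not a full match). The sketch applies the same pattern with Python's re module to a few sample URLs; the thread URL and the forum-42 board URL are hypothetical. Note that forum-425-1.html, the start page, matches as well.

```python
import re

pattern = re.compile(r'forum-425-\d+\.html')

urls = [
    'http://bbs.itheima.com/forum-425-1.html',   # the start page
    'http://bbs.itheima.com/forum-425-2.html',
    'http://bbs.itheima.com/forum-425-70.html',
    'http://bbs.itheima.com/forum-42-1.html',    # hypothetical other board: no match
    'http://bbs.itheima.com/thread-1-1-1.html',  # hypothetical thread URL: no match
]

# A link is kept when the pattern occurs somewhere in the URL (re.search semantics)
matched = [url for url in urls if pattern.search(url)]
print(matched)
```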

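Result:

3,516 items in total. That is 50 more than the 3,466 collected above, exactly one page's worth. A likely cause is that the first page is processed twice: Scrapy sends start_urls requests with dont_filter=True, so the duplicate filter does not record them, and this regex also matches the forum-425-1.html link in the pagination bar of later pages.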