Environment

1. One Alibaba Cloud server as k8s-master: 8.130.XXX.231 (Alibaba Cloud private IP)

2. Two VMware virtual machines as the worker nodes:

k8s-node1:192.168.40.131

k8s-node2: 192.168.40.132

3. Docker installed

Deployment

Initial setup on k8s-master, k8s-node1, and k8s-node2

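# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

# Disable swap
sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

# After disabling swap, be sure to reboot the machine: the fstab change only takes effect at the next boot!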
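The sed command above comments out every fstab line that mentions swap. As a minimal sketch for double-checking the result, the helper below lists swap entries that are still active in the file. The name `active_swap_entries` is hypothetical (it is not part of any tool), and the pattern assumes whitespace-separated fstab fields.

```shell
# Hypothetical helper: print fstab lines that still mount swap,
# i.e. swap entries that are not commented out.
# $1: path to an fstab-style file (defaults to /etc/fstab)
active_swap_entries() {
    grep -E '^[^#]*[[:space:]]swap[[:space:]]' "${1:-/etc/fstab}" || true
}
```

Running `active_swap_entries` with no argument should print nothing once every swap line is commented out. To turn swap off for the current boot as well, `swapoff -a` can be used.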

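# Set the hostname according to the plan (on each machine, run only the command that matches it)
hostnamectl set-hostname k8s-master   # on k8s-master
hostnamectl set-hostname k8s-node1    # on k8s-node1
hostnamectl set-hostname k8s-node2    # on k8s-node2

# Add hosts entries on k8s-master (replace the example IPs with your machines' actual addresses)
cat >> /etc/hosts << EOF
192.168.113.120 k8s-master
192.168.113.121 k8s-node1
192.168.113.122 k8s-node2
EOF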
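Each hostname needs its own address, and a copy-paste slip in the hosts entries is easy to miss. The following is a rough sketch for spotting an IP that was assigned to more than one line. `duplicate_host_ips` is a hypothetical helper name, and it assumes the usual hosts layout with the IP in the first column.

```shell
# Hypothetical helper: print every IP address that appears on more than
# one non-comment line of a hosts-style file.
# $1: path to the file (defaults to /etc/hosts)
duplicate_host_ips() {
    awk '!/^[[:space:]]*#/ && NF >= 2 { print $1 }' "${1:-/etc/hosts}" | sort | uniq -d
}
```

An empty result means no address is listed twice. A reported address is not necessarily an error, but it is worth checking against the node plan.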

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system # apply
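The two net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so `modprobe br_netfilter` may be needed before `sysctl --system` can apply them. As a small sketch for confirming what the config file actually sets, the helper below reads a key from a sysctl-style file. `sysctl_conf_value` is a hypothetical name, and it assumes one `key = value` pair per line.

```shell
# Hypothetical helper: print the value assigned to a key in a
# sysctl-style config file (lines of the form "key = value").
# $1: config file, $2: key to look up
sysctl_conf_value() {
    awk -F= -v key="$2" '
        { k = $1; gsub(/[[:space:]]/, "", k) }
        k == key { v = $2; gsub(/[[:space:]]/, "", v); print v }
    ' "$1"
}
```

For example, `sysctl_conf_value /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables` reads the configured value, while `sysctl net.bridge.bridge-nf-call-iptables` shows the value the kernel is currently using.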

# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com

Add the Alibaba Cloud yum repository on k8s-master, k8s-node1, and k8s-node2

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install kubeadm, kubelet, and kubectl on k8s-master, k8s-node1, and k8s-node2

yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6
systemctl enable kubelet

# Set Docker's cgroup driver to systemd: edit /etc/docker/daemon.json and add the following key to its top-level JSON object
"exec-opts": ["native.cgroupdriver=systemd"]

# Restart Docker
systemctl daemon-reload
systemctl restart docker
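The `exec-opts` line above is only a fragment and has to sit inside a JSON object. As a minimal sketch, the helper below writes a complete daemon.json containing just that setting. `write_daemon_json` is a hypothetical name, and it assumes there is no existing daemon.json worth keeping, because the target file is overwritten.

```shell
# Hypothetical helper: write a minimal Docker daemon.json that only sets
# the systemd cgroup driver. Assumption: any existing file at the target
# path may be overwritten.
# $1: target path (defaults to /etc/docker/daemon.json)
write_daemon_json() {
    cat > "${1:-/etc/docker/daemon.json}" << 'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After restarting Docker, `docker info --format '{{.CgroupDriver}}'` should report `systemd` if the setting was picked up.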

Deploy the Kubernetes master on k8s-master

# Run on the master node
kubeadm init \
--apiserver-advertise-address=<Alibaba Cloud private IP> \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.23.6 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

Note down the kubeadm join command printed at the end of the output; it is needed later to join the worker nodes.

# After a successful init, copy and run the following configuration commands
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes

Join k8s-node1 and k8s-node2 to the cluster

Run the kubeadm join command noted earlier on both k8s-node1 and k8s-node2.

# The join command below is copied from the k8s-master console after a successful init
kubeadm join 192.168.113.120:6443 --token w34ha2.66if2c8nwmeat9o7 --discovery-token-ca-cert-hash sha256:20e2227554f8883811c01edd850f0cf2f396589d32b57b9984de3353a7389477

# If the token printed by init has been lost, retrieve it or create a new one with the commands below
# If the token has expired, create a new one
kubeadm token create

# If the token has not expired, list it
kubeadm token list

# Get the --discovery-token-ca-cert-hash value; prefix the result with sha256:
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
openssl dgst -sha256 -hex | sed 's/^.* //'
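The token and the certificate hash recovered above still have to be combined into a join command. The sketch below shows that assembly step; `build_join_command` is a hypothetical helper name, and the three arguments are whatever endpoint, token, and hash your own cluster produced.

```shell
# Hypothetical helper: assemble a kubeadm join command from its parts.
# $1: API server endpoint (host:port)
# $2: bootstrap token (from `kubeadm token list` or `kubeadm token create`)
# $3: CA certificate hash as printed by the openssl pipeline (no prefix)
build_join_command() {
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}
```

Alternatively, running `kubeadm token create --print-join-command` on the master creates a fresh token and prints a complete join command in one step.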

# Restart kubelet on the node
systemctl restart kubelet

Deploy the CNI network plugin on k8s-master

mkdir -p /opt/k8s
cd /opt/k8s
touch flannel.yml # then paste the manifest below into this file
kubectl apply -f flannel.yml

flannel.yml

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel

---

apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        # Debug-only override, commented out because a second "command" key conflicts with the one above:
        # command: ["/bin/bash", "-ce", "tail -f /dev/null"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: registry.cn-hangzhou.aliyuncs.com/liuk8s/flannel:v0.21.5
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/liuk8s/flannel-cni-plugin:v1.1.2
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/liuk8s/flannel:v0.21.5
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock
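The "Network" value in net-conf.json has to agree with the --pod-network-cidr given to kubeadm init (10.244.0.0/16 in this walkthrough). As a rough sketch for checking that before applying the manifest, the helper below pulls the value out of the file. `flannel_network` is a hypothetical name, and it assumes the key is written on one line as in the manifest above.

```shell
# Hypothetical helper: print the "Network" value from a flannel manifest.
# $1: path to the manifest (for example flannel.yml)
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}
```

For example, `[ "$(flannel_network flannel.yml)" = "10.244.0.0/16" ] && echo match` confirms that the manifest and the init flag use the same range.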

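Once the manifest has been applied, the pods in the kube-flannel namespace should all be in the Running state.

# Pods in all namespaces
kubectl get pod --all-namespaces

If a pod is not Running, inspect it with the following command (replace the pod name with your own):

kubectl describe po kube-flannel-ds-f6cgc -n kube-flannel

On k8s-master, check that all nodes are in the Ready state:

kubectl get nodes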

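Test with a sample nginx deployment on k8s-master

# Create the deployment
kubectl create deployment nginx --image=nginx

# Expose the port
kubectl expose deployment nginx --port=80 --type=NodePort

# View pod and service information
kubectl get pod,svc

Once the nginx pod is Running, open the service through a node IP and the assigned NodePort:

http://192.168.40.132:31113/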
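The port in that URL comes from the PORT(S) column of `kubectl get svc`, which for a NodePort service looks like `80:31113/TCP`. A small sketch of extracting the node port from such a value follows; `node_port_from_ports` is a hypothetical helper name, and it assumes a single port mapping in that column.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as
# "80:31113/TCP" (service port, node port, protocol).
# $1: the PORT(S) value of a NodePort service with one port mapping
node_port_from_ports() {
    local ports="$1"
    ports="${ports#*:}"            # drop the service port and the colon
    printf '%s\n' "${ports%%/*}"   # drop the protocol suffix
}
```

kubectl can also print the value directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.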

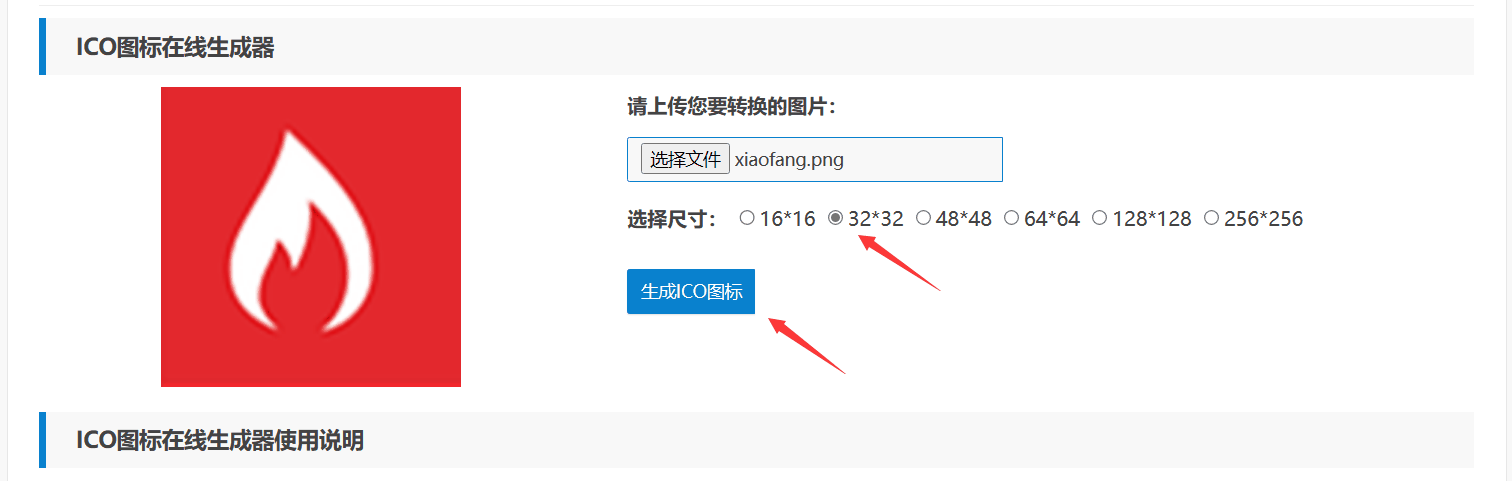

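I ran into one problem while deploying Nginx

At first I thought k8s-master was missing the file /run/flannel/subnet.env, but when I checked, the file was there. It took me a long time to realize that the pod is scheduled onto a worker node, so the node needs the file too. I therefore copied the file from k8s-master to the nodes.

On the affected node, create /run/flannel/subnet.env

with the following content: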

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
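Before retrying the deployment, it can help to confirm that the file on each node really contains all four settings. The following is a minimal sketch of such a check; `missing_subnet_env_keys` is a hypothetical helper name, and the list of keys is simply the four shown above.

```shell
# Hypothetical helper: print each expected key that is missing from a
# flannel subnet.env file. Prints nothing when all four keys are present.
# $1: path to the file (defaults to /run/flannel/subnet.env)
missing_subnet_env_keys() {
    local file="${1:-/run/flannel/subnet.env}" key
    for key in FLANNEL_NETWORK FLANNEL_SUBNET FLANNEL_MTU FLANNEL_IPMASQ; do
        grep -q "^${key}=" "$file" 2>/dev/null || printf '%s\n' "$key"
    done
}
```

flanneld normally writes this file itself once it is running, so a missing file can also be a sign that flanneld never started on that node. In that case the kube-flannel pod's logs and its container command are worth checking too.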
