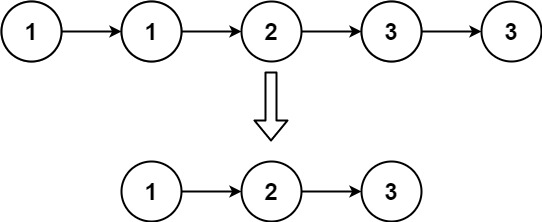

1. AVFrame core review: uint8_t *data[AV_NUM_DATA_POINTERS] and int linesize[AV_NUM_DATA_POINTERS]

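AVFrame stores decoded (raw) data, for both audio and video, for example YUV data or PCM data; see the first sentence of the documentation comment on the AVFrame struct.

Its core data members are: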

AV_NUM_DATA_POINTERS = 8;

uint8_t *data[AV_NUM_DATA_POINTERS];

int linesize[AV_NUM_DATA_POINTERS];

uint8_t *data[AV_NUM_DATA_POINTERS];

    data -->xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
            ^                ^              ^
            |                |              |
         data[0]          data[1]        data[2]

For example, when pix_fmt = AV_PIX_FMT_YUV420P, the data is stored plane by plane in Y, U, V order, that is:

    data -->YYYYYYYYYYYYYYYYYYYYYYYYUUUUUUUUUUUVVVVVVVVVVVV
            ^                       ^          ^
            |                       |          |
         data[0]                 data[1]    data[2]
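To make the picture above concrete, the sketch below computes where each plane would start if the three planes of an 8-bit YUV420P image were stored back to back with no row padding. That contiguity is an assumption made only for illustration: FFmpeg does not promise that planes are adjacent, and real frames may have padded rows. The names `Yuv420pLayout` and `tightYuv420pLayout` are hypothetical and not part of FFmpeg.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper type for this illustration, not an FFmpeg structure.
struct Yuv420pLayout {
    std::size_t offset[3]; // byte offset of the Y, U and V planes
    std::size_t size[3];   // byte size of each plane
};

// Computes plane offsets for a tightly packed 8-bit YUV420P image.
// Chroma planes are subsampled by 2 in both directions, rounding up.
inline Yuv420pLayout tightYuv420pLayout(int width, int height) {
    assert(width > 0 && height > 0);
    const std::size_t cw = static_cast<std::size_t>((width + 1) / 2);
    const std::size_t ch = static_cast<std::size_t>((height + 1) / 2);
    Yuv420pLayout l{};
    l.size[0] = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    l.size[1] = cw * ch;
    l.size[2] = cw * ch;
    l.offset[0] = 0;
    l.offset[1] = l.size[0];
    l.offset[2] = l.size[0] + l.size[1];
    return l;
}
```

For a 4x2 image this gives a Y plane of 8 bytes at offset 0, a U plane of 2 bytes at offset 8, and a V plane of 2 bytes at offset 10.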

int linesize[AV_NUM_DATA_POINTERS];

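linesize[i] is the size in bytes of one row of plane i. The field is needed because, for both YUV and RGB formats, the size of a row in bytes is not necessarily equal to the image width.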

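linesize >= width for planar 8-bit YUV (the row is rounded up to the alignment, so padding bytes may follow the visible pixels)

linesize >= width * pixel_size for packed RGB

The 16 + 16 figure matches plane_padding in get_video_buffer(), which is extra space allocated per plane rather than per row. Padding is needed during motion estimation and motion compensation to optimize the MV (motion vector) search and P/B frame reconstruction.

For packed RGB only one plane is used,

so for RGB24: data[0] = packed rgbrgbrgbrgb......

linesize[0] = width * 3 (plus any alignment padding)

data[1], data[2], data[3], linesize[1], linesize[2], and linesize[3] have no meaning for RGB.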
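Because a row may be longer than its visible pixels, code that walks a plane should step by linesize rather than by width. The sketch below shows that addressing rule on plain buffers. `pixelAt`, `copyPlane`, and `demoUnpad` are hypothetical helpers written for this illustration; FFmpeg ships its own routine for the copy case, av_image_copy_plane().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Address of byte column x in row y of a plane whose rows are 'linesize' bytes apart.
inline std::uint8_t* pixelAt(std::uint8_t* plane, int linesize, int x, int y) {
    return plane + static_cast<std::ptrdiff_t>(y) * linesize + x;
}

// Copies 'bytewidth' useful bytes per row between planes with different strides.
inline void copyPlane(std::uint8_t* dst, int dstLinesize,
                      const std::uint8_t* src, int srcLinesize,
                      int bytewidth, int height) {
    assert(bytewidth <= dstLinesize && bytewidth <= srcLinesize);
    for (int y = 0; y < height; ++y) {
        std::memcpy(dst + static_cast<std::ptrdiff_t>(y) * dstLinesize,
                    src + static_cast<std::ptrdiff_t>(y) * srcLinesize,
                    static_cast<std::size_t>(bytewidth));
    }
}

// Demo: remove the row padding from a 3x2 RGB24 image whose rows are 16 bytes apart.
inline std::vector<std::uint8_t> demoUnpad() {
    const int width = 3, height = 2, bytewidth = width * 3, stride = 16;
    std::vector<std::uint8_t> padded(stride * height, 0xFF); // 0xFF marks padding
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < bytewidth; ++x)
            *pixelAt(padded.data(), stride, x, y) = static_cast<std::uint8_t>(y * 10 + x);
    std::vector<std::uint8_t> tight(bytewidth * height);
    copyPlane(tight.data(), bytewidth, padded.data(), stride, bytewidth, height);
    return tight;
}
```

The padded source uses 16 bytes per row while only 9 carry pixel data; stepping by the stride keeps the second row from being read out of the first row's padding.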

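For code that verifies the number of bytes in linesize[x], see the linesize verification in section 2 (core functions). For reference, the AVFrame definition and its documentation comment follow.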

/**

* This structure describes decoded (raw) audio or video data.

*

* AVFrame must be allocated using av_frame_alloc(). Note that this only

* allocates the AVFrame itself, the buffers for the data must be managed

* through other means (see below).

* AVFrame must be freed with av_frame_free().

*

* AVFrame is typically allocated once and then reused multiple times to hold

* different data (e.g. a single AVFrame to hold frames received from a

* decoder). In such a case, av_frame_unref() will free any references held by

* the frame and reset it to its original clean state before it

* is reused again.

*

* The data described by an AVFrame is usually reference counted through the

* AVBuffer API. The underlying buffer references are stored in AVFrame.buf /

* AVFrame.extended_buf. An AVFrame is considered to be reference counted if at

* least one reference is set, i.e. if AVFrame.buf[0] != NULL. In such a case,

* every single data plane must be contained in one of the buffers in

* AVFrame.buf or AVFrame.extended_buf.

* There may be a single buffer for all the data, or one separate buffer for

* each plane, or anything in between.

*

* sizeof(AVFrame) is not a part of the public ABI, so new fields may be added

* to the end with a minor bump.

*

* Fields can be accessed through AVOptions, the name string used, matches the

* C structure field name for fields accessible through AVOptions. The AVClass

* for AVFrame can be obtained from avcodec_get_frame_class()

*/

typedef struct AVFrame {

#define AV_NUM_DATA_POINTERS 8

/**

* pointer to the picture/channel planes.

* This might be different from the first allocated byte. For video,

* it could even point to the end of the image data.

*

* All pointers in data and extended_data must point into one of the

* AVBufferRef in buf or extended_buf.

*

* Some decoders access areas outside 0,0 - width,height, please

* see avcodec_align_dimensions2(). Some filters and swscale can read

* up to 16 bytes beyond the planes, if these filters are to be used,

* then 16 extra bytes must be allocated.

*

* NOTE: Pointers not needed by the format MUST be set to NULL.

*

* @attention In case of video, the data[] pointers can point to the

* end of image data in order to reverse line order, when used in

* combination with negative values in the linesize[] array.

*/

uint8_t *data[AV_NUM_DATA_POINTERS];

/**

* For video, a positive or negative value, which is typically indicating

* the size in bytes of each picture line, but it can also be:

* - the negative byte size of lines for vertical flipping

* (with data[n] pointing to the end of the data

* - a positive or negative multiple of the byte size as for accessing

* even and odd fields of a frame (possibly flipped)

*

* For audio, only linesize[0] may be set. For planar audio, each channel

* plane must be the same size.

*

* For video the linesizes should be multiples of the CPUs alignment

* preference, this is 16 or 32 for modern desktop CPUs.

* Some code requires such alignment other code can be slower without

* correct alignment, for yet other it makes no difference.

*

* @note The linesize may be larger than the size of usable data -- there

* may be extra padding present for performance reasons.

*

* @attention In case of video, line size values can be negative to achieve

* a vertically inverted iteration over image lines.

*/

int linesize[AV_NUM_DATA_POINTERS];

/**

* pointers to the data planes/channels.

*

* For video, this should simply point to data[].

*

* For planar audio, each channel has a separate data pointer, and

* linesize[0] contains the size of each channel buffer.

* For packed audio, there is just one data pointer, and linesize[0]

* contains the total size of the buffer for all channels.

*

* Note: Both data and extended_data should always be set in a valid frame,

* but for planar audio with more channels that can fit in data,

* extended_data must be used in order to access all channels.

*/

uint8_t **extended_data;

/**

* @name Video dimensions

* Video frames only. The coded dimensions (in pixels) of the video frame,

* i.e. the size of the rectangle that contains some well-defined values.

*

* @note The part of the frame intended for display/presentation is further

* restricted by the @ref cropping "Cropping rectangle".

* @{

*/

int width, height;

/**

* @}

*/

/**

* number of audio samples (per channel) described by this frame

*/

int nb_samples;

/**

* format of the frame, -1 if unknown or unset

* Values correspond to enum AVPixelFormat for video frames,

* enum AVSampleFormat for audio)

*/

int format;

/**

* 1 -> keyframe, 0-> not

*/

int key_frame;

/**

* Picture type of the frame.

*/

enum AVPictureType pict_type;

/**

* Sample aspect ratio for the video frame, 0/1 if unknown/unspecified.

*/

AVRational sample_aspect_ratio;

/**

* Presentation timestamp in time_base units (time when frame should be shown to user).

*/

int64_t pts;

/**

* DTS copied from the AVPacket that triggered returning this frame. (if frame threading isn't used)

* This is also the Presentation time of this AVFrame calculated from

* only AVPacket.dts values without pts values.

*/

int64_t pkt_dts;

/**

* Time base for the timestamps in this frame.

* In the future, this field may be set on frames output by decoders or

* filters, but its value will be by default ignored on input to encoders

* or filters.

*/

AVRational time_base;

#if FF_API_FRAME_PICTURE_NUMBER

/**

* picture number in bitstream order

*/

attribute_deprecated

int coded_picture_number;

/**

* picture number in display order

*/

attribute_deprecated

int display_picture_number;

#endif

/**

* quality (between 1 (good) and FF_LAMBDA_MAX (bad))

*/

int quality;

/**

* for some private data of the user

*/

void *opaque;

/**

* When decoding, this signals how much the picture must be delayed.

* extra_delay = repeat_pict / (2*fps)

*/

int repeat_pict;

/**

* The content of the picture is interlaced.

*/

int interlaced_frame;

/**

* If the content is interlaced, is top field displayed first.

*/

int top_field_first;

/**

* Tell user application that palette has changed from previous frame.

*/

int palette_has_changed;

#if FF_API_REORDERED_OPAQUE

/**

* reordered opaque 64 bits (generally an integer or a double precision float

* PTS but can be anything).

* The user sets AVCodecContext.reordered_opaque to represent the input at

* that time,

* the decoder reorders values as needed and sets AVFrame.reordered_opaque

* to exactly one of the values provided by the user through AVCodecContext.reordered_opaque

*

* @deprecated Use AV_CODEC_FLAG_COPY_OPAQUE instead

*/

attribute_deprecated

int64_t reordered_opaque;

#endif

/**

* Sample rate of the audio data.

*/

int sample_rate;

#if FF_API_OLD_CHANNEL_LAYOUT

/**

* Channel layout of the audio data.

* @deprecated use ch_layout instead

*/

attribute_deprecated

uint64_t channel_layout;

#endif

/**

* AVBuffer references backing the data for this frame. All the pointers in

* data and extended_data must point inside one of the buffers in buf or

* extended_buf. This array must be filled contiguously -- if buf[i] is

* non-NULL then buf[j] must also be non-NULL for all j < i.

*

* There may be at most one AVBuffer per data plane, so for video this array

* always contains all the references. For planar audio with more than

* AV_NUM_DATA_POINTERS channels, there may be more buffers than can fit in

* this array. Then the extra AVBufferRef pointers are stored in the

* extended_buf array.

*/

AVBufferRef *buf[AV_NUM_DATA_POINTERS];

/**

* For planar audio which requires more than AV_NUM_DATA_POINTERS

* AVBufferRef pointers, this array will hold all the references which

* cannot fit into AVFrame.buf.

*

* Note that this is different from AVFrame.extended_data, which always

* contains all the pointers. This array only contains the extra pointers,

* which cannot fit into AVFrame.buf.

*

* This array is always allocated using av_malloc() by whoever constructs

* the frame. It is freed in av_frame_unref().

*/

AVBufferRef **extended_buf;

/**

* Number of elements in extended_buf.

*/

int nb_extended_buf;

AVFrameSideData **side_data;

int nb_side_data;

/**

* @defgroup lavu_frame_flags AV_FRAME_FLAGS

* @ingroup lavu_frame

* Flags describing additional frame properties.

*

* @{

*/

/**

* The frame data may be corrupted, e.g. due to decoding errors.

*/

#define AV_FRAME_FLAG_CORRUPT (1 << 0)

/**

* A flag to mark the frames which need to be decoded, but shouldn't be output.

*/

#define AV_FRAME_FLAG_DISCARD (1 << 2)

/**

* @}

*/

/**

* Frame flags, a combination of @ref lavu_frame_flags

*/

int flags;

/**

* MPEG vs JPEG YUV range.

* - encoding: Set by user

* - decoding: Set by libavcodec

*/

enum AVColorRange color_range;

enum AVColorPrimaries color_primaries;

enum AVColorTransferCharacteristic color_trc;

/**

* YUV colorspace type.

* - encoding: Set by user

* - decoding: Set by libavcodec

*/

enum AVColorSpace colorspace;

enum AVChromaLocation chroma_location;

/**

* frame timestamp estimated using various heuristics, in stream time base

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int64_t best_effort_timestamp;

/**

* reordered pos from the last AVPacket that has been input into the decoder

* - encoding: unused

* - decoding: Read by user.

*/

int64_t pkt_pos;

#if FF_API_PKT_DURATION

/**

* duration of the corresponding packet, expressed in

* AVStream->time_base units, 0 if unknown.

* - encoding: unused

* - decoding: Read by user.

*

* @deprecated use duration instead

*/

attribute_deprecated

int64_t pkt_duration;

#endif

/**

* metadata.

* - encoding: Set by user.

* - decoding: Set by libavcodec.

*/

AVDictionary *metadata;

/**

* decode error flags of the frame, set to a combination of

* FF_DECODE_ERROR_xxx flags if the decoder produced a frame, but there

* were errors during the decoding.

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int decode_error_flags;

#define FF_DECODE_ERROR_INVALID_BITSTREAM 1

#define FF_DECODE_ERROR_MISSING_REFERENCE 2

#define FF_DECODE_ERROR_CONCEALMENT_ACTIVE 4

#define FF_DECODE_ERROR_DECODE_SLICES 8

#if FF_API_OLD_CHANNEL_LAYOUT

/**

* number of audio channels, only used for audio.

* - encoding: unused

* - decoding: Read by user.

* @deprecated use ch_layout instead

*/

attribute_deprecated

int channels;

#endif

/**

* size of the corresponding packet containing the compressed

* frame.

* It is set to a negative value if unknown.

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int pkt_size;

/**

* For hwaccel-format frames, this should be a reference to the

* AVHWFramesContext describing the frame.

*/

AVBufferRef *hw_frames_ctx;

/**

* AVBufferRef for free use by the API user. FFmpeg will never check the

* contents of the buffer ref. FFmpeg calls av_buffer_unref() on it when

* the frame is unreferenced. av_frame_copy_props() calls create a new

* reference with av_buffer_ref() for the target frame's opaque_ref field.

*

* This is unrelated to the opaque field, although it serves a similar

* purpose.

*/

AVBufferRef *opaque_ref;

/**

* @anchor cropping

* @name Cropping

* Video frames only. The number of pixels to discard from the the

* top/bottom/left/right border of the frame to obtain the sub-rectangle of

* the frame intended for presentation.

* @{

*/

size_t crop_top;

size_t crop_bottom;

size_t crop_left;

size_t crop_right;

/**

* @}

*/

/**

* AVBufferRef for internal use by a single libav* library.

* Must not be used to transfer data between libraries.

* Has to be NULL when ownership of the frame leaves the respective library.

*

* Code outside the FFmpeg libs should never check or change the contents of the buffer ref.

*

* FFmpeg calls av_buffer_unref() on it when the frame is unreferenced.

* av_frame_copy_props() calls create a new reference with av_buffer_ref()

* for the target frame's private_ref field.

*/

AVBufferRef *private_ref;

/**

* Channel layout of the audio data.

*/

AVChannelLayout ch_layout;

/**

* Duration of the frame, in the same units as pts. 0 if unknown.

*/

int64_t duration;

} AVFrame;

2. Core functions: av_frame_alloc() and av_frame_get_buffer()

AVFrame* avframe1 = av_frame_alloc();

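Judging from its implementation, av_frame_alloc() only allocates the AVFrame struct itself and sets its fields to default values. None of the buffers that the frame needs for its data are allocated.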

int av_frame_get_buffer(AVFrame *frame, int align);

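Allocates the internal buffers of the frame that is passed in.

First parameter: the frame to allocate buffers for.

Second parameter: the alignment to use for the allocation. If 0 is passed, a default is chosen for the current CPU; in the author's test on 32-bit Windows this value was 32. Normally pass 0 and use the default.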

/**

* Allocate new buffer(s) for audio or video data.

*

* The following fields must be set on frame before calling this function:

* - format (pixel format for video, sample format for audio)

* - width and height for video

* - nb_samples and ch_layout for audio

*

* This function will fill AVFrame.data and AVFrame.buf arrays and, if

* necessary, allocate and fill AVFrame.extended_data and AVFrame.extended_buf.

* For planar formats, one buffer will be allocated for each plane.

*

* @warning: if frame already has been allocated, calling this function will

* leak memory. In addition, undefined behavior can occur in certain

* cases.

*

* @param frame frame in which to store the new buffers.

* @param align Required buffer size alignment. If equal to 0, alignment will be

* chosen automatically for the current CPU. It is highly

* recommended to pass 0 here unless you know what you are doing.

*

* @return 0 on success, a negative AVERROR on error.

*/

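int av_frame_get_buffer(AVFrame *frame, int align);

Internal implementation:

As the code shows, a frame is treated as video only if width and height are both > 0.

In other words, for video, the width and height fields of the AVFrame must be set before this function is called.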

int av_frame_get_buffer(AVFrame *frame, int align)

{

if (frame->format < 0)

return AVERROR(EINVAL);

FF_DISABLE_DEPRECATION_WARNINGS

if (frame->width > 0 && frame->height > 0)

return get_video_buffer(frame, align);

else if (frame->nb_samples > 0 &&

(av_channel_layout_check(&frame->ch_layout)

#if FF_API_OLD_CHANNEL_LAYOUT

|| frame->channel_layout || frame->channels > 0

#endif

))

return get_audio_buffer(frame, align);

FF_ENABLE_DEPRECATION_WARNINGS

return AVERROR(EINVAL);

}

So what happens if they are not set?

Trying it out shows that the call fails.

Set width and height and try again.

It still fails: Invalid argument
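The reason for this result can be traced by modelling the checks in the av_frame_get_buffer() source quoted above. The sketch below is a simplified stand-in, not FFmpeg code: `FrameParams` and `checkFrameParams` are hypothetical names, the audio branch is reduced to a plain channel count, and the one fact assumed beyond the quoted source is that av_frame_alloc() initializes format to -1.

```cpp
#include <cassert>
#include <string>

// Hypothetical mirror of the fields inspected by av_frame_get_buffer().
struct FrameParams {
    int format = -1;     // assumption: av_frame_alloc() leaves format at -1
    int width = 0;
    int height = 0;
    int nb_samples = 0;
    int channels = 0;    // simplified stand-in for ch_layout
};

// Returns which branch the quoted checks would take for these parameters.
inline std::string checkFrameParams(const FrameParams& f) {
    if (f.format < 0)
        return "EINVAL";              // rejected before width/height are examined
    if (f.width > 0 && f.height > 0)
        return "video";
    if (f.nb_samples > 0 && f.channels > 0)
        return "audio";
    return "EINVAL";                  // neither a video nor an audio description
}
```

With only width = 300 and height = 600 set, the model returns EINVAL because format is still negative. Setting a non-negative format as well selects the video branch.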

void testAVframe() {

cout << avcodec_configuration() << endl;

AVFrame* avframe1 = av_frame_alloc();

cout << "debug1...." << endl;

avframe1->width = 300;

avframe1->height = 600;

int ret = 0;

ret = av_frame_get_buffer(avframe1, 0);

if (ret < 0 ) {

//On failure a negative value is returned; av_strerror() turns it into a readable message

char buf[1024] = { 0 };

av_strerror(ret, buf, sizeof(buf));

cout << buf << endl;

}

cout << "debug2......" << endl;

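av_frame_free(&avframe1); //release the frame allocated above

}

The next step is to look at the function that the source calls for video: get_video_buffer(frame, align);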

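The source is in frame.c. There, av_pix_fmt_desc_get(frame->format) returns a descriptor desc, and if desc is NULL the function also returns an error. Note that a freshly allocated frame has format = -1, so the frame->format < 0 check at the top of av_frame_get_buffer() already returns AVERROR(EINVAL) before get_video_buffer() is reached. Either way, frame->format must be set, as shown here: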

static int get_video_buffer(AVFrame *frame, int align)

{

const AVPixFmtDescriptor *desc = av_pix_fmt_desc_get(frame->format);

int ret, i, padded_height, total_size;

int plane_padding = FFMAX(16 + 16/*STRIDE_ALIGN*/, align);

ptrdiff_t linesizes[4];

size_t sizes[4];

if (!desc)

return AVERROR(EINVAL);

To verify this, set format on the frame and test again: the call now succeeds. With the buffers allocated, the key fields of avframe1 can then be printed.

void testAVframe() {

cout << avcodec_configuration() << endl;

AVFrame* avframe1 = av_frame_alloc();

cout << "debug1...." << endl;

avframe1->width = 300;

avframe1->height = 600;

//Set format to AV_PIX_FMT_YUV420P and test again

avframe1->format = AV_PIX_FMT_YUV420P;

int ret = 0;

ret = av_frame_get_buffer(avframe1, 0);

if (ret < 0 ) {

//On failure a negative value is returned; av_strerror() turns it into a readable message

char buf[1024] = { 0 };

av_strerror(ret, buf, sizeof(buf));

cout << buf << endl;

}

cout << "debug2......" << endl;

av_frame_free(&avframe1); //release the frame allocated above

}

Verifying the byte counts in linesize[x]
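Before running the FFmpeg test, it can help to predict the numbers. The sketch below is a simplified model of how a row size could be derived for 8-bit YUV420P and RGB24 when the alignment is 32 bytes. It is an approximation written for this article, not the code in frame.c; the names `alignUp` and `modelLinesizes` are hypothetical, and the real default alignment depends on the CPU.

```cpp
#include <array>
#include <cassert>

// Rounds v up to the next multiple of a (a must be a power of two).
inline int alignUp(int v, int a) { return (v + a - 1) & ~(a - 1); }

// Simplified model: widen the image in power-of-two steps until the row size
// of plane 0 is aligned, then align the row size of every plane in use.
inline std::array<int, 3> modelLinesizes(int width, bool rgb24, int align = 32) {
    assert(width > 0 && align > 0 && (align & (align - 1)) == 0);
    std::array<int, 3> ls{};
    for (int step = 1; step <= align; step += step) {
        const int w = alignUp(width, step);
        if (rgb24) {
            ls[0] = w * 3;              // one packed plane, 3 bytes per pixel
            ls[1] = 0;
            ls[2] = 0;
        } else {
            const int cw = (w + 1) / 2; // chroma is half width, rounded up
            ls[0] = w;
            ls[1] = cw;
            ls[2] = cw;
        }
        if ((ls[0] & (align - 1)) == 0)
            break;
    }
    for (int& v : ls)
        if (v != 0)
            v = alignUp(v, align);
    return ls;
}
```

Under these assumptions the model reproduces the values the author recorded in the test: 640/320/320 and 672/352/352 for YUV420P at widths 640 and 641, and 1920 and 2016 for RGB24.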

//Allocate a width x 111 frame in the given pixel format and print linesize[0..2]

static void printLinesizes(int width, AVPixelFormat fmt, const char* label) {

AVFrame* frame = av_frame_alloc();

if (!frame) return;

//width, height and format must all be set; with only width and height, av_frame_get_buffer() still fails

frame->width = width;

frame->height = 111;

frame->format = fmt;

int ret = av_frame_get_buffer(frame, 0);

if (ret < 0) {

//On failure a negative value is returned; av_strerror() turns it into a readable message

char buf[1024] = { 0 };

av_strerror(ret, buf, sizeof(buf));

cout << buf << endl;

} else {

for (int i = 0; i < 3; i++)

cout << " " << width << " *111 " << label << " case , linesize[" << i << "] = " << frame->linesize[i] << endl;

}

av_frame_free(&frame);

}

void testAVframe() {

cout << avcodec_configuration() << endl;

//The values in the comments below were observed by the author with a default alignment of 32 bytes

printLinesizes(640, AV_PIX_FMT_YUV420P, "yuv420p"); //640, 320, 320

printLinesizes(641, AV_PIX_FMT_YUV420P, "yuv420p"); //672, 352, 352: each row is rounded up because of the 32-byte alignment

printLinesizes(640, AV_PIX_FMT_RGB24, "AV_PIX_FMT_RGB24"); //1920, 0, 0: 640 is a multiple of 32, so 640 * 3 bytes per pixel = 1920

printLinesizes(641, AV_PIX_FMT_RGB24, "AV_PIX_FMT_RGB24"); //2016, 0, 0: 641 is not a multiple of 32, so the extra pixel costs a full 32-pixel unit, 32 * 3 = 96 bytes, and 96 + 1920 = 2016

}

3. Core functions: av_frame_ref(), av_frame_unref(AVFrame *frame), av_frame_free(AVFrame **frame), and av_buffer_get_ref_count(const AVBufferRef *buf)

int av_frame_ref(AVFrame *dst, const AVFrame *src);

av_frame_ref() increases the reference count of the underlying buffers by 1, and av_frame_unref() decreases it by 1.

void testAVframe1() {

int ret = 0;

AVFrame* avframe1 = av_frame_alloc();

avframe1->width = 641;

avframe1->height = 111;

avframe1->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(avframe1, 0);

if (ret < 0) {

//On failure a negative value is returned; av_strerror() turns it into a readable message

char buf[1024] = { 0 };

av_strerror(ret, buf, sizeof(buf));

cout << buf << endl;

}

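//buf[0] is already non-NULL here even though no pixel data has been written, because av_frame_get_buffer() allocated the buffers and stored their references in buf[]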

if (avframe1->buf[0])

{

//av_buffer_get_ref_count() reports a reference count of 1 here

cout << "frame1 ref count = " <<

av_buffer_get_ref_count(avframe1->buf[0]); // thread-safe

cout << endl;

}

AVFrame* avframe2 = av_frame_alloc();

ret = av_frame_ref(avframe2, avframe1);

if (ret <0 ) {

//On failure a negative value is returned; av_strerror() turns it into a readable message

char buf[1024] = { 0 };

av_strerror(ret, buf, sizeof(buf));

cout << buf << endl;

}

if (avframe1->buf[0])

{

//av_buffer_get_ref_count() reports a reference count of 2 here

cout << "frame1 ref count = " <<

av_buffer_get_ref_count(avframe1->buf[0]); // thread-safe

cout << endl;

}

if (avframe2->buf[0])

{

//av_buffer_get_ref_count() reports a reference count of 2 here as well, because both frames share the same buffer

cout << "frame2 ref count = " <<

av_buffer_get_ref_count(avframe2->buf[0]); // thread-safe

cout << endl;

}

cout << "debug2...." << endl;

av_frame_unref(avframe2);

if (avframe1->buf[0])

{

//av_buffer_get_ref_count() reports a reference count of 1 again

cout << "frame111111 ref count = " <<

av_buffer_get_ref_count(avframe1->buf[0]); // thread-safe

cout << endl;

}

//At this point av_frame_unref(avframe2) has only released the data held by avframe2; the avframe2 struct itself still exists

if (avframe2->buf[0])

{

//This branch is never reached, because av_frame_unref() reset buf[0] to NULL

cout << "frame222222 ref count = " <<

av_buffer_get_ref_count(avframe2->buf[0]); // thread-safe

cout << endl;

}

av_frame_free(&avframe2);

cout << "debug3...." << endl;

av_frame_free(&avframe1);

}
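The counts printed by the test (1, 2, 2, 1) follow the usual shared-ownership pattern. As an analogy only, the same sequence can be reproduced with std::shared_ptr, where copying the pointer plays the role of av_frame_ref() and reset() plays the role of av_frame_unref(). AVBuffer is a separate C implementation, so this is not how FFmpeg is written, and `refCountSequence` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

// Returns the owner counts observed at each step of the ref/unref sequence.
inline std::vector<long> refCountSequence() {
    std::vector<long> counts;

    // "frame1" owns a freshly allocated buffer: count is 1.
    auto frame1 = std::make_shared<std::vector<std::uint8_t>>(672 * 111);
    assert(frame1 != nullptr);
    counts.push_back(frame1.use_count());

    // "frame2" references the same buffer (like av_frame_ref): count is 2
    // no matter which of the two owners is asked.
    std::shared_ptr<std::vector<std::uint8_t>> frame2 = frame1;
    counts.push_back(frame1.use_count());
    counts.push_back(frame2.use_count());

    // Dropping frame2's reference (like av_frame_unref): count is back to 1.
    frame2.reset();
    counts.push_back(frame1.use_count());

    // frame2 is now empty, which mirrors avframe2->buf[0] being NULL.
    counts.push_back(frame2 ? 1 : 0);
    return counts;
}
```

As in the FFmpeg test, the pixel storage is released only when the last owner goes away, which corresponds to the final av_frame_free(&avframe1) call.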