目录

目录

1.水域识别

2.模型介绍

3.文件框架

4.代码示例

4.1 data_preprocess.py

4.2 model1.0.py

4.3 train2.0.py

4.4 predict.py

4.5 运行结果

5.总结

1.水域识别

人眼看见河道可以直接分辨出这是河道,但是如何让计算机也能识别出这是河道呢?

这里就用到了深度学习之图像处理技术。

想要识别河道中的河水,对拍摄的河道图片进行编辑,可以创建两个图片文件的集合,一个文件中存放具体的河道图片,另一个存放带有标注的河道水域的分割掩码(标签),然后进行模型训练,训练一个可以识别河道水域的深度学习模型。

原始图像

掩码图像

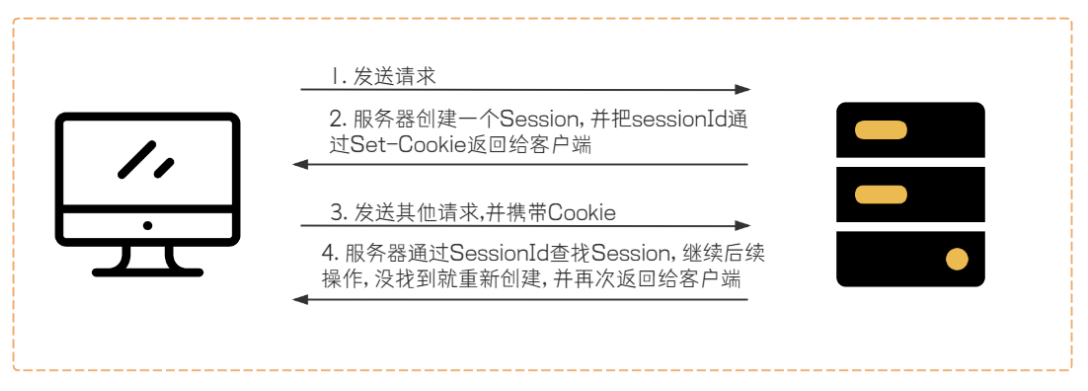

2.模型介绍

使用深度学习框架TensorFlow和Keras进行图像分割任务。

模型的训练首先要进行数据处理,然后再进行模型训练。

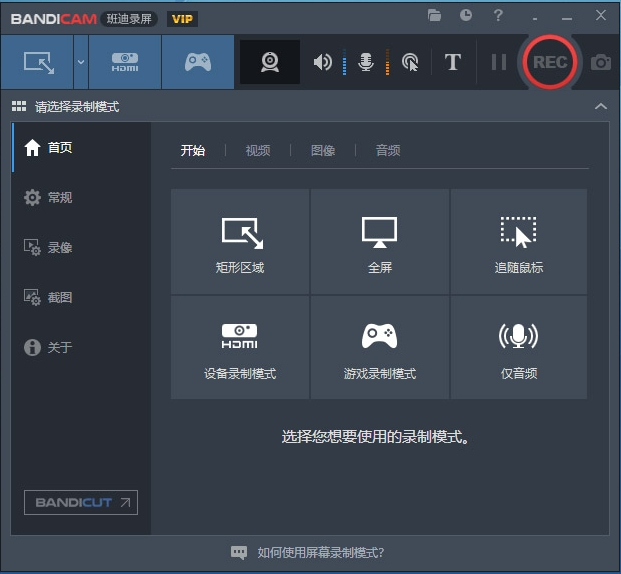

3.文件框架

data2.0中的三个数据文件夹分别是训练集,验证集和测试集的图片。

data_preprocess.py是图片预处理代码。

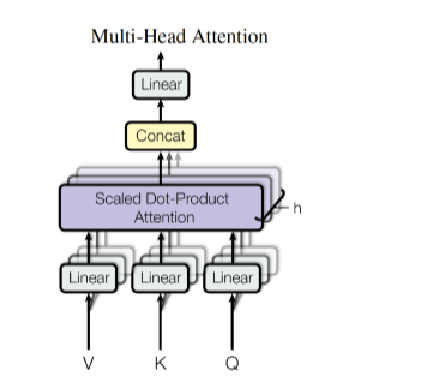

model1.0.py是定义了一个基于U-Net架构的卷积神经网络模型,用来做图像分割任务。

train2.0.py是训练程序,最重要的是要运行这个。

predict.py是调用训练后的模型的代码。

4.代码示例

4.1 data_preprocess.py

import os

import cv2

import numpy as np

from tensorflow.keras.preprocessing.image import img_to_array, load_img

# 图像大小和通道数

IMG_HEIGHT = 256

IMG_WIDTH = 256

IMG_CHANNELS = 3

# 路径设置

image_dir = './River Water Segmentation/data2.0/train/images'

mask_dir = './River Water Segmentation/data2.0/train/masks'

def preprocess_images(image_dir, target_size=(IMG_HEIGHT, IMG_WIDTH)):

images = []

for filename in os.listdir(image_dir):

img_path = os.path.join(image_dir, filename)

img = load_img(img_path, target_size=target_size)

img_array = img_to_array(img)

images.append(img_array)

return np.array(images)

def preprocess_masks(mask_dir, target_size=(IMG_HEIGHT, IMG_WIDTH)):

masks = []

for filename in os.listdir(mask_dir):

mask_path = os.path.join(mask_dir, filename)

mask = load_img(mask_path, target_size=target_size, color_mode="grayscale")

mask_array = img_to_array(mask) / 255.0 # 将掩码值标准化为0和1

masks.append(mask_array)

return np.array(masks)

if __name__ == "__main__":

images = preprocess_images(image_dir)

masks = preprocess_masks(mask_dir)

print(f"Processed {len(images)} images and {len(masks)} masks.")

4.2 model1.0.py

import tensorflow as tf

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Conv2DTranspose, concatenate, Input, Dropout

from tensorflow.keras.models import Model

def build_unet(input_shape):

inputs = Input(input_shape)

# 编码器部分 (下采样)

c1 = Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(inputs)

c1 = Dropout(0.1)(c1)

c1 = Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c1)

p1 = MaxPooling2D((2, 2))(c1)

c2 = Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p1)

c2 = Dropout(0.1)(c2)

c2 = Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c2)

p2 = MaxPooling2D((2, 2))(c2)

c3 = Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p2)

c3 = Dropout(0.2)(c3)

c3 = Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c3)

p3 = MaxPooling2D((2, 2))(c3)

c4 = Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p3)

c4 = Dropout(0.3)(c4)

c4 = Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c4)

p4 = MaxPooling2D((2, 2))(c4)

# 底层

c5 = Conv2D(1024, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p4)

c5 = Dropout(0.4)(c5)

c5 = Conv2D(1024, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c5)

# 解码器部分 (上采样)

u6 = Conv2DTranspose(512, (2, 2), strides=(2, 2), padding='same')(c5)

u6 = concatenate([u6, c4])

c6 = Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u6)

c6 = Dropout(0.3)(c6)

c6 = Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c6)

u7 = Conv2DTranspose(256, (2, 2), strides=(2, 2), padding='same')(c6)

u7 = concatenate([u7, c3])

c7 = Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u7)

c7 = Dropout(0.2)(c7)

c7 = Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c7)

u8 = Conv2DTranspose(128, (2, 2), strides=(2, 2), padding='same')(c7)

u8 = concatenate([u8, c2])

c8 = Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u8)

c8 = Dropout(0.1)(c8)

c8 = Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c8)

u9 = Conv2DTranspose(64, (2, 2), strides=(2, 2), padding='same')(c8)

u9 = concatenate([u9, c1], axis=3)

c9 = Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u9)

c9 = Dropout(0.1)(c9)

c9 = Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c9)

outputs = Conv2D(1, (1, 1), activation='sigmoid')(c9) # 单通道输出用于二分类分割

model = Model(inputs=[inputs], outputs=[outputs])

return model

if __name__ == "__main__":

# 输入形状 (256, 256, 3) 用于 RGB 图像

model = build_unet((256, 256, 3))

model.summary()

4.3 train2.0.py

import numpy as np

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from model import build_unet

from data_preprocess import preprocess_images, preprocess_masks

# 设置路径

train_images_path = './River Water Segmentation/data2.0/train/images'

train_masks_path = './River Water Segmentation/data2.0/train/masks'

val_images_path = './River Water Segmentation/data2.0/train/images'

val_masks_path = './River Water Segmentation/data2.0/train/masks'

# 加载数据

train_images = preprocess_images(train_images_path)

train_masks = preprocess_masks(train_masks_path)

val_images = preprocess_images(val_images_path)

val_masks = preprocess_masks(val_masks_path)

# 数据增强

data_gen_args = dict(rotation_range=0.2,

width_shift_range=0.05,

height_shift_range=0.05,

shear_range=0.05,

zoom_range=0.05,

horizontal_flip=True,

fill_mode='nearest')

image_datagen = ImageDataGenerator(**data_gen_args)

mask_datagen = ImageDataGenerator(**data_gen_args)

image_datagen.fit(train_images)

mask_datagen.fit(train_masks)

# 编译模型

model.compile(optimizer=Adam(learning_rate=1e-4), loss='binary_crossentropy', metrics=['accuracy'])

# 设置回调函数

checkpoint = ModelCheckpoint('models/unet_model.h5', save_best_only=True, monitor='val_loss', mode='min')

early_stopping = EarlyStopping(monitor='val_loss', patience=10, restore_best_weights=True)

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.2, patience=5, min_lr=1e-6)

# 训练模型

history = model.fit(image_datagen.flow(train_images, train_masks, batch_size=16),

validation_data=(val_images, val_masks),

epochs=50,

callbacks=[checkpoint, early_stopping, reduce_lr])

# 保存最终模型

model.save('./River Water Segmentation/models/6.0model.h5')

4.4 predict.py

import numpy as np

from tensorflow.keras.models import load_model

from tensorflow.keras.preprocessing import image

import matplotlib.pyplot as plt

import cv2

# 加载模型

model = load_model('./River Water Segmentation/models/unet_final_model.h5')

# 预测函数

def predict_image(image_path):

img = image.load_img(image_path, target_size=(256, 256))

img_array = image.img_to_array(img) / 255.0

img_array = np.expand_dims(img_array, axis=0)

# 预测掩码

pred_mask = model.predict(img_array)

pred_mask = (pred_mask > 0.5).astype(np.uint8) # 二值化处理

# 显示原图和预测掩码

plt.figure(figsize=(10, 5))

plt.subplot(1, 2, 1)

plt.imshow(img)

plt.title('Original')

plt.subplot(1, 2, 2)

plt.imshow(pred_mask[0, :, :, 0], cmap='gray')

plt.title('Predicted')

plt.show()

# 示例预测

predict_image('./River Water Segmentation/data/test/river/water_body_27.jpg')

4.5 运行结果

5.总结

模型主要用到了U-net深度学习模型,它通过编码器-解码器结构,能够有效地捕捉图像的上下文信息并进行精细的分割。

关于此例程可以考虑用在其他的图像分割对象上,比如森林区域识别,沙漠区域识别,改进代码可以进一步提高准确率,如果需要帮助或者合作,请私信我。

还请转载文章或者使用代码请备注来源呀!