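Requirements:

1. Scrape rental listings from the Changsha housing site (the Changsha rentals page of Beike Zufang, 长沙贝壳租房), including each listing's title, title link, price, and address.

2. Crawl multiple pages.

3. Parse the data with bs4.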

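Analysis

1. Capture the correct request and confirm it by checking its response content.

Once the right one is found, copy its request URL.

Then note the pagination parameter and the Referer header.

Referer: identifies the page the request came from.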

    headers = {
        'Referer': 'https://cs.lianjia.com/zufang/pg1/',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36'
    }
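As a sketch of how the Referer could follow the page being crawled rather than staying fixed at pg1, the helper below builds the headers for a given page and points Referer at the previous page. `build_headers` is a hypothetical helper, and whether the site checks Referer per page at all is an assumption, not something established here.

```python
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36"
)


def build_headers(page):
    """Hypothetical helper: headers for requesting listing page `page`."""
    # Assumption: a browser would arrive from the previous page;
    # page 1 simply refers to itself.
    referer_page = max(page - 1, 1)
    return {
        "Referer": "https://cs.lianjia.com/zufang/pg{}/".format(referer_page),
        "User-Agent": USER_AGENT,
    }


print(build_headers(3)["Referer"])
```

The returned dict can be passed as the `headers` argument of `requests.get`, in place of the fixed dict above.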

The page number is carried in the URL path, for example pg3 for page 3.

    for i in range(1, 6):
        print('==========', 'Now on page {}'.format(i))
        url = 'https://cs.lianjia.com/zufang/pg{}/#contentList'.format(i)
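The `#contentList` part of these URLs is a fragment. HTTP clients do not send fragments to the server, so it is the page number in the path that selects the page. A minimal sketch of generating the page URLs without it, with `page_urls` and `PAGE_TEMPLATE` as hypothetical names:

```python
from urllib.parse import urldefrag

PAGE_TEMPLATE = "https://cs.lianjia.com/zufang/pg{}/"


def page_urls(first, last):
    """Hypothetical helper: URLs for pages first..last, inclusive."""
    return [PAGE_TEMPLATE.format(n) for n in range(first, last + 1)]


# urldefrag splits off the fragment, leaving the part that is actually requested.
bare_url, fragment = urldefrag("https://cs.lianjia.com/zufang/pg3/#contentList")
print(bare_url)
print(fragment)

for page_url in page_urls(1, 5):
    print(page_url)
```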

Select the elements to parse

    def parse_data(data):
        s = BeautifulSoup(data, 'lxml')
        title = s.find_all('a', {"class": "twoline"})  # titles
        price = s.find_all('span', {'class': 'content__list--item-price'})  # prices
        content = s.find_all('p', {'class': 'content__list--item--des'})  # addresses
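The three `find_all` calls return three independent lists, so if one listing lacks a field, the lists fall out of step with each other. A sketch of an alternative that reads the fields card by card is below. It assumes each listing sits inside a wrapper `div` with class `content__list--item`; that wrapper class, the `parse_cards` helper, and the sample HTML are all illustrative assumptions and should be checked against the real page. It uses the built-in `html.parser` so the sketch does not depend on lxml.

```python
from bs4 import BeautifulSoup

# Made-up HTML that mimics the class names used above. The wrapper class
# "content__list--item" is an assumption, not taken from the source.
SAMPLE_HTML = """
<div class="content__list--item">
  <a class="twoline" href="/zufang/CS0001.html"> Listing A </a>
  <p class="content__list--item--des">District A / 50 m2</p>
  <span class="content__list--item-price">1500 yuan/month</span>
</div>
<div class="content__list--item">
  <a class="twoline" href="/zufang/CS0002.html"> Listing B </a>
  <span class="content__list--item-price">2000 yuan/month</span>
</div>
"""


def parse_cards(html):
    """Hypothetical helper: one dict per listing card; missing fields become None."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.find_all("div", class_="content__list--item"):
        title = card.find("a", class_="twoline")
        price = card.find("span", class_="content__list--item-price")
        desc = card.find("p", class_="content__list--item--des")
        rows.append({
            "title": title.get_text(strip=True) if title else None,
            "href": title["href"] if title else None,
            "price": price.get_text(strip=True) if price else None,
            "address": desc.get_text(strip=True) if desc else None,
        })
    return rows


for row in parse_cards(SAMPLE_HTML):
    print(row)
```

The second sample card has no address, and its price still stays attached to the right title, which is the point of iterating per card.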

Process the extracted addresses, prices, and listing titles

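    for titles, prices, contents in zip(title, price, content):
        # print(titles)  # target link form: https://cs.lianjia.com/zufang/CS1777573960666316800.html
        name = titles.get_text().strip()  # get_text() returns the text; strip() trims surrounding whitespace
        # Drop any leading "/" from href so the joined link has no double slash
        link = 'https://cs.lianjia.com/' + titles['href'].lstrip('/')
        p = prices.get_text()
        c = contents.get_text()
        cs = re.sub('/|\n', '', c).replace(' ', '')  # remove "/", newlines, and spaces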
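The two text-handling steps above, cleaning the address and building the absolute link, can be tried in isolation. The sketch below uses only the standard library; `clean_description`, `absolute_link`, and the sample string are illustrative, not part of the original script. `urljoin` is used for the link so there is no need to track whether the base ends with a slash or the href starts with one.

```python
import re
from urllib.parse import urljoin

BASE_URL = "https://cs.lianjia.com/"


def clean_description(text):
    """Same cleaning as above: drop "/" and newlines, then drop spaces."""
    return re.sub(r"/|\n", "", text).replace(" ", "")


def absolute_link(href):
    """Resolve a site-relative href against the site root."""
    return urljoin(BASE_URL, href)


raw = "\n  District A / Road B /\n 50 m2 \n"  # made-up sample text
print(clean_description(raw))
print(absolute_link("/zufang/CS1777573960666316800.html"))
```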

Example code:

    import re

    import requests
    from bs4 import BeautifulSoup

    # Google Proxy SwitchyOmega installation guide: https://www.cnblogs.com/shyzh/p/11684159.html

    url = "https://cs.lianjia.com/zufang/"

    headers = {
        'Referer': 'https://cs.lianjia.com/zufang/pg1/',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36'
    }

    def get_data():
        # Reads the module-level `url`, which the main loop reassigns for each page
        r = requests.get(url, headers=headers, timeout=10)
        return r.text

    def parse_data(data):
        s = BeautifulSoup(data, 'lxml')
        title = s.find_all('a', {"class": "twoline"})  # titles
        price = s.find_all('span', {'class': 'content__list--item-price'})  # prices
        content = s.find_all('p', {'class': 'content__list--item--des'})  # addresses
        # print(title)
        for titles, prices, contents in zip(title, price, content):
            # print(titles)  # target link form: https://cs.lianjia.com/zufang/CS1777573960666316800.html
            name = titles.get_text().strip()  # get_text() returns the text; strip() trims surrounding whitespace
            # Drop any leading "/" from href so the joined link has no double slash
            link = 'https://cs.lianjia.com/' + titles['href'].lstrip('/')
            p = prices.get_text()
            c = contents.get_text()
            cs = re.sub('/|\n', '', c).replace(' ', '')  # remove "/", newlines, and spaces
            print(name)
            print(link)
            # print(prices)
            print(p)
            print(cs)
            print('=============================')

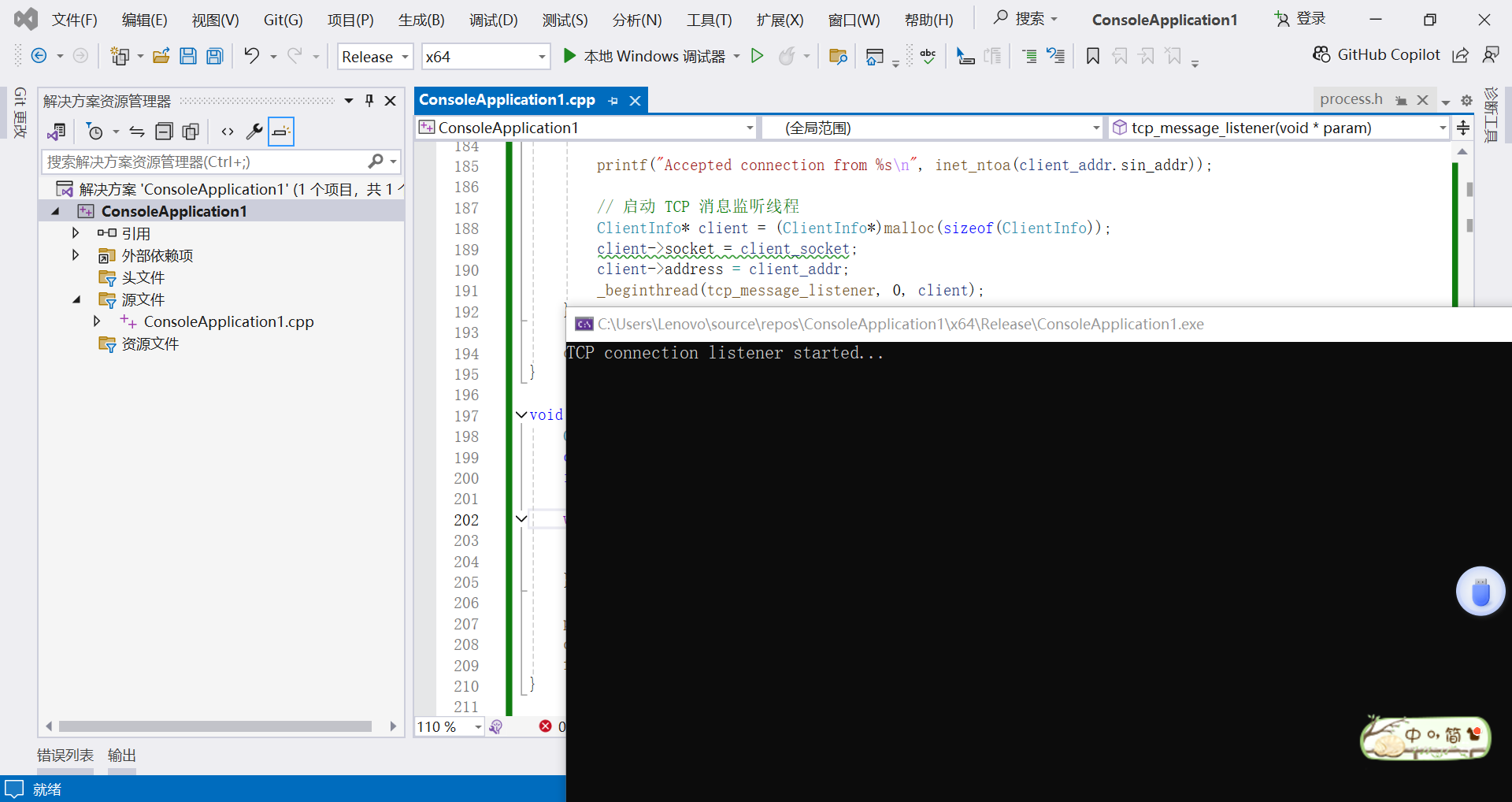

if __name__ == '__main__':

#https://cs.lianjia.com/zufang/pg3/#contentList

for i in range(1,6):

print('==========','当前是第{}页'.format(i))

url = 'https://cs.lianjia.com/zufang/pg{}/#contentList'.format(i)

h = get_data()

# print(h)

parse_data(h)运行结果: