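Preface

- An experiment needed a K8s cluster that uses flannel as its CNI.

- My existing calico-based cluster was getting old, so over the National Day holiday I deployed a v1.31.1 cluster with 3 masters + 5 workers.

- To save time I used the kubespray tool directly.

- This post covers the deployment process and the pitfalls I hit along the way.

- A proxy for reaching sites outside mainland China is required.

- If I have misunderstood something, please point it out 😃. Keep going!

99% of anxiety comes from wasting time and not doing things properly, so the only cure is to act: finish the work carefully, beat the anxiety, and beat those empty moments instead of running away. Do not stand still imagining difficulties; action is always the best way to change the current situation.

Preparing the machines

Machines used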

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.26.100 Ready control-plane 4h53m v1.31.1

192.168.26.101 Ready control-plane 4h49m v1.31.1

192.168.26.102 Ready control-plane 4h45m v1.31.1

192.168.26.103 Ready <none> 4h43m v1.31.1

192.168.26.105 Ready <none> 4h43m v1.31.1

192.168.26.106 Ready <none> 4h43m v1.31.1

192.168.26.107 Ready <none> 4h43m v1.31.1

192.168.26.108 Ready <none> 4h43m v1.31.1

┌──[root@liruilongs.github.io]-[~/kubespray]

└─

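A client machine (liruilongs.github.io) is also needed; one of the cluster machines could be used instead. I recommend a dedicated one: tools such as docker have to be installed on it, and putting them on a cluster node can pollute the runtime sockets. I ran into this once before, and kubelet would only run when the runtime socket was specified explicitly.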

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$hostnamectl

Static hostname: liruilongs.github.io

Icon name: computer-vm

Chassis: vm 🖴

Machine ID: d8b7006e0b5246d3940d0da0b5e4d792

Boot ID: 9f978ed1303842f6b8685a36624e5843

Virtualization: vmware

Operating System: openEuler 24.03 (LTS)

Kernel: Linux 6.6.0-44.0.0.50.oe2403.x86_64

Architecture: x86-64

Hardware Vendor: VMware, Inc.

Hardware Model: VMware Virtual Platform

Firmware Version: 6.00

Firmware Date: Wed 2020-07-22

Firmware Age: 4y 2month 1w 4d

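System used: openEuler 24.03 (LTS). Pick a long-term support release. Image download page:

https://www.openeuler.org/zh/download/?version=openEuler%2024.03%20LTS

Create one machine in VMware, set up the root user and the installation disk, and click Next through the rest.

Clone the other machines from it. After cloning, use the IP configuration script to set each machine's IP, hostname, and DNS in one go.

IP configuration script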

┌──[root@192.168.26.100]-[~]

└─$cat set.sh

#!/bin/bash

if [ $# -eq 0 ]; then

echo "usage: `basename $0` num"

exit 1

fi

[[ $1 =~ ^[0-9]+$ ]]

if [ $? -ne 0 ]; then

echo "usage: `basename $0` 10~240"

exit 1

fi

cat > /etc/sysconfig/network-scripts/ifcfg-ens33 <<EOF

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

IPADDR=192.168.26.${1}

PREFIX=24

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=default

NAME=ethernet-ens33

DEVICE=ens33

ONBOOT=yes

GATEWAY=192.168.26.2

DNS1=192.168.26.2

EOF

systemctl restart NetworkManager &> /dev/null

ip=$(ifconfig ens33 | awk '/inet /{print $2}')

sed -i '/192/d' /etc/issue

echo $ip

echo $ip >> /etc/issue

hostnamectl set-hostname vms${1}.liruilongs.github.io

echo "192.168.26.${1} vms${1}.liruilongs.github.io vms${1}" >> /etc/hosts

echo 'PS1="\[\033[1;32m\]┌──[\[\033[1;34m\]\u@\H\[\033[1;32m\]]-[\[\033[0;1m\]\w\[\033[1;32m\]] \n\[\033[1;32m\]└─\[\033[1;34m\]\$\[\033[0m\]"' >> /root/.bashrc

reboot

┌──[root@192.168.26.100]-[~]

└─$

To run it, pass the host part (last octet) of the IP address as the argument; run it once on each machine.

./set.sh 100
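
The usage message in set.sh mentions 10~240, but the script only checks that the argument is numeric. Below is a minimal sketch of a range check that could run before the heredoc. The function name valid_host_octet is hypothetical and not part of the original script.

```shell
# Hypothetical helper (not in the original set.sh): succeed only when the
# argument is a decimal number in the range 10..240.
valid_host_octet() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit
    esac
    [ "$1" -ge 10 ] && [ "$1" -le 240 ]
}
```

In set.sh it could stand in for the regex test, for example: valid_host_octet "$1" || { echo "usage: $(basename "$0") 10~240"; exit 1; }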

Once that is done, set up passwordless SSH login from the client machine. This step is optional.

List of IPs for passwordless login

┌──[root@liruilongs.github.io]-[~]

└─$cat host_list

192.168.26.100

192.168.26.101

192.168.26.102

192.168.26.103

192.168.26.105

192.168.26.106

192.168.26.107

192.168.26.108

The passwordless-login script below needs expect installed. In the script, redhat is the root password; change it to your own.

#!/bin/bash

#@File : mianmi.sh

#@Time : 2022/08/20 17:45:53

#@Author : Li Ruilong

#@Version : 1.0

#@Desc : yum install -y expect

#@Contact : 1224965096@qq.com

/usr/bin/expect <<-EOF

spawn ssh-keygen

expect "(/root/.ssh/id_rsa)" {send "\r"}

expect {

"(empty for no passphrase)" {send "\r"}

"already" {send "y\r"}

}

expect {

"again" {send "\r"}

"(empty for no passphrase)" {send "\r"}

}

expect {

"again" {send "\r"}

"#" {send "\r"}

}

expect "#"

expect eof

EOF

for IP in $( cat host_list )

do

if [ -n "$IP" ];then

/usr/bin/expect <<-EOF

spawn ssh-copy-id root@$IP

expect {

"*yes/no*" { send "yes\r"}

"*password*" { send "redhat\r" }

}

expect {

"*password" { send "redhat\r"}

"#" { send "\r"}

}

expect "#"

expect eof

EOF

fi

done

That is all for this part; the rest is handled with ansible.
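
Before handing over to ansible, it can be worth confirming that key-based login really works for every address in host_list. The sketch below assumes the host_list file shown earlier and root as the remote user; the function name check_passwordless is hypothetical.

```shell
# Hypothetical helper: report, for each address in a host list file, whether
# key-based root login works. BatchMode=yes makes ssh fail instead of
# prompting for a password; -n keeps ssh from consuming the list on stdin.
check_passwordless() {
    while IFS= read -r ip; do
        [ -n "$ip" ] || continue          # skip blank lines
        if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "root@$ip" true 2>/dev/null; then
            echo "$ip ok"
        else
            echo "$ip FAILED"
        fi
    done < "$1"
}
```

Called as check_passwordless host_list, it prints one line per host, so any host that still asks for a password stands out.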

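Getting to know kubespray

Project used: https://github.com/kubernetes-sigs/kubespray

If you are interested, take a look at it. Here I forked it into my own repository so that later changes are easy, then cloned the fork.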

┌──[root@liruilongs.github.io]-[~]

└─$git clone https://github.com/LIRUILONGS/kubespray.git

Then deploy by following the documentation:

# Copy ``inventory/sample`` as ``inventory/mycluster``

cp -rfp inventory/sample inventory/mycluster

# Update Ansible inventory file with inventory builder

declare -a IPS=(10.10.1.3 10.10.1.4 10.10.1.5)

CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

# Review and change parameters under ``inventory/mycluster/group_vars``

cat inventory/mycluster/group_vars/all/all.yml

cat inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

# Clean up old Kubernetes cluster with Ansible Playbook - run the playbook as root

# The option `--become` is required, as for example cleaning up SSL keys in /etc/,

# uninstalling old packages and interacting with various systemd daemons.

# Without --become the playbook will fail to run!

# And be mind it will remove the current kubernetes cluster (if it's running)!

ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root reset.yml

# Deploy Kubespray with Ansible Playbook - run the playbook as root

# The option `--become` is required, as for example writing SSL keys in /etc/,

# installing packages and interacting with various systemd daemons.

# Without --become the playbook will fail to run!

ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml

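A quick look shows the project is fairly heavy: about 1173 tasks, of which roughly 500 actually run. Some of the rest are OS-compatibility handling and helper tasks.

Following the instructions above, copy the demo inventory and its inventory variables, changing mycluster to your own name; mine is liruilong-cluster.

Kubespray needs a fairly recent ansible and the version on this machine was old, so I ran it in a container. There is an ansible_version.yml playbook dedicated to validating the ansible version. It can supposedly be skipped by excluding the check tag, but that did not work when I tried it, so I stayed with the container.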

┌──[root@liruilongs.github.io]-[~]

└─$docker run --rm -it -v /root/kubespray/:/kubespray/ -v "${HOME}"/.ssh/id_rsa:/root/.ssh/id_rsa quay.io/kubespray/kubespray:v2.26.0 bash

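There is a pitfall here: bind-mount the entire project directory. The copy of the project inside the container image was older and did not match the playbooks in my checkout, which I only discovered after many failed runs. The method given in the project mounts only the inventory directory. To repeat: mount the whole project, not just the inventory directory.

Below is the container-based demo given in the project: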

git checkout v2.26.0

docker pull quay.io/kubespray/kubespray:v2.26.0

docker run --rm -it --mount type=bind,source="$(pwd)"/inventory/sample,dst=/inventory \

--mount type=bind,source="${HOME}"/.ssh/id_rsa,dst=/root/.ssh/id_rsa \

quay.io/kubespray/kubespray:v2.26.0 bash

# Inside the container you may now run the kubespray playbooks:

ansible-playbook -i /inventory/inventory.ini --private-key /root/.ssh/id_rsa cluster.yml

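Inventory and variable configuration

Before running the playbook, the inventory and its variables need to be prepared; edit them directly on the client machine.

Inventory configuration

The sample inventory shows the basic groups: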

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$cat inventory.ini

# ## Configure 'ip' variable to bind kubernetes services on a

# ## different ip than the default iface

# ## We should set etcd_member_name for etcd cluster. The node that is not a etcd member do not need to set the value, or can set the empty string value.

[all]

# node1 ansible_host=95.54.0.12 # ip=10.3.0.1 etcd_member_name=etcd1

# node2 ansible_host=95.54.0.13 # ip=10.3.0.2 etcd_member_name=etcd2

# node3 ansible_host=95.54.0.14 # ip=10.3.0.3 etcd_member_name=etcd3

# node4 ansible_host=95.54.0.15 # ip=10.3.0.4 etcd_member_name=etcd4

# node5 ansible_host=95.54.0.16 # ip=10.3.0.5 etcd_member_name=etcd5

# node6 ansible_host=95.54.0.17 # ip=10.3.0.6 etcd_member_name=etcd6

# ## configure a bastion host if your nodes are not directly reachable

# [bastion]

# bastion ansible_host=x.x.x.x ansible_user=some_user

[kube_control_plane]

# node1

# node2

# node3

[etcd]

# node1

# node2

# node3

[kube_node]

# node2

# node3

# node4

# node5

# node6

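The demo sets some variables in the inventory. Variables set here take precedence over role defaults (though not over a role's vars directory), and a variable with the same name overrides the role default, so here I only list hosts. My earlier article on inventory variables has a detailed precedence analysis.

Below is my configuration. I skipped 104 because the number is considered unlucky ^_^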

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat inventory/liruilong-cluster/inventory.ini

[all]

192.168.26.[101:103]

192.168.26.[105:108]

[kube_control_plane]

192.168.26.[100:102]

[etcd]

192.168.26.[100:102]

[kube_node]

192.168.26.103

192.168.26.[105:108]

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

Check the groups with the --graph option:

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible-inventory -i inventory/liruilong-cluster/inventory.ini --graph

@all:

|--@etcd:

| |--192.168.26.100

| |--192.168.26.101

| |--192.168.26.102

|--@kube_control_plane:

| |--192.168.26.100

| |--192.168.26.101

| |--192.168.26.102

|--@kube_node:

| |--192.168.26.103

| |--192.168.26.105

| |--192.168.26.106

| |--192.168.26.107

| |--192.168.26.108

|--@ungrouped:

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

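Passwordless root login is configured, so this can be run directly. Without it, you may need to configure privilege escalation in the ansible configuration file or on the command line.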

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m ping -i inventory/liruilong-cluster/inventory.ini

192.168.26.103 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.105 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.101 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.102 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.106 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.107 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.108 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.26.100 | SUCCESS => {

"changed": false,

"ping": "pong"

}

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

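Next, the inventory variables.

Changing the CNI plugin

Change the network plugin. As mentioned earlier, the CNI here has to be flannel, so it needs changing; if you are staying with calico, nothing needs to be touched.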

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw ./ ../../roles/ -i -e "kube_network_plugin:"

./group_vars/k8s_cluster/k8s-cluster.yml:70:kube_network_plugin: calico

../../roles/container-engine/gvisor/molecule/default/prepare.yml:21: kube_network_plugin: cni

../../roles/container-engine/containerd/molecule/default/prepare.yml:26: kube_network_plugin: cni

../../roles/container-engine/kata-containers/molecule/default/prepare.yml:21: kube_network_plugin: cni

../../roles/container-engine/youki/molecule/default/prepare.yml:21: kube_network_plugin: cni

../../roles/container-engine/cri-dockerd/molecule/default/prepare.yml:21: kube_network_plugin: cni

../../roles/container-engine/cri-o/molecule/default/prepare.yml:26: kube_network_plugin: cni

../../roles/kubespray-defaults/defaults/main/main.yml:189:kube_network_plugin: calico

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$

The two entries below are the ones that matter:

./group_vars/k8s_cluster/k8s-cluster.yml:70:kube_network_plugin: calico

../../roles/kubespray-defaults/defaults/main/main.yml:189:kube_network_plugin: calico

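A quick look at ansible variable precedence: when the same variable is defined in several ways, Ansible uses precedence rules to pick the value. From lowest to highest:

- Configuration file (ansible.cfg)

- Command-line options

- Role defaults variables

- Host and group variables

- Play variables

- Extra variables (global, passed with -e)

So group_vars takes precedence over the variables in a role's defaults directory, and changing the first entry is enough.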

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$vim +70 ./group_vars/k8s_cluster/k8s-cluster.yml

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw ./ ../../roles/ -i -e "kube_network_plugin:"

./group_vars/k8s_cluster/k8s-cluster.yml:70:kube_network_plugin: flannel

......
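
The same one-line change can be scripted instead of made in vim, which is handy when the inventory is rebuilt from the sample. This is a sketch under assumptions: the helper name set_var is hypothetical, it only rewrites an existing uncommented top-level key, and it assumes the key and value contain no "|", "&", or backslash characters.

```shell
# Hypothetical helper: rewrite an existing, uncommented top-level "key: value"
# line in a YAML vars file. Commented-out lines are left untouched.
set_var() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    # Write the edited copy to a temp file, then overwrite the original in
    # place so its permissions are kept.
    sed "s|^${key}:.*|${key}: ${value}|" "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

From the inventory directory it would be called as set_var group_vars/k8s_cluster/k8s-cluster.yml kube_network_plugin flannel.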

NTP check

On freshly installed machines, check time synchronization and confirm the time zone.

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$timedatectl status

Local time: 三 2024-10-02 21:02:09 CST

Universal time: 三 2024-10-02 13:02:09 UTC

RTC time: 三 2024-10-02 19:04:08

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: no

NTP service: active

RTC in local TZ: no

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$

The time zone is fine, but the clock is not synchronized.
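
The "System clock synchronized" line is easy to miss when checking many nodes by eye. A small sketch that turns it into an exit status; the function name clock_synced is hypothetical, and it assumes the English field labels shown in the output above.

```shell
# Hypothetical helper: read timedatectl-style output on stdin and succeed
# only when it reports "System clock synchronized: yes".
clock_synced() {
    grep -Eq '^[[:space:]]*System clock synchronized:[[:space:]]*yes[[:space:]]*$'
}
```

Usage would look like: timedatectl status | clock_synced || echo "clock not synchronized"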

The inventory variables include the relevant settings; change both to true.

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw . -i -C 2 -e "ntp"

./group_vars/all/all.yml-123-# kube_webhook_token_auth_ca_data: "LS0t..."

./group_vars/all/all.yml-124-

./group_vars/all/all.yml:125:## NTP Settings

./group_vars/all/all.yml-126-# Start the ntpd or chrony service and enable it at system boot.

./group_vars/all/all.yml-127-ntp_enabled: false

./group_vars/all/all.yml-128-ntp_manage_config: false

./group_vars/all/all.yml-129-ntp_servers:

./group_vars/all/all.yml:130: - "0.pool.ntp.org iburst"

./group_vars/all/all.yml:131: - "1.pool.ntp.org iburst"

./group_vars/all/all.yml:132: - "2.pool.ntp.org iburst"

./group_vars/all/all.yml:133: - "3.pool.ntp.org iburst"

./group_vars/all/all.yml-134-

./group_vars/all/all.yml-135-## Used to control no_log attribute

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$

./group_vars/all/all.yml-127-ntp_enabled: true

./group_vars/all/all.yml-128-ntp_manage_config: true

Confirm that the NTP server is actually reachable

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$ ntpdate -q 0.pool.ntp.org

server 202.118.1.81, stratum 1, offset +45688.212514, delay 0.08925

server 193.182.111.143, stratum 2, offset +45688.206599, delay 0.19637

server 193.182.111.14, stratum 2, offset +45688.191100, delay 0.19890

server 78.46.102.180, stratum 2, offset +45688.213370, delay 0.30482

2 Oct 21:08:31 ntpdate[5225]: step time server 202.118.1.81 offset +45688.212514 sec

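Another pitfall here: after the change the setting appeared to take effect, but the clock was too far off. During the playbook run there is a step that generates a token, and it simply would not succeed. I suspected NTP, and after setting the time manually through ansible it worked.

Check the chronyd service status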

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a "systemctl is-active chronyd" -i inventory/liruilong-cluster/inventory.ini

192.168.26.102 | CHANGED | rc=0 >>

active

192.168.26.103 | CHANGED | rc=0 >>

active

192.168.26.106 | CHANGED | rc=0 >>

active

192.168.26.105 | CHANGED | rc=0 >>

active

192.168.26.101 | CHANGED | rc=0 >>

active

192.168.26.108 | CHANGED | rc=0 >>

active

192.168.26.107 | CHANGED | rc=0 >>

active

192.168.26.100 | CHANGED | rc=0 >>

active

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

Set the current time

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a " date -s '2024-10-05 15:41'" -i inventory/liruilong-cluster/inventory.ini

The system time also needs to be written to the hardware (BIOS) clock: hwclock -w

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a "hwclock -w" -i inventory/liruilong-cluster/inventory.ini

Synchronization check

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a "chronyc tracking" -i inventory/liruilong-cluster/inventory.ini

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a "chronyc sources -v" -i inventory/liruilong-cluster/inventory.ini
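
As an alternative to typing a date by hand, chronyd can be asked to step the clock immediately with chronyc makestep. The sketch below is not what was run on this cluster; it assumes chronyd is active on every node and can reach its NTP sources, and the function name step_clocks is hypothetical.

```shell
# Hypothetical wrapper: ask chronyd on every node to step the clock at once,
# then write the corrected system time to the hardware clock.
# $1 is the path to the ansible inventory file.
step_clocks() {
    ansible all -i "$1" -m shell -a "chronyc makestep && hwclock -w"
}
```

It would be called as step_clocks inventory/liruilong-cluster/inventory.ini, followed by the chronyc tracking check above.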

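Firewall handling

The project says the firewall is not managed; you need to implement your own rules as before. To avoid any issues during deployment, you should disable the firewall.

So I simply disabled it: stop, then disable. Below is the command to check: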

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$ansible all -m shell -a "systemctl is-active firewalld" -i inventory/liruilong-cluster/inventory.ini
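
The stop-and-disable step itself is not shown above. One way to do it on all nodes in a single pass is the ansible systemd module, sketched here; the function name disable_firewalld is hypothetical, and the sketch assumes firewalld is installed as a systemd unit on every node.

```shell
# Hypothetical wrapper: stop firewalld now and keep it from starting at boot
# on every host in the inventory passed as $1.
disable_firewalld() {
    ansible all -i "$1" -m systemd -a "name=firewalld state=stopped enabled=no"
}
```

After disable_firewalld inventory/liruilong-cluster/inventory.ini, the is-active check above should report inactive on each node.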

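Leaving the firewall running is also possible by changing the zone. Below is a demo that uses the firewalld module in a playbook:

# Effectively switch the firewall off by using the trusted zone; kept running in case it is needed for vulnerability handling later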

- name: firewalld setting trusted

firewalld:

zone: trusted

permanent: yes

state: enabled

SELinux

Check whether there is any related configuration

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw ./ ../../roles/ -i -e "selinux"

......................

../../roles/kubespray-defaults/defaults/main/main.yml:6:# selinux state

....................

The kubespray-defaults role configures SELinux with preinstall_selinux_state: permissive

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$vim +6 ../../roles/kubespray-defaults/defaults/main/main.yml

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw ./ ../../roles/ -i -e "preinstall_selinux_state:"

../../roles/kubespray-defaults/defaults/main/main.yml:7:preinstall_selinux_state: permissive

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$

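Nothing needs changing here. The kubespray-defaults role is invoked many times across the tasks, as can be seen in the main playbooks.

Of course, you can also turn SELinux off with the selinux module in a playbook:

# Disable SELinux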

- name: Disable SELinux

selinux:

state: disabled

DNS

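DNS is another pitfall: the setting was configured but did not take effect, and I ended up syncing /etc/resolv.conf with ansible afterwards. These are the configuration options: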

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat inventory/liruilong-cluster/group_vars/all/all.yml | grep -i -C 2 dns

## By default, Kubespray collects nameservers on the host. It then adds the previously collected nameservers in nameserverentries.

## If true, Kubespray does not include host nameservers in nameserverentries in dns_late stage. However, It uses the nameserver to make sure cluster installed safely in dns_early stage.

## Use this option with caution, you may need to define your dns servers. Otherwise, the outbound queries such as www.google.com may fail.

# disable_host_nameservers: false

## Upstream dns servers

# upstream_dns_servers:

# - 8.8.8.8

# - 8.8.4.4

This is the modified configuration:

┌──[root@liruilongs.github.io]-[~/kubespray/inventory]

└─$grep -rnw ./ -i -A 4 -e "upstream_dns_servers"

./liruilong-cluster/group_vars/all/all.yml:39:upstream_dns_servers:

./liruilong-cluster/group_vars/all/all.yml-40- - 8.8.8.8

./liruilong-cluster/group_vars/all/all.yml-41- - 8.8.4.4

./liruilong-cluster/group_vars/all/all.yml-42- - 114.114.114.114

./liruilong-cluster/group_vars/all/all.yml-43-

┌──[root@liruilongs.github.io]-[~/kubespray/inventory]

└─$

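Later, however, when ctr pulled the cluster images, DNS could not resolve registry.k8s.io. A look at /etc/resolv.conf showed that nothing had been configured, so I synced /etc/resolv.conf to all nodes with ansible.

Below is the synced file; pushing it with ansible's copy module is enough.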

# Generated by NetworkManager

nameserver 192.168.26.2

nameserver 8.8.8.8

nameserver 8.8.4.4

nameserver 114.114.114.114

options ndots:2 timeout:2 attempts:2
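
The copy step can be sketched as an ad-hoc command wrapped in a function. The function name sync_resolv_conf and the local file name are assumptions; backup=yes keeps a timestamped copy of each node's previous file. Since the file is normally generated by NetworkManager, it may be rewritten later, so treat this as a stopgap rather than a permanent fix.

```shell
# Hypothetical wrapper: push a local resolv.conf to every host.
# $1 is the inventory file, $2 the local file to copy.
sync_resolv_conf() {
    ansible all -i "$1" -m copy -a "src=$2 dest=/etc/resolv.conf backup=yes"
}
```

For example: sync_resolv_conf inventory/liruilong-cluster/inventory.ini ./resolv.conf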

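Changing the image registry

Docker image registries cannot be pulled from inside mainland China, and for some reason it still failed for me even with a VPN. I tried various accelerators for a long time, and the Aliyun accelerator is no longer free to use. In the end I found an open-source mirror, dockerpull.com. Many thanks to its maintainer after all my struggling; if you are able to, consider donating to the author.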

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$grep -rnw ./ ../../roles/ -i -e "docker_image_repo"

..............................................

../../roles/kubernetes-apps/external_cloud_controller/hcloud/templates/external-hcloud-cloud-controller-manager-ds.yml.j2:35: - image: {{ docker_image_repo }}/hetznercloud/hcloud-cloud-controller-manager:{{ external_hcloud_cloud.controller_image_tag }}

../../roles/kubernetes-apps/external_cloud_controller/hcloud/templates/external-hcloud-cloud-controller-manager-ds-with-networks.yml.j2:35: - image: {{ docker_image_repo }}/hetznercloud/hcloud-cloud-controller-manager:{{ external_hcloud_cloud.controller_image_tag }}

../../roles/kubespray-defaults/defaults/main/download.yml:94:docker_image_repo: "docker.io"

../../roles/kubespray-defaults/defaults/main/download.yml:240:flannel_image_repo: "{{ docker_image_repo }}/flannel/flannel"

../../roles/kubespray-defaults/defaults/main/download.yml:242:flannel_init_image_repo: "{{ docker_image_repo }}/flannel/flannel-cni-plugin"

../../roles/kubespray-defaults/defaults/main/download.yml:259:netcheck_agent_image_repo: "{{ docker_image_repo }}/mirantis/k8s-netchecker-agent"

........................................

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$

Where to change it:

../../roles/kubespray-defaults/defaults/main/download.yml:94:docker_image_repo: "docker.io"

# docker image repo define

#docker_image_repo: "docker.io"

docker_image_repo: "dockerpull.com"

# quay image repo define

quay_image_repo: "quay.io"

# github image repo define (ex multus only use that)

github_image_repo: "ghcr.io"

Running the playbook

┌──[root@liruilongs.github.io]-[~]

└─$docker run --rm -it -v /root/kubespray/:/kubespray/ -v "${HOME}"/.ssh/id_rsa:/root/.ssh/id_rsa quay.io/kubespray/kubespray:v2.26.0 bash

root@962b70466dd9:/kubespray# ansible-playbook -i inventory/liruilong-cluster/inventory.ini cluster.yml -f 8

Note that because traffic goes through a proxy, the playbook may die midway and need several runs. Below is the output of a successful run:

...........................................

PLAY RECAP ******************************************************************************************************************************************************************************************************

192.168.26.100 : ok=515 changed=54 unreachable=0 failed=0 skipped=934 rescued=0 ignored=2

192.168.26.101 : ok=487 changed=49 unreachable=0 failed=0 skipped=900 rescued=0 ignored=2

192.168.26.102 : ok=486 changed=50 unreachable=0 failed=0 skipped=898 rescued=0 ignored=2

192.168.26.103 : ok=410 changed=14 unreachable=0 failed=0 skipped=667 rescued=0 ignored=1

192.168.26.105 : ok=388 changed=10 unreachable=0 failed=0 skipped=597 rescued=0 ignored=1

192.168.26.106 : ok=388 changed=10 unreachable=0 failed=0 skipped=597 rescued=0 ignored=1

192.168.26.107 : ok=388 changed=10 unreachable=0 failed=0 skipped=597 rescued=0 ignored=1

192.168.26.108 : ok=388 changed=10 unreachable=0 failed=0 skipped=597 rescued=0 ignored=1

Friday 04 October 2024 22:15:07 +0000 (0:00:00.843) 0:33:16.408 ********

===============================================================================

.............................................

kubernetes/control-plane : Joining control plane node to the cluster. ---------------------------------------------------------------------------------------------------------------------------------- 494.78s

kubernetes/control-plane : Kubeadm | Initialize first control plane node ------------------------------------------------------------------------------------------------------------------------------- 244.24s

kubernetes/control-plane : Install script to renew K8S control plane certificates ----------------------------------------------------------------------------------------------------------------------- 50.58s

etcd : Gen_certs | Write etcd member/admin and kube_control_plane client certs to other etcd nodes ------------------------------------------------------------------------------------------------------ 36.03s

etcd : Gen_certs | Write node certs to other etcd nodes ------------------------------------------------------------------------------------------------------------------------------------------------- 26.20s

kubernetes/kubeadm : Join to cluster -------------------------------------------------------------------------------------------------------------------------------------------------------------------- 21.51s

network_plugin/cni : CNI | Copy cni plugins ------------------------------------------------------------------------------------------------------------------------------------------------------------- 20.59s

kubernetes-apps/ansible : Kubernetes Apps | Lay Down CoreDNS templates ---------------------------------------------------------------------------------------------------------------------------------- 19.91s

container-engine/containerd : Containerd | Unpack containerd archive ------------------------------------------------------------------------------------------------------------------------------------ 17.56s

kubernetes/control-plane : Control plane | Remove scheduler container containerd/crio ------------------------------------------------------------------------------------------------------------------- 14.68s

container-engine/crictl : Extract_file | Unpacking archive ---------------------------------------------------------------------------------------------------------------------------------------------- 14.62s

container-engine/containerd : Download_file | Download item --------------------------------------------------------------------------------------------------------------------------------------------- 13.04s

container-engine/runc : Download_file | Download item --------------------------------------------------------------------------------------------------------------------------------------------------- 13.02s

container-engine/nerdctl : Extract_file | Unpacking archive --------------------------------------------------------------------------------------------------------------------------------------------- 12.99s

kubernetes-apps/ansible : Kubernetes Apps | Start Resources --------------------------------------------------------------------------------------------------------------------------------------------- 12.54s

container-engine/crictl : Download_file | Download item ------------------------------------------------------------------------------------------------------------------------------------------------- 12.43s

container-engine/nerdctl : Download_file | Download item ------------------------------------------------------------------------------------------------------------------------------------------------ 11.80s

kubernetes/control-plane : Fixup kubelet client cert rotation 2/2 --------------------------------------------------------------------------------------------------------------------------------------- 10.29s

kubernetes-apps/network_plugin/flannel : Flannel | Wait for flannel subnet.env file presence ------------------------------------------------------------------------------------------------------------- 9.50s

download : Extract_file | Unpacking archive -------------------------------------------------------------------------------------------------------------------------------------------------------------- 8.52s

root@962b70466dd9:/kubespray#
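
Since a run may die midway on a flaky network and simply has to be started again, the rerun can be automated with a small retry loop. This is a generic sketch, not part of kubespray; the function name retry is hypothetical.

```shell
# Hypothetical helper: run a command up to N times, stopping at the first
# success. Usage: retry N command [args...]
retry() {
    max=$1
    shift
    attempt=1
    while [ "$attempt" -le "$max" ]; do
        "$@" && return 0
        echo "attempt $attempt/$max failed" >&2
        attempt=$((attempt + 1))
    done
    return 1
}
```

Inside the container it could wrap the same command as above: retry 3 ansible-playbook -i inventory/liruilong-cluster/inventory.ini cluster.yml -f 8. Each attempt starts the playbook from the beginning, so this relies on the tasks being safe to repeat.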

The cluster information is then visible on a master:

┌──[root@192.168.26.100]-[/]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.26.100 Ready control-plane 31m v1.31.1

192.168.26.101 Ready control-plane 28m v1.31.1

192.168.26.102 Ready control-plane 23m v1.31.1

192.168.26.103 Ready <none> 21m v1.31.1

192.168.26.105 Ready <none> 21m v1.31.1

192.168.26.106 Ready <none> 21m v1.31.1

192.168.26.107 Ready <none> 21m v1.31.1

192.168.26.108 Ready <none> 21m v1.31.1

┌──[root@192.168.26.100]-[/]

└─$

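That completes the installation. Copy the kubeconfig file and the kubectl binary to the client machine, and the cluster can then be operated directly from the client.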

┌──[root@192.168.26.100]-[~]

└─$kubectl version

Client Version: v1.31.1

Kustomize Version: v5.4.2

Server Version: v1.31.1

┌──[root@192.168.26.100]-[~]

└─$

┌──[root@192.168.26.100]-[/usr/local/bin]

└─$scp ./kubectl root@192.168.26.131:/usr/local/bin

Authorized users only. All activities may be monitored and reported.

root@192.168.26.131's password:

kubectl 100% 54MB 249.9MB/s 00:00
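
Only the kubectl copy is shown above. For the kubeconfig, a sketch run on the client might look like the following. It assumes the kubeadm default path /etc/kubernetes/admin.conf on the control-plane node; the function name fetch_kubeconfig is hypothetical.

```shell
# Hypothetical helper, run on the client: copy the admin kubeconfig from a
# control-plane node ($1) into the current user's ~/.kube/config.
fetch_kubeconfig() {
    mkdir -p "$HOME/.kube" &&
    scp "root@$1:/etc/kubernetes/admin.conf" "$HOME/.kube/config" &&
    chmod 600 "$HOME/.kube/config"
}
```

For example: fetch_kubeconfig 192.168.26.100. If the server: field in the copied file points at an address the client cannot reach, such as a loopback address, it would need to be changed to a reachable control-plane address.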

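Before the installation finished, I found that on my home machine the CPU's efficiency cores were saturated while the performance cores sat idle, and the cluster was so sluggish that commands would not run.

Fix: switch the power plan to performance mode and run as administrator.

This happens because 12th/13th-generation Intel Core processors introduced a big/little core design, usually called the "hybrid core" architecture, which integrates performance cores (big cores) and efficiency cores (small cores) on the same chip.

- Performance cores handle high-load, performance-critical tasks

- Efficiency cores handle low-load, low-power tasks

The Intel Core i7-12700 in my machine has the following thread configuration:

Performance cores (P-cores):

- Count: 8

- Threads per core: 2

- Total threads (P-cores only): 8 × 2 = 16 threads

Efficiency cores (E-cores):

- Count: 4

- Threads per core: 1

- Total threads (E-cores only): 4 × 1 = 4 threads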

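Installing related tools

The installation above did not include any extra tools; by default tools such as Helm and krew are not installed. They can also be installed through roles.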

┌──[root@liruilongs.github.io]-[~/kubespray/roles/kubernetes-apps]

└─$ls

ansible container_engine_accelerator external_provisioner krew metrics_server policy_controller

argocd container_runtimes gateway_api kubelet-csr-approver network_plugin registry

cloud_controller csi_driver helm meta node_feature_discovery scheduler_plugins

cluster_roles external_cloud_controller ingress_controller metallb persistent_volumes snapshots

┌──[root@liruilongs.github.io]-[~/kubespray/roles/kubernetes-apps]

└─$

Installing helm, argocd, and other tools

Edit the file below and rerun the playbook.

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat ./inventory/liruilong-cluster/group_vars/k8s_cluster/addons.yml

Below are the settings after the change:

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat ./inventory/liruilong-cluster/group_vars/k8s_cluster/addons.yml | grep -v ^# | grep -v ^$

---

helm_enabled: true

registry_enabled: true

metrics_server_enabled: true

local_path_provisioner_enabled: true

local_volume_provisioner_enabled: true

cephfs_provisioner_enabled: true

rbd_provisioner_enabled: true

gateway_api_enabled: true

ingress_nginx_enabled: true

ingress_publish_status_address: ""

ingress_alb_enabled: true

cert_manager_enabled: true

metallb_enabled: true

metallb_speaker_enabled: "{{ metallb_enabled }}"

metallb_namespace: "metallb-system"

metallb_version: v0.13.9

metallb_protocol: "layer2"

metallb_port: "7472"

metallb_memberlist_port: "7946"

argocd_enabled: true

krew_enabled: true

krew_root_dir: "/usr/local/krew"

kube_vip_enabled: true

kube_vip_arp_enabled: true

kube_vip_controlplane_enabled: true

kube_vip_address: 192.168.26.120

loadbalancer_apiserver:

address: "{{ kube_vip_address }}"

port: 6443

node_feature_discovery_enabled: false

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

When running the playbook, only the apps tag needs to be executed:

root@9d2aab6d0ea8:/kubespray# ansible-playbook -i inventory/liruilong-cluster/inventory.ini cluster.yml --tags apps

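Screenshots of some applications

metallb

Installing the software load balancer metallb: the bundled role kept failing with errors I could not resolve, so I installed it manually.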

┌──[root@liruilongs.github.io]-[~/kubespray/inventory/liruilong-cluster]

└─$kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.9/config/manifests/metallb-native.yaml

Allocate an IP pool

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$vim pool.yaml

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat pool.yaml

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

namespace: metallb-system

spec:

addresses:

- 192.168.26.220-192.168.26.249

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$kubectl apply -f pool.yaml

ipaddresspool.metallb.io/first-pool created

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$vim l2a.yaml

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$cat l2a.yaml

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

namespace: metallb-system

┌──[root@liruilongs.github.io]-[~/kubespray]

└─$kubectl apply -f l2a.yaml

l2advertisement.metallb.io/example created
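
A quick smoke test for the pool is to expose something as a LoadBalancer service and check that the assigned address falls inside first-pool. The check itself can be sketched in plain shell; the range is taken from pool.yaml above, and the function name in_first_pool is hypothetical.

```shell
# Hypothetical helper: succeed when an IPv4 address lies inside first-pool
# (192.168.26.220-192.168.26.249).
in_first_pool() {
    case "$1" in
        192.168.26.*) last=${1#192.168.26.} ;;   # keep what follows the prefix
        *) return 1 ;;
    esac
    case "$last" in
        ''|*[!0-9]*) return 1 ;;                 # must be a single numeric octet
    esac
    [ "$last" -ge 220 ] && [ "$last" -le 249 ]
}
```

With a throwaway deployment (the name lb-test is only an example) this could be used as: kubectl create deployment lb-test --image=nginx, then kubectl expose deployment lb-test --type=LoadBalancer --port=80, then in_first_pool "$(kubectl get svc lb-test -o jsonpath='{.status.loadBalancer.ingress[0].ip}')". The nginx image is pulled from Docker Hub, so the registry caveats described earlier apply.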

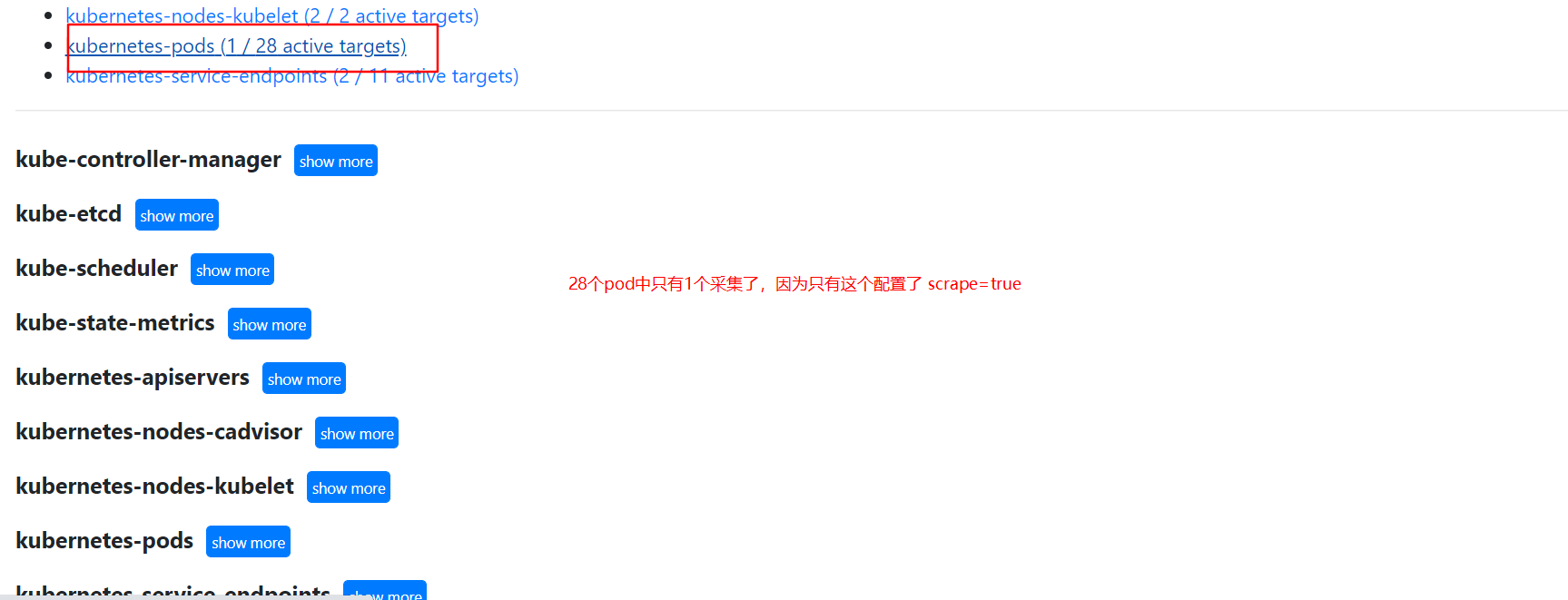

kube-prometheus-stack

Installing the prometheus stack; metrics_server from above must be installed first.

┌──[root@192.168.26.100]-[~]

└─$helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories

┌──[root@192.168.26.100]-[~]

└─$helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

┌──[root@192.168.26.100]-[~]

└─$helm install prometheus prometheus-community/kube-prometheus-stack

NAME: prometheus

LAST DEPLOYED: Sat Oct 5 20:25:46 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace default get pods -l "release=prometheus"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

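That is all I wanted to share about deploying a highly available K8s (v1.31.1) cluster on openEuler 24.03 (LTS) with Kubespray and Ansible. When I get the chance, I will analyze the Kubespray cluster playbooks and the individual roles.

References for parts of this post

© Copyright of the referenced content linked in this post belongs to the original authors; please let me know of any infringement 😃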

https://github.com/kubernetes-sigs/kubespray

© 2018-2024 liruilonger@gmail.com, Attribution-NonCommercial-ShareAlike (CC BY-NC-SA 4.0)