目录

1.加载编码器

1.1编码试算

2.加载数据集

3.数据集处理

3.1 map映射:只对数据集中的'sentence'数据进行编码

3.2用filter()过滤 单词太少的句子过滤掉

3.3截断句子

4.创建数据加载器Dataloader

5. 下游任务模型

6.测试预测代码

7.训练代码

8.保存与加载模型

1.加载编码器

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(r'../data/model/distilroberta-base/')

print(tokenizer)

RobertaTokenizerFast(name_or_path='../data/model/distilroberta-base/', vocab_size=50265, model_max_length=512, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'bos_token': '<s>', 'eos_token': '</s>', 'unk_token': '<unk>', 'sep_token': '</s>', 'pad_token': '<pad>', 'cls_token': '<s>', 'mask_token': '<mask>'}, clean_up_tokenization_spaces=False), added_tokens_decoder={ 0: AddedToken("<s>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True), 1: AddedToken("<pad>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True), 2: AddedToken("</s>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True), 3: AddedToken("<unk>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True), 50264: AddedToken("<mask>", rstrip=False, lstrip=True, single_word=False, normalized=False, special=True), }

1.1编码试算

tokenizer.batch_encode_plus([

'hide new secretions from the parental units',

'this moive is great'

])

{'input_ids': [[0, 37265, 92, 3556, 2485, 31, 5, 20536, 2833, 2], [0, 9226, 7458, 2088, 16, 372, 2]], 'attention_mask': [[1, 1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1]]}

# 'input_ids'中的0:表示 'bos_token': '<s>'

#'input_ids'中的2:表示 'eos_token': '</s>'

#Bert模型有特殊字符!!!!!!!

2.加载数据集

from datasets import load_from_disk #从本地加载已经下载好的数据集

dataset_dict = load_from_disk('../data/datasets/glue_sst2/')

dataset_dict

DatasetDict({ train: Dataset({ features: ['sentence', 'label', 'idx'], num_rows: 67349 }) validation: Dataset({ features: ['sentence', 'label', 'idx'], num_rows: 872 }) test: Dataset({ features: ['sentence', 'label', 'idx'], num_rows: 1821 }) })

#若是从网络下载(国内容易网络错误,下载不了,最好还是先去镜像网站下载,本地加载)

# from datasets import load_dataset

# dataset_dict2 = load_dataset(path='glue', name='sst2')

# dataset_dict2

3.数据集处理

3.1 map映射:只对数据集中的'sentence'数据进行编码

#预测中间词任务:只需要'sentence' ,不需要'label'和'idx'

#用map()函数,映射:只对数据集中的'sentence'数据进行编码

def f_1(data, tokenizer):

return tokenizer.batch_encode_plus(data['sentence'])

dataset_dict = dataset_dict.map(f_1,

batched=True,

batch_size=16,

drop_last_batch=True,

remove_columns=['sentence', 'label', 'idx'],

fn_kwargs={'tokenizer': tokenizer},

num_proc=8) #8个进程, 查看任务管理器>性能>逻辑处理器

dataset_dict

DatasetDict({ train: Dataset({ features: ['input_ids', 'attention_mask'], num_rows: 67328 }) validation: Dataset({ features: ['input_ids', 'attention_mask'], num_rows: 768 }) test: Dataset({ features: ['input_ids', 'attention_mask'], num_rows: 1792 }) })

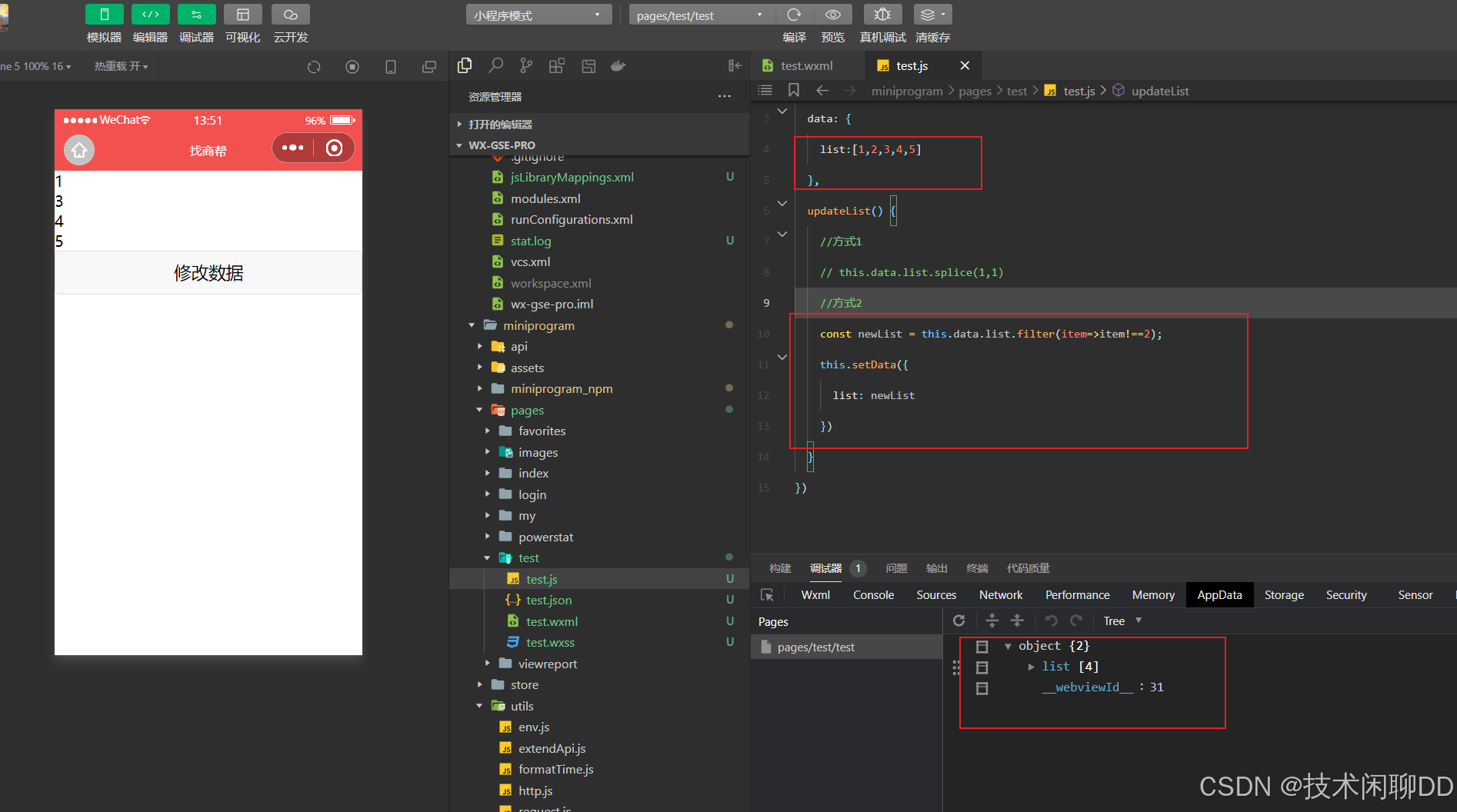

3.2用filter()过滤 单词太少的句子过滤掉

#处理句子,让每一个句子的都至少有9个单词,单词太少的句子过滤掉

#用filter()过滤

def f_2(data):

return [len(i) >= 9 for i in data['input_ids']]

dataset_dict = dataset_dict.filter(f_2, batched=True,

batch_size=1000,

num_proc=8)

dataset_dict

DatasetDict({

train: Dataset({

features: ['input_ids', 'attention_mask'],

num_rows: 44264

})

validation: Dataset({

features: ['input_ids', 'attention_mask'],

num_rows: 758

})

test: Dataset({

features: ['input_ids', 'attention_mask'],

num_rows: 1747

})

})

tokenizer.vocab['<mask>']

tokenizer.get_vocab()['<mask>']

#两句输出都是:

#502643.3截断句子

#截断句子, 同时将数据集的句子整理成预训练模型需要的格式

def f_3(data):

b = len(data['input_ids'])

data['labels'] = data['attention_mask'].copy() #复制,不影响原数据

for i in range(b):

#将句子长度就裁剪到9

data['input_ids'][i] = data['input_ids'][i][:9]

data['attention_mask'][i] = [1] * 9

data['labels'][i] = [-100] * 9

#使用的distilroberta-base是基于Bert模型的,每个句子de 'input_ids'最后一个单词需要设置成2

data['input_ids'][i][-1] = 2

#每一个句子的第四个词需要被预测,赋值给‘labels’,成为标签真实值

data['labels'][i][4] = data['input_ids'][i][4]

#每一个句子的第四个词为mask

# data['input_ids'][i][4] = tokenizer.vocab['<mask>']

data['input_ids'][i][4] = 50264

return data

#map()函数是对传入数据的下一层级的数据进行操作

#dataset_dict是一个字典, map函数会直接对dataset_dict['train']下的数据操作

dataset_dict = dataset_dict.map(f_3, batched=True, batch_size=1000, num_proc=12)

dataset_dict

DatasetDict({ train: Dataset({ features: ['input_ids', 'attention_mask', 'labels'], num_rows: 44264 }) validation: Dataset({ features: ['input_ids', 'attention_mask', 'labels'], num_rows: 758 }) test: Dataset({ features: ['input_ids', 'attention_mask', 'labels'], num_rows: 1747 }) })

dataset_dict['train'][0]

{'input_ids': [0, 37265, 92, 3556, 50264, 31, 5, 20536, 2], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1], 'labels': [-100, -100, -100, -100, 2485, -100, -100, -100, -100]}

dataset_dict['train']['input_ids']

输出太多,部分展示 [[0, 37265, 92, 3556, 50264, 31, 5, 20536, 2], [0, 10800, 5069, 117, 50264, 2156, 129, 6348, 2], [0, 6025, 6138, 63, 50264, 8, 39906, 402, 2], [0, 5593, 5069, 19223, 50264, 7, 1091, 5, 2], [0, 261, 5, 2373, 50264, 12, 1116, 12, 2], [0, 6025, 128, 29, 50264, 350, 8805, 7, 2], [0, 34084, 6031, 1626, 50264, 5, 736, 9, 2],。。。。。]]

4.创建数据加载器Dataloader

import torch

from transformers.data.data_collator import default_data_collator #将从一条一条数据传输变成一批批数据传输

loader = torch.utils.data.DataLoader(

dataset=dataset_dict['train'],

batch_size=8,

shuffle=True,

collate_fn=default_data_collator,

drop_last=True #最后一批数据不满足一批的数据量batch_size,就删掉

)

for data in loader:

break #遍历赋值,不输出

len(loader), data

#'labels'中的-100就是占个位置,没有其他含义, 后面用交叉熵计算损失时, -100的计算结果接近0,损失没有用

(5533, {'input_ids': tensor([[ 0, 2962, 5, 20577, 50264, 31, 36331, 7, 2], [ 0, 627, 2471, 16, 50264, 15, 8, 1437, 2], [ 0, 6968, 192, 24, 50264, 209, 26757, 11641, 2], [ 0, 405, 128, 29, 50264, 25306, 9438, 15796, 2], [ 0, 8344, 6343, 8, 50264, 1342, 7790, 38984, 2], [ 0, 10800, 5069, 5, 50264, 2156, 34934, 2156, 2], [ 0, 102, 1531, 284, 50264, 14, 128, 29, 2], [ 0, 6, 3007, 648, 50264, 38854, 480, 1437, 2]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1]]), 'labels': tensor([[ -100, -100, -100, -100, 1182, -100, -100, -100, -100], [ -100, -100, -100, -100, 1514, -100, -100, -100, -100], [ -100, -100, -100, -100, 11, -100, -100, -100, -100], [ -100, -100, -100, -100, 372, -100, -100, -100, -100], [ -100, -100, -100, -100, 33572, -100, -100, -100, -100], [ -100, -100, -100, -100, 12073, -100, -100, -100, -100], [ -100, -100, -100, -100, 1569, -100, -100, -100, -100], [ -100, -100, -100, -100, 2156, -100, -100, -100, -100]])})

5. 下游任务模型

from transformers import AutoModelForCausalLM, RobertaModel

class Model(torch.nn.Module):

def __init__(self):

super().__init__()

#相当于encoder

self.pretrained_model = RobertaModel.from_pretrained(r'../data/model/distilroberta-base/')

#decoder:就是一层全连接层(线性层)

#bert-base模型输出是768

decoder = torch.nn.Linear(768, tokenizer.vocab_size)

#全连接线性层有bias, 初始化为0 , size=tokenizer.vocab_size

decoder.bias = torch.nn.Parameter(torch.zeros(tokenizer.vocab_size))

#全连接

self.fc = torch.nn.Sequential(

#全连接索引0层

torch.nn.Linear(768, 768),

#全连接索引1层

torch.nn.GELU(), #激活函数,把线性的变成非线性的

#一般 线性层+激活函数+BN层标准化

#在NLP中,一般为: 线性层+激活函数+LN层标准化

#全连接索引2层

torch.nn.LayerNorm(768, eps=1e-5),

#全连接索引3层

decoder) #输出层:一层全连接,不用加激活函数

#加载预训练模型的参数

pretrained_parameters_model = AutoModelForCausalLM.from_pretrained(r'../data/model/distilroberta-base/')

self.fc[0].load_state_dict(pretrained_parameters_model.lm_head.dense.state_dict())

self.fc[2].load_state_dict(pretrained_parameters_model.lm_head.layer_norm.state_dict())

self.fc[3].load_state_dict(pretrained_parameters_model.lm_head.decoder.state_dict())

self.criterion = torch.nn.CrossEntropyLoss()

#forward拼写错误将无法接收传入的参数!!!

def forward(self, input_ids, attention_mask, labels=None):

#labels算损失时再传入

logits = self.pretrained_model(input_ids, attention_mask)

logits = logits.last_hidden_state #最后一层的hidden_state

logits = self.fc(logits)

#计算损失

loss = None

if labels is not None: #若传入了labels

#distilroberta-base这个模型本身做的任务是:根据上一个词预测下一个词

#在这个模型里会有一个偏移量,

#我么需要对labels和logits都做一个shift偏移

#logits的shape是(batch_Size, 一句话的长度, vocab_size)

shifted_logits = logits[:, :-1].reshape(-1, tokenizer.vocab_size) #三维tensor数组变成二维

#labels是二维tensor数组, shape(batch_size, 一句话的长度)

shifted_labels = labels[:,1:].reshape(-1) #二维变成一维

#计算损失

loss = self.criterion(shifted_logits, shifted_labels)

return {'loss': loss, 'logits': logits} #logits是预测值model = Model()

#参数量

#for i in model.parameters() 获取一层的参数 i, 是一个tensor()

# i.numel()是用于返回一个数组或矩阵中元素的数量。函数名称“numel”是“number of elements”的缩写

print(sum(i.numel() for i in model.parameters()))121364313 #1.2多亿的参数量,6G显存还可以跑

#未训练之前,先看一下加载的预训练模型的效果

out = model(**data)

out['loss'], out['logits'].shape

#out['logits']的shape(batch_size, 每个句子的长度, vocab_size)

#8:就是一批中8个句子,每个句子有9个单词, 每个单词有50265个类别(tensor(19.4897, grad_fn=<NllLossBackward0>), torch.Size([8, 9, 50265]))

6.测试预测代码

def test(model):

model.eval() #调回评估模式,适用于预测

#创建测试数据的加载器

loader_test = torch.utils.data.DataLoader(

dataset=dataset_dict['test'],

batch_size=8,

collate_fn = default_data_collator, #默认的批量取数据

shuffle=True,

drop_last=True)

correct = 0

total = 0

for i, data in enumerate(loader_test):

#克隆data['labels']中索引为4的元素,取出来

label = data['labels'][:, 4].clone()

#从数据中抹掉label, 防止模型作弊

data['labels'] = None

#计算

with torch.no_grad(): #不求导,不进行梯度下降

#out:下游任务模型的输出结果,是一个字典,包含loss和logits

out = model(**data) #**data直接把data这个字典解包成关键字参数

#计算出logits最大概率

##out['logits']的shape(batch_size, 每个句子的长度, vocab_size)

#argmax()之后,shape变成(8, 9)

out = out['logits'].argmax(dim=2)[:, 4] #取出第四个才是我们的预测结果

correct += (label == out).sum().item()

total += 8 #batch_size=8, 每一批数据处理完,处理的数据总量+8

#每隔10次输出信息

if i % 10 == 0:

print(i)

print(label)

print(out)

if i == 50:

break

print('accuracy:', correct / total)

for i in range(8): #输出8句话的预测与真实值的对比

print(tokenizer.decode(data['input_ids'][i]))

print(tokenizer.decode(label[i]), tokenizer.decode(out[i]))

print()test(model)0 tensor([ 5466, 3136, 10, 1397, 19, 16362, 281, 11398]) tensor([ 1617, 26658, 10, 1545, 30, 5167, 281, 11398]) 10 tensor([ 10, 4891, 3156, 14598, 21051, 2156, 6707, 25]) tensor([ 10, 1910, 12774, 2156, 16801, 77, 31803, 25]) 20 tensor([ 9635, 15305, 2441, 197, 22364, 2156, 9, 203]) tensor([ 9635, 43143, 3021, 939, 1363, 14, 9, 9712]) 30 tensor([6717, 19, 1073, 364, 67, 710, 14, 3739]) tensor([ 6717, 17428, 23250, 30842, 350, 4712, 14, 3739]) 40 tensor([ 998, 3117, 4132, 1318, 9, 99, 5, 3834]) tensor([ 6545, 1049, 41407, 27931, 263, 5016, 5, 13295]) 50 tensor([ 10, 53, 98, 5393, 6373, 19255, 236, 35499]) tensor([ 10, 8, 98, 4521, 17626, 14598, 236, 3374]) accuracy: 0.3088235294117647 <s>the picture is<mask> primer on what</s> a a <s>well-shot<mask> badly written tale</s> but and <s>some actors have<mask> much charisma that</s> so so <s>so few movies<mask> religion that it</s> explore mention <s>this delicately<mask> story , deeply</s> observed crafted <s>awkward but<mask> and , ultimately</s> sincere hilarious <s>you might not<mask> to hang out</s> want want <s>the film often<mask> a mesmerizing</s> achieves becomes

7.训练代码

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

devicedevice(type='cuda', index=0)

from transformers import AdamW

from transformers.optimization import get_scheduler

#训练代码

def train():

optimizer = AdamW(model.parameters(), lr=2e-5)

#学习率下降计划

scheduler = get_scheduler(name='linear',

num_warmup_steps=0, #从一开始就预热,没有缓冲区

num_training_steps=len(loader),

optimizer=optimizer)

model.to(device) #将模型设置到设备上

model.train() #调到训练模式

for i,data in enumerate(loader): #一个loader的数据传完算一个epoch,NLP中很少写epoch,因为文本样本数太大

#接收数据

input_ids, attention_mask, labels = data['input_ids'], data['attention_mask'], data['labels']

#将数据都传送到设备上

input_ids, attention_mask, labels = input_ids.to(device), attention_mask.to(device), labels.to(device)

#将出入设备的数据再次传入到设备上的模型中

#out是一个字典,包括‘loss’和‘logits’预测的每个类别的概率

out = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels)

#从返回结果中获取损失

loss = out['loss']

#反向传播

loss.backward()

#为了稳定训练, 进行梯度裁剪

torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0) #让公式中的c=1.0

#梯度更新

optimizer.step()

scheduler.step()

#梯度清零

optimizer.zero_grad()

model.zero_grad()

if i % 50 == 0 :

#训练时,data['labels']不需要抹除掉设置成空

#只需要把每句话第四个单词取出来,赋值给label做为真实值即可

label = data['labels'][:, 4].to(device)

#获取预测的第4个单词字符

out = out['logits'].argmax(dim=2)[:, 4]

#计算预测准确的数量

correct = (label == out).sum().item()

#一批次计算处理的句子总量

total = 8

#计算准确率

accuracy = correct / total

lr = optimizer.state_dict()['param_groups'][0]['lr']

print(i, loss.item(), accuracy, lr) train() #相当于训练了一个epoch

#8G显存一次跑两亿参数就差不多,再多放不下跑不了

#我的是6G,一次跑1.3亿左右就行 输出太多,显示部分:

0 19.273836135864258 0.125 1.9996385324417135e-05 50 5.71422004699707 0.25 1.9815651545273814e-05 100 5.9950056076049805 0.25 1.9634917766130493e-05 150 3.5554256439208984 0.25 1.945418398698717e-05 200 3.5855653285980225 0.375 1.9273450207843848e-05 250 3.3996706008911133 0.125 1.9092716428700527e-05。。。。

5200 2.7103517055511475 0.5 1.2000722935116574e-06 5250 2.340709686279297 0.75 1.0193385143683355e-06 5300 2.316523551940918 0.375 8.386047352250136e-07 5350 2.541797399520874 0.5 6.578709560816917e-07 5400 1.3354747295379639 0.625 4.771371769383698e-07 5450 2.276153326034546 0.75 2.964033977950479e-07 5500 1.8481557369232178 0.75 1.1566961865172602e-07

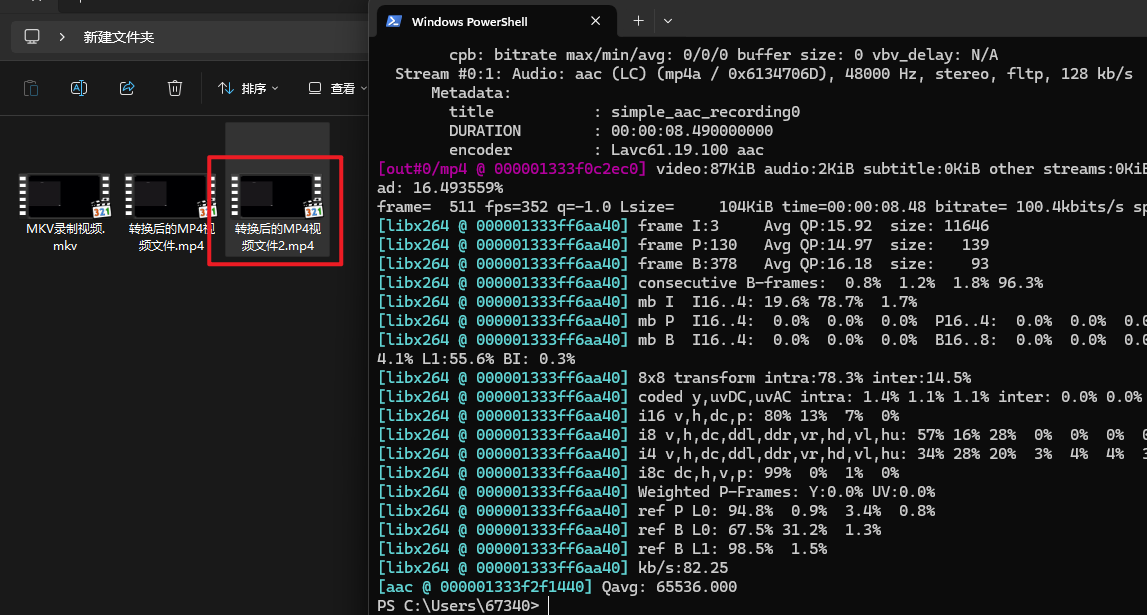

8.保存与加载模型

#保存模型

torch.save(model, '../data/model/预测中间词.model')

#加载模型 需要加载到cpu,不然会报错

model_2 = torch.load('../data//model/预测中间词.model', map_location='cpu')test(model_2)

0 tensor([ 2156, 562, 23, 1372, 128, 41, 7078, 13836]) tensor([ 2156, 562, 30, 13074, 128, 41, 23460, 13836]) 10 tensor([ 615, 1239, 15369, 30340, 1224, 39913, 32, 7]) tensor([ 3159, 1239, 15369, 30340, 1447, 13938, 2156, 7]) 20 tensor([ 29, 47, 3739, 21051, 9, 10, 98, 9599]) tensor([ 29, 17504, 3739, 7580, 9, 10, 98, 9599]) 30 tensor([3668, 29, 172, 147, 8, 1630, 10, 2156]) tensor([ 98, 2696, 53, 14, 2156, 1630, 10, 6269]) 40 tensor([ 213, 16, 3541, 1081, 117, 352, 5, 65]) tensor([213, 16, 24, 480, 117, 352, 5, 65]) 50 tensor([35402, 19, 101, 4356, 1085, 45, 615, 5313]) tensor([35966, 19, 95, 4356, 1085, 45, 615, 676]) accuracy: 0.5122549019607843 <s>accuracy and<mask> are terrific ,</s> realism pacing <s>a film made<mask> as little wit</s> with with <s>the film is<mask> a series of</s> like just <s>this is what<mask>ax was made</s> im im <s>there 's<mask> provocative about this</s> nothing nothing <s>the story may<mask> be new ,</s> not not <s>there are just<mask> twists in the</s> enough enough <s>a pleasant enough<mask> that should have</s> comedy experience

![[论文精读]Multi-Channel Graph Neural Network for Entity Alignment](https://i-blog.csdnimg.cn/direct/3bcbb7be063140a1a770b8aef53756ff.png)