Paper: Multi-Channel Graph Neural Network for Entity Alignment (aclanthology.org)

Code: https://github.com/thunlp/MuGNN

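The English here is typed by hand! It summarizes and paraphrases the original paper. Spelling and grammar mistakes are hard to avoid entirely, so corrections in the comments are welcome! This post leans toward personal notes, so read it with caution.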

Contents

1. Takeaways

2. Paragraph-by-paragraph close reading of the paper

2.1. Abstract

2.2. Introduction

2.3. Preliminaries and Framework

2.3.1. Preliminaries

2.3.2. Framework

2.4. KG Completion

2.4.1. Rule Inference and Transfer

2.4.2. Rule Grounding

2.5. Multi-Channel Graph Neural Network

2.5.1. Relation Weighting

2.5.2. Multi-Channel GNN Encoder

2.5.3. Align Model

2.6. Experiment

2.6.1. Experiment Settings

2.6.2. Overall Performance

2.6.3. Impact of Two Channels and Rule Transfer

2.6.4. Impact of Seed Alignments

2.6.5. Qualitative Analysis

2.7. Related Work

2.8. Conclusions

3. Supplementary knowledge

3.1. Adagrad Optimizer

4. Reference

1. Takeaways

(1) A relatively easy paper to understand

2. Paragraph-by-paragraph close reading of the paper

2.1. Abstract

①Limitations of entity alignment: structural heterogeneity and limited seed alignments

②They propose the Multi-channel Graph Neural Network model (MuGNN)

2.2. Introduction

①A knowledge graph (KG) stores information as a directed graph, where the nodes are entities and the edges denote relations

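②The KG built from the mother tongue usually stores more information:

(The authors think Jilin in KG1 would be aligned with Jilin City in KG2, because they share a similar dialect and both connect to Changchun. I don't think this is guaranteed? Doesn't it depend on the specific model? These two still seem quite different to me, and they are not that similar structurally either)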

③To solve the problem, it is necessary to fill in missing relations and prune exclusive entities

2.3. Preliminaries and Framework

2.3.1. Preliminaries

(1)KG

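①Define a KG as a directed graph $G=(E,R,T)$, which contains an entity set $E$, a relation set $R$, and triplets $T$

②Triplet: $t=(e_i,r_{ij},e_j)\in T$, where head entity $e_i$ is linked to tail entity $e_j$ through relation $r_{ij}\in R$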

(2)Rule knowledge

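①For a rule $k=(r_c\mid r_{s1},\cdots,r_{sp})$, e.g. $\forall x,y\in E:(x,r_s,y)\Rightarrow(x,r_c,y)$, it means that two entities related through the premise relation(s) $r_s$ may also be related through the conclusion relation $r_c$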

(3)Rule Grounding

①Through the inference above, entities can be linked by further relations
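To make the idea of grounding concrete, here is a minimal sketch that assumes a rule with a single premise and a KG stored as a set of (head, relation, tail) tuples. The function name `ground_rule`, the relation names, and the toy triplets are all hypothetical illustrations, not taken from the paper or the MuGNN code.

```python
def ground_rule(triplets, premise, conclusion):
    """Return conclusion triplets implied by a single-premise rule
    (x, premise, y) => (x, conclusion, y) that are missing from the KG."""
    inferred = set()
    for head, rel, tail in triplets:
        if rel == premise:
            candidate = (head, conclusion, tail)
            if candidate not in triplets:
                inferred.add(candidate)
    return inferred


# Toy KG (made-up data for illustration only)
kg = {
    ("Jilin", "province_of", "China"),
    ("Changchun", "capital_of", "Jilin"),
}

# Hypothetical rule: (x, capital_of, y) => (x, located_in, y)
new_triplets = ground_rule(kg, premise="capital_of", conclusion="located_in")
print(new_triplets)
```

Each returned triplet is one candidate relation that could be added to the KG for completion.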

(4)Entity alignment

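①Entity alignments between two KGs $G$ and $G'=(E',R',T')$: $A_e^s=\{(e,e')\in E\times E'\mid e\leftrightarrow e'\}$

②Relation alignments: $A_r^s=\{(r,r')\in R\times R'\mid r\leftrightarrow r'\}$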

2.3.2. Framework

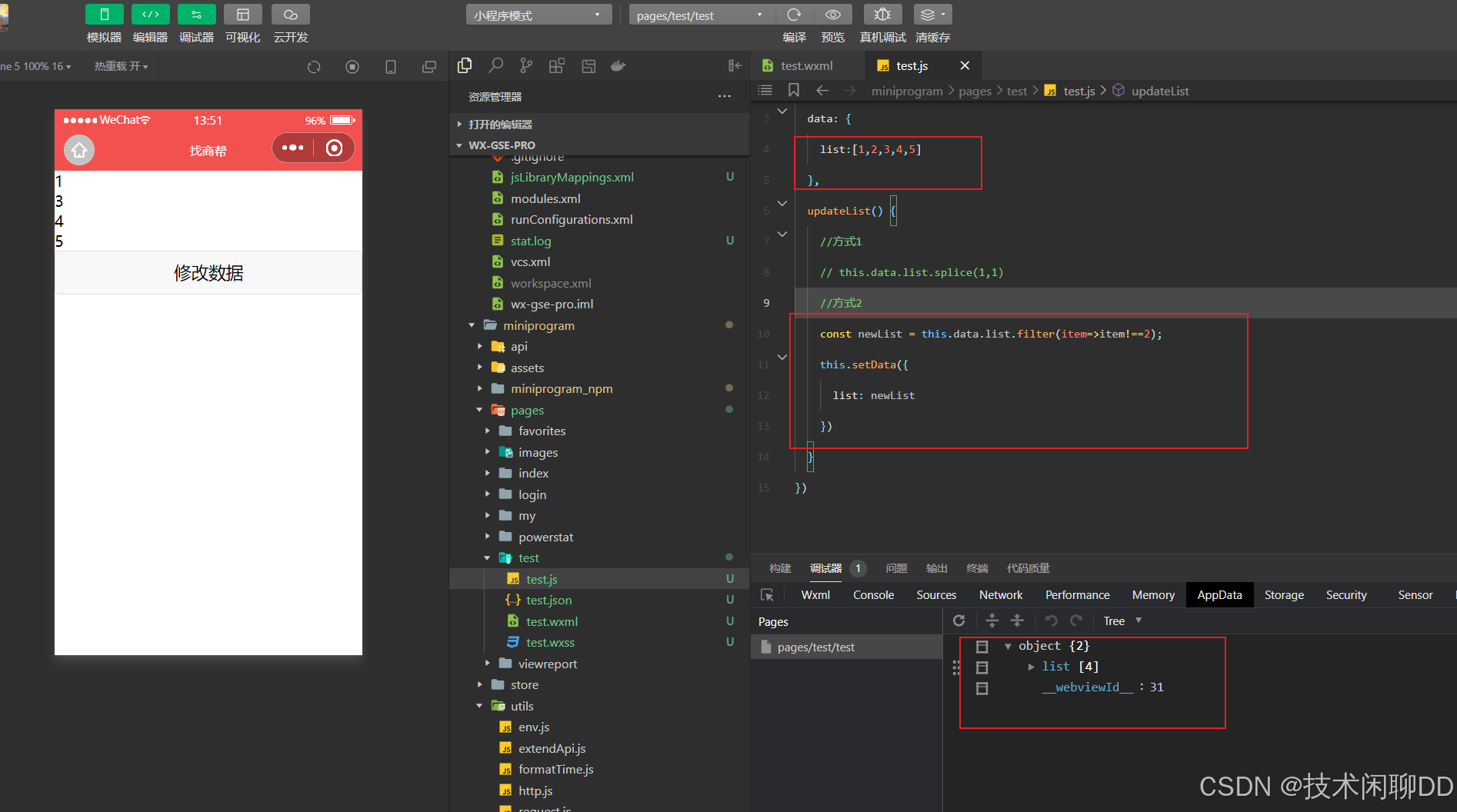

①Workflow of MuGNN:

(1)KG completion

①Adopt the rule mining system AMIE+

(2)Multi-channel Graph Neural Network

①Encode each KG through different channels

2.4. KG Completion

2.4.1. Rule Inference and Transfer

2.4.2. Rule Grounding

①For example, a relation found in KG2 can be added to KG1
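A rough sketch of how a rule mined in one KG might be carried over to the other, assuming rules are transferred by renaming their relations through aligned relation pairs. The representation of a rule as `(conclusion, premises)`, the function `transfer_rule`, and the relation names are hypothetical.

```python
def transfer_rule(rule, relation_alignment):
    """Map a rule (conclusion, premises) from KG2 relation names to KG1 names.

    Returns None when any relation in the rule has no aligned counterpart,
    since such a rule cannot be expressed in the other KG.
    """
    conclusion, premises = rule
    names = [conclusion, *premises]
    if any(name not in relation_alignment for name in names):
        return None
    return (
        relation_alignment[conclusion],
        tuple(relation_alignment[p] for p in premises),
    )


# Hypothetical relation alignment from KG2 names to KG1 names
alignment = {"capitalOf": "capital_of", "locatedIn": "located_in"}

rule_kg2 = ("locatedIn", ("capitalOf",))
rule_kg1 = transfer_rule(rule_kg2, alignment)
unmapped = transfer_rule(("bornIn", ("capitalOf",)), alignment)
print(rule_kg1, unmapped)
```

The transferred rule can then be grounded on KG1's own triplets to propose missing relations.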

2.5. Multi-Channel Graph Neural Network

2.5.1. Relation Weighting

①They generate a weighted relation matrix

②They construct a self-attention adjacency matrix and a cross-KG attention adjacency matrix, one for each channel

(1)KG Self-Attention (this one is for completion)

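①Normalized connection weights:

$$a_{ij}=\mathrm{softmax}(c_{ij})=\frac{\exp(c_{ij})}{\sum_{e_k\in N_{e_i}\cup\{e_i\}}\exp(c_{ik})}$$

where $e_k\in N_{e_i}\cup\{e_i\}$ contains the self loop and $N_{e_i}$ denotes the neighbors of $e_i$

②$c_{ij}$ denotes the attention coefficient between two entities:

$$c_{ij}=\mathrm{LeakyReLU}\left(\mathbf{p}\left[\mathbf{W}\mathbf{e}_i\,\Vert\,\mathbf{W}\mathbf{e}_j\right]\right)$$

where $\mathbf{W}$ and $\mathbf{p}$ are trainable parameters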
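A small pure-Python sketch of this kind of self-attention, assuming a GAT-style score: project both entity embeddings with a shared matrix `W`, concatenate them, take the dot product with a vector `p`, apply LeakyReLU, and softmax over the neighbors plus the self loop. The LeakyReLU slope, the toy embeddings, and all names are assumptions for illustration.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def self_attention_weights(embeddings, neighbors, W, p):
    """Return {i: {j: a_ij}} with softmax-normalized attention weights."""
    projected = [matvec(W, e) for e in embeddings]
    weights = {}
    for i, nbrs in neighbors.items():
        candidates = sorted(set(nbrs) | {i})  # neighbors plus the self loop
        scores = [
            leaky_relu(sum(pk * x for pk, x in zip(p, projected[i] + projected[j])))
            for j in candidates
        ]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights[i] = {j: e / total for j, e in zip(candidates, exps)}
    return weights


# Toy inputs (made up): 3 entities with 2-dimensional embeddings
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
neighbors = {0: [1, 2], 1: [0], 2: [0]}
W = [[1.0, 0.0], [0.0, 1.0]]
p = [0.1, 0.2, 0.3, 0.4]

attention = self_attention_weights(embeddings, neighbors, W, p)
print(attention[0])
```

Each row of weights sums to 1, so it can serve as one row of a normalized adjacency matrix.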

(2)Cross-KG Attention (this one is for pruning; it is a separate adjacency matrix)

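①Pruning operation:

$$a_{ij}=\max_{r\in R,\,r'\in R'}\mathbb{1}\left((e_i,r,e_j)\in T\right)\mathrm{sim}(r,r')$$

where $\mathbb{1}(\cdot)$ is 1 if its argument is true and 0 otherwise, and $\mathrm{sim}(r,r')=\mathbf{r}^{\top}\mathbf{r}'$ denotes the inner product similarity measure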
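The pruning weight can be sketched as follows, assuming the weight of an edge is the best inner-product similarity between any relation on that edge and any relation of the other KG, and 0 where no edge exists. The function name, the relation names, and the embeddings are hypothetical toy values.

```python
def cross_kg_attention(triplets, rel_emb, other_rel_emb, i, j):
    """Weight of edge (i, j): the best inner-product match between a relation
    linking i -> j in this KG and any relation of the other KG.

    Starts from 0.0, the value the indicator gives for relations that do not
    link i to j (an assumption about how non-edges are treated).
    """
    best = 0.0
    for head, rel, tail in triplets:
        if head == i and tail == j:
            for other in other_rel_emb.values():
                sim = sum(a * b for a, b in zip(rel_emb[rel], other))
                best = max(best, sim)
    return best


# Toy data (made up): entity ids are integers, relation embeddings are 2-d
triplets = [(0, "capital_of", 1), (0, "dialect", 2)]
rel_emb = {"capital_of": [1.0, 0.0], "dialect": [0.0, 1.0]}
other_rel_emb = {"capitalOf": [0.9, 0.1]}

w_shared = cross_kg_attention(triplets, rel_emb, other_rel_emb, 0, 1)
w_exclusive = cross_kg_attention(triplets, rel_emb, other_rel_emb, 0, 2)
w_missing = cross_kg_attention(triplets, rel_emb, other_rel_emb, 1, 2)
print(w_shared, w_exclusive, w_missing)
```

Edges whose relations have no close counterpart in the other KG receive small weights, which is what down-weights exclusive structure.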

2.5.2. Multi-Channel GNN Encoder

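①Propagation of GNN:

$$\mathrm{GNN}(A,H,W)=\sigma(AHW)$$

and they chose $\sigma$ as ReLU

②Multi GNN encoder:

$$\mathrm{MultiGNN}(H^{l};A_1,\cdots,A_c)=\mathrm{Pooling}(H_1^{l+1},\cdots,H_c^{l+1})$$

where $c$ denotes the number of channels

③Updating function:

$$H_i^{l+1}=\mathrm{GNN}(A_i,H^{l},W_i)$$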

④Pooling strategy: mean pooling
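A minimal pure-Python sketch of one multi-channel layer, assuming each channel computes ReLU(A·H·W) with its own adjacency matrix and weight matrix, and the channel outputs are combined by mean pooling. The matrices below are toy values chosen so the arithmetic is easy to follow.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def relu(M):
    return [[max(0.0, x) for x in row] for row in M]


def gnn_layer(A, H, W):
    """One propagation step: ReLU(A @ H @ W)."""
    return relu(matmul(matmul(A, H), W))


def multi_channel_layer(adjacencies, H, weights):
    """Run one GNN layer per channel and mean-pool the channel outputs."""
    outputs = [gnn_layer(A, H, W) for A, W in zip(adjacencies, weights)]
    c = len(outputs)
    rows, cols = len(outputs[0]), len(outputs[0][0])
    return [
        [sum(out[r][k] for out in outputs) / c for k in range(cols)]
        for r in range(rows)
    ]


# Toy inputs (made up): 2 entities, 2-d features, 2 channels
H = [[1.0, 2.0], [3.0, 4.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
A1 = identity                      # channel 1 keeps each entity's own features
A2 = [[0.5, 0.5], [0.5, 0.5]]      # channel 2 averages over both entities

pooled = multi_channel_layer([A1, A2], H, [identity, identity])
print(pooled)
```

Stacking two such layers would match the two-layer setting noted in the training details.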

2.5.3. Align Model

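①Embed the two KGs into the same vector space and measure the distance to judge the equivalence relation:

$$\mathcal{L}_a=\sum_{(e,e')\in A_e^s}\sum_{(e_-,e'_-)\in A_e^{s-}}[d(e,e')+\gamma_1-d(e_-,e'_-)]_+ +\sum_{(r,r')\in A_r^s}\sum_{(r_-,r'_-)\in A_r^{s-}}[d(r,r')+\gamma_2-d(r_-,r'_-)]_+$$

where $[\cdot]_+=\max\{0,\cdot\}$, $d(\cdot)=\Vert\cdot\Vert_2$, $A_e^{s-}$ and $A_r^{s-}$ are negative pairs for the original sets, and $\gamma_1>0$ and $\gamma_2>0$ are margin hyper-parameters separating positive and negative entity and relation alignments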
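A small sketch of a margin-based alignment loss of this form, assuming L2 distance and a hinge over every positive/negative pair combination. Only the entity term is shown; the relation term would have the same shape with its own margin. The pairs and margin below are toy values.

```python
import math


def l2_distance(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def margin_alignment_loss(positive_pairs, negative_pairs, margin):
    """Sum of max(0, d(pos) + margin - d(neg)) over all pair combinations."""
    loss = 0.0
    for e, e_prime in positive_pairs:
        for n, n_prime in negative_pairs:
            loss += max(0.0, l2_distance(e, e_prime) + margin - l2_distance(n, n_prime))
    return loss


# Toy embeddings (made up)
positives = [([0.0, 0.0], [0.0, 0.0])]           # aligned pair, distance 0
negatives = [
    ([0.0, 0.0], [3.0, 4.0]),                    # far apart (distance 5): no penalty
    ([0.0, 0.0], [0.5, 0.0]),                    # too close (distance 0.5): penalized
]

loss = margin_alignment_loss(positives, negatives, margin=1.0)
print(loss)
```

Only negative pairs that sit closer than the positive distance plus the margin contribute to the loss.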

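②Triplet loss:

$$\mathcal{L}_r=\sum_{g^+\in\mathcal{G}(K)}\sum_{g^-\in\mathcal{G}^-(K)}[\gamma_r-I(g^+)+I(g^-)]_+ +\sum_{t^+\in T}\sum_{t^-\in T^-}[\gamma_r-I(t^+)+I(t^-)]_+$$

③$I(t)$ denotes the truth value function for triplet $t$:

$$I(t)=1-\frac{1}{3\sqrt{d}}\Vert\mathbf{e}_i+\mathbf{r}_{ij}-\mathbf{e}_j\Vert_2$$

then it can be recursively transformed into:

$$I(t_s)=I(t_{s1}\wedge t_{s2})=I(t_{s1})\cdot I(t_{s2})$$

$$I(t_s\Rightarrow t_c)=I(t_s)\cdot I(t_c)-I(t_s)+1$$

where $d$ is the embedding size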
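A hedged sketch of such truth values, assuming a translation-style score scaled by the embedding size, a product for conjunctions, and the product-logic form `a*b - a + 1` for implications. The exact norm and scaling constant used here are assumptions, and the embeddings are toy values.

```python
import math


def triplet_truth(head, rel, tail):
    """Truth value of a triplet: 1 - ||h + r - t||_2 / (3 * sqrt(d))."""
    d = len(head)
    dist = math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, rel, tail)))
    return 1.0 - dist / (3.0 * math.sqrt(d))


def conjunction(a, b):
    """Truth value of (a AND b) under the product rule."""
    return a * b


def implication(premise, conclusion):
    """Truth value of (premise => conclusion)."""
    return premise * conclusion - premise + 1.0


# Toy embeddings (made up): head + rel equals tail exactly
perfect = triplet_truth([0.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0])
rule_value = implication(conjunction(perfect, 1.0), 0.5)
vacuous = implication(0.0, 0.3)
print(perfect, rule_value, vacuous)
```

A false premise makes the implication trivially true, while a fully true premise passes the conclusion's truth value through unchanged.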

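④The overall loss: $\mathcal{L}=\mathcal{L}_a+\mathcal{L}'_r+\mathcal{L}_r$, where $\mathcal{L}_r$ and $\mathcal{L}'_r$ are the triplet losses of the two KGs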

2.6. Experiment

2.6.1. Experiment Settings

(1)Datasets

①Datasets: DBP15K (contains DBP_ZH-EN (Chinese to English), DBP_JA-EN (Japanese to English), and DBP_FR-EN (French to English)) and DWY100K (contains DWY-WD (DBpedia to Wikidata) and DWY-YG (DBpedia to YAGO3))

②Statistics of datasets:

③Statistics of KG in datasets:

(2)Baselines

①MTransE

②JAPE

③GCN-Align

④AlignEA

(3)Training Details

①Training ratio: 30% for training and 70% for testing

②Embedding size: 128 in all cases

③Number of GNN layers: 2 in all cases

④Optimizer: Adagrad

⑤Hyperparameter:

⑥Grid search over the learning rate in {0.1, 0.01, 0.001}, L2 regularization in {0.01, 0.001, 0.0001}, and dropout rate in {0.1, 0.2, 0.5}. The optimal values they found are 0.001, 0.01, and 0.2, respectively
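The search over these three grids can be sketched as below. The `evaluate` argument stands for "train the model with this configuration and return a validation score"; `toy_score` is a made-up placeholder that simply peaks at the values reported above, so the sketch runs without any training. It is not a result from the paper.

```python
from itertools import product

learning_rates = [0.1, 0.01, 0.001]
l2_weights = [0.01, 0.001, 0.0001]
dropout_rates = [0.1, 0.2, 0.5]


def grid_search(evaluate):
    """Try every combination and keep the one with the highest score."""
    best_config, best_score = None, float("-inf")
    for config in product(learning_rates, l2_weights, dropout_rates):
        score = evaluate(*config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_score(lr, l2, dropout):
    # Placeholder only: a fake score, NOT a real training run.
    return -(abs(lr - 0.001) + abs(l2 - 0.01) + abs(dropout - 0.2))


num_configs = len(list(product(learning_rates, l2_weights, dropout_rates)))
best_config, best_score = grid_search(toy_score)
print(num_configs, best_config)
```

With three values per hyperparameter, the full grid has 27 configurations to train and compare.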

2.6.2. Overall Performance

2.6.3. Impact of Two Channels and Rule Transfer

①Module ablation:

2.6.4. Impact of Seed Alignments

①Ratio of seeds:

2.6.5. Qualitative Analysis

①Two examples of how the rule works:

2.7. Related Work

This section reviews related work

2.8. Conclusions

As future work, they aim to further study word ambiguity

3. Supplementary knowledge

3.1. Adagrad Optimizer

(1)Further reading: Deep Learning 最优化方法之AdaGrad (Deep Learning optimization methods: AdaGrad) - Zhihu (zhihu.com)
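For quick reference, a minimal sketch of the standard Adagrad update: accumulate squared gradients per parameter and divide the learning rate by the square root of that accumulator. The learning rate, the `eps` value, and the toy objective f(x) = x^2 are illustrative assumptions, not the paper's settings.

```python
import math


def adagrad_step(params, grads, accum, lr=0.1, eps=1e-10):
    """Apply one in-place Adagrad update to a list of parameters."""
    for k in range(len(params)):
        accum[k] += grads[k] ** 2                      # running sum of squared gradients
        params[k] -= lr * grads[k] / (math.sqrt(accum[k]) + eps)
    return params, accum


# Toy problem: minimize f(x) = x^2 starting from x = 1.0 (gradient is 2x)
params, accum = [1.0], [0.0]
history = []
for _ in range(5):
    grads = [2.0 * params[0]]
    adagrad_step(params, grads, accum)
    history.append(params[0])
print(history)
```

Because the accumulator only grows, the effective step size for each parameter shrinks over time, and parameters with large past gradients are updated more cautiously.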

4. Reference

Cao, Y. et al. (2019) 'Multi-Channel Graph Neural Network for Entity Alignment', Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, doi: 10.18653/v1/P19-1140