Prometheus collects the monitoring data.

Alertmanager defines the alert routing.

PrometheusAlert bridges the alerts to a webhook.

1. Prometheus configuration

https://prometheus.io/download/

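1.1 Installing Prometheus

Download the package directly with wget into the /data directory. After extracting it, create the config and rules directories under the prometheus directory (the configuration below loads rules from /data/prometheus/rules).

The unit file enables hot reload through --web.enable-lifecycle. Set the data retention period as needed, for example with --storage.tsdb.retention.time.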

cat >/etc/systemd/system/prometheus.service<<EOF
[Unit]
Description=Prometheus Service
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/data/prometheus/prometheus --config.file=/data/prometheus/prometheus.yml --web.enable-lifecycle --storage.tsdb.path=/data/prometheus/data
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

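1.2 Prometheus configuration file

Jobs of the same kind share their relabel rules through a YAML anchor to avoid repeating configuration. The blackbox job monitors business URLs.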

root@prometheus-monitor-c210:/data/prometheus# cat prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 10.198.199.210:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  - "/data/prometheus/rules/*.yaml"
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: 'node-exporter-ops'
    file_sd_configs:
      - files:
          - ./config/node-ops.yml
    relabel_configs: &node_relabel_configs
      - source_labels: [__address__]
        regex: (.*)
        target_label: instance
        replacement: $1
      - source_labels: [__address__]
        regex: (.*)
        target_label: __address__
        replacement: $1:9100

  - job_name: 'node-exporter-rd'
    file_sd_configs:
      - files:
          - ./config/node-rd.yml
    relabel_configs: *node_relabel_configs

  - job_name: 'blackbox'
    metrics_path: /probe
    params:
      module: [http_2xx] # probe module to use
    static_configs:
      - targets:
          - https://test-1254308391.cos.ap-beijing.myqcloud.com
          - https://www.baidu.com
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: service_url
      - target_label: __address__
        replacement: 10.198.199.28:9115 # address and port of the Blackbox Exporter
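To make the blackbox relabeling concrete, here is a minimal Python sketch of the probe request that Prometheus ends up sending for each target. It assumes the usual Blackbox Exporter `/probe` endpoint with `module` and `target` query parameters. The function name `probe_url` is made up for illustration; the exporter address and the targets come from the configuration above.

```python
from urllib.parse import urlencode

# Address written into __address__ by the last relabel rule of the blackbox job.
BLACKBOX_ADDR = "10.198.199.28:9115"


def probe_url(target: str, module: str = "http_2xx") -> str:
    """Build the URL scraped for one blackbox target.

    __param_target becomes the `target` query parameter, params.module
    becomes `module`, and metrics_path supplies the /probe path.
    """
    query = urlencode({"module": module, "target": target})
    return f"http://{BLACKBOX_ADDR}/probe?{query}"


if __name__ == "__main__":
    for url in (
        "https://test-1254308391.cos.ap-beijing.myqcloud.com",
        "https://www.baidu.com",
    ):
        print(probe_url(url))
```

Opening one of the printed URLs with curl is a quick way to check that the exporter can reach a business URL before Prometheus scrapes it.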

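1.3 Host configuration

Host target files live in the config directory. The prometheus.yml above reads the files in this directory through file_sd_configs instead of listing the targets inline.

These host names are resolved through the hosts file. On AWS you can use a Route 53 private hosted zone instead.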

$ cat config/node-ops.yml

- labels:
    service: node-ops
  targets:
    - sin-ops-logging-monitor-c210
    - sin-ops-cicd-archery-c10
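The target file lists bare host names, and the shared relabel rules in prometheus.yml turn each one into an `instance` label plus a scrape address on port 9100. The sketch below imitates those two rules in Python to show the result. It is an illustration of the relabeling, not Prometheus code, and `relabel_node_target` is a hypothetical helper name.

```python
import re


def relabel_node_target(address: str) -> dict:
    """Apply the two node relabel rules to one file_sd target."""
    labels = {"__address__": address}

    # Rule 1: regex (.*) on __address__, replacement $1 -> instance.
    # Prometheus anchors relabel regexes, hence fullmatch.
    match = re.fullmatch(r"(.*)", labels["__address__"])
    labels["instance"] = match.group(1)

    # Rule 2: regex (.*) on __address__, replacement $1:9100 -> __address__.
    match = re.fullmatch(r"(.*)", labels["__address__"])
    labels["__address__"] = f"{match.group(1)}:9100"
    return labels


if __name__ == "__main__":
    print(relabel_node_target("sin-ops-logging-monitor-c210"))
```

Because `instance` is set before the port is appended, alerts show the plain host name while the scrape still goes to the node_exporter port.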

1.4 Rule configuration

All rules are kept in one directory (/data/prometheus/rules, as referenced by rule_files above).

$ cat blackbox.yaml

groups:
  - name: blackbox-trace
    rules:
      - alert: Web service timed out
        expr: probe_http_status_code{job="blackbox"} == 0
        for: 2m
        labels:
          severity: critical
          service: probe-service
        annotations:
          title: 'Service timeout ({{ $labels.service_url }})'
          description: "Service timeout ({{ $labels.service_url }})"

node-basic rules

$ cat node-basic-monitor.yaml

groups:
  - name: node-basic-monitor
    rules:
      - alert: Host down
        expr: up{job=~"node-exporter.+"} == 0
        for: 0m
        labels:
          severity: critical
          service: node-basic
        annotations:
          title: 'Instance down'
          description: "Host: [{{ $labels.instance }}] is down"

      - alert: Server has rebooted
        expr: (node_time_seconds - node_boot_time_seconds) / 60 < 3
        for: 0m
        labels:
          severity: warning
          service: node-basic
        annotations:
          title: 'Server has rebooted'
          description: "Host: [{{ $labels.instance }}] has just booted, please check the service status"

      - alert: Socket count above expected
        expr: node_sockstat_TCP_alloc > 25000
        for: 2m
        labels:
          severity: critical
          service: node-basic
        annotations:
          title: 'Socket count above expected'
          description: "Host: [{{ $labels.instance }}] socket count (current value: {{ $value }})"

      # ops CPU and memory alerts
      - alert: CPU usage above 90%
        expr: (1-((sum(increase(node_cpu_seconds_total{mode="idle"}[5m])) by (instance))/ (sum(increase(node_cpu_seconds_total[5m])) by (instance))))*100 > 90
        for: 5m
        annotations:
          title: "CPU usage above 90%"
          description: "Host: [{{ $labels.instance }}] CPU usage over the last 5 minutes exceeded 90% (current value: {{ printf \"%.2f\" $value }}%)"
        labels:
          severity: 'critical'
          service: node-basic

      - alert: Memory usage above 90%
        expr: (1-((node_memory_Buffers_bytes + node_memory_Cached_bytes + node_memory_MemFree_bytes)/node_memory_MemTotal_bytes))*100 > 90
        for: 3m
        annotations:
          title: "Host memory usage above 90%"
          description: "Host: [{{ $labels.instance }}] memory usage exceeded 90% (current usage: {{ humanize $value }}%)"
        labels:
          severity: 'critical'
          service: node-basic

      - alert: Disk usage above 90%
        expr: (1-(node_filesystem_free_bytes{fstype=~"ext4|xfs",mountpoint!~".*tmp|.*boot"}/node_filesystem_size_bytes{fstype=~"ext4|xfs",mountpoint!~".*tmp|.*boot",service!~"benchmark" }))*100 > 90
        for: 1m
        annotations:
          title: "Disk usage above 90%"
          description: "Host: [{{ $labels.instance }}] partition {{ $labels.mountpoint }} usage exceeded 90%, current usage: {{ $value }}%"
        labels:
          severity: 'critical'
          service: node-basic

      - alert: Host ESTABLISHED connections
        expr: sum(node_netstat_Tcp_CurrEstab{instance!~"benchmark.+"}) by (instance) > 1000
        for: 10m
        labels:
          severity: 'warning'
          service: node-basic
        annotations:
          title: "Host ESTABLISHED connection count too high"
          description: "Host: [{{ $labels.instance }}] ESTABLISHED connections exceeded 1000, current ESTABLISHED connections: {{ $value }}"

      - alert: Host TIME_WAIT connections
        expr: sum(node_sockstat_TCP_tw{instance!~"benchmark.+"}) by (instance) > 6500
        for: 1h
        labels:
          severity: 'warning'
          service: node-basic
        annotations:
          title: "Host TIME_WAIT connection count too high"
          description: "Host: [{{ $labels.instance }}] TIME_WAIT connections have stayed above 6500 for one hour, current TIME_WAIT connections: {{ $value }}"

      - alert: Host NIC egress traffic above 150MB/s
        expr: sum by (instance, device) (rate(node_network_transmit_bytes_total{device=~"ens.*"}[2m])) / 1024 / 1024 > 150
        for: 20m
        labels:
          severity: 'critical'
          service: node-basic
        annotations:
          title: "Host NIC egress traffic above 150MB/s"
          description: "Host: [{{ $labels.instance }}], NIC: {{ $labels.device }} egress traffic exceeded 150 MB/s, current value: {{ $value }}"

      - alert: Host disk write rate too high
        expr: sum by (instance, device) (rate(node_disk_written_bytes_total{device=~"nvme.+"}[2m])) / 1024 / 1024 > 150
        for: 10m
        labels:
          severity: 'critical'
          service: node-basic
        annotations:
          title: "Host disk write rate too high"
          description: "Host: [{{ $labels.instance }}], disk: {{ $labels.device }} write rate exceeded 150 MB/s, current value: {{ $value }}"

      - alert: Host partition inodes low
        expr: node_filesystem_files_free{fstype=~"ext4|xfs",mountpoint!~".*tmp|.*boot" } / node_filesystem_files{fstype=~"ext4|xfs",mountpoint!~".*tmp|.*boot" } * 100 < 10
        for: 2m
        labels:
          severity: 'warning'
          service: node-basic
        annotations:
          title: "Host partition running out of inodes"
          description: "Host: [{{ $labels.instance }}] partition {{ $labels.mountpoint }} is running out of inodes (free: {{ $value }}%)"

      - alert: Host disk read latency high
        expr: rate(node_disk_read_time_seconds_total{device=~"nvme.+"}[1m]) / rate(node_disk_reads_completed_total{device=~"nvme.+"}[1m]) > 0.1 and rate(node_disk_reads_completed_total{device=~"nvme.+"}[1m]) > 0
        for: 2m
        labels:
          severity: 'warning'
          service: node-basic
        annotations:
          title: "Host disk read latency too high"
          description: "Host: [{{ $labels.instance }}], disk: {{ $labels.device }} read latency too high (read operations > 100ms), current latency: {{ $value }}s"

      - alert: Host disk write latency high
        expr: rate(node_disk_write_time_seconds_total{device=~"nvme.+"}[1m]) / rate(node_disk_writes_completed_total{device=~"nvme.+"}[1m]) > 0.1 and rate(node_disk_writes_completed_total{device=~"nvme.+"}[1m]) > 0
        for: 2m
        labels:
          severity: 'warning'
          service: node-basic
        annotations:
          title: "Host disk write latency too high"
          description: "Host: [{{ $labels.instance }}], disk: {{ $labels.device }} write latency too high (write operations > 100ms), current latency: {{ $value }}s"

      - alert: Server load high
        expr: (node_load1{instance !~ "sin-cicd-DRONE_.+"} / count without (cpu, mode) (node_cpu_seconds_total{mode="system"})) > 1
        for: 30m
        labels:
          severity: warning
          service: node-basic
        annotations:
          title: Server load high
          description: "{{ $labels.instance }} average load per CPU reached {{ $value }}"
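The arithmetic behind two of these expressions is easy to misread, so here is a small worked sketch in Python. It mirrors the CPU usage rule, `(1 - idle / total) * 100`, and the disk latency rules, which divide time spent by operations completed and therefore yield seconds per operation. The input numbers are invented for illustration and the function names are hypothetical.

```python
from typing import Optional


def cpu_usage_percent(idle_increase: float, total_increase: float) -> float:
    """Mirror the CPU rule: share of non-idle CPU seconds in the window."""
    if total_increase <= 0:
        raise ValueError("total_increase must be positive")
    return (1 - idle_increase / total_increase) * 100


def avg_latency_seconds(time_rate: float, ops_rate: float) -> Optional[float]:
    """Mirror the disk latency rules: seconds spent per completed operation.

    Returns None when no operations completed, which matches the
    `and rate(...) > 0` guard that keeps the rule from firing.
    """
    if ops_rate <= 0:
        return None
    return time_rate / ops_rate


if __name__ == "__main__":
    # Assumed example: 4 cores over 5 minutes give 1200 CPU-seconds, 30 of them idle.
    print(f"{cpu_usage_percent(30.0, 1200.0):.2f}%")
    # Assumed example: 0.5 s of read time per second across 4 reads per second.
    print(f"{avg_latency_seconds(0.5, 4.0) * 1000:.0f} ms")
```

The second function shows why the latency threshold is written as 0.1: the ratio is in seconds, so 0.1 corresponds to 100 ms.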

1.5 Hot reload

curl -X POST http://localhost:9090/-/reload
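If the reload needs to be triggered from a script rather than from the shell, the same request can be sent with Python's standard library. This is a minimal sketch that assumes Prometheus listens on localhost:9090 and was started with --web.enable-lifecycle, as in the unit file above. The helper names are hypothetical.

```python
import urllib.request


def build_reload_request(base_url: str = "http://localhost:9090") -> urllib.request.Request:
    """Create the POST request for the /-/reload endpoint."""
    url = f"{base_url.rstrip('/')}/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(base_url: str = "http://localhost:9090", timeout: float = 10.0) -> int:
    """Send the reload request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError on a non-2xx response, for
    example when the new configuration fails to load.
    """
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    print(reload_prometheus())
```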

2. Alertmanager configuration

2.1 Installing Alertmanager

Install it under /data and start it through systemd.

cat >/etc/systemd/system/alertmanager.service<<EOF
[Unit]
Description=Alertmanager Service
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/data/alertmanager/alertmanager --config.file=/data/alertmanager/alertmanager.yml
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

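2.2 Alertmanager configuration file

Port 8080 here is the webhook port provided by PrometheusAlert. The default, critical, and warning receivers each notify a different bot.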

cat alertmanager.yml

global:
  resolve_timeout: 1m

route:
  receiver: 'default'
  group_by: ['alertname']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 30m
  routes:
    - receiver: 'node-critical'
      group_wait: 10s
      continue: true
      matchers:
        - 'service=~"node-basic|probe-service"'
        - 'severity="critical"'
    - receiver: 'node-warning'
      group_wait: 10s
      continue: true
      matchers:
        - 'service=~"node-basic"'
        - 'severity="warning"'

receivers:
  - name: 'default'
    webhook_configs:
      - url: 'http://127.0.0.1:8080/prometheusalert?type=fs&tpl=prometheus-fs&fsurl=https://open.feishu.cn/open-apis/bot/v2/hook/9264abcdc9c5'
        send_resolved: true
  - name: 'node-critical'
    webhook_configs:
      - url: 'http://127.0.0.1:8080/prometheusalert?type=fs&tpl=prometheus-fs&fsurl=https://open.feishu.cn/open-apis/bot/v2/hook/2fc0f6048e0'
        send_resolved: true
  - name: 'node-warning'
    webhook_configs:
      - url: 'http://127.0.0.1:8080/prometheusalert?type=fs&tpl=prometheus-fs&fsurl=https://open.feishu.cn/open-apis/bot/v2/hook/74dbb053ae33'
        send_resolved: true

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']
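To see which bot a given alert reaches, the routing tree can be imitated in a few lines of Python. This is a simplified sketch of the matching logic only (no grouping, timing, or inhibition). It assumes the usual Alertmanager behavior: regex matchers are fully anchored, `continue: true` lets evaluation proceed to the next sibling route, and an alert that matches no child route falls back to the root receiver. `receivers_for` is a hypothetical helper name.

```python
import re

# Child routes from alertmanager.yml: (receiver, matchers, continue flag).
# Equality matchers are written as regexes here; for these literal values
# an anchored regex behaves the same as equality.
ROUTES = [
    ("node-critical", {"service": r"node-basic|probe-service", "severity": r"critical"}, True),
    ("node-warning", {"service": r"node-basic", "severity": r"warning"}, True),
]
DEFAULT_RECEIVER = "default"


def receivers_for(labels: dict) -> list:
    """Return the receivers an alert with these labels is routed to."""
    matched = []
    for receiver, matchers, keep_going in ROUTES:
        if all(re.fullmatch(rx, labels.get(name, "")) for name, rx in matchers.items()):
            matched.append(receiver)
            if not keep_going:
                break
    # No child route matched: the root route's receiver handles the alert.
    return matched or [DEFAULT_RECEIVER]


if __name__ == "__main__":
    print(receivers_for({"service": "node-basic", "severity": "critical"}))
    print(receivers_for({"service": "probe-service", "severity": "warning"}))
```

With the rules from section 1.4, every node alert lands on either node-critical or node-warning, and the blackbox alert (critical, probe-service) lands on node-critical. Anything else goes to the default bot.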

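3. PrometheusAlert configuration

Download the package from https://github.com/feiyu563/PrometheusAlert

After starting it, configure the template.

3.1 Template configuration

I send notifications to Feishu, so I edit the Feishu template.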

{{ range $k,$v:=.alerts }}{{if eq $v.status "resolved"}}<font color="green">**{{$v.labels.service}} recovery**</font>
Event: **{{$v.labels.alertname}}**
Alert status: {{$v.status}}
Severity: {{$v.labels.severity}}
Start time: {{GetCSTtime $v.startsAt}}
End time: {{GetCSTtime $v.endsAt}}
Host: {{$v.labels.instance}}
Service group: {{$v.labels.service}}
<font color="green">**Details: {{$v.annotations.description}}**</font>
{{else}}**{{$v.labels.service}} alert**
Event: **{{$v.labels.alertname}}**
Alert status: {{$v.status}}
Severity: {{$v.labels.severity}}
Start time: {{GetCSTtime $v.startsAt}}
Host: {{$v.labels.instance}}
Service group: {{$v.labels.service}}
<font color="red">**Details: {{$v.annotations.description}}**</font>
{{end}}
{{ end }}

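I tweaked the alert content a little. Sending with prometheus-fsv2 returned an error in my setup, so I can only send with v1. Alerts from Kubernetes are not covered for now.

3.2 Improving the alert title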

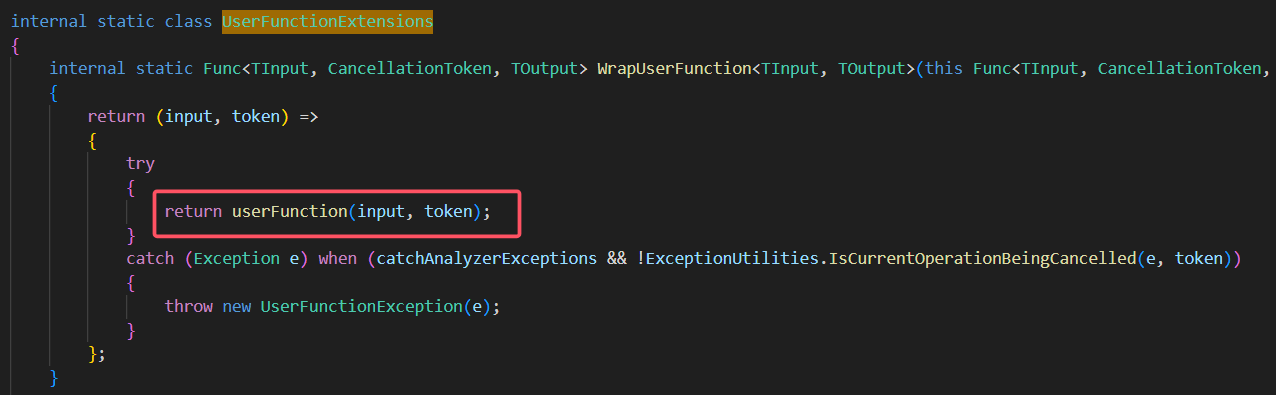

https://github.com/feiyu563/PrometheusAlert/blob/master/controllers/prometheusalert.go

Redefine the value of the alert title. By default it is taken from the configuration file, so every alert ends up with the same title.

	} else {
		json.Unmarshal(c.Ctx.Input.RequestBody, &p_json)
		// Special handling for Prometheus messages
		json.Unmarshal(c.Ctx.Input.RequestBody, &p_alertmanager_json)
	}
	// Around line 113
	// Use the alertname of the first alert as the title. These unchecked type
	// assertions panic if the payload has no alerts or no alertname label.
	title := p_alertmanager_json["alerts"].([]interface{})[0].(map[string]interface{})["labels"].(map[string]interface{})["alertname"].(string)
	// alertgroup
	alertgroup := c.Input().Get("alertgroup")
	openAg := beego.AppConfig.String("open-alertgroup")
	var agMap map[string]string
	if openAg == "1" && len(alertgroup) != 0 {
		agMap = Alertgroup(alertgroup)
	}
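The title lookup walks the Alertmanager webhook payload: first alert, then its labels, then `alertname`. The Python sketch below shows the same lookup with a fallback instead of a panic when a level is missing. It only illustrates the payload shape and is not part of PrometheusAlert. `alert_title`, the default title string, and the sample payload values are assumptions for the example.

```python
def alert_title(payload: dict, default: str = "PrometheusAlert") -> str:
    """Return the alertname of the first alert, or `default` if unavailable."""
    alerts = payload.get("alerts")
    if not isinstance(alerts, list) or not alerts:
        return default
    first = alerts[0]
    if not isinstance(first, dict):
        return default
    labels = first.get("labels")
    if not isinstance(labels, dict):
        return default
    name = labels.get("alertname")
    return name if isinstance(name, str) and name else default


if __name__ == "__main__":
    # Trimmed sample in the shape of an Alertmanager webhook body.
    sample = {
        "status": "firing",
        "alerts": [
            {
                "status": "firing",
                "labels": {"alertname": "Host down", "severity": "critical"},
                "annotations": {"description": "example"},
            }
        ],
    }
    print(alert_title(sample))
    print(alert_title({}))
```

Since Alertmanager groups by alertname in the configuration above, every alert in one notification shares the same name, so reading it from the first alert is enough.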

After making the change, rebuild the package and start it.

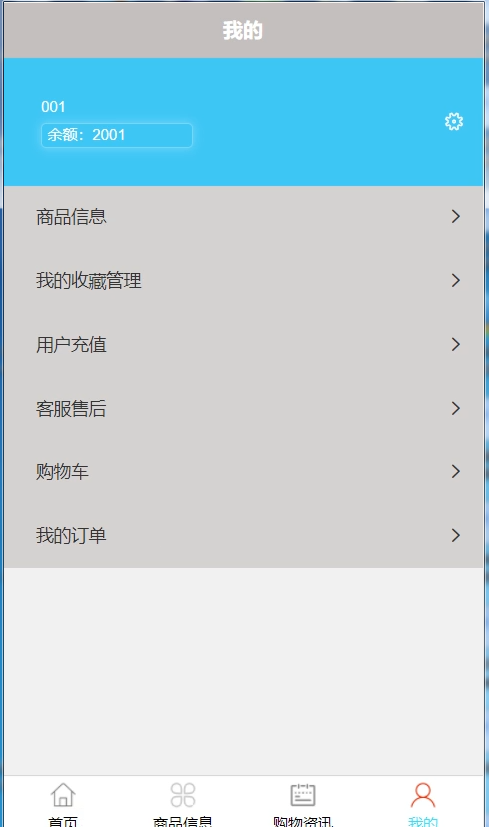

The resulting alert looks like this