文章目录

- Register Two Point Sets 注册两个点集

- Visualize Gradient Descent 可视化梯度下降

- Hyperparameter Search 超参数搜索

- JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4类说明

原文url: https://examples.itk.org/src/registration/metricsv4/registertwopointsets/registertwopointsets

Register Two Point Sets 注册两个点集

与图像配准类似,可以对 n 维“移动”点集进行重新采样,以与“固定”点集对齐。可以使用 ITK 点集度量和 ITK 优化器来注册这两个集合。

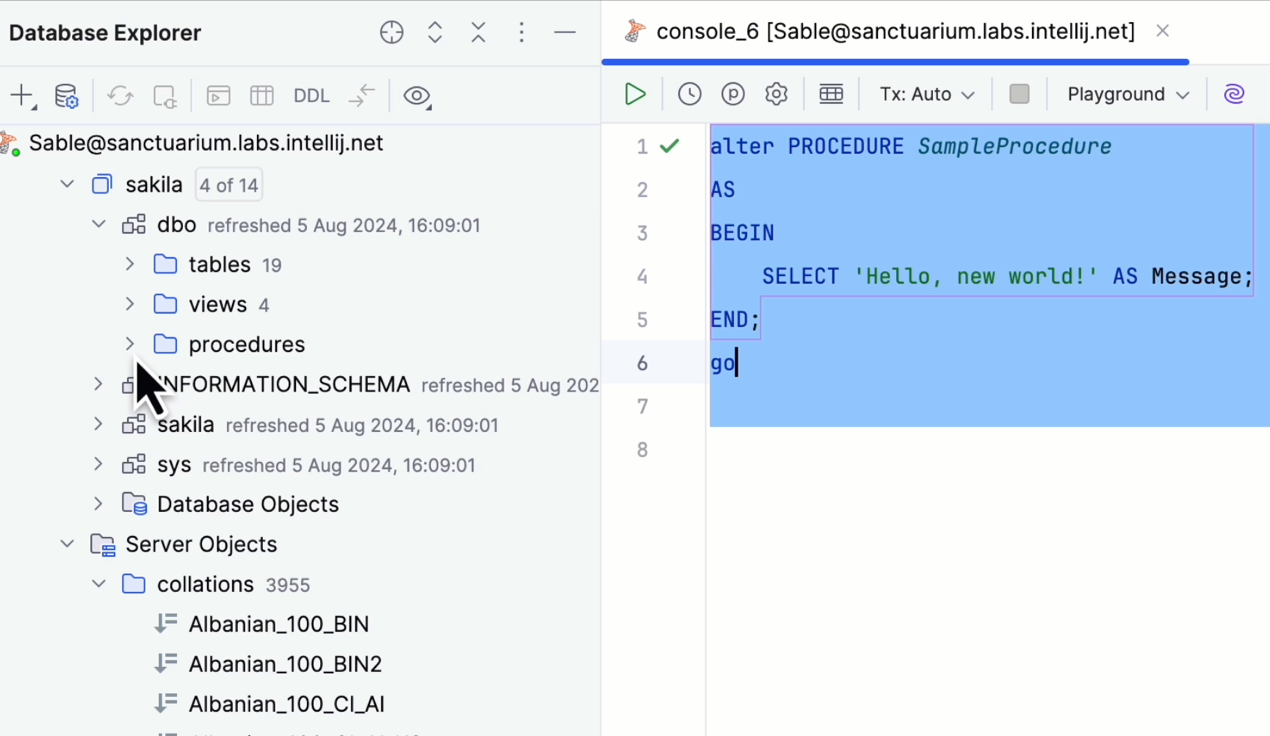

在此示例中,我们创建两个具有任意偏移量的 itk.PointSet 表示,并选择参数以将它们与 TranslationTransform 对齐。我们使用 JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4 类来量化点集之间的差异,并使用 GradientDescentOptimizerv4 类通过修改变换参数来迭代减少这种差异。我们的示例还包括使用 matplotlib 和 itkwidgets 可视化参数表面的示例代码以及用于优化梯度下降性能的示例超参数搜索。

import os

import sys

import itertools

from math import pi, sin, cos, sqrt

import matplotlib.pyplot as plt

import numpy as np

import itk

from itkwidgets import view

# 构造二个点集

# Generate two circles with a small offset

def make_circles(dimension: int = 2, offset: list = None):

PointSetType = itk.PointSet[itk.F, dimension]

RADIUS = 100

if not offset or len(offset) != dimension:

offset = [2.0] * dimension

fixed_points = PointSetType.New()

moving_points = PointSetType.New()

fixed_points.Initialize()

moving_points.Initialize()

count = 0

step = 0.1

for count in range(0, int(2 * pi / step) + 1):

theta = count * step

fixed_point = list()

fixed_point.append(RADIUS * cos(theta))

for dim in range(1, dimension):

fixed_point.append(RADIUS * sin(theta))

fixed_points.SetPoint(count, fixed_point)

moving_point = [fixed_point[dim] + offset[dim] for dim in range(0, dimension)]

moving_points.SetPoint(count, moving_point)

return fixed_points, moving_points

POINT_SET_OFFSET = [15.0, 15.0]

fixed_set, moving_set = make_circles(offset=POINT_SET_OFFSET)

# Visualize point sets with matplotlib

fig = plt.figure()

ax = plt.axes()

n_points = fixed_set.GetNumberOfPoints()

ax.scatter(

[fixed_set.GetPoint(i)[0] for i in range(0, n_points)],

[fixed_set.GetPoint(i)[1] for i in range(0, n_points)],

)

ax.scatter(

[moving_set.GetPoint(i)[0] for i in range(0, n_points)],

[moving_set.GetPoint(i)[1] for i in range(0, n_points)],

)

# 运行梯度下降优化

# 我们将使用 JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4 量化点集偏移,并在 10 次梯度下降迭代中最小化度量值。

ExhaustiveOptimizerType = itk.ExhaustiveOptimizerv4[itk.D]

dim = 2

# Define translation parameters to update iteratively

TransformType = itk.TranslationTransform[itk.D, dim]

transform = TransformType.New()

transform.SetIdentity()

PointSetType = type(fixed_set)

# Define a metric to reflect the difference between point sets

PointSetMetricType = itk.JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4[PointSetType]

metric = PointSetMetricType.New(

FixedPointSet=fixed_set,

MovingPointSet=moving_set,

MovingTransform=transform,

PointSetSigma=5.0,

KernelSigma=10.0,

UseAnisotropicCovariances=False,

CovarianceKNeighborhood=5,

EvaluationKNeighborhood=10,

Alpha=1.1,

)

metric.Initialize()

# Define an estimator to help determine step sizes along each transform parameter2

ShiftScalesType = itk.RegistrationParameterScalesFromPhysicalShift[PointSetMetricType]

shift_scale_estimator = ShiftScalesType.New(

Metric=metric, VirtualDomainPointSet=metric.GetVirtualTransformedPointSet(), TransformForward=True

)

max_iterations = 10

# Define the gradient descent optimzer

OptimizerType = itk.GradientDescentOptimizerv4Template[itk.D]

optimizer = OptimizerType.New(

Metric=metric,

NumberOfIterations=max_iterations,

ScalesEstimator=shift_scale_estimator,

MaximumStepSizeInPhysicalUnits=8.0,

MinimumConvergenceValue=-1,

DoEstimateLearningRateAtEachIteration=False,

DoEstimateLearningRateOnce=True,

ReturnBestParametersAndValue=True,

)

iteration_data = dict()

# Track gradient descent iterations with observers

def print_iteration():

print(

f"It: {optimizer.GetCurrentIteration()}"

f" metric value: {optimizer.GetCurrentMetricValue():.6f} "

# f' transform position: {list(optimizer.GetCurrentPosition())}'

f" learning rate: {optimizer.GetLearningRate()}"

)

def log_iteration():

iteration_data[optimizer.GetCurrentIteration() + 1] = list(optimizer.GetCurrentPosition())

optimizer.AddObserver(itk.AnyEvent(), print_iteration)

optimizer.AddObserver(itk.IterationEvent(), log_iteration)

# Set first value to default transform position

iteration_data[0] = list(optimizer.GetCurrentPosition())

# Run optimization and print out results

optimizer.StartOptimization()

print(f"Number of iterations: {optimizer.GetCurrentIteration() - 1}")

print(f"Moving-source final value: {optimizer.GetCurrentMetricValue()}")

print(f"Moving-source final position: {list(optimizer.GetCurrentPosition())}")

print(f"Optimizer scales: {list(optimizer.GetScales())}")

print(f"Optimizer learning rate: {optimizer.GetLearningRate()}")

print(f"Stop reason: {optimizer.GetStopConditionDescription()}")

# Resample Moving Point Set 重采样移动点集

moving_inverse = metric.GetMovingTransform().GetInverseTransform()

fixed_inverse = metric.GetFixedTransform().GetInverseTransform()

transformed_fixed_set = PointSetType.New()

transformed_moving_set = PointSetType.New()

for n in range(0, metric.GetNumberOfComponents()):

transformed_moving_point = moving_inverse.TransformPoint(moving_set.GetPoint(n))

transformed_moving_set.SetPoint(n, transformed_moving_point)

transformed_fixed_point = fixed_inverse.TransformPoint(fixed_set.GetPoint(n))

transformed_fixed_set.SetPoint(n, transformed_fixed_point)

# Compare fixed point set with resampled moving point set to see alignment

fig = plt.figure()

ax = plt.axes()

n_points = fixed_set.GetNumberOfPoints()

ax.scatter(

[fixed_set.GetPoint(i)[0] for i in range(0, n_points)],

[fixed_set.GetPoint(i)[1] for i in range(0, n_points)],

)

ax.scatter(

[transformed_moving_set.GetPoint(i)[0] for i in range(0, n_points)],

[transformed_moving_set.GetPoint(i)[1] for i in range(0, n_points)],

)

使用 matplotlib 可视化点集:

将固定点集与重采样移动点集进行比较以查看对齐情况:

Visualize Gradient Descent 可视化梯度下降

我们可以使用 ITK ExhaustiveOptimizerv4 类来查看优化器如何沿着变换参数和度量定义的表面移动。

# Set up the new optimizer

# Create a new transform and metric for analysis

transform = TransformType.New()

transform.SetIdentity()

metric = PointSetMetricType.New(

FixedPointSet=fixed_set,

MovingPointSet=moving_set,

MovingTransform=transform,

PointSetSigma=5,

KernelSigma=10.0,

UseAnisotropicCovariances=False,

CovarianceKNeighborhood=5,

EvaluationKNeighborhood=10,

Alpha=1.1,

)

metric.Initialize()

# Create a new observer to map out the parameter surface

optimizer.RemoveAllObservers()

optimizer = ExhaustiveOptimizerType.New(Metric=metric)

# Use observers to collect points on the surface

param_space = dict()

def log_exhaustive_iteration():

param_space[tuple(optimizer.GetCurrentPosition())] = optimizer.GetCurrentValue()

optimizer.AddObserver(itk.IterationEvent(), log_exhaustive_iteration)

# Collect a moderate number of steps along each transform parameter

step_count = 25

optimizer.SetNumberOfSteps([step_count, step_count])

# Step a reasonable distance along each transform parameter

scales = optimizer.GetScales()

scales.SetSize(2)

scale_size = 1.0

scales.SetElement(0, scale_size)

scales.SetElement(1, scale_size)

optimizer.SetScales(scales)

optimizer.StartOptimization()

print(

f"MinimumMetricValue: {optimizer.GetMinimumMetricValue():.4f}\t"

f"MaximumMetricValue: {optimizer.GetMaximumMetricValue():.4f}\n"

f"MinimumMetricValuePosition: {list(optimizer.GetMinimumMetricValuePosition())}\t"

f"MaximumMetricValuePosition: {list(optimizer.GetMaximumMetricValuePosition())}\n"

f"StopConditionDescription: {optimizer.GetStopConditionDescription()}\t"

)

# Reformat gradient descent data to overlay on the plot

descent_x_vals = [iteration_data[i][0] for i in range(0, len(iteration_data))]

descent_y_vals = [iteration_data[i][1] for i in range(0, len(iteration_data))]

# Plot the surface, extrema, and gradient descent data in a matplotlib scatter plot

fig = plt.figure()

ax = plt.axes()

ax.scatter(

[x for (x, y) in param_space.keys()],

[y for (x, y) in param_space.keys()],

c=list(param_space.values()),

cmap="Greens",

zorder=1,

)

ax.plot(

optimizer.GetMinimumMetricValuePosition()[0], optimizer.GetMinimumMetricValuePosition()[1], "kv"

)

ax.plot(

optimizer.GetMaximumMetricValuePosition()[0], optimizer.GetMaximumMetricValuePosition()[1], "w^"

)

for i in range(0, len(iteration_data)):

ax.plot(descent_x_vals[i : i + 2], descent_y_vals[i : i + 2], "rx-")

ax.plot(descent_x_vals[0], descent_y_vals[0], "ro")

ax.plot(descent_x_vals[len(iteration_data) - 1], descent_y_vals[len(iteration_data) - 1], "bo")

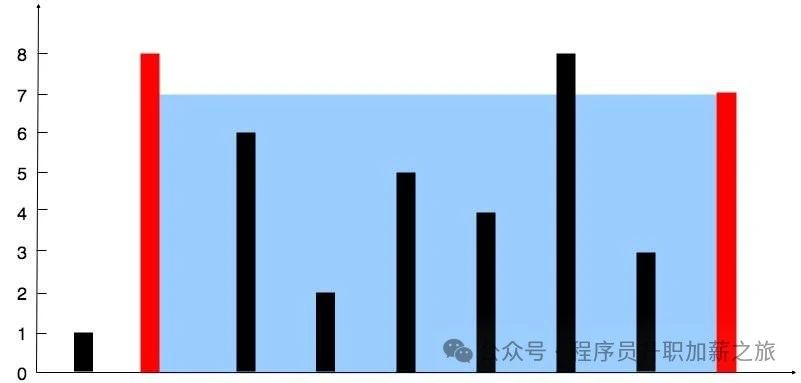

在 matplotlib 散点图中绘制表面、极值和梯度下降数据:

我们还可以使用 itkwidgets 查看表面并将其导出为图像.

x_vals = list(set(x for (x, y) in param_space.keys()))

y_vals = list(set(y for (x, y) in param_space.keys()))

x_vals.sort()

y_vals.sort(reverse=True)

array = np.array([[param_space[(x, y)] for x in x_vals] for y in y_vals])

image_view = itk.GetImageViewFromArray(array)

view(image_view)

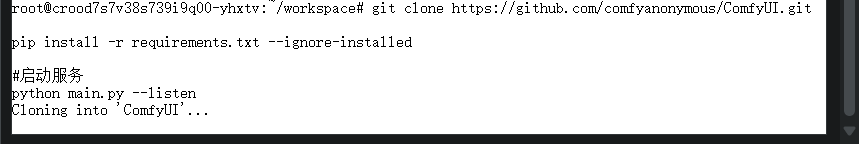

Hyperparameter Search 超参数搜索

为了找到具有不同变换、度量和优化器的适当结果,通常需要比较超参数变化的结果。在此示例中,需要评估 JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4.PointSetSigma 参数和 GradientDescentOptimizerv4.DoEstimateLearningRate 参数的不同值的性能。梯度下降迭代数据沿二维散点图绘制,以比较并选择产生最佳性能的超参数组合。

# Index values for gradient descent logging

FINAL_OPT_INDEX = 0

DESCENT_DATA_INDEX = 1

hyper_data = dict()

final_optimizers = dict()

# sigma must be sufficiently large to avoid negative entropy results

point_set_sigmas = (1.0, 2.5, 5.0, 10.0, 20.0, 50.0)

# Compare performance with repeated or one-time learning rate estimation

estimate_rates = [(False, False), (False, True), (True, False)]

# Run gradient descent optimization for each combination of hyperparameters

for trial_values in itertools.product(point_set_sigmas, estimate_rates):

hyper_data[trial_values] = dict()

(point_set_sigma, est_rate) = trial_values

fixed_set, moving_set = make_circles(offset=POINT_SET_OFFSET)

transform = TransformType.New()

transform.SetIdentity()

metric = PointSetMetricType.New(

FixedPointSet=fixed_set,

MovingPointSet=moving_set,

PointSetSigma=point_set_sigma,

KernelSigma=10.0,

UseAnisotropicCovariances=False,

CovarianceKNeighborhood=5,

EvaluationKNeighborhood=10,

MovingTransform=transform,

Alpha=1.1,

)

shift_scale_estimator = ShiftScalesType.New(

Metric=metric, VirtualDomainPointSet=metric.GetVirtualTransformedPointSet()

)

metric.Initialize()

optimizer = OptimizerType.New(

Metric=metric,

NumberOfIterations=100,

MaximumStepSizeInPhysicalUnits=3.0,

MinimumConvergenceValue=-1,

DoEstimateLearningRateOnce=est_rate[0],

DoEstimateLearningRateAtEachIteration=est_rate[1],

LearningRate=1e6, # Ignored if either est_rate argument is True

ReturnBestParametersAndValue=False,

)

optimizer.SetScalesEstimator(shift_scale_estimator)

def log_hyper_iteration_data():

hyper_data[trial_values][optimizer.GetCurrentIteration()] = round(

optimizer.GetCurrentMetricValue(), 8

)

optimizer.AddObserver(itk.IterationEvent(), log_hyper_iteration_data)

optimizer.StartOptimization()

final_optimizers[trial_values] = optimizer

# Print results for each set of hyperparameters

print("PS_sigma\test once/each:\tfinal index\tfinal metric")

for trial_values in itertools.product(point_set_sigmas, estimate_rates):

print(

f"{trial_values[0]}\t\t{trial_values[1]}:\t\t"

f"{final_optimizers[trial_values].GetCurrentIteration()}\t"

f"{final_optimizers[trial_values].GetCurrentMetricValue():10.8f}"

)

我们可以使用 matplotlib 子图和条形图来比较每组超参数的梯度下降性能和最终度量值。在这个例子中,我们看到,一次估计学习率通常会随着时间的推移带来最佳性能,而每次迭代都估计学习率可能会阻止梯度下降有效收敛。提供最佳和最一致度量值的超参数集是 PointSetSigma=5.0 和 DoEstimateLearningRateOnce=True,这是我们在本笔记本中使用的值。

# Visualize metric over gradient descent iterations as matplotlib subplots.

f, axn = plt.subplots(len(point_set_sigmas), len(estimate_rates), sharex=True)

for (i, j) in [(i, j) for i in range(0, len(point_set_sigmas)) for j in range(0, len(estimate_rates))]:

axn[i, j].scatter(

x=list(hyper_data[point_set_sigmas[i], estimate_rates[j]].keys())[1:],

y=list(hyper_data[point_set_sigmas[i], estimate_rates[j]].values())[1:],

)

axn[i, j].set_title(f"sigma={point_set_sigmas[i]},est={estimate_rates[j]}")

axn[i, j].set_ylim(-0.08, 0)

plt.subplots_adjust(top=5, bottom=1, right=5)

plt.show()

# Compare final metric magnitudes

fig = plt.figure()

ax = fig.gca()

labels = [

f'{round(sig,0)}{"T" if est0 else "F"}{"T" if est1 else "F"}'

for (sig, (est0, est1)) in itertools.product(point_set_sigmas, estimate_rates)

]

vals = [

final_optimizers[trial_values].GetCurrentMetricValue()

for trial_values in itertools.product(point_set_sigmas, estimate_rates)

]

ax.bar(labels, vals)

plt.show()

将梯度下降迭代中的度量可视化为 matplotlib 子图:

比较最终的度量值:

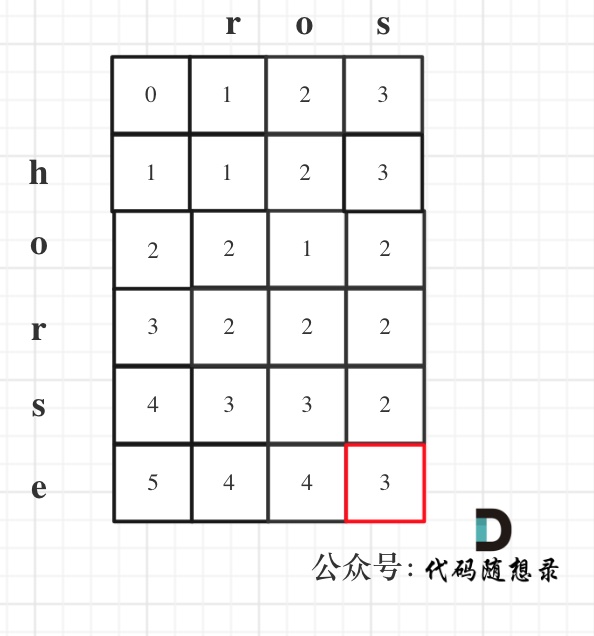

JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4类说明

url : https://itk.org/Doxygen/html/classitk_1_1JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4.html

Jensen Havrda Charvat Tsallis 点集度量的实现。

给定指定的变换和方向,此类使用 Havrda-Charvat-Tsallis 熵族(众所周知的香农熵的泛化)和 Jensen 散度计算“固定”和“移动”点集对之间的值和导数。另一种看待信息理论度量族的方式是,这些点用于构建相应的可能密度函数。

此外,我们允许用户调用数据的流形 parzen 窗口。我们实际上可以计算每个点的协方差矩阵,以反映定位点集结构,而不是将各向同性高斯与每个点相关联。

为了加快度量计算速度,我们使用 ITK 的 K-d 树仅查询给定邻域的度量值。考虑到可能只需要一小部分点就可以很好地近似单个点的度量值,这可能是有道理的。所以我们要做的是转换每个点(使用指定的变换)并从转换后的点构建 k-d 树。

由 Nicholas J. Tustison、James C. Gee 在 Insight Journal 论文中贡献:https://www.insight-journal.org/browse/publication/317

注意

Tustison 等人在 2011 年报告的原始工作可选地采用了正则化项来防止移动点集合并到单个点位置。然而,在配准框架内,该术语的实用性有限,因为这种正则化由变换和任何显式正则化项决定。还请注意,已发表的工作适用于多个点集,每个点集都可以被视为“移动”,但这也不适用于此特定实现。