Contents

1 Overview

2 Data structures

2.1 block_device_operations

2.2 gendisk

2.3 block_device

2.4 request_queue

2.5 request

2.6 bio

2.7 blk_mq_tag_set

2.8 blk_mq_ops

3 Related functions

3.1 Registering and unregistering a block device

3.1.1 register_blkdev

3.1.2 unregister_blkdev

3.2 gendisk operations

3.2.1 alloc_disk

3.2.2 set_capacity

3.2.3 add_disk

3.2.4 del_gendisk

3.3 Block device I/O requests

3.3.1 blk_mq_alloc_tag_set

3.3.2 blk_mq_free_tag_set

3.3.3 blk_mq_init_queue

3.3.4 blk_cleanup_queue

3.4 Requests

3.4.1 blk_mq_start_request

3.4.2 blk_mq_end_request

3.5 Data operations

3.5.1 bio_data

3.5.2 rq_data_dir

4 Experiment

4.1 Code

4.2 Procedure

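1 Overview

Block devices are storage devices: SD cards, eMMC, NAND flash, NOR flash, SPI flash, hard disks, SSDs and so on. A block device driver is therefore a driver for one of these storage devices. Compared with a character device driver, the main differences are:

① A block device is read and written only in units of blocks; the block is the basic unit of data transfer for the Linux virtual file system (VFS). A character device transfers data byte by byte and needs no buffering.

② A block device supports random access, and every read or write covers whole blocks. Block devices use buffers to hold data temporarily and write it out to the device in one go once conditions are right. One benefit is a longer device life: some disks and NAND flash parts are rated for a limited number of erase cycles, for example 100000 (flash has to be erased before it can be written). Collecting writes in a buffer and flushing them together reduces the number of erases. Buffering also reduces the number of slow device accesses, which helps throughput.

A character device is a sequential stream of data that is accessed byte by byte. It needs no buffer, accesses take effect immediately, and there is no fixed block size.

The I/O algorithm depends on the structure of the device. eMMC, SD cards and NAND flash have no moving parts, so any sector (the physical storage unit of a block device) can be accessed in any order. On a hard disk the head has to move to reach data on a different platter or track, so arranging scattered accesses into a sensible order can improve performance considerably. Linux provides several I/O scheduling algorithms to suit different storage devices.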
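To make the benefit of reordering concrete, here is a minimal user-space sketch (not kernel code) that compares how far a disk head would travel when requests are served in arrival order and when they are first sorted by sector. The function names `head_travel` and `sort_by_sector` are made up for this illustration, and the model ignores everything a real I/O scheduler also considers (fairness, deadlines, merging).

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Total distance the head travels when the sectors are visited in array
 * order, starting from sector `start`. Purely illustrative model. */
uint64_t head_travel(uint64_t start, const uint64_t *sectors, size_t n)
{
    uint64_t pos = start, total = 0;

    for (size_t i = 0; i < n; i++) {
        total += sectors[i] > pos ? sectors[i] - pos : pos - sectors[i];
        pos = sectors[i];
    }
    return total;
}

/* qsort comparator: ascending sector number. */
static int cmp_sector(const void *a, const void *b)
{
    uint64_t x = *(const uint64_t *)a, y = *(const uint64_t *)b;

    return (x > y) - (x < y);
}

/* Reorder the pending requests so the head sweeps in one direction. */
void sort_by_sector(uint64_t *sectors, size_t n)
{
    assert(sectors != NULL || n == 0);
    if (n > 1)
        qsort(sectors, n, sizeof(*sectors), cmp_sector);
}
```

For the queue {900, 10, 800, 20} with the head at sector 0, arrival order costs 3360 sectors of travel, while the sorted order costs 900.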

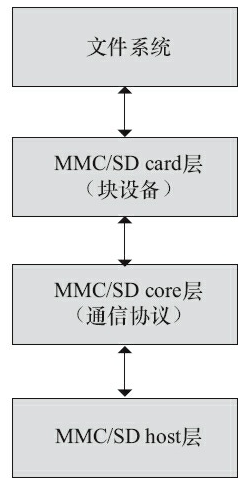

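An MMC/SD card is a typical block device. Its implementation lives under drivers/mmc, which in the kernels described here is split into three subdirectories: card, core and host. card connects to the Linux block subsystem: it implements the block device driver and completes requests. The protocol itself is handled through the interfaces of the core layer, and the actual transfer is carried out by host, which gives the MMC subsystem the layered framework shown in the figure. Besides standard MMC/SD storage cards, the card directory also contains drivers for some SDIO peripherals, such as drivers/mmc/card/sdio_uart.c. In addition to providing interfaces to card, core also defines the framework for host drivers. In more recent kernels the card directory no longer exists and its files live under core.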

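2 Data structures

2.1 block_device_operations

struct block_device_operations defines a set of function pointers for operating on a block device. They implement opening, releasing, ioctl handling and similar operations, so that different block devices interact with the kernel through one uniform interface. Note that ordinary reads and writes do not go through this structure; they reach the driver through the request queue described in 2.4.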

    struct block_device_operations {
        int (*open) (struct block_device *, fmode_t);
        void (*release) (struct gendisk *, fmode_t);
        int (*rw_page)(struct block_device *, sector_t, struct page *, unsigned int);
        int (*ioctl) (struct block_device *, fmode_t, unsigned, unsigned long);
        int (*compat_ioctl) (struct block_device *, fmode_t, unsigned, unsigned long);
        unsigned int (*check_events) (struct gendisk *disk,
                                      unsigned int clearing);
        /* ->media_changed() is DEPRECATED, use ->check_events() instead */
        int (*media_changed) (struct gendisk *);
        void (*unlock_native_capacity) (struct gendisk *);
        int (*revalidate_disk) (struct gendisk *);
        int (*getgeo)(struct block_device *, struct hd_geometry *);
        /* this callback is with swap_lock and sometimes page table lock held */
        void (*swap_slot_free_notify) (struct block_device *, unsigned long);
        struct module *owner;
        const struct pr_ops *pr_ops;
    };

int (*open) (struct block_device *, fmode_t);

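Callback for opening the block device. It receives a pointer to the block device and the open mode, and returns 0 on success or a negative error code.

void (*release) (struct gendisk *, fmode_t);

Callback for releasing the block device. It is called when the device is closed and cleans up the associated resources.

int (*rw_page)(struct block_device *, sector_t, struct page *, unsigned int);

Reads or writes a single page, providing page-level I/O.

int (*ioctl) (struct block_device *, fmode_t, unsigned, unsigned long);

Handles device-specific control requests from user-space programs.

int (*compat_ioctl) (struct block_device *, fmode_t, unsigned, unsigned long);

Handles ioctl requests issued by 32-bit user-space programs running on a 64-bit kernel.

unsigned int (*check_events) (struct gendisk *disk, unsigned int clearing);

Checks for device events such as a media change. It replaces media_changed.

int (*media_changed) (struct gendisk *);

Deprecated. It detected whether the medium had changed and has been superseded by check_events.

void (*unlock_native_capacity) (struct gendisk *);

Unlocks the native capacity of the device, so that space the device normally hides becomes accessible.

int (*revalidate_disk) (struct gendisk *);

Revalidates the state of the block device. It is usually called when the device state may have changed.

int (*getgeo)(struct block_device *, struct hd_geometry *);

Returns the disk geometry: the number of heads, sectors per track and cylinders, and the start offset.

void (*swap_slot_free_notify) (struct block_device *, unsigned long);

Notification that a swap slot on this device has been freed. It is called from the memory management code with swap_lock held.

struct module *owner;

Pointer to the module that owns these operations, used to manage the module reference count.

const struct pr_ops *pr_ops;

Pointer to the persistent reservation operations of the device.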

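2.2 gendisk

The kernel uses the gendisk (generic disk) structure to represent one independent disk device. The partitions on the disk are reached through the partition table it holds.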

    struct gendisk {
        /* major, first_minor and minors are input parameters only,
         * don't use directly. Use disk_devt() and disk_max_parts().
         */
        int major; /* major number of driver */
        int first_minor;
        int minors; /* maximum number of minors, =1 for
                     * disks that can't be partitioned. */

        char disk_name[DISK_NAME_LEN]; /* name of major driver */
        char *(*devnode)(struct gendisk *gd, umode_t *mode);

        unsigned int events; /* supported events */
        unsigned int async_events; /* async events, subset of all */

        /* Array of pointers to partitions indexed by partno.
         * Protected with matching bdev lock but stat and other
         * non-critical accesses use RCU. Always access through
         * helpers.
         */
        struct disk_part_tbl __rcu *part_tbl;
        struct hd_struct part0;

        const struct block_device_operations *fops;
        struct request_queue *queue;
        void *private_data;

        int flags;
        struct rw_semaphore lookup_sem;
        struct kobject *slave_dir;

        struct timer_rand_state *random;
        atomic_t sync_io; /* RAID */
        struct disk_events *ev;
    #ifdef CONFIG_BLK_DEV_INTEGRITY
        struct kobject integrity_kobj;
    #endif /* CONFIG_BLK_DEV_INTEGRITY */
        int node_id;
        struct badblocks *bb;
        struct lockdep_map lockdep_map;

        ANDROID_KABI_RESERVE(1);
        ANDROID_KABI_RESERVE(2);
        ANDROID_KABI_RESERVE(3);
        ANDROID_KABI_RESERVE(4);

    };

major: major device number of the driver.

first_minor: first minor device number.

minors: maximum number of minor numbers. It is 1 for a disk that cannot be partitioned.

disk_name: name of the disk (for example "sda").

devnode: function pointer that generates the device node name.

events: bitmask of the events the device supports.

async_events: the subset of events that are reported asynchronously.

part_tbl: pointer to the partition table, an array of partition pointers indexed by partition number. It is protected by the bdev lock.

part0: partition 0, which represents the whole disk.

fops: pointer to the block_device_operations of the device.

queue: request queue used to process I/O requests.

private_data: pointer to driver-private data.

flags: flag bits describing the state of the disk (GENHD_FL_*).

lookup_sem: read-write semaphore that synchronizes lookups of the disk.

slave_dir: kobject pointer associated with the disk.

random: timer randomness state of the disk.

sync_io: atomic counter used by RAID.

ev: pointer to the disk events structure.

integrity_kobj: kobject for data integrity, present only with CONFIG_BLK_DEV_INTEGRITY.

node_id: ID of the node the disk was allocated on (alloc_disk passes NUMA_NO_NODE).

bb: pointer to the bad block list.

lockdep_map: structure used by the lock dependency checker.
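The major and first_minor fields are combined into a single device number. As a rough user-space model of that encoding, the sketch below packs a major and minor number the way the kernel's internal dev_t does, with the minor in the low 20 bits. The `model_` names are invented for this example, and the assumption that partition numbers map to consecutive minors starting at first_minor is a simplification.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_MINORBITS 20u
#define MODEL_MINORMASK ((1u << MODEL_MINORBITS) - 1u)

/* Pack major and minor into one 32-bit device number:
 * 12 bits of major above 20 bits of minor. */
uint32_t model_mkdev(uint32_t major, uint32_t minor)
{
    assert(major < (1u << 12) && minor <= MODEL_MINORMASK);
    return (major << MODEL_MINORBITS) | minor;
}

/* Extract the major number from a packed device number. */
uint32_t model_major(uint32_t dev)
{
    return dev >> MODEL_MINORBITS;
}

/* Extract the minor number from a packed device number. */
uint32_t model_minor(uint32_t dev)
{
    return dev & MODEL_MINORMASK;
}

/* Device number of partition `partno` on a disk, where partno 0 is the
 * whole disk and minors are assumed to be handed out consecutively. */
uint32_t model_part_devt(uint32_t major, uint32_t first_minor, uint32_t partno)
{
    return model_mkdev(major, first_minor + partno);
}
```

With major 8 and first_minor 16, partition 1 gets minor 17 under this model.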

2.3 block_device

The kernel uses block_device to represent one concrete block device object, such as a whole disk or a partition.

    struct block_device {
        dev_t bd_dev; /* not a kdev_t - it's a search key */
        int bd_openers;
        struct inode * bd_inode; /* will die */
        struct super_block * bd_super;
        struct mutex bd_mutex; /* open/close mutex */
        void * bd_claiming;
        void * bd_holder;
        int bd_holders;
        bool bd_write_holder;
    #ifdef CONFIG_SYSFS
        struct list_head bd_holder_disks;
    #endif
        struct block_device * bd_contains;
        unsigned bd_block_size;
        u8 bd_partno;
        struct hd_struct * bd_part;
        /* number of times partitions within this device have been opened. */
        unsigned bd_part_count;
        int bd_invalidated;
        struct gendisk * bd_disk;
        struct request_queue * bd_queue;
        struct backing_dev_info *bd_bdi;
        struct list_head bd_list;
        /*
         * Private data. You must have bd_claim'ed the block_device
         * to use this. NOTE: bd_claim allows an owner to claim
         * the same device multiple times, the owner must take special
         * care to not mess up bd_private for that case.
         */
        unsigned long bd_private;

        /* The counter of freeze processes */
        int bd_fsfreeze_count;
        /* Mutex for freeze */
        struct mutex bd_fsfreeze_mutex;

        ANDROID_KABI_RESERVE(1);
        ANDROID_KABI_RESERVE(2);
        ANDROID_KABI_RESERVE(3);
        ANDROID_KABI_RESERVE(4);
    } __randomize_layout;

2.4 request_queue

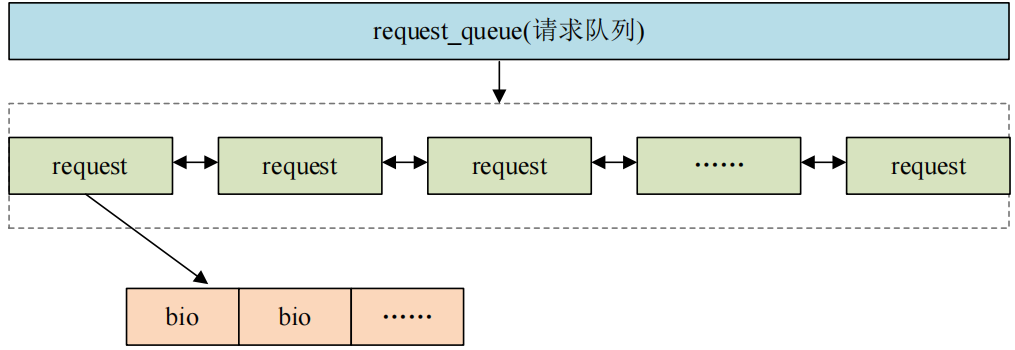

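The kernel sends every read and write of a block device to a request queue, request_queue. The queue holds a series of request structures, each request contains bios, and the bios carry the data to be read or written.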

    struct request_queue {
        /*
         * Together with queue_head for cacheline sharing
         */
        struct list_head queue_head;
        struct request *last_merge;
        struct elevator_queue *elevator;
        int nr_rqs[2]; /* # allocated [a]sync rqs */
        int nr_rqs_elvpriv; /* # allocated rqs w/ elvpriv */

        struct blk_queue_stats *stats;
        struct rq_qos *rq_qos;

        /*
         * If blkcg is not used, @q->root_rl serves all requests. If blkcg
         * is used, root blkg allocates from @q->root_rl and all other
         * blkgs from their own blkg->rl. Which one to use should be
         * determined using bio_request_list().
         */
        struct request_list root_rl;

        request_fn_proc *request_fn;
        make_request_fn *make_request_fn;
        poll_q_fn *poll_fn;
        prep_rq_fn *prep_rq_fn;
        unprep_rq_fn *unprep_rq_fn;
        softirq_done_fn *softirq_done_fn;
        rq_timed_out_fn *rq_timed_out_fn;
        dma_drain_needed_fn *dma_drain_needed;
        lld_busy_fn *lld_busy_fn;
        /* Called just after a request is allocated */
        init_rq_fn *init_rq_fn;
        /* Called just before a request is freed */
        exit_rq_fn *exit_rq_fn;
        /* Called from inside blk_get_request() */
        void (*initialize_rq_fn)(struct request *rq);

        const struct blk_mq_ops *mq_ops;

        unsigned int *mq_map;

        /* sw queues */
        struct blk_mq_ctx __percpu *queue_ctx;
        unsigned int nr_queues;

        unsigned int queue_depth;

        /* hw dispatch queues */
        struct blk_mq_hw_ctx **queue_hw_ctx;
        unsigned int nr_hw_queues;

        /*
         * Dispatch queue sorting
         */
        sector_t end_sector;
        struct request *boundary_rq;

        /*
         * Delayed queue handling
         */
        struct delayed_work delay_work;

        struct backing_dev_info *backing_dev_info;

        /*
         * The queue owner gets to use this for whatever they like.
         * ll_rw_blk doesn't touch it.
         */
        void *queuedata;

        /*
         * various queue flags, see QUEUE_* below
         */
        unsigned long queue_flags;
        /*
         * Number of contexts that have called blk_set_pm_only(). If this
         * counter is above zero then only RQF_PM and RQF_PREEMPT requests are
         * processed.
         */
        atomic_t pm_only;

        /*
         * ida allocated id for this queue. Used to index queues from
         * ioctx.
         */
        int id;

        /*
         * queue needs bounce pages for pages above this limit
         */
        gfp_t bounce_gfp;

        /*
         * protects queue structures from reentrancy. ->__queue_lock should
         * _never_ be used directly, it is queue private. always use
         * ->queue_lock.
         */
        spinlock_t __queue_lock;
        spinlock_t *queue_lock;

        /*
         * queue kobject
         */
        struct kobject kobj;

        /*
         * mq queue kobject
         */
        struct kobject mq_kobj;

    #ifdef CONFIG_BLK_DEV_INTEGRITY
        struct blk_integrity integrity;
    #endif /* CONFIG_BLK_DEV_INTEGRITY */

    #ifdef CONFIG_PM
        struct device *dev;
        int rpm_status;
        unsigned int nr_pending;
    #endif

        /*
         * queue settings
         */
        unsigned long nr_requests; /* Max # of requests */
        unsigned int nr_congestion_on;
        unsigned int nr_congestion_off;
        unsigned int nr_batching;

        unsigned int dma_drain_size;
        void *dma_drain_buffer;
        unsigned int dma_pad_mask;
        unsigned int dma_alignment;

        struct blk_queue_tag *queue_tags;

        unsigned int nr_sorted;
        unsigned int in_flight[2];

        /*
         * Number of active block driver functions for which blk_drain_queue()
         * must wait. Must be incremented around functions that unlock the
         * queue_lock internally, e.g. scsi_request_fn().
         */
        unsigned int request_fn_active;
    #ifdef CONFIG_BLK_INLINE_ENCRYPTION
        /* Inline crypto capabilities */
        struct keyslot_manager *ksm;
    #endif

        unsigned int rq_timeout;
        int poll_nsec;

        struct blk_stat_callback *poll_cb;
        struct blk_rq_stat poll_stat[BLK_MQ_POLL_STATS_BKTS];

        struct timer_list timeout;
        struct work_struct timeout_work;
        struct list_head timeout_list;

        struct list_head icq_list;
    #ifdef CONFIG_BLK_CGROUP
        DECLARE_BITMAP (blkcg_pols, BLKCG_MAX_POLS);
        struct blkcg_gq *root_blkg;
        struct list_head blkg_list;
    #endif

        struct queue_limits limits;

    #ifdef CONFIG_BLK_DEV_ZONED
        /*
         * Zoned block device information for request dispatch control.
         * nr_zones is the total number of zones of the device. This is always
         * 0 for regular block devices. seq_zones_bitmap is a bitmap of nr_zones
         * bits which indicates if a zone is conventional (bit clear) or
         * sequential (bit set). seq_zones_wlock is a bitmap of nr_zones
         * bits which indicates if a zone is write locked, that is, if a write
         * request targeting the zone was dispatched. All three fields are
         * initialized by the low level device driver (e.g. scsi/sd.c).
         * Stacking drivers (device mappers) may or may not initialize
         * these fields.
         *
         * Reads of this information must be protected with blk_queue_enter() /
         * blk_queue_exit(). Modifying this information is only allowed while
         * no requests are being processed. See also blk_mq_freeze_queue() and
         * blk_mq_unfreeze_queue().
         */
        unsigned int nr_zones;
        unsigned long *seq_zones_bitmap;
        unsigned long *seq_zones_wlock;
    #endif /* CONFIG_BLK_DEV_ZONED */

        /*
         * sg stuff
         */
        unsigned int sg_timeout;
        unsigned int sg_reserved_size;
        int node;
    #ifdef CONFIG_BLK_DEV_IO_TRACE
        struct blk_trace __rcu *blk_trace;
        struct mutex blk_trace_mutex;
    #endif
        /*
         * for flush operations
         */
        struct blk_flush_queue *fq;

        struct list_head requeue_list;
        spinlock_t requeue_lock;
        struct delayed_work requeue_work;

        struct mutex sysfs_lock;

        int bypass_depth;
        atomic_t mq_freeze_depth;

        bsg_job_fn *bsg_job_fn;
        struct bsg_class_device bsg_dev;

    #ifdef CONFIG_BLK_DEV_THROTTLING
        /* Throttle data */
        struct throtl_data *td;
    #endif
        struct rcu_head rcu_head;
        wait_queue_head_t mq_freeze_wq;
        struct percpu_ref q_usage_counter;
        struct list_head all_q_node;

        struct blk_mq_tag_set *tag_set;
        struct list_head tag_set_list;
        struct bio_set bio_split;

    #ifdef CONFIG_BLK_DEBUG_FS
        struct dentry *debugfs_dir;
        struct dentry *sched_debugfs_dir;
    #endif

        bool mq_sysfs_init_done;

        size_t cmd_size;
        void *rq_alloc_data;

        struct work_struct release_work;

    #define BLK_MAX_WRITE_HINTS 5
        u64 write_hints[BLK_MAX_WRITE_HINTS];
    };

2.5 request

    struct request {
        struct request_queue *q;
        struct blk_mq_ctx *mq_ctx;

        int cpu;
        unsigned int cmd_flags; /* op and common flags */
        req_flags_t rq_flags;

        int internal_tag;

        /* the following two fields are internal, NEVER access directly */
        unsigned int __data_len; /* total data len */
        int tag;
        sector_t __sector; /* sector cursor */

        struct bio *bio;
        struct bio *biotail;

        struct list_head queuelist;

        /*
         * The hash is used inside the scheduler, and killed once the
         * request reaches the dispatch list. The ipi_list is only used
         * to queue the request for softirq completion, which is long
         * after the request has been unhashed (and even removed from
         * the dispatch list).
         */
        union {
            struct hlist_node hash; /* merge hash */
            struct list_head ipi_list;
        };

        /*
         * The rb_node is only used inside the io scheduler, requests
         * are pruned when moved to the dispatch queue. So let the
         * completion_data share space with the rb_node.
         */
        union {
            struct rb_node rb_node; /* sort/lookup */
            struct bio_vec special_vec;
            void *completion_data;
            int error_count; /* for legacy drivers, don't use */
        };

        /*
         * Three pointers are available for the IO schedulers, if they need
         * more they have to dynamically allocate it. Flush requests are
         * never put on the IO scheduler. So let the flush fields share
         * space with the elevator data.
         */
        union {
            struct {
                struct io_cq *icq;
                void *priv[2];
            } elv;

            struct {
                unsigned int seq;
                struct list_head list;
                rq_end_io_fn *saved_end_io;
            } flush;
        };

        struct gendisk *rq_disk;
        struct hd_struct *part;
        /* Time that I/O was submitted to the kernel. */
        u64 start_time_ns;
        /* Time that I/O was submitted to the device. */
        u64 io_start_time_ns;

    #ifdef CONFIG_BLK_WBT
        unsigned short wbt_flags;
    #endif
    #ifdef CONFIG_BLK_DEV_THROTTLING_LOW
        unsigned short throtl_size;
    #endif

        /*
         * Number of scatter-gather DMA addr+len pairs after
         * physical address coalescing is performed.
         */
        unsigned short nr_phys_segments;

    #if defined(CONFIG_BLK_DEV_INTEGRITY)
        unsigned short nr_integrity_segments;
    #endif

        unsigned short write_hint;
        unsigned short ioprio;

        void *special; /* opaque pointer available for LLD use */

        unsigned int extra_len; /* length of alignment and padding */

        enum mq_rq_state state;
        refcount_t ref;

        unsigned int timeout;

        /* access through blk_rq_set_deadline, blk_rq_deadline */
        unsigned long __deadline;

        struct list_head timeout_list;

        union {
            struct __call_single_data csd;
            u64 fifo_time;
        };

        /*
         * completion callback.
         */
        rq_end_io_fn *end_io;
        void *end_io_data;

        /* for bidi */
        struct request *next_rq;

    #ifdef CONFIG_BLK_CGROUP
        struct request_list *rl; /* rl this rq is alloced from */
    #endif
    };

2.6 bio

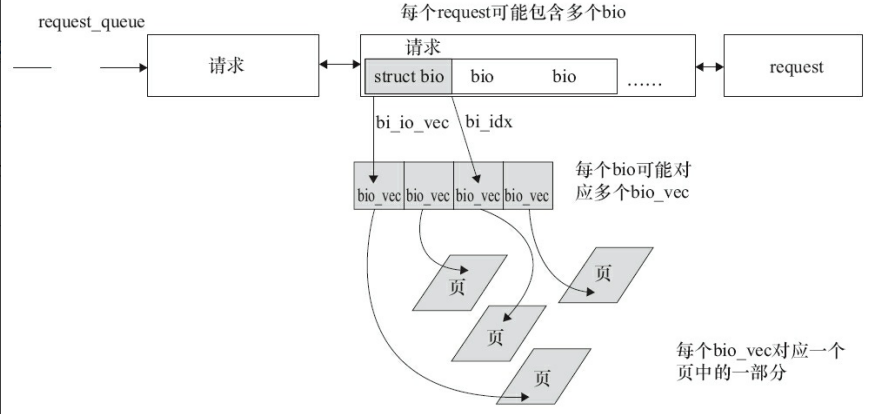

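Each request contains one or more bios, and a bio holds the data, addresses and other information that will finally be read or written. Reads and writes issued by upper layers are turned into one or more bio structures. A bio describes the starting sector, the number of sectors, the direction (read or write), the page offset, the data length and so on. The upper layer submits the bio to the I/O scheduler, which builds request structures from bios; request_queue holds a series of requests in order. A new bio may be merged into a request that is already in the request_queue, or it may produce a new request that is inserted at a suitable position in the queue. All of this is done by the I/O scheduler.

The relationship between request_queue, request and bio is shown in the figure.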

146 struct bio {

147 struct bio *bi_next; /* request queue link */

148 struct gendisk *bi_disk;

149 unsigned int bi_opf; /* bottom bits req flags,

150 * top bits REQ_OP. Use

151 * accessors.

152 */

153 unsigned short bi_flags; /* status, etc and bvec pool number */

154 unsigned short bi_ioprio;

155 unsigned short bi_write_hint;

156 blk_status_t bi_status;

157 u8 bi_partno;

158

159 /* Number of segments in this BIO after

160 * physical address coalescing is performed.

161 */

162 unsigned int bi_phys_segments;

163

164 /*

165 * To keep track of the max segment size, we account for the

166 * sizes of the first and last mergeable segments in this bio.

167 */

168 unsigned int bi_seg_front_size;

169 unsigned int bi_seg_back_size;

170

171 struct bvec_iter bi_iter;

172

173 atomic_t __bi_remaining;

174 bio_end_io_t *bi_end_io;

175

176 void *bi_private;

177 #ifdef CONFIG_BLK_CGROUP

178 /*

179 * Optional ioc and css associated with this bio. Put on bio

180 * release. Read comment on top of bio_associate_current().

181 */

182 struct io_context *bi_ioc;

183 struct cgroup_subsys_state *bi_css;

184 struct blkcg_gq *bi_blkg;

185 struct bio_issue bi_issue;

186 #endif

187

188 #ifdef CONFIG_BLK_INLINE_ENCRYPTION

189 struct bio_crypt_ctx *bi_crypt_context;

190 #if IS_ENABLED(CONFIG_DM_DEFAULT_KEY)

191 bool bi_skip_dm_default_key;

192 #endif

193 #endif

194

195 union {

196 #if defined(CONFIG_BLK_DEV_INTEGRITY)

197 struct bio_integrity_payload *bi_integrity; /* data integrity */

198 #endif

199 };

200

201 unsigned short bi_vcnt; /* how many bio_vec's */

202

203 /*

204 * Everything starting with bi_max_vecs will be preserved by bio_reset()

205 */

206

207 unsigned short bi_max_vecs; /* max bvl_vecs we can hold */

208

209 atomic_t __bi_cnt; /* pin count */

210

211 struct bio_vec *bi_io_vec; /* the actual vec list */

212

213 struct bio_set *bi_pool;

214

215 ktime_t bi_alloc_ts; /* for mm_event */

216

217 ANDROID_KABI_RESERVE(1);

218 ANDROID_KABI_RESERVE(2);

219

220 /*

221 * We can inline a number of vecs at the end of the bio, to avoid

222 * double allocations for a small number of bio_vecs. This member

223 * MUST obviously be kept at the very end of the bio.

224 */

225 struc

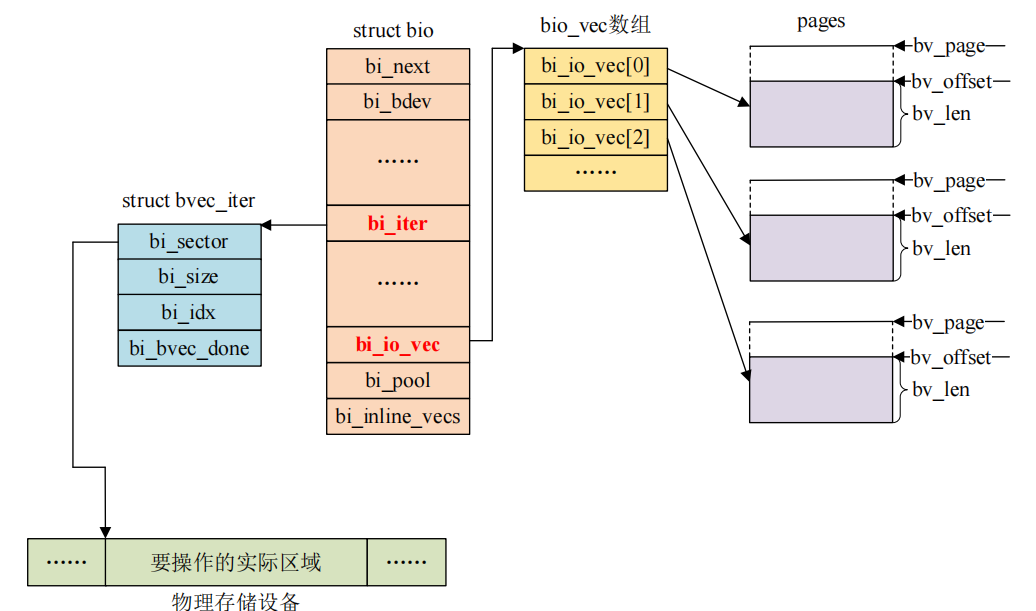

226 };bvec_iter 结构体描述了要操作的设备扇区等信息

36 struct bvec_iter {

37 sector_t bi_sector; /* device address in 512 byte

38 sectors */

39 unsigned int bi_size; /* residual I/O count */

40

41 unsigned int bi_idx; /* current index into bvl_vec */

42

43 unsigned int bi_done; /* number of bytes completed */

44

45 unsigned int bi_bvec_done; /* number of bytes completed in

46 current bvec */

47 }; bio_vec结构体用来描述与这个bio请求对应的所有的内存,它可能不总是在一个页面里面,因此需要一个向量,向量中的每个元素实际是一个[page,offset,len],我们一般也称它为一个片段

30 struct bio_vec {

31 struct page *bv_page;

32 unsigned int bv_len;

33 unsigned int bv_offset;

34 }; bio、bvec_iter 以及 bio_vec 这三个结构体之间的关系如图
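To show how the segment vector and the starting sector fit together, here is a small user-space model. `struct model_bio_vec` and `struct model_bio` are simplified, hypothetical stand-ins for bio_vec and bio (the real iteration in the kernel goes through bvec_iter and helper macros); the sketch only walks the segments to total the payload and to compute where the transfer ends on the device.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MODEL_SECTOR_SHIFT 9 /* 512-byte sectors */

/* Simplified stand-in for struct bio_vec: one [page, len, offset] segment. */
struct model_bio_vec {
    void *page;           /* page holding the data (unused in this model) */
    unsigned int len;     /* bytes in this segment */
    unsigned int offset;  /* byte offset of the data inside the page */
};

/* Simplified stand-in for struct bio. */
struct model_bio {
    uint64_t sector;                    /* start sector, like bi_iter.bi_sector */
    unsigned short vcnt;                /* number of segments, like bi_vcnt */
    const struct model_bio_vec *io_vec; /* segment array, like bi_io_vec */
};

/* Total payload of the bio in bytes: the sum of all segment lengths. */
unsigned int model_bio_bytes(const struct model_bio *bio)
{
    unsigned int total = 0;

    assert(bio != NULL);
    for (unsigned short i = 0; i < bio->vcnt; i++)
        total += bio->io_vec[i].len;
    return total;
}

/* First sector after the region this bio covers on the device. */
uint64_t model_bio_end_sector(const struct model_bio *bio)
{
    return bio->sector + (model_bio_bytes(bio) >> MODEL_SECTOR_SHIFT);
}
```

A bio that starts at sector 100 with a 4096-byte segment and a 512-byte segment carries 4608 bytes and ends just before sector 109.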

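2.7 blk_mq_tag_set

This structure is the core of multi-queue (blk-mq) support. It carries the information needed to manage request tags, which lets a device process concurrent I/O efficiently.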

    struct blk_mq_tag_set {
        unsigned int *mq_map;
        const struct blk_mq_ops *ops;
        unsigned int nr_hw_queues;
        unsigned int queue_depth; /* max hw supported */
        unsigned int reserved_tags;
        unsigned int cmd_size; /* per-request extra data */
        int numa_node;
        unsigned int timeout;
        unsigned int flags; /* BLK_MQ_F_* */
        void *driver_data;

        struct blk_mq_tags **tags;

        struct mutex tag_list_lock;
        struct list_head tag_list;
    };

unsigned int *mq_map: mapping array used to decide which hardware queue serves which CPU.

const struct blk_mq_ops *ops: pointer to the driver's blk-mq operations (see 2.8), such as queue_rq.

unsigned int nr_hw_queues: number of hardware queues.

unsigned int queue_depth: maximum queue depth the hardware supports, that is, how many requests one queue can have in flight at once.

unsigned int reserved_tags: number of tags set aside for special purposes.

unsigned int cmd_size: size of the extra per-request data the driver wants allocated with each request.

int numa_node: NUMA node used for allocations, to improve memory locality.

unsigned int timeout: request timeout, the longest a request may wait for completion.

unsigned int flags: flag bits (BLK_MQ_F_*) that configure the behaviour of the tag set.

void *driver_data: pointer to driver-specific data.

struct blk_mq_tags **tags: array of pointers to the tag structures.

struct mutex tag_list_lock: mutex protecting concurrent access to tag_list.

struct list_head tag_list: list head of the request queues that share this tag set.

2.8 blk_mq_ops

struct blk_mq_ops is the set of operations a block device driver supplies to the multi-queue block layer. The operations cover the management and processing of requests.

    struct blk_mq_ops {
        /*
         * Queue request
         */
        queue_rq_fn *queue_rq;

        /*
         * Reserve budget before queue request, once .queue_rq is
         * run, it is driver's responsibility to release the
         * reserved budget. Also we have to handle failure case
         * of .get_budget for avoiding I/O deadlock.
         */
        get_budget_fn *get_budget;
        put_budget_fn *put_budget;

        /*
         * Called on request timeout
         */
        timeout_fn *timeout;

        /*
         * Called to poll for completion of a specific tag.
         */
        poll_fn *poll;

        softirq_done_fn *complete;

        /*
         * Called when the block layer side of a hardware queue has been
         * set up, allowing the driver to allocate/init matching structures.
         * Ditto for exit/teardown.
         */
        init_hctx_fn *init_hctx;
        exit_hctx_fn *exit_hctx;

        /*
         * Called for every command allocated by the block layer to allow
         * the driver to set up driver specific data.
         *
         * Tag greater than or equal to queue_depth is for setting up
         * flush request.
         *
         * Ditto for exit/teardown.
         */
        init_request_fn *init_request;
        exit_request_fn *exit_request;
        /* Called from inside blk_get_request() */
        void (*initialize_rq_fn)(struct request *rq);

        /*
         * Called before freeing one request which isn't completed yet,
         * and usually for freeing the driver private data
         */
        cleanup_rq_fn *cleanup_rq;

        map_queues_fn *map_queues;

    #ifdef CONFIG_BLK_DEBUG_FS
        /*
         * Used by the debugfs implementation to show driver-specific
         * information about a request.
         */
        void (*show_rq)(struct seq_file *m, struct request *rq);
    #endif
    };

queue_rq_fn *queue_rq:

Description: called by the block layer to hand a request to the driver, which then issues it to the hardware. This is the core function through which a blk-mq driver processes I/O.

get_budget_fn *get_budget:

Description: reserves budget before a request is queued, so the driver can set resources aside before it has to process the request.

put_budget_fn *put_budget:

Description: releases budget that was reserved earlier.

timeout_fn *timeout:

Description: called when a request times out, usually to clean up or handle the error.

poll_fn *poll:

Description: polls for completion of the request with a specific tag.

softirq_done_fn *complete:

Description: called to finish a request once its processing is complete.

init_hctx_fn *init_hctx:

Description: called after the block layer side of a hardware queue (hardware context) has been set up, so the driver can allocate and initialize its matching structures.

exit_hctx_fn *exit_hctx:

Description: called when a hardware context is torn down, to clean up and release resources.

init_request_fn *init_request:

Description: called for every command allocated by the block layer, so the driver can set up its driver-specific data.

exit_request_fn *exit_request:

Description: the teardown counterpart of init_request. It releases the driver-specific resources attached to a request.

void (*initialize_rq_fn)(struct request *rq):

Description: called from inside blk_get_request(), usually to initialize the state or data of the request.

cleanup_rq_fn *cleanup_rq:

Description: called before a request that has not completed yet is freed, usually to free driver-private data.

map_queues_fn *map_queues:

Description: sets up the queue mapping for multi-queue devices.

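3 Related functions

3.1 Registering and unregistering a block device

3.1.1 register_blkdev

| Item | Value | Description |
| --- | --- | --- |
| Prototype | int register_blkdev(unsigned int major, const char *name) | |
| Parameter | unsigned int major | Major device number to register. Passing 0 asks the kernel to pick a free major number. |
| Parameter | const char *name | Name associated with the major number. It appears in /proc/devices. |
| Return value | int | Success: 0 when a specific major was requested, or the allocated major number when major was 0. Failure: a negative error code. |
| Purpose | Registers a block device major number with the kernel and associates it with the given name. Registration only reserves the number; the disk itself becomes visible to user space once add_disk is called. | |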

    int register_blkdev(unsigned int major, const char *name)
    {
        struct blk_major_name **n, *p;
        int index, ret = 0;

        mutex_lock(&block_class_lock);

        /* temporary */
        if (major == 0) {
            for (index = ARRAY_SIZE(major_names)-1; index > 0; index--) {
                if (major_names[index] == NULL)
                    break;
            }

            if (index == 0) {
                printk("register_blkdev: failed to get major for %s\n",
                       name);
                ret = -EBUSY;
                goto out;
            }
            major = index;
            ret = major;
        }

        if (major >= BLKDEV_MAJOR_MAX) {
            pr_err("register_blkdev: major requested (%u) is greater than the maximum (%u) for %s\n",
                   major, BLKDEV_MAJOR_MAX-1, name);

            ret = -EINVAL;
            goto out;
        }

        p = kmalloc(sizeof(struct blk_major_name), GFP_KERNEL);
        if (p == NULL) {
            ret = -ENOMEM;
            goto out;
        }

        p->major = major;
        strlcpy(p->name, name, sizeof(p->name));
        p->next = NULL;
        index = major_to_index(major);

        for (n = &major_names[index]; *n; n = &(*n)->next) {
            if ((*n)->major == major)
                break;
        }
        if (!*n)
            *n = p;
        else
            ret = -EBUSY;

        if (ret < 0) {
            printk("register_blkdev: cannot get major %u for %s\n",
                   major, name);
            kfree(p);
        }
    out:
        mutex_unlock(&block_class_lock);
        return ret;
    }

3.1.2 unregister_blkdev

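| Item | Value | Description |
| --- | --- | --- |
| Prototype | void unregister_blkdev(unsigned int major, const char *name) | |
| Parameter | unsigned int major | Major device number that was registered. |
| Parameter | const char *name | Name that was passed to register_blkdev. It must match the registered name. |
| Return value | None | |
| Purpose | Unregisters a previously registered block device major number. The entry is found by major number, checked against the name, and removed from the list. | |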
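Both register_blkdev and unregister_blkdev walk a hash chain with a pointer-to-pointer, which lets them insert or unlink an entry without a special case for the head of the chain. The user-space sketch below models that bookkeeping so the return values can be tried out. Everything prefixed `model_` or `MODEL_` is invented for this example; locking, the BLKDEV_MAJOR_MAX check and the kernel's error codes are left out, and failures are reported as a plain -1.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MODEL_HASH_SIZE 255

/* One registered major number, chained per hash bucket. */
struct model_major {
    struct model_major *next;
    unsigned int major;
    char name[16];
};

static struct model_major *model_names[MODEL_HASH_SIZE];

/* Returns 0 when a specific major was registered, the chosen major when
 * major == 0 (dynamic allocation), and -1 on failure. */
int model_register(unsigned int major, const char *name)
{
    struct model_major **n, *p;
    int ret = 0;

    assert(name != NULL);
    if (major == 0) {
        int index;

        /* Dynamic allocation: scan buckets from the top for an empty one. */
        for (index = MODEL_HASH_SIZE - 1; index > 0; index--)
            if (model_names[index] == NULL)
                break;
        if (index == 0)
            return -1;
        major = (unsigned int)index;
        ret = index;
    }

    p = calloc(1, sizeof(*p));
    if (p == NULL)
        return -1;
    p->major = major;
    strncpy(p->name, name, sizeof(p->name) - 1);

    /* Walk the chain; *n ends up at the matching entry or the NULL tail. */
    for (n = &model_names[major % MODEL_HASH_SIZE]; *n; n = &(*n)->next)
        if ((*n)->major == major)
            break;
    if (*n) {            /* major already taken */
        free(p);
        return -1;
    }
    *n = p;              /* append at the tail */
    return ret;
}

/* Returns 0 on success, -1 if the major is unknown or the name differs. */
int model_unregister(unsigned int major, const char *name)
{
    struct model_major **n, *p;

    for (n = &model_names[major % MODEL_HASH_SIZE]; *n; n = &(*n)->next)
        if ((*n)->major == major)
            break;
    if (!*n || strcmp((*n)->name, name) != 0)
        return -1;
    p = *n;
    *n = p->next;        /* unlink without special-casing the head */
    free(p);
    return 0;
}
```

Registering major 8 twice fails the second time, a request for major 0 returns the highest free slot, and unregistering with the wrong name is rejected.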

    void unregister_blkdev(unsigned int major, const char *name)
    {
        struct blk_major_name **n;
        struct blk_major_name *p = NULL;
        int index = major_to_index(major);

        mutex_lock(&block_class_lock);
        for (n = &major_names[index]; *n; n = &(*n)->next)
            if ((*n)->major == major)
                break;
        if (!*n || strcmp((*n)->name, name)) {
            WARN_ON(1);
        } else {
            p = *n;
            *n = p->next;
        }
        mutex_unlock(&block_class_lock);
        kfree(p);
    }

3.2 gendisk operations

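3.2.1 alloc_disk

| Item | Value | Description |
| --- | --- | --- |
| Prototype | #define alloc_disk(minors) alloc_disk_node(minors, NUMA_NO_NODE) | |
| Parameter | int minors | Number of minor device numbers, which corresponds to the number of partitions of the gendisk. |
| Return value | struct gendisk * | Success: the allocated gendisk. Failure: NULL. |
| Purpose | Allocates a new gendisk structure for a block device. | |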

    #define alloc_disk(minors) alloc_disk_node(minors, NUMA_NO_NODE)

    #define alloc_disk_node(minors, node_id) \
    ({ \
        static struct lock_class_key __key; \
        const char *__name; \
        struct gendisk *__disk; \
        \
        __name = "(gendisk_completion)"#minors"("#node_id")"; \
        \
        __disk = __alloc_disk_node(minors, node_id); \
        \
        if (__disk) \
            lockdep_init_map(&__disk->lockdep_map, __name, &__key, 0); \
        \
        __disk; \
    })

    struct gendisk *__alloc_disk_node(int minors, int node_id)
    {
        struct gendisk *disk;
        struct disk_part_tbl *ptbl;

        if (minors > DISK_MAX_PARTS) {
            printk(KERN_ERR
                   "block: can't allocate more than %d partitions\n",
                   DISK_MAX_PARTS);
            minors = DISK_MAX_PARTS;
        }

        disk = kzalloc_node(sizeof(struct gendisk), GFP_KERNEL, node_id);
        if (disk) {
            if (!init_part_stats(&disk->part0)) {
                kfree(disk);
                return NULL;
            }
            init_rwsem(&disk->lookup_sem);
            disk->node_id = node_id;
            if (disk_expand_part_tbl(disk, 0)) {
                free_part_stats(&disk->part0);
                kfree(disk);
                return NULL;
            }
            ptbl = rcu_dereference_protected(disk->part_tbl, 1);
            rcu_assign_pointer(ptbl->part[0], &disk->part0);

            /*
             * set_capacity() and get_capacity() currently don't use
             * seqcounter to read/update the part0->nr_sects. Still init
             * the counter as we can read the sectors in IO submission
             * patch using seqence counters.
             *
             * TODO: Ideally set_capacity() and get_capacity() should be
             * converted to make use of bd_mutex and sequence counters.
             */
            seqcount_init(&disk->part0.nr_sects_seq);
            if (hd_ref_init(&disk->part0)) {
                hd_free_part(&disk->part0);
                kfree(disk);
                return NULL;
            }

            disk->minors = minors;
            rand_initialize_disk(disk);
            disk_to_dev(disk)->class = &block_class;
            disk_to_dev(disk)->type = &disk_type;
            device_initialize(disk_to_dev(disk));
        }
        return disk;
    }

3.2.2 set_capacity

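| Item | Value | Description |
| --- | --- | --- |
| Prototype | void set_capacity(struct gendisk *disk, sector_t size) | |
| Parameter | struct gendisk *disk | Pointer to the gendisk whose capacity is being set. |
| Parameter | sector_t size | Total capacity of the device in sectors. The sector is the smallest addressable unit of a block device and is usually 512 bytes. Some devices have a different physical sector size, but between the kernel and the block device driver a sector is always 512 bytes. |
| Return value | None | |
| Purpose | Sets the capacity of a block device (gendisk structure). | |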
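Because the size argument is always counted in 512-byte sectors, a driver that knows its capacity in bytes, or in larger hardware blocks, has to convert first. The following user-space sketch shows that arithmetic; the function and macro names are made up for this illustration.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_SECTOR_SHIFT 9
#define MODEL_SECTOR_SIZE  (1u << MODEL_SECTOR_SHIFT) /* 512 bytes */

/* Convert a capacity in bytes to the 512-byte sector count that
 * set_capacity() expects. The capacity must be a whole number of sectors. */
uint64_t bytes_to_sectors(uint64_t bytes)
{
    assert(bytes % MODEL_SECTOR_SIZE == 0);
    return bytes >> MODEL_SECTOR_SHIFT;
}

/* Convert a count of hardware blocks (for example 4096-byte physical
 * sectors) to 512-byte sectors. */
uint64_t hw_blocks_to_sectors(uint64_t nblocks, unsigned int hw_block_size)
{
    assert(hw_block_size >= MODEL_SECTOR_SIZE);
    assert(hw_block_size % MODEL_SECTOR_SIZE == 0);
    return nblocks * (hw_block_size / MODEL_SECTOR_SIZE);
}
```

A 2 MiB RAM disk is 4096 sectors, and 1000 physical blocks of 4096 bytes are 8000 sectors.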

    static inline void set_capacity(struct gendisk *disk, sector_t size)
    {
        disk->part0.nr_sects = size;
    }

3.2.3 add_disk

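| Item | Value | Description |
| --- | --- | --- |
| Prototype | void add_disk(struct gendisk *disk) | |
| Parameter | struct gendisk *disk | Pointer to the gendisk to add. |
| Return value | None | |
| Purpose | Adds the given block device (gendisk) to the device model. | |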

    static inline void add_disk(struct gendisk *disk)
    {
        device_add_disk(NULL, disk);
    }

    void device_add_disk(struct device *parent, struct gendisk *disk)
    {
        __device_add_disk(parent, disk, true);
    }

    static void __device_add_disk(struct device *parent, struct gendisk *disk,
                                  bool register_queue)
    {
        dev_t devt;
        int retval;

        /* minors == 0 indicates to use ext devt from part0 and should
         * be accompanied with EXT_DEVT flag. Make sure all
         * parameters make sense.
         */
        WARN_ON(disk->minors && !(disk->major || disk->first_minor));
        WARN_ON(!disk->minors &&
                !(disk->flags & (GENHD_FL_EXT_DEVT | GENHD_FL_HIDDEN)));

        disk->flags |= GENHD_FL_UP;

        retval = blk_alloc_devt(&disk->part0, &devt);
        if (retval) {
            WARN_ON(1);
            return;
        }
        disk->major = MAJOR(devt);
        disk->first_minor = MINOR(devt);

        disk_alloc_events(disk);

        if (disk->flags & GENHD_FL_HIDDEN) {
            /*
             * Don't let hidden disks show up in /proc/partitions,
             * and don't bother scanning for partitions either.
             */
            disk->flags |= GENHD_FL_SUPPRESS_PARTITION_INFO;
            disk->flags |= GENHD_FL_NO_PART_SCAN;
        } else {
            int ret;

            /* Register BDI before referencing it from bdev */
            disk_to_dev(disk)->devt = devt;
            ret = bdi_register_owner(disk->queue->backing_dev_info,
                                     disk_to_dev(disk));
            WARN_ON(ret);
            blk_register_region(disk_devt(disk), disk->minors, NULL,
                                exact_match, exact_lock, disk);
        }
        register_disk(parent, disk);
        if (register_queue)
            blk_register_queue(disk);

        /*
         * Take an extra ref on queue which will be put on disk_release()
         * so that it sticks around as long as @disk is there.
         */
        WARN_ON_ONCE(!blk_get_queue(disk->queue));

        disk_add_events(disk);
        blk_integrity_add(disk);
    }

3.2.4 del_gendisk

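| Prototype | void del_gendisk(struct gendisk *disk) | |

| Parameters | struct gendisk *disk | Pointer to the gendisk structure that represents the block device to delete |

| Return value | None | |

| Purpose | Deletes a block device (gendisk structure) | |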

void del_gendisk(struct gendisk *disk)
{
    struct disk_part_iter piter;
    struct hd_struct *part;

    blk_integrity_del(disk);
    disk_del_events(disk);

    /*
     * Block lookups of the disk until all bdevs are unhashed and the
     * disk is marked as dead (GENHD_FL_UP cleared).
     */
    down_write(&disk->lookup_sem);
    /* invalidate stuff */
    disk_part_iter_init(&piter, disk,
                        DISK_PITER_INCL_EMPTY | DISK_PITER_REVERSE);
    while ((part = disk_part_iter_next(&piter))) {
        invalidate_partition(disk, part->partno);
        bdev_unhash_inode(part_devt(part));
        delete_partition(disk, part->partno);
    }
    disk_part_iter_exit(&piter);

    invalidate_partition(disk, 0);
    bdev_unhash_inode(disk_devt(disk));
    set_capacity(disk, 0);
    disk->flags &= ~GENHD_FL_UP;
    up_write(&disk->lookup_sem);

    if (!(disk->flags & GENHD_FL_HIDDEN))
        sysfs_remove_link(&disk_to_dev(disk)->kobj, "bdi");
    if (disk->queue) {
        /*
         * Unregister bdi before releasing device numbers (as they can
         * get reused and we'd get clashes in sysfs).
         */
        if (!(disk->flags & GENHD_FL_HIDDEN))
            bdi_unregister(disk->queue->backing_dev_info);
        blk_unregister_queue(disk);
    } else {
        WARN_ON(1);
    }

    if (!(disk->flags & GENHD_FL_HIDDEN))
        blk_unregister_region(disk_devt(disk), disk->minors);
    /*
     * Remove gendisk pointer from idr so that it cannot be looked up
     * while RCU period before freeing gendisk is running to prevent
     * use-after-free issues. Note that the device number stays
     * "in-use" until we really free the gendisk.
     */
    blk_invalidate_devt(disk_devt(disk));

    kobject_put(disk->part0.holder_dir);
    kobject_put(disk->slave_dir);

    part_stat_set_all(&disk->part0, 0);
    disk->part0.stamp = 0;
    if (!sysfs_deprecated)
        sysfs_remove_link(block_depr, dev_name(disk_to_dev(disk)));
    pm_runtime_set_memalloc_noio(disk_to_dev(disk), false);
    device_del(disk_to_dev(disk));
}

3.3 Block device I/O requests

3.3.1 blk_mq_alloc_tag_set

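| Prototype | int blk_mq_alloc_tag_set(struct blk_mq_tag_set *set) | |

| Parameters | struct blk_mq_tag_set *set | Pointer to the tag set of the block device. It holds the information related to request tags, such as locks and scheduling policy, and defines how concurrent requests are managed and how request tags are allocated and reclaimed. |

| Return value | int | 0 on success; a negative error code on failure |

| Purpose | Initializes and allocates a tag set. The set manages the identifiers (tags) of concurrent requests in support of multi-queue block device operation. | |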
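The argument checks at the top of blk_mq_alloc_tag_set can be modelled in user space to see which settings are rejected and which are silently clamped. The sketch below is a simplified model under stated assumptions: `struct tag_set_model`, `tag_set_check` and `tag_set_demo` are hypothetical names, the budget-callback check and all allocations are left out, and the two constants are assumed to match the kernel macros of the same name.

```c
#include <assert.h>
#include <errno.h>

/* Assumed values, mirroring the kernel macros of the same name. */
#define BLK_MQ_TAG_MIN   1
#define BLK_MQ_MAX_DEPTH 10240

/* Hypothetical, reduced stand-in for struct blk_mq_tag_set. */
struct tag_set_model {
    unsigned int nr_hw_queues;
    unsigned int queue_depth;
    unsigned int reserved_tags;
    int has_queue_rq;              /* non-zero if ops->queue_rq is set */
};

/* Returns 0 or -EINVAL, and clamps the fields the way the listing does. */
static int tag_set_check(struct tag_set_model *set, unsigned int nr_cpu_ids)
{
    if (!set->nr_hw_queues)
        return -EINVAL;
    if (!set->queue_depth)
        return -EINVAL;
    if (set->queue_depth < set->reserved_tags + BLK_MQ_TAG_MIN)
        return -EINVAL;
    if (!set->has_queue_rq)
        return -EINVAL;

    /* Too deep a queue is reduced, not rejected. */
    if (set->queue_depth > BLK_MQ_MAX_DEPTH)
        set->queue_depth = BLK_MQ_MAX_DEPTH;
    /* There is no use for more hardware queues than CPUs. */
    if (set->nr_hw_queues > nr_cpu_ids)
        set->nr_hw_queues = nr_cpu_ids;
    return 0;
}

/* Small usage example: 2 queues of depth 2 on a single-CPU system. */
static void tag_set_demo(void)
{
    struct tag_set_model set = { 2, 2, 0, 1 };

    assert(tag_set_check(&set, 1) == 0);
    assert(set.nr_hw_queues == 1);     /* clamped to the CPU count */
}
```

A driver that forgets to set `ops->queue_rq`, or that leaves `nr_hw_queues` or `queue_depth` at zero, therefore gets -EINVAL before any memory is allocated.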

int blk_mq_alloc_tag_set(struct blk_mq_tag_set *set)
{
    int ret;

    BUILD_BUG_ON(BLK_MQ_MAX_DEPTH > 1 << BLK_MQ_UNIQUE_TAG_BITS);

    if (!set->nr_hw_queues)
        return -EINVAL;
    if (!set->queue_depth)
        return -EINVAL;
    if (set->queue_depth < set->reserved_tags + BLK_MQ_TAG_MIN)
        return -EINVAL;

    if (!set->ops->queue_rq)
        return -EINVAL;

    if (!set->ops->get_budget ^ !set->ops->put_budget)
        return -EINVAL;

    if (set->queue_depth > BLK_MQ_MAX_DEPTH) {
        pr_info("blk-mq: reduced tag depth to %u\n",
                BLK_MQ_MAX_DEPTH);
        set->queue_depth = BLK_MQ_MAX_DEPTH;
    }

    /*
     * If a crashdump is active, then we are potentially in a very
     * memory constrained environment. Limit us to 1 queue and
     * 64 tags to prevent using too much memory.
     */
    if (is_kdump_kernel()) {
        set->nr_hw_queues = 1;
        set->queue_depth = min(64U, set->queue_depth);
    }
    /*
     * There is no use for more h/w queues than cpus.
     */
    if (set->nr_hw_queues > nr_cpu_ids)
        set->nr_hw_queues = nr_cpu_ids;

    set->tags = kcalloc_node(nr_cpu_ids, sizeof(struct blk_mq_tags *),
                             GFP_KERNEL, set->numa_node);
    if (!set->tags)
        return -ENOMEM;

    ret = -ENOMEM;
    set->mq_map = kcalloc_node(nr_cpu_ids, sizeof(*set->mq_map),
                               GFP_KERNEL, set->numa_node);
    if (!set->mq_map)
        goto out_free_tags;

    ret = blk_mq_update_queue_map(set);
    if (ret)
        goto out_free_mq_map;

    ret = blk_mq_alloc_rq_maps(set);
    if (ret)
        goto out_free_mq_map;

    mutex_init(&set->tag_list_lock);
    INIT_LIST_HEAD(&set->tag_list);

    return 0;

out_free_mq_map:
    kfree(set->mq_map);
    set->mq_map = NULL;
out_free_tags:
    kfree(set->tags);
    set->tags = NULL;
    return ret;
}

3.3.2 blk_mq_free_tag_set

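| Prototype | void blk_mq_free_tag_set(struct blk_mq_tag_set *set) | |

| Parameters | struct blk_mq_tag_set *set | Pointer to the tag set of the block device. It holds the information related to request tags, such as locks and scheduling policy, and defines how concurrent requests are managed and how request tags are allocated and reclaimed. |

| Return value | None | |

| Purpose | Releases a block device tag set. It frees the resources associated with the blk_mq_tag_set structure so that no memory is leaked. | |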

void blk_mq_free_tag_set(struct blk_mq_tag_set *set)
{
    int i;

    for (i = 0; i < nr_cpu_ids; i++)
        blk_mq_free_map_and_requests(set, i);

    kfree(set->mq_map);
    set->mq_map = NULL;

    kfree(set->tags);
    set->tags = NULL;
}

3.3.3 blk_mq_init_queue

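| Prototype | struct request_queue *blk_mq_init_queue(struct blk_mq_tag_set *set) | |

| Parameters | struct blk_mq_tag_set *set | Pointer to the tag set of the block device. It holds the information related to request tags, such as locks and scheduling policy, and defines how concurrent requests are managed and how request tags are allocated and reclaimed. |

| Return value | struct request_queue * | Pointer to the initialized request queue. On failure the function returns an error pointer such as ERR_PTR(-ENOMEM), not NULL, so the result must be checked with IS_ERR(). |

| Purpose | Initializes the request queue of a block device for the multi-queue (blk-mq) block layer. It sets up a new request queue so that it can receive and process I/O requests from the upper layers. | |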
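The listing for blk_mq_init_queue returns ERR_PTR(-ENOMEM) rather than NULL when allocation fails, so a plain NULL check in the caller would miss the error. The user-space sketch below re-creates the error-pointer convention to show why. It is an illustration under stated assumptions: the three helpers imitate the kernel macros of the same name, `MAX_ERRNO` is assumed to be 4095 as in the kernel, and `init_queue_model` and `err_ptr_demo` are hypothetical names.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_ERRNO 4095   /* assumed to match the kernel's value */

/* User-space imitations of the kernel helpers of the same name. */
static void *ERR_PTR(long error)
{
    return (void *)(intptr_t)error;
}

static long PTR_ERR(const void *ptr)
{
    return (long)(intptr_t)ptr;
}

static int IS_ERR(const void *ptr)
{
    /* Error codes live in the last MAX_ERRNO values of the address space. */
    return (uintptr_t)ptr >= (uintptr_t)-MAX_ERRNO;
}

/* Hypothetical stand-in for a function that returns a queue or an error. */
static int dummy_queue;

static void *init_queue_model(int fail)
{
    return fail ? ERR_PTR(-ENOMEM) : (void *)&dummy_queue;
}

/* Small usage example. */
static void err_ptr_demo(void)
{
    void *q = init_queue_model(1);

    assert(q != NULL);                 /* a NULL check does not catch it */
    assert(IS_ERR(q));                 /* IS_ERR() does */
    assert(PTR_ERR(q) == -ENOMEM);     /* and the errno can be recovered */
}
```

In driver code the usual pattern is `if (IS_ERR(q)) return PTR_ERR(q);`.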

struct request_queue *blk_mq_init_queue(struct blk_mq_tag_set *set)
{
    struct request_queue *uninit_q, *q;

    uninit_q = blk_alloc_queue_node(GFP_KERNEL, set->numa_node, NULL);
    if (!uninit_q)
        return ERR_PTR(-ENOMEM);

    q = blk_mq_init_allocated_queue(set, uninit_q);
    if (IS_ERR(q))
        blk_cleanup_queue(uninit_q);

    return q;
}

3.3.4 blk_cleanup_queue

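| Prototype | void blk_cleanup_queue(struct request_queue *q) | |

| Parameters | struct request_queue *q | Pointer to the request queue that manages the requests sent to the block device |

| Return value | None | |

| Purpose | Cleans up a request queue. It releases the resources associated with the queue once the queue is no longer needed. | |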

void blk_cleanup_queue(struct request_queue *q)
{
    spinlock_t *lock = q->queue_lock;

    /* mark @q DYING, no new request or merges will be allowed afterwards */
    mutex_lock(&q->sysfs_lock);
    blk_set_queue_dying(q);
    spin_lock_irq(lock);

    /*
     * A dying queue is permanently in bypass mode till released. Note
     * that, unlike blk_queue_bypass_start(), we aren't performing
     * synchronize_rcu() after entering bypass mode to avoid the delay
     * as some drivers create and destroy a lot of queues while
     * probing. This is still safe because blk_release_queue() will be
     * called only after the queue refcnt drops to zero and nothing,
     * RCU or not, would be traversing the queue by then.
     */
    q->bypass_depth++;
    queue_flag_set(QUEUE_FLAG_BYPASS, q);

    queue_flag_set(QUEUE_FLAG_NOMERGES, q);
    queue_flag_set(QUEUE_FLAG_NOXMERGES, q);
    queue_flag_set(QUEUE_FLAG_DYING, q);
    spin_unlock_irq(lock);
    mutex_unlock(&q->sysfs_lock);

    /*
     * Drain all requests queued before DYING marking. Set DEAD flag to
     * prevent that q->request_fn() gets invoked after draining finished.
     */
    blk_freeze_queue(q);

    rq_qos_exit(q);

    spin_lock_irq(lock);
    queue_flag_set(QUEUE_FLAG_DEAD, q);
    spin_unlock_irq(lock);

    /*
     * make sure all in-progress dispatch are completed because
     * blk_freeze_queue() can only complete all requests, and
     * dispatch may still be in-progress since we dispatch requests
     * from more than one contexts.
     *
     * We rely on driver to deal with the race in case that queue
     * initialization isn't done.
     */
    if (q->mq_ops && blk_queue_init_done(q))
        blk_mq_quiesce_queue(q);

    /* for synchronous bio-based driver finish in-flight integrity i/o */
    blk_flush_integrity();

    /* @q won't process any more request, flush async actions */
    del_timer_sync(&q->backing_dev_info->laptop_mode_wb_timer);
    blk_sync_queue(q);

    /*
     * I/O scheduler exit is only safe after the sysfs scheduler attribute
     * has been removed.
     */
    WARN_ON_ONCE(q->kobj.state_in_sysfs);

    blk_exit_queue(q);

    if (q->mq_ops)
        blk_mq_exit_queue(q);

    percpu_ref_exit(&q->q_usage_counter);

    spin_lock_irq(lock);
    if (q->queue_lock != &q->__queue_lock)
        q->queue_lock = &q->__queue_lock;
    spin_unlock_irq(lock);

    /* @q is and will stay empty, shutdown and put */
    blk_put_queue(q);
}

3.4 Requests (request)

3.4.1 blk_mq_start_request

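| Prototype | void blk_mq_start_request(struct request *rq) | |

| Parameters | struct request *rq | Pointer to the request to be processed. The request structure describes the I/O operation: operation type, target device, data buffers and so on. |

| Return value | None | |

| Purpose | Starts the processing of a block device request. It marks the request as in flight and arms the request timeout so that the request is tracked correctly while the driver executes it. | |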

void blk_mq_start_request(struct request *rq)
{
    struct request_queue *q = rq->q;

    blk_mq_sched_started_request(rq);

    trace_block_rq_issue(q, rq);

    if (test_bit(QUEUE_FLAG_STATS, &q->queue_flags)) {
        rq->io_start_time_ns = ktime_get_ns();
#ifdef CONFIG_BLK_DEV_THROTTLING_LOW
        rq->throtl_size = blk_rq_sectors(rq);
#endif
        rq->rq_flags |= RQF_STATS;
        rq_qos_issue(q, rq);
    }

    WARN_ON_ONCE(blk_mq_rq_state(rq) != MQ_RQ_IDLE);

    blk_add_timer(rq);
    WRITE_ONCE(rq->state, MQ_RQ_IN_FLIGHT);

    if (q->dma_drain_size && blk_rq_bytes(rq)) {
        /*
         * Make sure space for the drain appears. We know we can do
         * this because max_hw_segments has been adjusted to be one
         * fewer than the device can handle.
         */
        rq->nr_phys_segments++;
    }
}

3.4.2 blk_mq_end_request

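| Prototype | void blk_mq_end_request(struct request *rq, blk_status_t error) | |

| Parameters | struct request *rq | Pointer to the request that has been processed. The request structure holds the information about the I/O operation. |

| | blk_status_t error | Status code with the result of the request. It tells the block layer whether the request succeeded or failed, for example BLK_STS_OK on success. |

| Return value | None | |

| Purpose | Ends a block device request. It is called once the request has been handled, updates the request state and performs the necessary cleanup. | |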

void blk_mq_end_request(struct request *rq, blk_status_t error)
{
    if (blk_update_request(rq, error, blk_rq_bytes(rq)))
        BUG();
    __blk_mq_end_request(rq, error);
}

inline void __blk_mq_end_request(struct request *rq, blk_status_t error)
{
    u64 now = ktime_get_ns();

    if (rq->rq_flags & RQF_STATS) {
        blk_mq_poll_stats_start(rq->q);
        blk_stat_add(rq, now);
    }

    blk_account_io_done(rq, now);

    if (rq->end_io) {
        rq_qos_done(rq->q, rq);
        rq->end_io(rq, error);
    } else {
        if (unlikely(blk_bidi_rq(rq)))
            blk_mq_free_request(rq->next_rq);
        blk_mq_free_request(rq);
    }
}

3.5 Data operations

3.5.1 bio_data

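| Prototype | void *bio_data(struct bio *bio) | |

| Parameters | struct bio *bio | Pointer to the bio structure. A bio describes one block I/O operation: the position of the data, its length, the data buffers and so on. |

| Return value | void * | Pointer to the data buffer of the bio, or NULL if the bio carries no data |

| Purpose | Returns a pointer to the data buffer of a block I/O operation (bio structure). It is a convenient way to reach the data associated with a given I/O operation. | |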
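What a simple RAM-backed driver does with the buffer pointer can be modelled in user space: the buffer is the destination of a read and the source of a write, and the storage offset is the start sector shifted left by 9. The sketch below is an illustration only and makes several assumptions: `disk_model`, `transfer_model`, `transfer_demo` and the `DIR_*` constants are hypothetical names, the "disk" is a 16-sector static array, and the bounds check is an addition that the table does not mention.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DISK_BYTES (16 * 512)   /* hypothetical 16-sector device */
#define DIR_READ   0
#define DIR_WRITE  1

/* Stands in for the memory that backs the RAM disk. */
static unsigned char disk_model[DISK_BYTES];

/* Copy len bytes between buffer and the model disk, starting at sector.
 * Returns 0 on success and -1 if the range falls outside the device. */
static int transfer_model(int dir, unsigned long sector,
                          void *buffer, size_t len)
{
    size_t start = (size_t)sector << 9;     /* sector -> byte offset */

    if (start > DISK_BYTES || len > DISK_BYTES - start)
        return -1;

    if (dir == DIR_READ)
        memcpy(buffer, disk_model + start, len);   /* disk -> buffer */
    else
        memcpy(disk_model + start, buffer, len);   /* buffer -> disk */
    return 0;
}

/* Small usage example: write one sector, then read it back. */
static void transfer_demo(void)
{
    unsigned char in[512], out[512];

    memset(in, 0xAB, sizeof(in));
    memset(out, 0, sizeof(out));
    assert(transfer_model(DIR_WRITE, 3, in, sizeof(in)) == 0);
    assert(transfer_model(DIR_READ, 3, out, sizeof(out)) == 0);
    assert(memcmp(in, out, sizeof(in)) == 0);
}
```

In a real driver the buffer returned by bio_data covers only the current segment of the bio, so a request with several segments needs one copy per segment.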

static inline void *bio_data(struct bio *bio)
{
    if (bio_has_data(bio))
        return page_address(bio_page(bio)) + bio_offset(bio);

    return NULL;
}

3.5.2 rq_data_dir

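| Prototype | #define rq_data_dir(rq) (op_is_write(req_op(rq)) ? WRITE : READ) | |

| Parameters | struct request *rq | Pointer to the request to be processed. The request structure describes the I/O operation: operation type, target device, data buffers and so on. |

| Return value | WRITE or READ | |

| Purpose | Determines whether the given request is a read or a write. A driver uses this macro in its request handler to choose the direction of the data transfer. | |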
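The macro relies on the encoding of the operation code: the low bits of cmd_flags hold the operation, and every operation that writes to the device has an odd number. A user-space model makes this easy to try out. The sketch is hedged: the `*_model` names and `data_dir_demo` are hypothetical, and the numeric values of `REQ_OP_BITS` and the `REQ_OP_*` constants are assumptions meant to mirror the kernel version quoted in this document.

```c
#include <assert.h>

#define READ  0
#define WRITE 1

/* Assumed values, mirroring the kernel's operation encoding. */
#define REQ_OP_BITS 8
#define REQ_OP_MASK ((1u << REQ_OP_BITS) - 1)

enum {
    REQ_OP_READ    = 0,
    REQ_OP_WRITE   = 1,
    REQ_OP_FLUSH   = 2,
    REQ_OP_DISCARD = 3
};

/* Hypothetical, reduced stand-in for struct request. */
struct request_model {
    unsigned int cmd_flags;    /* operation in the low bits, flags above */
};

static unsigned int req_op_model(const struct request_model *rq)
{
    return rq->cmd_flags & REQ_OP_MASK;
}

static int op_is_write_model(unsigned int op)
{
    return op & 1;             /* odd operation codes modify the device */
}

static int rq_data_dir_model(const struct request_model *rq)
{
    return op_is_write_model(req_op_model(rq)) ? WRITE : READ;
}

/* Small usage example: flag bits above the mask do not change the result. */
static void data_dir_demo(void)
{
    struct request_model rd = { REQ_OP_READ | (1u << 11) };
    struct request_model wr = { REQ_OP_WRITE | (1u << 11) };

    assert(rq_data_dir_model(&rd) == READ);
    assert(rq_data_dir_model(&wr) == WRITE);
}
```

Under these assumed values a discard request also reports WRITE, because its operation code is odd. A driver that only copies data for READ and WRITE may therefore want to check the operation itself as well as the direction.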

#define rq_data_dir(rq) (op_is_write(req_op(rq)) ? WRITE : READ)

#define req_op(req) ((req)->cmd_flags & REQ_OP_MASK)

static inline bool op_is_write(unsigned int op)
{
    return (op & 1);
}

4 Experiment

4.1 Code

#include <linux/module.h>
#include <linux/moduleparam.h>
#include <linux/init.h>
#include <linux/sched.h>
#include <linux/kernel.h>
#include <linux/slab.h>
#include <linux/fs.h>
#include <linux/errno.h>
#include <linux/types.h>
#include <linux/fcntl.h>
#include <linux/hdreg.h>
#include <linux/kdev_t.h>
#include <linux/vmalloc.h>
#include <linux/genhd.h>
#include <linux/blk-mq.h>
#include <linux/buffer_head.h>
#include <linux/bio.h>

#define RAMDISK_SIZE    (2 * 1024 * 1024)   /* capacity: 2 MB */
#define RAMDISK_NAME    "ramdisk"           /* device name */
#define RADMISK_MINOR   3                   /* number of minors reserved for the disk and its partitions; this is NOT minor number 3 */

/* ramdisk device structure */
struct ramdisk_dev {
    int major;                      /* major device number */
    unsigned char *ramdiskbuf;      /* memory that backs the ramdisk and emulates the block device */
    struct gendisk *gendisk;        /* gendisk */
    struct request_queue *queue;    /* request queue */
    struct blk_mq_tag_set tag_set;  /* blk_mq_tag_set */
    spinlock_t lock;                /* spinlock */
};

struct ramdisk_dev *ramdisk = NULL; /* ramdisk device pointer */

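/*
 * @description : Handle the data transfer of a request
 * @param-req   : the request
 * @return      : 0 on success; any other value on failure
 */
static int ramdisk_transfer(struct request *req)
{
    /* blk_rq_pos() returns the start sector; shift left by 9 to get the byte offset */
    unsigned long start = blk_rq_pos(req) << 9;
    unsigned long len = blk_rq_cur_bytes(req);  /* size of the current segment in bytes */

    /*
     * Data buffer of the bio.
     * Read:  data read from the disk is stored into buffer
     * Write: buffer holds the data to be written to the disk
     *
     * Note: only the current segment is copied here. A request that
     * spans several segments would have to be walked segment by
     * segment, for example with rq_for_each_segment().
     */
    void *buffer = bio_data(req->bio);

    if (rq_data_dir(req) == READ)           /* read */
        memcpy(buffer, ramdisk->ramdiskbuf + start, len);
    else if (rq_data_dir(req) == WRITE)     /* write */
        memcpy(ramdisk->ramdiskbuf + start, buffer, len);

    return 0;
}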

/*
 * @description : Process one request handed over by the block layer
 * @hctx        : hardware queue context
 * @bd          : queue data that carries the request
 * @return      : BLK_STS_OK
 */
static blk_status_t _queue_rq(struct blk_mq_hw_ctx *hctx, const struct blk_mq_queue_data *bd)
{
    struct request *req = bd->rq;   /* get the request from bd */
    struct ramdisk_dev *dev = req->rq_disk->private_data;
    int ret;

    blk_mq_start_request(req);      /* mark the request as started */

    spin_lock(&dev->lock);
    ret = ramdisk_transfer(req);    /* copy the data */
    spin_unlock(&dev->lock);

    /* complete the request; blk_mq_end_request() expects a blk_status_t, not an int */
    blk_mq_end_request(req, ret ? BLK_STS_IOERR : BLK_STS_OK);

    return BLK_STS_OK;
}

/*
 * Queue operations
 */
static struct blk_mq_ops mq_ops = {
    .queue_rq = _queue_rq,
};

int ramdisk_open(struct block_device *dev, fmode_t mode)
{
    printk("ramdisk open\r\n");
    return 0;
}

void ramdisk_release(struct gendisk *disk, fmode_t mode)
{
    printk("ramdisk release\r\n");
}

/*
 * @description : Report the disk geometry
 * @param - dev : block device
 * @param - geo : geometry structure to fill in
 * @return      : 0 on success; any other value on failure
 */
int ramdisk_getgeo(struct block_device *dev, struct hd_geometry *geo)
{
    /* These notions come from mechanical hard disks */
    geo->heads = 2;         /* heads */
    geo->cylinders = 32;    /* cylinders */
    geo->sectors = RAMDISK_SIZE / (2 * 32 * 512);  /* sectors per track */
    return 0;
}

/*
 * Block device operations
 */
static struct block_device_operations ramdisk_fops =
{
    .owner = THIS_MODULE,
    .open = ramdisk_open,
    .release = ramdisk_release,
    .getgeo = ramdisk_getgeo,
};

/*
 * @description : Set up the tag set and the request queue
 * @set         : blk_mq_tag_set object
 * @return      : address of the request_queue, or an ERR_PTR() value on failure
 */
static struct request_queue *create_req_queue(struct blk_mq_tag_set *set)
{
    struct request_queue *q;

#if 0
    /*
     * Alternative: let blk_mq_init_sq_queue() do
     * the whole initialization.
     */
    q = blk_mq_init_sq_queue(set, &mq_ops, 2, BLK_MQ_F_SHOULD_MERGE);
#else
    int ret;

    memset(set, 0, sizeof(*set));
    set->ops = &mq_ops;                 /* queue operations */
    set->nr_hw_queues = 2;              /* number of hardware queues */
    set->queue_depth = 2;               /* queue depth */
    set->numa_node = NUMA_NO_NODE;      /* NUMA node */
    set->flags = BLK_MQ_F_SHOULD_MERGE; /* merge bios when they are submitted */

    ret = blk_mq_alloc_tag_set(set);    /* validate the settings and allocate the tag set */
    if (ret) {
        printk(KERN_WARNING "ramdisk: unable to allocate tag set\n");
        return ERR_PTR(ret);
    }

    q = blk_mq_init_queue(set);         /* allocate the request queue */
    if (IS_ERR(q)) {
        blk_mq_free_tag_set(set);
        return q;
    }
#endif

    return q;
}

/*
 * @description : Create the block device and expose it to user space
 * @set         : ramdisk_dev object
 * @return      : 0 on success; any other value on failure
 */
static int create_req_gendisk(struct ramdisk_dev *set)
{
    struct ramdisk_dev *dev = set;

    /* 1. Allocate and initialize the gendisk */
    dev->gendisk = alloc_disk(RADMISK_MINOR);
    if (dev->gendisk == NULL)   /* check the allocation result, not dev itself */
        return -ENOMEM;

    /* 2. Fill in the gendisk and add (register) it */
    dev->gendisk->major = ramdisk->major;       /* major device number */
    dev->gendisk->first_minor = 0;              /* first minor number */
    dev->gendisk->fops = &ramdisk_fops;         /* block device operations */
    dev->gendisk->private_data = set;           /* private data */
    dev->gendisk->queue = dev->queue;           /* request queue */
    sprintf(dev->gendisk->disk_name, RAMDISK_NAME); /* name */
    set_capacity(dev->gendisk, RAMDISK_SIZE / 512); /* capacity in sectors */
    add_disk(dev->gendisk);

    return 0;
}

static int __init ramdisk_init(void)
{
    int ret = 0;
    struct ramdisk_dev *dev;

    printk("ramdisk init\r\n");

    /* 1. Allocate memory */
    dev = kzalloc(sizeof(*dev), GFP_KERNEL);
    if (dev == NULL)
        return -ENOMEM;

    dev->ramdiskbuf = kmalloc(RAMDISK_SIZE, GFP_KERNEL);
    if (dev->ramdiskbuf == NULL) {
        printk(KERN_WARNING "dev->ramdiskbuf: kmalloc failure.\n");
        ret = -ENOMEM;
        goto alloc_buf_fail;    /* do not leak dev */
    }
    ramdisk = dev;

    /* 2. Initialize the spinlock */
    spin_lock_init(&dev->lock);

    /* 3. Register the block device; the kernel picks the major number */
    dev->major = register_blkdev(0, RAMDISK_NAME);
    if (dev->major < 0) {
        ret = dev->major;
        goto register_blkdev_fail;
    }

    /* 4. Create the multi-queue request queue */
    dev->queue = create_req_queue(&dev->tag_set);
    if (IS_ERR(dev->queue)) {   /* failure is reported as ERR_PTR(), not NULL */
        ret = PTR_ERR(dev->queue);
        goto create_queue_fail;
    }

    /* 5. Create the block device */
    ret = create_req_gendisk(dev);
    if (ret < 0)
        goto create_gendisk_fail;

    return 0;

create_gendisk_fail:
    blk_cleanup_queue(dev->queue);
    blk_mq_free_tag_set(&dev->tag_set);
create_queue_fail:
    unregister_blkdev(dev->major, RAMDISK_NAME);
register_blkdev_fail:
    kfree(dev->ramdiskbuf);
alloc_buf_fail:
    kfree(dev);
    ramdisk = NULL;
    return ret;
}

static void __exit ramdisk_exit(void)
{
    printk("ramdisk exit\r\n");

    /* Release the gendisk */
    del_gendisk(ramdisk->gendisk);
    put_disk(ramdisk->gendisk);

    /* Clean up the request queue */
    blk_cleanup_queue(ramdisk->queue);

    /* Free the blk_mq_tag_set */
    blk_mq_free_tag_set(&ramdisk->tag_set);

    /* Unregister the block device */
    unregister_blkdev(ramdisk->major, RAMDISK_NAME);

    /* Free the memory */
    kfree(ramdisk->ramdiskbuf);
    kfree(ramdisk);
}

module_init(ramdisk_init);
module_exit(ramdisk_exit);
MODULE_LICENSE("GPL");
MODULE_AUTHOR("ALIENTEK");
MODULE_INFO(intree, "Y");

4.2 Procedure

Load the driver: insmod ramdisk_withrequest_test.ko

console:/data # insmod ramdisk_withrequest_test.ko

[ 916.610832] ramdisk init

[ 916.613432] ramdisk open

[ 916.613718] ramdisk release

[ 916.613758] type=1400 audit(1727152399.923:65): avc: denied { create } for comm="kdevtmpfs" name="ramdisk" scontext=u:r:kernel:s0 tcontext=u:object_r:device:s0 tclass=blk_file permissive=1

[ 916.614331] type=1400 audit(1727152399.923:66): avc: denied { setattr } for comm="kdevtmpfs" name="ramdisk" dev="devtmpfs" ino=38456 scontext=u:r:kernel:s0 tcontext=u:object_r:device:s0 tclass=blk_file permissive=1

console:/data #

Run cat /proc/partitions; the output now contains an entry for ramdisk:

console:/dev/block # cat /proc/partitions

major minor #blocks name

1 0 8192 ram0

1 1 8192 ram1

1 2 8192 ram2

1 3 8192 ram3

1 4 8192 ram4

1 5 8192 ram5

1 6 8192 ram6

1 7 8192 ram7

1 8 8192 ram8

1 9 8192 ram9

1 10 8192 ram10

1 11 8192 ram11

1 12 8192 ram12

1 13 8192 ram13

1 14 8192 ram14

1 15 8192 ram15

254 0 1952508 zram0

179 0 30535680 mmcblk2

179 1 4096 mmcblk2p1

179 2 4096 mmcblk2p2

179 3 4096 mmcblk2p3

179 4 4096 mmcblk2p4

179 5 4096 mmcblk2p5

179 6 1024 mmcblk2p6

179 7 73728 mmcblk2p7

179 8 98304 mmcblk2p8

179 9 393216 mmcblk2p9

179 10 393216 mmcblk2p10

179 11 16384 mmcblk2p11

179 12 1024 mmcblk2p12

179 13 3186688 mmcblk2p13

179 14 26347488 mmcblk2p14

253 0 1589636 dm-0

253 1 120540 dm-1

253 2 201604 dm-2

253 3 208704 dm-3

253 4 608 dm-4

253 5 26347488 dm-5

8 0 30720000 sda

8 4 30719872 sda4

252 0 2048 ramdisk
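The ramdisk line can be cross-checked against the driver source with a little arithmetic: /proc/partitions reports sizes in 1 KiB blocks, and the geometry returned by ramdisk_getgeo multiplies back to the full capacity. The user-space sketch below only illustrates that arithmetic. `struct geometry_model`, `ramdisk_geometry`, `geometry_bytes`, `proc_partitions_blocks` and `geometry_demo` are hypothetical names; the numbers are copied from the driver listing.

```c
#include <assert.h>
#include <stdint.h>

#define RAMDISK_SIZE (2 * 1024 * 1024)   /* 2 MB, as in the driver */

/* Hypothetical, reduced stand-in for struct hd_geometry. */
struct geometry_model {
    unsigned int heads;
    unsigned int cylinders;
    unsigned int sectors;      /* sectors per track */
};

/* Same values as the driver's getgeo callback. */
static struct geometry_model ramdisk_geometry(void)
{
    struct geometry_model g;

    g.heads = 2;
    g.cylinders = 32;
    g.sectors = RAMDISK_SIZE / (2 * 32 * 512);
    return g;
}

/* heads x cylinders x sectors-per-track x 512 bytes */
static uint64_t geometry_bytes(struct geometry_model g)
{
    return (uint64_t)g.heads * g.cylinders * g.sectors * 512;
}

/* The #blocks column of /proc/partitions counts 1 KiB blocks. */
static uint64_t proc_partitions_blocks(uint64_t bytes)
{
    return bytes / 1024;
}

/* Small usage example. */
static void geometry_demo(void)
{
    struct geometry_model g = ramdisk_geometry();

    assert(g.sectors == 64);
    assert(geometry_bytes(g) == RAMDISK_SIZE);
    assert(proc_partitions_blocks(RAMDISK_SIZE) == 2048);
}
```

The result, 2048 blocks, matches the #blocks value shown for ramdisk, and it corresponds to the 4096 sectors passed to set_capacity in the driver.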

console:/dev/block # ls /dev/block

console:/data # ls /dev/block/

by-name loop10 loop5 mmcblk2p1 mmcblk2p5 ram11 ram6

dm-0 loop11 loop6 mmcblk2p10 mmcblk2p6 ram12 ram7

dm-1 loop12 loop7 mmcblk2p11 mmcblk2p7 ram13 ram8

dm-2 loop13 loop8 mmcblk2p12 mmcblk2p8 ram14 ram9

dm-3 loop14 loop9 mmcblk2p13 mmcblk2p9 ram15 ramdisk

dm-4 loop15 mapper mmcblk2p14 platform ram2 sda

dm-5 loop2 mmcblk2 mmcblk2p2 ram0 ram3 sda4

loop0 loop3 mmcblk2boot0 mmcblk2p3 ram1 ram4 vold

loop1 loop4 mmcblk2boot1 mmcblk2p4 ram10 ram5 zram0

console:/data #

console:/data # ls /dev/block/ramdisk -l

brw------- 1 root root 252, 0 2024-09-23 09:24 /dev/block/ramdisk

console:/data #

Mount the device: mount /dev/block/ramdisk disk/

1|console:/data # mount /dev/block/ramdisk disk/

[224737.460103] ramdisk open

[224737.460238] ramdisk release

[224737.460275] ramdisk open

[224737.460316] ramdisk release

[224737.460348] ramdisk open

[224737.460389] ramdisk release

[224737.460420] ramdisk open

[224737.460480] ramdisk release

mount: /dev/block/ramdisk: need -t

[224737.460552] ramdisk open

[224737.460599] ramdisk release

1|console:/data #

[224737.460637] ramdisk open

[224737.461348] ramdisk release

[224737.461398] ramdisk open

[224737.461424] ramdisk release

[224737.461456] ramdisk open

[224737.461589] ramdisk release

[224737.461627] ramdisk open

[224737.461689] F2FS-fs (ramdisk): Magic Mismatch, valid(0xf2f52010) - read(0xffffffff)

[224737.461704] F2FS-fs (ramdisk): Can't find valid F2FS filesystem in 1th superblock

[224737.461724] F2FS-fs (ramdisk): Magic Mismatch, valid(0xf2f52010) - read(0xffffffff)

[224737.461738] F2FS-fs (ramdisk): Can't find valid F2FS filesystem in 2th superblock

[224737.461758] ramdisk release

1|console:/data #

Format the device: mke2fs /dev/block/ramdisk

127|console:/data # mke2fs /dev/block/ramdisk

mke2fs 1.45.4 (23-Sep-2019)

[225588.883514] ramdisk open

[225588.883552] ramdisk release

[225588.883644] ramdisk open

/dev/block/ramdisk contains a ext4 file system

[225588.884440] ramdisk release

[225588.884578] ramdisk open

created on Mon Sep 23 09:38:55 2024

[225588.885256] ramdisk release

Proceed anyway? (y,N) y

[225596.336292] ramdisk open

[225596.336323] ramdisk release

[225596.336338] ramdisk open

[225596.336353] ramdisk release

Creating filesystem with 2048 1k blocks and 256 inodes

Allocating group tables: done

[225596.336415] ramdisk open

Writing inode tables: done

[225596.336426] ramdisk release

[225596.336438] ramdisk open

[225596.336447] ramdisk release

[225596.336519] ramdisk open

[225596.341289] ramdisk release

Writing superblocks and filesystem accounting information: done

console:/data #