I. Data Preparation

1. Importing the data

import os, PIL, pathlib
import warnings

import matplotlib.pyplot as plt
import tensorflow as tf

# Hide warnings
warnings.filterwarnings('ignore')

# Chinese font support
plt.rcParams['font.sans-serif'] = ['SimHei']  # render Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly

data_dir = "./data"
data_dir = pathlib.Path(data_dir)

# Each class has its own sub-folder, so '*/*' matches every image file
image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)

Total number of images: 5192
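The total above does not show how the images are distributed between the class folders. A minimal sketch of a per-class count follows; the helper name `count_images_per_class` and the list of extensions are assumptions made for illustration and are not part of the original code.

```python
import pathlib

def count_images_per_class(data_dir, extensions=(".jpg", ".jpeg", ".png", ".bmp")):
    """Return {class_folder_name: number_of_image_files} for a tree laid out
    as data_dir/<class_name>/<image files>."""
    data_dir = pathlib.Path(data_dir)
    counts = {}
    # Visit class folders in sorted order so the result is deterministic
    for class_dir in sorted(p for p in data_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in extensions
        )
    return counts
```

Calling `count_images_per_class("./data")` on this dataset should return one entry per class folder; the actual counts are not given in this post.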

2. Data preprocessing

batch_size = 64
img_height = 224
img_width = 224

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.3,
    subset="training",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 5192 files belonging to 2 classes.
Using 3635 files for training.

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.3,
    subset="validation",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 5192 files belonging to 2 classes.
Using 1557 files for validation.
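The two file counts are consistent with a simple calculation. The sketch below assumes that the validation count is the truncated product of `validation_split` and the number of files, which as far as I can tell is how Keras computes it; the variable names are illustrative.

```python
import math

total_files = 5192        # from the "Found 5192 files" message
validation_split = 0.3
batch_size = 64

num_val = int(validation_split * total_files)        # fractional part is dropped
num_train = total_files - num_val
steps_per_epoch = math.ceil(num_train / batch_size)  # the last batch may be partial

print(num_val, num_train, steps_per_epoch)           # 1557 3635 57
```

The 57 steps per epoch match the `57/57` progress counter in the training log.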

class_names = train_ds.class_names
print(class_names)

['Normal', 'OSCC']

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

(64, 224, 224, 3)
(64,)

AUTOTUNE = tf.data.AUTOTUNE

def preprocess_image(image, label):
    # Scale pixel values from [0, 255] to [0, 1]
    return (image / 255.0, label)

# Normalize both datasets
train_ds = train_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)
val_ds = val_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

3. Visualizing the data

plt.figure(figsize=(15, 10))  # figure is 15 wide and 10 high

for images, labels in train_ds.take(1):
    for i in range(15):
        ax = plt.subplot(3, 5, i + 1)
        plt.imshow(images[i])
        plt.title(class_names[labels[i]])
        plt.axis("off")
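`plt.imshow` interprets floating-point RGB arrays as values in the range [0, 1], so the images display correctly here because `preprocess_image` has already divided them by 255. A small NumPy sketch of that scaling, using a made-up 2x2 image rather than real data:

```python
import numpy as np

# Hypothetical 2x2 RGB image with raw pixel values in [0, 255]
raw = np.array([[[0, 128, 255], [64, 64, 64]],
                [[255, 255, 255], [10, 20, 30]]], dtype=np.float32)

# Same operation that preprocess_image applies to every batch
scaled = raw / 255.0

print(scaled.shape, float(scaled.min()), float(scaled.max()))
```

If the division were skipped, float images with values up to 255 would be clipped by `imshow` and appear mostly white.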

II. Building the ResNet50 model

from keras import layers

from keras.layers import Input,Activation,BatchNormalization,Flatten, Dropout

from keras.layers import Dense,Conv2D,MaxPooling2D,ZeroPadding2D,AveragePooling2D

from keras.models import Model

import tensorflow as tf

def identity_block(input_tensor, kernel_size, filters, stage, block):
    '''
    :param input_tensor: input tensor, usually the output of the previous layer
    :param kernel_size: kernel size used by the second convolution
    :param filters: three integers, the filter counts of the three convolutions
    :param stage: stage number of the current block, used for naming
    :param block: name of the current block, used for naming
    :return: output tensor, with the same shape as input_tensor
    '''
    # Unpack the filter counts
    filters1, filters2, filters3 = filters

    # Base name for the layers in this block
    name_base = str(stage) + block + '_identity_block_'

    # First convolution: 1x1, reduces the channel count; followed by BN and ReLU
    x = Conv2D(filters1, (1, 1), name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    # Second convolution: uses kernel_size; padding='same' keeps the spatial
    # size unchanged. Followed by BN and ReLU
    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    # Third convolution: 1x1, restores the output channel count; followed by BN
    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    # Residual connection: add the block input to the output of the convolutions.
    # This helps against vanishing gradients and eases information flow
    x = layers.add([x, input_tensor], name=name_base + 'add')

    # ReLU on the sum
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x

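'''
Residual networks use BN layers extensively. Apart from the single max-pooling
layer after the first convolution, they do not use MaxPooling to shrink the feature maps.
Instead, the first convolution of the first block in a stage uses strides=(2, 2)
(stage 2 is the exception in this model and keeps strides=(1, 1)).
A strided convolution evaluates its kernel at only a quarter of the positions,
so it does less convolution work than convolving at full resolution and pooling
afterwards, which suits high-resolution inputs.
At the end of each residual block, layers.add() implements H(x) = x + F(x).
'''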
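The relation H(x) = x + F(x) can be illustrated without Keras. The sketch below uses NumPy and a stand-in `residual_branch` function (hypothetical, just a fixed scaling) in place of the three convolutions. The point is only that the shortcut and the branch output must have the same shape before they are added.

```python
import numpy as np

def relu(t):
    return np.maximum(t, 0.0)

def residual_branch(x):
    # Stand-in for F(x); the real block uses conv -> BN -> ReLU layers
    return -0.5 * x

def residual_unit(x):
    fx = residual_branch(x)
    # An identity shortcut only works when both tensors have the same shape
    assert fx.shape == x.shape, "identity shortcut needs matching shapes"
    return relu(x + fx)  # H(x) = x + F(x), followed by ReLU

x = np.array([[-2.0, 0.0, 4.0]])
print(residual_unit(x))
```

When the shapes differ, as at the start of a stage where the channel count or resolution changes, the shortcut itself needs a projection, which is the role of `conv_block`.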

def conv_block(input_tensor, kernel_size, filters, stage, block, strides=(2, 2)):
    '''
    input_tensor: input tensor, usually the output of the previous layer.
    kernel_size: kernel size used by the second convolution.
    filters: three integers, the filter counts of the three convolutions.
    stage: stage number of the current block, used for naming.
    block: name of the current block, used for naming.
    strides: convolution strides, default (2, 2), used for downsampling.
    '''
    # Unpack the filter counts
    filters1, filters2, filters3 = filters

    # Base names for the layers in this block
    res_name_base = str(stage) + block + '_conv_block_res_'
    name_base = str(stage) + block + '_conv_block_'

    # 1x1 convolution that reduces the channel count. strides controls
    # downsampling; the default (2, 2) halves the feature-map size.
    # Followed by BN and ReLU
    x = Conv2D(filters1, (1, 1), strides=strides, name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    # Convolution with kernel_size; padding='same' keeps the spatial size
    # unchanged. Followed by BN and ReLU
    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    # 1x1 convolution that sets the output channel count; followed by BN
    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    # Shortcut branch: a 1x1 convolution projects the input to filters3 channels
    # with the same strides as the main branch, so both tensors have the same
    # shape when they are added. Followed by BN
    shortcut = Conv2D(filters3, (1, 1), strides=strides, name=res_name_base + 'conv')(input_tensor)
    shortcut = BatchNormalization(name=res_name_base + 'bn')(shortcut)

    x = layers.add([x, shortcut], name=name_base + 'add')
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x
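The effect of `strides` on the feature-map size can be traced with the usual output-size formula for an unpadded convolution or pooling layer, floor((n - k) / s) + 1. The sketch below applies it to the layers used in this model; `out_size` is a helper introduced here for illustration.

```python
def out_size(n, kernel, stride):
    """Output length along one axis for a 'valid' (unpadded) conv or pool."""
    return (n - kernel) // stride + 1

size = 224 + 2 * 3               # ZeroPadding2D((3, 3)) -> 230
size = out_size(size, 7, 2)      # conv1, 7x7, stride 2 -> 112
size = out_size(size, 3, 2)      # max pooling, 3x3, stride 2 -> 55

stage_sizes = [size]             # stage 2 uses strides=(1, 1), size unchanged
for _ in range(3):               # stages 3, 4, 5: 1x1 conv with stride 2
    size = out_size(size, 1, 2)
    stage_sizes.append(size)

print(stage_sizes)               # [55, 28, 14, 7]
```

These values agree with the output shapes in the model summary. Because the shortcut convolution uses the same `strides` as the first convolution of the main branch, both paths arrive at the same size before the addition.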

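'''
Definition of the ResNet50 model:
Input layer: takes images of shape 224x224x3.
Zero padding: pads the input with 3 pixels of zeros on each side, so the 7x7 convolution also covers the image border.
Initial convolution: 64 kernels of size 7x7 with stride 2, followed by batch normalization and ReLU.
Max pooling: 3x3 max pooling with stride 2 to shrink the feature maps.
Residual blocks: stacked conv_block and identity_block units extract features while the channel count grows from 64 after the initial convolution to 2048.
Average pooling: a final 7x7 average pooling reduces each feature map to a single value.
Flatten, dropout and fully connected layer: the features are flattened, passed through Dropout(0.5), and fed to a dense layer with softmax activation for classification (2 classes here).
Pretrained weights: the load_weights call is left commented out, so the model below is trained from scratch.
The design aims to extract image features effectively for classification.
'''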

def ResNet50(input_shape=(224, 224, 3), classes=2):
    img_input = Input(shape=input_shape)
    x = ZeroPadding2D((3, 3))(img_input)

    x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1')(x)
    x = BatchNormalization(name='bn_conv1')(x)
    x = Activation('relu')(x)
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1))
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b')
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c')

    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d')

    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f')

    x = conv_block(x, 3, [512, 512, 2048], stage=5, block='a')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='b')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='c')

    x = AveragePooling2D((7, 7), name='avg_pool')(x)
    x = Flatten()(x)

    # Dropout before the fully connected layer
    x = Dropout(0.5)(x)  # dropout rate of 50%
    x = Dense(classes, activation='softmax', name='fc2')(x)

    model = Model(img_input, x, name='resnet50')

    # Load pretrained weights (disabled)
    # model.load_weights("resnet50_weights_tf_dim_ordering_tf_kernels.h5")

    return model

model = ResNet50()
model.summary()

Model: "resnet50"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_2 (InputLayer) [(None, 224, 224, 3 0 []

)]

zero_padding2d_1 (ZeroPadding2 (None, 230, 230, 3) 0 ['input_2[0][0]']

D)

conv1 (Conv2D) (None, 112, 112, 64 9472 ['zero_padding2d_1[0][0]']

)

bn_conv1 (BatchNormalization) (None, 112, 112, 64 256 ['conv1[0][0]']

)

activation_1 (Activation) (None, 112, 112, 64 0 ['bn_conv1[0][0]']

)

max_pooling2d_1 (MaxPooling2D) (None, 55, 55, 64) 0 ['activation_1[0][0]']

2a_conv_block_conv1 (Conv2D) (None, 55, 55, 64) 4160 ['max_pooling2d_1[0][0]']

2a_conv_block_bn1 (BatchNormal (None, 55, 55, 64) 256 ['2a_conv_block_conv1[0][0]']

ization)

2a_conv_block_relu1 (Activatio (None, 55, 55, 64) 0 ['2a_conv_block_bn1[0][0]']

n)

2a_conv_block_conv2 (Conv2D) (None, 55, 55, 64) 36928 ['2a_conv_block_relu1[0][0]']

2a_conv_block_bn2 (BatchNormal (None, 55, 55, 64) 256 ['2a_conv_block_conv2[0][0]']

ization)

2a_conv_block_relu2 (Activatio (None, 55, 55, 64) 0 ['2a_conv_block_bn2[0][0]']

n)

2a_conv_block_conv3 (Conv2D) (None, 55, 55, 256) 16640 ['2a_conv_block_relu2[0][0]']

2a_conv_block_res_conv (Conv2D (None, 55, 55, 256) 16640 ['max_pooling2d_1[0][0]']

)

2a_conv_block_bn3 (BatchNormal (None, 55, 55, 256) 1024 ['2a_conv_block_conv3[0][0]']

ization)

2a_conv_block_res_bn (BatchNor (None, 55, 55, 256) 1024 ['2a_conv_block_res_conv[0][0]']

malization)

2a_conv_block_add (Add) (None, 55, 55, 256) 0 ['2a_conv_block_bn3[0][0]',

'2a_conv_block_res_bn[0][0]']

2a_conv_block_relu4 (Activatio (None, 55, 55, 256) 0 ['2a_conv_block_add[0][0]']

n)

2b_identity_block_conv1 (Conv2 (None, 55, 55, 64) 16448 ['2a_conv_block_relu4[0][0]']

D)

2b_identity_block_bn1 (BatchNo (None, 55, 55, 64) 256 ['2b_identity_block_conv1[0][0]']

rmalization)

2b_identity_block_relu1 (Activ (None, 55, 55, 64) 0 ['2b_identity_block_bn1[0][0]']

ation)

2b_identity_block_conv2 (Conv2 (None, 55, 55, 64) 36928 ['2b_identity_block_relu1[0][0]']

D)

2b_identity_block_bn2 (BatchNo (None, 55, 55, 64) 256 ['2b_identity_block_conv2[0][0]']

rmalization)

2b_identity_block_relu2 (Activ (None, 55, 55, 64) 0 ['2b_identity_block_bn2[0][0]']

ation)

2b_identity_block_conv3 (Conv2 (None, 55, 55, 256) 16640 ['2b_identity_block_relu2[0][0]']

D)

2b_identity_block_bn3 (BatchNo (None, 55, 55, 256) 1024 ['2b_identity_block_conv3[0][0]']

rmalization)

2b_identity_block_add (Add) (None, 55, 55, 256) 0 ['2b_identity_block_bn3[0][0]',

'2a_conv_block_relu4[0][0]']

2b_identity_block_relu4 (Activ (None, 55, 55, 256) 0 ['2b_identity_block_add[0][0]']

ation)

2c_identity_block_conv1 (Conv2 (None, 55, 55, 64) 16448 ['2b_identity_block_relu4[0][0]']

D)

2c_identity_block_bn1 (BatchNo (None, 55, 55, 64) 256 ['2c_identity_block_conv1[0][0]']

rmalization)

2c_identity_block_relu1 (Activ (None, 55, 55, 64) 0 ['2c_identity_block_bn1[0][0]']

ation)

2c_identity_block_conv2 (Conv2 (None, 55, 55, 64) 36928 ['2c_identity_block_relu1[0][0]']

D)

2c_identity_block_bn2 (BatchNo (None, 55, 55, 64) 256 ['2c_identity_block_conv2[0][0]']

rmalization)

2c_identity_block_relu2 (Activ (None, 55, 55, 64) 0 ['2c_identity_block_bn2[0][0]']

ation)

2c_identity_block_conv3 (Conv2 (None, 55, 55, 256) 16640 ['2c_identity_block_relu2[0][0]']

D)

2c_identity_block_bn3 (BatchNo (None, 55, 55, 256) 1024 ['2c_identity_block_conv3[0][0]']

rmalization)

2c_identity_block_add (Add) (None, 55, 55, 256) 0 ['2c_identity_block_bn3[0][0]',

'2b_identity_block_relu4[0][0]']

2c_identity_block_relu4 (Activ (None, 55, 55, 256) 0 ['2c_identity_block_add[0][0]']

ation)

3a_conv_block_conv1 (Conv2D) (None, 28, 28, 128) 32896 ['2c_identity_block_relu4[0][0]']

3a_conv_block_bn1 (BatchNormal (None, 28, 28, 128) 512 ['3a_conv_block_conv1[0][0]']

ization)

3a_conv_block_relu1 (Activatio (None, 28, 28, 128) 0 ['3a_conv_block_bn1[0][0]']

n)

3a_conv_block_conv2 (Conv2D) (None, 28, 28, 128) 147584 ['3a_conv_block_relu1[0][0]']

3a_conv_block_bn2 (BatchNormal (None, 28, 28, 128) 512 ['3a_conv_block_conv2[0][0]']

ization)

3a_conv_block_relu2 (Activatio (None, 28, 28, 128) 0 ['3a_conv_block_bn2[0][0]']

n)

3a_conv_block_conv3 (Conv2D) (None, 28, 28, 512) 66048 ['3a_conv_block_relu2[0][0]']

3a_conv_block_res_conv (Conv2D (None, 28, 28, 512) 131584 ['2c_identity_block_relu4[0][0]']

)

3a_conv_block_bn3 (BatchNormal (None, 28, 28, 512) 2048 ['3a_conv_block_conv3[0][0]']

ization)

3a_conv_block_res_bn (BatchNor (None, 28, 28, 512) 2048 ['3a_conv_block_res_conv[0][0]']

malization)

3a_conv_block_add (Add) (None, 28, 28, 512) 0 ['3a_conv_block_bn3[0][0]',

'3a_conv_block_res_bn[0][0]']

3a_conv_block_relu4 (Activatio (None, 28, 28, 512) 0 ['3a_conv_block_add[0][0]']

n)

3b_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3a_conv_block_relu4[0][0]']

D)

3b_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3b_identity_block_conv1[0][0]']

rmalization)

3b_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3b_identity_block_bn1[0][0]']

ation)

3b_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3b_identity_block_relu1[0][0]']

D)

3b_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3b_identity_block_conv2[0][0]']

rmalization)

3b_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3b_identity_block_bn2[0][0]']

ation)

3b_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3b_identity_block_relu2[0][0]']

D)

3b_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3b_identity_block_conv3[0][0]']

rmalization)

3b_identity_block_add (Add) (None, 28, 28, 512) 0 ['3b_identity_block_bn3[0][0]',

'3a_conv_block_relu4[0][0]']

3b_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3b_identity_block_add[0][0]']

ation)

3c_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3b_identity_block_relu4[0][0]']

D)

3c_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3c_identity_block_conv1[0][0]']

rmalization)

3c_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3c_identity_block_bn1[0][0]']

ation)

3c_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3c_identity_block_relu1[0][0]']

D)

3c_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3c_identity_block_conv2[0][0]']

rmalization)

3c_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3c_identity_block_bn2[0][0]']

ation)

3c_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3c_identity_block_relu2[0][0]']

D)

3c_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3c_identity_block_conv3[0][0]']

rmalization)

3c_identity_block_add (Add) (None, 28, 28, 512) 0 ['3c_identity_block_bn3[0][0]',

'3b_identity_block_relu4[0][0]']

3c_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3c_identity_block_add[0][0]']

ation)

3d_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3c_identity_block_relu4[0][0]']

D)

3d_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3d_identity_block_conv1[0][0]']

rmalization)

3d_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3d_identity_block_bn1[0][0]']

ation)

3d_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3d_identity_block_relu1[0][0]']

D)

3d_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3d_identity_block_conv2[0][0]']

rmalization)

3d_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3d_identity_block_bn2[0][0]']

ation)

3d_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3d_identity_block_relu2[0][0]']

D)

3d_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3d_identity_block_conv3[0][0]']

rmalization)

3d_identity_block_add (Add) (None, 28, 28, 512) 0 ['3d_identity_block_bn3[0][0]',

'3c_identity_block_relu4[0][0]']

3d_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3d_identity_block_add[0][0]']

ation)

4a_conv_block_conv1 (Conv2D) (None, 14, 14, 256) 131328 ['3d_identity_block_relu4[0][0]']

4a_conv_block_bn1 (BatchNormal (None, 14, 14, 256) 1024 ['4a_conv_block_conv1[0][0]']

ization)

4a_conv_block_relu1 (Activatio (None, 14, 14, 256) 0 ['4a_conv_block_bn1[0][0]']

n)

4a_conv_block_conv2 (Conv2D) (None, 14, 14, 256) 590080 ['4a_conv_block_relu1[0][0]']

4a_conv_block_bn2 (BatchNormal (None, 14, 14, 256) 1024 ['4a_conv_block_conv2[0][0]']

ization)

4a_conv_block_relu2 (Activatio (None, 14, 14, 256) 0 ['4a_conv_block_bn2[0][0]']

n)

4a_conv_block_conv3 (Conv2D) (None, 14, 14, 1024 263168 ['4a_conv_block_relu2[0][0]']

)

4a_conv_block_res_conv (Conv2D (None, 14, 14, 1024 525312 ['3d_identity_block_relu4[0][0]']

) )

4a_conv_block_bn3 (BatchNormal (None, 14, 14, 1024 4096 ['4a_conv_block_conv3[0][0]']

ization) )

4a_conv_block_res_bn (BatchNor (None, 14, 14, 1024 4096 ['4a_conv_block_res_conv[0][0]']

malization) )

4a_conv_block_add (Add) (None, 14, 14, 1024 0 ['4a_conv_block_bn3[0][0]',

) '4a_conv_block_res_bn[0][0]']

4a_conv_block_relu4 (Activatio (None, 14, 14, 1024 0 ['4a_conv_block_add[0][0]']

n) )

4b_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4a_conv_block_relu4[0][0]']

D)

4b_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4b_identity_block_conv1[0][0]']

rmalization)

4b_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4b_identity_block_bn1[0][0]']

ation)

4b_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4b_identity_block_relu1[0][0]']

D)

4b_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4b_identity_block_conv2[0][0]']

rmalization)

4b_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4b_identity_block_bn2[0][0]']

ation)

4b_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4b_identity_block_relu2[0][0]']

D) )

4b_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4b_identity_block_conv3[0][0]']

rmalization) )

4b_identity_block_add (Add) (None, 14, 14, 1024 0 ['4b_identity_block_bn3[0][0]',

) '4a_conv_block_relu4[0][0]']

4b_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4b_identity_block_add[0][0]']

ation) )

4c_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4b_identity_block_relu4[0][0]']

D)

4c_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4c_identity_block_conv1[0][0]']

rmalization)

4c_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4c_identity_block_bn1[0][0]']

ation)

4c_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4c_identity_block_relu1[0][0]']

D)

4c_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4c_identity_block_conv2[0][0]']

rmalization)

4c_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4c_identity_block_bn2[0][0]']

ation)

4c_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4c_identity_block_relu2[0][0]']

D) )

4c_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4c_identity_block_conv3[0][0]']

rmalization) )

4c_identity_block_add (Add) (None, 14, 14, 1024 0 ['4c_identity_block_bn3[0][0]',

) '4b_identity_block_relu4[0][0]']

4c_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4c_identity_block_add[0][0]']

ation) )

4d_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4c_identity_block_relu4[0][0]']

D)

4d_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4d_identity_block_conv1[0][0]']

rmalization)

4d_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4d_identity_block_bn1[0][0]']

ation)

4d_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4d_identity_block_relu1[0][0]']

D)

4d_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4d_identity_block_conv2[0][0]']

rmalization)

4d_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4d_identity_block_bn2[0][0]']

ation)

4d_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4d_identity_block_relu2[0][0]']

D) )

4d_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4d_identity_block_conv3[0][0]']

rmalization) )

4d_identity_block_add (Add) (None, 14, 14, 1024 0 ['4d_identity_block_bn3[0][0]',

) '4c_identity_block_relu4[0][0]']

4d_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4d_identity_block_add[0][0]']

ation) )

4e_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4d_identity_block_relu4[0][0]']

D)

4e_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4e_identity_block_conv1[0][0]']

rmalization)

4e_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4e_identity_block_bn1[0][0]']

ation)

4e_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4e_identity_block_relu1[0][0]']

D)

4e_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4e_identity_block_conv2[0][0]']

rmalization)

4e_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4e_identity_block_bn2[0][0]']

ation)

4e_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4e_identity_block_relu2[0][0]']

D) )

4e_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4e_identity_block_conv3[0][0]']

rmalization) )

4e_identity_block_add (Add) (None, 14, 14, 1024 0 ['4e_identity_block_bn3[0][0]',

) '4d_identity_block_relu4[0][0]']

4e_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4e_identity_block_add[0][0]']

ation) )

4f_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4e_identity_block_relu4[0][0]']

D)

4f_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4f_identity_block_conv1[0][0]']

rmalization)

4f_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4f_identity_block_bn1[0][0]']

ation)

4f_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4f_identity_block_relu1[0][0]']

D)

4f_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4f_identity_block_conv2[0][0]']

rmalization)

4f_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4f_identity_block_bn2[0][0]']

ation)

4f_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4f_identity_block_relu2[0][0]']

D) )

4f_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4f_identity_block_conv3[0][0]']

rmalization) )

4f_identity_block_add (Add) (None, 14, 14, 1024 0 ['4f_identity_block_bn3[0][0]',

) '4e_identity_block_relu4[0][0]']

4f_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4f_identity_block_add[0][0]']

ation) )

5a_conv_block_conv1 (Conv2D) (None, 7, 7, 512) 524800 ['4f_identity_block_relu4[0][0]']

5a_conv_block_bn1 (BatchNormal (None, 7, 7, 512) 2048 ['5a_conv_block_conv1[0][0]']

ization)

5a_conv_block_relu1 (Activatio (None, 7, 7, 512) 0 ['5a_conv_block_bn1[0][0]']

n)

5a_conv_block_conv2 (Conv2D) (None, 7, 7, 512) 2359808 ['5a_conv_block_relu1[0][0]']

5a_conv_block_bn2 (BatchNormal (None, 7, 7, 512) 2048 ['5a_conv_block_conv2[0][0]']

ization)

5a_conv_block_relu2 (Activatio (None, 7, 7, 512) 0 ['5a_conv_block_bn2[0][0]']

n)

5a_conv_block_conv3 (Conv2D) (None, 7, 7, 2048) 1050624 ['5a_conv_block_relu2[0][0]']

5a_conv_block_res_conv (Conv2D (None, 7, 7, 2048) 2099200 ['4f_identity_block_relu4[0][0]']

)

5a_conv_block_bn3 (BatchNormal (None, 7, 7, 2048) 8192 ['5a_conv_block_conv3[0][0]']

ization)

5a_conv_block_res_bn (BatchNor (None, 7, 7, 2048) 8192 ['5a_conv_block_res_conv[0][0]']

malization)

5a_conv_block_add (Add) (None, 7, 7, 2048) 0 ['5a_conv_block_bn3[0][0]',

'5a_conv_block_res_bn[0][0]']

5a_conv_block_relu4 (Activatio (None, 7, 7, 2048) 0 ['5a_conv_block_add[0][0]']

n)

5b_identity_block_conv1 (Conv2 (None, 7, 7, 512) 1049088 ['5a_conv_block_relu4[0][0]']

D)

5b_identity_block_bn1 (BatchNo (None, 7, 7, 512) 2048 ['5b_identity_block_conv1[0][0]']

rmalization)

5b_identity_block_relu1 (Activ (None, 7, 7, 512) 0 ['5b_identity_block_bn1[0][0]']

ation)

5b_identity_block_conv2 (Conv2 (None, 7, 7, 512) 2359808 ['5b_identity_block_relu1[0][0]']

D)

5b_identity_block_bn2 (BatchNo (None, 7, 7, 512) 2048 ['5b_identity_block_conv2[0][0]']

rmalization)

5b_identity_block_relu2 (Activ (None, 7, 7, 512) 0 ['5b_identity_block_bn2[0][0]']

ation)

5b_identity_block_conv3 (Conv2 (None, 7, 7, 2048) 1050624 ['5b_identity_block_relu2[0][0]']

D)

5b_identity_block_bn3 (BatchNo (None, 7, 7, 2048) 8192 ['5b_identity_block_conv3[0][0]']

rmalization)

5b_identity_block_add (Add) (None, 7, 7, 2048) 0 ['5b_identity_block_bn3[0][0]',

'5a_conv_block_relu4[0][0]']

5b_identity_block_relu4 (Activ (None, 7, 7, 2048) 0 ['5b_identity_block_add[0][0]']

ation)

5c_identity_block_conv1 (Conv2 (None, 7, 7, 512) 1049088 ['5b_identity_block_relu4[0][0]']

D)

5c_identity_block_bn1 (BatchNo (None, 7, 7, 512) 2048 ['5c_identity_block_conv1[0][0]']

rmalization)

5c_identity_block_relu1 (Activ (None, 7, 7, 512) 0 ['5c_identity_block_bn1[0][0]']

ation)

5c_identity_block_conv2 (Conv2 (None, 7, 7, 512) 2359808 ['5c_identity_block_relu1[0][0]']

D)

5c_identity_block_bn2 (BatchNo (None, 7, 7, 512) 2048 ['5c_identity_block_conv2[0][0]']

rmalization)

5c_identity_block_relu2 (Activ (None, 7, 7, 512) 0 ['5c_identity_block_bn2[0][0]']

ation)

5c_identity_block_conv3 (Conv2 (None, 7, 7, 2048) 1050624 ['5c_identity_block_relu2[0][0]']

D)

5c_identity_block_bn3 (BatchNo (None, 7, 7, 2048) 8192 ['5c_identity_block_conv3[0][0]']

rmalization)

5c_identity_block_add (Add) (None, 7, 7, 2048) 0 ['5c_identity_block_bn3[0][0]',

'5b_identity_block_relu4[0][0]']

5c_identity_block_relu4 (Activ (None, 7, 7, 2048) 0 ['5c_identity_block_add[0][0]']

ation)

avg_pool (AveragePooling2D) (None, 1, 1, 2048) 0 ['5c_identity_block_relu4[0][0]']

flatten_1 (Flatten) (None, 2048) 0 ['avg_pool[0][0]']

dropout (Dropout) (None, 2048) 0 ['flatten_1[0][0]']

fc2 (Dense) (None, 2) 4098 ['dropout[0][0]']

==================================================================================================

Total params: 23,591,810

Trainable params: 23,538,690

Non-trainable params: 53,120

__________________________________________________________________________________________________
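The `Param #` column can be checked by hand. A Conv2D layer with bias has k * k * C_in * C_out + C_out parameters, and a BatchNormalization layer has four values per channel (gamma, beta, moving mean, moving variance). A short sketch, with helper names chosen here for illustration:

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * c_in * c_out + c_out

def bn_params(channels):
    """gamma, beta, moving mean and moving variance per channel."""
    return 4 * channels

print(conv_params(7, 3, 64))     # conv1                   -> 9472
print(conv_params(1, 256, 64))   # 2b_identity_block_conv1 -> 16448
print(conv_params(3, 64, 64))    # 2b_identity_block_conv2 -> 36928
print(conv_params(1, 64, 256))   # 2b_identity_block_conv3 -> 16640
print(bn_params(256))            # 2b_identity_block_bn3   -> 1024
print(2048 * 2 + 2)              # fc2 (Dense, 2 classes)  -> 4098
```

The moving mean and moving variance of each BatchNormalization layer are updated during training but not learned by gradient descent, which is where the non-trainable parameters in the summary come from.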

III. Compiling the model

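# Set up the optimizer
# Note: opt is defined here but never passed to compile(). The string 'adam'
# below creates a separate Adam optimizer with its default learning rate,
# and that is the optimizer used for the training run in the next section.
opt = tf.keras.optimizers.Adam(learning_rate=1e-7)

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

IV. Training the model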

from keras.callbacks import EarlyStopping

# Early stopping
early_stopping = EarlyStopping(
    monitor='val_loss',
    patience=3,
    verbose=1,
    restore_best_weights=True
)

epochs = 10

history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=epochs,
    callbacks=[early_stopping]
)

Epoch 1/10
57/57 [==============================] - 709s 12s/step - loss: 0.9055 - accuracy: 0.6853 - val_loss: 1.0100 - val_accuracy: 0.4913
Epoch 2/10
57/57 [==============================] - 681s 12s/step - loss: 0.5338 - accuracy: 0.7667 - val_loss: 0.7880 - val_accuracy: 0.4978
Epoch 3/10
57/57 [==============================] - 662s 12s/step - loss: 0.4756 - accuracy: 0.7829 - val_loss: 0.7841 - val_accuracy: 0.4290
Epoch 4/10
57/57 [==============================] - 660s 12s/step - loss: 0.4223 - accuracy: 0.8102 - val_loss: 0.7710 - val_accuracy: 0.5466
Epoch 5/10
57/57 [==============================] - 662s 12s/step - loss: 0.3743 - accuracy: 0.8347 - val_loss: 0.8795 - val_accuracy: 0.5748
Epoch 6/10
57/57 [==============================] - 665s 12s/step - loss: 0.3481 - accuracy: 0.8468 - val_loss: 1.2726 - val_accuracy: 0.4579
Epoch 7/10
57/57 [==============================] - ETA: 0s - loss: 0.3365 - accuracy: 0.8556
Restoring model weights from the end of the best epoch: 4.
57/57 [==============================] - 661s 12s/step - loss: 0.3365 - accuracy: 0.8556 - val_loss: 0.9570 - val_accuracy: 0.5812
Epoch 7: early stopping
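The log shows training stopping at epoch 7 and the weights from epoch 4 being restored. The sketch below replays the `val_loss` values through a simplified version of the patience rule to show why. It is a plain-Python illustration written for this post, not Keras's implementation, and it ignores options such as `min_delta`, `mode` and `baseline`.

```python
def early_stop_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), both 1-based.
    stop_epoch is None if every epoch would be run."""
    best = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            # Improvement: remember it and reset the patience counter
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch

# val_loss values copied from the training log
val_losses = [1.0100, 0.7880, 0.7841, 0.7710, 0.8795, 1.2726, 0.9570]
print(early_stop_epoch(val_losses, patience=3))   # (7, 4)
```

Epoch 4 has the lowest validation loss (0.7710). Epochs 5, 6 and 7 fail to improve on it, so the patience of 3 is exhausted at epoch 7.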

V. Model evaluation

# Number of epochs actually run (early stopping may end training before `epochs`)

actual_epochs = len(history.history['accuracy'])

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(actual_epochs)

plt.figure(figsize=(12, 4))

# Plot accuracy

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

# Plot loss

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

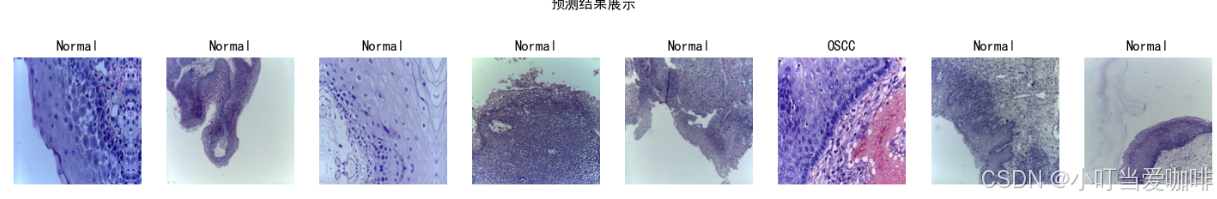
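One quick way to put a number on what the two accuracy curves show is the per-epoch gap between training and validation accuracy. The sketch below uses the values printed in the training log; the list names are chosen here for illustration and are separate from the `acc` and `val_acc` variables above.

```python
# accuracy and val_accuracy copied from the training log, epochs 1 to 7
train_acc_log = [0.6853, 0.7667, 0.7829, 0.8102, 0.8347, 0.8468, 0.8556]
val_acc_log   = [0.4913, 0.4978, 0.4290, 0.5466, 0.5748, 0.4579, 0.5812]

gaps = [round(a - v, 4) for a, v in zip(train_acc_log, val_acc_log)]
for epoch, gap in enumerate(gaps, start=1):
    print(f"epoch {epoch}: train - val accuracy = {gap:.4f}")

largest_gap_epoch = gaps.index(max(gaps)) + 1
```

A gap that stays large while training accuracy keeps rising is a common sign of overfitting, so the same calculation on `history.history` can be a useful check after each run.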

VI. Prediction

import numpy as np

# Show predictions from the trained model on one validation batch
plt.figure(figsize=(18, 3))  # figure is 18 wide and 3 high
plt.suptitle("Prediction results")

for images, labels in val_ds.take(1):
    for i in range(8):
        ax = plt.subplot(1, 8, i + 1)

        # Display the image
        plt.imshow(images[i].numpy())

        # Add a batch dimension
        img_array = tf.expand_dims(images[i], 0)

        # Predict the class of the image
        predictions = model.predict(img_array)
        plt.title(class_names[np.argmax(predictions)])

        plt.axis("off")

1/1 [==============================] - 1s 699ms/step
1/1 [==============================] - 0s 68ms/step
1/1 [==============================] - 0s 67ms/step
1/1 [==============================] - 0s 68ms/step
1/1 [==============================] - 0s 66ms/step
1/1 [==============================] - 0s 76ms/step
1/1 [==============================] - 0s 78ms/step
1/1 [==============================] - 0s 63ms/step
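`model.predict` returns one row of softmax probabilities per image, and `np.argmax` picks the index of the largest one. Calling `predict` once per image works but prints a progress line each time. Predicting a whole batch and taking the argmax along axis 1 should give the same labels in one call. A NumPy sketch with made-up probabilities (not model output):

```python
import numpy as np

class_names = ['Normal', 'OSCC']

# Hypothetical softmax outputs for a batch of 4 images, shape (4, 2)
probs = np.array([[0.90, 0.10],
                  [0.20, 0.80],
                  [0.55, 0.45],
                  [0.30, 0.70]])

pred_idx = np.argmax(probs, axis=1)              # one class index per row
pred_names = [class_names[i] for i in pred_idx]
print(pred_names)   # ['Normal', 'OSCC', 'Normal', 'OSCC']
```

With the real model, `probs` would come from a single `model.predict(images)` call on the batch taken from `val_ds`.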