Flink Quick Start

- Batch processing
  - Configuring the Maven pom file
  - Writing the WordCount code in Java
- Bounded stream processing
- Unbounded stream processing

Batch processing

Configuring the Maven pom file

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>org.example</groupId>
        <artifactId>com.atguigu</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <flink.version>1.17.0</flink.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-java</artifactId>
                <version>${flink.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-clients</artifactId>
                <version>${flink.version}</version>
            </dependency>
        </dependencies>
    </project>

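Writing the WordCount code in Java

This version is based on the DataSet API, which is deprecated and not recommended. The later examples use the DataStream API instead.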

    package com.atguigu.wc;

    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.java.ExecutionEnvironment;
    import org.apache.flink.api.java.operators.AggregateOperator;
    import org.apache.flink.api.java.operators.DataSource;
    import org.apache.flink.api.java.operators.FlatMapOperator;
    import org.apache.flink.api.java.operators.UnsortedGrouping;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.util.Collector;

    public class WordCountBatchDemo {
        public static void main(String[] args) throws Exception {
            // 1. Create the execution environment
            ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

            // 2. Read the data from a file
            DataSource<String> lineDS = env.readTextFile("input/word.txt");

            // 3. Split each line and convert every word to (word, 1)
            FlatMapOperator<String, Tuple2<String, Integer>> wordAndOne = lineDS.flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
                @Override
                public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {
                    // 3.1 Split the line into words on spaces
                    String[] words = value.split(" ");
                    for (String word : words) {
                        // 3.2 Convert the word to (word, 1)
                        Tuple2<String, Integer> wordTuple2 = Tuple2.of(word, 1);
                        // 3.3 Emit the tuple downstream through the collector
                        out.collect(wordTuple2);
                    }
                }
            });

            // 4. Group by word (tuple field 0)
            UnsortedGrouping<Tuple2<String, Integer>> wordAndOneGroupBy = wordAndOne.groupBy(0);

            // 5. Sum the counts (tuple field 1) within each group
            AggregateOperator<Tuple2<String, Integer>> sum = wordAndOneGroupBy.sum(1);

            // 6. Print the result
            sum.print();
        }
    }
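
To make the split, group, and sum steps of the batch job concrete, here is a minimal plain-Java sketch of the same computation. It does not use Flink at all; the class name `BatchWordCountSketch` and the sample lines are hypothetical stand-ins for the job and for `input/word.txt`. The point it illustrates is that a batch job sees the whole input first and reports one final count per word.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration only; this is not Flink code.
class BatchWordCountSketch {

    // Counts words over the complete input and returns only the final totals.
    static Map<String, Integer> countWords(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted for stable printing
        for (String line : lines) {
            for (String word : line.split(" ")) {      // same split as in the job
                counts.merge(word, 1, Integer::sum);   // group by word, add up the 1s
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical sample input standing in for input/word.txt
        List<String> lines = Arrays.asList("hello flink", "hello world", "hello java");
        System.out.println(countWords(lines)); // prints {flink=1, hello=3, java=1, world=1}
    }
}
```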

Bounded stream processing

    package com.atguigu.wc;

    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.java.functions.KeySelector;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.KeyedStream;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.util.Collector;

    public class WordCountStreamDemo {
        public static void main(String[] args) throws Exception {
            // 1. Create the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // 2. Read the data
            DataStreamSource<String> lineDS = env.readTextFile("input/word.txt");

            // 3. Process the data: split, convert, group, aggregate
            // 3.1 Split and convert
            SingleOutputStreamOperator<Tuple2<String, Integer>> wordAndOneDS = lineDS.flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
                @Override
                public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {
                    // Split the line into words
                    String[] words = value.split(" ");
                    for (String word : words) {
                        // Convert the word to the tuple (word, 1)
                        Tuple2<String, Integer> wordAndOne = Tuple2.of(word, 1);
                        // Emit the tuple downstream through the collector
                        out.collect(wordAndOne);
                    }
                }
            });

            // 3.2 Group by word
            KeyedStream<Tuple2<String, Integer>, String> wordAndOneKS = wordAndOneDS.keyBy(
                    new KeySelector<Tuple2<String, Integer>, String>() {
                        @Override
                        public String getKey(Tuple2<String, Integer> value) throws Exception {
                            return value.f0;
                        }
                    });

            // 3.3 Aggregate: sum tuple field 1
            SingleOutputStreamOperator<Tuple2<String, Integer>> sumDS = wordAndOneKS.sum(1);

            // 4. Print the result
            sumDS.print();

            // 5. Trigger execution (comparable to the final ssc.start() in Spark Streaming)
            env.execute();
        }
    }

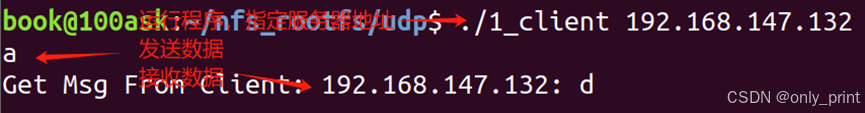
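
Unlike the batch job, a keyed `sum` on a stream keeps a running total per word and emits an updated result for every incoming record. The plain-Java sketch below imitates that behavior without using Flink; the class name `RunningWordCountSketch` and the sample lines are hypothetical. In a real job running with parallelism greater than 1, the relative order of results for different words may differ from this single-threaded sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration only; this is not Flink code.
class RunningWordCountSketch {

    // Returns one "(word,count)" entry per incoming word, in arrival order.
    static List<String> runningCounts(List<String> lines) {
        Map<String, Integer> state = new HashMap<>(); // running sum kept per key
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                int updated = state.merge(word, 1, Integer::sum); // update the key's state
                emitted.add("(" + word + "," + updated + ")");     // emit immediately
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        // Hypothetical sample input standing in for input/word.txt
        List<String> lines = Arrays.asList("hello flink", "hello world");
        // Prints (hello,1), (flink,1), (hello,2), (world,1), one per line
        runningCounts(lines).forEach(System.out::println);
    }
}
```

The word `hello` appears twice in the output, once per occurrence, which is the visible difference from the single final total produced by the batch version.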

Unbounded stream processing

Event-driven

    package com.atguigu.wc;

    import org.apache.flink.api.common.typeinfo.Types;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.util.Collector;

    public class WordCountSocketStream {
        public static void main(String[] args) throws Exception {
            // 1. Create the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // 2. Read lines from a socket; a server must already be listening on
            //    hadoop102:7777 (for example, one started with `nc -lk 7777`)
            DataStreamSource<String> socketDS = env.socketTextStream("hadoop102", 7777);

            // 3. Split, convert, group by word, and sum
            SingleOutputStreamOperator<Tuple2<String, Integer>> sum = socketDS
                    .flatMap((String value, Collector<Tuple2<String, Integer>> out) -> {
                        // Split the line into words
                        String[] words = value.split(" ");
                        for (String word : words) {
                            // Convert the word to the tuple (word, 1) and emit it downstream
                            out.collect(Tuple2.of(word, 1));
                        }
                    })
                    // The lambda's generic output type is erased, so declare it explicitly
                    .returns(Types.TUPLE(Types.STRING, Types.INT))
                    .keyBy(value -> value.f0)
                    .sum(1);

            // 4. Print the result
            sum.print();

            // 5. Trigger execution
            env.execute();
        }
    }
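
The call to `.returns(Types.TUPLE(Types.STRING, Types.INT))` is needed because the `flatMap` above is written as a lambda, and Java erases the generic type arguments that an anonymous class would have kept. The plain-Java sketch below shows that general language behavior using `java.util.function.Function`. It illustrates erasure only and is not Flink's actual type-extraction logic; the class and method names are hypothetical.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.function.Function;

// Plain-Java illustration only; this is not Flink code.
class LambdaErasureSketch {

    // True if the object's class records concrete type arguments
    // for the first interface it implements.
    static boolean keepsTypeArguments(Object fn) {
        Type[] interfaces = fn.getClass().getGenericInterfaces();
        return interfaces.length > 0 && interfaces[0] instanceof ParameterizedType;
    }

    public static void main(String[] args) {
        // Anonymous class: the class file stores Function<String, Integer>
        Function<String, Integer> anonymous = new Function<String, Integer>() {
            @Override
            public Integer apply(String s) {
                return s.length();
            }
        };
        // Lambda: the generated class implements the raw Function interface
        Function<String, Integer> lambda = s -> s.length();

        System.out.println(keepsTypeArguments(anonymous)); // prints true
        System.out.println(keepsTypeArguments(lambda));    // prints false
    }
}
```

This is why the two earlier examples, which use anonymous `FlatMapFunction` classes, work without a `.returns(...)` call, while the lambda version declares its output type by hand.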

Processing is triggered by events: each record is processed as soon as it arrives.