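Huazi's table of contents

- lvs+keepalived
  - Experiment architecture
  - Preparation before the experiment
    - 1. Prepare the hosts
    - 2. Install `lvs+keepalived` on KA1 and KA2
    - 3. Install httpd on webserver1 and webserver2
    - 4. Create the test web pages
    - 5. Disable `firewalld` and `selinux` on all hosts
    - 6. Start the httpd service
  - Experiment steps
    - 1. Configure the VIPs on webserver1 and webserver2
    - 2. Disable ARP responses on webserver1 and webserver2
    - 3. Edit the keepalived.conf configuration file
    - 4. Restart the lvs+keepalived services
  - Tests
    - VIP test
    - Web server access test
    - High-availability test
- haproxy+keepalived
  - Preparation before the experiment
    - 1. Prepare the hosts
    - 2. Install `haproxy+keepalived` on KA1 and KA2
    - 3. Install httpd on webserver1 and webserver2
    - 4. Create the test web pages
    - 5. Disable `firewalld` and `selinux` on all hosts
    - 6. Start the httpd service
  - Experiment steps
    - 1. Enable the `kernel parameter` on both nodes, `KA1` and `KA2`
    - 2. Configure the `haproxy.cfg` file
    - 3. Write a script to check the state of `haproxy`
    - 4. Edit the `keepalived.conf` configuration file
    - 5. Restart `haproxy+keepalived`
  - Tests
    - VIP test
    - Web server access test
    - High-availability test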
lvs+keepalived

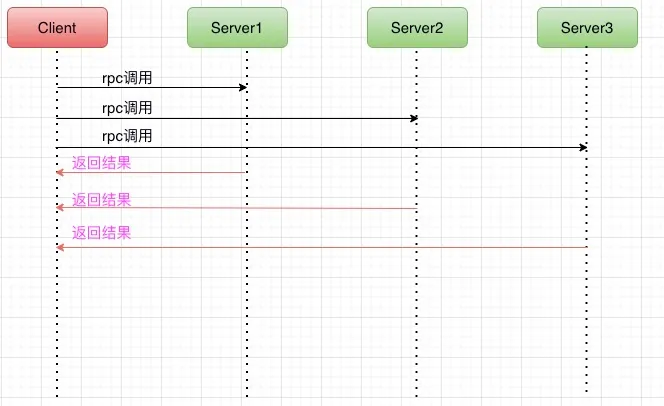

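Experiment architecture

- This experiment runs lvs in DR mode with a dual-master keepalived pair.

- Because the setup is dual-master, two VIPs are needed: 172.25.254.100, for which KA1 is the master, and 172.25.254.200, for which KA2 is the master.

- Because lvs runs in DR mode, webserver1 and webserver2 must each carry both VIPs as well: 172.25.254.100 and 172.25.254.200.

- Real IPs: KA1 172.25.254.10, KA2 172.25.254.20, webserver1 172.25.254.110, webserver2 172.25.254.120.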

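Preparation before the experiment

1. Prepare the hosts

- Four hosts are used here: two act as web servers and two as keepalived servers, called KA for short

2. Install lvs+keepalived on KA1 and KA2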

[root@KA1 ~]# yum install ipvsadm keepalived -y

[root@KA2 ~]# yum install ipvsadm keepalived -y

3. Install httpd on webserver1 and webserver2

[root@webserver1 ~]# yum install httpd -y

[root@webserver2 ~]# yum install httpd -y

4. Create the test web pages

[root@webserver1 ~]# echo webserver1-172.25.254.110 > /var/www/html/index.html

[root@webserver2 ~]# echo webserver2-172.25.254.120 > /var/www/html/index.html

5. Disable firewalld and selinux on all hosts

[root@KA1 ~]# systemctl is-active firewalld

inactive

[root@KA1 ~]# getenforce

Disabled

[root@KA2 ~]# systemctl is-active firewalld

inactive

[root@KA2 ~]# getenforce

Disabled

[root@webserver1 ~]# systemctl is-active firewalld

inactive

[root@webserver1 ~]# getenforce

Disabled

[root@webserver2 ~]# systemctl is-active firewalld

inactive

[root@webserver2 ~]# getenforce

Disabled
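The checks above only confirm the final state; the article does not show the commands that produce it. A minimal sketch of one way to get there on a RHEL-family host follows, assuming systemd and the stock /etc/selinux/config file. The DRY_RUN switch and the run helper are additions for this example: by default the script only prints what it would execute.

```shell
#!/bin/bash
# Sketch: turn off firewalld and SELinux on one host (repeat on each of the four).
# DRY_RUN and run() are illustrative additions, not part of the original article.
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them (needs root)

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

disable_firewall_and_selinux() {
    # Stop firewalld now and keep it from starting at boot.
    run systemctl disable --now firewalld
    # Switch SELinux to permissive mode for the running system.
    run setenforce 0
    # Make the change permanent in the SELinux config file.
    run sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
}

disable_firewall_and_selinux
```

Run it with DRY_RUN=0 as root to apply the changes. getenforce reports Disabled only after a reboot; until then, setenforce 0 leaves SELinux in Permissive mode.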

6. Start the httpd service

[root@webserver1 ~]# systemctl enable --now httpd

[root@webserver2 ~]# systemctl enable --now httpd

Experiment steps

1. Configure the VIPs on webserver1 and webserver2

- On webserver1

[root@webserver1 ~]# ip addr add 172.25.254.100/32 dev lo

[root@webserver1 ~]# ip addr add 172.25.254.200/32 dev lo

- On webserver2

[root@webserver2 ~]# ip addr add 172.25.254.100/32 dev lo

[root@webserver2 ~]# ip addr add 172.25.254.200/32 dev lo

2. Disable ARP responses on webserver1 and webserver2

- On webserver1 (temporary; the settings are lost after a reboot)

[root@webserver1 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@webserver1 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@webserver1 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[root@webserver1 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

- On webserver2 (temporary; the settings are lost after a reboot)

[root@webserver2 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@webserver2 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@webserver2 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[root@webserver2 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
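With arp_ignore set to 1, a host answers an ARP request only when the target address is configured on the interface that received the request, so the VIPs on lo are never answered for. With arp_announce set to 2, the host always picks the best local address as the ARP source instead of advertising a VIP. Because the echo commands above do not survive a reboot, one way to persist them is a sysctl drop-in file. The sketch below assumes a distribution that reads /etc/sysctl.d; the file name 90-lvs-dr-arp.conf and the helper write_arp_sysctl are made up for this example.

```shell
#!/bin/bash
# Sketch: write the four ARP settings to a sysctl drop-in so they survive a reboot.
# write_arp_sysctl and the file name used below are assumptions for illustration.
write_arp_sysctl() {
    local target="$1"
    cat > "$target" <<'EOF'
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
EOF
}

# On each web server, as root:
#   write_arp_sysctl /etc/sysctl.d/90-lvs-dr-arp.conf
#   sysctl --system
```

The VIPs added to lo with ip addr add are also temporary and need their own persistence mechanism, which this sketch does not cover.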

3. Edit the keepalived.conf configuration file

- On KA1

[root@KA1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

3066136553@qq.com

}

notification_email_from keepalived@timinglee.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ka1.timinglee.org

vrrp_skip_check_adv_addr

#vrrp_strict #this must be commented out, otherwise the keepalived service fails to start

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.18

}

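vrrp_instance VI_1 { #first virtual router group

state MASTER #master

interface eth0 #interface that carries the traffic

virtual_router_id 100 #must be identical on master and backup; hosts with the same id form one group

priority 100 #the node with the higher priority becomes master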

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { #the VIP shows up as the sub-interface eth0:1

172.25.254.100/24 dev eth0 label eth0:1

}

unicast_src_ip 172.25.254.10 #source of the unicast adverts: this node, the master and sender

unicast_peer {

172.25.254.20 #the backup, the receiver

}

}

vrrp_instance VI_2 { #second virtual router group

state BACKUP #backup

interface eth0

virtual_router_id 200

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.200/24 dev eth0 label eth0:2

}

unicast_src_ip 172.25.254.10

unicast_peer {

172.25.254.20

}

}

virtual_server 172.25.254.100 80 { #when this VIP is accessed

delay_loop 6

lb_algo wrr #weighted round-robin scheduling

lb_kind DR

protocol TCP

real_server 172.25.254.110 80 { #forward to this host

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

real_server 172.25.254.120 80 { #forward to this host

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

}

virtual_server 172.25.254.200 80 { #when port 80 of this VIP is accessed

delay_loop 6

lb_algo wrr #weighted round-robin scheduling

lb_kind DR

protocol TCP

real_server 172.25.254.110 80 { #forward to this host

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

real_server 172.25.254.120 80 { #forward to this host

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

}
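Each real_server above is watched by an HTTP_GET checker: keepalived requests path / and expects status 200, giving up on the connection after connect_timeout 3 seconds. When a real server misbehaves, it can help to run a roughly equivalent probe by hand. The sketch below assumes curl is installed on the KA node; http_check is a hypothetical helper name, and keepalived itself does not use curl.

```shell
#!/bin/bash
# Sketch: approximate keepalived's HTTP_GET check from the command line.
# http_check is a hypothetical helper; keepalived runs its own checker internally.
http_check() {
    local url="$1"
    local code
    # --connect-timeout 3 mirrors connect_timeout 3; -w prints only the status code.
    code="$(curl -s -o /dev/null --connect-timeout 3 -w '%{http_code}' "$url")"
    # Succeed only on status 200, matching status_code 200 in the config.
    [ "$code" = "200" ]
}

# Usage on KA1 or KA2:
#   http_check http://172.25.254.110/ && echo "webserver1 healthy"
#   http_check http://172.25.254.120/ && echo "webserver2 healthy"
```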

- On KA2

[root@KA2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

3066136553@qq.com

}

notification_email_from keepalived@timinglee.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ka2.timinglee.org

vrrp_skip_check_adv_addr

#vrrp_strict #this must be commented out, otherwise the keepalived service fails to start

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.18

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 100

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.100/24 dev eth0 label eth0:1

}

unicast_src_ip 172.25.254.20

unicast_peer {

172.25.254.10

}

}

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id 200

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.200/24 dev eth0 label eth0:2

}

unicast_src_ip 172.25.254.20

unicast_peer {

172.25.254.10

}

}

virtual_server 172.25.254.100 80 {

delay_loop 6

lb_algo wrr

lb_kind DR

protocol TCP

real_server 172.25.254.110 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

real_server 172.25.254.120 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

}

virtual_server 172.25.254.200 80 {

delay_loop 6

lb_algo wrr

lb_kind DR

protocol TCP

real_server 172.25.254.110 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

real_server 172.25.254.120 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 2

delay_before_retry 2

}

}

}
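The two virtual_server sections are identical on both nodes apart from the VIP, and each repeats the same real_server body. Generating them from one template is one way to avoid copy-and-paste slips. The sketch below prints sections in the same shape as the config above; gen_real_server and gen_virtual_server are hypothetical helpers written for this example, not tools shipped with keepalived.

```shell
#!/bin/bash
# Sketch: print virtual_server sections in the same shape as the config above.
# gen_real_server and gen_virtual_server are hypothetical helpers.
gen_real_server() {
    cat <<EOF
    real_server $1 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 2
        }
    }
EOF
}

gen_virtual_server() {
    local vip="$1" rip
    shift
    echo "virtual_server $vip 80 {"
    echo "    delay_loop 6"
    echo "    lb_algo wrr"
    echo "    lb_kind DR"
    echo "    protocol TCP"
    # One real_server block per remaining argument.
    for rip in "$@"; do
        gen_real_server "$rip"
    done
    echo "}"
}

# Print both sections; the output can be pasted into keepalived.conf on KA1 and KA2.
for vip in 172.25.254.100 172.25.254.200; do
    gen_virtual_server "$vip" 172.25.254.110 172.25.254.120
done
```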

4. Restart the lvs+keepalived services

[root@KA1 ~]# systemctl restart ipvsadm.service #the lvs service must be running

[root@KA1 ~]# systemctl restart keepalived.service

[root@KA2 ~]# systemctl restart ipvsadm.service

[root@KA2 ~]# systemctl restart keepalived.service

Tests

VIP test

[root@KA1 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.25.254.10 netmask 255.255.255.0 broadcast 172.25.254.255

inet6 fe80::4e21:e4b4:36e:6d14 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:a7:b6:fb txqueuelen 1000 (Ethernet)

RX packets 8373 bytes 2451524 (2.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6303 bytes 625002 (610.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.25.254.100 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:a7:b6:fb txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 56 bytes 4228 (4.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 56 bytes 4228 (4.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@KA2 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.25.254.20 netmask 255.255.255.0 broadcast 172.25.254.255

inet6 fe80::7baa:9520:639b:5e48 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:85:04:e5 txqueuelen 1000 (Ethernet)

RX packets 8714 bytes 7279852 (6.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4561 bytes 417141 (407.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.25.254.200 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:85:04:e5 txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 96 bytes 11546 (11.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 96 bytes 11546 (11.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Web server access test

- Access 172.25.254.100

- Access 172.25.254.200
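To see the scheduling at work, the VIPs can be requested several times in a row and the answers tallied. The sketch below assumes a separate client host with curl that can reach the VIPs; count_backends is a hypothetical helper name. Each test page is a single line naming its server, so counting identical lines shows how many requests each backend served.

```shell
#!/bin/bash
# Sketch: request a VIP several times and count which backend answered.
# count_backends is a hypothetical helper; it relies on the one-line test pages.
count_backends() {
    local vip="$1" n="${2:-10}" i
    for i in $(seq 1 "$n"); do
        curl -s "http://$vip/"
    done | sort | uniq -c
}

# From the client host:
#   count_backends 172.25.254.100 10
#   count_backends 172.25.254.200 10
```

With both real servers healthy and equal weights, the two counts should come out roughly equal.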

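High-availability test

- When KA1 goes down, its VIP moves to KA2

- The web service stays available

- When webserver1 goes down, keepalived detects it as well and lets webserver2 serve the requests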
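A failover can be rehearsed by stopping keepalived on one node and checking on the other whether the VIP has arrived. The sketch below assumes the iproute2 ip command; has_vip is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: report whether this node currently holds a given VIP.
# has_vip is a hypothetical helper built on `ip -o -4 addr show`.
has_vip() {
    local vip="$1"
    # Match "inet <vip>/" so that 172.25.254.10 does not match 172.25.254.100.
    ip -o -4 addr show | grep -qF "inet $vip/"
}

# On KA1, simulate a failure:
#   systemctl stop keepalived
# On KA2, both VIPs should now be present:
#   has_vip 172.25.254.100 && echo "172.25.254.100 is on this node"
#   has_vip 172.25.254.200 && echo "172.25.254.200 is on this node"
```

Starting keepalived on KA1 again should move 172.25.254.100 back, because KA1 has the higher priority for VI_1.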

haproxy+keepalived

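- This experiment runs haproxy with a dual-master keepalived pair.

- Because the setup is dual-master, two VIPs are needed: 172.25.254.100, for which KA1 is the master, and 172.25.254.200, for which KA2 is the master.

- haproxy proxies the requests itself instead of forwarding them in DR mode, so webserver1 and webserver2 do not need the VIPs here.

- Real IPs: KA1 172.25.254.10, KA2 172.25.254.20, webserver1 172.25.254.110, webserver2 172.25.254.120.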

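Preparation before the experiment

- Reset the environment from the experiment above and build a new one

1. Prepare the hosts

- Four hosts are used here: two act as web servers and two as keepalived servers, called KA for short

2. Install haproxy+keepalived on KA1 and KA2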

[root@KA1 ~]# yum install haproxy -y

[root@KA1 ~]# yum install keepalived -y

[root@KA2 ~]# yum install haproxy -y

[root@KA2 ~]# yum install keepalived -y

3. Install httpd on webserver1 and webserver2

[root@webserver1 ~]# yum install httpd -y

[root@webserver2 ~]# yum install httpd -y

4. Create the test web pages

[root@webserver1 ~]# echo webserver1-172.25.254.110 > /var/www/html/index.html

[root@webserver2 ~]# echo webserver2-172.25.254.120 > /var/www/html/index.html

5. Disable firewalld and selinux on all hosts

[root@KA1 ~]# systemctl is-active firewalld

inactive

[root@KA1 ~]# getenforce

Disabled

[root@KA2 ~]# systemctl is-active firewalld

inactive

[root@KA2 ~]# getenforce

Disabled

[root@webserver1 ~]# systemctl is-active firewalld

inactive

[root@webserver1 ~]# getenforce

Disabled

[root@webserver2 ~]# systemctl is-active firewalld

inactive

[root@webserver2 ~]# getenforce

Disabled

6. Start the httpd service

[root@webserver1 ~]# systemctl enable --now httpd

[root@webserver2 ~]# systemctl enable --now httpd

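Experiment steps

1. Enable the kernel parameter on both nodes, KA1 and KA2

- haproxy on each node binds to both VIPs, but a node normally holds only one of them. Setting net.ipv4.ip_nonlocal_bind=1 lets a process bind to an address that is not currently assigned to the host, so haproxy can start on both nodes.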

[root@KA1 ~]# vim /etc/sysctl.conf

net.ipv4.ip_nonlocal_bind=1

[root@KA1 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

[root@KA2 ~]# vim /etc/sysctl.conf

net.ipv4.ip_nonlocal_bind=1

[root@KA2 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

2. Configure the haproxy.cfg file

- On KA1, append the following to the end of haproxy.cfg

[root@KA1 ~]# vim /etc/haproxy/haproxy.cfg

listen webserver

bind 172.25.254.100:80,172.25.254.200:80

mode http

balance roundrobin

server web1 172.25.254.110:80 check inter 2s fall 3 rise 5

server web2 172.25.254.120:80 check inter 2s fall 3 rise 5

- On KA2, append the following to the end of haproxy.cfg

[root@KA2 ~]# vim /etc/haproxy/haproxy.cfg

listen webserver

bind 172.25.254.100:80,172.25.254.200:80

mode http

balance roundrobin

server web1 172.25.254.110:80 check inter 2s fall 3 rise 5

server web2 172.25.254.120:80 check inter 2s fall 3 rise 5

3. Write a script to check the state of haproxy

- On KA1

[root@KA1 ~]# vim /etc/keepalived/test.sh

#!/bin/bash

killall -0 haproxy

[root@KA1 ~]# chmod +x /etc/keepalived/test.sh

- On KA2

[root@KA2 ~]# vim /etc/keepalived/test.sh

#!/bin/bash

killall -0 haproxy

[root@KA2 ~]# chmod +x /etc/keepalived/test.sh
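The check script works because signal 0 is never delivered: the call only reports, through its exit status, whether a matching process exists and may be signalled. keepalived treats a non-zero exit status of the script as a failed check. The sketch below shows the same idea with the shell's built-in kill on a PID; pid_alive is a hypothetical helper, and a background sleep stands in for the daemon.

```shell
#!/bin/bash
# Sketch: signal 0 tests for a process without disturbing it.
# pid_alive is a hypothetical helper; the article's script uses `killall -0 haproxy`,
# which applies the same idea by process name.
pid_alive() {
    kill -0 "$1" 2>/dev/null
}

sleep 30 &                      # stand-in for a long-running daemon
pid=$!
pid_alive "$pid" && echo "process $pid is running"

kill "$pid"                     # stop the stand-in
wait "$pid" 2>/dev/null || true # reap it so the PID is really gone
pid_alive "$pid" || echo "process $pid is gone"
```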

4. Edit the keepalived.conf configuration file

- On KA1

[root@KA1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

3066136553@qq.com

}

notification_email_from keepalived@timinglee.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ka1.timinglee.org

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.18

}

vrrp_script check_haproxy { #add this block before the vrrp_instance blocks

script "/etc/keepalived/test.sh" #path to the check script

interval 1

weight -30 #lower the priority when haproxy is detected as down

fall 2

rise 2

timeout 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 100

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.100/24 dev eth0 label eth0:1

}

unicast_src_ip 172.25.254.10

unicast_peer {

172.25.254.20

}

track_script { #add this sub-block inside the vrrp_instance block

check_haproxy #the name must match the one in the vrrp_script block above

}

}

vrrp_instance VI_2 {

state BACKUP

interface eth0

virtual_router_id 200

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.200/24 dev eth0 label eth0:2

}

unicast_src_ip 172.25.254.10

unicast_peer {

172.25.254.20

}

track_script { #add this sub-block inside the vrrp_instance block

check_haproxy #the name must match the one in the vrrp_script block above

}

}

- On KA2

[root@KA2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

3066136553@qq.com

}

notification_email_from keepalived@timinglee.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ka2.timinglee.org

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.18

}

vrrp_script check_haproxy { #add this block before the vrrp_instance blocks

script "/etc/keepalived/test.sh" #path to the check script

interval 1

weight -30 #lower the priority when haproxy is detected as down

fall 2

rise 2

timeout 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 100

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.100/24 dev eth0 label eth0:1

}

unicast_src_ip 172.25.254.20

unicast_peer {

172.25.254.10

}

track_script { #add this sub-block inside the vrrp_instance block

check_haproxy #the name must match the one in the vrrp_script block above

}

}

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id 200

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.254.200/24 dev eth0 label eth0:2

}

unicast_src_ip 172.25.254.20

unicast_peer {

172.25.254.10

}

track_script { #add this sub-block inside the vrrp_instance block

check_haproxy #the name must match the one in the vrrp_script block above

}

}
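The value weight -30 is chosen in relation to the priorities 100 and 80. When the check script fails on the master, keepalived lowers that node's priority by 30, which puts it below the backup so the backup takes over the VIP. The drop must therefore be larger than the gap of 20 between the two priorities. The arithmetic can be sketched as follows; effective_priority is a hypothetical helper that models only the negative-weight case.

```shell
#!/bin/bash
# Sketch: effective VRRP priority when a tracked script with a negative weight fails.
# effective_priority is a hypothetical helper; it models only the negative-weight case.
effective_priority() {
    local base="$1" weight="$2" check="$3"   # check is "ok" or "fail"
    if [ "$check" = "fail" ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

# VI_1: KA1 is MASTER with priority 100, KA2 is BACKUP with priority 80.
ka1=$(effective_priority 100 -30 fail)   # haproxy is down on KA1
ka2=$(effective_priority 80 -30 ok)      # haproxy is healthy on KA2
echo "KA1=$ka1 KA2=$ka2"                 # prints "KA1=70 KA2=80"
if [ "$ka2" -gt "$ka1" ]; then
    echo "KA2 takes over 172.25.254.100"
fi
```

The same reasoning applies to VI_2 with the roles swapped: if haproxy fails on KA2, its priority drops from 100 to 70 and KA1, at 80, takes over 172.25.254.200.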

5. Restart haproxy+keepalived

[root@KA1 ~]# systemctl restart haproxy.service

[root@KA1 ~]# systemctl restart keepalived.service

[root@KA2 ~]# systemctl restart haproxy.service

[root@KA2 ~]# systemctl restart keepalived.service

Tests

VIP test

- On KA1 (holds 172.25.254.100 as eth0:1)

- On KA2 (holds 172.25.254.200 as eth0:2)

Web server access test

- Access vip1, 172.25.254.100

- Access vip2, 172.25.254.200

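High-availability test

- When KA1 goes down, its VIP moves to KA2

- When webserver1 goes down, the haproxy health check detects it automatically and webserver2 serves the requests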