What module does it propose?

What problem does it solve?

Figures, contributions, model architecture

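Figure 1

Figure 1: COUNTGD can use visual exemplars and text prompts together to produce highly accurate object counts (a). It also seamlessly supports counting with only a text query or only visual exemplars (b). Combining visual exemplars and text queries gives the open-world counting task extra flexibility. For example, a phrase can be used as the prompt (c), or extra constraints (the words "left" or "right") can be added to select a subset of the objects (d). The examples are taken from the FSC-147 [39] and CountBench [36] test sets. Visual exemplars are shown as yellow boxes. (d) shows confidence maps of the model's predictions, where higher color intensity indicates higher confidence.

Detailed explanation: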

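- COUNTGD: the proposed model for object counting. It accepts several kinds of prompts as input.
- Visual exemplars and text prompts: COUNTGD can take visual exemplars and text prompts as input. Visual exemplars are bounding boxes around a few example instances of the target object in the image. Text prompts are descriptions of the object. Using both together improves counting accuracy.
- Multi-modal queries: the model supports multi-modal input, meaning visual and textual information can be used together. This makes the counting task more flexible.
- Open-world counting: counting objects of arbitrary categories that are specified by the prompt at inference time, rather than a fixed set of classes seen during training.
- Phrases and constraints: the user can describe the target with a phrase, or add constraint words such as "left" or "right". The model then counts only the subset of objects that matches the instruction.
- FSC-147 and CountBench test sets: datasets used to evaluate COUNTGD. The example images in Figure 1 come from their test sets.
- Visualization of visual exemplars: in the figure, visual exemplars are drawn as yellow boxes.
- Confidence map: a visualization of how confident the model is in its predictions. Regions with higher color intensity are those where the model is more confident that a target object is present.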

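This caption illustrates how versatile and flexible COUNTGD is for object counting. It also shows how multi-modal input improves both counting accuracy and adaptability.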
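
The confidence map in panel (d) shows confidence for each region of the image. A detection-style counter can turn such predictions into a number by counting only the predictions it is confident about. The sketch below makes two assumptions: each predicted box carries a confidence score, and the count is the number of boxes whose score exceeds a threshold. The function name `count_objects`, the threshold of 0.3 and the scores are illustrative assumptions, not values taken from the paper.

```python
def count_objects(scores, threshold=0.3):
    """Count predictions whose confidence exceeds `threshold` (hypothetical default)."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie in [0, 1]")
    return sum(1 for s in scores if s > threshold)


# Illustrative per-box confidences (made up, not model output).
scores = [0.92, 0.85, 0.41, 0.28, 0.07]
print(count_objects(scores))        # 3
print(count_objects(scores, 0.5))   # 2
```

Lowering the threshold counts more low-confidence predictions, which gives higher recall but more false positives. Raising it does the opposite.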

Figure 2

Figure 3

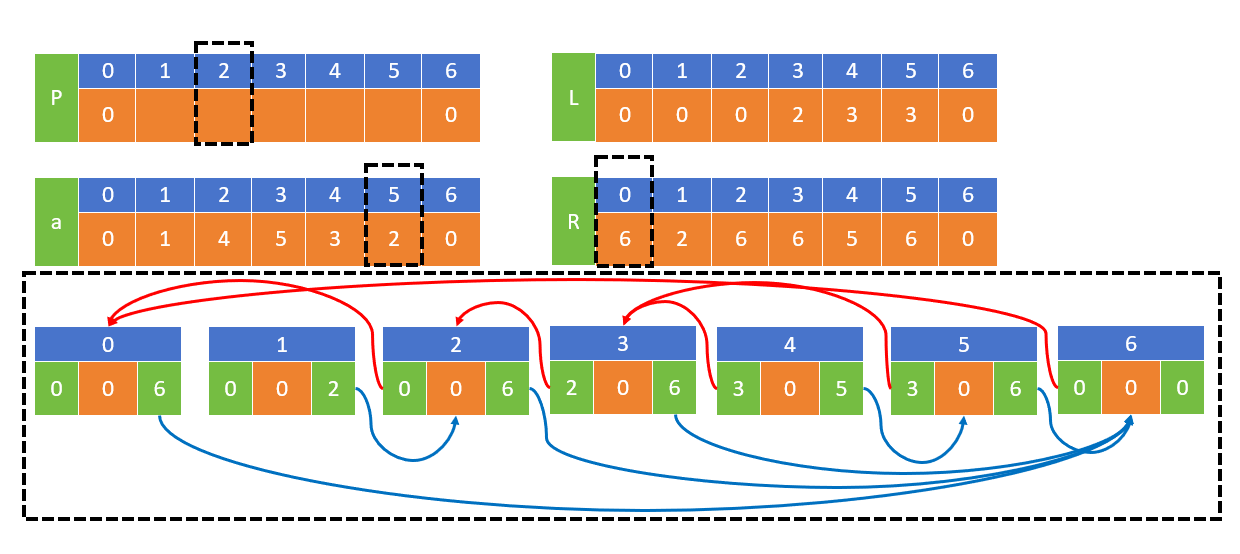

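Figure 3: Visual feature extraction pipeline for the image and the visual exemplars. (a) For the input image, a standard Swin Transformer model extracts visual feature maps at multiple spatial resolutions. (b) For the visual exemplars and their bounding boxes, the multi-scale feature maps of the input image are first upsampled to the same resolution. They are then concatenated and projected to 256 channels with a 1×1 convolution. Finally, we apply RoIAlign (Region of Interest Align), which uses the bounding box coordinates to pool features from these maps and so obtains the visual exemplar features.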

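This figure shows the pipeline COUNTGD uses to extract features for the image and for the visual exemplars.

- Part (a): the Swin Transformer produces feature maps of the input image at several resolutions (e.g. 1/32, 1/16 and 1/8 of the input size). Higher-resolution maps keep fine detail, while lower-resolution maps capture coarser, larger-scale structure. Together they can represent objects of different sizes.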
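
The exemplar branch of Figure 3(b) can be sketched with NumPy. This is a toy, non-authoritative version built on several assumptions:

- The feature maps are random stand-ins for Swin Transformer outputs at strides 8, 16 and 32, with made-up channel counts.
- Every scale is resized to the finest resolution with nearest-neighbour upsampling. The caption only says "the same resolution".
- The 1×1 convolution uses random weights.
- `roi_align` is a simplified RoIAlign that takes one bilinear sample at the centre of each bin of a 2×2 grid.

All helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def upsample_nearest(fmap, out_h, out_w):
    """Nearest-neighbour resize of a (C, H, W) map to (C, out_h, out_w)."""
    _, h, w = fmap.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return fmap[:, rows[:, None], cols[None, :]]


def conv1x1(x, weight, bias):
    """A 1x1 convolution is a per-pixel linear map: (C_in, H, W) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def bilinear(fmap, y, x):
    """Bilinearly sample a (C, H, W) map at continuous cell coordinates (y, x)."""
    _, h, w = fmap.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return ((1 - dy) * (1 - dx) * fmap[:, y0, x0] + (1 - dy) * dx * fmap[:, y0, x1]
            + dy * (1 - dx) * fmap[:, y1, x0] + dy * dx * fmap[:, y1, x1])


def roi_align(fmap, box, out_size=2, stride=1.0):
    """Simplified RoIAlign: one bilinear sample at the centre of each bin.
    box = (x1, y1, x2, y2) in image pixels; `stride` maps pixels to feature cells."""
    bx1, by1, bx2, by2 = (v / stride for v in box)
    bin_h, bin_w = (by2 - by1) / out_size, (bx2 - bx1) / out_size
    out = np.empty((fmap.shape[0], out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            out[:, i, j] = bilinear(fmap, by1 + (i + 0.5) * bin_h, bx1 + (j + 0.5) * bin_w)
    return out


# Stand-in multi-scale features for a 64x64 image (strides 8, 16, 32; assumed channels).
channels, strides = [32, 64, 128], [8, 16, 32]
feats = [rng.standard_normal((c, 64 // s, 64 // s)) for c, s in zip(channels, strides)]

# 1) Upsample every scale to the finest resolution (8x8 here) and concatenate channels.
target_h, target_w = feats[0].shape[1:]
stacked = np.concatenate([upsample_nearest(f, target_h, target_w) for f in feats], axis=0)

# 2) Project the concatenated channels to 256 with a 1x1 convolution (random weights).
weight = rng.standard_normal((256, stacked.shape[0])) * 0.02
projected = conv1x1(stacked, weight, np.zeros(256))

# 3) RoIAlign each exemplar box (image-pixel coordinates) on the projected map.
boxes = [(8.0, 8.0, 24.0, 24.0), (40.0, 16.0, 56.0, 40.0)]
exemplar_feats = [roi_align(projected, b, out_size=2, stride=8.0) for b in boxes]
print(projected.shape, exemplar_feats[0].shape)   # (256, 8, 8) (256, 2, 2)
```

A 1×1 convolution mixes channels independently at every pixel, so it can be written as a single `einsum`. RoIAlign's bilinear sampling avoids the coordinate rounding of older RoI pooling, so even small exemplar boxes map to precise feature locations.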

3.2 COUNTGD Architecture Components

Design choices and relation to GroundingDINO. We choose GroundingDINO [30] over other VLMs due to its pretraining on visual grounding data, providing it with more fine-grained features in comparison to other VLMs such as CLIP [17].

To extend GroundingDINO to accept visual exemplars, we cast them as text tokens. Because both the visual exemplars and the text specify the object, we posit that the visual exemplars can be treated in the same way as the text tokens by GroundingDINO and integrate them into the training and inference procedures as such. In treating the visual exemplars as additional text tokens within a phrase, we add self-attention between the phrase corresponding to the visual exemplar and the visual exemplar rather than keeping them separate. This allows COUNTGD to learn to fuse the visual exemplar and text tokens to form a more informative specification of the object to count. Similarly, cross-attention between the image and text features in GroundingDINO's feature enhancer and cross-modality decoder becomes cross-attention between the image and the fused visual exemplar and text features in COUNTGD. Language-guided query selection in GroundingDINO becomes language and visual exemplar-guided query selection in COUNTGD. In this way, COUNTGD naturally extends GroundingDINO to input both text and visual exemplars to describe the object.
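
The sketch below is a toy NumPy illustration of treating exemplars as extra tokens of a phrase. It makes the following simplifications:

- Embeddings are random.
- Learned query/key/value projections are replaced by identity projections.
- A phrase-level attention mask lets a token attend only to tokens that carry the same phrase id. Exemplar tokens inherit the id of the phrase they illustrate, so they fuse with that phrase's text tokens but not with other phrases.

The query-selection step mirrors GroundingDINO's language-guided query selection: image tokens are ranked by their maximum similarity to any prompt token, and the top k are kept. Here the exemplar tokens are included among the prompt tokens. The image tokens are random stand-ins for feature-enhancer outputs, and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 16  # toy embedding width (assumption)

# Prompt with two phrases: phrase 0 has two text tokens, phrase 1 has one.
text_tokens = rng.standard_normal((3, d))
text_phrase_ids = [0, 0, 1]
# Two visual exemplar embeddings (e.g. RoIAlign outputs) illustrating phrase 0.
exemplar_tokens = rng.standard_normal((2, d))
exemplar_phrase_ids = [0, 0]

# Exemplars are appended to the token sequence exactly like extra text tokens.
tokens = np.concatenate([text_tokens, exemplar_tokens], axis=0)
phrase_ids = np.array(text_phrase_ids + exemplar_phrase_ids)


def phrase_mask(ids):
    """mask[i, j] is True when token i may attend to token j (same phrase)."""
    return ids[:, None] == ids[None, :]


def masked_self_attention(x, mask):
    """Single-head attention with identity Q/K/V projections (simplification)."""
    scores = x @ x.T / np.sqrt(x.shape[1])
    scores = np.where(mask, scores, -np.inf)               # block cross-phrase attention
    scores = scores - scores.max(axis=1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ x, weights


def select_queries(image_tokens, prompt_tokens, k):
    """Keep the k image tokens whose best match to any prompt token is highest."""
    sim = image_tokens @ prompt_tokens.T   # (num_image_tokens, num_prompt_tokens)
    best = sim.max(axis=1)
    return np.argsort(-best)[:k]


fused, attn = masked_self_attention(tokens, phrase_mask(phrase_ids))
image_tokens = rng.standard_normal((50, d))   # stand-in for enhanced image features
top_k = select_queries(image_tokens, fused, k=5)

# Exemplar 0 (row 3) attends to phrase-0 text tokens and exemplars, never to token 2.
print(attn[3, 2], top_k.shape)   # 0.0 (5,)
```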

In GroundingDINO, the image encoder $f_{\theta_{\text{SwinT}}}$ is pre-trained together with the text encoder $f_{\theta_{\text{TT}}}$ on abundant detection and phrase grounding data, giving it rich region-aware and text-aware features. Since we wish to build on this pre-trained joint vision-language embedding, we keep the image encoder $f_{\theta_{\text{SwinT}}}$ and the text encoder $f_{\theta_{\text{TT}}}$ frozen.

4.4 Ablation Study

Uni-Modal vs. Multi-Modal Training. In Table 3, we compare COUNTGD’s performance using different training and inference procedures on FSC-147 [39]. Training on text only and testing with text only achieves performance comparable to state-of-the-art counting accuracy for text-only approaches, demonstrating the superiority of the GroundingDINO [30] architecture that we leverage. Training with visual exemplars only and testing with visual exemplars only results in state-of-the-art performance on two out of four of the metrics (mean absolute errors on both the validation and test sets) for visual exemplar-only approaches. This is surprising given that GroundingDINO was pretrained to relate text to images not visual exemplars to images. Despite this, COUNTGD performs remarkably well in this setting. Multi-modal training and testing with both visual exemplars and text beats both uni-modal approaches and sets a new state-of-the-art for open-world object counting. This ablation study shows that the visual exemplars provide more information than the text in FSC-147 as the performance with visual exemplars only is significantly better than the performance with text only. It also demonstrates that multi-modal training and inference is the superior strategy as it allows COUNTGD to take advantage of two sources of information about the object instead of one. In Table 4 in the Appendix, we additionally include an ablation study showing the influence of our proposed SAM Test-time normalization and adaptive cropping strategies.
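
Results on FSC-147 are conventionally reported as mean absolute error, $\text{MAE} = \frac{1}{N}\sum_i |\hat{y}_i - y_i|$, and root mean squared error, $\text{RMSE} = \sqrt{\frac{1}{N}\sum_i (\hat{y}_i - y_i)^2}$, on the validation and test splits. That would account for the four metrics mentioned above. A minimal sketch of both metrics over per-image counts follows; the counts are made up for illustration.

```python
import math


def mae(pred, gt):
    """Mean absolute error between predicted and ground-truth counts."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def rmse(pred, gt):
    """Root mean squared error between predicted and ground-truth counts."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))


pred = [10, 52, 7, 120]   # illustrative predicted counts
gt = [12, 50, 7, 110]     # illustrative ground-truth counts
print(mae(pred, gt))      # 3.5  (errors 2, 2, 0, 10)
print(rmse(pred, gt))     # sqrt(27), about 5.196
```

RMSE squares each error, so it weights the single large miss on the dense image much more heavily than MAE does. This is why the two metrics can rank methods differently.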

4.5 Language and Exemplar Interactions

Up to this point we have used the text and visual exemplar prompts to specify the target object in a complementary manner; for example, giving a visual exemplar of a 'strawberry' with the text 'strawberry'. We have seen that counting performance with prompts in both modalities is, in general, equal or superior to text alone. In this section we qualitatively investigate the case where the text refines or filters the visual information provided by the exemplars. For example, the visual exemplar is a car but the text specifies a color, so only cars of that color are counted.

In this study, unlike before, we freeze the feature enhancer in addition to the image and text encoders and finetune the rest of the model on FSC-147 [39]. We find that freezing the feature enhancer is necessary for many of these interactions to emerge. Once trained, the new model can use the text to filter instances picked out by the exemplar, and the exemplar can increase the confidence when it reinforces the text. In Figure 5 we show several examples of the interactions observed.
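
The two training regimes differ only in which parts of the model are frozen. The main experiments keep the image and text encoders frozen. The interaction study additionally freezes the feature enhancer. The sketch below records that split as plain data. The component names are hypothetical labels, not identifiers from the COUNTGD code, and treating the exemplar projection as trainable is an assumption. In PyTorch, freezing a component would typically be done with `module.requires_grad_(False)`.

```python
# Hypothetical component labels; only the frozen/trainable split follows the text.
COMPONENTS = [
    "image_encoder",           # Swin Transformer image encoder
    "text_encoder",            # GroundingDINO text encoder
    "exemplar_projection",     # 1x1 conv before RoIAlign (assumed trainable)
    "feature_enhancer",
    "cross_modality_decoder",
    "prediction_heads",
]

FROZEN = {
    # Main experiments: reuse the pre-trained joint vision-language embedding.
    "default": {"image_encoder", "text_encoder"},
    # Language and exemplar interaction study: the feature enhancer is frozen as well.
    "interaction_study": {"image_encoder", "text_encoder", "feature_enhancer"},
}


def trainable_components(regime):
    """Return the components that are fine-tuned on FSC-147 in a given regime."""
    frozen = FROZEN[regime]
    unknown = frozen - set(COMPONENTS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return [name for name in COMPONENTS if name not in frozen]


for regime in FROZEN:
    print(regime, trainable_components(regime))
```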

5 Conclusion & Future Work

We have extended the generality of open-world counting by introducing a model that can accept visual exemplars or text descriptions or both as prompts to specify the target object to count. The complementarity of these prompts in turn leads to improved counting performance. There are three research directions that naturally follow on from this work: (i) the performance could probably be further improved by training on larger scale datasets, for example using synthetic data as demonstrated recently for counting [24]; (ii) a larger training set would enable a thorough investigation of freezing more of the GroundingDINO model when adding our new visual exemplar modules; and finally, (iii) the model does not currently predict the errors of its counting. We discuss this point in the Limitations in the Appendix.
