LLM RAG Enterprise Project in Practice: the Chatdoc Intelligent Document Assistant (from scratch, beginner-friendly)


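Limitations of LLMs:

Limited knowledge (no vertical-domain or non-public knowledge; data-security constraints)

Stale knowledge (training cycles are long and expensive)

Hallucination (a problem that arises from the way the model generates text)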

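Approach: Retrieval-Augmented Generation (RAG)

Retrieval: fetch relevant context from an external knowledge source based on the user's request

Augmentation: fill the user query and the retrieved context into the prompt

Generation: feed the retrieval-augmented prompt to the LLM to produce the response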
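The augmentation step can be sketched in a few lines. This is a minimal illustration, not the template used by any particular library: the function name `build_augmented_prompt`, the prompt wording, and the sample strings are all assumptions made here.

```python
def build_augmented_prompt(question: str, contexts: list[str]) -> str:
    """Fill the user question and the retrieved context into one prompt string."""
    # Number each retrieved chunk so the model can tell them apart.
    context_block = "\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(contexts, start=1)
    )
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical sample data, for illustration only.
prompt = build_augmented_prompt(
    "What is the refund window?",
    ["Refunds are accepted within 30 days.", "Shipping takes 3 to 5 days."],
)
print(prompt)
```

The resulting string is what would be sent to the LLM in the generation step.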

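Step 1: Build the data index (Index)

1) Load documents from different sources and in different formats

2) Split the documents into chunks

3) Vectorize the chunks (embedding) and store them in a vector database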

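A complete RAG application has two main stages:

Data preparation: (A) data extraction -> (B) chunking -> (C) vectorization (embedding) -> (D) storing the data

Retrieval and generation: (1) vectorize the question -> (2) query for data matching the question -> (3) fetch the indexed data -> (4) inject the data into the prompt -> (5) the LLM generates the answer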
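The two stages can be traced end to end with a toy example. The sketch below assumes a bag-of-words count vector as a stand-in for a real embedding model and a plain Python list as a stand-in for a vector database; the names `embed`, `cosine`, and `retrieve` and the sample sentences are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Data preparation: (A) extract -> (B) chunk -> (C) vectorize -> (D) store
document = (
    "Paris is the capital of France. "
    "Tokyo is the capital of Japan. "
    "Python is a programming language."
)
chunks = [s.strip() for s in document.split(".") if s.strip()]
index = [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(question: str, k: int = 1) -> list[str]:
    # (1) vectorize the question, (2) score every stored chunk, (3) keep the top k
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


question = "What is the capital of Japan?"
top = retrieve(question)
# (4) inject the retrieved text into the prompt; step (5) would send it to an LLM
prompt = f"Context: {top[0]}\nQuestion: {question}"
print(prompt)
```

A real system replaces `embed` with a trained embedding model and the list with a vector store, but the order of operations is the same.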

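Hands-on: the Chatdoc intelligent document assistant

Read three common document formats: pdf, excel, and doc

Extract content intelligently from the document and output it in the corresponding format

1. Install the required packages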

pip install docx2txt

pip install pypdf

pip install nltk

pip install -U langchain-community

2. First test: load a docx file

# Import the required package
from langchain_community.document_loaders import Docx2txtLoader

# Define Chatdoc
class Chatdoc():
    @staticmethod
    def getFile():
        # Read the file
        loader = Docx2txtLoader('/kaggle/input/data-docxdata-docx/.docx')
        text = loader.load()
        return text

Chatdoc.getFile()

3. Second test: load a pdf document

from langchain_community.document_loaders import PyPDFLoader

# Define ChatPDF
class ChatPDF():
    @staticmethod
    def getFile():
        try:
            # Read the file
            loader = PyPDFLoader('/kaggle/input/data-pdf/-2022.pdf')
            text = loader.load()
            return text
        except Exception as e:
            print(f"Error loading files: {e}")
            return None

ChatPDF.getFile()

4. Third test: load an xlsx file

# If you see "Error loading files:No module named 'unstructured'", install the package first:
pip install unstructured

from langchain_community.document_loaders import UnstructuredExcelLoader

# Define Chatxls
class Chatxls():
    @staticmethod
    def getFile():
        try:
            # Read the file
            loader = UnstructuredExcelLoader('/kaggle/input/data-xls/TCGA.xlsx', mode='elements')
            text = loader.load()
            return text
        except Exception as e:
            print(f"Error loading files: {e}")
            return None

Chatxls.getFile()

5. Consolidate and optimize: load all three file formats dynamically

# Import the packages
from langchain_community.document_loaders import UnstructuredExcelLoader, PyPDFLoader, Docx2txtLoader

# Define ChatDoc
class ChatDoc():
    def getFile(self):
        doc = self.doc
        # Map each supported file extension to its loader class
        loaders = {
            "docx": Docx2txtLoader,
            "pdf": PyPDFLoader,
            "xlsx": UnstructuredExcelLoader,
        }
        file_extension = doc.split('.')[-1]  # Pick the loader from the file extension
        loader_class = loaders.get(file_extension)
        if loader_class:
            try:
                loader = loader_class(doc)
                text = loader.load()
                return text
            except Exception as e:
                print(f'Error loading {file_extension} files: {e}')
                return None
        else:
            print(f'Unsupported file extension: {file_extension}')
            return None

chat_doc = ChatDoc()
chat_doc.doc = '/kaggle/input/data-docxdata-docx/.docx'
chat_doc.getFile()
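The extension lookup in step 5 is case-sensitive, so a file named `report.PDF` would be rejected. One possible variation, sketched below with a hypothetical helper `pick_loader` and placeholder strings instead of the real loader classes, normalizes the extension with `os.path.splitext` and `lower()`.

```python
import os


def pick_loader(path: str, loaders: dict):
    """Return the loader registered for the file's extension, or None."""
    # splitext keeps only the last suffix; lower() makes '.PDF' match 'pdf'.
    extension = os.path.splitext(path)[1].lstrip(".").lower()
    return loaders.get(extension)


# Placeholder values standing in for Docx2txtLoader, PyPDFLoader
# and UnstructuredExcelLoader.
loaders = {"docx": "docx-loader", "pdf": "pdf-loader", "xlsx": "xlsx-loader"}

print(pick_loader("report.PDF", loaders))
print(pick_loader("notes.txt", loaders))
print(pick_loader("README", loaders))
```

One caveat: `os.path.splitext` treats a file name that starts with a dot and has no other dot, such as `.docx`, as having no extension, so this helper behaves differently from `split('.')[-1]` for such names.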

6. Add a splitter to split the text

# Import the packages
from langchain_community.document_loaders import UnstructuredExcelLoader, PyPDFLoader, Docx2txtLoader
from langchain.text_splitter import CharacterTextSplitter

# Define ChatDoc
class ChatDoc():
    def __init__(self):
        self.doc = None
        self.splitText = []

    def getFile(self):
        doc = self.doc
        loaders = {
            "docx": Docx2txtLoader,
            "pdf": PyPDFLoader,
            "xlsx": UnstructuredExcelLoader,
        }
        file_extension = doc.split('.')[-1]  # Pick the loader from the file extension
        loader_class = loaders.get(file_extension)
        if loader_class:
            try:
                loader = loader_class(doc)
                text = loader.load()
                return text
            except Exception as e:
                print(f'Error loading {file_extension} files: {e}')
                return None
        else:
            print(f'Unsupported file extension: {file_extension}')
            return None

    def splitsentences(self):
        full_text = self.getFile()  # Get the document content
        if full_text is not None:
            # Split the document: chunk_size sets the maximum number of characters per chunk,
            # and chunk_overlap sets the number of characters shared by adjacent chunks
            text_splitter = CharacterTextSplitter(chunk_size=200, chunk_overlap=20)
            self.splitText = text_splitter.split_documents(full_text)

chat_doc = ChatDoc()
chat_doc.doc = '/kaggle/input/data-docxdata-docx/.docx'
chat_doc.splitsentences()
print(chat_doc.splitText)
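To make the roles of `chunk_size` and `chunk_overlap` concrete, here is a simplified sliding-window splitter. It is an illustration only: `CharacterTextSplitter` first splits on a separator and then merges the pieces, so its output will generally differ, and the function name `chunk_text` is hypothetical.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of at most chunk_size characters,
    where adjacent windows share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


pieces = chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1)
print(pieces)  # ['abcd', 'defg', 'ghij']
```

Each chunk repeats the last character of the previous one, which is the overlap that keeps a sentence cut at a boundary partly visible in both chunks.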

7. Vectorize and store the index

# Import the packages
# (HuggingFaceEmbeddings needs the sentence-transformers package; FAISS needs faiss-cpu or faiss-gpu)
from langchain_community.document_loaders import UnstructuredExcelLoader, PyPDFLoader, Docx2txtLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS

# Swap in pysqlite3 for the standard sqlite3 module (requires the pysqlite3 package to be installed)
__import__('pysqlite3')
import sys
sys.modules['sqlite3'] = sys.modules.pop('pysqlite3')

# Define ChatDoc
class ChatDoc():
    def __init__(self):
        self.doc = None
        self.splitText = []

    # Load the text
    def getFile(self):
        doc = self.doc
        loaders = {
            "docx": Docx2txtLoader,
            "pdf": PyPDFLoader,
            "xlsx": UnstructuredExcelLoader,
        }
        file_extension = doc.split('.')[-1]  # Pick the loader from the file extension
        loader_class = loaders.get(file_extension)
        if loader_class:
            try:
                loader = loader_class(doc)
                text = loader.load()
                return text
            except Exception as e:
                print(f'Error loading {file_extension} files: {e}')
                return None
        else:
            print(f'Unsupported file extension: {file_extension}')
            return None

    # Split the text
    def splitsentences(self):
        full_text = self.getFile()  # Get the document content
        if full_text is not None:
            # Split the document: chunk_size sets the maximum number of characters per chunk,
            # and chunk_overlap sets the number of characters shared by adjacent chunks
            text_splitter = CharacterTextSplitter(chunk_size=200, chunk_overlap=20)
            self.splitText = text_splitter.split_documents(full_text)

    # Vectorize the chunks and store the vectors
    def embeddingAndVectorDB(self):
        embeddings = HuggingFaceEmbeddings()
        db = FAISS.from_documents(documents=self.splitText, embedding=embeddings)
        return db

chat_doc = ChatDoc()
chat_doc.doc = '/kaggle/input/data-docxdata-docx/.docx'
chat_doc.splitsentences()
chat_doc.embeddingAndVectorDB()

<langchain_community.vectorstores.faiss.FAISS at 0x7b0d79747160>

8. Query the index and find relevant chunks with a natural-language question

# Import the packages
from langchain_community.document_loaders import UnstructuredExcelLoader, PyPDFLoader, Docx2txtLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS

# Swap in pysqlite3 for the standard sqlite3 module (requires the pysqlite3 package to be installed)
__import__('pysqlite3')
import sys
sys.modules['sqlite3'] = sys.modules.pop('pysqlite3')

# Define ChatDoc
class ChatDoc():
    def __init__(self):
        self.doc = None
        self.splitText = []

    # Load the text
    def getFile(self):
        doc = self.doc
        loaders = {
            "docx": Docx2txtLoader,
            "pdf": PyPDFLoader,
            "xlsx": UnstructuredExcelLoader,
        }
        file_extension = doc.split('.')[-1]  # Pick the loader from the file extension
        loader_class = loaders.get(file_extension)
        if loader_class:
            try:
                loader = loader_class(doc)
                text = loader.load()
                return text
            except Exception as e:
                print(f'Error loading {file_extension} files: {e}')
                return None
        else:
            print(f'Unsupported file extension: {file_extension}')
            return None

    # Split the text
    def splitsentences(self):
        full_text = self.getFile()  # Get the document content
        if full_text is not None:
            # Split the document: chunk_size sets the maximum number of characters per chunk,
            # and chunk_overlap sets the number of characters shared by adjacent chunks
            text_splitter = CharacterTextSplitter(chunk_size=200, chunk_overlap=20)
            self.splitText = text_splitter.split_documents(full_text)

    # Vectorize the chunks and store the vectors
    def embeddingAndVectorDB(self):
        embeddings = HuggingFaceEmbeddings()
        db = FAISS.from_documents(documents=self.splitText, embedding=embeddings)
        return db

    # Ask a question and find the relevant chunks
    def askAndFindFiles(self, question):
        db = self.embeddingAndVectorDB()
        # Expose the vector store as a retriever
        retriever = db.as_retriever()
        results = retriever.invoke(question)
        return results

chat_doc = ChatDoc()
chat_doc.doc = '/kaggle/input/data-docxdata-docx/.docx'
chat_doc.splitsentences()
chat_doc.askAndFindFiles("这篇文章的题目是什么?")  # "What is the title of this article?"

This approach finds some relevant chunks, but it is not precise enough. The next step uses multi-query retrieval to improve retrieval precision.

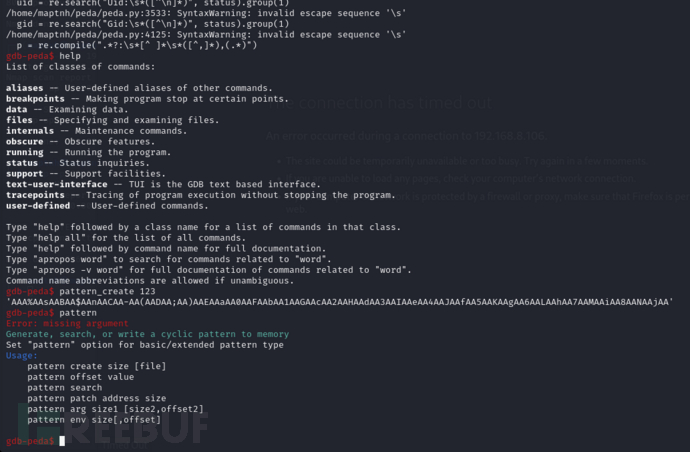
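Conceptually, the retriever in step 8 embeds the question and returns the stored chunks whose vectors lie closest to it. The sketch below shows that idea as a brute-force search, assuming Euclidean distance as the metric and using hand-written 2-D vectors in place of real embeddings; the class `TinyVectorStore` is hypothetical and does not reflect how FAISS is implemented.

```python
import math


class TinyVectorStore:
    """In-memory store that ranks entries by Euclidean (L2) distance."""

    def __init__(self):
        self._items = []  # list of (vector, text) pairs

    def add(self, vector, text):
        self._items.append((list(vector), text))

    def search(self, query, k=1):
        # Brute force: measure the distance to every stored vector, keep the k closest.
        ranked = sorted(self._items, key=lambda item: math.dist(query, item[0]))
        return [text for _, text in ranked[:k]]


store = TinyVectorStore()
store.add([1.0, 0.0], "chunk about cats")
store.add([0.0, 1.0], "chunk about dogs")
store.add([0.9, 0.1], "chunk about kittens")

print(store.search([1.0, 0.05], k=2))  # ['chunk about cats', 'chunk about kittens']
```

Because the ranking depends on a single query vector, a question phrased differently from the source text can miss relevant chunks, which is the weakness multi-query retrieval targets.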

9. Use multi-query retrieval to improve document retrieval precision

# Import the packages
import os
from langchain_community.document_loaders import UnstructuredExcelLoader, PyPDFLoader, Docx2txtLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS

# Import the multi-query retriever and the LLM
from langchain.retrievers.multi_query import MultiQueryRetriever
from langchain_community.chat_models import ChatAnthropic

# Swap in pysqlite3 for the standard sqlite3 module (requires the pysqlite3 package to be installed)
__import__('pysqlite3')
import sys
sys.modules['sqlite3'] = sys.modules.pop('pysqlite3')

# Define ChatDoc
class ChatDoc():
    def __init__(self):
        self.doc = None
        self.splitText = []

    # Load the text
    def getFile(self):
        doc = self.doc
        loaders = {
            "docx": Docx2txtLoader,
            "pdf": PyPDFLoader,
            "xlsx": UnstructuredExcelLoader,
        }
        file_extension = doc.split('.')[-1]  # Pick the loader from the file extension
        loader_class = loaders.get(file_extension)
        if loader_class:
            try:
                loader = loader_class(doc)
                text = loader.load()
                return text
            except Exception as e:
                print(f'Error loading {file_extension} files: {e}')
                return None
        else:
            print(f'Unsupported file extension: {file_extension}')
            return None

    # Split the text
    def splitsentences(self):
        full_text = self.getFile()  # Get the document content
        if full_text is not None:
            # Split the document: chunk_size sets the maximum number of characters per chunk,
            # and chunk_overlap sets the number of characters shared by adjacent chunks
            text_splitter = CharacterTextSplitter(chunk_size=200, chunk_overlap=20)
            self.splitText = text_splitter.split_documents(full_text)

    # Vectorize the chunks and store the vectors
    def embeddingAndVectorDB(self):
        embeddings = HuggingFaceEmbeddings()
        db = FAISS.from_documents(documents=self.splitText, embedding=embeddings)
        return db

    # Ask a question and find the relevant chunks
    async def askAndFindFiles(self, question):
        db = self.embeddingAndVectorDB()
        # Hand the question to the LLM so it can rephrase it from several angles.
        # The API key is read from the ANTHROPIC_API_KEY environment variable; never hard-code it.
        llm = ChatAnthropic(anthropic_api_key=os.environ["ANTHROPIC_API_KEY"])
        retriever_from_llm = MultiQueryRetriever.from_llm(
            retriever=db.as_retriever(),
            llm=llm
        )
        return await retriever_from_llm.ainvoke(question)

chat_doc = ChatDoc()
chat_doc.doc = '/kaggle/input/data-docxdata-docx/.docx'
chat_doc.splitsentences()
# Top-level await works in a notebook; in a plain script, wrap the call in asyncio.run(...)
unique_doc = await chat_doc.askAndFindFiles("文章的题目是什么?")  # "What is the title of the article?"
print(unique_doc)
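The idea behind multi-query retrieval can be shown without an LLM or a vector store. In the sketch below, `fake_variants` stands in for the LLM that rephrases the question and `fake_retrieve` stands in for the vector-store retriever; both are hypothetical, as is the choice to search with the original question as well. The merge step keeps each chunk once, in the order it was first found.

```python
def multi_query_retrieve(question, generate_variants, retrieve):
    """Run retrieval for several phrasings of one question and merge the unique hits."""
    seen = set()
    merged = []
    for query in [question, *generate_variants(question)]:
        for doc in retrieve(query):
            if doc not in seen:  # keep the first occurrence, preserve order
                seen.add(doc)
                merged.append(doc)
    return merged


# Hypothetical stand-ins: a real system would ask an LLM for the variants
# and query a vector store for each one.
def fake_variants(question):
    return ["document title", "heading of the article"]


def fake_retrieve(query):
    corpus = {
        "What is the title?": ["chunk A"],
        "document title": ["chunk A", "chunk B"],
        "heading of the article": ["chunk C", "chunk B"],
    }
    return corpus.get(query, [])


print(multi_query_retrieve("What is the title?", fake_variants, fake_retrieve))
# ['chunk A', 'chunk B', 'chunk C']
```

The original question alone finds only `chunk A`; the rephrasings surface two more chunks, which is the gain in recall that step 9 is after.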