backbone

CCFF

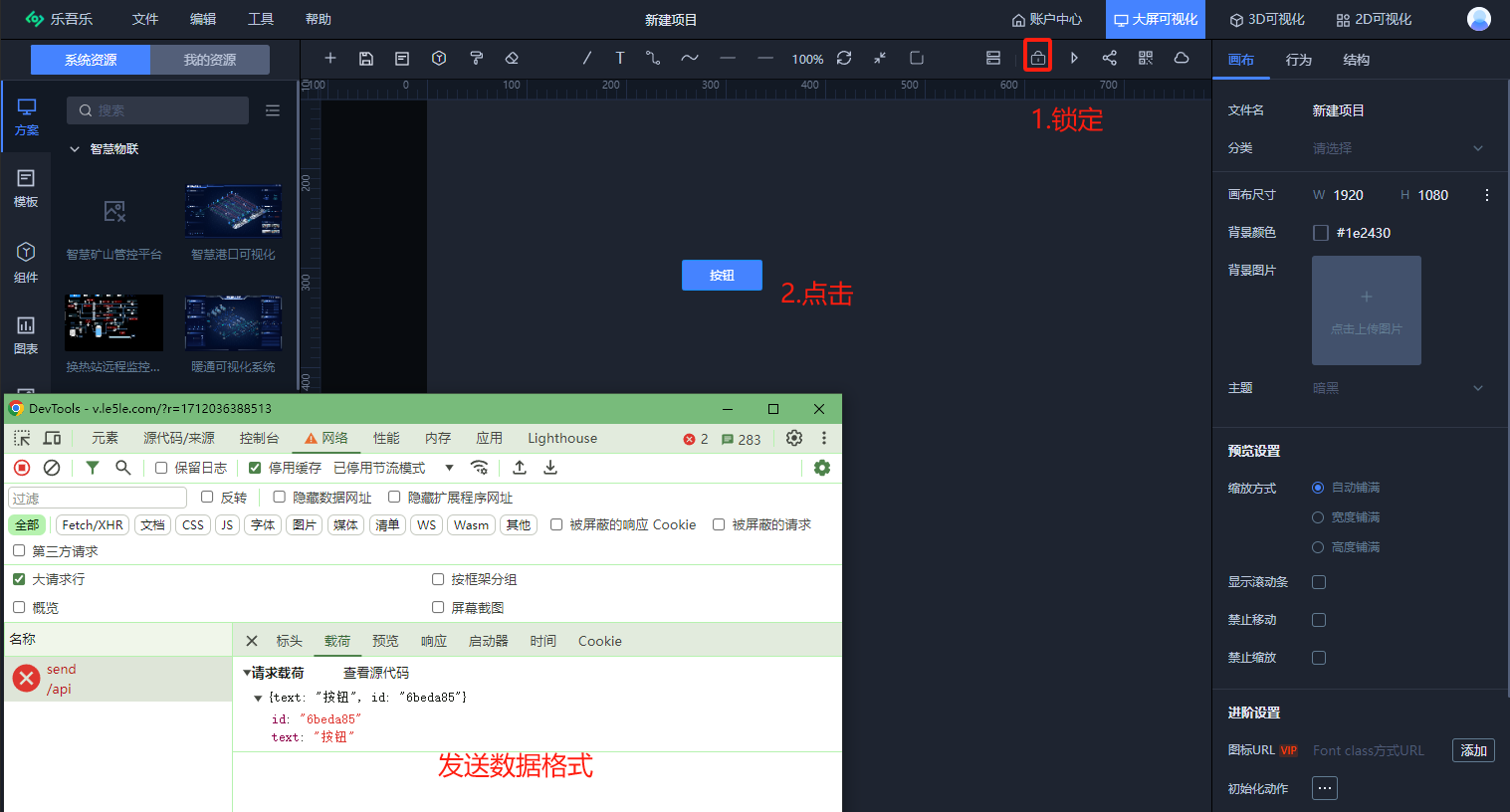

How the layers are wired together is not yet known; so far only the individual layers are known.

backbone

- backbone.backbone.conv1.weight torch.Size([64, 3, 7, 7])

- backbone.backbone.layer1.0.conv1.weight torch.Size([64, 64, 1, 1])

- backbone.backbone.layer1.0.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.0.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.0.downsample.0.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.1.conv1.weight torch.Size([64, 256, 1, 1])

- backbone.backbone.layer1.1.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.1.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.2.conv1.weight torch.Size([64, 256, 1, 1])

- backbone.backbone.layer1.2.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.2.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer2.0.conv1.weight torch.Size([128, 256, 1, 1])

- backbone.backbone.layer2.0.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.0.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.0.downsample.0.weight torch.Size([512, 256, 1, 1])

- backbone.backbone.layer2.1.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.1.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.1.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.2.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.2.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.2.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.3.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.3.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.3.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer3.0.conv1.weight torch.Size([256, 512, 1, 1])

- backbone.backbone.layer3.0.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.0.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.0.downsample.0.weight torch.Size([1024, 512, 1, 1])

- backbone.backbone.layer3.1.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.1.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.1.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.2.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.2.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.2.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.3.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.3.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.3.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.4.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.4.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.4.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.5.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.5.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.5.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer4.0.conv1.weight torch.Size([512, 1024, 1, 1])

- backbone.backbone.layer4.0.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.0.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.layer4.0.downsample.0.weight torch.Size([2048, 1024, 1, 1])

- backbone.backbone.layer4.1.conv1.weight torch.Size([512, 2048, 1, 1])

- backbone.backbone.layer4.1.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.1.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.layer4.2.conv1.weight torch.Size([512, 2048, 1, 1])

- backbone.backbone.layer4.2.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.2.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.fc.weight torch.Size([1000, 2048])

- backbone.backbone.fc.bias torch.Size([1000])
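The last `conv3` in layer2, layer3 and layer4 outputs 512, 1024 and 2048 channels, and these add up to the 3584 channels that appear in the `ccff` and `input_proj` weights. The dump does not say how the layers are connected, so the following is only a quick check of that arithmetic, assuming the three stage outputs are resized to a common resolution and concatenated along the channel axis:

```python
# Output channels of the last bottleneck conv3 in each ResNet stage,
# copied from the weight shapes listed above.
stage_out_channels = {
    "layer1": 256,
    "layer2": 512,
    "layer3": 1024,
    "layer4": 2048,
}

# Assumption: layer2..layer4 features are concatenated channel-wise.
fused = ["layer2", "layer3", "layer4"]
fused_channels = sum(stage_out_channels[name] for name in fused)

print(fused_channels)  # 3584
```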

ccff

- ccff.conv1.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.conv1.norm.weight torch.Size([3584])

- ccff.conv1.norm.bias torch.Size([3584])

- ccff.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.conv2.norm.weight torch.Size([3584])

- ccff.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.0.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.0.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.0.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.0.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.0.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.0.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.1.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.1.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.1.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.1.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.1.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.1.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.2.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.2.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.2.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.2.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.2.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.2.conv2.norm.bias torch.Size([3584])

input_proj

- input_proj.weight torch.Size([256, 3584, 1, 1])

- input_proj.bias torch.Size([256])

encoder

- encoder.layers.0.norm1.weight torch.Size([256])

- encoder.layers.0.norm1.bias torch.Size([256])

- encoder.layers.0.norm2.weight torch.Size([256])

- encoder.layers.0.norm2.bias torch.Size([256])

- encoder.layers.0.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.0.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.0.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.0.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.0.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.0.mlp.linear1.bias torch.Size([2048])

- encoder.layers.0.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.0.mlp.linear2.bias torch.Size([256])

- encoder.layers.1.norm1.weight torch.Size([256])

- encoder.layers.1.norm1.bias torch.Size([256])

- encoder.layers.1.norm2.weight torch.Size([256])

- encoder.layers.1.norm2.bias torch.Size([256])

- encoder.layers.1.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.1.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.1.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.1.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.1.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.1.mlp.linear1.bias torch.Size([2048])

- encoder.layers.1.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.1.mlp.linear2.bias torch.Size([256])

- encoder.layers.2.norm1.weight torch.Size([256])

- encoder.layers.2.norm1.bias torch.Size([256])

- encoder.layers.2.norm2.weight torch.Size([256])

- encoder.layers.2.norm2.bias torch.Size([256])

- encoder.layers.2.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.2.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.2.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.2.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.2.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.2.mlp.linear1.bias torch.Size([2048])

- encoder.layers.2.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.2.mlp.linear2.bias torch.Size([256])

- encoder.norm.weight torch.Size([256])

- encoder.norm.bias torch.Size([256])
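These shapes follow the usual transformer layout with embedding width 256: `in_proj_weight` stacks the query, key and value projections (3 × 256 = 768 rows), and the MLP expands to 2048 and back. A small sketch that tallies the encoder parameters from the listed shapes only; the helper name `numel` is made up for this note:

```python
from math import prod

def numel(*shapes):
    """Total element count over a list of tensor shapes."""
    return sum(prod(s) for s in shapes)

d, ffn = 256, 2048

# Shapes of one encoder layer, as listed above.
layer_shapes = [
    (d,), (d,),               # norm1 weight, bias
    (d,), (d,),               # norm2 weight, bias
    (3 * d, d), (3 * d,),     # self_attn in_proj (q, k, v stacked)
    (d, d), (d,),             # self_attn out_proj
    (ffn, d), (ffn,),         # mlp.linear1
    (d, ffn), (d,),           # mlp.linear2
]

per_layer = numel(*layer_shapes)
encoder_total = 3 * per_layer + numel((d,), (d,))  # 3 layers + final norm

print(per_layer, encoder_total)  # 1315072 3945728
```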

ope

- ope.iterative_adaptation.layers.0.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.0.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.0.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.0.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.0.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.0.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.0.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.0.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.1.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.1.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.1.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.1.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.1.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.1.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.1.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.2.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.2.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.2.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.2.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.2.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.2.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.2.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.norm.weight torch.Size([256])

- ope.iterative_adaptation.norm.bias torch.Size([256])

ope.shape_or_objectness

- ope.shape_or_objectness.0.weight torch.Size([64, 2])

- ope.shape_or_objectness.0.bias torch.Size([64])

- ope.shape_or_objectness.2.weight torch.Size([256, 64])

- ope.shape_or_objectness.2.bias torch.Size([256])

- ope.shape_or_objectness.4.weight torch.Size([2304, 256])

- ope.shape_or_objectness.4.bias torch.Size([2304])
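The three linear layers map 2 → 64 → 256 → 2304. The gaps in the indices (0, 2, 4) suggest parameter-free modules, such as activations, at positions 1 and 3. The output width 2304 factors as 3 × 3 × 256, which would fit one 256-dimensional embedding per cell of a 3 × 3 grid. Both readings are inferences from the shapes, not something the dump states. The arithmetic:

```python
from math import isqrt

emb_dim = 256
out_features = 2304          # rows of ope.shape_or_objectness.4.weight

cells, remainder = divmod(out_features, emb_dim)
grid_side = isqrt(cells)

print(cells, remainder, grid_side)  # 9 0 3

# Parameter count of the three linear layers (weight + bias each).
mlp_dims = [2, 64, 256, 2304]
mlp_params = sum(i * o + o for i, o in zip(mlp_dims, mlp_dims[1:]))
print(mlp_params)  # 608960
```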

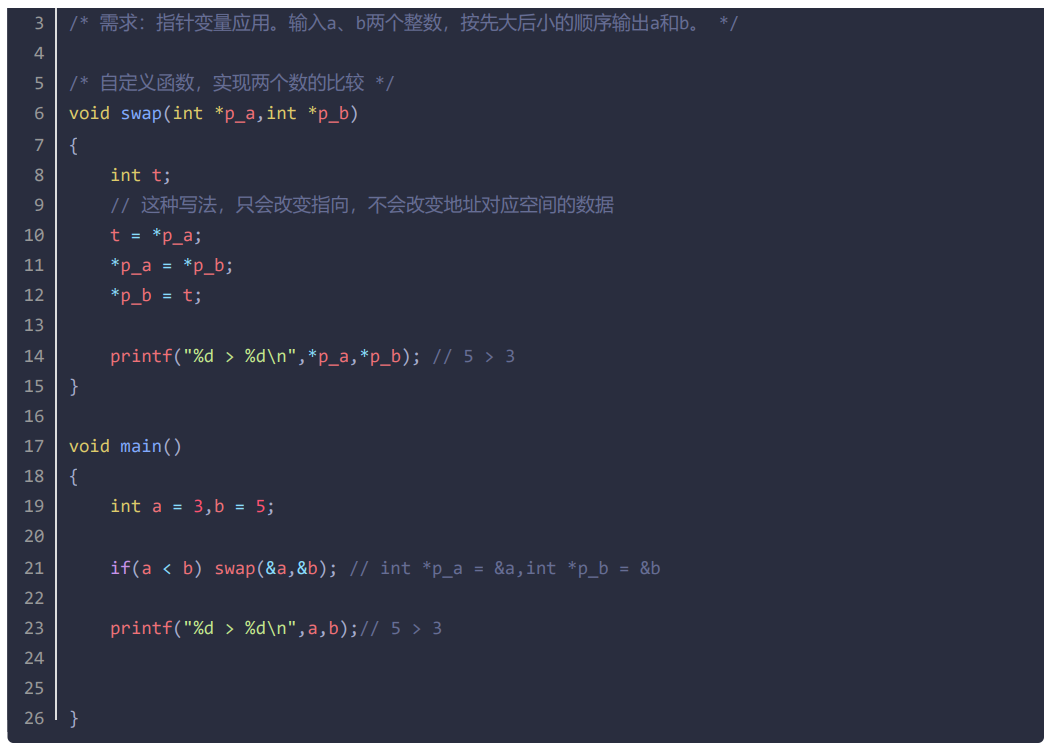

Regression head

- regression_head.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- regression_head.regressor.0.layer.0.bias torch.Size([128])

- regression_head.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- regression_head.regressor.1.layer.0.bias torch.Size([64])

- regression_head.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- regression_head.regressor.2.layer.0.bias torch.Size([32])

- regression_head.regressor.3.weight torch.Size([1, 32, 1, 1])

- regression_head.regressor.3.bias torch.Size([1])

Auxiliary heads

- aux_heads.0.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- aux_heads.0.regressor.0.layer.0.bias torch.Size([128])

- aux_heads.0.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- aux_heads.0.regressor.1.layer.0.bias torch.Size([64])

- aux_heads.0.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- aux_heads.0.regressor.2.layer.0.bias torch.Size([32])

- aux_heads.0.regressor.3.weight torch.Size([1, 32, 1, 1])

- aux_heads.0.regressor.3.bias torch.Size([1])

- aux_heads.1.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- aux_heads.1.regressor.0.layer.0.bias torch.Size([128])

- aux_heads.1.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- aux_heads.1.regressor.1.layer.0.bias torch.Size([64])

- aux_heads.1.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- aux_heads.1.regressor.2.layer.0.bias torch.Size([32])

- aux_heads.1.regressor.3.weight torch.Size([1, 32, 1, 1])

- aux_heads.1.regressor.3.bias torch.Size([1])
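The main regression head and the two auxiliary heads have identical shapes: three 3 × 3 convolutions narrowing 256 → 128 → 64 → 32, followed by a 1 × 1 convolution to a single output channel. A short tally of the parameters per head, using only the shapes listed above (the helper `conv_params` is introduced here for illustration):

```python
# (out_channels, in_channels, kernel_h, kernel_w) for each conv in one head.
conv_shapes = [
    (128, 256, 3, 3),
    (64, 128, 3, 3),
    (32, 64, 3, 3),
    (1, 32, 1, 1),
]

def conv_params(out_c, in_c, kh, kw):
    # weight elements plus one bias per output channel
    return out_c * in_c * kh * kw + out_c

per_head = sum(conv_params(*s) for s in conv_shapes)
all_heads = 3 * per_head  # regression_head + aux_heads.0 + aux_heads.1

print(per_head, all_heads)  # 387329 1161987
```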

Total number of parameters in LOCA: 447974251

Total number of parameters in CCFF: 411099136 (this module alone has a very large parameter count)
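The CCFF figure can be reproduced from the shapes listed in the ccff section. Every convolution there keeps 3584 channels in and out, so the three 3 × 3 bottleneck convolutions alone account for about 347 million weights. A quick check, assuming the convolutions carry no bias (none is listed) and counting each norm as one weight and one bias vector:

```python
C = 3584  # channel width used throughout ccff

def conv_norm(kernel):
    # conv weight (no bias listed) + norm weight + norm bias
    return C * C * kernel * kernel + 2 * C

ccff_params = (
    2 * conv_norm(1)                     # ccff.conv1, ccff.conv2
    + 3 * (conv_norm(3) + conv_norm(1))  # bottlenecks 0..2: conv1 (3x3), conv2 (1x1)
)

total_params = 447_974_251  # reported total for LOCA
print(ccff_params)                           # 411099136
print(round(ccff_params / total_params, 3))  # 0.918
```

Because the count scales with the square of the channel width, the 3584-channel width is what makes this module dominate the total.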