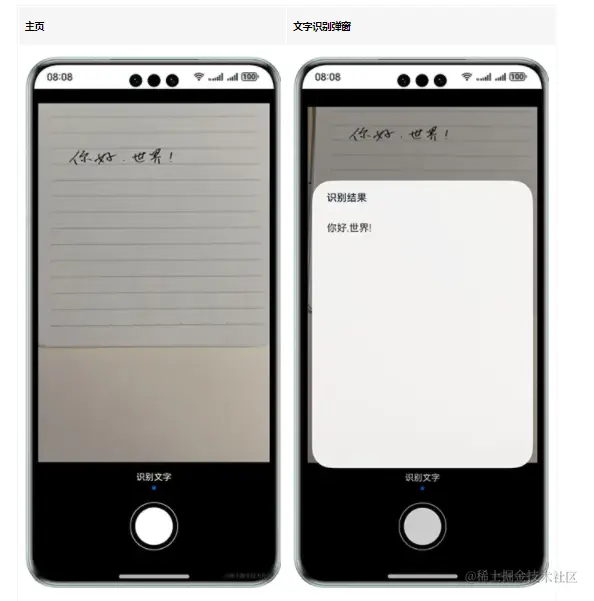

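Recognizing Text in Photos

Introduction

This sample uses the @ohos.multimedia.camera (camera management) and textRecognition (text recognition) APIs to recognize and extract the text in a photo.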

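Preview

Usage

1. Tap the round text recognition icon at the bottom of the screen. A dialog pops up showing the text recognized in the current photo.

2. Tap any blank area outside the dialog to close it and return to the home page.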

Implementation

- The AI text recognition feature of this sample is mainly encapsulated in CameraModel. For the source code, see [CameraModel.ets].

```typescript
/*
 * Copyright (c) 2023 Huawei Device Co., Ltd.
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *     http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

import { BusinessError } from '@kit.BasicServicesKit';
import { camera } from '@kit.CameraKit';
import { common } from '@kit.AbilityKit';
import { image } from '@kit.ImageKit';
import { textRecognition } from '@kit.CoreVisionKit';
import Logger from './Logger';
import CommonConstants from '../constants/CommonConstants';

const TAG: string = '[CameraModel]';

export default class Camera {
  private cameraMgr: camera.CameraManager | undefined = undefined;
  private cameraDevice: camera.CameraDevice | undefined = undefined;
  private capability: camera.CameraOutputCapability | undefined = undefined;
  private cameraInput: camera.CameraInput | undefined = undefined;
  public previewOutput: camera.PreviewOutput | undefined = undefined;
  private receiver: image.ImageReceiver | undefined = undefined;
  private photoSurfaceId: string | undefined = undefined;
  private photoOutput: camera.PhotoOutput | undefined = undefined;
  public captureSession: camera.PhotoSession | undefined = undefined;
  public result: string = '';
  private imgReceive: Function | undefined = undefined;

  // Opens the camera, creates the preview and photo outputs, and starts a photo session.
  async initCamera(surfaceId: string): Promise<void> {
    this.cameraMgr = camera.getCameraManager(getContext(this) as common.UIAbilityContext);
    let cameraArray = this.getCameraDevices(this.cameraMgr);
    if (cameraArray.length === 0) {
      // No camera available; getCameraDevices has already logged the error.
      return;
    }
    this.cameraDevice = cameraArray[CommonConstants.INPUT_DEVICE_INDEX];
    this.cameraInput = this.getCameraInput(this.cameraDevice, this.cameraMgr) as camera.CameraInput;
    await this.cameraInput.open();
    this.capability = this.cameraMgr.getSupportedOutputCapability(this.cameraDevice, camera.SceneMode.NORMAL_PHOTO);
    this.previewOutput = this.getPreviewOutput(this.cameraMgr, this.capability, surfaceId) as camera.PreviewOutput;
    this.photoOutput = this.getPhotoOutput(this.cameraMgr, this.capability) as camera.PhotoOutput;
    // Run text recognition on each captured photo as soon as it becomes available.
    this.photoOutput.on('photoAvailable', (errCode: BusinessError, photo: camera.Photo): void => {
      if (errCode || photo === undefined) {
        Logger.error(TAG, `photoAvailable failed. error: ${JSON.stringify(errCode)}`);
        return;
      }
      let imageObj = photo.main;
      imageObj.getComponent(image.ComponentType.JPEG, async (err: BusinessError, component: image.Component) => {
        if (err || component === undefined) {
          return;
        }
        let buffer: ArrayBuffer = component.byteBuffer;
        try {
          this.result = await this.recognizeImage(buffer);
        } finally {
          // Release the captured image once its buffer is no longer needed.
          await imageObj.release();
        }
      })
    })
    // Session Init
    this.captureSession = this.getCaptureSession(this.cameraMgr) as camera.PhotoSession;
    this.beginConfig(this.captureSession);
    await this.startSession(this.captureSession, this.cameraInput, this.previewOutput, this.photoOutput);
  }

  // Clears the previous result and triggers a capture; the result is filled in by the 'photoAvailable' callback.
  async takePicture(): Promise<void> {
    this.result = '';
    await this.photoOutput!.capture();
  }

  // Decodes the JPEG buffer into a PixelMap and returns the recognized text or a fallback message.
  async recognizeImage(buffer: ArrayBuffer): Promise<string> {
    let context = getContext(this) as common.UIAbilityContext;
    let recognitionString: string = '';
    if (canIUse("SystemCapability.AI.OCR.TextRecognition")) {
      let imageResource = image.createImageSource(buffer);
      let pixelMapInstance = await imageResource.createPixelMap();
      let visionInfo: textRecognition.VisionInfo = {
        pixelMap: pixelMapInstance
      };
      let textConfiguration: textRecognition.TextRecognitionConfiguration = {
        isDirectionDetectionSupported: true
      };
      try {
        let recognitionResult = await textRecognition.recognizeText(visionInfo, textConfiguration);
        if (recognitionResult.value === '') {
          recognitionString = context.resourceManager.getStringSync($r('app.string.unrecognizable').id);
        } else {
          recognitionString = recognitionResult.value;
        }
      } catch (error) {
        Logger.error(TAG, `Failed to recognizeText. error: ${JSON.stringify(error as BusinessError)}`);
      } finally {
        // Release the image resources whether or not recognition succeeded.
        pixelMapInstance.release();
        imageResource.release();
      }
    } else {
      recognitionString = context.resourceManager.getStringSync($r('app.string.Device_not_support').id);
      Logger.error(TAG, `device not support`);
    }
    return recognitionString;
  }

  // Closes the camera input and releases the outputs and the session.
  async releaseCamera(): Promise<void> {
    if (this.cameraInput) {
      await this.cameraInput.close();
      Logger.info(TAG, 'cameraInput release');
    }
    if (this.previewOutput) {
      await this.previewOutput.release();
      Logger.info(TAG, 'previewOutput release');
    }
    if (this.receiver) {
      await this.receiver.release();
      Logger.info(TAG, 'receiver release');
    }
    if (this.photoOutput) {
      await this.photoOutput.release();
      Logger.info(TAG, 'photoOutput release');
    }
    if (this.captureSession) {
      await this.captureSession.release();
      Logger.info(TAG, 'captureSession release');
      this.captureSession = undefined;
    }
    this.imgReceive = undefined;
  }

  // Returns the supported camera devices, or an empty array if none are found.
  getCameraDevices(cameraManager: camera.CameraManager): Array<camera.CameraDevice> {
    let cameraArray: Array<camera.CameraDevice> = cameraManager.getSupportedCameras();
    if (cameraArray != undefined && cameraArray.length > 0) {
      return cameraArray;
    } else {
      Logger.error(TAG, `getSupportedCameras failed`);
      return [];
    }
  }

  getCameraInput(cameraDevice: camera.CameraDevice, cameraManager: camera.CameraManager): camera.CameraInput | undefined {
    let cameraInput: camera.CameraInput | undefined = undefined;
    cameraInput = cameraManager.createCameraInput(cameraDevice);
    return cameraInput;
  }

  getPreviewOutput(cameraManager: camera.CameraManager, cameraOutputCapability: camera.CameraOutputCapability,
    surfaceId: string): camera.PreviewOutput | undefined {
    let previewProfilesArray: Array<camera.Profile> = cameraOutputCapability.previewProfiles;
    let previewOutput: camera.PreviewOutput | undefined = undefined;
    previewOutput = cameraManager.createPreviewOutput(previewProfilesArray[CommonConstants.OUTPUT_DEVICE_INDEX], surfaceId);
    return previewOutput;
  }

  async getImageReceiverSurfaceId(receiver: image.ImageReceiver): Promise<string | undefined> {
    let photoSurfaceId: string | undefined = undefined;
    if (receiver !== undefined) {
      photoSurfaceId = await receiver.getReceivingSurfaceId();
      Logger.info(TAG, `getReceivingSurfaceId success`);
    }
    return photoSurfaceId;
  }

  getPhotoOutput(cameraManager: camera.CameraManager, cameraOutputCapability: camera.CameraOutputCapability): camera.PhotoOutput | undefined {
    let photoProfilesArray: Array<camera.Profile> = cameraOutputCapability.photoProfiles;
    Logger.info(TAG, JSON.stringify(photoProfilesArray));
    if (!photoProfilesArray) {
      // Without a photo profile there is nothing to create an output from.
      Logger.error(TAG, `createOutput photoProfilesArray == null || undefined`);
      return undefined;
    }
    let photoOutput: camera.PhotoOutput | undefined = undefined;
    try {
      photoOutput = cameraManager.createPhotoOutput(photoProfilesArray[CommonConstants.OUTPUT_DEVICE_INDEX]);
    } catch (error) {
      Logger.error(TAG, `Failed to createPhotoOutput. error: ${JSON.stringify(error as BusinessError)}`);
    }
    return photoOutput;
  }

  getCaptureSession(cameraManager: camera.CameraManager): camera.PhotoSession | undefined {
    let captureSession: camera.PhotoSession | undefined = undefined;
    try {
      captureSession = cameraManager.createSession(camera.SceneMode.NORMAL_PHOTO) as camera.PhotoSession;
    } catch (error) {
      Logger.error(TAG, `Failed to create the CaptureSession instance. error: ${JSON.stringify(error as BusinessError)}`);
    }
    return captureSession;
  }

  beginConfig(captureSession: camera.PhotoSession): void {
    try {
      captureSession.beginConfig();
      Logger.info(TAG, 'captureSession beginConfig')
    } catch (error) {
      Logger.error(TAG, `Failed to beginConfig. error: ${JSON.stringify(error as BusinessError)}`);
    }
  }

  // Adds the input and outputs to the session, commits the configuration, and starts the session.
  async startSession(captureSession: camera.PhotoSession, cameraInput: camera.CameraInput,
    previewOutput: camera.PreviewOutput, photoOutput: camera.PhotoOutput): Promise<void> {
    captureSession.addInput(cameraInput);
    captureSession.addOutput(previewOutput);
    captureSession.addOutput(photoOutput);
    await captureSession.commitConfig().then(() => {
      Logger.info(TAG, 'Promise returned to indicate the commitConfig success.')
    }).catch((err: BusinessError) => {
      Logger.error(TAG, `Failed to commitConfig. error: ${JSON.stringify(err)}`)
    });
    await captureSession.start().then(() => {
      Logger.info(TAG, 'Promise returned to indicate the session start success.')
    }).catch((err: BusinessError) => {
      Logger.error(TAG, `Failed to start the session. error: ${JSON.stringify(err)}`)
    })
  }
}
```

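- Camera module: Camera encapsulates camera initialization and camera release.

- On the Index page, a click event triggers the camera capture. Once the photo output stream is obtained, the text is recognized through the @hms.ai.ocr.textRecognition text recognition API.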
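
The capture-then-recognize flow described in the list above can be illustrated without any device APIs. The following is a minimal TypeScript sketch, not the sample's actual code: `FakePhotoOutput`, `RecognitionFlow`, `Recognizer`, and `fakeOcr` are hypothetical stand-ins for the photo output and the OCR engine, and the recognizer here is synchronous for brevity, whereas the real `textRecognition.recognizeText` call is asynchronous. It only shows how a click-triggered capture feeds a buffer to a listener, and how an empty recognition result is replaced by a fallback message.

```typescript
// Hypothetical stand-ins: none of these types come from the HarmonyOS kits.
type PhotoListener = (buffer: Uint8Array) => void;
type Recognizer = (buffer: Uint8Array) => string;

// Plays the role of the camera photo output: it hands a photo buffer
// to the registered listener whenever capture() is called.
class FakePhotoOutput {
  private listener: PhotoListener | undefined = undefined;
  private nextPhoto: Uint8Array;

  constructor(nextPhoto: Uint8Array) {
    this.nextPhoto = nextPhoto;
  }

  onPhotoAvailable(listener: PhotoListener): void {
    this.listener = listener;
  }

  capture(): void {
    if (this.listener !== undefined) {
      this.listener(this.nextPhoto);
    }
  }
}

// Wires the photo callback to the recognizer and stores the latest result.
class RecognitionFlow {
  result: string = '';
  private output: FakePhotoOutput;

  constructor(output: FakePhotoOutput, recognize: Recognizer, fallback: string) {
    this.output = output;
    this.output.onPhotoAvailable((buffer: Uint8Array): void => {
      const text = recognize(buffer);
      // An empty recognition result is replaced by the fallback message.
      this.result = text === '' ? fallback : text;
    });
  }

  // Called from the click handler: clear the old result, then capture.
  takePicture(): void {
    this.result = '';
    this.output.capture();
  }
}

// Assumed fake OCR: any non-empty buffer "contains" the text HELLO.
const fakeOcr: Recognizer = (buffer: Uint8Array): string => (buffer.length > 0 ? 'HELLO' : '');
const flow = new RecognitionFlow(new FakePhotoOutput(new Uint8Array([1, 2, 3])), fakeOcr, 'No text recognized');
flow.takePicture();
console.log(flow.result); // prints "HELLO"
```

Registering the listener once in the constructor and clearing `result` before each capture mirrors the structure of `initCamera` and `takePicture` in the listing, so the UI never shows text from a previous photo.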