Parts of this post draw on other blogs found online.

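First, I used this dataset to solve a prediction problem with linear regression: predicting the water flowing out from the water level.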
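For reference, the model and regularized cost that cost_func and gradient_descent compute can be written as follows. The notation is the usual one for this exercise and is assumed here: m training examples, x_0 = 1 is the bias column added with np.insert, n non-bias features, and θ_0 is not regularized. In vector form the cost term is the code's sum of (X @ theta - y)², and the gradient is (X @ theta - y) @ X / m plus the penalty term.

$$
\begin{aligned}
h_\theta(x) &= \theta^\top x = \theta_0 + \theta_1 x_1 \\
J(\theta) &= \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2 \\
\frac{\partial J}{\partial \theta_0} &= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right) \\
\frac{\partial J}{\partial \theta_j} &= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \frac{\lambda}{m}\theta_j, \qquad j \ge 1
\end{aligned}
$$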

import numpy as np
import scipy.io as sio
import matplotlib.pyplot as plt
from scipy.optimize import minimize

# Load the data
path = "./ex5data1.mat"
data = sio.loadmat(path)
# print(type(data))
# print(data.keys())

X = data.get("X")
y = data.get("y")
Xval = data.get("Xval")
yval = data.get("yval")
Xtest = data.get("Xtest")
ytest = data.get("ytest")

# Add the bias column x0 = 1 to every set
X = np.insert(X, 0, values=1, axis=1)
Xval = np.insert(Xval, 0, values=1, axis=1)
Xtest = np.insert(Xtest, 0, values=1, axis=1)
# theta = np.zeros((2,1))
# print(X.shape)
# print(y.shape)

# Visualize the data
fig, ax = plt.subplots()
ax.scatter(X[:, 1:], y)
ax.set_xlabel('water level')
ax.set_ylabel('water flowing out')
plt.show()

# Cost function: regularized squared error (theta[0] is not regularized)
def cost_func(theta, X, y, lamda):
    m = len(X)
    cost = np.sum(np.power((X @ theta - y.flatten()), 2)) / (2 * m)
    reg = lamda * np.sum(np.power(theta[1:], 2)) / (2 * m)
    return cost + reg

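# Gradient of the cost function (it returns the gradient; minimize performs the descent)
def gradient_descent(theta, X, y, lamda):
    m = len(X)
    grad = (X @ theta - y.flatten()) @ X / m
    reg = lamda * theta / m
    reg[0] = 0  # do not regularize the bias theta[0], matching cost_func
    return grad + reg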

lamda = 0

# Optimization: minimize the cost with the truncated Newton (TNC) method and the analytical gradient
def train_final(X, y, lamda):
    theta = np.ones(X.shape[1])
    fmin = minimize(fun=cost_func,
                    x0=theta,
                    args=(X, y, lamda),
                    method='TNC',
                    jac=gradient_descent)
    return fmin.x

theta_final = train_final(X, y, lamda)
print(theta_final)

# Plot the fitted line over the data
fig, ax = plt.subplots()
ax.scatter(X[:, 1:], y)
ax.set_xlabel('water level')
ax.set_ylabel('water flowing out')
ax.plot(X[:, 1:], X @ theta_final)
plt.show()

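In this part, I load and visualize the data, write the cost function and its gradient, and then call scipy.optimize.minimize (TNC method) to find the parameters theta that minimize the cost.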
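Two quick sanity checks can confirm the cost/gradient pair before trusting minimize. This is a minimal sketch on synthetic data (a hypothetical stand-in for ex5data1.mat): scipy.optimize.check_grad compares the analytical gradient with a finite-difference estimate, and the regularized normal equation, swapped in here only as a cross-check, gives the exact minimizer to compare against the TNC result. The helper names gradient and normal_equation are illustrative, not from the original code.

```python
import numpy as np
from scipy.optimize import check_grad, minimize

def cost_func(theta, X, y, lamda):
    # Regularized squared-error cost; theta[0] (bias) is not regularized
    m = len(X)
    err = X @ theta - y.flatten()
    return err @ err / (2 * m) + lamda * np.sum(theta[1:] ** 2) / (2 * m)

def gradient(theta, X, y, lamda):
    # Analytical gradient of cost_func
    m = len(X)
    grad = (X @ theta - y.flatten()) @ X / m
    reg = lamda * theta / m
    reg[0] = 0.0  # bias term is not regularized
    return grad + reg

def normal_equation(X, y, lamda):
    # Exact minimizer: (X^T X + lamda * L) theta = X^T y, with L = I except L[0, 0] = 0
    L = np.eye(X.shape[1])
    L[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + lamda * L, X.T @ y.flatten())

# Hypothetical synthetic data with a similar range of water levels
rng = np.random.default_rng(0)
x = rng.uniform(-50, 40, size=(12, 1))
y = 0.3 * x + 10 + rng.normal(0, 2, size=(12, 1))
X = np.insert(x, 0, values=1, axis=1)
lamda = 1.0

# 1) Analytical gradient vs finite differences at a random point
theta0 = rng.normal(size=X.shape[1])
grad_err = check_grad(cost_func, gradient, theta0, X, y, lamda)

# 2) Iterative TNC solution vs closed-form solution
theta_tnc = minimize(cost_func, np.ones(X.shape[1]), args=(X, y, lamda),
                     method='TNC', jac=gradient).x
theta_ne = normal_equation(X, y, lamda)
print(grad_err, theta_tnc, theta_ne)
```

With the real X and y, the same two checks should give a small check_grad value and two nearly identical parameter vectors; a large discrepancy usually points to a bug in the gradient.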

---------------------------------------------------------------------------------------------------------------------------------

After fitting, the straight line clearly does not fit the data well, so I plotted learning curves to diagnose the problem.
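For reference, the quantities in a learning curve, following the usual definition for this exercise (assumed here): for each training-set size i, θ⁽ⁱ⁾ is trained on the first i examples with regularization λ, and both errors are then measured without the regularization term.

$$
\begin{aligned}
\theta^{(i)} &= \arg\min_\theta \; \frac{1}{2i}\sum_{k=1}^{i}\left(h_\theta(x^{(k)}) - y^{(k)}\right)^2 + \frac{\lambda}{2i}\sum_{j=1}^{n}\theta_j^2 \\
J_{\text{train}}(i) &= \frac{1}{2i}\sum_{k=1}^{i}\left(h_{\theta^{(i)}}(x^{(k)}) - y^{(k)}\right)^2 \\
J_{\text{cv}}(i) &= \frac{1}{2m_{\text{cv}}}\sum_{k=1}^{m_{\text{cv}}}\left(h_{\theta^{(i)}}(x_{\text{cv}}^{(k)}) - y_{\text{cv}}^{(k)}\right)^2
\end{aligned}
$$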

# Plot the learning curves
def learning_curve(X, y, Xval, yval, lamda):
    train_cost = []
    val_cost = []
    x = range(1, len(X) + 1)  # training-set sizes 1..m
    for i in x:
        # Train on the first i examples with regularization lamda
        theta_i = train_final(X[:i, :], y[:i, :], lamda)
        # Measure both errors without the regularization term
        train_cost_i = cost_func(theta_i, X[:i, :], y[:i, :], 0)
        val_cost_i = cost_func(theta_i, Xval, yval, 0)
        train_cost.append(train_cost_i)
        val_cost.append(val_cost_i)
    plt.plot(x, train_cost, label="train_cost")
    plt.plot(x, val_cost, label="val_cost")
    plt.xlabel('number of training examples')
    plt.ylabel('costs')
    plt.legend()
    plt.show()

learning_curve(X, y, Xval, yval, lamda)

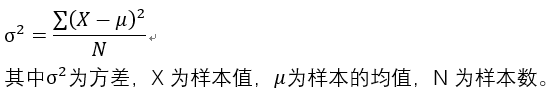

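Both the training error and the validation error are fairly large, and both level off as the number of training examples grows. This suggests underfitting (high bias).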

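So I increased the number of features by adding higher powers of the water level (polynomial features). Because these powers have very different scales, the features are standardized before training. The result is a much better fit.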
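A minimal sketch of the two steps on a 1-D input, assuming hypothetical helper names poly_features, fit_scaler and apply_scaler, with np.vander standing in for the feature_mapping loop. The key design choice is that the mean and standard deviation come from the training set only and are reused unchanged for validation, test, and plotting data, so every set goes through the same transform.

```python
import numpy as np

def poly_features(x, power):
    # Columns [1, x, x^2, ..., x^power] for a 1-D array x
    return np.vander(x, power + 1, increasing=True)

def fit_scaler(X):
    # Mean/std of every non-bias column, computed on the training set only
    return X[:, 1:].mean(axis=0), X[:, 1:].std(axis=0)

def apply_scaler(X, mean, std):
    # Standardize non-bias columns with the given (training) statistics
    Xs = X.astype(float)  # astype returns a copy, so X is not modified
    Xs[:, 1:] = (Xs[:, 1:] - mean) / std
    return Xs

x_train = np.linspace(-50, 40, 12)   # hypothetical water levels
x_new = np.array([-60.0, 0.0, 60.0])  # e.g. points used for plotting
power = 6

X_train = poly_features(x_train, power)
# Raw column magnitudes range from about 50 (x) to about 1.6e10 (x^6)
print(np.abs(X_train[:, 1:]).max(axis=0))

mean, std = fit_scaler(X_train)
X_train_norm = apply_scaler(X_train, mean, std)
X_new_norm = apply_scaler(poly_features(x_new, power), mean, std)
```

Standardizing x_new with its own mean and std instead would map the same water level to different feature values than during training, which distorts the plotted curve and the validation/test costs.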

# Feature mapping: append x^2, ..., x^power as new columns (column 1 holds x)
def feature_mapping(X, power):
    for i in range(2, power + 1):
        X = np.insert(X, X.shape[1], np.power(X[:, 1], i), axis=1)
    return X

# Standardization: scale the non-bias columns with the given mean/std
# (computed on the training set, so every set shares the same transform)
def normalization(X, mean, stds):
    X = X.copy()
    X[:, 1:] = (X[:, 1:] - mean[1:]) / stds[1:]
    return X

power = 6
X_train_poly = feature_mapping(X, power)
X_val_poly = feature_mapping(Xval, power)
X_test_poly = feature_mapping(Xtest, power)

# Statistics from the training set only
train_mean = np.mean(X_train_poly, axis=0)
train_std = np.std(X_train_poly, axis=0)

X_train_norm = normalization(X_train_poly, train_mean, train_std)
X_val_norm = normalization(X_val_poly, train_mean, train_std)
X_test_norm = normalization(X_test_poly, train_mean, train_std)

theta_final_map = train_final(X_train_norm, y, lamda=0)
print(theta_final_map)

# Plot the polynomial fit
fig, ax = plt.subplots()
ax.scatter(X[:, 1:], y)
ax.set_xlabel('water level')  # axis labels
ax.set_ylabel('water flowing out')
x = np.linspace(-60, 60, 100)
xReshape = x.reshape(100, 1)
xReshape = np.insert(xReshape, 0, values=1, axis=1)
xReshape = feature_mapping(xReshape, power)
xReshape = normalization(xReshape, train_mean, train_std)  # same transform as the training data
ax.plot(x, xReshape @ theta_final_map, c="r")
plt.show()

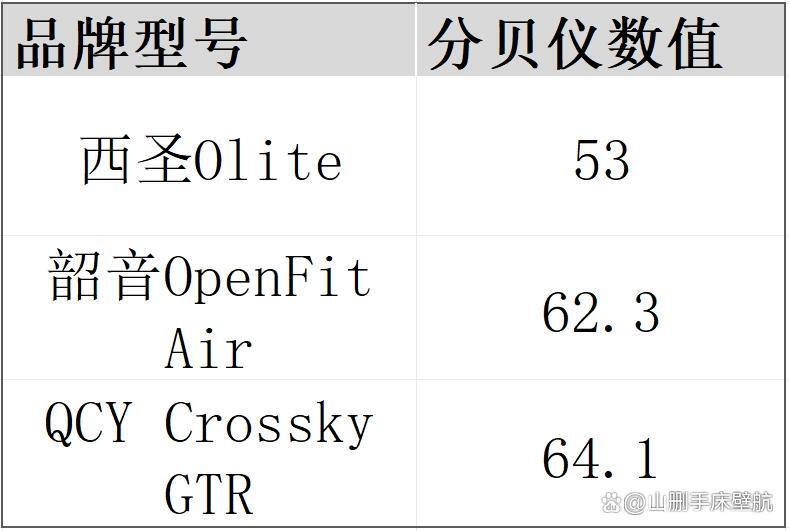

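Finally, we need to choose a suitable regularization parameter lamda, and we use the validation set for this: the training set determines the parameters theta, and the validation set determines the hyperparameter lamda.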
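The selection procedure can be sketched as a small function. For brevity this sketch swaps minimize for the closed-form regularized normal equation, and the data is hypothetical (a cubic trend plus noise with degree-6 features); the names fit_ridge, mse_cost and select_lamda are illustrative. As in the loop that follows, theta is fitted on the training set for each lamda, and the validation cost is computed without the penalty term.

```python
import numpy as np

def fit_ridge(X, y, lamda):
    # Regularized normal equation; the bias column (index 0) is not penalized
    L = np.eye(X.shape[1])
    L[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + lamda * L, X.T @ y)

def mse_cost(theta, X, y):
    # Unregularized cost used to compare models (equivalent to lamda = 0)
    err = X @ theta - y
    return err @ err / (2 * len(X))

def select_lamda(X_train, y_train, X_val, y_val, lamdas):
    # Fit theta on the training set for each lamda, score it on the validation set
    val_costs = [mse_cost(fit_ridge(X_train, y_train, lam), X_val, y_val)
                 for lam in lamdas]
    return lamdas[int(np.argmin(val_costs))], val_costs

rng = np.random.default_rng(1)

def make_data(n):
    # Hypothetical data: cubic trend plus noise, features [1, x, ..., x^6]
    x = rng.uniform(-1, 1, n)
    y = x ** 3 - x + rng.normal(0, 0.1, n)
    return np.vander(x, 7, increasing=True), y

X_tr, y_tr = make_data(12)
X_va, y_va = make_data(20)
lamdas = [0, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3, 10]
best, costs = select_lamda(X_tr, y_tr, X_va, y_va, lamdas)
print(best)
```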

# Choose lamda on the validation set
lamdas = [0, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3, 10]
train_cost = []
val_cost = []
for lamda in lamdas:
    # Fit theta on the training set with this lamda
    theta_lamda = train_final(X_train_norm, y, lamda)
    # Compare errors without the regularization term
    train_cost_lamda = cost_func(theta_lamda, X_train_norm, y, lamda=0)
    val_cost_lamda = cost_func(theta_lamda, X_val_norm, yval, lamda=0)
    train_cost.append(train_cost_lamda)
    val_cost.append(val_cost_lamda)
plt.plot(lamdas, train_cost, label="train_cost")
plt.plot(lamdas, val_cost, label="val_cost")
plt.xlabel('lamda')
plt.ylabel('costs')
plt.legend()
plt.show()

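Then we take the lamda with the lowest validation cost as the best lamda, retrain with it, and report the cost on the test set.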

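# Best lamda: the one with the lowest validation cost
bestLamda = lamdas[np.argmin(val_cost)]
print(bestLamda)

# Retrain on the training set with the best lamda and evaluate on the test set
res = train_final(X_train_norm, y, bestLamda)
print(cost_func(res, X_test_norm, ytest, lamda=0))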