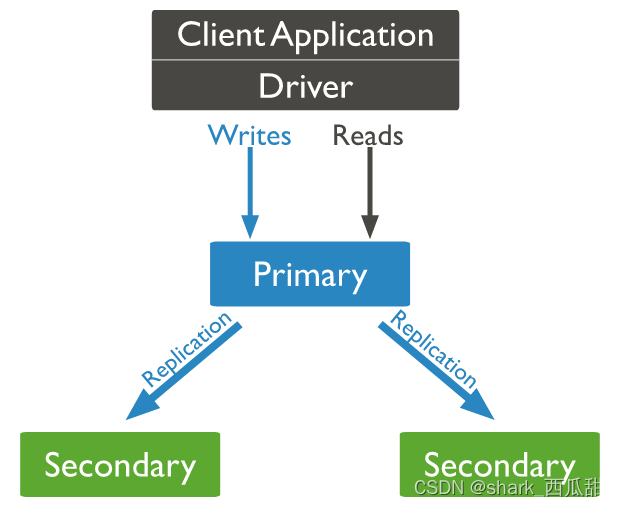

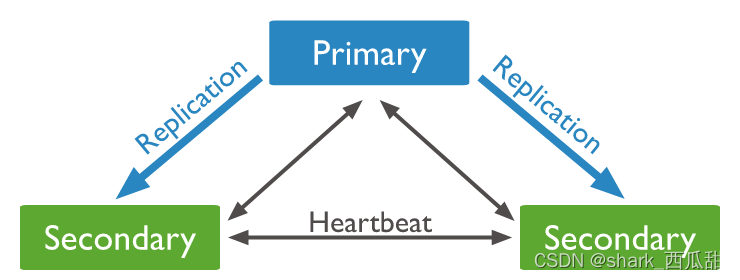

I. Replica Set Overview

Every member of the replica set exchanges heartbeats with the other members.

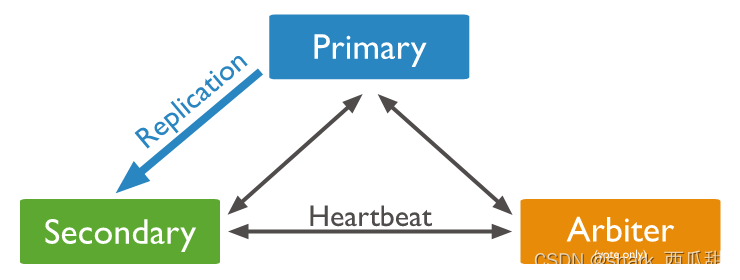

If resources are limited, you can deploy one primary, one secondary, and one arbiter.
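The one primary, one secondary, one arbiter layout can be written down as a replica set configuration document. The sketch below is only an illustration: the set name `rs0` and the hostnames `node1` to `node3` are assumptions, and putting the arbiter on `node3` is a hypothetical choice.

```javascript
// Hypothetical primary-secondary-arbiter configuration.
// The set name and hostnames are assumptions for illustration.
const psaConfig = {
  _id: 'rs0',
  members: [
    { _id: 0, host: 'node1:27017' },                    // data-bearing, can become primary
    { _id: 1, host: 'node2:27017' },                    // data-bearing, can become primary
    { _id: 2, host: 'node3:27017', arbiterOnly: true }, // votes in elections, stores no data
  ],
};

// Count voting members and data-bearing members to confirm the layout.
const voters = psaConfig.members.length;
const dataBearing = psaConfig.members.filter((m) => !m.arbiterOnly).length;
console.log(`voters=${voters}, data-bearing=${dataBearing}`);

// In mongosh, the same object could be passed to rs.initiate(psaConfig).
```

The arbiter keeps the number of voters odd so that an election can still reach a majority, but only two members hold a copy of the data.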

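A replica set fails over automatically: when the primary becomes unavailable, one of the secondaries is elected as the new primary.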

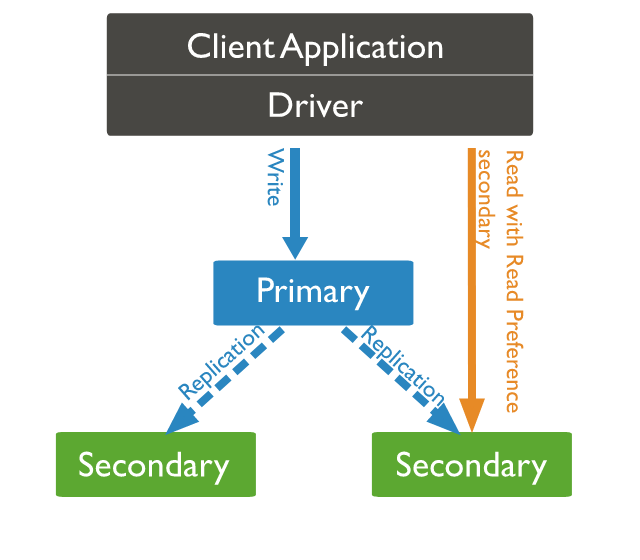

Read Preference

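A client connection can set a read preference, in which case the driver automatically routes read requests to the secondary members of the replica set. By default, both reads and writes are sent to the primary.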
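One common way to set a read preference is through the `readPreference` option of the connection string. The helper below is a minimal sketch; the function name `buildReplicaSetUri`, the hostnames, and the choice of `secondaryPreferred` are assumptions for illustration.

```javascript
// Build a replica set connection string that carries a read preference.
// Hostnames, set name, and the chosen mode are illustrative assumptions.
function buildReplicaSetUri(hosts, replicaSet, readPreference) {
  return `mongodb://${hosts.join(',')}/?replicaSet=${replicaSet}&readPreference=${readPreference}`;
}

const uri = buildReplicaSetUri(
  ['node1:27017', 'node2:27017', 'node3:27017'],
  'rs0',
  'secondaryPreferred' // read from a secondary when one is available, otherwise the primary
);
console.log(uri);
```

Writes still go to the primary regardless of this option. Reads from a secondary may lag slightly behind the primary, so this mode suits queries that tolerate slightly stale data.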

II. Deployment

The following uses a three-member replica set as the example.

The deployment is assumed to use docker-compose.

Official reference: https://www.mongodb.com/docs/manual/tutorial/deploy-replica-set-with-keyfile-access-control/

The compose file is as follows:

    version: '3.9'
    services:
      # mongodb server
      mongo:
        labels:
          co.elastic.logs/module: mongodb
        image: mongo:6.0.4
        command: --replSet rs0 --bind_ip localhost,<hostname> --wiredTigerCacheSizeGB 2 --keyFile /etc/keyfile.key
        network_mode: host
        restart: always
        environment:
          # admin username
          MONGO_INITDB_ROOT_USERNAME: mongoadmin
          # admin password
          MONGO_INITDB_ROOT_PASSWORD: password
        volumes:
          - "/etc/localtime:/etc/localtime"
          - "./data:/data/db"
          - "./keyfile.key:/etc/keyfile.key"
        # expose takes a container port only; with network_mode: host
        # the port is reachable on the host directly
        expose:
          - "27017"

Replace <hostname> with the hostname of each node.

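Generate a keyfile for authentication between the replica set members. It is required when running a 6.0 replica set with authentication enabled.

    openssl rand -base64 756 >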
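The value 756 is not arbitrary: 756 random bytes encode to 1008 base64 characters, which stays under the 1024-character limit that the MongoDB documentation gives for keyfile content. The sketch below works through that arithmetic and checks a candidate key; the function names `base64Length` and `isValidKeyfileContent` are hypothetical helpers, not part of any MongoDB tool.

```javascript
// Number of base64 characters produced by encoding numBytes of raw data.
function base64Length(numBytes) {
  return 4 * Math.ceil(numBytes / 3);
}

// Check keyfile text against the documented constraints:
// 6 to 1024 characters from the base64 set (whitespace is ignored).
function isValidKeyfileContent(text) {
  const key = text.replace(/\s+/g, '');
  return key.length >= 6 && key.length <= 1024 && /^[A-Za-z0-9+\/=]+$/.test(key);
}

console.log(base64Length(756));                       // 1008
console.log(isValidKeyfileContent('A'.repeat(1008))); // true
console.log(isValidKeyfileContent('A'.repeat(1025))); // false
```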

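Each member of the replica set should be deployed on a different server. Here the server hostnames are assumed to be node1, node2, and node3.

Directory structure of the mongodb docker-compose project:

    mongodb:
    |- data
    |- keyfile.key
    |_ compose.yml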

Copy the mongodb directory to every node, then set the permissions and ownership of keyfile.key:

    chmod 400 keyfile.key
    chown 999:999 keyfile.key data

Start mongo on every node, wait about 35 seconds, and then run the following command on any one node to initialize the replica set:

    docker-compose exec mongo mongosh -u mongoadmin -p password --eval \
      "rs.initiate({_id : 'rs0', members: [{ _id : 0, host: 'node1:27017' },{ _id : 1, host : 'node2:27017' },{ _id : 2, host : 'node3:27017' }]})"

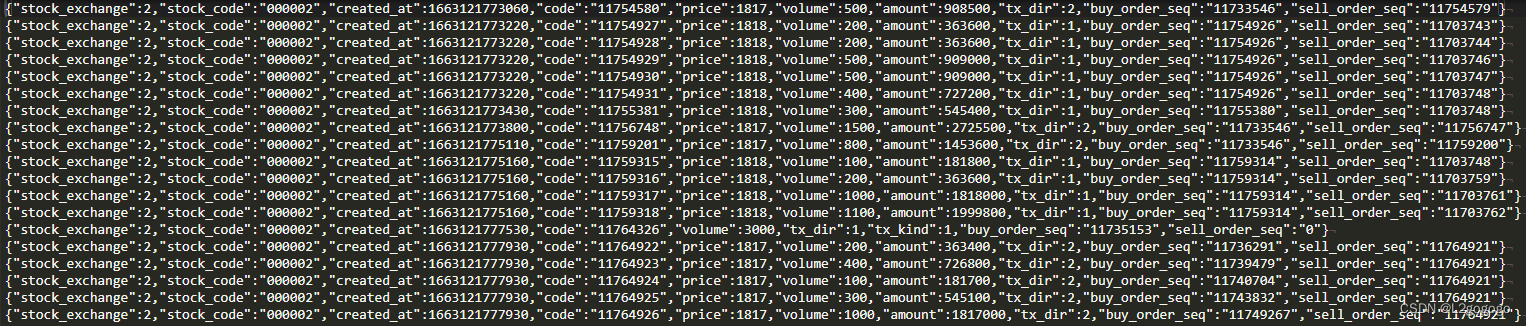
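The configuration document passed to `rs.initiate()` follows a regular pattern, so it can be generated from a list of hosts instead of being typed by hand. This is a minimal sketch; `buildReplSetConfig` is a hypothetical helper name.

```javascript
// Build a replica set configuration document from a set name and host list.
// Member _id values are assigned in list order, starting at 0.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName,
    members: hosts.map((host, index) => ({ _id: index, host })),
  };
}

const rsConfig = buildReplSetConfig('rs0', ['node1:27017', 'node2:27017', 'node3:27017']);
console.log(JSON.stringify(rsConfig, null, 2));

// In mongosh, the result could be passed to rs.initiate(rsConfig).
```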

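Check the replica set status

Run the following command on any node:

    docker-compose exec mongo mongosh -u mongoadmin -p password --eval "rs.status()"

Sample output:

Focus on the value of the stateStr field, which should be PRIMARY or SECONDARY.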

    ...
      members: [
        {
          _id: 0,
          name: 'node1:27017',
          health: 1,
          state: 1,
          stateStr: 'PRIMARY',
          uptime: 1874,
          optime: { ts: Timestamp({ t: 1675232833, i: 1 }), t: Long("1") },
          optimeDate: ISODate("2023-02-01T06:27:13.000Z"),
          lastAppliedWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          lastDurableWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          syncSourceHost: '',
          syncSourceId: -1,
          infoMessage: '',
          electionTime: Timestamp({ t: 1675231003, i: 1 }),
          electionDate: ISODate("2023-02-01T05:56:43.000Z"),
          configVersion: 1,
          configTerm: 1,
          self: true,
          lastHeartbeatMessage: ''
        },
        {
          _id: 1,
          name: 'node2:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 1847,
          optime: { ts: Timestamp({ t: 1675232833, i: 1 }), t: Long("1") },
          optimeDurable: { ts: Timestamp({ t: 1675232833, i: 1 }), t: Long("1") },
          optimeDate: ISODate("2023-02-01T06:27:13.000Z"),
          optimeDurableDate: ISODate("2023-02-01T06:27:13.000Z"),
          lastAppliedWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          lastDurableWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          lastHeartbeat: ISODate("2023-02-01T06:27:19.573Z"),
          lastHeartbeatRecv: ISODate("2023-02-01T06:27:18.571Z"),
          pingMs: Long("0"),
          lastHeartbeatMessage: '',
          syncSourceHost: 'node1:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1
        },
        {
          _id: 2,
          name: 'node3:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 1847,
          optime: { ts: Timestamp({ t: 1675232833, i: 1 }), t: Long("1") },
          optimeDurable: { ts: Timestamp({ t: 1675232833, i: 1 }), t: Long("1") },
          optimeDate: ISODate("2023-02-01T06:27:13.000Z"),
          optimeDurableDate: ISODate("2023-02-01T06:27:13.000Z"),
          lastAppliedWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          lastDurableWallTime: ISODate("2023-02-01T06:27:13.799Z"),
          lastHeartbeat: ISODate("2023-02-01T06:27:19.565Z"),
          lastHeartbeatRecv: ISODate("2023-02-01T06:27:18.593Z"),
          pingMs: Long("0"),
          lastHeartbeatMessage: '',
          syncSourceHost: 'node1:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1
        }
      ],
      ok: 1,
      '$clusterTime': {
        clusterTime: Timestamp({ t: 1675232833, i: 1 }),
        signature: {
          hash: Binary(Buffer.from("5d771f4d9b817a799ab4c221590e12a6da31c9b6", "hex"), 0),
          keyId: Long("7195062371130277893")
        }
      },
      operationTime: Timestamp({ t: 1675232833, i: 1 })
    }
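Since only a few fields of the status document matter for a quick check, the output can be reduced to one line per member. The functions below are a minimal sketch: `summarizeMembers` and `isHealthy` are hypothetical helper names, and `sampleStatus` is a trimmed stand-in for the real `rs.status()` result that carries only the fields used here.

```javascript
// One line per member: name, state, and health flag.
function summarizeMembers(status) {
  return status.members.map((m) => `${m.name} ${m.stateStr} health=${m.health}`);
}

// True when exactly one member is PRIMARY and every member reports health 1.
function isHealthy(status) {
  const primaries = status.members.filter((m) => m.stateStr === 'PRIMARY');
  return primaries.length === 1 && status.members.every((m) => m.health === 1);
}

// Trimmed sample containing only the fields the helpers read.
const sampleStatus = {
  members: [
    { name: 'node1:27017', health: 1, stateStr: 'PRIMARY' },
    { name: 'node2:27017', health: 1, stateStr: 'SECONDARY' },
    { name: 'node3:27017', health: 1, stateStr: 'SECONDARY' },
  ],
};

console.log(summarizeMembers(sampleStatus).join('\n'));
console.log(isHealthy(sampleStatus)); // true
```

In mongosh, the same helpers could be called with the live result, for example `summarizeMembers(rs.status())`.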