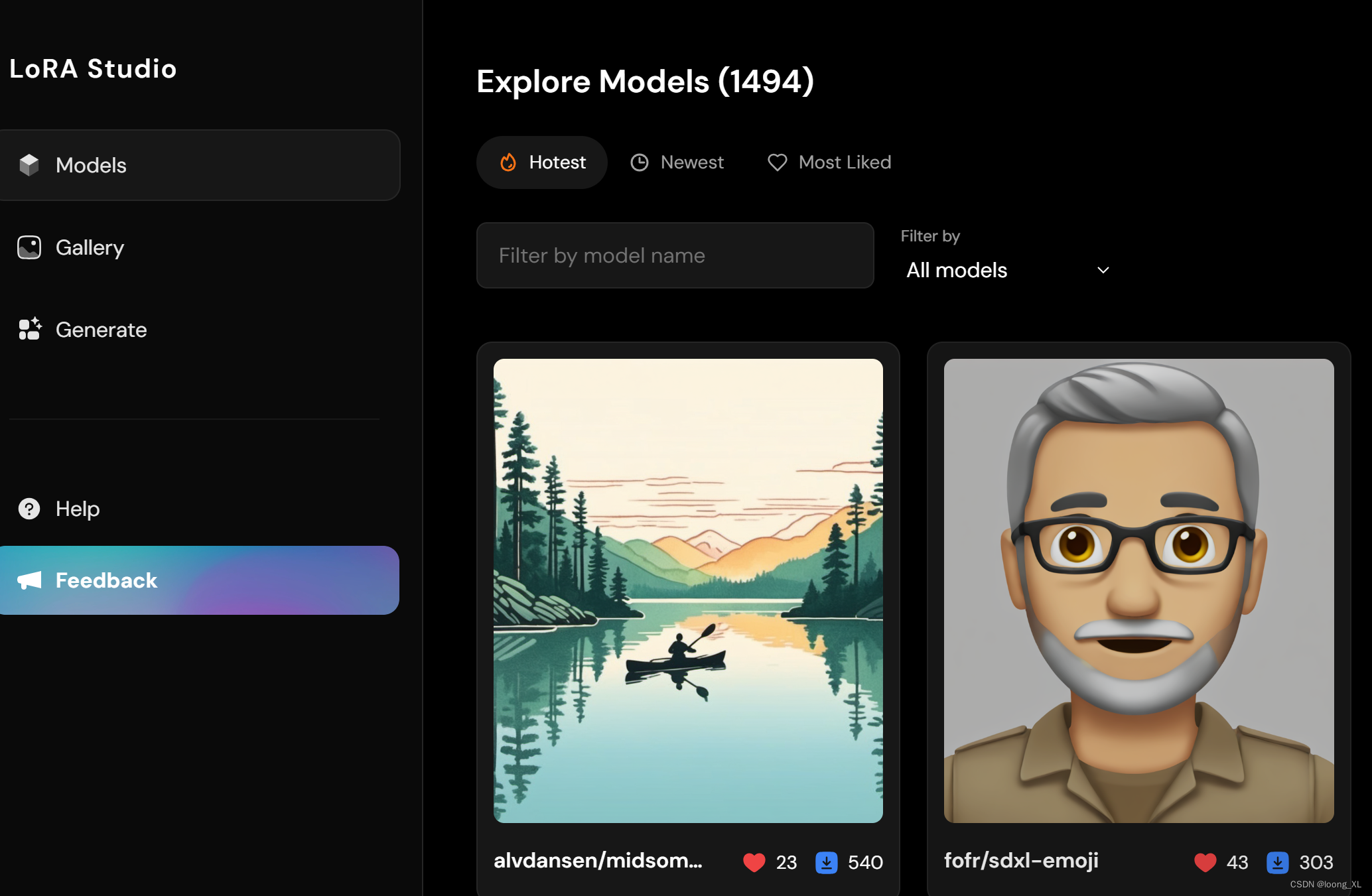

LoRA repository (more than 1,000 shared LoRA fine-tuned models):

https://lorastudio.co/models

References:

https://huggingface.co/blog/lora

https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image_lora.py

https://civitai.com/articles/3105/essential-to-advanced-guide-to-training-a-lora

https://github.com/PixArt-alpha/PixArt-alpha?tab=readme-ov-file (its fine-tuning script is essentially the official diffusers example above)

#Download

git clone https://github.com/PixArt-alpha/PixArt-alpha.git

#Run

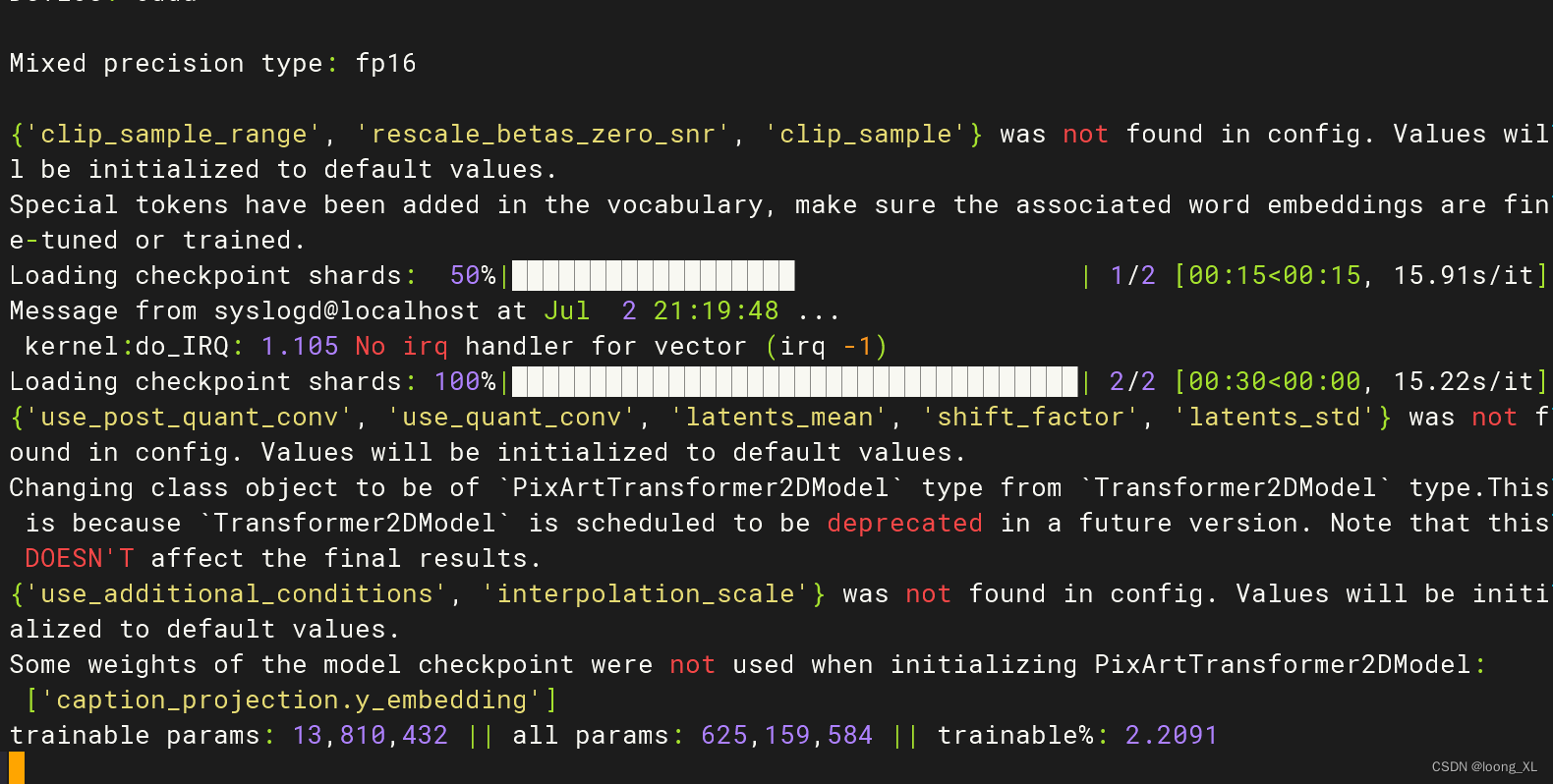

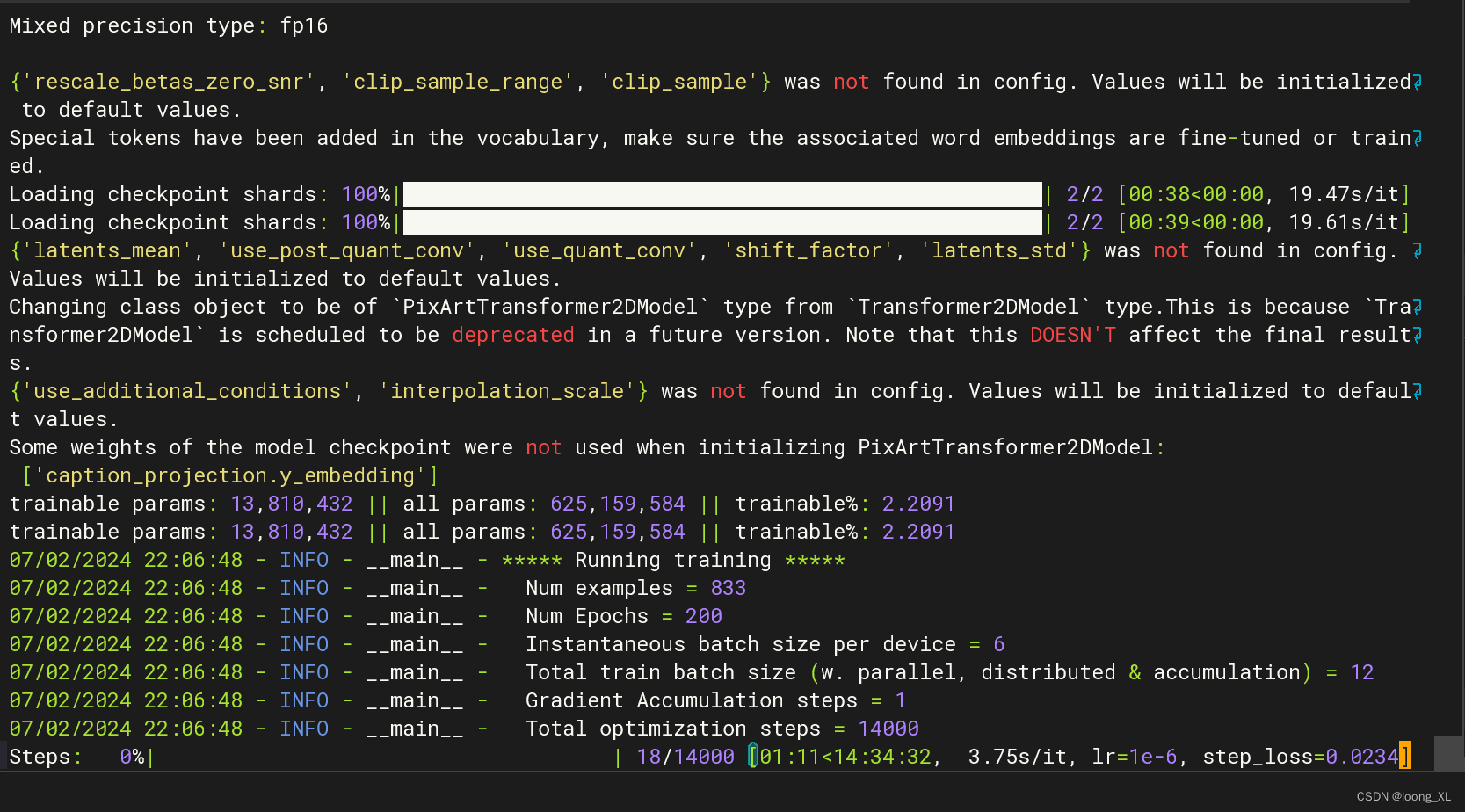

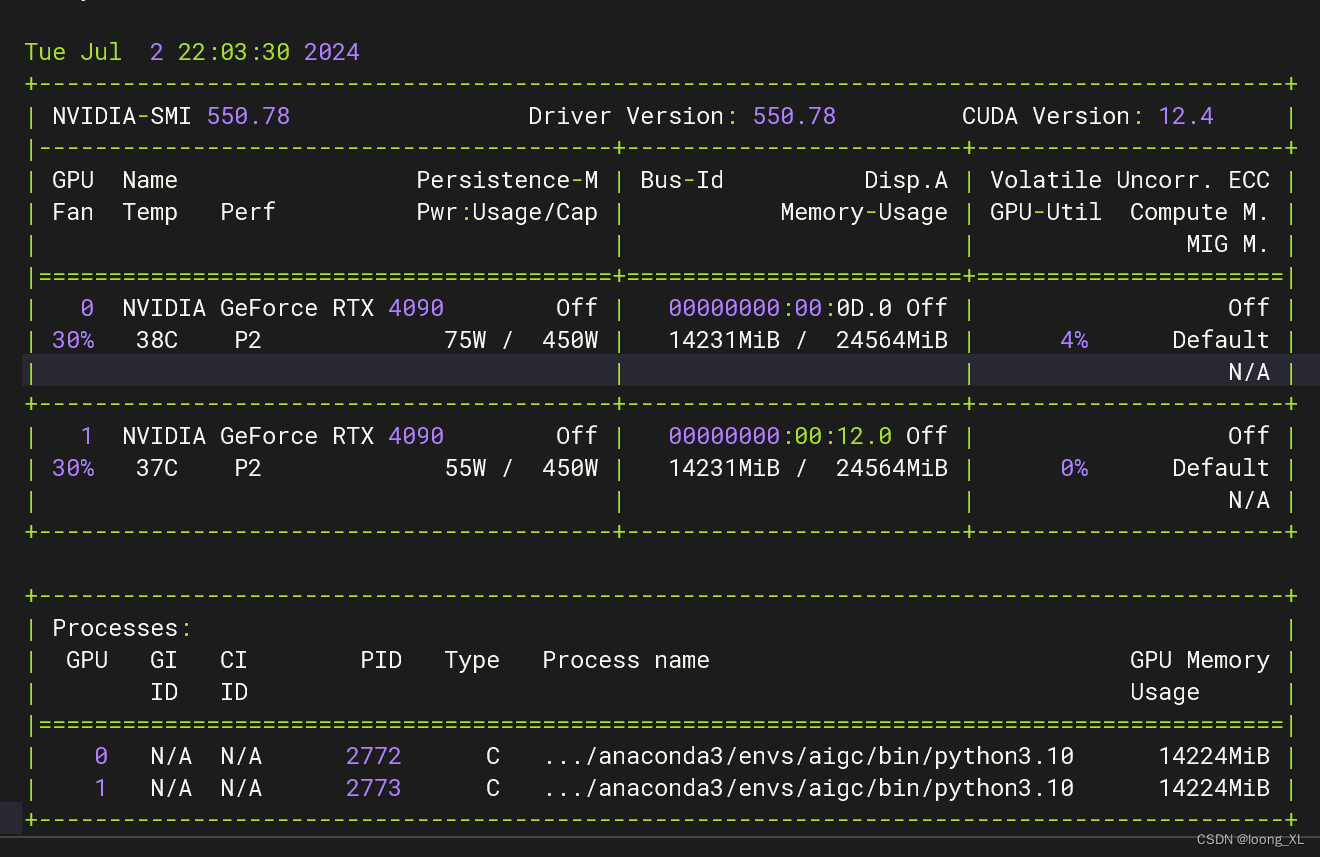

One 4090 (just set num_processes=1)

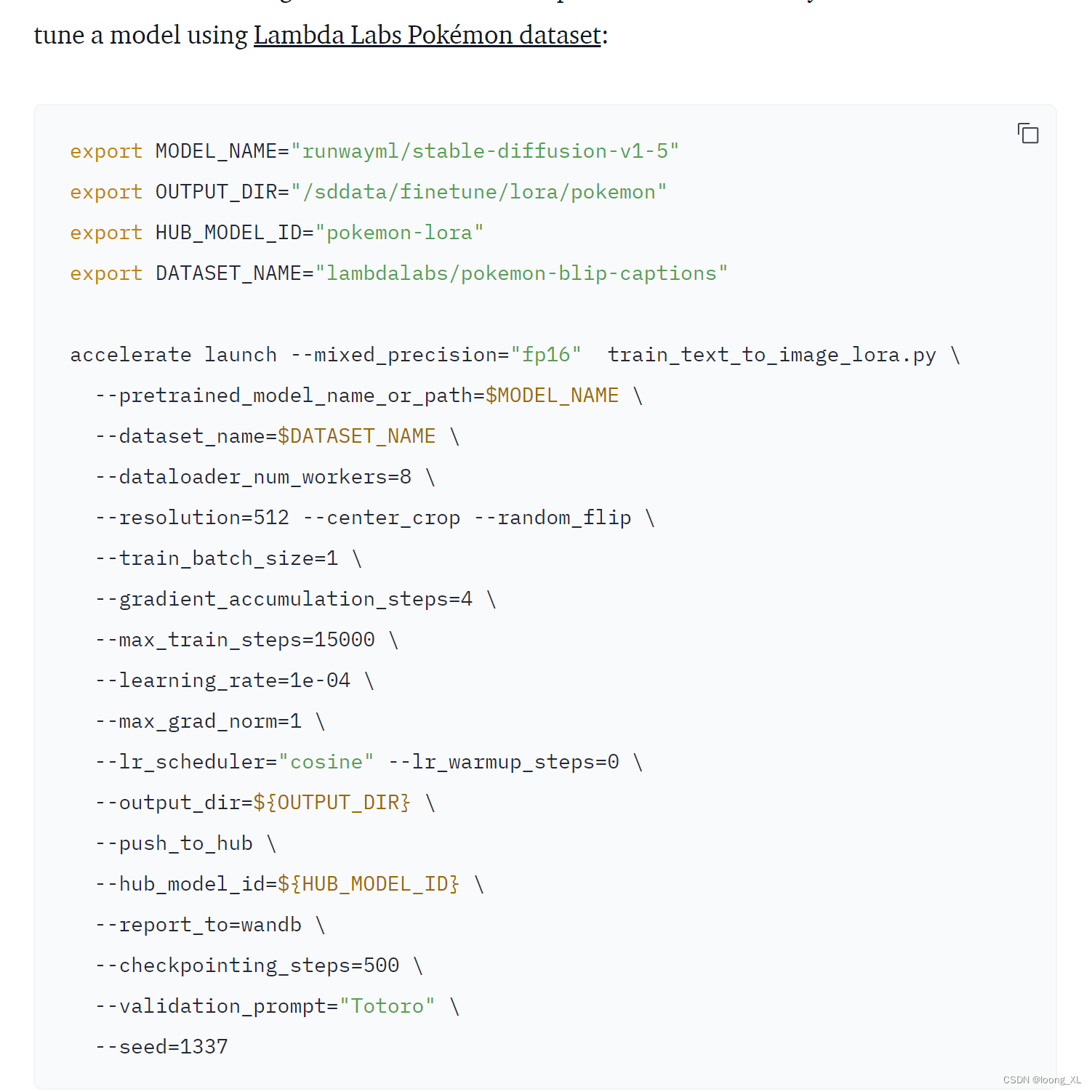

accelerate launch --num_processes=1 --main_process_port=36667 train_scripts/train_pixart_lora_hf.py --mixed_precision="fp16" --pretrained_model_name_or_path=/ai/PixArt-XL-2-1024-MS --dataset_name=reach-vb/pokemon-blip-captions --caption_column="text" --resolution=1024 --random_flip --train_batch_size=6 --num_train_epochs=200 --checkpointing_steps=100 --learning_rate=1e-06 --lr_scheduler="constant" --lr_warmup_steps=0 --seed=42 --output_dir="pixart-pokemon-model" --validation_prompt="cute dragon creature" --report_to="tensorboard" --gradient_checkpointing --checkpoints_total_limit=10 --validation_epochs=5 --rank=16

Two 4090s

accelerate launch --num_processes=2 --main_process_port=36667 train_scripts/train_pixart_lora_hf.py --mixed_precision="fp16" --pretrained_model_name_or_path=/ai/PixArt-XL-2-1024-MS --dataset_name=reach-vb/pokemon-blip-captions --caption_column="text" --resolution=1024 --random_flip --train_batch_size=6 --num_train_epochs=200 --checkpointing_steps=100 --learning_rate=1e-06 --lr_scheduler="constant" --lr_warmup_steps=0 --seed=42 --output_dir="pixart-pokemon-model" --validation_prompt="cute dragon creature" --report_to="tensorboard" --gradient_checkpointing --checkpoints_total_limit=10 --validation_epochs=5 --rank=16
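The one-GPU and two-GPU commands above differ only in `--num_processes`. The sketch below rebuilds the command line from a shared argument list. The helper name `build_launch_command` and the `SCRIPT_ARGS` list are hypothetical names introduced for illustration; every flag value is copied from the commands above.

```python
import shlex

# Training-script flags copied verbatim from the commands above.
SCRIPT_ARGS = [
    "--mixed_precision=fp16",
    "--pretrained_model_name_or_path=/ai/PixArt-XL-2-1024-MS",
    "--dataset_name=reach-vb/pokemon-blip-captions",
    "--caption_column=text",
    "--resolution=1024",
    "--random_flip",
    "--train_batch_size=6",
    "--num_train_epochs=200",
    "--checkpointing_steps=100",
    "--learning_rate=1e-06",
    "--lr_scheduler=constant",
    "--lr_warmup_steps=0",
    "--seed=42",
    "--output_dir=pixart-pokemon-model",
    "--validation_prompt=cute dragon creature",
    "--report_to=tensorboard",
    "--gradient_checkpointing",
    "--checkpoints_total_limit=10",
    "--validation_epochs=5",
    "--rank=16",
]


def build_launch_command(num_gpus: int, port: int = 36667) -> str:
    """Return the accelerate launch command for the given number of GPUs (hypothetical helper)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    parts = [
        "accelerate",
        "launch",
        f"--num_processes={num_gpus}",  # the only value that changes between the two setups
        f"--main_process_port={port}",
        "train_scripts/train_pixart_lora_hf.py",
        *SCRIPT_ARGS,
    ]
    # shlex.join quotes arguments containing spaces, such as the validation prompt.
    return shlex.join(parts)


if __name__ == "__main__":
    print(build_launch_command(1))
    print(build_launch_command(2))
```

Run the printed command from the root of the cloned PixArt-alpha repository, since the script path is relative.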

Training takes a while at 200 epochs.
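A rough estimate of the number of optimizer steps helps explain the long run. The sketch below assumes the script follows the usual diffusers convention, where `--train_batch_size` is per GPU and the effective batch is multiplied by the number of processes. The dataset size of 833 images is an assumption about the pokemon captions dataset, not a value from these notes, and `training_schedule` is a hypothetical helper. Check the step count printed by the script for the real numbers.

```python
import math


def training_schedule(num_samples, per_device_batch, num_gpus, epochs,
                      grad_accum=1, checkpointing_steps=100):
    """Estimate optimizer steps for a data-parallel run (hypothetical helper)."""
    effective_batch = per_device_batch * num_gpus * grad_accum
    # Each GPU sees roughly this many batches per epoch.
    batches_per_gpu = math.ceil(num_samples / (per_device_batch * num_gpus))
    steps_per_epoch = math.ceil(batches_per_gpu / grad_accum)
    total_steps = steps_per_epoch * epochs
    return {
        "effective_batch": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        # Number of times a checkpoint would be written over the whole run.
        "checkpoints_written": total_steps // checkpointing_steps,
    }


if __name__ == "__main__":
    ASSUMED_DATASET_SIZE = 833  # assumption, not taken from the notes above
    for gpus in (1, 2):
        print(gpus, training_schedule(ASSUMED_DATASET_SIZE, 6, gpus, 200))
```

Under these assumptions, two GPUs halve the steps per epoch but double the effective batch size (12 instead of 6), so the two runs are not identical in optimization terms. With `--checkpoints_total_limit=10`, only the most recent 10 checkpoints stay on disk regardless of how many are written.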

2. SD-Train LoRA

References:

https://github.com/Akegarasu/lora-scripts/blob/main/README-zh.md

https://www.bilibili.com/video/BV15E421G7Qb/?spm_id_from=333.788&vd_source=34d74181abefaf9d8141bbf0d485cde7