Deployment environment:

(base) root@alg-dev17:/opt# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 45 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Vendor ID: GenuineIntel

Model name: Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz

CPU family: 6

Model: 85

Thread(s) per core: 1

Core(s) per socket: 1

Socket(s): 8

Stepping: 4

BogoMIPS: 4589.21

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch cpuid_fault invpcid_single pti ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 avx2 smep bmi2 invpcid avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves arat pku ospke md_clear flush_l1d arch_capabilities

Virtualization features:

Hypervisor vendor: VMware

Virtualization type: full

Caches (sum of all):

L1d: 256 KiB (8 instances)

L1i: 256 KiB (8 instances)

L2: 8 MiB (8 instances)

L3: 198 MiB (8 instances)

NUMA:

NUMA node(s): 1

NUMA node0 CPU(s): 0-7

Vulnerabilities:

Gather data sampling: Unknown: Dependent on hypervisor status

Itlb multihit: KVM: Mitigation: VMX unsupported

L1tf: Mitigation; PTE Inversion

Mds: Mitigation; Clear CPU buffers; SMT Host state unknown

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT Host state unknown

Retbleed: Mitigation; IBRS

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; IBRS; IBPB conditional; STIBP disabled; RSB filling; PBRSB-eIBRS Not affected; BHI Syscall hardening, KVM SW loop

Srbds: Not affected

Tsx async abort: Not affected

(base) root@alg-dev17:/opt# free -h

               total        used        free      shared  buff/cache   available
Mem:            15Gi       2.4Gi        12Gi        18Mi       1.2Gi        10Gi
Swap:          3.8Gi          0B       3.8Gi

(base) root@alg-dev17:/opt# nvidia-smi

Fri Jun 28 09:17:11 2024

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 555.42.02              Driver Version: 555.42.02      CUDA Version: 12.5     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1080 Ti     On  |   00000000:0B:00.0 Off |                  N/A |
| 23%   28C    P8              8W /  250W |       3MiB /  11264MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+

Model:

(base) root@alg-dev17:/opt# ollama list

NAME        ID              SIZE      MODIFIED
qwen2:7b    e0d4e1163c58    4.4 GB    22 hours ago

Ollama service configuration:

(base) root@alg-dev17:/opt# cat /etc/systemd/system/ollama.service

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/usr/local/cuda/bin"

Environment="OLLAMA_NUM_PARALLEL=16"

Environment="OLLAMA_MAX_LOADED_MODELS=4"

Environment="OLLAMA_HOST=0.0.0.0"
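The relationship between this configuration and the client-side concurrency used in the benchmark can be sketched in a few lines. The snippet below is only an illustration: `UNIT_TEXT` is an abbreviated copy of the unit file above, `parse_environment` is a hypothetical helper, and the value 50 is the concurrency from the run reported in this post. It assumes, as the Ollama FAQ describes, that requests beyond `OLLAMA_NUM_PARALLEL` wait in a server-side queue rather than being processed at once.

```python
import re

# Abbreviated copy of the [Service] section shown above (illustration only).
UNIT_TEXT = '''
[Service]
ExecStart=/usr/local/bin/ollama serve
Environment="OLLAMA_NUM_PARALLEL=16"
Environment="OLLAMA_MAX_LOADED_MODELS=4"
Environment="OLLAMA_HOST=0.0.0.0"
'''


def parse_environment(unit_text):
    """Collect Environment="KEY=VALUE" lines of a systemd unit into a dict."""
    env = {}
    for line in unit_text.splitlines():
        match = re.match(r'\s*Environment="([^=]+)=(.*)"\s*$', line)
        if match:
            env[match.group(1)] = match.group(2)
    return env


env = parse_environment(UNIT_TEXT)
num_parallel = int(env["OLLAMA_NUM_PARALLEL"])

# Assumption: client concurrency of 50, as in the benchmark run in this post.
client_concurrency = 50

# Requests above the parallel limit are assumed to wait in the server queue.
waiting = max(0, client_concurrency - num_parallel)
print(f"parallel slots: {num_parallel}, requests waiting at full load: {waiting}")
```

If that assumption holds, the per-request times measured by the client include queueing time on the server, not only generation time.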

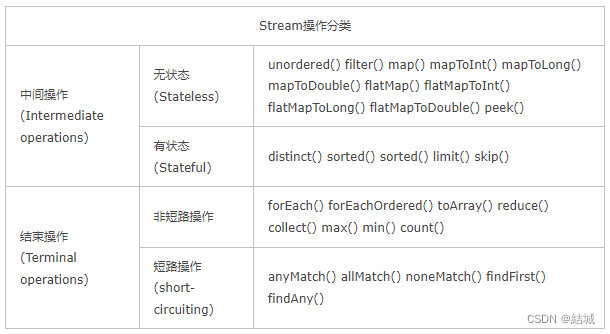

Benchmark script, adapted from a script shared by a CSDN user:

import asyncio
import random
import time

import aiohttp
from tqdm import tqdm

questions = [
    "Why is the sky blue?", "Why do we dream?", "Why is the ocean salty?", "Why do leaves change color?",
    "Why do birds sing?", "Why do we have seasons?", "Why do stars twinkle?", "Why do we yawn?",
    "Why is the sun hot?", "Why do cats purr?", "Why do dogs bark?", "Why do fish swim?",
    "Why do we have fingerprints?", "Why do we sneeze?", "Why do we have eyebrows?", "Why do we have hair?",
    "Why do we have nails?", "Why do we have teeth?", "Why do we have bones?", "Why do we have muscles?",
    "Why do we have blood?", "Why do we have a heart?", "Why do we have lungs?", "Why do we have a brain?",
    "Why do we have skin?", "Why do we have ears?", "Why do we have eyes?", "Why do we have a nose?",
    "Why do we have a mouth?", "Why do we have a tongue?", "Why do we have a stomach?", "Why do we have intestines?",
    "Why do we have a liver?", "Why do we have kidneys?", "Why do we have a bladder?", "Why do we have a pancreas?",
    "Why do we have a spleen?", "Why do we have a gallbladder?", "Why do we have a thyroid?", "Why do we have adrenal glands?",
    "Why do we have a pituitary gland?", "Why do we have a hypothalamus?", "Why do we have a thymus?", "Why do we have lymph nodes?",
    "Why do we have a spinal cord?", "Why do we have nerves?", "Why do we have a circulatory system?", "Why do we have a respiratory system?",
    "Why do we have a digestive system?", "Why do we have an immune system?"
]

async def fetch(session, url):
    """
    Send one chat-completion request and time it.

    Args:
        session (aiohttp.ClientSession): Session used to send the request.
        url (str): URL to send the request to.

    Returns:
        tuple: Number of completion tokens and the request time in seconds.
    """
    start_time = time.time()

    # Pick a random question
    question = random.choice(questions)  # <--- keep exactly one of these two assignments active
    # Fixed question
    # question = questions[0]  # <--- keep exactly one of these two assignments active

    # Request body
    json_payload = {
        "model": "qwen2:7b",
        "messages": [{"role": "user", "content": question}],
        "stream": False,
        "temperature": 0.7  # 0.7 so that the results differ slightly between runs
    }
    async with session.post(url, json=json_payload) as response:
        response_json = await response.json()
        print(f"{response_json}")
        end_time = time.time()
        request_time = end_time - start_time
        completion_tokens = response_json['usage']['completion_tokens']  # number of generated tokens reported in the response
        return completion_tokens, request_time

async def bound_fetch(sem, session, url, pbar):
    # The semaphore caps the number of in-flight requests at the configured maximum
    async with sem:
        result = await fetch(session, url)
        pbar.update(1)
        return result

async def run(load_url, max_concurrent_requests, total_requests):
    """
    Run the benchmark by sending many concurrent requests.

    Args:
        load_url (str): URL to send the requests to.
        max_concurrent_requests (int): Maximum number of concurrent requests.
        total_requests (int): Total number of requests to send.

    Returns:
        tuple: Total number of completion tokens and the list of response times.
    """
    # Semaphore that limits the number of concurrent requests
    sem = asyncio.Semaphore(max_concurrent_requests)

    # Open an asynchronous HTTP session
    async with aiohttp.ClientSession() as session:
        tasks = []

        # Progress bar to visualize request progress
        with tqdm(total=total_requests) as pbar:
            # Create tasks until the total request count is reached
            for _ in range(total_requests):
                # One task per request; each task respects the semaphore limit
                task = asyncio.ensure_future(bound_fetch(sem, session, load_url, pbar))
                tasks.append(task)

            # Wait for all tasks to finish and collect their results
            results = await asyncio.gather(*tasks)

        # Sum the completion tokens over all results
        completion_tokens = sum(result[0] for result in results)

        # Extract the response time of each request
        response_times = [result[1] for result in results]

    # Return the total completion tokens and the list of response times
    return completion_tokens, response_times

if __name__ == '__main__':
    import sys

    if len(sys.argv) != 3:
        print("Usage: python bench.py <C> <N>")
        sys.exit(1)

    C = int(sys.argv[1])  # maximum number of concurrent requests
    N = int(sys.argv[2])  # total number of requests

    # Both vLLM and Ollama expose an OpenAI-compatible API, which keeps the test simple
    url = 'http://10.1.9.167:11434/v1/chat/completions'

    start_time = time.time()
    completion_tokens, response_times = asyncio.run(run(url, C, N))
    end_time = time.time()

    # Total wall-clock time
    total_time = end_time - start_time
    # Average time per request
    avg_time_per_request = sum(response_times) / len(response_times)
    # Tokens generated per second
    tokens_per_second = completion_tokens / total_time

    print('Performance Results:')
    print(f'  Total requests            : {N}')
    print(f'  Max concurrent requests   : {C}')
    print(f'  Total time                : {total_time:.2f} seconds')
    print(f'  Average time per request  : {avg_time_per_request:.2f} seconds')
    print(f'  Tokens per second         : {tokens_per_second:.2f}')
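The script reports only the mean request time, which hides how the slowest requests behave. As a possible extension, the sketch below adds the median and 95th-percentile latency using the standard-library `statistics` module. `summarize` is a hypothetical helper that is not part of the original script, and the sample numbers are made up for illustration; in the script it would receive `response_times`, `completion_tokens` and `total_time`.

```python
import statistics


def summarize(response_times, completion_tokens, total_time):
    """Return throughput and latency percentiles for one benchmark run.

    Needs at least two response times, because statistics.quantiles
    raises StatisticsError on fewer data points.
    """
    ordered = sorted(response_times)
    # n=100 yields 99 cut points: index 49 is the median, index 94 is p95.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "requests": len(ordered),
        "mean_s": statistics.fmean(ordered),
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "max_s": ordered[-1],
        "tokens_per_s": completion_tokens / total_time,
    }


# Made-up sample data (NOT measured): latencies of 1..100 seconds.
sample_times = [float(t) for t in range(1, 101)]
stats = summarize(sample_times, completion_tokens=5000, total_time=100.0)
for name, value in stats.items():
    print(f"{name:>13}: {value}")
```

A p95 far above the mean would suggest that some requests spend a long time waiting behind others, which the average alone does not show.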

Run result 1:

Performance Results:

Total requests : 2000

Max concurrent requests : 50

Total time : 8360.14 seconds

Average time per request : 206.93 seconds

Tokens per second : 83.43
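A quick consistency check on these figures, using only the numbers printed above: multiplying throughput by total time gives the total number of generated tokens (roughly 697,000, or about 349 per request), and Little's law (mean requests in flight = request rate × mean latency) gives about 49.5, close to the configured limit of 50. The variable names below are my own. The reading that the mean request time includes waiting on the server is an interpretation, not something the script measures directly.

```python
# Figures copied from the run result above.
total_requests = 2000
max_concurrency = 50
total_time_s = 8360.14
avg_latency_s = 206.93
tokens_per_s = 83.43

# Total generated tokens implied by throughput and wall-clock time.
total_tokens = tokens_per_s * total_time_s
tokens_per_request = total_tokens / total_requests

# Completed requests per second over the whole run.
requests_per_s = total_requests / total_time_s

# Little's law: mean number of requests in flight = rate * mean latency.
in_flight = requests_per_s * avg_latency_s

# Tokens per second as seen by a single client request (includes any waiting).
per_request_tokens_per_s = tokens_per_request / avg_latency_s

print(f"total tokens          : {total_tokens:.0f}")
print(f"tokens per request    : {tokens_per_request:.1f}")
print(f"requests per second   : {requests_per_s:.4f}")
print(f"mean in-flight        : {in_flight:.1f} (limit {max_concurrency})")
print(f"tokens/s per request  : {per_request_tokens_per_s:.2f}")
```

The in-flight value sitting just under 50 indicates that the client kept its semaphore saturated for almost the entire run.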

Run result 2:

GPU memory usage:

(base) root@alg-dev17:~# nvidia-smi

Thu Jun 27 16:21:36 2024

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 555.42.02              Driver Version: 555.42.02      CUDA Version: 12.5     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1080 Ti     On  |   00000000:0B:00.0 Off |                  N/A |
| 35%   64C    P2            212W /  250W |    7899MiB /  11264MiB |     83%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A      9218      C   ...unners/cuda_v11/ollama_llama_server       7896MiB |
+-----------------------------------------------------------------------------------------+

For reference only; please credit the source when reposting!