Preface

On November 6, 2023, OpenAI announced a series of new capabilities at DevDay, including the GPT Store and the Assistants API.

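At their core, GPTs and the Assistants API are about lowering the barrier to development.

They strike a trade-off between controllability and ease of use:

- More technical routes to choose from: the native API, GPTs, and the Assistants API

- GPTs serve as showcases that educate customers and help open up the market

- For more freedom, develop with the Assistants API

- For the finest-grained optimization, you still need the native API plus RAG

- For an Assistants API from a Chinese LLM provider, see Minimax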

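Main capabilities of the Assistants API

Currently available:

- Create and manage assistants, each with its own configuration

- Multi-turn conversations of unlimited length, with the history stored on OpenAI's servers

- File-based RAG backed by OpenAI's own vector store

- Code Interpreter:

  - Writes and runs Python code in a sandbox

  - Corrects its own code

  - Accepts files passed to it

- Function Calling

- A Playground for debugging online

Promised for the future:

- DALL·E support

- Image messages

- Configurable RAG settings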

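Pricing:

- Billed by token. Multi-turn conversations and RAG alike are charged for the tokens actually consumed

- If the conversation history grows beyond the model's context window, the oldest messages are dropped automatically

- Files are billed by data size and storage duration: vector storage costs $0.10 per GB per day

- Each Code Interpreter session costs $0.03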
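As a worked example of the storage and Code Interpreter rates listed above, here is a minimal sketch that estimates those two cost components. The rates are hard-coded from this section and may have changed since; token charges are left out because no per-token rate is given here. The function name `estimate_cost` is illustrative.

```python
from decimal import Decimal

# Rates quoted in this section (assumption: they may have changed since)
VECTOR_STORE_USD_PER_GB_DAY = Decimal("0.10")
CODE_INTERPRETER_USD_PER_SESSION = Decimal("0.03")


def estimate_cost(storage_gb, days, interpreter_sessions):
    """Estimate vector-storage plus Code Interpreter cost in USD (tokens excluded)."""
    # Decimal(str(...)) avoids binary floating-point rounding in money arithmetic
    storage = Decimal(str(storage_gb)) * days * VECTOR_STORE_USD_PER_GB_DAY
    interpreter = interpreter_sessions * CODE_INTERPRETER_USD_PER_SESSION
    return storage + interpreter


# 2.5 GB kept for 30 days (7.50) plus 100 Code Interpreter sessions (3.00)
print(estimate_cost(2.5, 30, 100))  # 10.500
```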

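Creating an Assistant

You can create one assistant per application, or even one per conversational scenario (each with its own history) within an application.

Creating one in code is possible and not complicated, for example: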

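```python
from openai import OpenAI

# Initialize the OpenAI client (reads OPENAI_API_KEY from the environment)
client = OpenAI()

# Create the assistant
assistant = client.beta.assistants.create(
    name="小U",
    instructions="Your name is 小U. You are the assistant for UAP, a Java technology framework, and you answer users' questions about UAP.",
    model="gpt-4-turbo",
)
```

However, a better approach is to create it online in the Playground, because: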

- It is easier to adjust

- It is easier to test

Sample Assistant configuration

Instructions:

Your name is 小U. You are the assistant for UAP, a Java technology framework, and you answer users' questions about UAP.

Functions:

```json
{
    "name": "ask_database",
    "description": "Use this function to answer user questions about course schedule. Output should be a fully formed SQL query.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {
                "type": "string",
                "description": "SQL query extracting info to answer the user's question.\nSQL should be written using this database schema:\n\nCREATE TABLE Courses (\n\tid INT AUTO_INCREMENT PRIMARY KEY,\n\tcourse_date DATE NOT NULL,\n\tstart_time TIME NOT NULL,\n\tend_time TIME NOT NULL,\n\tcourse_name VARCHAR(255) NOT NULL,\n\tinstructor VARCHAR(255) NOT NULL\n);\n\nThe query should be returned in plain text, not in JSON.\nThe query should only contain grammars supported by SQLite."
            }
        },
        "required": [
            "query"
        ]
    }
}
```

Accessing the Assistant from code

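Managing threads

Threads:

- A thread stores the conversation history, i.e. its messages

- One assistant can have many threads

- A thread can hold an unlimited number of messages

- A user's multi-turn conversation with an assistant can be kept in a single thread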

```python
import json

def show_json(obj):
    """Pretty-print any SDK object as indented JSON"""
    print(json.dumps(
        json.loads(obj.model_dump_json()),
        indent=4,
        ensure_ascii=False
    ))
```

```python
from openai import OpenAI
import os
from dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv())

# Initialize the OpenAI client
client = OpenAI()  # since openai >= 1.3.0, OPENAI_API_KEY and OPENAI_BASE_URL are read from the environment by default

# Create a thread
thread = client.beta.threads.create()
show_json(thread)
```

```
{
    "id": "thread_zkT0xybD8VslhJzJJNY3IHuX",
    "created_at": 1716553797,
    "metadata": {},
    "object": "thread",
    "tool_resources": {
        "code_interpreter": null,
        "file_search": null
    }
}
```

You can attach custom metadata as needed, for example storing information about the user a thread belongs to when you create it.

```python
thread = client.beta.threads.create(
    metadata={"fullname": "王卓然", "username": "taliux"}
)
show_json(thread)
```

```
{
    "id": "thread_1LMmbLMx2ZrRQjymDOGQGYGX",
    "created_at": 1716553801,
    "metadata": {
        "fullname": "王卓然",
        "username": "taliux"
    },
    "object": "thread",
    "tool_resources": {
        "code_interpreter": null,
        "file_search": null
    }
}
```
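Metadata is a small set of string key-value pairs, so it can be worth checking it client-side before sending. The limits below (at most 16 pairs, keys up to 64 characters, values up to 512 characters, both strings) are taken from the OpenAI API reference at the time of writing and should be re-checked; `validate_metadata` is a hypothetical helper, not part of the SDK.

```python
# Limits per the API reference at the time of writing (assumption: re-check before relying on them)
MAX_PAIRS = 16
MAX_KEY_LEN = 64
MAX_VALUE_LEN = 512


def validate_metadata(metadata):
    """Return a list of problems; an empty list means the dict looks acceptable."""
    problems = []
    if len(metadata) > MAX_PAIRS:
        problems.append(f"too many pairs: {len(metadata)} > {MAX_PAIRS}")
    for key, value in metadata.items():
        if not isinstance(key, str) or not isinstance(value, str):
            problems.append(f"key and value must be strings: {key!r}")
            continue
        if len(key) > MAX_KEY_LEN:
            problems.append(f"key too long: {key[:20]}...")
        if len(value) > MAX_VALUE_LEN:
            problems.append(f"value too long for key {key!r}")
    return problems


print(validate_metadata({"fullname": "王卓然", "username": "taliux"}))  # []
print(validate_metadata({"age": 30}))  # the value is not a string
```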

If you save the thread ID, you can continue the conversation the next time the program runs.

To get the thread object from a thread ID:

```python
thread = client.beta.threads.retrieve(thread.id)
show_json(thread)
```

```
{
    "id": "thread_1LMmbLMx2ZrRQjymDOGQGYGX",
    "created_at": 1716553801,
    "metadata": {
        "fullname": "王卓然",
        "username": "taliux"
    },
    "object": "thread",
    "tool_resources": {
        "code_interpreter": {
            "file_ids": []
        },
        "file_search": null
    }
}
```
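Because a saved thread ID is enough to resume a conversation, an application typically maps each user to a thread ID. Below is a minimal sketch that assumes a local JSON file as storage (a real app would more likely use its own database). `ThreadStore` is hypothetical; its `create_thread` callback would in practice be something like `lambda: client.beta.threads.create().id`, and it is injected here so the sketch runs offline.

```python
import json
import tempfile
from pathlib import Path


class ThreadStore:
    """Hypothetical helper: map usernames to thread IDs, persisted in a JSON file."""

    def __init__(self, path, create_thread):
        self.path = Path(path)
        # Callable returning a new thread ID, e.g. lambda: client.beta.threads.create().id
        self.create_thread = create_thread
        self.ids = json.loads(self.path.read_text()) if self.path.exists() else {}

    def thread_for(self, username):
        """Return the user's saved thread ID, creating and saving a new one if needed."""
        if username not in self.ids:
            self.ids[username] = self.create_thread()
            self.path.write_text(json.dumps(self.ids))
        return self.ids[username]


# Offline demo: a fake factory stands in for client.beta.threads.create()
fake_ids = iter(["thread_a", "thread_b"])
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "threads.json"
    store = ThreadStore(path, create_thread=lambda: next(fake_ids))
    print(store.thread_for("taliux"))  # thread_a (newly created)
    print(store.thread_for("taliux"))  # thread_a (reused)
    # A later run reloads the mapping from disk and resumes the same thread
    print(ThreadStore(path, create_thread=lambda: next(fake_ids)).thread_for("taliux"))  # thread_a
```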

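In addition, there are:

- threads.update(): modify a thread's metadata and tool_resources (the "Modify thread" endpoint in the API reference)

- threads.retrieve(): retrieve a thread

- threads.delete(): delete a thread

See the documentation for details: https://platform.openai.com/docs/api-reference/threads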

Adding messages to a thread

Messages have a richer structure:

- Besides text, they can contain images and files

- They also have metadata

```python
message = client.beta.threads.messages.create(
    thread_id=thread.id,  # a message must belong to a thread
    role="user",  # "user" or "assistant"; assistant messages are added automatically, so we rarely create them ourselves
    content="What can you do?",
)
show_json(message)
```

```
{
    "id": "msg_wDZxbO2oi2rAvk9Yxgqw0hVP",
    "assistant_id": null,
    "attachments": [],
    "completed_at": null,
    "content": [
        {
            "text": {
                "annotations": [],
                "value": "What can you do?"
            },
            "type": "text"
        }
    ],
    "created_at": 1716553809,
    "incomplete_at": null,
    "incomplete_details": null,
    "metadata": {},
    "object": "thread.message",
    "role": "user",
    "run_id": null,
    "status": null,
    "thread_id": "thread_1LMmbLMx2ZrRQjymDOGQGYGX"
}
```

There are also the following functions:

- threads.messages.retrieve(): retrieve a message

- threads.messages.update(): update a message's metadata

- threads.messages.list(): list all messages in a given thread

See the documentation for details: https://platform.openai.com/docs/api-reference/messages

You can also initialize a list of messages when creating a thread:

```python
thread = client.beta.threads.create(
    messages=[
        {
            "role": "user",
            "content": "Hello",
        },
        {
            "role": "assistant",
            "content": "How can I help you?",
        },
        {
            "role": "user",
            "content": "Who are you?",
        },
    ]
)

show_json(thread)  # show the thread
print("-----")
show_json(client.beta.threads.messages.list(
    thread.id))  # show the list of messages in the thread
```

```
{
    "id": "thread_Qf32rY62YZrpW8d3nEm51mLn",
    "created_at": 1716553813,
    "metadata": {},
    "object": "thread",
    "tool_resources": {
        "code_interpreter": null,
        "file_search": null
    }
}
-----
{
    "data": [
        {
            "id": "msg_MkAm2f87yE3ynnLOzXCLq5XR",
            "assistant_id": null,
            "attachments": [],
            "completed_at": null,
            "content": [
                {
                    "text": {
                        "annotations": [],
                        "value": "Who are you?"
                    },
                    "type": "text"
                }
            ],
            "created_at": 1716553813,
            "incomplete_at": null,
            "incomplete_details": null,
            "metadata": {},
            "object": "thread.message",
            "role": "user",
            "run_id": null,
            "status": null,
            "thread_id": "thread_Qf32rY62YZrpW8d3nEm51mLn"
        },
        {
            "id": "msg_tTgI3MS6t19pzQM7ItFJkZaA",
            "assistant_id": null,
            "attachments": [],
            "completed_at": null,
            "content": [
                {
                    "text": {
                        "annotations": [],
                        "value": "How can I help you?"
                    },
                    "type": "text"
                }
            ],
            "created_at": 1716553813,
            "incomplete_at": null,
            "incomplete_details": null,
            "metadata": {},
            "object": "thread.message",
            "role": "assistant",
            "run_id": null,
            "status": null,
            "thread_id": "thread_Qf32rY62YZrpW8d3nEm51mLn"
        },
        {
            "id": "msg_imlUh5ckePY0SaBmE6dDB3AY",
            "assistant_id": null,
            "attachments": [],
            "completed_at": null,
            "content": [
                {
                    "text": {
                        "annotations": [],
                        "value": "Hello"
                    },
                    "type": "text"
                }
            ],
            "created_at": 1716553813,
            "incomplete_at": null,
            "incomplete_details": null,
            "metadata": {},
            "object": "thread.message",
            "role": "user",
            "run_id": null,
            "status": null,
            "thread_id": "thread_Qf32rY62YZrpW8d3nEm51mLn"
        }
    ],
    "object": "list",
    "first_id": "msg_MkAm2f87yE3ynnLOzXCLq5XR",
    "last_id": "msg_imlUh5ckePY0SaBmE6dDB3AY",
    "has_more": false
}
```
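In the output above, messages.list() returns the newest message first (its default order is descending). Below is a minimal sketch of turning such a payload into a chronological transcript, working on the plain dicts that show_json prints; with the SDK you could instead pass order="asc" to messages.list(). Only "text" content blocks are handled and other block types (such as images) are skipped. `to_transcript` is a hypothetical helper, and the payload is a trimmed-down example shaped like the output above.

```python
def to_transcript(message_list):
    """Turn a messages.list() payload (newest first) into chronological (role, text) pairs."""
    transcript = []
    # Messages created together can share one created_at value (as in the output above),
    # so reverse the list rather than sorting by timestamp.
    for message in reversed(message_list["data"]):
        # A message's content is a list of blocks; keep only the text ones
        texts = [block["text"]["value"]
                 for block in message["content"] if block["type"] == "text"]
        transcript.append((message["role"], "\n".join(texts)))
    return transcript


# Trimmed-down payload shaped like the list output above (newest first)
payload = {
    "data": [
        {"role": "user", "content": [{"type": "text", "text": {"value": "Who are you?", "annotations": []}}]},
        {"role": "assistant", "content": [{"type": "text", "text": {"value": "How can I help you?", "annotations": []}}]},
        {"role": "user", "content": [{"type": "text", "text": {"value": "Hello", "annotations": []}}]},
    ],
    "object": "list",
    "has_more": False,
}

for role, text in to_transcript(payload):
    print(f"{role}: {text}")
```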

Starting a run

- A run connects an assistant to a thread to carry out the conversation

- Each prompt is handled by one run

Running directly

```python
assistant_id = "asst_pxKAiBW0VB62glWPjeRDA7mR"  # copied from the Playground

# Create the run and poll its status until the model stops working on it
run = client.beta.threads.runs.create_and_poll(
    thread_id=thread.id,
    assistant_id=assistant_id,
)

if run.status == 'completed':
    messages = client.beta.threads.messages.list(
        thread_id=thread.id
    )
    show_json(messages)
else:
    print(run.status)
```

Run status

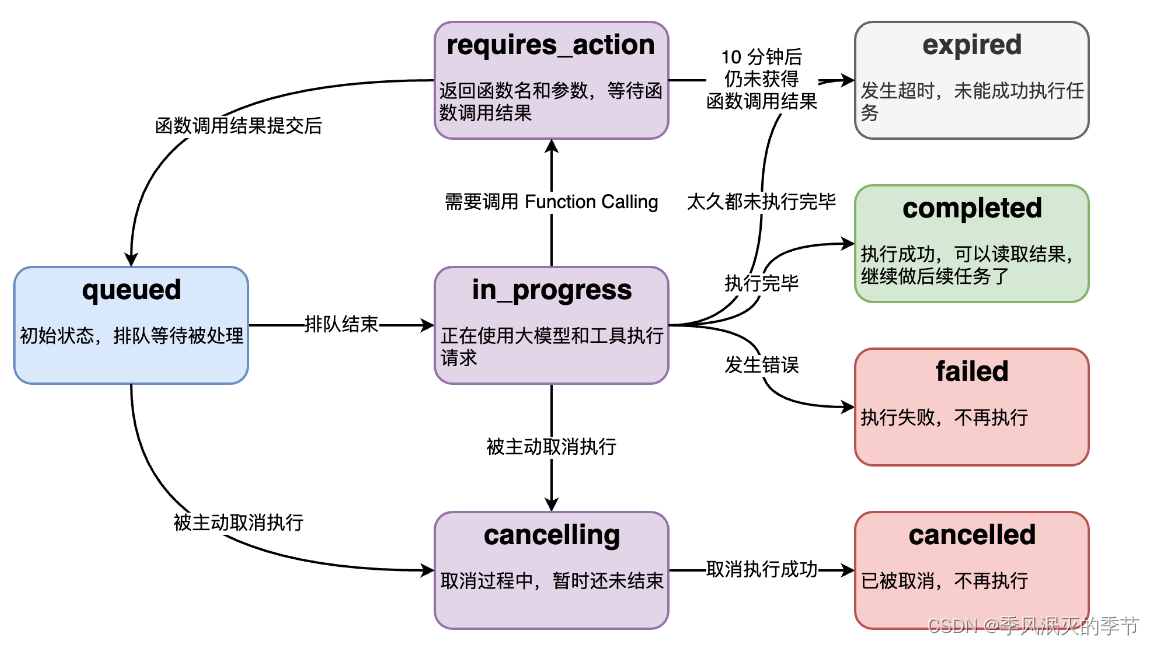

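Under the hood, a run is an asynchronous call: it returns without waiting for the model to finish. We check run.status to follow the model's progress and decide what to do next (create_and_poll, used above, does this polling for you).

The figure shows the possible values of run.status and the transitions between them.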
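To make the asynchronous model concrete, here is a minimal polling loop, roughly what create_and_poll does for you. The status names come from the Assistants API run object; `wait_for_run` and the fake `retrieve` callback (standing in for `client.beta.threads.runs.retrieve(...).status`) are hypothetical so the sketch runs offline.

```python
import time

# Statuses in which the run is still being worked on server-side
PENDING = {"queued", "in_progress", "cancelling"}


def wait_for_run(retrieve, interval=0.5, timeout=60.0):
    """Poll retrieve() until the run leaves the pending states; return that status.

    requires_action is returned as well, because the caller must submit
    tool outputs before the run can continue.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = retrieve()
        if status not in PENDING:
            return status  # e.g. completed, requires_action, failed, cancelled, expired
        if time.monotonic() > deadline:
            raise TimeoutError(f"run still {status} after {timeout}s")
        time.sleep(interval)


# Offline stand-in for repeatedly retrieving the run
fake_statuses = iter(["queued", "in_progress", "in_progress", "completed"])
print(wait_for_run(lambda: next(fake_statuses), interval=0))  # completed
```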

Streaming runs

1. Create the event-handler callbacks

```python
from typing_extensions import override
from openai import AssistantEventHandler

class EventHandler(AssistantEventHandler):
    @override
    def on_text_created(self, text) -> None:
        """Called when the assistant starts a new text reply"""
        print("\nassistant > ", end="", flush=True)

    @override
    def on_text_delta(self, delta, snapshot):
        """Called for each streamed chunk of the reply"""
        print(delta.value, end="", flush=True)
```

2. Start the run

```python
# Add a new user message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="What did you say?",
)

# Use the stream interface and pass in the EventHandler
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant_id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

There are also the following functions:

- threads.runs.list(): list the runs belonging to a thread

- threads.runs.retrieve(): retrieve a run

- threads.runs.update(): modify a run's metadata

- threads.runs.cancel(): cancel a run that is in_progress

See the documentation for details: https://platform.openai.com/docs/api-reference/runs

Using tools

Declaring Code_Interpreter when creating an Assistant

If you create the assistant in code:

```python
assistant = client.beta.assistants.create(
    name="Demo Assistant",
    instructions="You are an AI assistant. You can answer many math questions by writing code.",
    tools=[{"type": "code_interpreter"}],
    model="gpt-4-turbo"
)
```

Adding code_interpreter event handling to the callbacks

```python
from typing_extensions import override
from openai import AssistantEventHandler

class EventHandler(AssistantEventHandler):
    @override
    def on_text_created(self, text) -> None:
        """Called when the assistant starts a new text reply"""
        print("\nassistant > ", end="", flush=True)

    @override
    def on_text_delta(self, delta, snapshot):
        """Called for each streamed chunk of the reply"""
        print(delta.value, end="", flush=True)

    @override
    def on_tool_call_created(self, tool_call):
        """Called when the assistant starts a tool call"""
        print(f"\nassistant > {tool_call.type}\n", flush=True)

    @override
    def on_tool_call_delta(self, delta, snapshot):
        """Called for each streamed chunk of a tool call"""
        if delta.type == 'code_interpreter':
            # Stream the code the model is writing
            if delta.code_interpreter.input:
                print(delta.code_interpreter.input, end="", flush=True)
            # Print the execution logs once outputs arrive
            if delta.code_interpreter.outputs:
                print("\n\noutput >", flush=True)
                for output in delta.code_interpreter.outputs:
                    if output.type == "logs":
                        print(f"\n{output.logs}", flush=True)
```

Send a Code Interpreter request:

```python
# Create a thread
thread = client.beta.threads.create()

# Add a new user message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Use code to compute the square root of 1234567",
)

# Use the stream interface and pass in the EventHandler
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant.id,  # an assistant with code_interpreter enabled, e.g. the one created above
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

```
assistant > code_interpreter

import math

# Compute the square root of 1234567
square_root = math.sqrt(1234567)
square_root

output >

1111.1107055554814

assistant > The square root of 1234567 is approximately 1111.11.
```

Working with files in Code_Interpreter

```python
# Upload a file to OpenAI (the with-block closes the local file afterwards)
with open("mydata.csv", "rb") as f:
    file = client.files.create(
        file=f,
        purpose='assistants'
    )

# Create the assistant
my_assistant = client.beta.assistants.create(
    name="CodeInterpreterWithFileDemo",
    instructions="You are a data analyst. Analyze the data as requested.",
    model="gpt-4-turbo",
    tools=[{"type": "code_interpreter"}],
    tool_resources={
        "code_interpreter": {
            "file_ids": [file.id]  # attach the file to code_interpreter
        }
    }
)
```

```python
# Create a thread
thread = client.beta.threads.create()

# Add a new user message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Compute the total sales",
)

# Use the stream interface and pass in the EventHandler
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=my_assistant.id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

For file operations, there are also the following functions:

- client.files.list(): list all files

- client.files.retrieve(): retrieve a file object

- client.files.delete(): delete a file

- client.files.content(): read a file's content

See the documentation for details: https://platform.openai.com/docs/api-reference/files

Declaring a function when creating an Assistant

If you create the assistant in code:

```python
assistant = client.beta.assistants.create(
    instructions="Your name is 瓜瓜. You are the assistant for the AGI class. You only answer questions related to large AI models and do not make small talk with students. Before answering each question, break it down and output your step-by-step reasoning.",
    model="gpt-4o",
    tools=[{
        "type": "function",
        "function": {
            "name": "ask_database",
            "description": "Use this function to answer user questions about course schedule. Output should be a fully formed SQL query.",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "SQL query extracting info to answer the user's question.\nSQL should be written using this database schema:\n\nCREATE TABLE Courses (\n\tid INT AUTO_INCREMENT PRIMARY KEY,\n\tcourse_date DATE NOT NULL,\n\tstart_time TIME NOT NULL,\n\tend_time TIME NOT NULL,\n\tcourse_name VARCHAR(255) NOT NULL,\n\tinstructor VARCHAR(255) NOT NULL\n);\n\nThe query should be returned in plain text, not in JSON.\nThe query should only contain grammars supported by SQLite."
                    }
                },
                "required": [
                    "query"
                ]
            }
        }
    }]
)
```

Defining the local function

```python
# Define the local function and database
import sqlite3

# Create an in-memory database connection
conn = sqlite3.connect(':memory:')
cursor = conn.cursor()

# Create the Courses table
# (SQLite spells auto-increment as INTEGER PRIMARY KEY AUTOINCREMENT; AUTO_INCREMENT is MySQL syntax)
cursor.execute("""
CREATE TABLE Courses (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    course_date DATE NOT NULL,
    start_time TIME NOT NULL,
    end_time TIME NOT NULL,
    course_name VARCHAR(255) NOT NULL,
    instructor VARCHAR(255) NOT NULL
);
""")

# Insert 5 explicit sample records
timetable = [
    ('2024-01-23', '20:00', '22:00', 'aaaaa', '张三'),
    ('2024-01-25', '20:00', '22:00', 'bbbbbbbb', '张三'),
    ('2024-01-29', '20:00', '22:00', 'ccccc', '张三'),
    ('2024-02-20', '20:00', '22:00', 'dddddd', '张三'),
    ('2024-02-22', '20:00', '22:00', 'eeeeeee', '李四'),
]

for record in timetable:
    cursor.execute('''
    INSERT INTO Courses (course_date, start_time, end_time, course_name, instructor)
    VALUES (?, ?, ?, ?, ?)
    ''', record)

# Commit the transaction
conn.commit()

def ask_database(query):
    """Run the SQL query generated by the model and return the rows as a string"""
    cursor.execute(query)
    records = cursor.fetchall()
    return str(records)

# Functions the assistant may call back, keyed by name
available_functions = {
    "ask_database": ask_database,
}
```

Adding callback event handling

```python
import json

from typing_extensions import override
from openai import AssistantEventHandler

class EventHandler(AssistantEventHandler):
    @override
    def on_text_created(self, text) -> None:
        """Called when the assistant starts a new text reply"""
        print("\nassistant > ", end="", flush=True)

    @override
    def on_text_delta(self, delta, snapshot):
        """Called for each streamed chunk of the reply"""
        print(delta.value, end="", flush=True)

    @override
    def on_tool_call_created(self, tool_call):
        """Called when the assistant starts a tool call"""
        print(f"\nassistant > {tool_call.type}\n", flush=True)

    @override
    def on_tool_call_delta(self, delta, snapshot):
        """Called for each streamed chunk of a tool call"""
        if delta.type == 'code_interpreter':
            if delta.code_interpreter.input:
                print(delta.code_interpreter.input, end="", flush=True)
            if delta.code_interpreter.outputs:
                print("\n\noutput >", flush=True)
                for output in delta.code_interpreter.outputs:
                    if output.type == "logs":
                        print(f"\n{output.logs}", flush=True)

    @override
    def on_event(self, event):
        """Handle the 'thread.run.requires_action' event"""
        if event.event == 'thread.run.requires_action':
            run_id = event.data.id  # ID of the run waiting for tool outputs
            self.handle_requires_action(event.data, run_id)

    def handle_requires_action(self, data, run_id):
        tool_outputs = []
        for tool in data.required_action.submit_tool_outputs.tool_calls:
            arguments = json.loads(tool.function.arguments)
            print(f"{tool.function.name}({arguments})", flush=True)
            # Run the local function and record its (string) result
            tool_outputs.append({
                "tool_call_id": tool.id,
                "output": available_functions[tool.function.name](**arguments),
            })
        # Submit the function results and let the run continue
        self.submit_tool_outputs(tool_outputs, run_id)

    def submit_tool_outputs(self, tool_outputs, run_id):
        """Submit the function results and keep streaming with a fresh handler"""
        with client.beta.threads.runs.submit_tool_outputs_stream(
            thread_id=self.current_run.thread_id,
            run_id=run_id,
            tool_outputs=tool_outputs,
            event_handler=EventHandler(),
        ) as stream:
            stream.until_done()
```

```python
# Create a thread
thread = client.beta.threads.create()

# Add a user message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="How long is a class on average?",
)

# Use the stream interface and pass in the EventHandler
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant_id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

Two functions with no dependency on each other are called together in a single request:

```python
# Create a thread
thread = client.beta.threads.create()

# Add a user message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="How many classes does 张三 teach, and how many more than 李四?",
)

# Use the stream interface and pass in the EventHandler
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant_id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

```
assistant > function

assistant > function

ask_database({'query': "SELECT COUNT(*) AS count FROM Courses WHERE instructor = '张三';"})
ask_database({'query': "SELECT COUNT(*) AS count FROM Courses WHERE instructor = '李四';"})

assistant > 张三 taught 4 classes and 李四 taught 1, so 张三 taught 3 more classes than 李四.
```
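The requires_action handling above boils down to three steps: parse each tool call's JSON arguments, call the matching local function, and collect {"tool_call_id", "output"} pairs for submission. Below is a minimal offline sketch of that dispatch step against the same Courses rows, replaying the two parallel calls shown above. The tool calls are plain dicts standing in for the SDK objects (the IDs call_1 to call_3 are made up), the average-duration SQL is only a query the model might plausibly write, and returning errors as the output string instead of raising is a design choice of this sketch so the model can see what went wrong.

```python
import json
import sqlite3

# Rebuild the sample Courses table in memory (same rows as above)
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Courses (id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " course_date DATE NOT NULL, start_time TIME NOT NULL, end_time TIME NOT NULL,"
    " course_name VARCHAR(255) NOT NULL, instructor VARCHAR(255) NOT NULL)"
)
conn.executemany(
    "INSERT INTO Courses (course_date, start_time, end_time, course_name, instructor)"
    " VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-01-23", "20:00", "22:00", "aaaaa", "张三"),
        ("2024-01-25", "20:00", "22:00", "bbbbbbbb", "张三"),
        ("2024-01-29", "20:00", "22:00", "ccccc", "张三"),
        ("2024-02-20", "20:00", "22:00", "dddddd", "张三"),
        ("2024-02-22", "20:00", "22:00", "eeeeeee", "李四"),
    ],
)


def ask_database(query):
    """Run a SQL query and return the rows as a string (tool outputs must be strings)."""
    return str(conn.execute(query).fetchall())


available_functions = {"ask_database": ask_database}


def run_tool_calls(tool_calls):
    """Run each requested tool call and build the list to pass as tool_outputs."""
    tool_outputs = []
    for call in tool_calls:
        name = call["function"]["name"]
        try:
            arguments = json.loads(call["function"]["arguments"])
            output = available_functions[name](**arguments)
        except Exception as exc:  # hand the error back to the model instead of crashing
            output = f"error calling {name}: {exc!r}"
        tool_outputs.append({"tool_call_id": call["id"], "output": output})
    return tool_outputs


def make_call(call_id, query):
    """Build a dict shaped like one entry of required_action.submit_tool_outputs.tool_calls."""
    return {"id": call_id, "function": {"name": "ask_database",
                                        "arguments": json.dumps({"query": query})}}


# The two parallel calls from the run above
parallel_calls = [
    make_call("call_1", "SELECT COUNT(*) AS count FROM Courses WHERE instructor = '张三';"),
    make_call("call_2", "SELECT COUNT(*) AS count FROM Courses WHERE instructor = '李四';"),
]
print(run_tool_calls(parallel_calls))

# Average class length in minutes; SQLite treats a bare 'HH:MM' as a time on 2000-01-01
avg_call = [make_call("call_3", "SELECT AVG((strftime('%s', end_time) - strftime('%s', start_time)) / 60.0) FROM Courses;")]
print(run_tool_calls(avg_call))
```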

More stream events: https://platform.openai.com/docs/api-reference/assistants-streaming/events