Background

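- Defog (the company behind the SQLCoder models)

- llama-3 (Meta's open model; llama-3-sqlcoder-8b is fine-tuned from it)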

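Significance

Translating natural language into SQL works somewhat like a brain-computer interface for data: the user states what they want and the model writes the query. It is an important application area for large models.

- SQL is the standard language for querying databases. Relational databases, data tools (DataX, Sqoop, Logstash, Hive) and some non-relational databases (MongoDB, graph databases) accept SQL or offer SQL-like querying

- BI, digital operations, business analysis, big-data analytics

- Intelligent data querying (asking questions of the data in natural language)

- Intelligent question answering

- Intelligent QA approaches before large models:

- Open-source project QABasedOnMedicaKnowledgeGraph

- https://gitcode.com/liuhuanyong/QASystemOnMedicalKG/overview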

To be improved

- Reliability

- Complex or poorly designed (non-standard) database tables

- Information security

llama-3-sqlcoder-8b

Requirements

- Unrestricted internet access (able to get past the Great Firewall) to reach Hugging Face

- An NVIDIA GPU
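How much GPU memory is needed comes down mostly to the weights. A back-of-the-envelope sketch, assuming roughly 8 billion parameters (the "8b" in the model name) and counting weights only, not activations, KV cache or quantization overhead: weight bytes = parameters × bits per parameter / 8.

```python
def weight_bytes(num_params: float, bits_per_param: int) -> float:
    """Approximate bytes needed to hold the model weights alone."""
    return num_params * bits_per_param / 8


N_PARAMS = 8e9  # assumption: about 8 billion parameters, per the "8b" in the model name

for label, bits in [("float16", 16), ("4-bit", 4)]:
    gb = weight_bytes(N_PARAMS, bits) / 1e9  # decimal GB, same unit as the script's 20e9 threshold
    print(f"{label}: about {gb:.0f} GB of weights")
```

This gives about 16 GB for float16 and about 4 GB for 4-bit, which is consistent with the script below only loading float16 above roughly 20 GB. On the 12 GB RTX 3060 Laptop GPU used here, the 4-bit path is the one that runs.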

Model download

- https://huggingface.co/defog/llama-3-sqlcoder-8b

- https://aifasthub.com/models/defog

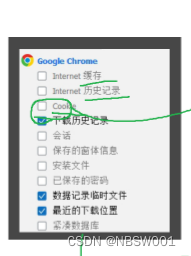
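The script further down loads the model from a local folder named `llama-3-sqlcoder-8b`. One way to fill that folder is `snapshot_download` from the `huggingface_hub` package. The sketch below assumes that package is installed and Hugging Face is reachable; the helper names are made up, and the completeness check is only a rough heuristic (a config file plus at least one weight file), not an official check.

```python
from pathlib import Path

REPO_ID = "defog/llama-3-sqlcoder-8b"
LOCAL_DIR = Path("llama-3-sqlcoder-8b")  # same folder name as model_name in the script


def download_model(repo_id: str = REPO_ID, local_dir: Path = LOCAL_DIR) -> Path:
    """Download every file of the Hub repo into local_dir (needs network access)."""
    from huggingface_hub import snapshot_download  # pip install huggingface_hub

    return Path(snapshot_download(repo_id=repo_id, local_dir=str(local_dir)))


def looks_complete(local_dir: Path) -> bool:
    """Heuristic check: config.json plus at least one *.safetensors or *.bin weight file."""
    has_config = (local_dir / "config.json").is_file()
    has_weights = any(local_dir.glob("*.safetensors")) or any(local_dir.glob("*.bin"))
    return has_config and has_weights
```

Typical use: call `download_model()` once, then check `looks_complete(LOCAL_DIR)` before running the script.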

Environment setup

CUDA

- Check which CUDA version the machine supports

D:\working\code> nvidia-smi
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 528.49       Driver Version: 528.49       CUDA Version: 12.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name            TCC/WDDM | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ... WDDM  | 00000000:03:00.0  On |                  N/A |
| N/A   32C    P8     9W /  80W |    616MiB / 12288MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1476    C+G   C:\Windows\System32\dwm.exe     N/A      |
|    0   N/A  N/A      2572    C+G   ...wekyb3d8bbwe\Video.UI.exe    N/A      |
|    0   N/A  N/A      2964    C+G   ...d\runtime\WeChatAppEx.exe    N/A      |
|    0   N/A  N/A      4280    C+G   ...2txyewy\TextInputHost.exe    N/A      |
|    0   N/A  N/A      4656    C+G   ...artMenuExperienceHost.exe    N/A      |
|    0   N/A  N/A      7636    C+G   C:\Windows\explorer.exe         N/A      |
|    0   N/A  N/A      7924    C+G   ...icrosoft VS Code\Code.exe    N/A      |
|    0   N/A  N/A      8796    C+G   ...5n1h2txyewy\SearchApp.exe    N/A      |
|    0   N/A  N/A      9376    C+G   ...me\Application\chrome.exe    N/A      |
|    0   N/A  N/A     10540      C   ...rograms\Ollama\ollama.exe    N/A      |
|    0   N/A  N/A     11720    C+G   ...y\ShellExperienceHost.exe    N/A      |
|    0   N/A  N/A     13676    C+G   ...ontend\Docker Desktop.exe    N/A      |
+-----------------------------------------------------------------------------+

This shows CUDA Version 12.0, the highest CUDA version the installed driver supports.
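The `CUDA Version` field in that header is the number to compare against when choosing a CUDA toolkit or a torch build. A small sketch for reading it from a script, assuming `nvidia-smi` is on the PATH; the helper names are hypothetical.

```python
import re
import subprocess


def driver_cuda_version(smi_output: str) -> tuple[int, int]:
    """Return (major, minor) from the 'CUDA Version: X.Y' field of nvidia-smi output."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        raise ValueError("no 'CUDA Version' field in nvidia-smi output")
    return int(match.group(1)), int(match.group(2))


def run_nvidia_smi() -> str:
    """Run nvidia-smi and return its text output (needs the NVIDIA driver installed)."""
    result = subprocess.run(["nvidia-smi"], capture_output=True, text=True, check=True)
    return result.stdout


# The header line from the output above; on a real machine pass run_nvidia_smi() instead.
header = "| NVIDIA-SMI 528.49       Driver Version: 528.49       CUDA Version: 12.0     |"
print(driver_cuda_version(header))  # (12, 0)
```

Returning a tuple of ints rather than the raw string lets versions be compared directly, e.g. `(12, 1) > (12, 0)`.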

- Download a CUDA Toolkit release no newer than the driver's CUDA version (12.0 here) from the archive:

https://developer.nvidia.com/cuda-toolkit-archive

Post-install summary:

Installed:
- Nsight for Visual Studio 2022
- Nsight Monitor
Not Installed:
- Nsight for Visual Studio 2019
  Reason: VS2019 was not found
- Nsight for Visual Studio 2017
  Reason: VS2017 was not found
- Integrated Graphics Frame Debugger and Profiler
  Reason: see https://developer.nvidia.com/nsight-vstools
- Integrated CUDA Profilers
  Reason: see https://developer.nvidia.com/nsight-vstools

- Check the version

C:\Users\Administrator>nvcc --version
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2022 NVIDIA Corporation
Built on Mon_Oct_24_19:40:05_Pacific_Daylight_Time_2022
Cuda compilation tools, release 12.0, V12.0.76
Build cuda_12.0.r12.0/compiler.31968024_0

torch

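- torch (PyTorch) is a Python library for tensor computation and for building and training deep learning models

- Check the PyTorch website for the torch build that matches your CUDA version, including builds for older CUDA versions:

https://pytorch.org/get-started/locally/

Here the cu121 wheels were installed; the run output further down shows torch reporting CUDA 12.1 with the GPU available, on a driver that reports CUDA 12.0.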

C:\Users\Administrator>pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
Looking in indexes: https://download.pytorch.org/whl/cu121
Requirement already satisfied: torch in c:\python312\lib\site-packages (2.3.0)
Collecting torchvision
Downloading https://download.pytorch.org/whl/cu121/torchvision-0.18.1%2Bcu121-cp312-cp312-win_amd64.whl (5.7 MB)
---------------------------------------- 5.7/5.7 MB 5.9 MB/s eta 0:00:00
Collecting torchaudio
Downloading https://download.pytorch.org/whl/cu121/torchaudio-2.3.1%2Bcu121-cp312-cp312-win_amd64.whl (4.1 MB)
---------------------------------------- 4.1/4.1 MB 7.2 MB/s eta 0:00:00
Requirement already satisfied: filelock in c:\python312\lib\site-packages (from torch) (3.14.0)
Requirement already satisfied: typing-extensions>=4.8.0 in c:\python312\lib\site-packages (from torch) (4.12.1)
Requirement already satisfied: sympy in c:\python312\lib\site-packages (from torch) (1.12.1)
Requirement already satisfied: networkx in c:\python312\lib\site-packages (from torch) (3.3)
Requirement already satisfied: jinja2 in c:\python312\lib\site-packages (from torch) (3.1.4)
Requirement already satisfied: fsspec in c:\python312\lib\site-packages (from torch) (2024.5.0)
Requirement already satisfied: mkl<=2021.4.0,>=2021.1.1 in c:\python312\lib\site-packages (from torch) (2021.4.0)
Requirement already satisfied: numpy in c:\python312\lib\site-packages (from torchvision) (1.26.4)
Collecting torch
Downloading https://download.pytorch.org/whl/cu121/torch-2.3.1%2Bcu121-cp312-cp312-win_amd64.whl (2423.5 MB)
---------------------------------------- 2.4/2.4 GB 501.6 kB/s eta 0:00:00
Collecting pillow!=8.3.*,>=5.3.0 (from torchvision)
Downloading https://download.pytorch.org/whl/pillow-10.2.0-cp312-cp312-win_amd64.whl (2.6 MB)
---------------------------------------- 2.6/2.6 MB 2.5 MB/s eta 0:00:00
Requirement already satisfied: intel-openmp==2021.* in c:\python312\lib\site-packages (from mkl<=2021.4.0,>=2021.1.1->torch) (2021.4.0)
Requirement already satisfied: tbb==2021.* in c:\python312\lib\site-packages (from mkl<=2021.4.0,>=2021.1.1->torch) (2021.12.0)
Requirement already satisfied: MarkupSafe>=2.0 in c:\python312\lib\site-packages (from jinja2->torch) (2.1.5)
Requirement already satisfied: mpmath<1.4.0,>=1.1.0 in c:\python312\lib\site-packages (from sympy->torch) (1.3.0)
Installing collected packages: pillow, torch, torchvision, torchaudio
Attempting uninstall: torch
Found existing installation: torch 2.3.0
Uninstalling torch-2.3.0:
Successfully uninstalled torch-2.3.0
Successfully installed pillow-10.2.0 torch-2.3.1+cu121 torchaudio-2.3.1+cu121 torchvision-0.18.1+cu121

transformers

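pip install transformers

The script below also imports sqlparse, and loading in 4 bits with device_map="auto" needs accelerate and bitsandbytes:

pip install sqlparse accelerate bitsandbytes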
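Before loading an 8B model it can save time to confirm that everything the script relies on is importable. A minimal sketch using the standard library's `importlib.util.find_spec`; the package list is an assumption drawn from the script's imports and its use of 4-bit loading and `device_map="auto"`.

```python
import importlib.util

# Assumed dependency list: the script imports torch, transformers and sqlparse;
# 4-bit loading uses bitsandbytes, and device_map="auto" uses accelerate.
REQUIRED = ["torch", "transformers", "sqlparse", "accelerate", "bitsandbytes"]


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
print("missing:", missing or "none")
```

`find_spec` only locates the modules without importing them, so the check stays fast even though torch itself is large.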

Write the script

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig
import sqlparse

print("CUDA available:", torch.cuda.is_available())  # whether a GPU can be used
print("GPU count:", torch.cuda.device_count())  # number of visible GPUs
print("CUDA version (via torch):", torch.version.cuda)  # CUDA version torch was built with
print("GPU index:", torch.cuda.current_device())  # index of the current GPU
print("GPU name:", torch.cuda.get_device_name(0))  # name of GPU 0
available_memory = torch.cuda.get_device_properties(0).total_memory  # in bytes
print("GPU memory size:", available_memory)

# Local folder holding the downloaded model files
model_name = "llama-3-sqlcoder-8b"
tokenizer = AutoTokenizer.from_pretrained(model_name)

if available_memory > 20e9:
    # With at least 20 GB of GPU memory, load the model in float16
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        trust_remote_code=True,
        torch_dtype=torch.float16,
        device_map="auto",
        use_cache=True,
    )
else:
    # Otherwise load in 4 bits: slower and less accurate, but it fits in less memory.
    # A BitsAndBytesConfig replaces the deprecated load_in_4bit argument, and a float16
    # compute dtype avoids the slower float32 default that bitsandbytes warns about.
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        trust_remote_code=True,
        quantization_config=BitsAndBytesConfig(
            load_in_4bit=True,
            bnb_4bit_compute_dtype=torch.float16,
        ),
        device_map="auto",
        use_cache=True,
    )

prompt = """<|begin_of_text|><|start_header_id|>user<|end_header_id|>

Generate a SQL query to answer this question: `{question}`

DDL statements:
CREATE TABLE products (
  product_id INTEGER PRIMARY KEY, -- Unique ID for each product
  name VARCHAR(50), -- Name of the product
  price DECIMAL(10,2), -- Price of each unit of the product
  quantity INTEGER -- Current quantity in stock
);

CREATE TABLE customers (
  customer_id INTEGER PRIMARY KEY, -- Unique ID for each customer
  name VARCHAR(50), -- Name of the customer
  address VARCHAR(100) -- Mailing address of the customer
);

CREATE TABLE salespeople (
  salesperson_id INTEGER PRIMARY KEY, -- Unique ID for each salesperson
  name VARCHAR(50), -- Name of the salesperson
  region VARCHAR(50) -- Geographic sales region
);

CREATE TABLE sales (
  sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale
  product_id INTEGER, -- ID of product sold
  customer_id INTEGER, -- ID of customer who made purchase
  salesperson_id INTEGER, -- ID of salesperson who made the sale
  sale_date DATE, -- Date the sale occurred
  quantity INTEGER -- Quantity of product sold
);

CREATE TABLE product_suppliers (
  supplier_id INTEGER PRIMARY KEY, -- Unique ID for each supplier
  product_id INTEGER, -- Product ID supplied
  supply_price DECIMAL(10,2) -- Unit price charged by supplier
);

-- sales.product_id can be joined with products.product_id
-- sales.customer_id can be joined with customers.customer_id
-- sales.salesperson_id can be joined with salespeople.salesperson_id
-- product_suppliers.product_id can be joined with products.product_id<|eot_id|><|start_header_id|>assistant<|end_header_id|>

The following SQL query best answers the question `{question}`:
```sql
"""


def generate_query(question):
    updated_prompt = prompt.format(question=question)
    inputs = tokenizer(updated_prompt, return_tensors="pt").to("cuda")
    generated_ids = model.generate(
        **inputs,
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
        pad_token_id=tokenizer.eos_token_id,
        max_new_tokens=400,
        do_sample=False,  # greedy decoding; temperature/top_p only matter when sampling
        num_beams=1,
    )
    outputs = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)
    # Empty the cache so repeated calls do not run out of GPU memory
    # (particularly important on Colab; memory management is much more
    # straightforward when running on an inference service)
    torch.cuda.empty_cache()
    torch.cuda.synchronize()
    # The decoded text still contains the prompt: keep what follows the
    # ```sql marker, up to the first semicolon
    return outputs[0].split("```sql")[1].split(";")[0]


question = "What was our revenue by product in the new york region last month?"
generated_sql = generate_query(question)
print(sqlparse.format(generated_sql, reindent=True))

Run output

D:\working\code> & C:/Python312/python.exe d:/working/code/sqlcode_v3.py
CUDA available: True
GPU count: 1
CUDA version (via torch): 12.1
GPU index: 0
GPU name: NVIDIA GeForce RTX 3060 Laptop GPU
GPU memory size: 12884377600
Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.
Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.
The `load_in_4bit` and `load_in_8bit` arguments are deprecated and will be removed in the future versions. Please, pass a `BitsAndBytesConfig` object in `quantization_config` argument instead.
Loading checkpoint shards: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4/4 [00:26<00:00, 6.59s/it]
C:\Python312\Lib\site-packages\transformers\generation\configuration_utils.py:515: UserWarning: `do_sample` is set to `False`. However, `temperature` is set to `0.0` -- this flag is only used in sample-based generation modes. You should set `do_sample=True` or unset `temperature`.
  warnings.warn(
C:\Python312\Lib\site-packages\bitsandbytes\nn\modules.py:426: UserWarning: Input type into Linear4bit is torch.float16, but bnb_4bit_compute_dtype=torch.float32 (default). This will lead to slow inference or training speed.
  warnings.warn(
C:\Python312\Lib\site-packages\transformers\models\llama\modeling_llama.py:649: UserWarning: 1Torch was not compiled with flash attention. (Triggered internally at ..\aten\src\ATen\native\transformers\cuda\sdp_utils.cpp:455.)
  attn_output = torch.nn.functional.scaled_dot_product_attention(

SELECT p.name,

SUM(s.quantity * p.price) AS total_revenue

FROM products p

JOIN sales s ON p.product_id = s.product_id

JOIN salespeople sp ON s.salesperson_id = sp.salesperson_id

WHERE sp.region = 'New York'

AND s.sale_date >= CURRENT_DATE - INTERVAL '1 month'

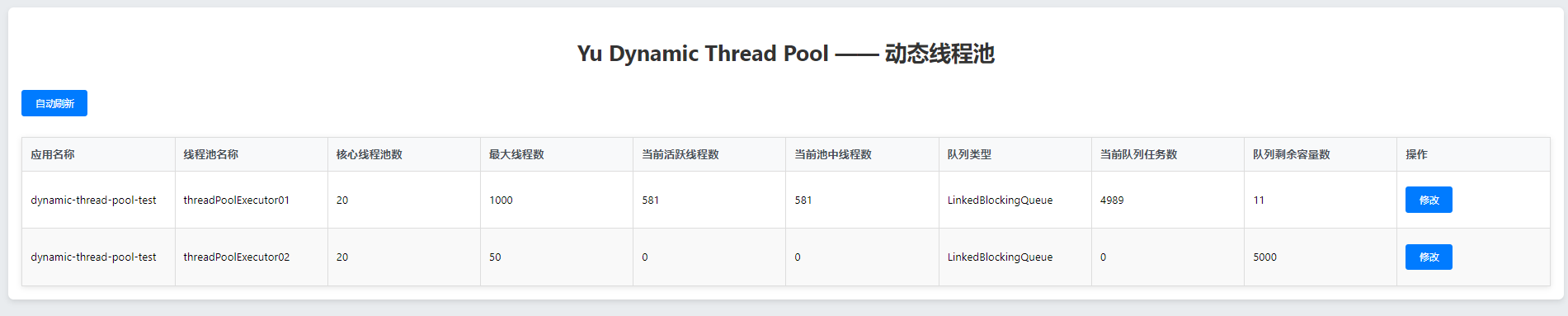

GROUP BY p.name延伸场景

Connecting to a real database

See Defog's documentation: Getting Started | Defog Docs
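Defog's Getting Started guide is the reference here. As a rough local stand-in, assuming an SQLite database accessed through the standard-library `sqlite3` module (not what Defog's docs use), two pieces matter: reading the `CREATE TABLE` statements from the database so they can replace the hand-written DDL in the prompt, and running the generated query on a connection that refuses writes (`PRAGMA query_only`), which also speaks to the information-security concern listed earlier. The tables, rows and helper names are made up for illustration. The query generated above uses PostgreSQL-style `CURRENT_DATE - INTERVAL '1 month'`, which SQLite does not accept, so the example query drops that filter; in practice the SQL dialect has to match the target database.

```python
import sqlite3

# Made-up demo schema and rows: a cut-down SQLite copy of the tables in the prompt.
DEMO_SCHEMA = """
CREATE TABLE products (product_id INTEGER PRIMARY KEY, name VARCHAR(50), price DECIMAL(10,2), quantity INTEGER);
CREATE TABLE salespeople (salesperson_id INTEGER PRIMARY KEY, name VARCHAR(50), region VARCHAR(50));
CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER, customer_id INTEGER,
                    salesperson_id INTEGER, sale_date DATE, quantity INTEGER);
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database filled with a few hypothetical rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(DEMO_SCHEMA)
    conn.executemany("INSERT INTO products VALUES (?, ?, ?, ?)",
                     [(1, "widget", 2.50, 100), (2, "gadget", 10.00, 20)])
    conn.executemany("INSERT INTO salespeople VALUES (?, ?, ?)",
                     [(1, "Alice", "New York"), (2, "Bob", "Boston")])
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?, ?, ?)",
                     [(1, 1, 1, 1, "2024-05-10", 4),
                      (2, 2, 1, 1, "2024-05-12", 2),
                      (3, 1, 2, 2, "2024-05-15", 10)])
    conn.commit()
    return conn


def schema_ddl(conn: sqlite3.Connection) -> str:
    """Collect the stored CREATE TABLE statements for the DDL part of the prompt."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return ";\n".join(sql for (sql,) in rows) + ";"


def run_read_only(conn: sqlite3.Connection, sql: str) -> list:
    """Execute a generated query after switching the connection to read-only."""
    conn.execute("PRAGMA query_only = ON")  # SQLite now rejects INSERT/UPDATE/DELETE/CREATE/DROP
    return conn.execute(sql).fetchall()


conn = build_demo_db()
print(schema_ddl(conn))

# The generated query without its PostgreSQL-only date filter.
query = """SELECT p.name, SUM(s.quantity * p.price) AS total_revenue
FROM products p
JOIN sales s ON p.product_id = s.product_id
JOIN salespeople sp ON s.salesperson_id = sp.salesperson_id
WHERE sp.region = 'New York'
GROUP BY p.name
ORDER BY p.name"""
print(run_read_only(conn, query))
```

Enforcing read-only access at the database level is more robust than inspecting the generated SQL text, since a statement that slips past a text check is still rejected by SQLite.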

Interactive UI

- Baidu AI Cloud, Qianfan large-model platform

SQLCoder-7B is a language model developed by Defog and fine-tuned from Mistral-7B: https://cloud.baidu.com/doc/WENXINWORKSHOP/s/Hlo472sa2