GGML is a tensor library for machine learning that enables large models and high performance on commodity hardware. It is used by llama.cpp and whisper.cpp.

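- Written in C

- 16-bit float support

- Integer quantization support (e.g. 4-bit, 5-bit, 8-bit)

- Automatic differentiation

- Built-in optimization algorithms (e.g. ADAM, L-BFGS)

- Optimized for Apple Silicon

- On x86 architectures, utilizes AVX / AVX2 intrinsics

- Web support via WebAssembly and WASM SIMD

- No third-party dependencies

- Zero memory allocations during runtime

- Guided language output support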
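To make the "automatic differentiation" item more concrete, here is a minimal sketch of reverse-mode differentiation on scalars. It is written in Python for readability and is not ggml's C implementation; the `Value` class and all of its methods are hypothetical names introduced only for this illustration.

```python
class Value:
    """A scalar that remembers how it was computed (illustrative, not ggml's API)."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # inputs this value was computed from
        self._grad_fns = grad_fns  # one function per parent: upstream grad -> parent grad

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Build a post-order listing of the graph, then walk it in reverse so
        # every node's gradient is complete before it is pushed to its parents.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, fn in zip(v._parents, v._grad_fns):
                parent.grad += fn(v.grad)


# f(x) = a*x*x + b evaluated at x = 3 with a = 2, b = 1
x, a, b = Value(3.0), Value(2.0), Value(1.0)
f = a * x * x + b
f.backward()
print(f.data, x.grad, a.grad, b.grad)
```

The gradients follow the usual calculus rules: df/dx = 2ax, df/da = x², df/db = 1. A tensor library applies the same idea to whole tensors rather than single scalars.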

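As natural language processing (NLP) continues to advance, large language models (LLMs) such as Llama 2 have shown strong potential in many domains. These models are computationally demanding, however, especially at inference time. Quantization and running models in resource-constrained environments have therefore become active research topics. GGML and LangChain are two open-source frameworks that can help you run quantized Llama 2 models efficiently on a CPU.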
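As a rough illustration of what integer quantization does, the sketch below maps a small block of floating-point weights to signed 4-bit integers plus one shared scale, then reconstructs them. This is a simplified symmetric scheme chosen for clarity; it is an assumption for illustration and not ggml's actual storage format. The function names are hypothetical.

```python
def quantize_block_4bit(values):
    """Quantize a block of floats to integers in [-8, 7] with one shared scale."""
    amax = max(abs(v) for v in values)
    # Map the largest magnitude onto 7 so every value fits the 4-bit range.
    scale = amax / 7.0 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, q


def dequantize_block_4bit(scale, q):
    """Reconstruct approximate floats from the integers and the shared scale."""
    return [scale * x for x in q]


block = [0.12, -0.53, 0.98, -1.40, 0.00, 0.33, -0.07, 0.71]
scale, q = quantize_block_4bit(block)
restored = dequantize_block_4bit(scale, q)
max_err = max(abs(orig - rec) for orig, rec in zip(block, restored))

print("scale:", scale)
print("quantized:", q)
print("max abs error:", max_err)
```

Each weight now needs only 4 bits plus a share of the per-block scale, at the cost of a rounding error of at most half the scale per value. That trade of precision for memory is what makes large models fit on ordinary CPUs.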

Website: http://ggml.ai/

Source code: https://github.com/ggerganov/ggml

Examples

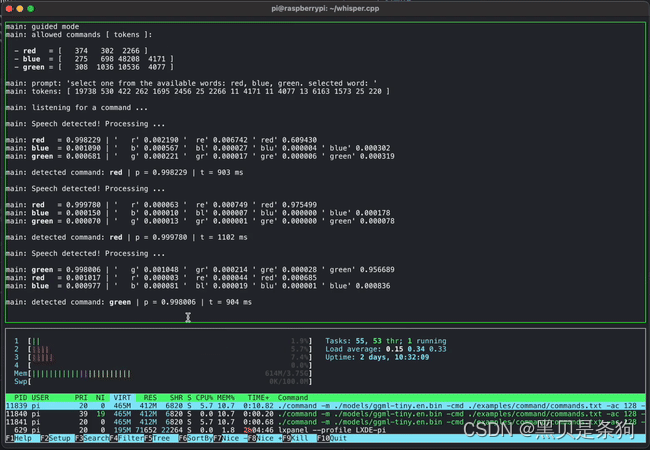

Short voice command detection on a Raspberry Pi 4 using whisper.cpp

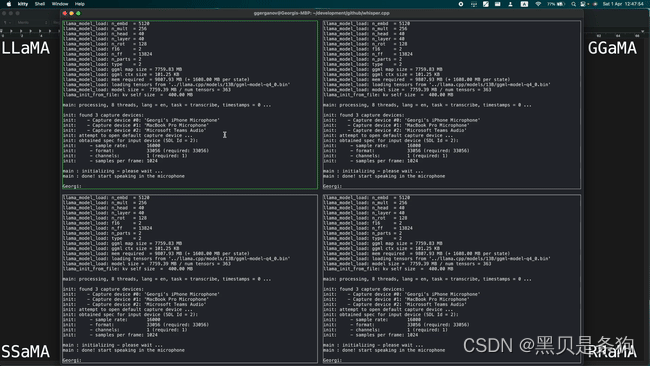

Simultaneously running 4 instances of 13B LLaMA + Whisper Small on a single M1 Pro

Running 7B LLaMA at 40 tok/s on M2 Max

Here are some sample performance stats on Apple Silicon (June 2023):

- Whisper Small Encoder, M1 Pro, 7 CPU threads: 600 ms / run

- Whisper Small Encoder, M1 Pro, ANE via Core ML: 200 ms / run

- 7B LLaMA, 4-bit quantization, 3.5 GB, M1 Pro, 8 CPU threads: 43 ms / token

- 13B LLaMA, 4-bit quantization, 6.8 GB, M1 Pro, 8 CPU threads: 73 ms / token

- 7B LLaMA, 4-bit quantization, 3.5 GB, M2 Max GPU: 25 ms / token

- 13B LLaMA, 4-bit quantization, 6.8 GB, M2 Max GPU: 42 ms / token
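The per-token latencies above can be converted to throughput, and the model sizes can be sanity-checked with simple arithmetic. The sketch below assumes exactly 4 bits per weight and nominal parameter counts of 7 and 13 billion, so it ignores any per-block metadata a real quantized file carries. The helper names are hypothetical.

```python
def tokens_per_second(ms_per_token):
    """Convert a latency in milliseconds per token to tokens per second."""
    return 1000.0 / ms_per_token


def approx_size_gb(n_params, bits_per_weight):
    """Estimate weight storage in GB (10^9 bytes), ignoring format overhead."""
    return n_params * bits_per_weight / 8 / 1e9


# Latencies copied from the list above (ms per token).
latencies_ms = {
    "7B, M1 Pro, 8 CPU threads": 43,
    "13B, M1 Pro, 8 CPU threads": 73,
    "7B, M2 Max GPU": 25,
    "13B, M2 Max GPU": 42,
}

for name, ms in latencies_ms.items():
    print(f"{name}: {tokens_per_second(ms):.1f} tok/s")

print("7B at 4 bits:", approx_size_gb(7e9, 4), "GB")
print("13B at 4 bits:", approx_size_gb(13e9, 4), "GB")
```

The 25 ms / token figure for 7B on the M2 Max GPU corresponds to the 40 tok/s quoted earlier. The 7B estimate matches the listed 3.5 GB, while the 13B estimate comes out at 6.5 GB, slightly below the listed 6.8 GB, which is expected from an estimate this rough.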

The ggml way

- Minimal

We like simplicity and aim to keep the codebase as small and as simple as possible

- Open Core

The library and related projects are freely available under the MIT license. The development process is open and everyone is welcome to join. In the future we may choose to develop extensions that are licensed for commercial use

- Explore and have fun!

We built ggml in the spirit of play. Contributors are encouraged to try crazy ideas, build wild demos, and push the edge of what’s possible

Projects

- whisper.cpp

High-performance inference of OpenAI's Whisper automatic speech recognition model

The project provides a high-quality speech-to-text solution that runs on Mac, Windows, Linux, iOS, Android, Raspberry Pi, and Web

- llama.cpp

Inference of Meta's LLaMA large language model

The project demonstrates efficient inference on Apple Silicon hardware and explores a variety of optimization techniques and applications of LLMs

Contributing

- The best way to support the project is by contributing to the codebase

- If you wish to financially support the project, please consider becoming a sponsor to any of the contributors that are already involved:

- llama.cpp contributors

- whisper.cpp contributors

- ggml contributors

Company

ggml.ai is a company founded by Georgi Gerganov to support the development of ggml. Nat Friedman and Daniel Gross provided the pre-seed funding.

We are currently seeking to hire full-time developers that share our vision and would like to help advance the idea of on-device inference. If you are interested and if you have already been a contributor to any of the related projects, please contact us at jobs@ggml.ai
