Header files:

xvideoview.h

#ifndef XVIDEO_VIEW_H

#define XVIDEO_VIEW_H

#include <mutex>

#include <fstream>

#include <string>

struct AVFrame;

// Sleep for ms milliseconds

void MSleep(unsigned int ms);

// Get the current timestamp in milliseconds

long long NowMs();

/// Video rendering interface class

/// Hides the SDL implementation

/// The rendering backend can be replaced

// Thread-safe

class XVideoView

{

public:

enum Format // the values match FFmpeg's AVPixelFormat

{

YUV420P = 0,

NV12 = 23,

ARGB = 25,

RGBA = 26,

BGRA = 28

};

enum RenderType

{

SDL = 0

};

static XVideoView* Create(RenderType type = SDL);

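/// Initialize the render window; thread-safe, may be called multiple times

/// @param w window width

/// @param h window height

/// @param fmt pixel format to draw

/// The window handle is set with set_win_id(); if it is null, a new window is created

/// @return whether initialization succeeded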

virtual bool Init(int w, int h,

Format fmt = RGBA) = 0;

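// Release all allocated resources, including closing the window

virtual void Close() = 0;

// Handle the window-close event; returns true when the window was closed

virtual bool IsExit() = 0;

/// Render an image; thread-safe

/// @param data binary pixel data to render

/// @param linesize number of bytes per row; for YUV420P, the bytes per row of the Y plane

/// if linesize <= 0, it is computed from the width and pixel format

/// @return whether rendering succeeded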

virtual bool Draw(const unsigned char* data, int linesize = 0) = 0;

virtual bool Draw(

const unsigned char* y, int y_pitch,

const unsigned char* u, int u_pitch,

const unsigned char* v, int v_pitch

) = 0;

// Set the display (destination) size

void Scale(int w, int h)

{

scale_w_ = w;

scale_h_ = h;

}

bool DrawFrame(AVFrame* frame);

int render_fps() { return render_fps_; }

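// Open a raw pixel file

bool Open(std::string filepath);

/// Read one frame of data; the AVFrame storage is managed internally

/// Each call overwrites the data from the previous call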

AVFrame* Read();

void set_win_id(void* win) { win_id_ = win; }

virtual ~XVideoView();

protected:

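void* win_id_ = nullptr; // window handle

int render_fps_ = 0; // display frame rate

int width_ = 0; // texture width and height

int height_ = 0;

Format fmt_ = RGBA; // pixel format

std::mutex mtx_; // ensures thread safety

int scale_w_ = 0; // display size

int scale_h_ = 0;

long long beg_ms_ = 0; // start time of the current fps interval

int count_ = 0; // number of frames displayed in the current interval

unsigned char* cache_ = nullptr; // buffer used to copy NV12 frames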

private:

std::ifstream ifs_;

AVFrame* frame_ = nullptr;

};

#endif
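The `Draw` documentation says that a non-positive `linesize` is derived from the width and the pixel format. The following is a minimal sketch of that rule, assuming tightly packed rows (no alignment padding) and even frame dimensions; `DefaultLinesize` and `FrameBytes` are hypothetical helpers, and the enum simply mirrors `XVideoView::Format`.

```cpp
#include <cstddef>

// Mirrors XVideoView::Format; the values follow FFmpeg's AVPixelFormat numbering.
enum Format { YUV420P = 0, NV12 = 23, ARGB = 25, RGBA = 26, BGRA = 28 };

// Hypothetical helper: bytes per row of the first plane of a tightly packed image.
// For planar (YUV420P) and semi-planar (NV12) YUV this is the pitch of the Y plane.
int DefaultLinesize(Format fmt, int width)
{
    switch (fmt)
    {
    case ARGB:
    case RGBA:
    case BGRA:
        return width * 4;   // 4 bytes per pixel
    case YUV420P:
    case NV12:
        return width;       // Y plane: 1 byte per pixel
    }
    return 0;               // unknown format
}

// Hypothetical helper: total bytes of one tightly packed frame.
std::size_t FrameBytes(Format fmt, int width, int height)
{
    const std::size_t pixels = static_cast<std::size_t>(width) * height;
    switch (fmt)
    {
    case ARGB:
    case RGBA:
    case BGRA:
        return pixels * 4;
    case YUV420P:           // Y + U (1/4) + V (1/4)
    case NV12:              // Y + interleaved UV (1/2)
        return pixels * 3 / 2;
    }
    return 0;
}
```

For example, a 400x300 YUV420P frame needs `FrameBytes(YUV420P, 400, 300)` bytes, and its Y plane pitch is 400.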

xsdl.h

#pragma once

#include "xvideoview.h"

struct SDL_Window;

struct SDL_Renderer;

struct SDL_Texture;

class XSDL :public XVideoView

{

public:

void Close() override;

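/// Initialize the render window; thread-safe

/// @param w window width

/// @param h window height

/// @param fmt pixel format to draw

/// The window handle is set with set_win_id(); if it is null, a new window is created

/// @return whether initialization succeeded

bool Init(int w, int h,

Format fmt = RGBA) override;

/// Render an image; thread-safe

/// @param data binary pixel data to render

/// @param linesize number of bytes per row; for YUV420P, the bytes per row of the Y plane

/// if linesize <= 0, it is computed from the width and pixel format

/// @return whether rendering succeeded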

bool Draw(const unsigned char* data,

int linesize = 0) override;

bool Draw(

const unsigned char* y, int y_pitch,

const unsigned char* u, int u_pitch,

const unsigned char* v, int v_pitch

) override;

bool IsExit() override;

private:

SDL_Window* win_ = nullptr;

SDL_Renderer* render_ = nullptr;

SDL_Texture* texture_ = nullptr;

};
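The interface comments say the SDL implementation is hidden and the rendering backend is replaceable: callers only see the abstract class, and `Create(RenderType)` is the single place that names concrete classes. Below is a minimal sketch of that factory pattern with a hypothetical `NullView` backend that draws nothing and only counts frames (useful, for instance, to run a decode loop without a display). `View`, `NullView` and `RenderType::Null` are illustrative names, not part of the code above; returning `std::unique_ptr` is one design option, whereas the original returns a raw pointer the caller must `delete`.

```cpp
#include <memory>

// Hypothetical, trimmed-down stand-in for the XVideoView interface.
class View
{
public:
    enum RenderType { Null = 0 };   // a real build would also list SDL, etc.

    virtual ~View() = default;
    virtual bool Init(int w, int h) = 0;
    virtual bool Draw(const unsigned char* data, int linesize) = 0;

    // Factory: the only function that names the concrete backend classes.
    static std::unique_ptr<View> Create(RenderType type);
};

// Hypothetical backend that draws nothing and only counts accepted frames.
class NullView : public View
{
public:
    bool Init(int w, int h) override
    {
        width_ = w;
        height_ = h;
        return w > 0 && h > 0;
    }

    bool Draw(const unsigned char* data, int linesize) override
    {
        if (!data || width_ <= 0 || height_ <= 0 || linesize <= 0) return false;
        ++frames_;
        return true;
    }

    int frames() const { return frames_; }

private:
    int width_ = 0;
    int height_ = 0;
    int frames_ = 0;
};

std::unique_ptr<View> View::Create(RenderType type)
{
    switch (type)
    {
    case Null:
        return std::make_unique<NullView>();
    }
    return nullptr;
}
```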

Source files:

xvideoview.cpp

#include "xsdl.h"

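#include <thread>

#include <chrono>

#include <cstring>

#include <iostream>

using namespace std;

extern "C"

{

#include <libavcodec/avcodec.h>

}

#pragma comment(lib,"avutil.lib")

// Sleep in 1 ms steps until ms milliseconds of wall-clock time have passed.

// steady_clock is used because clock() measures CPU time on POSIX systems.

void MSleep(unsigned int ms)

{

auto beg = chrono::steady_clock::now();

for (unsigned int i = 0; i < ms; i++)

{

this_thread::sleep_for(1ms);

if (chrono::steady_clock::now() - beg >= chrono::milliseconds(ms))

break;

}

}

long long NowMs()

{

return chrono::duration_cast<chrono::milliseconds>(

chrono::steady_clock::now().time_since_epoch()).count();

}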
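`MSleep` and `NowMs` are the building blocks for pacing playback at a fixed frame rate. Sleeping a fixed amount after each frame lets small oversleeps accumulate into drift; one common alternative is to sleep until absolute deadlines. The following is a minimal sketch under that assumption, using `std::chrono::steady_clock` and `std::this_thread::sleep_until`; `FramePacer` is a hypothetical name, not part of the code in this post.

```cpp
#include <chrono>
#include <thread>

// Hypothetical helper: paces a loop at a fixed frame rate using absolute deadlines,
// so a slightly late wake-up in one frame does not push back every later frame.
class FramePacer
{
public:
    explicit FramePacer(int fps)
        : interval_(std::chrono::duration_cast<std::chrono::steady_clock::duration>(
              std::chrono::microseconds(1000000 / fps))),
          next_(std::chrono::steady_clock::now() + interval_) {}

    // Block until the next frame is due, then schedule the following one.
    void Wait()
    {
        std::this_thread::sleep_until(next_);
        next_ += interval_;
        // If we fell far behind (e.g. a slow decode), resynchronize instead of bursting.
        auto now = std::chrono::steady_clock::now();
        if (now > next_ + interval_)
            next_ = now + interval_;
    }

private:
    std::chrono::steady_clock::duration interval_;   // declared before next_ on purpose
    std::chrono::steady_clock::time_point next_;
};
```

In a playback loop, `pacer.Wait()` would be called once per `Read()`/`DrawFrame()` pair.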

AVFrame* XVideoView::Read()

{

if (width_ <= 0 || height_ <= 0 || !ifs_)return NULL;

// The AVFrame is already allocated; if the parameters changed, free it

if (frame_)

{

if (frame_->width != width_

|| frame_->height != height_

|| frame_->format != fmt_)

{

// Free the AVFrame object and decrement the reference counts of its buffers

av_frame_free(&frame_);

}

}

if (!frame_)

{

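// Allocate the frame object and its pixel buffers

frame_ = av_frame_alloc();

if (!frame_)return NULL;

frame_->width = width_;

frame_->height = height_;

frame_->format = fmt_;

frame_->linesize[0] = width_ * 4; // RGBA ARGB BGRA: 4 bytes per pixel

if (frame_->format == AV_PIX_FMT_YUV420P)

{

frame_->linesize[0] = width_; // Y

frame_->linesize[1] = width_ / 2;//U

frame_->linesize[2] = width_ / 2;//V

}

else if (frame_->format == AV_PIX_FMT_NV12)

{

frame_->linesize[0] = width_; // Y

frame_->linesize[1] = width_; // interleaved UV

}

// Allocate the AVFrame buffers with the default alignment

auto re = av_frame_get_buffer(frame_, 0);

if (re != 0)

{

char buf[1024] = { 0 };

av_strerror(re, buf, sizeof(buf) - 1);

cout << buf << endl;

av_frame_free(&frame_);

return NULL;

}

}

if (!frame_)return NULL;

// Read one frame of data

if (frame_->format == AV_PIX_FMT_YUV420P)

{

ifs_.read((char*)frame_->data[0],

frame_->linesize[0] * height_); //Y

ifs_.read((char*)frame_->data[1],

frame_->linesize[1] * height_ / 2); //U

ifs_.read((char*)frame_->data[2],

frame_->linesize[2] * height_ / 2); //V

}

else if (frame_->format == AV_PIX_FMT_NV12)

{

ifs_.read((char*)frame_->data[0], frame_->linesize[0] * height_); //Y

ifs_.read((char*)frame_->data[1], frame_->linesize[1] * height_ / 2); //UV

}

else // RGBA ARGB BGRA, 32 bits per pixel

{

ifs_.read((char*)frame_->data[0], frame_->linesize[0] * height_);

}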

if (ifs_.gcount() == 0)

return NULL;

return frame_;

}
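`Read()` assumes a headerless raw file in which frames are stored back to back and, for YUV420P, each frame is the Y plane followed by the U and V planes. The sketch below computes where frame *i* and its planes start, which is what a seek feature would need. It assumes even dimensions and tightly packed planes; `Yuv420pLayout`, `LayoutOfFrame` and `FrameCount` are hypothetical names.

```cpp
#include <cstdint>

// Hypothetical description of one YUV420P frame inside a headerless raw file.
struct Yuv420pLayout
{
    std::int64_t frame_offset; // first byte of the frame (its Y plane)
    std::int64_t u_offset;     // first byte of the U plane
    std::int64_t v_offset;     // first byte of the V plane
    std::int64_t frame_bytes;  // size of one frame
};

// Assumes even width and height and tightly packed planes,
// i.e. linesize[0] == width and linesize[1] == linesize[2] == width / 2.
Yuv420pLayout LayoutOfFrame(int width, int height, std::int64_t index)
{
    const std::int64_t y_bytes = static_cast<std::int64_t>(width) * height;
    const std::int64_t c_bytes = y_bytes / 4;   // each chroma plane is (w/2) x (h/2)
    Yuv420pLayout l{};
    l.frame_bytes = y_bytes + 2 * c_bytes;      // w * h * 3 / 2
    l.frame_offset = index * l.frame_bytes;
    l.u_offset = l.frame_offset + y_bytes;
    l.v_offset = l.u_offset + c_bytes;
    return l;
}

// Number of complete frames in a raw file of file_bytes bytes.
std::int64_t FrameCount(std::int64_t file_bytes, int width, int height)
{
    return file_bytes / LayoutOfFrame(width, height, 0).frame_bytes;
}
```

A seek helper (not present in the class above) could pass `frame_offset` to `std::ifstream::seekg` before the next `Read()`.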

// Open a file

bool XVideoView::Open(std::string filepath)

{

if (ifs_.is_open())

{

ifs_.close();

}

ifs_.open(filepath, ios::binary);

return ifs_.is_open();

}

XVideoView* XVideoView::Create(RenderType type)

{

switch (type)

{

case XVideoView::SDL:

return new XSDL();

break;

default:

break;

}

return nullptr;

}

XVideoView::~XVideoView()

{

if (cache_)

delete[] cache_; // cache_ was allocated with new[]

cache_ = nullptr;

}

bool XVideoView::DrawFrame(AVFrame* frame)

{

if (!frame || !frame->data[0])return false;

count_++;

if (beg_ms_ <= 0)

{

beg_ms_ = NowMs();

}

// Compute the display frame rate once per second

else if (NowMs() - beg_ms_ >= 1000)

{

render_fps_ = count_;

count_ = 0;

beg_ms_ = NowMs();

}

int linesize = 0;

switch (frame->format)

{

case AV_PIX_FMT_YUV420P:

return Draw(frame->data[0], frame->linesize[0],//Y

frame->data[1], frame->linesize[1], //U

frame->data[2], frame->linesize[2] //V

);

case AV_PIX_FMT_NV12:

{

// cache_ holds one tightly packed NV12 frame of at most 4096x2160 pixels (Y plane + half-size UV plane)

const int cache_size = 4096 * 2160 * 3 / 2;

if (frame->width * frame->height * 3 / 2 > cache_size)

return false;

if (!cache_)

{

cache_ = new unsigned char[cache_size];

}

linesize = frame->width;

if (frame->linesize[0] == frame->width && frame->linesize[1] == frame->width)

{

memcpy(cache_, frame->data[0], frame->linesize[0] * frame->height); //Y

memcpy(cache_ + frame->linesize[0] * frame->height, frame->data[1], frame->linesize[1] * frame->height / 2); //UV

}

else // copy row by row to strip the row padding

{

for (int i = 0; i < frame->height; i++) //Y

{

memcpy(cache_ + i * frame->width,

frame->data[0] + i * frame->linesize[0],

frame->width

);

}

for (int i = 0; i < frame->height / 2; i++) //UV

{

auto p = cache_ + frame->height * frame->width; // skip past the Y plane

memcpy(p + i * frame->width,

frame->data[1] + i * frame->linesize[1],

frame->width

);

}

}

// cache_ now holds data[0] (Y) followed by data[1] (UV)

return Draw(cache_, linesize);

}

case AV_PIX_FMT_BGRA:

case AV_PIX_FMT_ARGB:

case AV_PIX_FMT_RGBA:

return Draw(frame->data[0], frame->linesize[0]);

default:

break;

}

return false;

}
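`DrawFrame` turns a two-plane NV12 `AVFrame` (`data[0]` = Y, `data[1]` = interleaved UV, each with its own `linesize` that can exceed the width because of alignment padding) into one contiguous buffer, so that `Draw(data, linesize)` can upload it with a single pitch. A minimal standalone sketch of that row-by-row packing, assuming an even height and using a `std::vector` instead of the fixed 4096x2160 cache; `PackNv12` is a hypothetical name.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper: copy an NV12 image whose rows may be padded (stride >= width)
// into one tightly packed buffer laid out as the Y plane (width * height bytes)
// followed by the interleaved UV plane (width * height / 2 bytes).
std::vector<unsigned char> PackNv12(const unsigned char* y, int y_stride,
                                    const unsigned char* uv, int uv_stride,
                                    int width, int height)
{
    std::vector<unsigned char> out(static_cast<std::size_t>(width) * height * 3 / 2);
    unsigned char* dst = out.data();
    for (int row = 0; row < height; ++row)       // Y rows, padding dropped
    {
        std::memcpy(dst, y + static_cast<std::size_t>(row) * y_stride, width);
        dst += width;
    }
    for (int row = 0; row < height / 2; ++row)   // UV rows: half as many, same width
    {
        std::memcpy(dst, uv + static_cast<std::size_t>(row) * uv_stride, width);
        dst += width;
    }
    return out;
}
```

With an `AVFrame`, the call would be `PackNv12(f->data[0], f->linesize[0], f->data[1], f->linesize[1], f->width, f->height)`, and the result can be drawn with `linesize = width`.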

xsdl.cpp

#include "xsdl.h"

#include "sdl/SDL.h"

#include <iostream>

using namespace std;

#pragma comment(lib,"SDL2.lib")

static bool InitVideo()

{

static bool is_init = false;

static mutex mux;

unique_lock<mutex> sdl_lock(mux);

if (is_init)return true;

if (SDL_Init(SDL_INIT_VIDEO))

{

cout << SDL_GetError() << endl;

return false;

}

is_init = true; // only mark as initialized after SDL_Init succeeded, so a failure is retried

// Use linear interpolation as the scaling algorithm to reduce jagged edges

SDL_SetHint(SDL_HINT_RENDER_SCALE_QUALITY, "1");

return true;

}
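The guard in `InitVideo()` (a function-local static mutex plus a flag) can be written as a small reusable class. The key detail is to mark initialization as done only after it succeeded, so a failed attempt is retried on the next call. A minimal sketch; `OnceInitializer` is a hypothetical name and the init function is injected so the pattern can be tested without SDL.

```cpp
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical reusable form of the InitVideo() guard: runs init() until it succeeds
// once, and is safe to call from several threads at the same time.
class OnceInitializer
{
public:
    explicit OnceInitializer(std::function<bool()> init) : init_(std::move(init)) {}

    bool Ensure()
    {
        std::lock_guard<std::mutex> lock(mtx_);
        if (done_) return true;
        done_ = init_();   // only set after a successful init, so failures are retried
        return done_;
    }

private:
    std::function<bool()> init_;
    std::mutex mtx_;
    bool done_ = false;
};
```

For SDL, the injected function would wrap `SDL_Init(SDL_INIT_VIDEO) == 0` plus the `SDL_SetHint` call.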

bool XSDL::IsExit()

{

SDL_Event ev;

// SDL_WaitEventTimeout returns 0 when no event arrived, in which case ev is not filled in

if (SDL_WaitEventTimeout(&ev, 1) && ev.type == SDL_QUIT)

return true;

return false;

}

void XSDL::Close()

{

// Ensure thread safety

unique_lock<mutex> sdl_lock(mtx_);

if (texture_)

{

SDL_DestroyTexture(texture_);

texture_ = nullptr;

}

if (render_)

{

SDL_DestroyRenderer(render_);

render_ = nullptr;

}

if (win_)

{

SDL_DestroyWindow(win_);

win_ = nullptr;

}

}

bool XSDL::Init(int w, int h, Format fmt)

{

if (w <= 0 || h <= 0)return false;

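// Initialize the SDL video subsystem

if (!InitVideo())return false;

// Ensure thread safety

unique_lock<mutex> sdl_lock(mtx_);

width_ = w;

height_ = h;

fmt_ = fmt;

// Destroy the texture and renderer of a previous Init call; the window is reused

if (texture_)

{

SDL_DestroyTexture(texture_);

texture_ = nullptr;

}

if (render_)

{

SDL_DestroyRenderer(render_);

render_ = nullptr;

}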

/// 1 Create the window

if (!win_)

{

if (!win_id_)

{

// Create a new window

win_ = SDL_CreateWindow("",

SDL_WINDOWPOS_UNDEFINED,

SDL_WINDOWPOS_UNDEFINED,

w, h, SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE

);

}

else

{

// Render into an existing window (e.g. a UI widget)

win_ = SDL_CreateWindowFrom(win_id_);

}

}

if (!win_)

{

cerr << SDL_GetError() << endl;

return false;

}

/// 2 Create the renderer

render_ = SDL_CreateRenderer(win_, -1, SDL_RENDERER_ACCELERATED);

if (!render_)

{

cerr << SDL_GetError() << endl;

return false;

}

/// 3 Create the texture (in video memory)

unsigned int sdl_fmt = SDL_PIXELFORMAT_RGBA8888;

switch (fmt)

{

case XVideoView::RGBA:

sdl_fmt = SDL_PIXELFORMAT_RGBA32;

break;

case XVideoView::BGRA:

sdl_fmt = SDL_PIXELFORMAT_BGRA32;

break;

case XVideoView::ARGB:

sdl_fmt = SDL_PIXELFORMAT_ARGB32;

break;

case XVideoView::YUV420P:

sdl_fmt = SDL_PIXELFORMAT_IYUV;

break;

case XVideoView::NV12:

sdl_fmt = SDL_PIXELFORMAT_NV12;

break;

default:

break;

}

texture_ = SDL_CreateTexture(render_,

sdl_fmt, // pixel format

SDL_TEXTUREACCESS_STREAMING, // frequently updated texture (lockable)

w, h // texture size

);

if (!texture_)

{

cerr << SDL_GetError() << endl;

return false;

}

return true;

}

bool XSDL::Draw(

const unsigned char* y, int y_pitch,

const unsigned char* u, int u_pitch,

const unsigned char* v, int v_pitch

)

{

// Check the parameters

if (!y || !u || !v)return false;

unique_lock<mutex> sdl_lock(mtx_);

if (!texture_ || !render_ || !win_ || width_ <= 0 || height_ <= 0)

return false;

// Copy from system memory to video memory

auto re = SDL_UpdateYUVTexture(texture_,

NULL,

y, y_pitch,

u, u_pitch,

v, v_pitch);

if (re != 0)

{

cout << SDL_GetError() << endl;

return false;

}

// Clear the screen

SDL_RenderClear(render_);

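// Copy the texture to the renderer

SDL_Rect rect;

SDL_Rect* prect = nullptr; // nullptr: stretch over the whole window

if (scale_w_ > 0 && scale_h_ > 0) // the user set a display size via Scale()

{

rect.x = 0; rect.y = 0;

rect.w = scale_w_; // rendered width and height, may be scaled

rect.h = scale_h_;

prect = &rect;

}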

re = SDL_RenderCopy(render_, texture_, NULL, prect);

if (re != 0)

{

cout << SDL_GetError() << endl;

return false;

}

SDL_RenderPresent(render_);

return true;

}

bool XSDL::Draw(const unsigned char* data, int linesize)

{

if (!data)return false;

unique_lock<mutex> sdl_lock(mtx_);

if (!texture_ || !render_ || !win_ || width_ <= 0 || height_ <= 0)

return false;

if (linesize <= 0)

{

switch (fmt_)

{

case XVideoView::RGBA:

case XVideoView::ARGB:

case XVideoView::BGRA:

linesize = width_ * 4;

break;

case XVideoView::YUV420P:

case XVideoView::NV12:

linesize = width_; // pitch of the Y plane

break;

default:

break;

}

}

if (linesize <= 0)

return false;

// Copy from system memory to video memory

auto re = SDL_UpdateTexture(texture_, NULL, data, linesize);

if (re != 0)

{

cout << SDL_GetError() << endl;

return false;

}

// Clear the screen

SDL_RenderClear(render_);

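// Copy the texture to the renderer

SDL_Rect rect;

SDL_Rect* prect = nullptr; // nullptr: stretch over the whole window

if (scale_w_ > 0 && scale_h_ > 0) // the user set a display size via Scale()

{

rect.x = 0; rect.y = 0;

rect.w = scale_w_; // rendered width and height, may be scaled

rect.h = scale_h_;

prect = &rect;

}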

re = SDL_RenderCopy(render_, texture_, NULL, prect);

if (re != 0)

{

cout << SDL_GetError() << endl;

return false;

}

SDL_RenderPresent(render_);

return true;

}
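In both `Draw` functions a custom destination rectangle is used only when `Scale()` has set a display size; otherwise `nullptr` is passed to `SDL_RenderCopy` and the texture fills the window. Note that `Scale()` is an inline header function that writes `scale_w_`/`scale_h_` without taking `mtx_`, while `Draw` reads them under the lock, which is a data race if `Scale()` is called from another thread. A minimal sketch of a lock-protected version, assuming a hypothetical `ScaleSettings` class and a `Rect` stand-in for `SDL_Rect`:

```cpp
#include <mutex>

struct Rect { int x, y, w, h; };   // stand-in for SDL_Rect

// Hypothetical: display-size settings shared by the thread calling Scale() and the
// render thread calling Draw(). Both sides lock the same mutex, so the render
// thread never reads a half-updated width/height pair.
class ScaleSettings
{
public:
    void Scale(int w, int h)
    {
        std::lock_guard<std::mutex> lock(mtx_);
        w_ = w;
        h_ = h;
    }

    // Returns true and fills dst when a custom display size is set (both sides > 0);
    // returns false when the texture should be stretched over the whole window,
    // which corresponds to passing nullptr as the destination to SDL_RenderCopy.
    bool Destination(Rect& dst) const
    {
        std::lock_guard<std::mutex> lock(mtx_);
        if (w_ <= 0 || h_ <= 0) return false;
        dst = Rect{0, 0, w_, h_};
        return true;
    }

private:
    mutable std::mutex mtx_;
    int w_ = 0;
    int h_ = 0;
};
```

Inside `XSDL::Draw`, the filled `Rect` would be copied into an `SDL_Rect` before calling `SDL_RenderCopy`.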

main.cpp

#include <iostream>

#include <fstream>

#include<string>

#include"xsdl.h"

using namespace std;

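extern "C" { // declare these as C functions: C linkage, no C++ name mangling

// FFmpeg headers

#include <libavcodec/avcodec.h>

#include <libavutil/opt.h>

#include <libavutil/hwcontext.h>

}

// Link the libraries through preprocessor directives (MSVC)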

#pragma comment(lib,"avcodec.lib")

#pragma comment(lib,"avutil.lib")

int main(int argc, char* argv[])

{

auto view = XVideoView::Create();

//1 Split the H.264 stream into AVPackets

// ffmpeg -i v1080.mp4 -s 400x300 test.h264

string filename = "test.h264";

ifstream ifs(filename, ios::binary);

if (!ifs)return -1;

unsigned char inbuf[4096] = { 0 }; // buffer for the H.264 elementary stream

AVCodecID codec_id = AV_CODEC_ID_H264;

//1 Find the decoder

auto codec = avcodec_find_decoder(codec_id);

if (!codec)return -1;

//2 Create the decoder context

AVCodecContext *c = avcodec_alloc_context3(codec);

// Hardware acceleration type: DXVA2

AVHWDeviceType hw_type = AV_HWDEVICE_TYPE_DXVA2;

/// Print every hardware acceleration method the decoder supports

for (int i = 0;; i++)

{

// const AVCodecHWConfig *avcodec_get_hw_config(const AVCodec *codec, int index);

// Returns a hardware acceleration configuration of the given codec.

// index: index of the hardware configuration, starting at 0; used to iterate over all available configurations.

const AVCodecHWConfig *config = avcodec_get_hw_config(codec, i);

if (!config)break;

if (config->device_type)

// const char *av_hwdevice_get_type_name(enum AVHWDeviceType type);

// Takes an AVHWDeviceType and returns a string naming that hardware device type,

// e.g. cuda, dxva2 or vaapi.

cout << av_hwdevice_get_type_name(config->device_type) << endl;

}

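// Create the hardware device context

AVBufferRef* hw_ctx = nullptr;

if (av_hwdevice_ctx_create(&hw_ctx, hw_type, NULL, NULL, 0) == 0)

{

// Enable GPU decoding: give the decoder context its own reference to the hardware device context,

// so that subsequent decoding operations can use hardware acceleration

c->hw_device_ctx = av_buffer_ref(hw_ctx);

}

else

{

cerr << "av_hwdevice_ctx_create failed, using software decoding" << endl;

}

c->thread_count = 16;

//3 Open the decoder context

if (avcodec_open2(c, NULL, NULL) != 0)return -1;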

// Parser context: splits the raw stream into packets

AVCodecParserContext* parser = av_parser_init(codec_id);

AVPacket* pkt = av_packet_alloc();

AVFrame* frame = av_frame_alloc();

AVFrame* hw_frame = av_frame_alloc(); // receives frames downloaded from the GPU

long long begin = NowMs();

int count = 0; // decoded-frame counter

bool is_init_win = false;

while (!ifs.eof())

{

ifs.read((char*)inbuf, sizeof(inbuf)); // read up to 4096 bytes of the H.264 stream into inbuf

int data_size = ifs.gcount(); // number of bytes actually read by the last read

if (data_size <= 0)break;

// If the end of the file (EOF) was reached, rewind so the file plays in a loop

if (ifs.eof())

{

ifs.clear();

ifs.seekg(0, ios::beg); // back to the start of the file

}

auto data = inbuf; // data walks through inbuf

while (data_size > 0) // one read may contain several frames

{

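// Split at 00 00 01 / 00 00 00 01 start codes and output an AVPacket

int ret = av_parser_parse2(parser, c,

&pkt->data, &pkt->size, // output: the split-off packet

data, data_size, // input: the H.264 bytes still to process

AV_NOPTS_VALUE, AV_NOPTS_VALUE, 0

); // returns the number of input bytes consumed (equal to data_size if all were consumed), negative on error

if (ret < 0)break;

data += ret; // advance past the consumed bytes and continue with the rest of inbuf

data_size -= ret; // bytes still to process

if (pkt->size) // a complete packet was produced, so decode it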

{

//cout << pkt->size << " "<<flush;

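// Send the packet to the decoder (decoding thread)

ret = avcodec_send_packet(c, pkt); // hand the AVPacket to the decoder

if (ret < 0)

break;

// Receive all frames decoded from this packet

while (ret >= 0) // until the decoder has no more output

{

// Each call first unreferences frame (av_frame_unref)

ret = avcodec_receive_frame(c, frame); // get a decoded frame into frame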

if (ret < 0)

break;

auto pframe = frame; // supports both hardware and software decoding

if (c->hw_device_ctx) // hardware decoding

{

// Transfer GPU => CPU (video memory => system memory)

// AV_PIX_FMT_NV12: planar YUV 4:2:0, 12bpp, 1 plane for Y and 1 plane for the interleaved UV components (first byte U, then V)

if (av_hwframe_transfer_data(hw_frame, frame, 0) == 0)

pframe = hw_frame; // on success, render the downloaded NV12 frame

}

// For hardware-decoded frames, frame->format is AV_PIX_FMT_DXVA2_VLD

cout << frame->format << " " << flush;

/// Initialize the window on the first frame

if (!is_init_win)

{

is_init_win = true;

view->Init(pframe->width, pframe->height, (XVideoView::Format)pframe->format);

}

view->DrawFrame(pframe);

count++;

auto cur = NowMs();

if (cur - begin >= 1000) // compute once per second

{

cout << "\nfps = " << count << endl;

count = 0;

begin = cur;

}

}

}

}

}

/// Flush the decoder to retrieve buffered frames so none are lost

int ret = avcodec_send_packet(c, NULL);

while (ret >= 0)

{

ret = avcodec_receive_frame(c, frame);

if (ret < 0)

break;

cout << frame->format << "-" << flush;

}

av_parser_close(parser);

avcodec_free_context(&c);

av_buffer_unref(&hw_ctx);

av_frame_free(&frame);

av_frame_free(&hw_frame);

av_packet_free(&pkt);

view->Close();

delete view;

getchar();

return 0;

}
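The comment on `av_parser_parse2` refers to splitting the stream at `00 00 01` / `00 00 00 01` start codes (H.264 Annex B). The sketch below shows only that start-code scan, returning individual NAL units. It is not a replacement for FFmpeg's parser, which additionally groups NAL units into complete frames and keeps state across calls, and it does not remove emulation-prevention bytes (`00 00 03`). `SplitAnnexB` is a hypothetical name.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using Nal = std::vector<std::uint8_t>;

// Hypothetical helper: split an H.264 Annex B byte stream into NAL units by scanning
// for the 3-byte start code 00 00 01. A 4-byte start code 00 00 00 01 is the same
// code preceded by a zero byte, which is trimmed because a NAL unit never ends in 0x00.
std::vector<Nal> SplitAnnexB(const std::vector<std::uint8_t>& s)
{
    std::vector<Nal> nals;
    bool in_nal = false;        // true once the first start code has been seen
    std::size_t start = 0;      // first payload byte of the current NAL unit
    std::size_t i = 0;
    while (i + 2 < s.size())
    {
        if (s[i] == 0 && s[i + 1] == 0 && s[i + 2] == 1)
        {
            if (in_nal)
            {
                std::size_t end = i;
                while (end > start && s[end - 1] == 0) --end;   // trailing zero bytes
                if (end > start)
                    nals.emplace_back(s.begin() + static_cast<std::ptrdiff_t>(start),
                                      s.begin() + static_cast<std::ptrdiff_t>(end));
            }
            i += 3;
            start = i;
            in_nal = true;
        }
        else
        {
            ++i;
        }
    }
    if (in_nal && start < s.size())
        nals.emplace_back(s.begin() + static_cast<std::ptrdiff_t>(start), s.end());
    return nals;
}
```

The NAL unit type is the low 5 bits of the first payload byte: 7 is an SPS, 8 a PPS and 5 an IDR slice.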

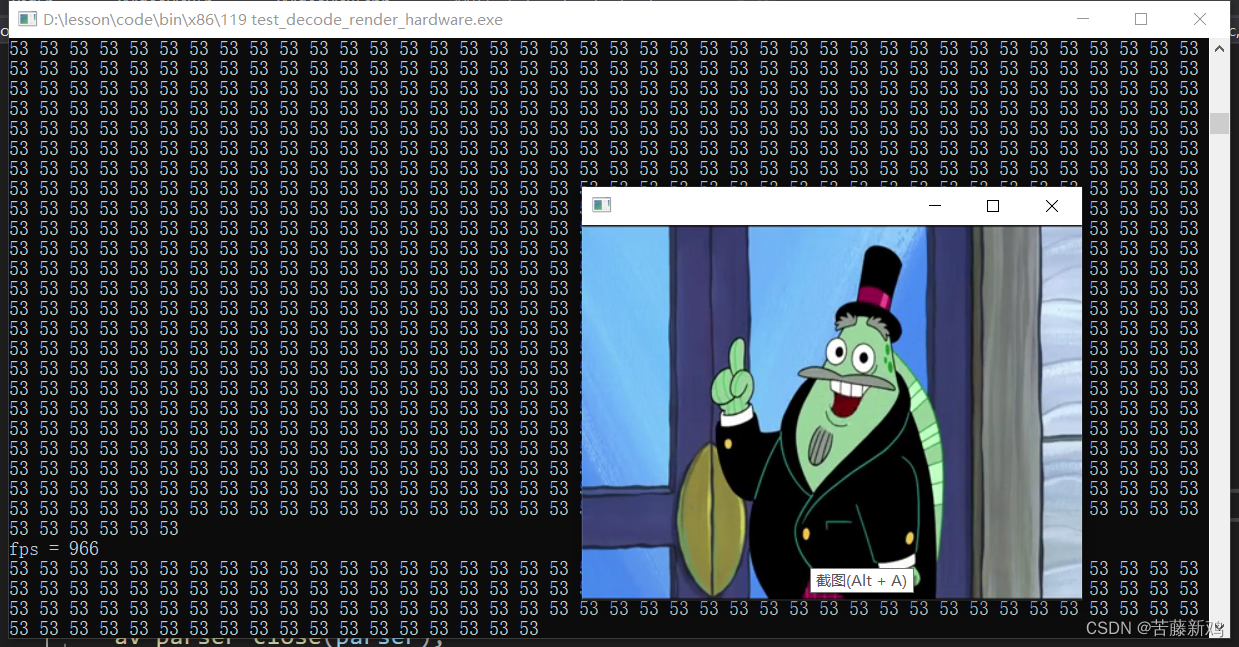

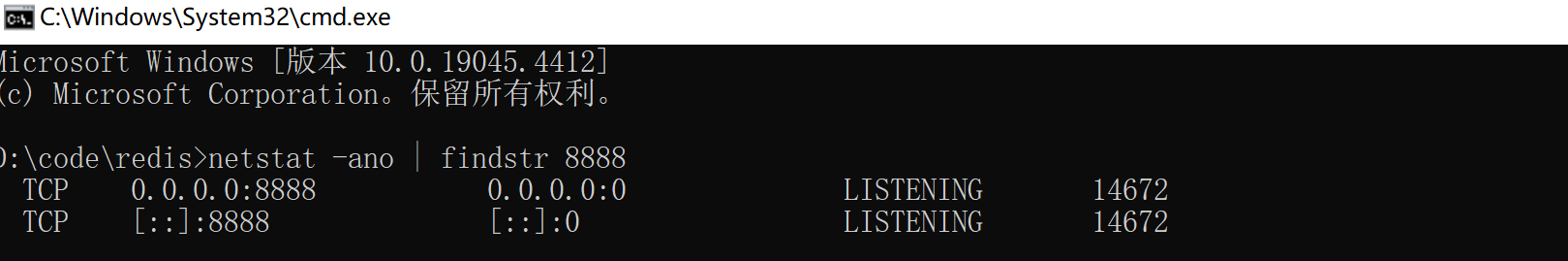

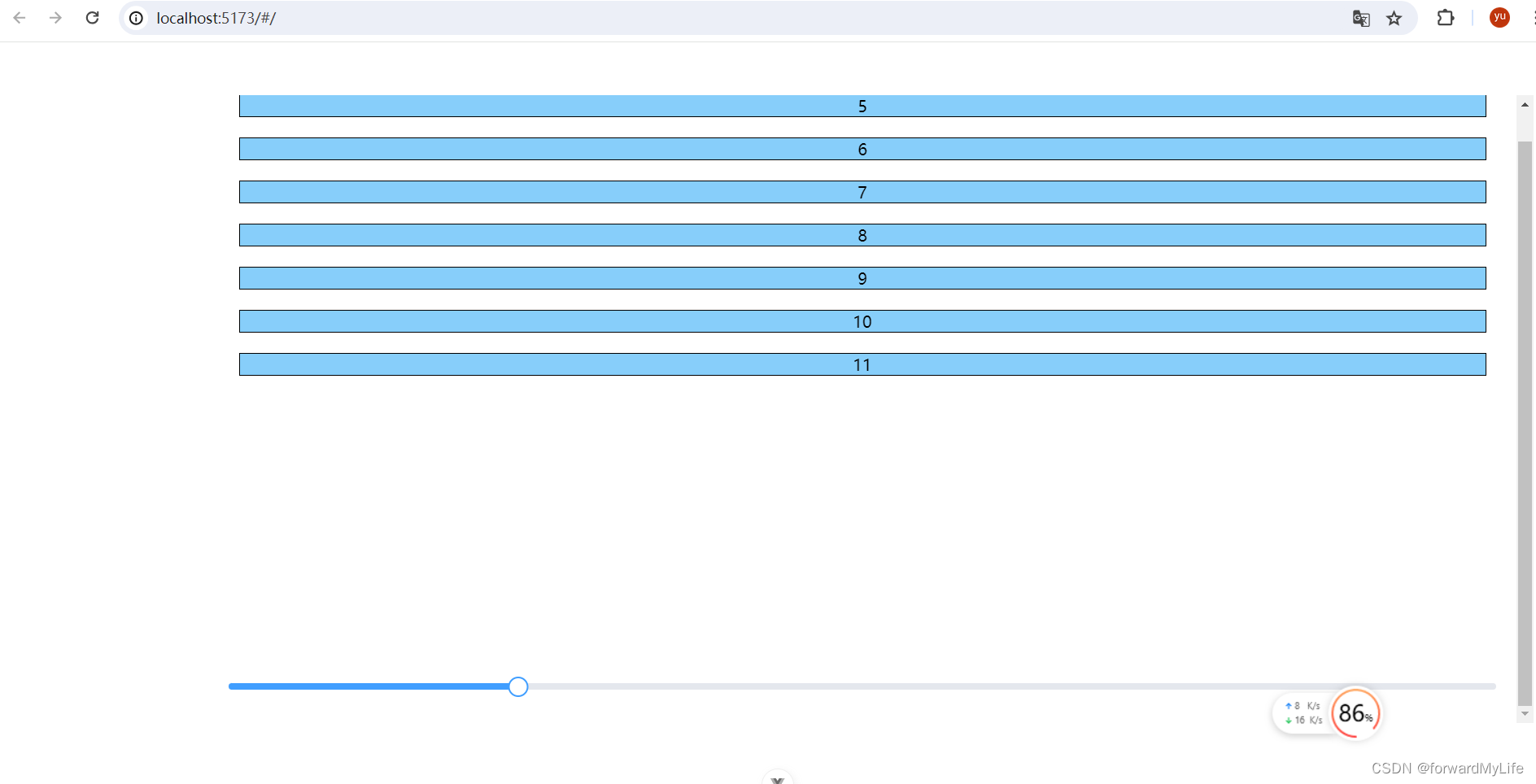

Output: