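Contents

- 1. Preface

- 2. The UBI rootfs load failure

- 3. Failure analysis and fix

- 4. References

1. Preface

Given the limits of the author's knowledge, this article may contain errors. The author accepts no liability for any loss they may cause the reader.

2. The UBI rootfs load failure

The kernel log from the failing boot is as follows: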

Starting kernel ...

[ 0.000000] Booting Linux on physical CPU 0x0

[ 0.000000] Linux version 4.19.94-g1194fe2-dirty (bill@bill-virtual-machine) (gcc version 5.3.1 20160113 (Linaro GCC 5.3-2016.02)) #21 PREEMPT Tue Jun 4 10:18:44 CST 2024

[ 0.000000] CPU: ARMv7 Processor [413fc082] revision 2 (ARMv7), cr=10c5387d

......

[ 0.000000] Kernel command line: console=ttyO0,115200n8 root=ubi0:rootfs rw ubi.mtd=NAND.rootfs,2048 rootfstype=ubifs rootwait=1

......

[ 1.713970] nand: device found, Manufacturer ID: 0x2c, Chip ID: 0xda

[ 1.720358] nand: Micron MT29F2G08AAD

[ 1.724091] nand: 256 MiB, SLC, erase size: 128 KiB, page size: 2048, OOB size: 64

[ 1.731736] nand: using OMAP_ECC_BCH8_CODE_HW ECC scheme

[ 1.737188] 11 fixed-partitions partitions found on MTD device omap2-nand.0

[ 1.744196] Creating 11 MTD partitions on "omap2-nand.0":

[ 1.749624] 0x000000000000-0x000000020000 : "NAND.SPL"

[ 1.755917] 0x000000020000-0x000000040000 : "NAND.SPL.backup1"

[ 1.762654] 0x000000040000-0x000000060000 : "NAND.SPL.backup2"

[ 1.769446] 0x000000060000-0x000000080000 : "NAND.SPL.backup3"

[ 1.776214] 0x000000080000-0x0000000c0000 : "NAND.u-boot-spl-os"

[ 1.783272] 0x0000000c0000-0x0000001c0000 : "NAND.u-boot"

[ 1.790358] 0x0000001c0000-0x0000001e0000 : "NAND.u-boot-env"

[ 1.797050] 0x0000001e0000-0x000000200000 : "NAND.u-boot-env.backup1"

[ 1.804438] 0x000000200000-0x000000a00000 : "NAND.kernel"

[ 1.818114] 0x000000a00000-0x00000e000000 : "NAND.rootfs"

[ 2.024110] 0x00000e000000-0x000010000000 : "NAND.userdata"

......

[ 2.162435] ubi0: attaching mtd9

[ 2.166572] ubi0 error: validate_ec_hdr: bad VID header offset 512, expected 2048

[ 2.174146] ubi0 error: validate_ec_hdr: bad EC header

[ 2.179304] Erase counter header dump:

[ 2.183118] magic 0x55424923

[ 2.186881] version 1

[ 2.189856] ec 0

[ 2.192829] vid_hdr_offset 512

[ 2.195994] data_offset 2048

[ 2.199232] image_seq 2007489760

[ 2.203004] hdr_crc 0xbe9cfce9

[ 2.206763] erase counter header hexdump:

[ 2.210810] CPU: 0 PID: 1 Comm: swapper Not tainted 4.19.94-g1194fe2-dirty #21

[ 2.218072] Hardware name: Generic AM33XX (Flattened Device Tree)

[ 2.224199] Backtrace:

[ 2.226668] [<c010bfe4>] (dump_backtrace) from [<c010c2b4>] (show_stack+0x18/0x1c)

[ 2.234283] r7:00000000 r6:00000000 r5:cf04c000 r4:cf675c00

[ 2.239970] [<c010c29c>] (show_stack) from [<c09531b4>] (dump_stack+0x24/0x28)

[ 2.247237] [<c0953190>] (dump_stack) from [<c064da18>] (validate_ec_hdr+0xa0/0xe4)

[ 2.254942] [<c064d978>] (validate_ec_hdr) from [<c064e600>] (ubi_io_read_ec_hdr+0x1b4/0x204)

[ 2.263546] r7:cf04c000 r6:55424923 r5:cf675c00 r4:00000000

[ 2.269233] [<c064e44c>] (ubi_io_read_ec_hdr) from [<c0653a40>] (ubi_attach+0x1b8/0x1464)

[ 2.277464] r10:cf76f000 r9:00000000 r8:00000000 r7:cf675c00 r6:cf04c000 r5:cf734a00

[ 2.285336] r4:cf736240

[ 2.287884] [<c0653888>] (ubi_attach) from [<c0647f78>] (ubi_attach_mtd_dev+0x42c/0xbc4)

[ 2.296023] r10:00020000 r9:cf721400 r8:c0e03048 r7:00000000 r6:cf721400 r5:cf04c000

[ 2.303893] r4:0000103f

[ 2.306445] [<c0647b4c>] (ubi_attach_mtd_dev) from [<c0d26378>] (ubi_init+0x184/0x22c)

[ 2.314410] r10:c0e370cc r9:c0c2a8e8 r8:c0c2a8bc r7:c0e85e64 r6:cf721400 r5:c0e85e68

[ 2.322270] r4:00000000

[ 2.324830] [<c0d261f4>] (ubi_init) from [<c01026ac>] (do_one_initcall+0x5c/0x1a4)

[ 2.332435] r10:00000008 r9:c0e03048 r8:00000000 r7:c0d261f4 r6:ffffe000 r5:c0e4f140

[ 2.340306] r4:c0e4f140

[ 2.342859] [<c0102650>] (do_one_initcall) from [<c0d00f34>] (kernel_init_freeable+0x13c/0x1d4)

[ 2.351610] r9:c0d00620 r8:000000f8 r7:c0d3e834 r6:c0d51d64 r5:c0e4f140 r4:c0e4f140

[ 2.359404] [<c0d00df8>] (kernel_init_freeable) from [<c09689d8>] (kernel_init+0x10/0x118)

[ 2.367716] r10:00000000 r9:00000000 r8:00000000 r7:00000000 r6:00000000 r5:c09689c8

[ 2.375587] r4:00000000

[ 2.378132] [<c09689c8>] (kernel_init) from [<c01010e8>] (ret_from_fork+0x14/0x2c)

[ 2.385743] Exception stack(0xcf051fb0 to 0xcf051ff8)

[ 2.390816] 1fa0: 00000000 00000000 00000000 00000000

[ 2.399040] 1fc0: 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000

[ 2.407263] 1fe0: 00000000 00000000 00000000 00000000 00000013 00000000

[ 2.413914] r5:c09689c8 r4:00000000

[ 2.417506] ubi0 error: ubi_io_read_ec_hdr: validation failed for PEB 0

[ 2.424265] ubi0 error: ubi_attach_mtd_dev: failed to attach mtd9, error -22

[ 2.431373] UBI error: cannot attach mtd9

[ 2.436450] input: volume_keys@0 as /devices/platform/volume_keys@0/input/input0

[ 2.444593] omap_rtc 44e3e000.rtc: setting system clock to 2000-01-01 00:00:00 UTC (946684800)

[ 2.453894] ALSA device list:

[ 2.456888] #0: crt_audio_bus

[ 2.460684] VFS: Cannot open root device "ubi0:rootfs" or unknown-block(0,0): error -19

[ 2.468832] Please append a correct "root=" boot option; here are the available partitions:

[ 2.477272] 0100 65536 ram0

[ 2.477276] (driver?)

[ 2.483432] 0101 65536 ram1

[ 2.483435] (driver?)

[ 2.489561] 0102 65536 ram2

[ 2.489563] (driver?)

[ 2.495704] 0103 65536 ram3

[ 2.495706] (driver?)

[ 2.501830] 0104 65536 ram4

[ 2.501832] (driver?)

[ 2.507970] 0105 65536 ram5

[ 2.507972] (driver?)

[ 2.514109] 0106 65536 ram6

[ 2.514111] (driver?)

[ 2.520236] 0107 65536 ram7

[ 2.520238] (driver?)

[ 2.526375] 0108 65536 ram8

[ 2.526378] (driver?)

[ 2.532502] 0109 65536 ram9

[ 2.532504] (driver?)

[ 2.538642] 010a 65536 ram10

[ 2.538645] (driver?)

[ 2.544868] 010b 65536 ram11

[ 2.544870] (driver?)

[ 2.551083] 010c 65536 ram12

[ 2.551085] (driver?)

[ 2.557309] 010d 65536 ram13

[ 2.557311] (driver?)

[ 2.563533] 010e 65536 ram14

[ 2.563536] (driver?)

[ 2.569748] 010f 65536 ram15

[ 2.569751] (driver?)

[ 2.575982] 1f00 128 mtdblock0

[ 2.575984] (driver?)

[ 2.582546] 1f01 128 mtdblock1

[ 2.582548] (driver?)

[ 2.589122] 1f02 128 mtdblock2

[ 2.589125] (driver?)

[ 2.595704] 1f03 128 mtdblock3

[ 2.595706] (driver?)

[ 2.602266] 1f04 256 mtdblock4

[ 2.602268] (driver?)

[ 2.608841] 1f05 1024 mtdblock5

[ 2.608844] (driver?)

[ 2.615416] 1f06 128 mtdblock6

[ 2.615418] (driver?)

[ 2.621980] 1f07 128 mtdblock7

[ 2.621983] (driver?)

[ 2.628556] 1f08 8192 mtdblock8

[ 2.628559] (driver?)

[ 2.635130] 1f09 219136 mtdblock9

[ 2.635133] (driver?)

[ 2.641695] 1f0a 32768 mtdblock10

[ 2.641697] (driver?)

[ 2.648358] Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(0,0)

[ 2.656667] ---[ end Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(0,0) ]---

3. Failure analysis and fix

The relevant kernel log lines:

[ 2.162435] ubi0: attaching mtd9

[ 2.166572] ubi0 error: validate_ec_hdr: bad VID header offset 512, expected 2048

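[ 2.174146] ubi0 error: validate_ec_hdr: bad EC header

Combined with the NAND partition log above, mtd9 corresponds to the partition "NAND.rootfs" (the tenth partition, counting from mtd0), so the error occurs while bringing up the rootfs, specifically while UBI attaches the MTD partition that holds it. Following the call stack the kernel dumped at the time of the error, the failing code path is as follows (kernel version 4.19.94):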

kernel_init()

kernel_init_freeable()

do_basic_setup()

do_initcalls()

...

do_one_initcall()

ubi_init()

/* drivers/mtd/ubi/build.c */

static int __init ubi_init(void)

{

...

/* Attach MTD devices */

/* How mtd_dev_param[] and mtd_devs are set up is covered in the ubi_mtd_param_parse() analysis below */

for (i = 0; i < mtd_devs; i++) {

struct mtd_dev_param *p = &mtd_dev_param[i];

struct mtd_info *mtd;

...

mtd = open_mtd_device(p->name);

...

mutex_lock(&ubi_devices_mutex);

err = ubi_attach_mtd_dev(mtd, p->ubi_num,

p->vid_hdr_offs, p->max_beb_per1024);

mutex_unlock(&ubi_devices_mutex);

...

}

...

}

int ubi_attach_mtd_dev(struct mtd_info *mtd, int ubi_num,

int vid_hdr_offset, int max_beb_per1024)

{

struct ubi_device *ubi;

...

...

ubi = kzalloc(sizeof(struct ubi_device), GFP_KERNEL);

...

ubi->mtd = mtd;

ubi->ubi_num = ubi_num;

ubi->vid_hdr_offset = vid_hdr_offset;

ubi->autoresize_vol_id = -1;

...

err = io_init(ubi, max_beb_per1024);

...

err = ubi_attach(ubi, 0);

...

/* Make device "available" before it becomes accessible via sysfs */

ubi_devices[ubi_num] = ubi;

...

}

static int io_init(struct ubi_device *ubi, int max_beb_per1024)

{

...

ubi->peb_size = ubi->mtd->erasesize;

ubi->peb_count = mtd_div_by_eb(ubi->mtd->size, ubi->mtd);

ubi->flash_size = ubi->mtd->size;

...

ubi->leb_size = ubi->peb_size - ubi->leb_start; /* (2) */

...

}

/* drivers/mtd/ubi/attach.c */

int ubi_attach(struct ubi_device *ubi, int force_scan)

{

...

err = scan_all(ubi, ai, 0);

...

}

static int scan_all(struct ubi_device *ubi, struct ubi_attach_info *ai,

int start)

{

...

for (pnum = start; pnum < ubi->peb_count; pnum++) {

...

err = scan_peb(ubi, ai, pnum, false);

...

}

...

}

static int scan_peb(struct ubi_device *ubi, struct ubi_attach_info *ai,

int pnum, bool fast)

{

...

err = ubi_io_read_ec_hdr(ubi, pnum, ech, 0);

...

}

/* drivers/mtd/ubi/io.c */

int ubi_io_read_ec_hdr(struct ubi_device *ubi, int pnum,

struct ubi_ec_hdr *ec_hdr, int verbose)

{

...

/* And of course validate what has just been read from the media */

err = validate_ec_hdr(ubi, ec_hdr);

...

}

static int validate_ec_hdr(const struct ubi_device *ubi,

const struct ubi_ec_hdr *ec_hdr)

{

...

int vid_hdr_offset, leb_start;

...

vid_hdr_offset = be32_to_cpu(ec_hdr->vid_hdr_offset);

...

/* (1) */

if (vid_hdr_offset != ubi->vid_hdr_offset) {

ubi_err(ubi, "bad VID header offset %d, expected %d",

vid_hdr_offset, ubi->vid_hdr_offset);

goto bad;

}

...

bad:

ubi_err(ubi, "bad EC header");

ubi_dump_ec_hdr(ec_hdr);

dump_stack();

return 1;

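}

The failure is at position (1) in validate_ec_hdr(): vid_hdr_offset and ubi->vid_hdr_offset are not equal. vid_hdr_offset is read from ec_hdr->vid_hdr_offset, and the code above shows that ec_hdr is filled in by ubi_io_read_ec_hdr() from data read off the flash. That data is part of the UBI volume-management information that the ubinize tool (discussed below) inserts when it packs rootfs.ubifs into the rootfs.ubi image; the details are left for later. The other value, ubi->vid_hdr_offset, comes from the kernel command-line parameter ubi.mtd=NAND.rootfs,2048; the code path that assigns it is traced next.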
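As orientation for that trace, the splitting of the ubi.mtd= value can be pictured with a small host-side sketch. It is a simplification written for illustration, not the kernel's code: it handles only the first two comma-separated fields (name and VID header offset) and accepts only decimal or 0x-prefixed numbers with an optional K/M/G (or KiB/MiB/GiB) suffix. The name parse_ubi_mtd is hypothetical; bytes_str_to_int is named after the kernel helper it imitates.

```python
import re

# number, optional K/M/G multiplier, optional "iB" tail (e.g. "2KiB")
_SIZE_RE = re.compile(r"(0[xX][0-9a-fA-F]+|[0-9]+)(K|M|G)?(iB)?")
_MULT = {None: 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def bytes_str_to_int(text: str) -> int:
    """Convert strings such as '2048', '0x800' or '2KiB' to a byte count."""
    m = _SIZE_RE.fullmatch(text)
    if m is None or (m.group(3) and not m.group(2)):
        raise ValueError(f"bad size string: {text!r}")
    number = m.group(1)
    base = 16 if number.lower().startswith("0x") else 10
    return int(number, base) * _MULT[m.group(2)]


def parse_ubi_mtd(val: str) -> tuple:
    """Split 'NAND.rootfs,2048' into (name, vid_hdr_offs).

    Only the first two fields are handled in this sketch; a missing
    offset is reported as 0.
    """
    tokens = val.split(",")
    name = tokens[0]
    vid_hdr_offs = 0
    if len(tokens) > 1 and tokens[1]:
        vid_hdr_offs = bytes_str_to_int(tokens[1])
    return name, vid_hdr_offs


if __name__ == "__main__":
    print(parse_ubi_mtd("NAND.rootfs,2048"))
```

For the command line in this article the sketch yields the name "NAND.rootfs" and the offset 2048, which is the "expected 2048" in the kernel error message.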

start_kernel()

after_dashes = parse_args("Booting kernel",

static_command_line, __start___param,

__stop___param - __start___param,

-1, -1, NULL, &unknown_bootoption);

...

ubi_mtd_param_parse()/* drivers/mtd/ubi/build.c */

/*

* In this article it parses ubi.mtd=NAND.rootfs,2048

* @val: "NAND.rootfs,2048"

*/

static int ubi_mtd_param_parse(const char *val, const struct kernel_param *kp)

{

...

p = &mtd_dev_param[mtd_devs];

strcpy(&p->name[0], tokens[0]); /* @p->name: "NAND.rootfs" */

token = tokens[1];

if (token) {

p->vid_hdr_offs = bytes_str_to_int(token); /* @p->vid_hdr_offs: 2048 */

...

}

...

mtd_devs += 1;

return 0;

}

/*

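* Registers ubi_mtd_param_parse() as the parser for ubi.mtd=XXX,

* e.g. ubi.mtd=NAND.rootfs,2048.

*/

module_param_call(mtd, ubi_mtd_param_parse, NULL, NULL, 0400);

The parameter is parsed before ubi_init() is called; ubi_init() then passes p->vid_hdr_offs to ubi_attach_mtd_dev(), which stores it in ubi->vid_hdr_offset. With the kernel side covered, the next question is how ec_hdr->vid_hdr_offset is filled in when the UBI root filesystem image rootfs.ubi is built. The rootfs.ubi in this article is built with buildroot. In short, mkfs.ubifs first generates a rootfs.ubifs file, and ubinize then packs rootfs.ubifs into the UBI root filesystem image rootfs.ubi: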

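                           mkfs.ubifs                ubinize

root filesystem tree ----------> rootfs.ubifs --------> rootfs.ubi

The rootfs.ubifs file built by mkfs.ubifs can be thought of as a packed form of the root filesystem tree. When ubinize packs rootfs.ubifs into rootfs.ubi, it adds the UBI volume-management information, including the struct ubi_ec_hdr headers. rootfs.ubifs cannot be flashed as-is into the device partition; only rootfs.ubi, which carries the UBI volume-management information, can be flashed into the partition and booted as the root filesystem. The kernel error quoted earlier: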

[ 2.166572] ubi0 error: validate_ec_hdr: bad VID header offset 512, expected 2048

The root cause: the 2048 in the kernel command-line parameter ubi.mtd=NAND.rootfs,2048 does not match the struct ubi_ec_hdr::vid_hdr_offset value of 512 written into the headers of rootfs.ubi (the value ubinize chose by default). The -O option of ubinize sets struct ubi_ec_hdr::vid_hdr_offset explicitly. The ubinize step in buildroot's fs/ubifs/ubi.mk looks like this:

...

UBI_UBINIZE_OPTS += $(call qstrip,$(BR2_TARGET_ROOTFS_UBI_OPTS))

...

define ROOTFS_UBI_CMD

sed 's;BR2_ROOTFS_UBIFS_PATH;$@fs;' \

$(UBINIZE_CONFIG_FILE_PATH) > $(BUILD_DIR)/ubinize.cfg

$(HOST_DIR)/usr/sbin/ubinize -o $@ $(UBI_UBINIZE_OPTS) $(BUILD_DIR)/ubinize.cfg

rm $(BUILD_DIR)/ubinize.cfg

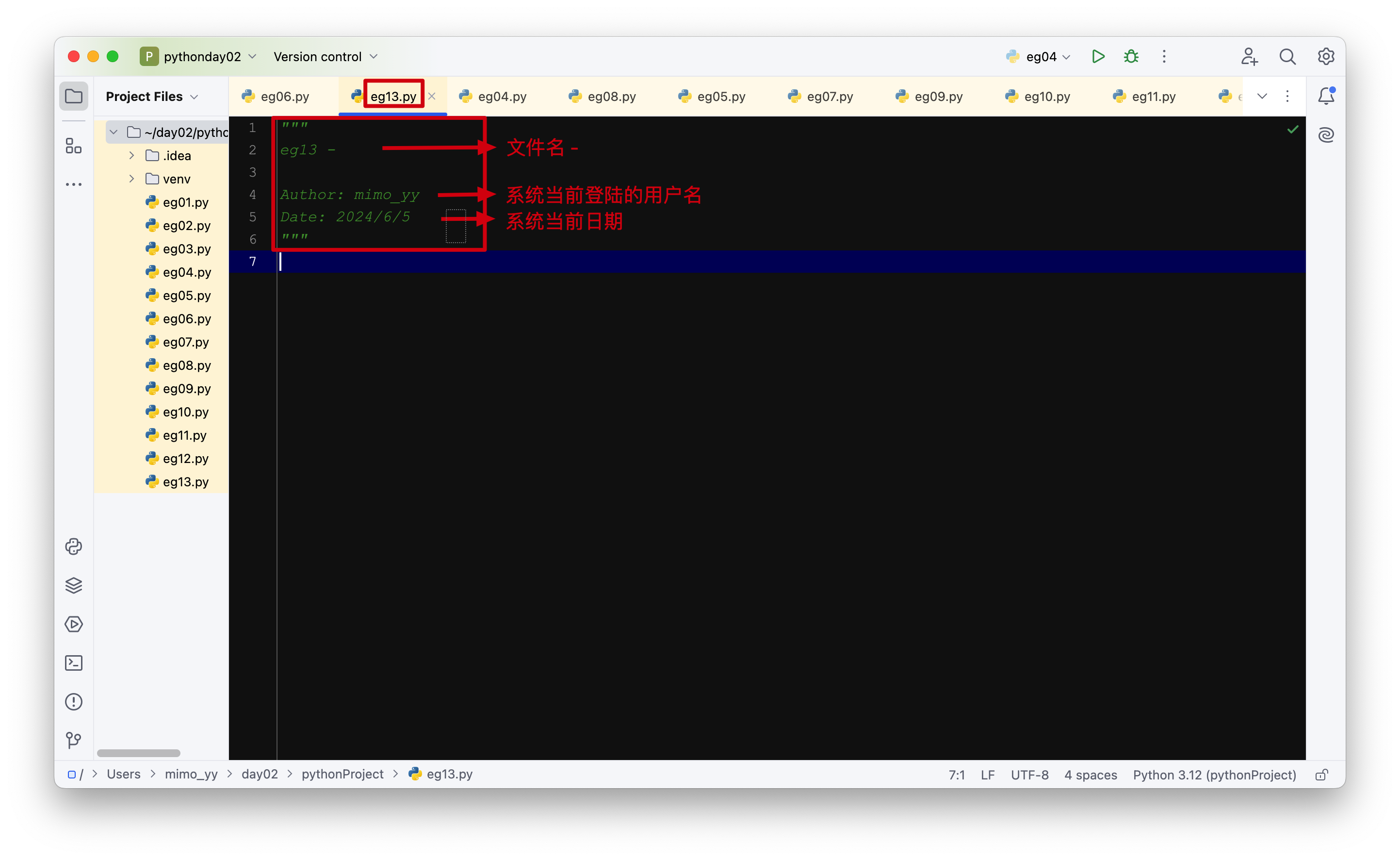

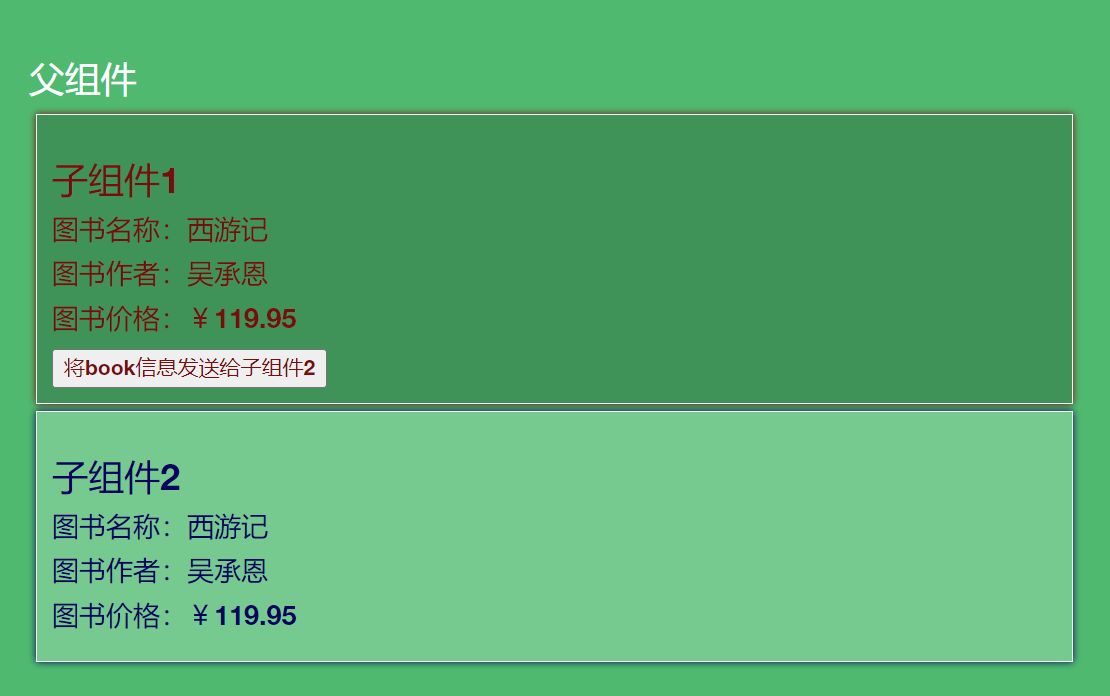

endef得知可以通过 BR2_TARGET_ROOTFS_UBI_OPTS 配置给 ubinize 工具传递参数,运行 make menuconfig ,按如下修改 buildroot 配置 BR2_TARGET_ROOTFS_UBI_OPTS,给 ubinize 工具传递 -O 1024 参数,修改 打包头部信息 struct ubi_ec_hdr::vid_hdr_offset 值为 1024:

Rebuild rootfs.ubi, flash it, and boot to see whether the problem is fixed:

[ 2.162404] ubi0: attaching mtd9

[ 2.817587] ubi0: scanning is finished

[ 2.841285] ubi0: volume 0 ("rootfs") re-sized from 83 to 1668 LEBs

[ 2.848341] ubi0: attached mtd9 (name "NAND.rootfs", size 214 MiB)

[ 2.854613] ubi0: PEB size: 131072 bytes (128 KiB), LEB size: 126976 bytes

[ 2.861516] ubi0: min./max. I/O unit sizes: 2048/2048, sub-page size 512

[ 2.868259] ubi0: VID header offset: 2048 (aligned 2048), data offset: 4096

[ 2.875260] ubi0: good PEBs: 1711, bad PEBs: 1, corrupted PEBs: 0

[ 2.881377] ubi0: user volume: 1, internal volumes: 1, max. volumes count: 128

[ 2.888641] ubi0: max/mean erase counter: 1/0, WL threshold: 4096, image sequence number: 425578287

[ 2.897735] ubi0: available PEBs: 0, total reserved PEBs: 1711, PEBs reserved for bad PEB handling: 39

[ 2.907100] ubi0: background thread "ubi_bgt0d" started, PID 65

......

[ 2.965365] UBIFS error (ubi0:0 pid 1): ubifs_read_superblock: LEB size mismatch: 129024 in superblock, 126976 real

[ 2.992980] UBIFS error (ubi0:0 pid 1): ubifs_read_superblock: bad superblock, error 1

[ 3.000933] magic 0x6101831

[ 3.004623] crc 0x3375ce2d

[ 3.008386] node_type 6 (superblock node)

[ 3.012931] group_type 0 (no node group)

[ 3.017314] sqnum 1

[ 3.020289] len 4096

[ 3.023537] key_hash 0 (R5)

[ 3.026950] key_fmt 0 (simple)

[ 3.030709] flags 0x0

[ 3.033870] big_lpt 0

[ 3.036845] space_fixup 0

[ 3.039818] min_io_size 2048

[ 3.043063] leb_size 129024

[ 3.046474] leb_cnt 1668

[ 3.049709] max_leb_cnt 2048

[ 3.052956] max_bud_bytes 8388608

[ 3.056454] log_lebs 5

[ 3.059429] lpt_lebs 2

[ 3.062403] orph_lebs 1

[ 3.065387] jhead_cnt 1

[ 3.068362] fanout 8

[ 3.071335] lsave_cnt 256

[ 3.074495] default_compr 0

[ 3.077470] rp_size 0

[ 3.080443] rp_uid 0

[ 3.083427] rp_gid 0

[ 3.086402] fmt_version 4

[ 3.089378] time_gran 1000000000

[ 3.093151] UUID 9FC73AE3-A0BA-41C8-8615-2B75694BB8CD

[ 3.204172] UBIFS error (ubi0:0 pid 1): ubifs_read_superblock: LEB size mismatch: 129024 in superblock, 126976 real

[ 3.222987] UBIFS error (ubi0:0 pid 1): ubifs_read_superblock: bad superblock, error 1

[ 3.230940] magic 0x6101831

[ 3.234647] crc 0x3375ce2d

[ 3.238408] node_type 6 (superblock node)

[ 3.242993] group_type 0 (no node group)

[ 3.247364] sqnum 1

[ 3.250338] len 4096

[ 3.253591] key_hash 0 (R5)

[ 3.257003] key_fmt 0 (simple)

[ 3.260762] flags 0x0

[ 3.263923] big_lpt 0

[ 3.266897] space_fixup 0

[ 3.269872] min_io_size 2048

[ 3.273117] leb_size 129024

[ 3.276528] leb_cnt 1668

[ 3.279765] max_leb_cnt 2048

[ 3.283011] max_bud_bytes 8388608

[ 3.286509] log_lebs 5

[ 3.289484] lpt_lebs 2

[ 3.292458] orph_lebs 1

[ 3.295441] jhead_cnt 1

[ 3.298417] fanout 8

[ 3.301390] lsave_cnt 256

[ 3.304548] default_compr 0

[ 3.307522] rp_size 0

[ 3.310496] rp_uid 0

[ 3.313479] rp_gid 0

[ 3.316454] fmt_version 4

[ 3.319429] time_gran 1000000000

[ 3.323199] UUID 9FC73AE3-A0BA-41C8-8615-2B75694BB8CD

[ 3.433193] List of all partitions:

[ 3.436713] 0100 65536 ram0

[ 3.436716] (driver?)

[ 3.442843] 0101 65536 ram1

[ 3.442845] (driver?)

[ 3.463000] 0102 65536 ram2

[ 3.463004] (driver?)

[ 3.469132] 0103 65536 ram3

[ 3.469135] (driver?)

[ 3.492961] 0104 65536 ram4

[ 3.492964] (driver?)

[ 3.499093] 0105 65536 ram5

[ 3.499095] (driver?)

[ 3.512959] 0106 65536 ram6

[ 3.512961] (driver?)

[ 3.519087] 0107 65536 ram7

[ 3.519090] (driver?)

[ 3.542960] 0108 65536 ram8

[ 3.542962] (driver?)

[ 3.549089] 0109 65536 ram9

[ 3.549091] (driver?)

[ 3.562956] 010a 65536 ram10

[ 3.562959] (driver?)

[ 3.569173] 010b 65536 ram11

[ 3.569175] (driver?)

[ 3.582961] 010c 65536 ram12

[ 3.582964] (driver?)

[ 3.589178] 010d 65536 ram13

[ 3.589180] (driver?)

[ 3.612959] 010e 65536 ram14

[ 3.612961] (driver?)

[ 3.619175] 010f 65536 ram15

[ 3.619177] (driver?)

[ 3.632970] 1f00 128 mtdblock0

[ 3.632974] (driver?)

[ 3.639537] 1f01 128 mtdblock1

[ 3.639540] (driver?)

[ 3.662959] 1f02 128 mtdblock2

[ 3.662962] (driver?)

[ 3.669524] 1f03 128 mtdblock3

[ 3.669527] (driver?)

[ 3.682960] 1f04 256 mtdblock4

[ 3.682963] (driver?)

[ 3.689526] 1f05 1024 mtdblock5

[ 3.689529] (driver?)

[ 3.712959] 1f06 128 mtdblock6

[ 3.712962] (driver?)

[ 3.719526] 1f07 128 mtdblock7

[ 3.719528] (driver?)

[ 3.732960] 1f08 8192 mtdblock8

[ 3.732963] (driver?)

[ 3.739526] 1f09 219136 mtdblock9

[ 3.739529] (driver?)

[ 3.762959] 1f0a 32768 mtdblock10

[ 3.762962] (driver?)

[ 3.769608] No filesystem could mount root, tried:

[ 3.769610] ubifs

[ 3.782955]

[ 3.786470] Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(0,0)

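[ 3.794779] ---[ end Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(0,0) ]---

The kernel log shows that the earlier problem is gone, but a new one has appeared. Based on the log, let's trace the code path (readers not interested in the code details can skip this part):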

/* 1. Parse the kernel command-line parameters: root=ubi0:rootfs rw rootfstype=ubifs rootwait=1 */

start_kernel()

after_dashes = parse_args("Booting kernel",

static_command_line, __start___param,

__stop___param - __start___param,

-1, -1, NULL, &unknown_bootoption);

...

unknown_bootoption()

obsolete_checksetup(param)

p->setup_func(line + n) = root_dev_setup(), rootwait_setup(), fs_names_setup()

/* init/do_mounts.c */

int root_mountflags = MS_RDONLY | MS_SILENT;

static int __init readwrite(char *str)

{

if (*str)

return 0;

root_mountflags &= ~MS_RDONLY; /* @root_mountflags: MS_SILENT */

return 1;

}

__setup("rw", readwrite);

static int __init root_dev_setup(char *line)

{

/* @saved_root_name: "ubi0:rootfs" */

strlcpy(saved_root_name, line, sizeof(saved_root_name));

return 1;

}

__setup("root=", root_dev_setup);

static int __init rootwait_setup(char *str)

{

if (*str)

return 0;

root_wait = 1; /* @root_wait: 1 for a bare "rootwait"; with "rootwait=1", str is "=1" and the check above returns 0 first */

return 1;

}

__setup("rootwait", rootwait_setup);

static char * __initdata root_fs_names;

static int __init fs_names_setup(char *str)

{

root_fs_names = str; /* @root_fs_names: "ubifs" */

return 1;

}

__setup("rootfstype=", fs_names_setup);

start_kernel()

rest_init()

pid = kernel_thread(kernel_init, NULL, CLONE_FS); /* start the init thread */

kernel_init()

kernel_init_freeable()

prepare_namespace()

/* init/do_mounts.c */

void __init prepare_namespace(void)

{

if (saved_root_name[0]) { /* @saved_root_name: "ubi0:rootfs" */

root_device_name = saved_root_name;

if (!strncmp(root_device_name, "mtd", 3) ||

!strncmp(root_device_name, "ubi", 3)) {

mount_block_root(root_device_name, root_mountflags); /* mount the rootfs */

goto out;

}

}

...

out:

devtmpfs_mount("dev");

ksys_mount(".", "/", NULL, MS_MOVE, NULL);

ksys_chroot(".");

}

/* Mount the rootfs */

void __init mount_block_root(char *name, int flags)

{

...

for (p = fs_names; *p; p += strlen(p)+1) {

int err = do_mount_root(name, p, flags, root_mount_data);

switch (err) {

case 0:

goto out; /* rootfs mounted successfully */

case -EACCES:

case -EINVAL:

continue;

}

}

...

out:

...

}

/*

* @name : "ubi0:rootfs"

* @fs : "ubifs"

* @flags: MS_SILENT

* @data : NULL

*/

static int __init do_mount_root(char *name, char *fs, int flags, void *data)

{

struct super_block *s;

int err = ksys_mount(name, "/root", fs, flags, data);

...

}

/* fs/namespace.c */

int ksys_mount(char __user *dev_name, char __user *dir_name, char __user *type,

unsigned long flags, void __user *data)

{

...

ret = do_mount(kernel_dev, dir_name, kernel_type, flags, options);

...

}

long do_mount(const char *dev_name, const char __user *dir_name,

const char *type_page, unsigned long flags, void *data_page)

{

...

if (flags & MS_REMOUNT)

retval = do_remount(...);

else if (flags & MS_BIND)

retval = do_loopback(...);

else if (flags & (MS_SHARED | MS_PRIVATE | MS_SLAVE | MS_UNBINDABLE))

retval = do_change_type(...);

else if (flags & MS_MOVE)

retval = do_move_mount(...);

else

retval = do_new_mount(&path, type_page, sb_flags, mnt_flags,

dev_name, data_page);

...

}

static int do_new_mount(struct path *path, const char *fstype, int sb_flags,

int mnt_flags, const char *name, void *data)

{

struct file_system_type *type;

struct vfsmount *mnt;

...

type = get_fs_type(fstype); /* @type: &ubifs_fs_type */

...

mnt = vfs_kern_mount(type, sb_flags, name, data);

...

put_filesystem(type);

...

}

struct vfsmount *

vfs_kern_mount(struct file_system_type *type, int flags, const char *name, void *data)

{

struct mount *mnt;

struct dentry *root;

...

mnt = alloc_vfsmnt(name);

...

root = mount_fs(type, flags, name, data);

mnt->mnt.mnt_root = root;

mnt->mnt.mnt_sb = root->d_sb;

mnt->mnt_mountpoint = mnt->mnt.mnt_root;

mnt->mnt_parent = mnt;

lock_mount_hash();

list_add_tail(&mnt->mnt_instance, &root->d_sb->s_mounts);

unlock_mount_hash();

return &mnt->mnt;

}

/* fs/super.c */

struct dentry *

mount_fs(struct file_system_type *type, int flags, const char *name, void *data)

{

struct dentry *root;

struct super_block *sb;

...

...

root = type->mount(type, flags, name, data); /* ubifs_mount() */

...

sb = root->d_sb;

...

return root;

...

}

/* fs/ubifs/super.c */

static struct dentry *ubifs_mount(struct file_system_type *fs_type, int flags,

const char *name, void *data)

{

struct ubi_volume_desc *ubi;

struct ubifs_info *c;

struct super_block *sb;

...

/*

* Get UBI device number and volume ID. Mount it read-only so far

* because this might be a new mount point, and UBI allows only one

* read-write user at a time.

*/

ubi = open_ubi(name, UBI_READONLY);

...

c = alloc_ubifs_info(ubi);

...

sb = sget(fs_type, sb_test, sb_set, flags, c);

...

if (sb->s_root) {

...

} else {

err = ubifs_fill_super(sb, data, flags & SB_SILENT ? 1 : 0);

...

}

/* 'fill_super()' opens ubi again so we must close it here */

ubi_close_volume(ubi);

return dget(sb->s_root);

...

}

static struct ubi_volume_desc *open_ubi(const char *name, int mode)

{

struct ubi_volume_desc *ubi;

...

...

/* First, try to open using the device node path method */

ubi = ubi_open_volume_path(name, mode);

...

}

struct ubi_volume_desc *ubi_open_volume_path(const char *pathname, int mode)

{

...

if (vol_id >= 0 && ubi_num >= 0)

return ubi_open_volume(ubi_num, vol_id, mode);

...

}

struct ubi_volume_desc *ubi_open_volume(int ubi_num, int vol_id, int mode)

{

...

/*

* First of all, we have to get the UBI device to prevent its removal.

*/

ubi = ubi_get_device(ubi_num);

...

desc = kmalloc(sizeof(struct ubi_volume_desc), GFP_KERNEL);

...

spin_lock(&ubi->volumes_lock);

vol = ubi->volumes[vol_id]; /* (3) */

...

spin_unlock(&ubi->volumes_lock);

desc->vol = vol;

...

return desc;

}

struct ubi_device *ubi_get_device(int ubi_num)

{

struct ubi_device *ubi;

...

ubi = ubi_devices[ubi_num]; /* ubi_devices[] is populated by ubi_attach_mtd_dev(), see the analysis of the previous problem */

...

return ubi;

}

static struct ubifs_info *alloc_ubifs_info(struct ubi_volume_desc *ubi)

{

struct ubifs_info *c;

c = kzalloc(sizeof(struct ubifs_info), GFP_KERNEL);

if (c) {

...

ubi_get_volume_info(ubi, &c->vi);

...

}

}

void ubi_get_volume_info(struct ubi_volume_desc *desc,

struct ubi_volume_info *vi)

{

ubi_do_get_volume_info(desc->vol->ubi, desc->vol, vi);

}

void ubi_do_get_volume_info(struct ubi_device *ubi, struct ubi_volume *vol,

struct ubi_volume_info *vi)

{

...

vi->usable_leb_size = vol->usable_leb_size;

...

}

static int ubifs_fill_super(struct super_block *sb, void *data, int silent)

{

...

err = mount_ubifs(c);

...

}

static int mount_ubifs(struct ubifs_info *c)

{

...

err = init_constants_early(c);

...

err = ubifs_read_superblock(c);

...

}

static int init_constants_early(struct ubifs_info *c)

{

...

c->leb_size = c->vi.usable_leb_size;

...

}

int ubifs_read_superblock(struct ubifs_info *c)

{

...

struct ubifs_sb_node *sup;

...

sup = ubifs_read_sb_node(c); /* read the superblock from the rootfs image written to the device */

...

err = validate_sb(c, sup); /* check that the superblock built into the rootfs is valid */

...

}

static int validate_sb(struct ubifs_info *c, struct ubifs_sb_node *sup)

{

...

if (le32_to_cpu(sup->leb_size) != c->leb_size) { /* (4) source of the kernel error message */

ubifs_err(c, "LEB size mismatch: %d in superblock, %d real",

le32_to_cpu(sup->leb_size), c->leb_size);

goto failed;

}

...

failed:

ubifs_err(c, "bad superblock, error %d", err);

ubifs_dump_node(c, sup);

return -EINVAL;

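}

The path is long but not complicated. The kernel reports the error at (4) in validate_sb(), at the very end of the path. As with the previous problem, a parameter of the rootfs image rootfs.ubi does not match the hardware side, which again points to a buildroot configuration option. From the open_ubi() and alloc_ubifs_info() code paths above, c->leb_size is taken from the attached UBI volume (usable_leb_size, which derives from the LEB size computed at (2) in io_init()), so it reflects the NAND layout actually in use, while sup->leb_size comes from rootfs.ubi. The relevant fragment of buildroot's fs/ubifs/ubifs.mk: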

# -e sets the LEB (Logical Erase Block) size

UBIFS_OPTS := -e $(BR2_TARGET_ROOTFS_UBIFS_LEBSIZE) -c $(BR2_TARGET_ROOTFS_UBIFS_MAXLEBCNT) -m $(BR2_TARGET_ROOTFS_UBIFS_MINIOSIZE)

...

define ROOTFS_UBIFS_CMD

$(HOST_DIR)/usr/sbin/mkfs.ubifs -d $(TARGET_DIR) $(UBIFS_OPTS) -o $@

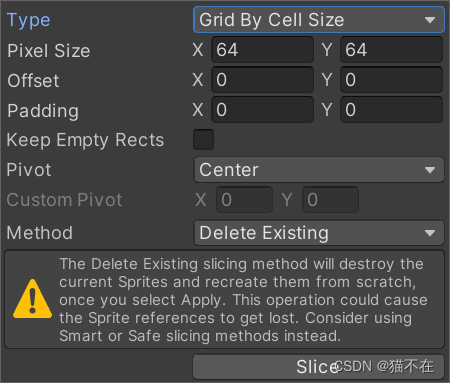

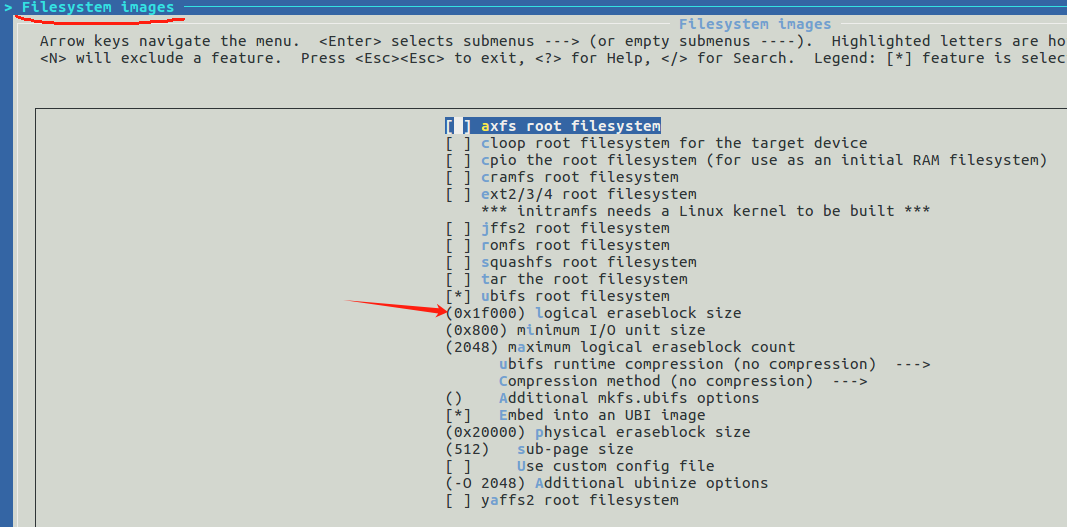

endef我们得知,配置项 BR2_TARGET_ROOTFS_UBIFS_LEBSIZE 修改 LEB(Logical Erase Block) 值,按日志提示:

[ 2.965365] UBIFS error (ubi0:0 pid 1): ubifs_read_superblock: LEB size mismatch: 129024 in superblock, 126976 real该值应该由 129024(0x1f800) 修改为 126976(0x1f000):
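The two LEB sizes in that message follow from where UBI places its headers inside each PEB. The sketch below reproduces that arithmetic as I understand it from io_init() in the 4.19 sources (the excerpt earlier elides these lines, so treat the details as an assumption): the data area starts at the VID header offset plus the 64-byte VID header, rounded up to the minimum I/O size, and the LEB is whatever remains of the PEB. The function name ubi_layout is hypothetical.

```python
UBI_EC_HDR_SIZE = 64   # size of the erase counter header
UBI_VID_HDR_SIZE = 64  # size of the volume identifier header


def align_up(value: int, alignment: int) -> int:
    """Round value up to the next multiple of alignment."""
    return (value + alignment - 1) // alignment * alignment


def ubi_layout(peb_size: int, min_io_size: int,
               vid_hdr_offset: int = 0, hdrs_min_io_size: int = 0) -> dict:
    """Compute data offset and LEB size for one PEB.

    vid_hdr_offset == 0 means "not given": the header then goes right after
    the EC header, aligned to the header I/O unit (the sub-page size if the
    flash supports sub-pages, otherwise the page size).
    """
    if hdrs_min_io_size == 0:
        hdrs_min_io_size = min_io_size
    if vid_hdr_offset == 0:
        vid_hdr_offset = align_up(UBI_EC_HDR_SIZE, hdrs_min_io_size)
    data_offset = align_up(vid_hdr_offset + UBI_VID_HDR_SIZE, min_io_size)
    return {
        "vid_hdr_offset": vid_hdr_offset,
        "data_offset": data_offset,
        "leb_size": peb_size - data_offset,
    }


if __name__ == "__main__":
    # 128 KiB PEBs and 2048-byte pages, as reported in the boot log.
    print(ubi_layout(131072, 2048, vid_hdr_offset=2048))
    print(ubi_layout(131072, 2048, vid_hdr_offset=512))
```

With the VID header at 2048 the data offset is 4096 and the LEB size is 126976, matching the "real" value and the attach log. With the VID header at 512 the data offset is 2048 and the LEB size is 129024, which is what the original mkfs.ubifs setting assumed. Changing -O for ubinize therefore also requires changing -e for mkfs.ubifs.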

Rebuild, flash, and boot again. The system finally reaches the login prompt:

[ 2.162228] ubi0: attaching mtd9

[ 2.817396] ubi0: scanning is finished

[ 2.841103] ubi0: volume 0 ("rootfs") re-sized from 83 to 1668 LEBs

[ 2.848156] ubi0: attached mtd9 (name "NAND.rootfs", size 214 MiB)

[ 2.854435] ubi0: PEB size: 131072 bytes (128 KiB), LEB size: 126976 bytes

[ 2.861339] ubi0: min./max. I/O unit sizes: 2048/2048, sub-page size 512

[ 2.868081] ubi0: VID header offset: 2048 (aligned 2048), data offset: 4096

[ 2.875082] ubi0: good PEBs: 1711, bad PEBs: 1, corrupted PEBs: 0

[ 2.881199] ubi0: user volume: 1, internal volumes: 1, max. volumes count: 128

[ 2.888463] ubi0: max/mean erase counter: 1/0, WL threshold: 4096, image sequence number: 1890895802

[ 2.897644] ubi0: available PEBs: 0, total reserved PEBs: 1711, PEBs reserved for bad PEB handling: 39

[ 2.907010] ubi0: background thread "ubi_bgt0d" started, PID 65

......

[ 2.972922] UBIFS (ubi0:0): background thread "ubifs_bgt0_0" started, PID 66

[ 3.083296] UBIFS (ubi0:0): UBIFS: mounted UBI device 0, volume 0, name "rootfs"

[ 3.090747] UBIFS (ubi0:0): LEB size: 126976 bytes (124 KiB), min./max. I/O unit sizes: 2048 bytes/2048 bytes

[ 3.122798] UBIFS (ubi0:0): FS size: 210399232 bytes (200 MiB, 1657 LEBs), journal size 9023488 bytes (8 MiB, 72 LEBs)

[ 3.142800] UBIFS (ubi0:0): reserved for root: 0 bytes (0 KiB)

[ 3.148663] UBIFS (ubi0:0): media format: w4/r0 (latest is w5/r0), UUID 7A19D54A-3848-4AFB-8DDF-4E4A6B04D4FC, small LPT model

[ 3.184943] VFS: Mounted root (ubifs filesystem) on device 0:14.

[ 3.192113] devtmpfs: mounted

...

Welcome

(none) login:

Other problems remain, but they are unrelated to the topic of this article and are not covered here.

4. References

[1] https://bootlin.com/blog/creating-flashing-ubi-ubifs-images/

[2] UBI FAQ and HOWTO

[3] UBI - Unsorted Block Images