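Article outline

- Introduction

- Compute metrics and concepts

- A quick look at the Orange Pi AIpro NPU's on-paper compute

- Step-by-step boot and basic configuration

- Boot storage selection

- Fan settings

- YOLOv8 object detection on the Orange Pi AIpro

- Direct inference with the PyTorch .pt format

- Inference with the NCNN format

- Can the Orange Pi AIpro's NPU be used for inference?

- Model development workflow

- Model conversion

- Conclusion

- References

- Official reference links

- Advanced guides

- Footnotes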

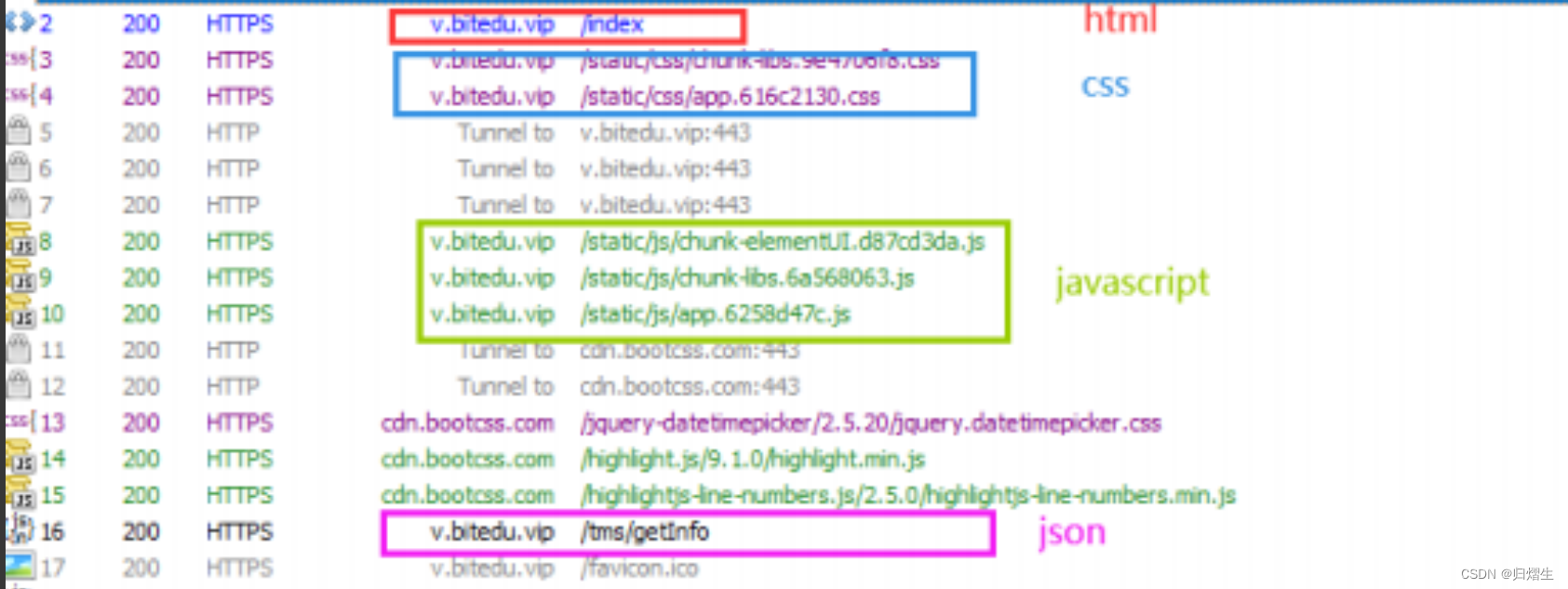

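Introduction

Official site: Orange-Pi-AIpro

Compute metrics and concepts

TOPS stands for tera (trillions of) operations per second. It measures the peak achievable throughput, not the throughput actually delivered. Most operations are MACs (multiply-accumulates), and each MAC counts as two operations, so:

TOPS = (number of MAC units) x (MAC operating frequency in Hz) x 2 / 10^12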
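To make the formula concrete, here is a minimal sketch in Python. The MAC count and clock frequency below are made-up illustration values, not the actual configuration of the Orange Pi AIpro NPU, and `peak_tops` is a hypothetical helper name.

```python
def peak_tops(mac_units: int, frequency_hz: float) -> float:
    """Peak throughput in TOPS; each MAC counts as two operations."""
    ops_per_second = mac_units * frequency_hz * 2
    return ops_per_second / 1e12  # tera = 10^12


# Hypothetical accelerator: 4096 MAC units clocked at 1 GHz.
print(f"{peak_tops(4096, 1e9):.3f} TOPS")
```

With these assumed numbers the result lands near 8 TOPS, which shows how a modest MAC array at around 1 GHz could reach that class of rating.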

The board I have is the version whose NPU is rated at 8 TOPS. Can it handle object detection, currently the hottest task in computer vision?

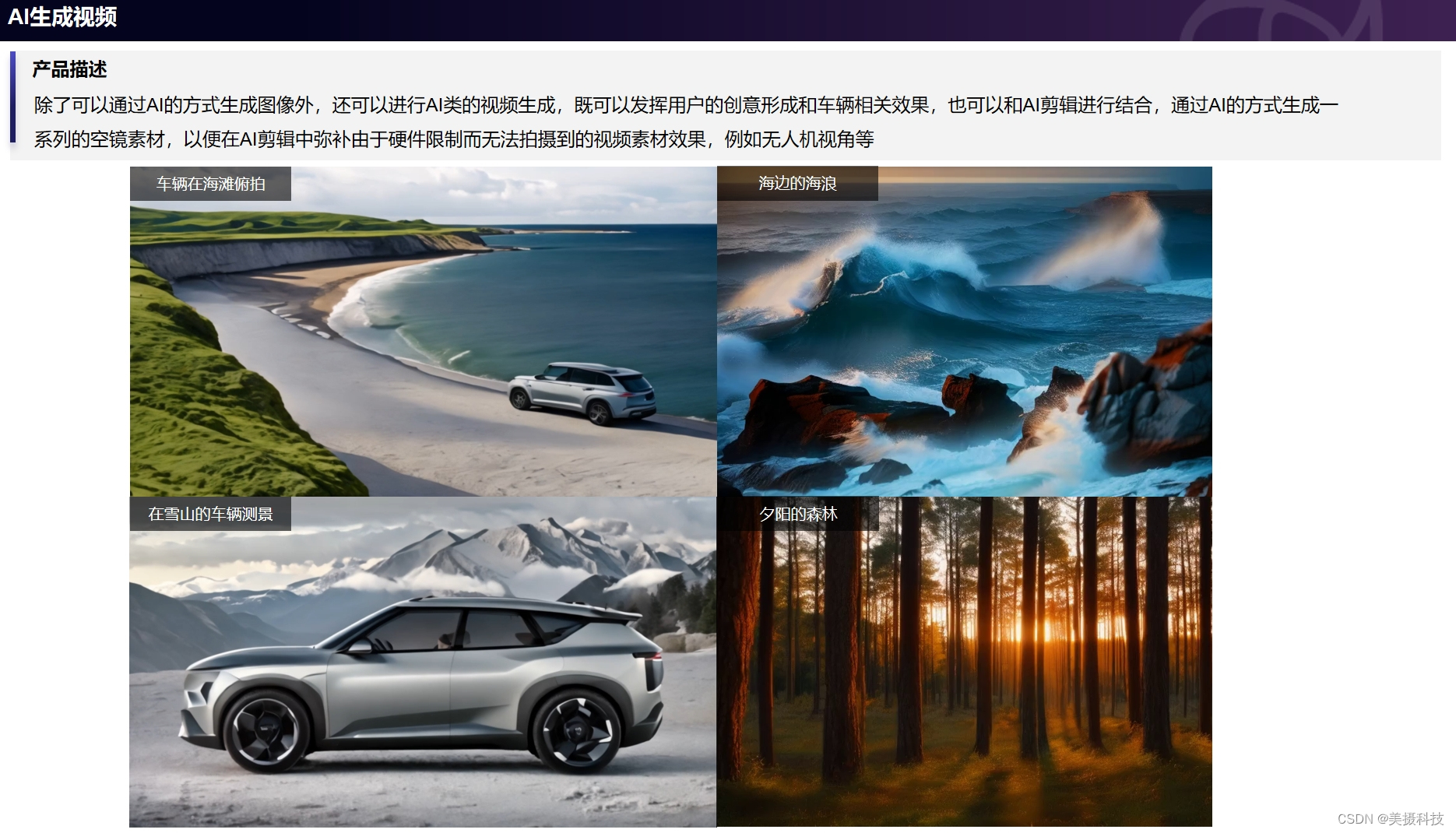

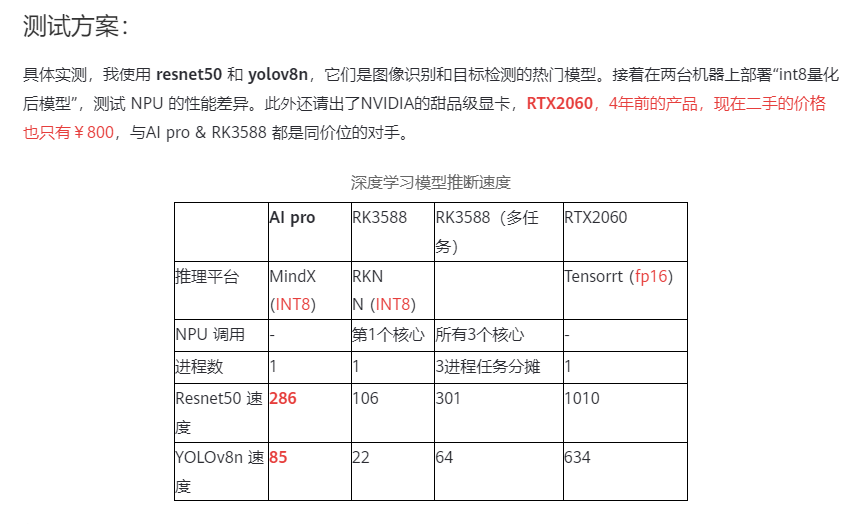

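A quick look at the Orange Pi AIpro NPU's on-paper compute

I found a forum thread:

- https://www.hiascend.com/forum/thread-0281143834564881056-1-1.html

On paper, that thread reports a quantized YOLOv8n running at 85 frames per second. The poster did not share code or parameters, so treat the number as a rough indication only; it is probably a best case at low resolution. Below, I walk you through object detection step by step.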
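As a rough sanity check on that figure, the sketch below compares it with a purely compute-bound ceiling. It assumes a 640x640 input (the thread does not confirm this) and uses the 8.7 GFLOPs that ultralytics reports for the fused YOLOv8n model in the export log later in this article. It also treats FLOPs and the NPU's rated operations as interchangeable, which is a simplification.

```python
NPU_PEAK_TOPS = 8.0    # advertised peak of this board's NPU
YOLOV8N_GFLOPS = 8.7   # per inference at 640x640, from the ultralytics export log
REPORTED_FPS = 85      # figure quoted in the forum thread


def compute_bound_fps(peak_tops: float, gflops_per_inference: float) -> float:
    """Upper bound on FPS if the peak rate were sustained with no other overhead."""
    return (peak_tops * 1e12) / (gflops_per_inference * 1e9)


def utilization(fps: float, peak_tops: float, gflops_per_inference: float) -> float:
    """Fraction of the peak rate that a given FPS would consume."""
    return fps * gflops_per_inference * 1e9 / (peak_tops * 1e12)


print(f"compute-bound ceiling: {compute_bound_fps(NPU_PEAK_TOPS, YOLOV8N_GFLOPS):.0f} FPS")
print(f"utilization at {REPORTED_FPS} FPS: {utilization(REPORTED_FPS, NPU_PEAK_TOPS, YOLOV8N_GFLOPS):.1%}")
```

Under these assumptions, 85 FPS would use under a tenth of the rated peak, so the reported number is at least not implausible on compute grounds. Memory bandwidth and pre/post-processing usually dominate in practice.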

Step-by-step boot and basic configuration

First, of course, comes the basic configuration.

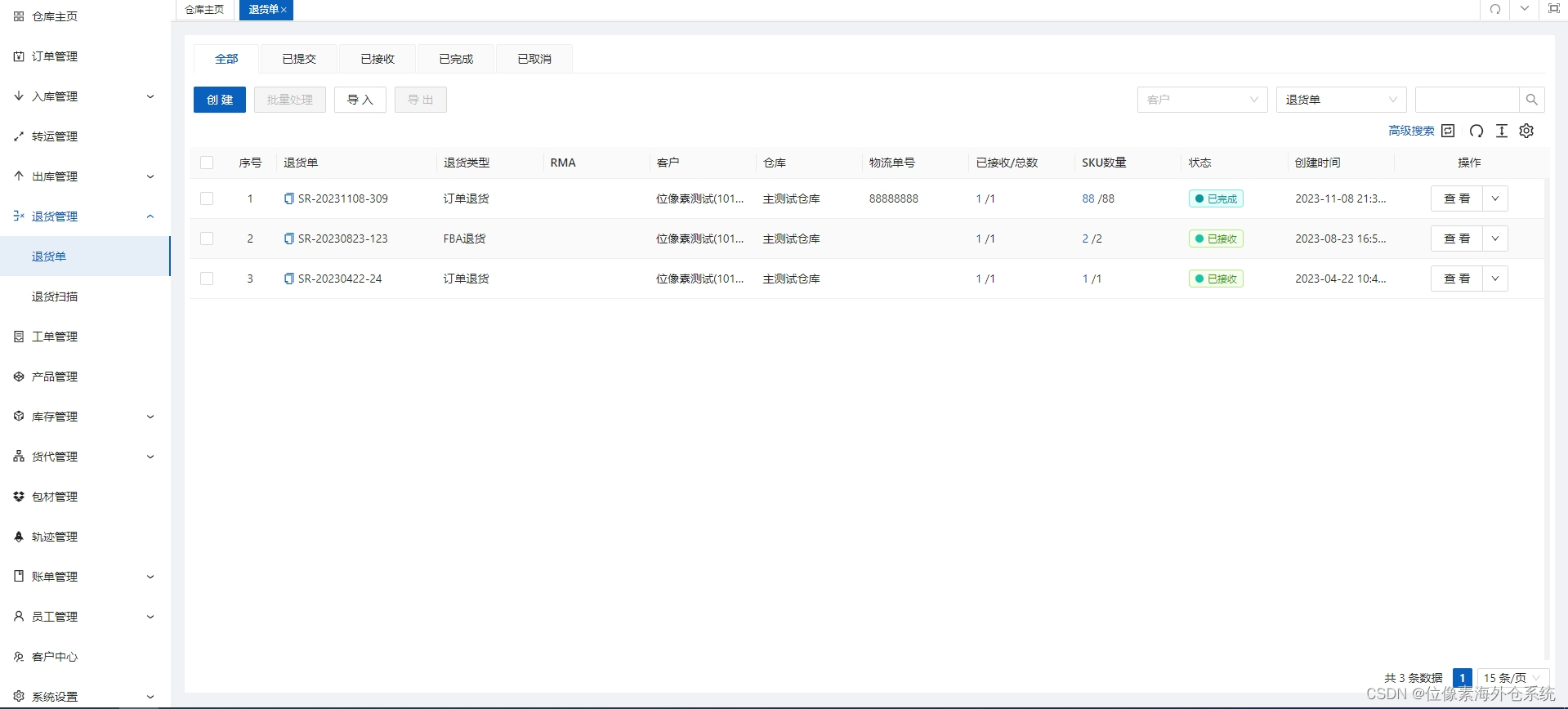

Boot storage selection

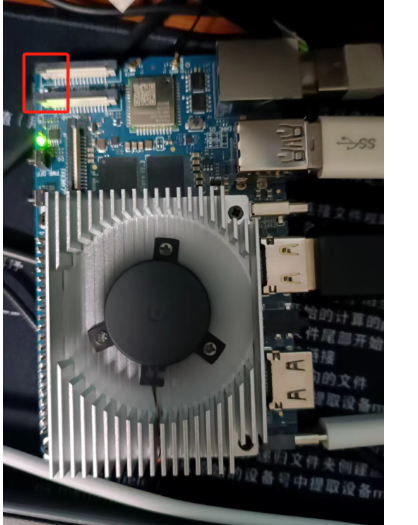

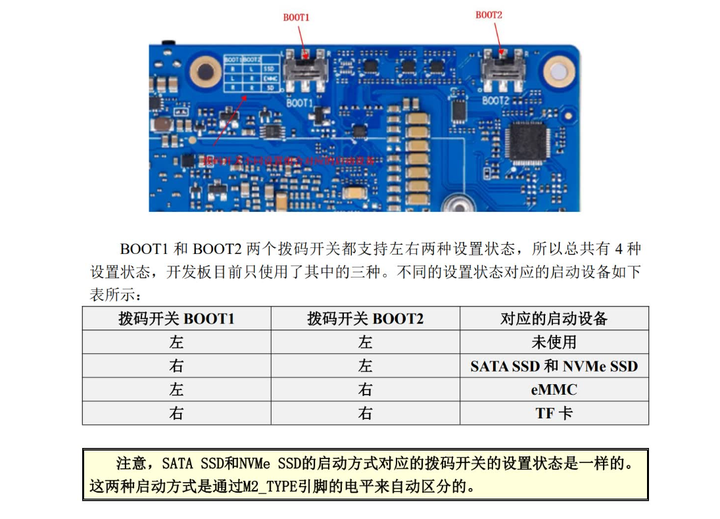

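On first power-up, I noticed that one of the status indicator LEDs stayed off.

According to the manual, the likely cause was that the boot-mode switches did not match the storage device I was booting from.

After setting both switches back to the right-right position, the board booted normally.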

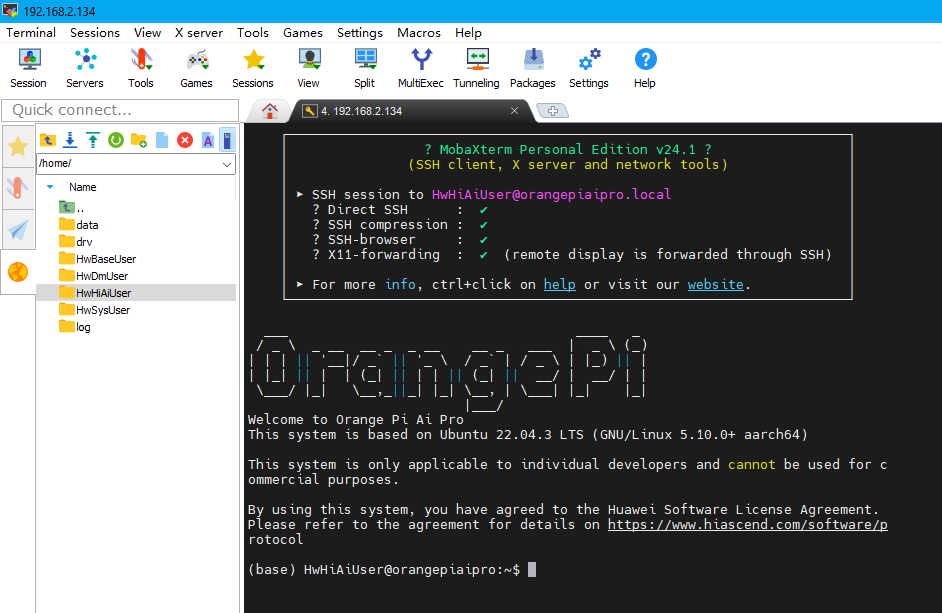

Now we can connect over SSH and see the login screen!

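Fan settings

In actual use, the board runs fairly hot during inference, so it is worth adjusting the fan.

In practice

The /opt/opi_test/fan directory contains two scripts related to fan speed. Try running them; they are easy to follow alongside the Ascend documentation.

When the board gets hot, you can control the fan speed as follows:

# Switch to manual mode

sudo npu-smi set -t pwm-mode -d 0

# Set the fan speed (the final value is the duty ratio: 100 is the maximum, 0 stops the fan; 60 already cools well)

sudo npu-smi set -t pwm-duty-ratio -d 60

# Switch back to automatic mode once the temperature is back to normal

sudo npu-smi set -t pwm-mode -d 1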
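If you want to drive the fan from a script, the three commands above can be wrapped as in the following sketch. It only uses the `npu-smi` arguments shown above; the function names and the `dry_run` switch are my own illustrative choices, and by default the commands are printed rather than executed.

```python
import subprocess


def fan_commands(duty_ratio: int) -> list[list[str]]:
    """Build the npu-smi calls that switch to manual mode and set the duty ratio."""
    if not 0 <= duty_ratio <= 100:
        raise ValueError("duty_ratio must be between 0 and 100")
    return [
        ["sudo", "npu-smi", "set", "-t", "pwm-mode", "-d", "0"],
        ["sudo", "npu-smi", "set", "-t", "pwm-duty-ratio", "-d", str(duty_ratio)],
    ]


def restore_auto_command() -> list[str]:
    """Build the npu-smi call that hands fan control back to automatic mode."""
    return ["sudo", "npu-smi", "set", "-t", "pwm-mode", "-d", "1"]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(fan_commands(60))            # prints the two manual-mode commands
    run([restore_auto_command()])    # prints the command that restores automatic mode
```

Call `run(fan_commands(60), dry_run=False)` on the board itself to apply the settings.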

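YOLOv8 object detection on the Orange Pi AIpro

Rather than installing through Docker, we create a fresh conda environment and install YOLOv8 through the ultralytics package:

conda create -n yolov8 python=3.10 -y

conda activate yolov8

pip install ultralytics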

Downloading oauthlib-3.2.2-py3-none-any.whl (151 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 151.7/151.7 kB 3.4 MB/s eta 0:00:00

Downloading pyasn1-0.6.0-py2.py3-none-any.whl (85 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 85.3/85.3 kB 2.0 MB/s eta 0:00:00

Building wheels for collected packages: coremltools, psutil

Building wheel for coremltools (setup.py) ... done

Created wheel for coremltools: filename=coremltools-7.2-py3-none-any.whl size=1595684 sha256=6e1dcdddd15f175ed8ef3d40ef4598f536bc311f0ea2ef031868f8ba618a539c

Stored in directory: /home/HwHiAiUser/.cache/pip/wheels/38/4a/65/6672923cb40a07330ab25e088dd6db86999907ababe8b8c398

Building wheel for psutil (pyproject.toml) ... done

Created wheel for psutil: filename=psutil-5.9.8-cp310-abi3-linux_aarch64.whl size=242067 sha256=636376e201c03ba1304b856b693430eeeb19c912aa7427e15d8f71a0ddb717bd

Stored in directory: /home/HwHiAiUser/.cache/pip/wheels/3e/93/d6/85cd469d2103627a9e38acdccc834a9997e77d2abe6da25c8b

Successfully built coremltools psutil

Installing collected packages: pytz, py-cpuinfo, openvino-telemetry, mpmath, libclang, flatbuffers, wrapt, urllib3, tzdata, typing-extensions, tqdm, termcolor, tensorflow-io-gcs-filesystem, tensorflow-estimator, tensorboard-data-server, sympy, six, pyyaml, pyparsing, pyasn1, psutil, protobuf, pillow, oauthlib, numpy, networkx, MarkupSafe, markdown, kiwisolver, keras, idna, grpcio, gast, fsspec, fonttools, filelock, exceptiongroup, cycler, charset-normalizer, certifi, cachetools, attrs, absl-py, werkzeug, tensorflow-hub, scipy, rsa, requests, python-dateutil, pyasn1-modules, pyaml, packaging, opt-einsum, opencv-python, onnx, jinja2, h5py, google-pasta, contourpy, cattrs, astunparse, torch, requests-oauthlib, pandas, openvino, matplotlib, google-auth, coremltools, torchvision, thop, seaborn, google-auth-oauthlib, ultralytics, tensorboard, tensorflow-cpu-aws, tensorflow, tensorflowjs

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

auto-tune 0.1.0 requires decorator, which is not installed.

op-compile-tool 0.1.0 requires getopt, which is not installed.

op-compile-tool 0.1.0 requires inspect, which is not installed.

op-compile-tool 0.1.0 requires multiprocessing, which is not installed.

opc-tool 0.1.0 requires decorator, which is not installed.

schedule-search 0.0.1 requires decorator, which is not installed.

te 0.4.0 requires cloudpickle, which is not installed.

te 0.4.0 requires decorator, which is not installed.

te 0.4.0 requires synr==0.5.0, which is not installed.

te 0.4.0 requires tornado, which is not installed.

Successfully installed MarkupSafe-2.1.5 absl-py-2.1.0 astunparse-1.6.3 attrs-23.2.0 cachetools-5.3.3 cattrs-23.2.3 certifi-2024.2.2 charset-normalizer-3.3.2 contourpy-1.2.1 coremltools-7.2 cycler-0.12.1 exceptiongroup-1.2.1 filelock-3.14.0 flatbuffers-24.3.25 fonttools-4.51.0 fsspec-2024.5.0 gast-0.4.0 google-auth-2.29.0 google-auth-oauthlib-1.0.0 google-pasta-0.2.0 grpcio-1.64.0 h5py-3.10.0 idna-3.7 jinja2-3.1.4 keras-2.13.1 kiwisolver-1.4.5 libclang-18.1.1 markdown-3.6 matplotlib-3.9.0 mpmath-1.3.0 networkx-3.3 numpy-1.23.5 oauthlib-3.2.2 onnx-1.16.0 opencv-python-4.9.0.80 openvino-2024.1.0 openvino-telemetry-2024.1.0 opt-einsum-3.3.0 packaging-20.9 pandas-2.2.2 pillow-10.3.0 protobuf-3.20.3 psutil-5.9.8 py-cpuinfo-9.0.0 pyaml-24.4.0 pyasn1-0.6.0 pyasn1-modules-0.4.0 pyparsing-3.1.2 python-dateutil-2.9.0.post0 pytz-2024.1 pyyaml-6.0.1 requests-2.32.2 requests-oauthlib-2.0.0 rsa-4.9 scipy-1.13.0 seaborn-0.13.2 six-1.16.0 sympy-1.12 tensorboard-2.13.0 tensorboard-data-server-0.7.2 tensorflow-2.13.1 tensorflow-cpu-aws-2.13.1 tensorflow-estimator-2.13.0 tensorflow-hub-0.12.0 tensorflow-io-gcs-filesystem-0.37.0 tensorflowjs-3.18.0 termcolor-2.4.0 thop-0.1.1.post2209072238 torch-2.1.2 torchvision-0.16.2 tqdm-4.66.4 typing-extensions-4.5.0 tzdata-2024.1 ultralytics-8.2.19 urllib3-2.2.1 werkzeug-3.0.3 wrapt-1.16.0

The installation ends with a few dependency-conflict errors, but a quick test shows that it runs anyway:

Python 3.10.14 (main, May 6 2024, 19:36:58) [GCC 11.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from ultralytics import YOLO

>>> model=YOLO(r'/data/yolov8n.pt')

>>> model.info()

YOLOv8n summary: 225 layers, 3157200 parameters, 0 gradients, 8.9 GFLOPs

(225, 3157200, 0, 8.8575488)

>>> exit()

Pytorch pt 格式直接推理

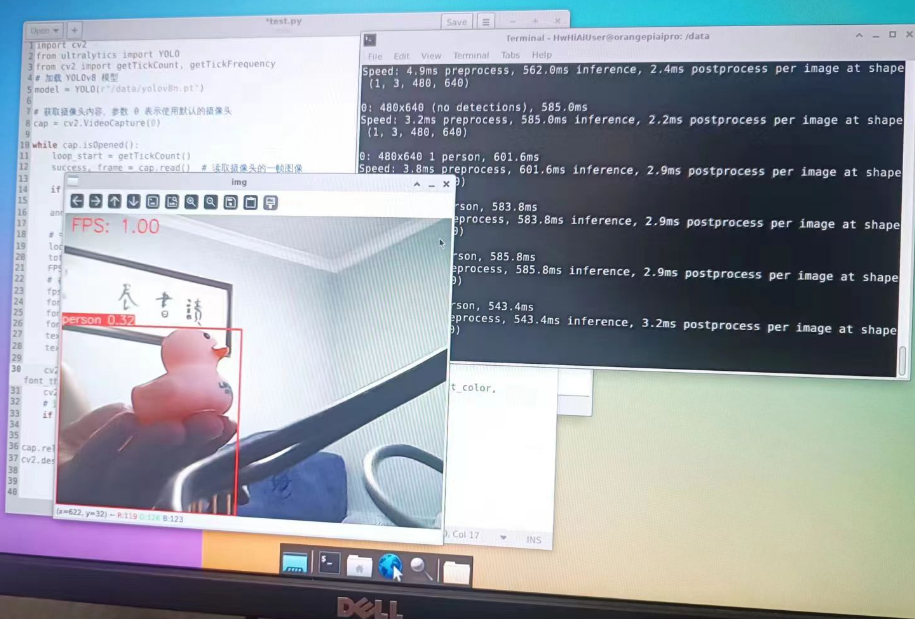

我们找一段程序,使用yolov8 进行目标检测推理,并在屏幕上显示FPS

import cv2
from ultralytics import YOLO

# Load the YOLOv8 model
model = YOLO("weights/yolov8s.pt")

# Open the camera; index 0 selects the default camera
cap = cv2.VideoCapture(0)

while cap.isOpened():
    loop_start = cv2.getTickCount()
    success, frame = cap.read()  # read one frame from the camera
    if not success:
        break  # stop when no frame can be read

    results = model.predict(source=frame)  # run object detection on the current frame
    annotated_frame = results[0].plot()  # draw the detections on the frame

    # Put your own display logic here

    # Time for this iteration, converted from ticks to seconds
    loop_time = cv2.getTickCount() - loop_start
    total_time = loop_time / cv2.getTickFrequency()
    fps = 1 / total_time

    # Draw the FPS text in the top-left corner of the image
    fps_text = f"FPS: {fps:.2f}"
    font = cv2.FONT_HERSHEY_SIMPLEX
    font_scale = 1
    font_thickness = 2
    text_color = (0, 0, 255)  # red (BGR)
    text_position = (10, 30)  # top-left corner
    cv2.putText(annotated_frame, fps_text, text_position, font, font_scale, text_color, font_thickness)
    cv2.imshow("img", annotated_frame)

    # Press 'q' to exit the loop
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()  # release the camera
cv2.destroyAllWindows()  # close the OpenCV windows
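The FPS computed above comes from a single frame, so the on-screen number jumps around. One possible refinement, which is not part of the original program, is to average over the last few frames. `FpsMeter` below is a hypothetical helper for that purpose.

```python
from collections import deque


class FpsMeter:
    """Moving-average FPS over the last `window` frame intervals."""

    def __init__(self, window: int = 30):
        # window intervals need window + 1 timestamps
        self.timestamps = deque(maxlen=window + 1)

    def tick(self, now: float) -> float:
        """Record a frame timestamp in seconds and return the smoothed FPS."""
        self.timestamps.append(now)
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        if elapsed <= 0:
            return 0.0
        return (len(self.timestamps) - 1) / elapsed


# Simulated timestamps: one frame every 0.5 s.
meter = FpsMeter(window=3)
for t in (0.0, 0.5, 1.0, 1.5, 2.0):
    fps = meter.tick(t)
print(f"{fps:.2f}")
```

In the capture loop you would call `meter.tick(time.perf_counter())` once per frame and draw the returned value instead of the per-frame figure.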

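As you can see, inference on the CPU is slow: only about 1 frame per second.

Inference with the NCNN format

Next, to speed up inference, let's see what NCNN can do for little effort. The conclusion up front: going by the official documentation, we should see at least a roughly 5x speedup.

The process has three steps:

0. Set up ncnn

First, install it:

pip install ncnn

If you instead let ultralytics install ncnn on the fly during export, it tends to fail, as the following session shows: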

(yolov8) HwHiAiUser@orangepiaipro:/data$ python

Python 3.10.14 (main, May 6 2024, 19:36:58) [GCC 11.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from ultralytics import YOLO

>>> model = YOLO("yolov8n.pt")

>>> model.export(format="ncnn")

Ultralytics YOLOv8.2.19 🚀 Python-3.10.14 torch-2.1.2 CPU (aarch64)

YOLOv8n summary (fused): 168 layers, 3151904 parameters, 0 gradients, 8.7 GFLOPs

PyTorch: starting from 'yolov8n.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 84, 8400) (6.2 MB)

TorchScript: starting export with torch 2.1.2...

TorchScript: export success ✅ 7.6s, saved as 'yolov8n.torchscript' (12.4 MB)

requirements: Ultralytics requirement ['ncnn'] not found, attempting AutoUpdate...

ERROR: Exception:

Traceback (most recent call last):

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 438, in _error_catcher

yield

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 561, in read

data = self._fp_read(amt) if not fp_closed else b""

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 527, in _fp_read

return self._fp.read(amt) if amt is not None else self._fp.read()

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/http/client.py", line 466, in read

s = self.fp.read(amt)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/socket.py", line 705, in readinto

return self._sock.recv_into(b)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/ssl.py", line 1307, in recv_into

return self.read(nbytes, buffer)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/ssl.py", line 1163, in read

return self._sslobj.read(len, buffer)

TimeoutError: The read operation timed out

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/cli/base_command.py", line 180, in exc_logging_wrapper

status = run_func(*args)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/cli/req_command.py", line 245, in wrapper

return func(self, options, args)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/commands/install.py", line 377, in run

requirement_set = resolver.resolve(

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/resolution/resolvelib/resolver.py", line 179, in resolve

self.factory.preparer.prepare_linked_requirements_more(reqs)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/operations/prepare.py", line 552, in prepare_linked_requirements_more

self._complete_partial_requirements(

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/operations/prepare.py", line 467, in _complete_partial_requirements

for link, (filepath, _) in batch_download:

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/network/download.py", line 183, in __call__

for chunk in chunks:

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/cli/progress_bars.py", line 53, in _rich_progress_bar

for chunk in iterable:

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_internal/network/utils.py", line 63, in response_chunks

for chunk in response.raw.stream(

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 622, in stream

data = self.read(amt=amt, decode_content=decode_content)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 560, in read

with self._error_catcher():

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/contextlib.py", line 153, in __exit__

self.gen.throw(typ, value, traceback)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/pip/_vendor/urllib3/response.py", line 443, in _error_catcher

raise ReadTimeoutError(self._pool, None, "Read timed out.")

pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out.

Retry 1/2 failed: Command 'pip install --no-cache-dir "ncnn" ' returned non-zero exit status 2.

requirements: AutoUpdate success ✅ 149.0s, installed 1 package: ['ncnn']

requirements: ⚠️ Restart runtime or rerun command for updates to take effect

NCNN: export failure ❌ 149.0s: No module named 'ncnn'

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/engine/model.py", line 602, in export

return Exporter(overrides=args, _callbacks=self.callbacks)(model=self.model)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 320, in __call__

f[11], _ = self.export_ncnn()

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 142, in outer_func

raise e

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 137, in outer_func

f, model = inner_func(*args, **kwargs)

File "/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 534, in export_ncnn

import ncnn # noqa

ModuleNotFoundError: No module named 'ncnn'

1. Export to NCNN, which downloads the pnnx binary

https://github.com/pnnx/pnnx/releases/download/20240410/pnnx-20240410-linux-aarch64.zip

>>> from ultralytics import YOLO

>>> model = YOLO("yolov8n.pt")

>>> model.export(format="ncnn")

Ultralytics YOLOv8.2.19 🚀 Python-3.10.14 torch-2.1.2 CPU (aarch64)

YOLOv8n summary (fused): 168 layers, 3151904 parameters, 0 gradients, 8.7 GFLOPs

PyTorch: starting from 'yolov8n.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 84, 8400) (6.2 MB)

TorchScript: starting export with torch 2.1.2...

TorchScript: export success ✅ 7.2s, saved as 'yolov8n.torchscript' (12.4 MB)

NCNN: starting export with NCNN 1.0.20240410...

NCNN: WARNING ⚠️ PNNX not found. Attempting to download binary file from https://github.com/pnnx/pnnx/.

Note PNNX Binary file must be placed in current working directory or in /home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics. See PNNX repo for full installation instructions.

NCNN: successfully found latest PNNX asset file pnnx-20240410-linux-aarch64.zip

Downloading https://github.com/pnnx/pnnx/releases/download/20240410/pnnx-20240410-linux-aarch64.zip to 'pnnx-20240410-linux-aarch64.zip'...
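If the automatic download keeps stalling, the note in the log suggests an alternative: fetch the zip yourself and place the `pnnx` binary in the current working directory or in the ultralytics package directory. The following is a minimal sketch of the unpacking step. `install_pnnx` is a hypothetical helper, and it assumes the archive contains an executable file named `pnnx` somewhere inside it.

```python
import stat
import zipfile
from pathlib import Path


def install_pnnx(zip_path: str, target_dir: str) -> Path:
    """Extract the `pnnx` executable from a release zip into target_dir."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        # Look for a regular file called exactly "pnnx" at any depth.
        members = [
            name for name in archive.namelist()
            if Path(name).name == "pnnx" and not name.endswith("/")
        ]
        if not members:
            raise FileNotFoundError("no 'pnnx' binary found in the archive")
        destination = target / "pnnx"
        destination.write_bytes(archive.read(members[0]))
    # Reading the member as bytes drops permission bits, so mark it executable.
    mode = destination.stat().st_mode
    destination.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return destination
```

For example, after downloading the release file by hand, `install_pnnx("pnnx-20240410-linux-aarch64.zip", ".")` would drop the binary into the current directory before you rerun the export.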

The last attempt succeeded. As you can see, the export produces a folder:

Python 3.10.14 (main, May 6 2024, 19:36:58) [GCC 11.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from ultralytics import YOLO

>>> model = YOLO("yolov8n.pt")

>>> model.export(format="ncnn")

Ultralytics YOLOv8.2.19 🚀 Python-3.10.14 torch-2.1.2 CPU (aarch64)

YOLOv8n summary (fused): 168 layers, 3151904 parameters, 0 gradients, 8.7 GFLOPs

PyTorch: starting from 'yolov8n.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 84, 8400) (6.2 MB)

TorchScript: starting export with torch 2.1.2...

TorchScript: export success ✅ 7.3s, saved as 'yolov8n.torchscript' (12.4 MB)

NCNN: starting export with NCNN 1.0.20240410...

NCNN: WARNING ⚠️ PNNX not found. Attempting to download binary file from https://github.com/pnnx/pnnx/.

Note PNNX Binary file must be placed in current working directory or in /home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics. See PNNX repo for full installation instructions.

NCNN: successfully found latest PNNX asset file pnnx-20240410-linux-aarch64.zip

Unzipping pnnx-20240410-linux-aarch64.zip to /data/pnnx-20240410-linux-aarch64...: 100%|██████████| 3/3 [00:00<00:00, 5.47file/s]

NCNN: running '/home/HwHiAiUser/.conda/envs/yolov8/lib/python3.10/site-packages/ultralytics/pnnx yolov8n.torchscript ncnnparam=yolov8n_ncnn_model/model.ncnn.param ncnnbin=yolov8n_ncnn_model/model.ncnn.bin ncnnpy=yolov8n_ncnn_model/model_ncnn.py pnnxparam=yolov8n_ncnn_model/model.pnnx.param pnnxbin=yolov8n_ncnn_model/model.pnnx.bin pnnxpy=yolov8n_ncnn_model/model_pnnx.py pnnxonnx=yolov8n_ncnn_model/model.pnnx.onnx fp16=0 device=cpu inputshape="[1, 3, 640, 640]"'

pnnxparam = yolov8n_ncnn_model/model.pnnx.param

pnnxbin = yolov8n_ncnn_model/model.pnnx.bin

pnnxpy = yolov8n_ncnn_model/model_pnnx.py

pnnxonnx = yolov8n_ncnn_model/model.pnnx.onnx

ncnnparam = yolov8n_ncnn_model/model.ncnn.param

ncnnbin = yolov8n_ncnn_model/model.ncnn.bin

ncnnpy = yolov8n_ncnn_model/model_ncnn.py

fp16 = 0

optlevel = 2

device = cpu

inputshape = [1,3,640,640]f32

inputshape2 =

customop =

moduleop =

############# pass_level0

inline module = ultralytics.nn.modules.block.Bottleneck

inline module = ultralytics.nn.modules.block.C2f

inline module = ultralytics.nn.modules.block.DFL

inline module = ultralytics.nn.modules.block.SPPF

inline module = ultralytics.nn.modules.conv.Concat

inline module = ultralytics.nn.modules.conv.Conv

inline module = ultralytics.nn.modules.head.Detect

inline module = ultralytics.nn.modules.block.Bottleneck

inline module = ultralytics.nn.modules.block.C2f

inline module = ultralytics.nn.modules.block.DFL

inline module = ultralytics.nn.modules.block.SPPF

inline module = ultralytics.nn.modules.conv.Concat

inline module = ultralytics.nn.modules.conv.Conv

inline module = ultralytics.nn.modules.head.Detect

----------------

############# pass_level1

############# pass_level2

############# pass_level3

############# pass_level4

############# pass_level5

############# pass_ncnn

NCNN: export success ✅ 6.5s, saved as 'yolov8n_ncnn_model' (12.2 MB)

Export complete (16.2s)

Results saved to /data

Predict: yolo predict task=detect model=yolov8n_ncnn_model imgsz=640

Validate: yolo val task=detect model=yolov8n_ncnn_model imgsz=640 data=coco.yaml

Visualize: https://netron.app

'yolov8n_ncnn_model'

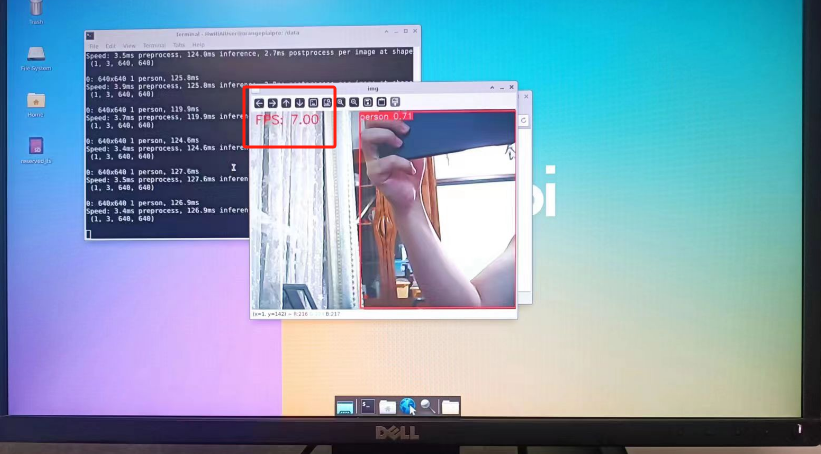

Inference result: NCNN improves inference performance by 5-10x. Running the same code as above, we now reach 7 FPS.

2. Run inference

from ultralytics import YOLO

# Load a YOLOv8n PyTorch model

model = YOLO("yolov8n.pt")

# Export the model to NCNN format

model.export(format="ncnn") # creates 'yolov8n_ncnn_model'

# Load the exported NCNN model

ncnn_model = YOLO("yolov8n_ncnn_model")

# Run inference

results = ncnn_model("https://ultralytics.com/images/bus.jpg")
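To compare the PyTorch and NCNN backends on equal terms, it helps to time many calls and skip the first few, which include one-off setup costs. The sketch below shows one way to do this. `average_fps` is a hypothetical helper, and the demo uses a stand-in workload so that it runs without a model.

```python
import time
from typing import Callable


def average_fps(infer: Callable[[], object], runs: int = 20, warmup: int = 3) -> float:
    """Average calls per second of `infer`, measured after `warmup` untimed calls."""
    for _ in range(warmup):
        infer()
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    elapsed = time.perf_counter() - start
    return runs / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Stand-in workload; on the board, pass something like
    # lambda: ncnn_model(frame, verbose=False) instead.
    fps = average_fps(lambda: sum(range(10_000)))
    print(f"{fps:.1f} calls per second")
```

Measuring both `model` and `ncnn_model` this way on the same frame gives a speedup ratio that is less sensitive to camera and display overhead than the on-screen FPS counter.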

With just these simple steps we already get near-real-time object detection. This board's CPU performance is quite decent!

Can the Orange Pi AIpro's NPU be used for inference?

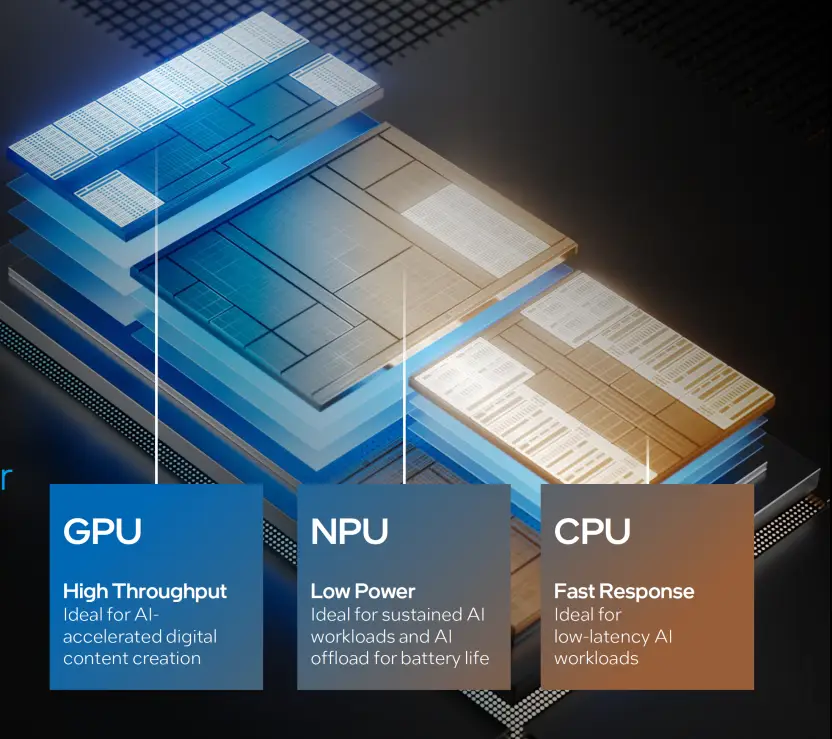

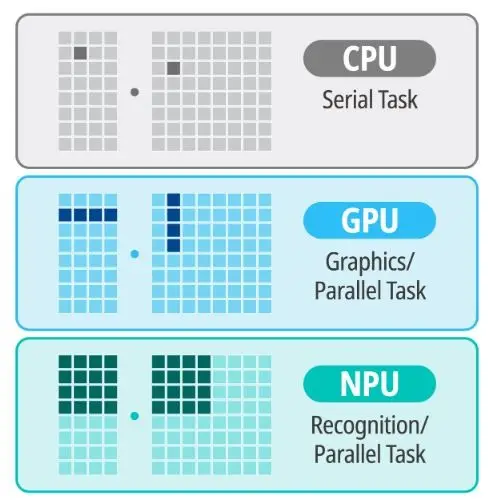

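What is an NPU?

NPU is short for neural processing unit, a processor built for neural network workloads. It uses purpose-designed hardware to execute the mathematical operations at the heart of neural network algorithms, such as matrix multiplication and convolution. These are the core operations of neural network training and inference. Because they are optimized at the hardware level, an NPU can perform them with lower energy consumption and higher efficiency.

Compared with CPUs and GPUs, NPUs have clear advantages in the following areas:

- Performance: NPUs are optimized specifically for AI computation and can deliver higher compute performance on those workloads.

- Energy efficiency: NPUs typically consume less energy than CPUs and GPUs when running AI tasks.

- Area efficiency: NPUs are compact by design and provide efficient compute within a limited footprint.

- Dedicated hardware acceleration: NPUs usually include specialized accelerators, such as tensor and convolution accelerators, that significantly speed up AI tasks.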

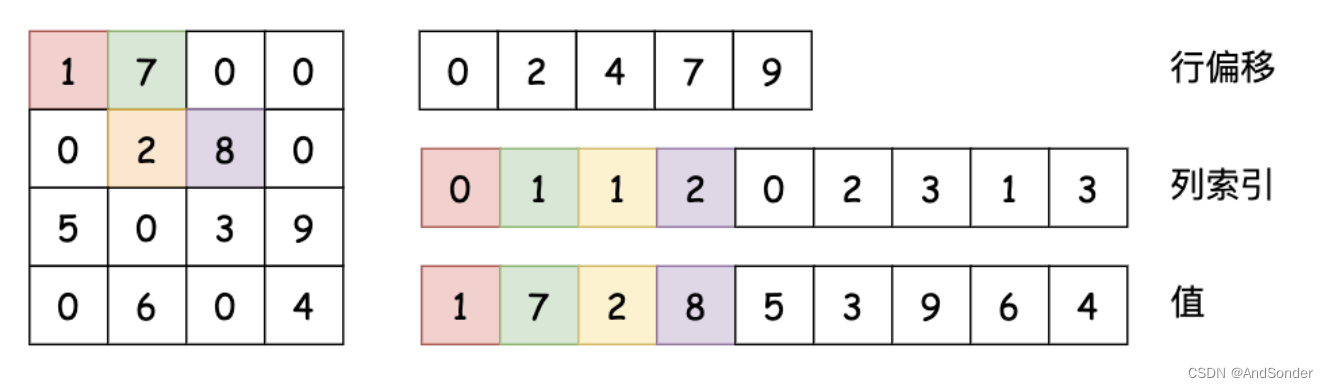

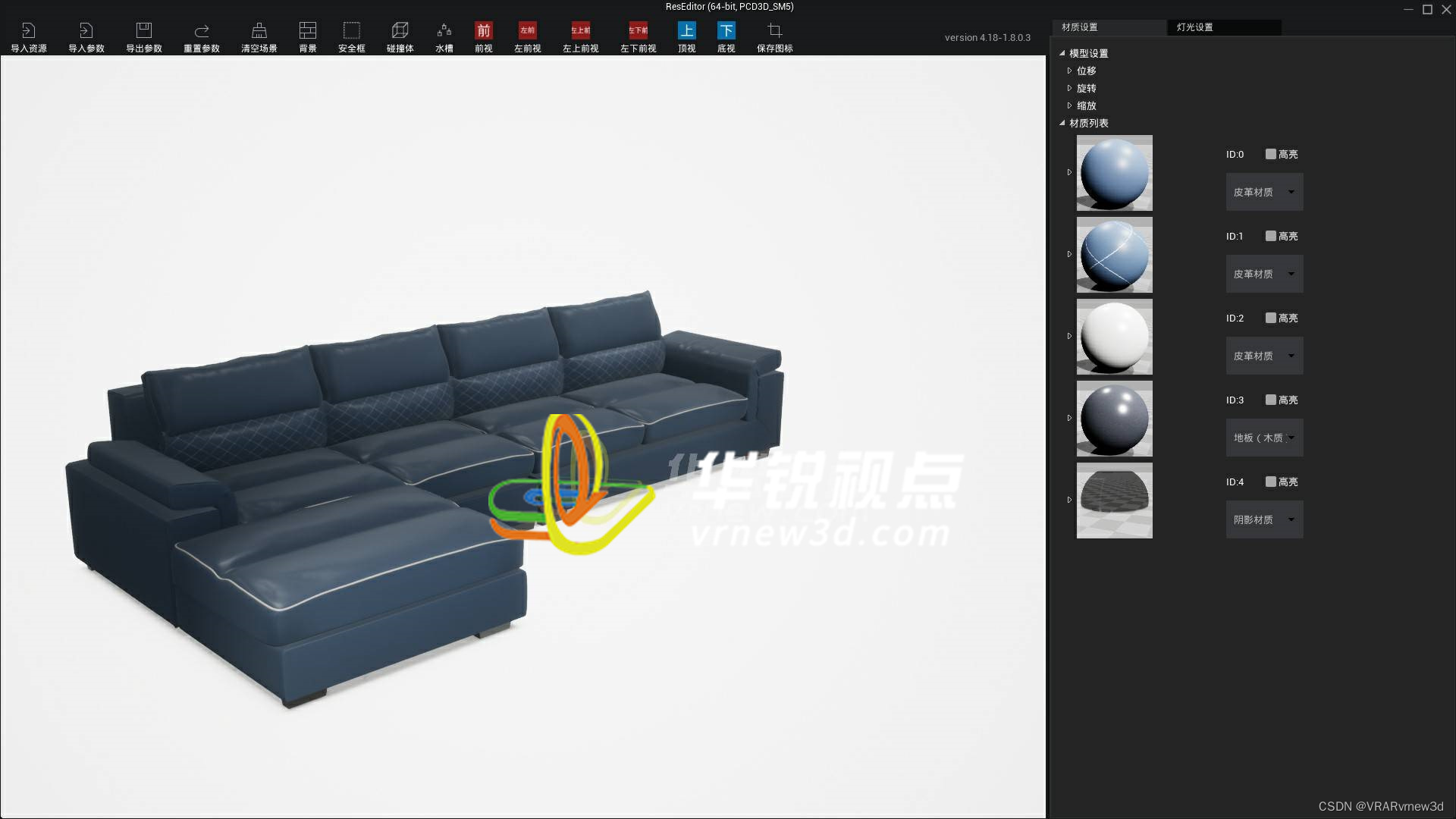

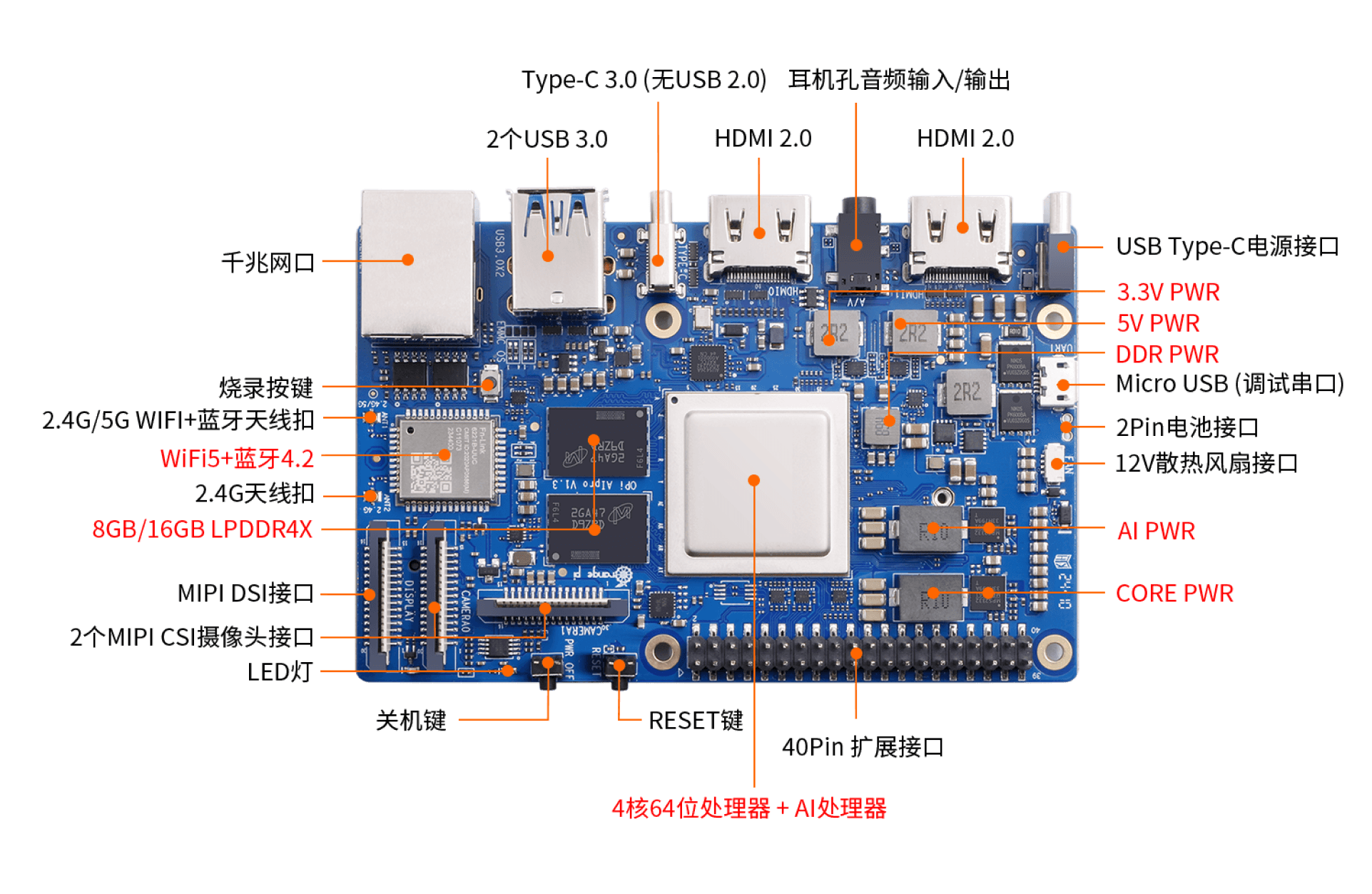

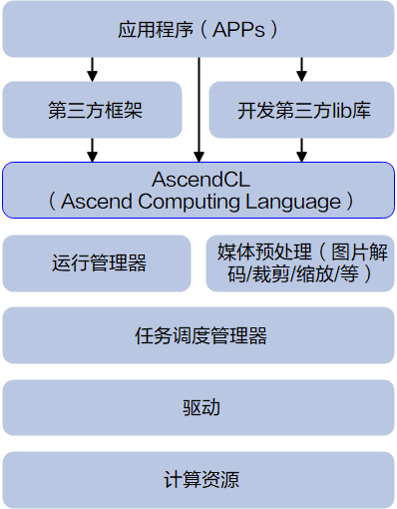

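Model development workflow

The workflow for developing an AI inference application on the Orange Pi AIpro [1] is shown in the figure below. To tap into the Orange Pi AIpro's NPU, it looks like we need some knowledge of AscendCL!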

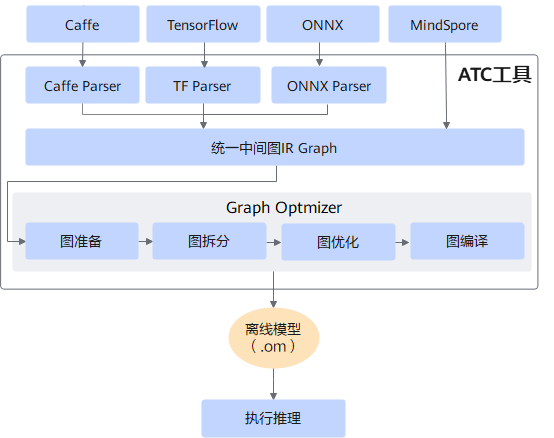

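Model conversion

Converting an open-source framework model into an Ascend model on the Orange Pi AIpro [2] is done with the ATC tool. ATC is the conversion step that produces the offline model, which is then loaded and run through AscendCL, shown in the figure above.

According to that figure, in most cases we only need to convert the model and then reuse existing inference code.

Here is a reference project:

- https://gitee.com/ascend/EdgeAndRobotics/tree/master/Samples/YOLOV5USBCamera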
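For YOLOv8, the conversion step might look like the sketch below, which I have not run on the board. It assumes the model was first exported to ONNX with `YOLO("yolov8n.pt").export(format="onnx")`, that the ONNX input is named `images`, and that `Ascend310B4` is the right `soc_version` for this board (check the chip name that `npu-smi info` reports). `atc_command` is a hypothetical helper that only builds the argument list.

```python
def atc_command(
    onnx_path: str,
    output_stem: str,
    soc_version: str = "Ascend310B4",      # assumption: verify with `npu-smi info`
    input_name: str = "images",            # assumption: default ultralytics ONNX input name
    input_shape: tuple = (1, 3, 640, 640),
) -> list[str]:
    """Build an ATC invocation that converts an ONNX model to an offline .om model."""
    shape = ",".join(str(dim) for dim in input_shape)
    return [
        "atc",
        f"--model={onnx_path}",
        "--framework=5",                   # 5 selects ONNX as the source framework
        f"--output={output_stem}",         # ATC appends the .om extension
        f"--input_shape={input_name}:{shape}",
        f"--soc_version={soc_version}",
    ]


if __name__ == "__main__":
    print(" ".join(atc_command("yolov8n.onnx", "yolov8n")))
```

The printed command can be pasted into a shell on the board once the CANN environment is set up. The resulting `.om` file is what AscendCL-based inference code, such as the sample above, would load.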

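Clearly, running YOLOv8 inference on the Orange Pi AIpro's NPU is also feasible, and it should bring an even larger speedup.

Time is limited, so I will not go further here. Stay tuned for the next installment!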

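Conclusion

The Orange Pi AIpro, a next-generation Chinese-made compact edge development board with an onboard NPU, offers impressive compute. With computer vision becoming ever more widespread, edge devices like this can take on behavior analysis and event alerting directly, which opens up more possibilities for businesses and individual creators alike.

Next, I plan to study this board properly and try deploying large language models and multimodal models on it. I hope our ecosystem will one day rival Ollama and let us deploy a large-model application with a single command, opening up endless possibilities!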

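References

Official site: Orange-Pi-AIpro

User manual (no online version; download from): http://www.orangepi.cn/html/hardWare/computerAndMicrocontrollers/service-and-support/Orange-Pi-AIpro.html

Official reference links

- https://semiengineering.com/tops-memory-throughput-and-inference-efficiency/

- Ascend community: technical articles

- Orange Pi: quick-start guide

- Orange Pi: learning resource index

- Huawei Ascend: AI marketplace application cases

- Huawei Ascend forum: Orange Pi

Boot error reference:

- https://blog.csdn.net/snmper/article/details/136161555

Advanced guides

- Deploying large language models

- NPU command reference guide

Footnotes

1. How to develop AI inference applications on the Orange Pi AIpro

2. Converting open-source framework models into Ascend models on the Orange Pi AIpro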