I. Prerequisites

- HBase service: [Quick Deployment] 023_HBase (2.3.6)

- Development environment: Java (1.8), Maven (3), and an IDE (IDEA or Eclipse)

II. Code

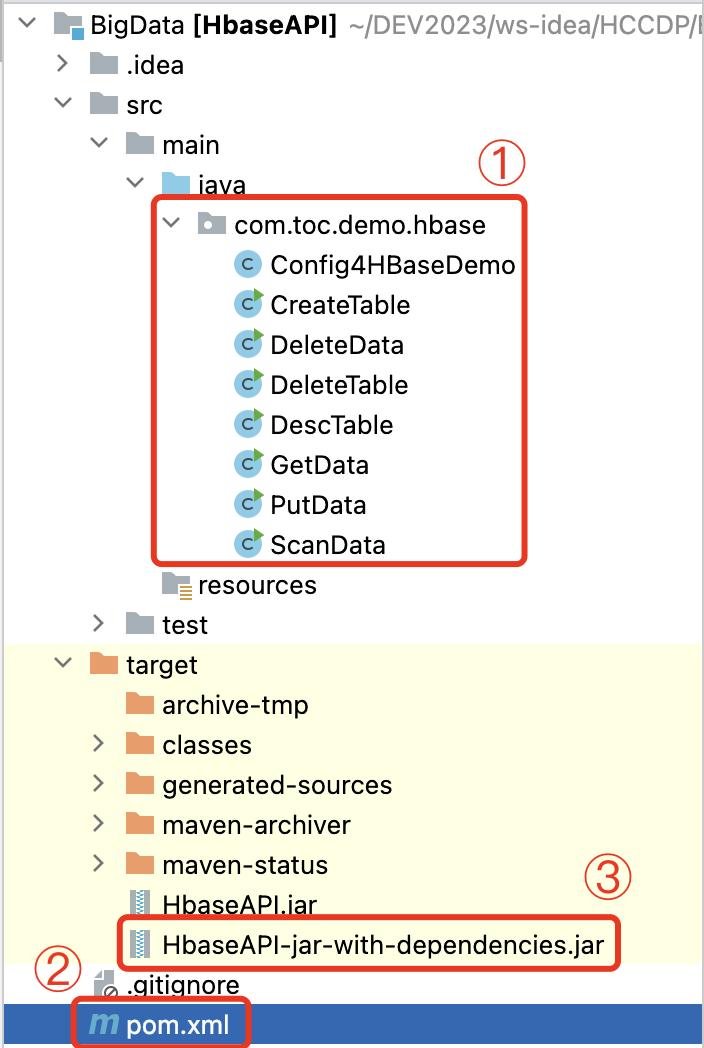

The code structure is shown at ① and ② in the figure above.

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.huawei</groupId>

<artifactId>HbaseAPI</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<repositories>

<repository>

<id>huaweicloud2</id>

<name>huaweicloud2</name>

<url>https://mirrors.huaweicloud.com/repository/maven/</url>

</repository>

<repository>

<id>huaweicloud1</id>

<name>huaweicloud1</name>

<url>https://repo.huaweicloud.com/repository/maven/huaweicloudsdk/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.8.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.8.3</version>

</dependency>

<!--hbase-->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.4.13</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.4.13</version>

</dependency>

</dependencies>

<build>

<finalName>HbaseAPI</finalName>

<plugins>

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

Config4HBaseDemo (shared configuration class)

package com.toc.demo.hbase;

/**

* Shared configuration class

* @author cxy@toc

* @date 2024-05-07

*

*/

public class Config4HBaseDemo {

public static String zkQuorum = "127.0.0.1";

public static String getZkQuorum(String[] args) {

if (args!=null && args.length > 0) {

System.out.println("Received argument: " + args[0]);

zkQuorum = args[0];

}

return zkQuorum;

}

}

CreateTable (create an HBase table)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Admin;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import java.io.IOException;

/**

* Create an HBase table

* @author cxy@toc

* @date 2024-05-07

*

*/

public class CreateTable {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Admin and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Admin admin = connection.getAdmin()) {

TableName tableName = TableName.valueOf("users");

if (!admin.tableExists(tableName)) {

// Create the table descriptor with a single column family "f"

HTableDescriptor htd = new HTableDescriptor(tableName);

htd.addFamily(new HColumnDescriptor("f"));

admin.createTable(htd);

System.out.println("Table " + tableName + " created");

} else {

System.out.println("Table " + tableName + " already exists");

}

}

}

}

DeleteData (delete data)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

/**

* Delete data

* @author cxy@toc

* @date 2024-05-07

*

*/

public class DeleteData {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Table and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Table hTable = connection.getTable(TableName.valueOf("users"))) {

Delete delete = new Delete(Bytes.toBytes("row5"));

// Delete the latest version of column f:id

delete.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"));

// Delete the whole column family "f", which removes all data stored in row5

delete.addFamily(Bytes.toBytes("f"));

hTable.delete(delete);

System.out.println("Delete succeeded");

}

}

}

DeleteTable (delete a table)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Admin;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import java.io.IOException;

/**

* Delete a table

* @author cxy@toc

* @date 2024-05-07

*

*/

public class DeleteTable {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Admin and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Admin hBaseAdmin = connection.getAdmin()) {

TableName tableName = TableName.valueOf("users");

if (hBaseAdmin.tableExists(tableName)) {

// A table must be disabled before it can be deleted, so disable it if it is still enabled

if (hBaseAdmin.isTableEnabled(tableName)) {

hBaseAdmin.disableTable(tableName);

}

hBaseAdmin.deleteTable(tableName);

System.out.println("Table " + tableName + " deleted");

} else {

System.out.println("Table " + tableName + " does not exist");

}

}

}

}

DescTable (describe the table schema)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import java.io.IOException;

/**

* Describe the table schema

* @author cxy@toc

* @date 2024-05-07

*

*/

public class DescTable {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Admin and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Admin hBaseAdmin = connection.getAdmin()) {

TableName tableName = TableName.valueOf("users");

if (hBaseAdmin.tableExists(tableName)) {

HTableDescriptor htd = hBaseAdmin.getTableDescriptor(tableName);

System.out.println("Schema of table " + tableName + ":");

System.out.println(htd);

} else {

System.out.println("Table " + tableName + " does not exist");

}

}

}

}

GetData (read data)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

/**

* Read data

* @author cxy@toc

* @date 2024-05-07

*

*/

public class GetData {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Table and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Table hTable = connection.getTable(TableName.valueOf("users"))) {

Get get = new Get(Bytes.toBytes("row1"));

Result result = hTable.get(get);

byte[] family = Bytes.toBytes("f");

byte[] buf = result.getValue(family, Bytes.toBytes("id"));

System.out.println("id=" + Bytes.toString(buf));

// PutData writes age as an int, so decode it with Bytes.toInt rather than Bytes.toString

buf = result.getValue(family, Bytes.toBytes("age"));

System.out.println("age=" + Bytes.toInt(buf));

buf = result.getValue(family, Bytes.toBytes("name"));

System.out.println("name=" + Bytes.toString(buf));

buf = result.getRow();

System.out.println("rowkey=" + Bytes.toString(buf));

}

}

}
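HBase stores every value as a raw byte array, which is why GetData decodes `age` with `Bytes.toInt` while the other columns use `Bytes.toString`: PutData writes `age` as an int (4 big-endian bytes) and everything else as text. The sketch below mimics that behaviour with the JDK only, so it runs without a cluster. The class `ByteEncodingSketch` and its method names are made up for illustration and are not part of the HBase API.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical stand-in for the int/String conversions used in PutData and GetData.
final class ByteEncodingSketch {

    // Encodes an int as 4 big-endian bytes (ByteBuffer's default byte order).
    static byte[] intToBytes(int value) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(value).array();
    }

    // Decodes 4 big-endian bytes back into an int.
    static int bytesToInt(byte[] bytes) {
        return ByteBuffer.wrap(bytes).getInt();
    }

    // Encodes text as UTF-8 bytes.
    static byte[] stringToBytes(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }

    // Decodes UTF-8 bytes back into text.
    static String bytesToString(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] age = intToBytes(27);
        System.out.println(Arrays.toString(age));               // [0, 0, 0, 27]
        System.out.println(bytesToInt(age));                    // 27
        System.out.println(bytesToString(stringToBytes("1")));  // 1
        // Decoding the int bytes as text yields control characters, not "27".
        System.out.println(bytesToString(age).equals("27"));    // false
    }
}
```

The practical rule is to read a column with the same type it was written with; mixing the two does not fail loudly, it just prints unreadable output.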

PutData (insert data)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

/**

* Insert data

* @author cxy@toc

* @date 2024-05-07

*

*/

public class PutData {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Table and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Table hTable = connection.getTable(TableName.valueOf("users"))) {

// Insert a single row

Put put = new Put(Bytes.toBytes("row1"));

put.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"), Bytes.toBytes("1"));

put.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("张三"));

// age is written as an int, so it must be read back with Bytes.toInt

put.addColumn(Bytes.toBytes("f"), Bytes.toBytes("age"), Bytes.toBytes(27));

put.addColumn(Bytes.toBytes("f"), Bytes.toBytes("phone"), Bytes.toBytes("18600000000"));

put.addColumn(Bytes.toBytes("f"), Bytes.toBytes("email"), Bytes.toBytes("123654@163.com"));

hTable.put(put);

// Insert several rows in one batch

Put put1 = new Put(Bytes.toBytes("row2"));

put1.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"), Bytes.toBytes("2"));

put1.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("李四"));

Put put2 = new Put(Bytes.toBytes("row3"));

put2.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"), Bytes.toBytes("3"));

put2.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("王五"));

Put put3 = new Put(Bytes.toBytes("row4"));

put3.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"), Bytes.toBytes("4"));

put3.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("赵六"));

List<Put> list = new ArrayList<>();

list.add(put1);

list.add(put2);

list.add(put3);

hTable.put(list);

// checkAndPut applies put4 only if the check passes. The expected value is null,

// so the check passes only while f:id does not yet exist in row5.

// The row key being checked must be the same as the row key of the Put.

Put put4 = new Put(Bytes.toBytes("row5"));

put4.addColumn(Bytes.toBytes("f"), Bytes.toBytes("id"), Bytes.toBytes("5"));

boolean applied = hTable.checkAndPut(Bytes.toBytes("row5"), Bytes.toBytes("f"), Bytes.toBytes("id"), null, put4);

System.out.println("Insert finished; checkAndPut applied: " + applied);

}

}

}
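The `checkAndPut` call in PutData passes `null` as the expected value, which means "write only if the column does not exist yet". Running PutData a second time therefore leaves row5 untouched. Here is a minimal JDK-only sketch of that compare-then-write behaviour, assuming a single in-memory map in place of a table. `CheckAndPutSketch` and the `"row5/f:id"` key format are invented for illustration; this is not how HBase implements the operation.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory model of a conditional write.
final class CheckAndPutSketch {

    private final Map<String, String> cells = new ConcurrentHashMap<>();

    // Writes newValue only if the current value equals expected.
    // expected == null means "the cell must not exist yet".
    boolean checkAndPut(String cellKey, String expected, String newValue) {
        if (expected == null) {
            return cells.putIfAbsent(cellKey, newValue) == null;
        }
        return cells.replace(cellKey, expected, newValue);
    }

    String get(String cellKey) {
        return cells.get(cellKey);
    }

    public static void main(String[] args) {
        CheckAndPutSketch table = new CheckAndPutSketch();
        System.out.println(table.checkAndPut("row5/f:id", null, "5")); // true: cell was absent
        System.out.println(table.checkAndPut("row5/f:id", null, "6")); // false: cell now exists
        System.out.println(table.checkAndPut("row5/f:id", "5", "6"));  // true: expected value matches
        System.out.println(table.get("row5/f:id"));                    // 6
    }
}
```

Both branches rely on atomic map operations, which mirrors the point of a conditional write: the check and the write happen as one step, so two concurrent writers cannot both succeed.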

ScanData (scan and iterate over data)

package com.toc.demo.hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.MultipleColumnPrefixFilter;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

import java.util.Iterator;

import java.util.Map;

import java.util.NavigableMap;

/**

* Scan and iterate over data

* @author cxy@toc

* @date 2024-05-07

*

*/

public class ScanData {

public static void main(String[] args) throws IOException {

// Connect to HBase

Configuration conf = HBaseConfiguration.create();

// Change the ZooKeeper address to that of your own cluster

conf.set("hbase.zookeeper.quorum", Config4HBaseDemo.getZkQuorum(args));

conf.set("hbase.zookeeper.property.clientPort", "2181");

// try-with-resources closes the Table and the Connection when done

try (Connection connection = ConnectionFactory.createConnection(conf);

Table hTable = connection.getTable(TableName.valueOf("users"))) {

Scan scan = new Scan();

// Set the row range: the start row is inclusive, the stop row is exclusive (row5 is not returned)

scan.withStartRow(Bytes.toBytes("row1"));

scan.withStopRow(Bytes.toBytes("row5"));

// Add a filter that keeps only columns whose qualifier starts with "id" or "name"

FilterList list = new FilterList(FilterList.Operator.MUST_PASS_ALL);

byte[][] prefixes = new byte[2][];

prefixes[0] = Bytes.toBytes("id");

prefixes[1] = Bytes.toBytes("name");

MultipleColumnPrefixFilter mcpf = new MultipleColumnPrefixFilter(prefixes);

list.addFilter(mcpf);

scan.setFilter(list);

// The scanner holds server-side resources, so close it as well

try (ResultScanner rs = hTable.getScanner(scan)) {

Iterator<Result> iter = rs.iterator();

while (iter.hasNext()) {

Result result = iter.next();

printResult(result);

}

}

}

}

/*

Print a Result object

*/

static void printResult(Result result){

System.out.println("***********"+Bytes.toString(result.getRow()));

NavigableMap<byte[],NavigableMap<byte[], NavigableMap<Long,byte[]>>> map = result.getMap();

for(Map.Entry<byte[],NavigableMap<byte[],NavigableMap<Long,byte[]>>> entry: map.entrySet()){

String family = Bytes.toString(entry.getKey());

for(Map.Entry<byte[],NavigableMap<Long,byte[]>> columnEntry :entry.getValue().entrySet()){

String column = Bytes.toString(columnEntry.getKey());

String value = "";

if("age".equals(column)){

value=""+Bytes.toInt(columnEntry.getValue().firstEntry().getValue());

}else {

value=""+Bytes.toString(columnEntry.getValue().firstEntry().getValue());

}

System.out.println(family+":"+column+":"+value);

}

}

}

}
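Two details of the scan in ScanData are easy to miss: the start row is inclusive while the stop row is exclusive, and row keys are compared as bytes, not as numbers. The following sketch reproduces both with a plain `TreeMap`, assuming ASCII row keys (for which Java's `String` ordering matches byte ordering). `ScanRangeSketch` and the extra key `row10` are hypothetical and only serve the illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical in-memory model of a row-range scan over sorted row keys.
final class ScanRangeSketch {

    // Returns the row keys in [startRow, stopRow): start inclusive, stop exclusive.
    static List<String> scan(TreeMap<String, String> rows, String startRow, String stopRow) {
        return new ArrayList<>(rows.subMap(startRow, true, stopRow, false).keySet());
    }

    public static void main(String[] args) {
        TreeMap<String, String> rows = new TreeMap<>();
        for (String key : new String[] {"row1", "row2", "row3", "row4", "row5", "row10"}) {
            rows.put(key, "...");
        }
        // row5 is excluded, and row10 sorts right after row1.
        System.out.println(scan(rows, "row1", "row5")); // [row1, row10, row2, row3, row4]
    }
}
```

If numeric order matters for your row keys, a common workaround is to zero-pad them (for example `row01`, `row10`) so that lexicographic and numeric order agree.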

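III. Usage

- Package with Maven

Eclipse: right-click the project, then Run As -> Maven Install

IDEA: Maven tool window -> Lifecycle -> install

The packaged jar is shown at ③ in the figure above.

- Upload the jar to the Hadoop (YARN) server

scp {your project path}/target/HbaseAPI-jar-with-dependencies.jar root@xxx.xxx.xxx.xxx:/root

- Log in to the server and check the uploaded file

ssh root@xxx.xxx.xxx.xxx

ls

- Run the commands and check the results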

# Replace {ZK_PRIVATE_IP} with the private IP of your ZooKeeper node. If ZooKeeper runs on the local machine, you can omit the argument; the default is 127.0.0.1

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.CreateTable {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.DescTable {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.PutData {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.GetData {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.ScanData {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.DeleteData {ZK_PRIVATE_IP}

yarn jar HbaseAPI-jar-with-dependencies.jar com.toc.demo.hbase.DeleteTable {ZK_PRIVATE_IP}

For more detailed steps, see the Huawei Cloud sandbox lab: https://lab.huaweicloud.com/experiment-detail_1779
