参考资料

-

Setup AWS ParallelCluster 3.0 with AWS Cloud9 200

-

HPC For Public Sector Customers 200

-

HPC pcluster workshop 200

-

Running CFD on AWS ParallelCluster at scale 400

-

Tutorial on how to run CFD on AWS ParallelCluster 400

-

Running CFD on AWS ParallelCluster at scale 400

-

Running WRF on AWS ParallelCluster 300

-

Slurm REST API, Accounting and Federation on AWS ParallelCluster 400

-

Running Fire Dynamics CFD Simulation on AWS ParallelCluster at scale 200

-

Spack Tutorial on AWS ParallelCluster

AWS ParallelCluster 是 AWS 支持的开源集群管理工具。它允许客户轻松入门,并在几分钟内更新和扩展 AWS Cloud 中的 HPC 集群环境。支持各种作业调度程序,如 AWS 批处理、 SGE、Torque和 Slurm(Amazon ParallelCluster 3. x 不支持 SGE 和 Torque 调度器),以方便作业提交

pcluster集群配置和创建

安装pcluster工具,需要依赖cdk生成cloudformation模板,因此需要预装node环境

virtualenv pvenv

source pvenv/bin/active

pip3 install --upgrade "aws-parallelcluster"

pcluster version

# pip install aws-parallelcluster --upgrade --user

生成集群配置

$ pcluster configure --config cluster-config.yaml --region cn-north-1

配置文件示例,网络配置参照后文的pcluster集群的网络配置部分

pclusterv3支持的调度器有slurm和awsbatch,这里只涉及到slurm

Region: cn-north-1

Image:

Os: ubuntu1804

HeadNode:

InstanceType: m5.large

Networking:

SubnetId: subnet-027025e9d9760acdd

Ssh:

KeyName: cluster-key

CustomActions: #自定义行为

OnNodeConfigured:

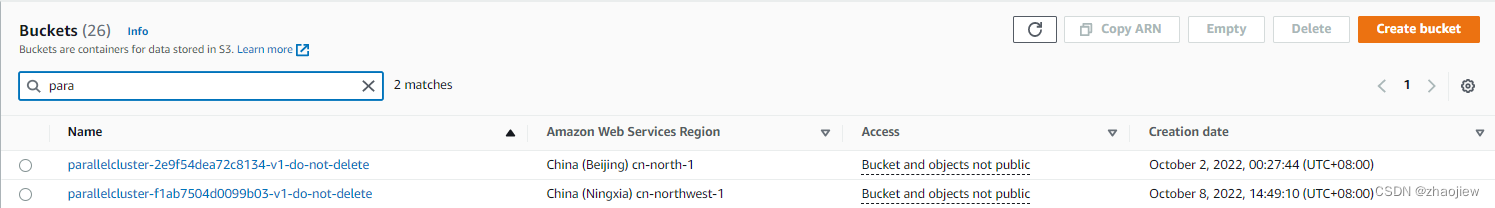

Script: s3://parallelcluster-2e9f54dea72c8134-v1-do-not-delete/script/hello.sh

Iam:

S3Access: #访问s3权限

- BucketName: parallelcluster-2e9f54dea72c8134-v1-do-not-delete

EnableWriteAccess: false #只读

AdditionalIamPolicies: # 访问ecr权限

- Policy: arn:aws-cn:iam::aws:policy/AmazonEC2ContainerRegistryFullAccess

Scheduling:

Scheduler: slurm

SlurmSettings:

Dns:

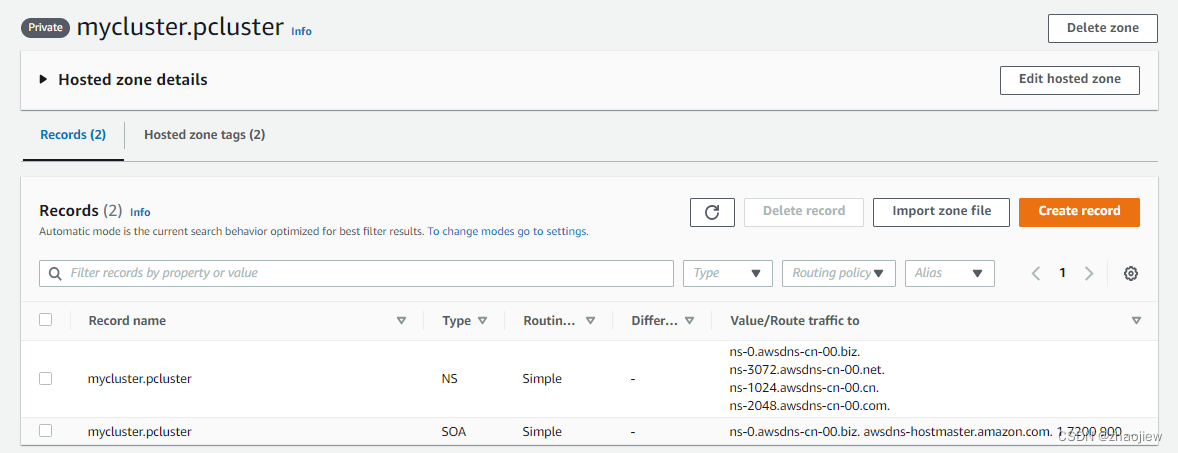

DisableManagedDns: false #默认为false,使用r53的dns解析

ScaledownIdletime: 3 #队列中无任务时3分钟缩容

SlurmQueues:

- Name: queue1

ComputeResources:

- Name: c5large

DisableSimultaneousMultithreading: false

Efa:

Enabled: false

GdrSupport: false

InstanceType: c5.large

MinCount: 1 #静态实例的数量

MaxCount: 10

Iam:

AdditionalIamPolicies: # 访问ecr权限

- Policy: arn:aws-cn:iam::aws:policy/AmazonEC2ContainerRegistryFullAccess

Networking:

SubnetIds:

- subnet-027025e9d9760acdd

SharedStorage:

- FsxLustreSettings: #配置fsx共享存储

StorageCapacity: 1200

MountDir: /fsx

Name: fsx

StorageType: FsxLustre

创建集群

默认情况下创建的 ParallelCluster 不启用 VPC 流日志

$ pcluster create-cluster --cluster-name mycluster --cluster-configuration cluster-config.yaml

查看集群

$ pcluster describe-cluster --cluster-name mycluster

{

"creationTime": "2023-01-1xT01:33:01.470Z",

"version": "3.4.1",

"clusterConfiguration": {

"url": "https://parallelcluster-2e9f54dea72c8134-v1-do-not-delete.s3.cn-north-1.amazonaws.com.cn/parallelcluster/3.4.1/clusters/mycluster-69tt2sf5bgsldktx/configs/cluster-config.yaml?versionId=Q7XO1MF.LE4sh3d.K06n49CmQIirsb3k&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIAQRIBWRJKH4DPNLIN%2F20230116%2Fcn-north-1%2Fs3%2Faws4_request&..."

},

"tags": [...],

"cloudFormationStackStatus": "CREATE_IN_PROGRESS",

"clusterName": "mycluster",

"computeFleetStatus": "UNKNOWN",

"cloudformationStackArn": "arn:aws-cn:cloudformation:cn-north-1:xxxxxxxxxxx:stack/mycluster/b6ea1050-953d-11ed-ad17-0e468cb97d98",

"region": "cn-north-1",

"clusterStatus": "CREATE_IN_PROGRESS",

"scheduler": {

"type": "slurm"

}

}

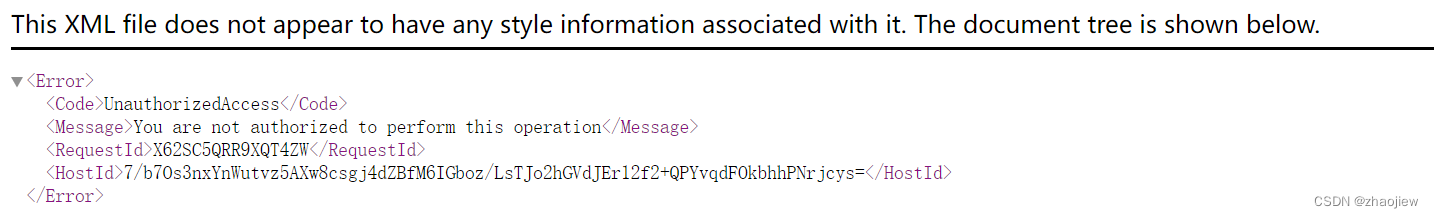

访问配置链接没有权限,目测是一个presign url,由于中国区账号未备案无法访问

该对象存储在专用的s3桶中

查看集群实例

$ pcluster describe-cluster-instances --cluster-name mycluster

{

"instances": [

{

"launchTime": "2023-01-xxT01:35:50.000Z",

"instanceId": "i-0c3xxxxxxxd164",

"publicIpAddress": "xx.xx.xx.xx",

"instanceType": "m5.large",

"state": "running",

"nodeType": "HeadNode",

"privateIpAddress": "172.31.20.150"

}

]

}

更新集群

$ pcluster update-cluster -n mycluster -c cluster-config.yaml

删除集群

$ pcluster delete-cluster --cluster-name mycluster

连接集群

$ pcluster ssh --cluster-name mycluster -i /home/ec2-user/.ssh/cluster-key.pem

获取日志,不需要导入到s3桶之后再下载了

$ pcluster export-cluster-logs --cluster-name mycluster --region cn-north-1 \

--bucket zhaojiew-test --bucket-prefix logs --output-file /tmp/archive.tar.gz

$ tar -xzvf /tmp/archive.tar.gz

mycluster-logs-202301160516/cloudwatch-logs/ip-172-31-17-51.i-0b3f352aa1a503b5a.cloud-init

mycluster-logs-202301160516/cloudwatch-logs/ip-172-31-17-51.i-0b3f352aa1a503b5a.cloud-init-output

mycluster-logs-202301160516/cloudwatch-logs/ip-172-31-17-51.i-0b3f352aa1a503b5a.computemgtd

mycluster-logs-202301160516/cloudwatch-logs/ip-172-31-17-51.i-0b3f352aa1a503b5a.slurmd

mycluster-logs-202301160516/cloudwatch-logs/ip-172-31-17-51.i-0b3f352aa1a503b5a.supervisord

...

mycluster-logs-202301160516/mycluster-cfn-events

slurm

slurm部分配置

- slurm配置

(1)JobRequeue

控制要重新排队的批作业的默认值。manager可能重新启动作业,例如,在计划停机之后、从节点故障恢复或者在被更高优先级的作业抢占时

This option controls the default ability for batch jobs to be requeued. Jobs may be requeued explicitly by a system administrator, after node failure, or upon preemption by a higher priority job

作业抢占的报错

slurmstepd: error: *** JOB 63830645 ON p08r06n17 CANCELLED AT 2020-08-18T21:40:52 DUE TO PREEMPTION ***

对于pcluster来说,任务失败会自动重新排队

(2)backfill

https://hpc.nmsu.edu/discovery/slurm/backfill-and-checkpoints/

当作业在回填分区中暂停时,当具有较高优先级的作业完成执行时,它将立即重新启动并从头开始计算

IBM的LSF对backfill解释的还比较清楚,但是不知道和slurm有什么区别

https://www.ibm.com/docs/en/spectrum-lsf/10.1.0?topic=jobs-backfill-scheduling

Introducing new backfill-based scheduler for SLURM resource manager

slurm常用命令

- Slurm作业调度系统使用指南-USTC超算中心

- Slurm资源管理与作业调度系统安装配置

- 北京大学国际数学中心微型工作站slurm使用参考

查看节点

$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

queue1* up infinite 9 idle~ queue1-dy-c5large-[1-9]

queue1* up infinite 1 down~ queue1-st-c5large-1

提交任务

$ sbatch hellojob.sh

查看任务队列

$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1 queue1 hellojob ubuntu R 0:01 1 queue1-st-c5large-1

$ squeue --format="%.3i %.9P %.40j %.8T %.10M %.6D %.30R %E"

JOB PARTITION NAME STATE TIME NODES NODELIST(REASON) DEPENDENCY

2 queue1 hellojob.sh RUNNING 0:02 1 queue1-st-c5large-1 (null)

$ squeue --states=RUNNING -o "%i" --noheader

2

取消作业

$ scancel $(squeue --states=RUNNING -o "%i" --noheader)

查看计算结果

$ cat slurm-1.out

Hello World from queue1-st-c5large-1

可以使用ssh直接登录节点

$ ssh queue1-st-c5large-1

集群诊断和配置

$ sdiag

$ scontrol show config | grep -i time

BatchStartTimeout = 10 sec

BOOT_TIME = 2023-01-16T03:19:54

EioTimeout = 60

EpilogMsgTime = 2000 usec

GetEnvTimeout = 2 sec

GroupUpdateTime = 600 sec

LogTimeFormat = iso8601_ms

MessageTimeout = 60 sec

OverTimeLimit = 0 min

PreemptExemptTime = 00:00:00

PrologEpilogTimeout = 65534

ResumeTimeout = 1800 sec

SchedulerTimeSlice = 30 sec

SlurmctldTimeout = 300 sec

SlurmdTimeout = 180 sec

SuspendTime = 180 sec

SuspendTimeout = 120 sec

TCPTimeout = 2 sec

UnkillableStepTimeout = 180 sec

WaitTime = 0 sec

PMIxTimeout = 300

提交示例负载程序

mpi示例程序

cat > hello.c << EOF

#include <mpi.h>

#include <stdio.h>

int main(int argc, char** argv) {

// Initialize the MPI environment

MPI_Init(NULL, NULL);

// Get the number of processes

int world_size;

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

// Get the rank of the process

int world_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

// Get the name of the processor

char processor_name[MPI_MAX_PROCESSOR_NAME];

int name_len;

MPI_Get_processor_name(processor_name, &name_len);

// Print off a hello world message

printf("Hello world from processor %s, rank %d out of %d processors\n",

processor_name, world_rank, world_size);

// Finalize the MPI environment.

MPI_Finalize();

}

EOF

运行结果

$ mpicc -o hello hello.c

$ mpirun -n 4 hello

Hello world from processor ip-172-31-23-84, rank 0 out of 4 processors

Hello world from processor ip-172-31-23-84, rank 1 out of 4 processors

Hello world from processor ip-172-31-23-84, rank 2 out of 4 processors

Hello world from processor ip-172-31-23-84, rank 3 out of 4 processors

提交任务

cat > hello.sbatch << EOF

#!/bin/bash

#SBATCH --job-name=hello-world

#SBATCH --ntasks-per-node=2

#SBATCH --output=/fsx/logs/%x_%j.out

set -x

module load openmpi

mpirun /home/ubuntu/hello

sleep 10

EOF

mkdir -p /fsx/logs

sbatch -N2 /home/ubuntu/hello.sbatch

查看计算结果和过程

$ cat hello-world_4.out

+ module load openmpi

+ mpirun /home/ubuntu/hello

Hello world from processor queue1-dy-c5large-1, rank 0 out of 4 processors

Hello world from processor queue1-dy-c5large-1, rank 1 out of 4 processors

Hello world from processor queue1-st-c5large-1, rank 3 out of 4 processors

Hello world from processor queue1-st-c5large-1, rank 2 out of 4 processors

+ sleep 10

非排他作业

#!/bin/bash

#SBATCH --output=/dev/null

#SBATCH --error=/dev/null

#SBATCH --job-name=sleep-inf

sleep inf

EOF

排他作业

cat > ~/slurm/sleep-exclusive.sbatch << EOF

#!/bin/bash

#SBATCH --exclusive

#SBATCH --output=/dev/null

#SBATCH --error=/dev/null

#SBATCH --job-name=sleep-inf-exclusive

sleep inf

EOF

pcluster集群的网络配置

pcluster对集群的网络要求比较严格

- vpc必须开启

DNS Resolution和DNS Hostnames

可能的网络配置如下

(1)单个公有子网

- 子网启用自动分配公有ip

- 如果实例为多网卡,则需要开启EIP,因为公有 IP 只能分配给使用单个网络接口启动的实例

(2)头节点在公有子网,计算节点在nat私有子网

- nat需要正确配置,代理计算节点流量

- 头节点配置同(1)

(3)使用dx连接http proxy

(4)私有子网

-

必须配置以下终端节点

Service Service name Type Amazon CloudWatch com.amazonaws. region-id.logsInterface Amazon CloudFormation ccom.amazonaws. region-id.cloudformationInterface Amazon EC2 com.amazonaws. region-id.ec2Interface Amazon S3 com.amazonaws. region-id.s3Gateway Amazon DynamoDB com.amazonaws. region-id.dynamodbGateway Amazon Secrets Manager(AD功能需要) com.amazonaws. region-id.secretsmanagerInterface -

禁用route53(默认pcluster会创建,但是r53不支持vpc endpoint)并启动ec2的dns解析(使用ec2的dns主机名称)

Scheduling: ... SlurmSettings: Dns: DisableManagedDns: true UseEc2Hostnames: true

-

只支持slurm调度器

pcluster自定义ami

https://docs.aws.amazon.com/zh_cn/parallelcluster/latest/ug/building-custom-ami-v3.html

尽量使用节点自定义引导实现节点的自定义,而不是构建ami。因为ami需要在每次集群升级的时候重复构建新的ami

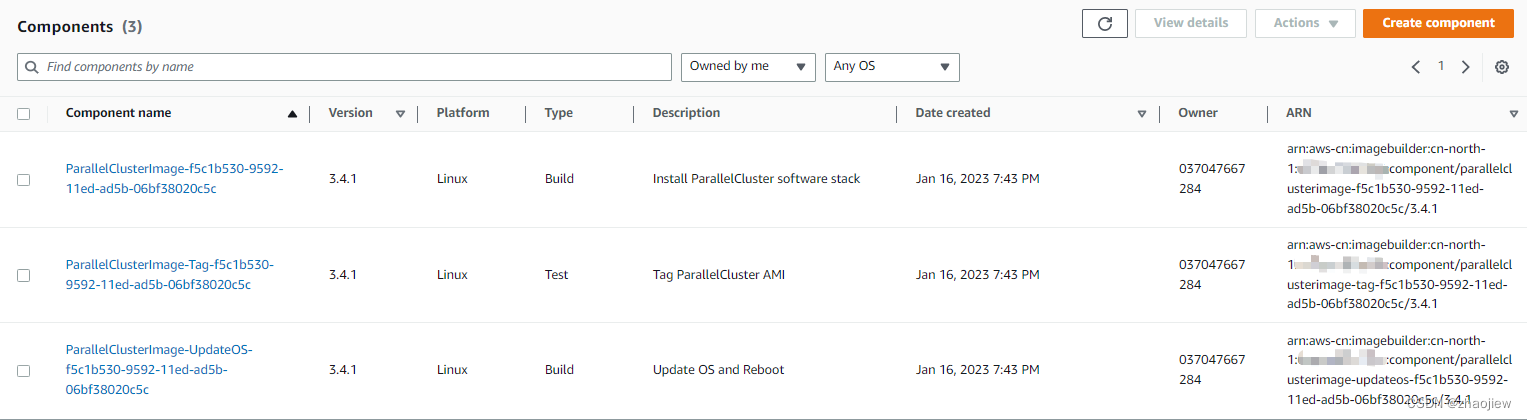

从3.0.0开始pcluster支持构建ami,pcluster依赖 EC2 Image Builder 服务来构建自定义 AMI

创建build配置,其中InstanceType和ParentImage是必须的,使用默认vpc启动构建实例(需要访问互联网),此处明确配置公有子网

https://docs.amazonaws.cn/zh_cn/parallelcluster/latest/ug/Build-v3.html

$ cat > image-config.yaml << EOF

Build:

InstanceType: c5.4xlarge

ParentImage: ami-07356f2da3fd22521

SubnetId: subnet-xxxxxxxxx

SecurityGroupIds:

- sg-xxxxxxxxx

UpdateOsPackages:

Enabled: true

EOF

image builder构建可能会花费1小时以上的时间,具体步骤如下

-

通过cloudformation创建基础设施

-

添加pcluster自定义组件

https://catalog.us-east-1.prod.workshops.aws/workshops/e2f40d13-8082-4718-909b-6cdc3155ae41/en-US/examples/custom-ami

-

构建完毕后启动新实例测试新的ami

-

构建成功删除堆栈

开始构建

$ pcluster build-image --image-configuration image-config.yaml --image-id myubuntu1804

{

"image": {

"imageId": "myubuntu1804",

"imageBuildStatus": "BUILD_IN_PROGRESS",

"cloudformationStackStatus": "CREATE_IN_PROGRESS",

"cloudformationStackArn": "arn:aws-cn:cloudformation:cn-north-1:xxxxxxxxxxx:stack/myubuntu1804/f5c1b530-9592-11ed-ad5b-06bf38020c5c",

"region": "cn-north-1",

"version": "3.4.1"

}

}

查看控制台imagebuilder,一共创建了3个components

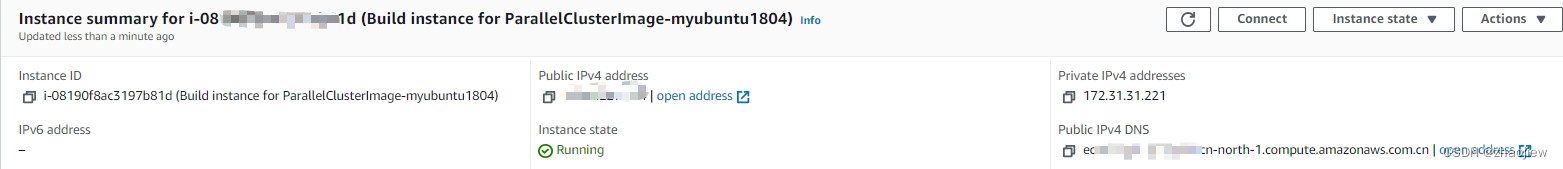

启动新的ec2实例进行构建工作

查看构建日志

$ watch -n 1 'pcluster get-image-log-events -i myubuntu1804 \

--log-stream-name 3.4.1/1 \

--query "events[*].message" | tail -n 50'

查看镜像

$ pcluster describe-image --image-id myubuntu1804

删除镜像

$ pcluster delete-image --image-id myubuntu1804

列出官方镜像

$ pcluster list-official-images | grep -B 2 ubuntu1804

最佳实践

(1)实例类型

-

头节点协调集群的扩展逻辑,并负责将新节点连接到调度器,如果性能不足会导致集群崩溃

-

头节点通过nfs将任务与计算节点共享,需要确保足够和网络和存储带宽

以下目录在节点间共享

- /home,默认的用户 home 文件夹

- /opt/intel

- /opt/slurm,Slurm Workload Manager 和相关文件

$ cat /etc/exports /home 172.31.0.0/16(rw,sync,no_root_squash) /opt/parallelcluster/shared 172.31.0.0/16(rw,sync,no_root_squash) /opt/intel 172.31.0.0/16(rw,sync,no_root_squash) /opt/slurm 172.31.0.0/16(rw,sync,no_root_squash) $ sudo showmount -e 127.0.0.1 Export list for 127.0.0.1: /opt/slurm 172.31.0.0/16 /opt/intel 172.31.0.0/16 /opt/parallelcluster/shared 172.31.0.0/16 /home 172.31.0.0/16

(2)网络性能

- 使用置放群组,使用cluster策略实现最低的延迟和最高的每秒数据包网络性能

- 选择支持增强联网,使用EFA类型实例

- 保证实例具备足够的网络带宽

(3)共享存储

- 使用fsx或efs等外部存储,避免数据损失,便于集群迁移

- 使用 custom bootstrap actions 来定制节点,而非使用自定义ami

(4)集群监控

- 使用sar收集日志

- 使用node exporter收集指标

相关错误

集群自定义配置脚本出错,bash脚本格式问题,在windows下编辑的换行符问题

[ERROR] Command runpostinstall (/opt/parallelcluster/scripts/fetch_and_run -postinstall) failed

2023-01-16 03:01:40,474 [DEBUG] Command runpostinstall output: /opt/parallelcluster/scripts/fetch_and_run: /tmp/tmp.wI9VD7fhQs: /bin/bash^M: bad interpreter: No such file or directory

parallelcluster: fetch_and_run - Failed to run postinstall, s3://parallelcluster-2e9f54dea72c8134-v1-do-not-delete/script/hello.sh failed with non 0 return code: 126

![[idekCTF 2023] Malbolge I Gluttony,Typop,Cleithrophobia,Megalophobia](https://img-blog.csdnimg.cn/img_convert/075d78485bd91437286c0636c2fd0896.png)

![[激光原理与应用-65]:激光器-器件 - 多模光纤(宽频光纤)、单模光纤的原理与区别](https://img-blog.csdnimg.cn/img_convert/1c80bc2c44cec28a17d9fd23843c62e2.png)