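Multivariate time series | MATLAB implementation of VMD-SSA-KELM and VMD-KELM: variational mode decomposition with a sparrow-search-optimized kernel extreme learning machine for multi-input single-output time series forecasting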

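Contents

- Multivariate time series | MATLAB implementation of VMD-SSA-KELM and VMD-KELM: variational mode decomposition with a sparrow-search-optimized kernel extreme learning machine for multi-input single-output time series forecasting

- Prediction results

- Introduction

- Program design

- Summary

- References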

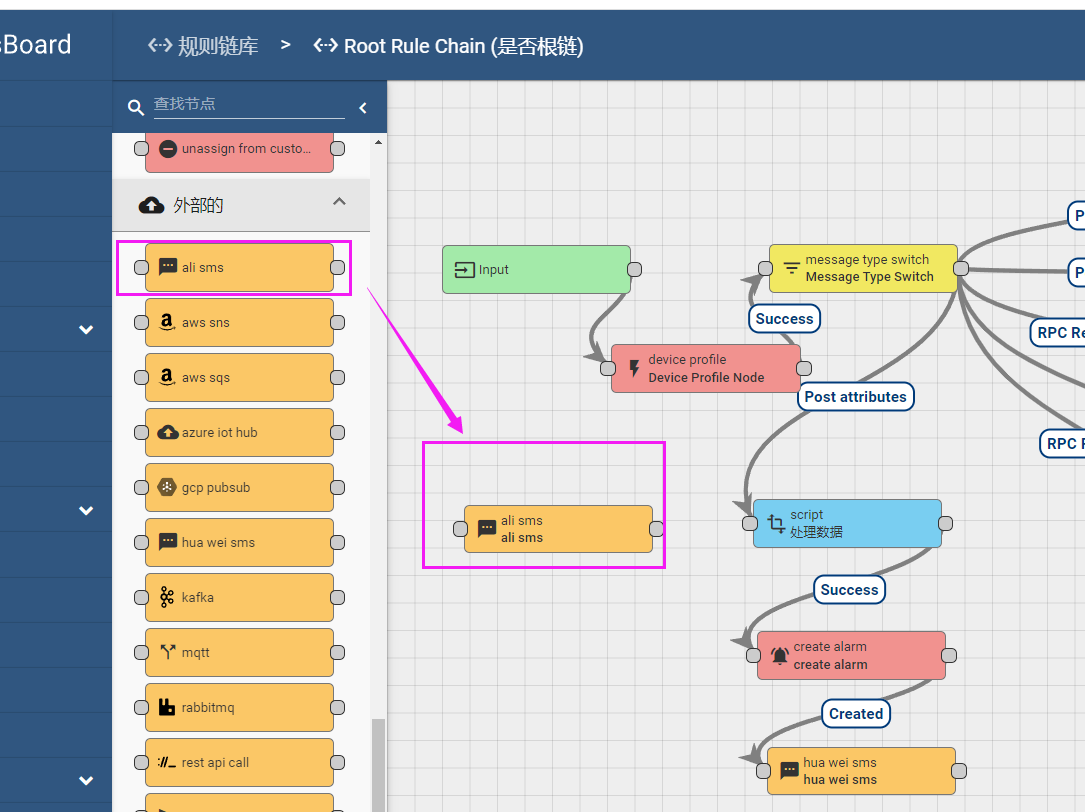

Prediction results

Introduction

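This post uses MATLAB to forecast a multi-input single-output time series with VMD-SSA-KELM and VMD-KELM. Variational mode decomposition (VMD) splits the target series into 3 intrinsic mode functions (IMFs) plus a residual. Each component is then forecast by a kernel extreme learning machine (KELM) whose inputs are the influencing factors and the component's value one step back. In VMD-SSA-KELM, the sparrow search algorithm (SSA) tunes the KELM regularization coefficient C and RBF kernel parameter S; VMD-KELM uses fixed values (C = 2, S = 4) as a baseline.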

Program design

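- Full program and data, option 1: exchange for a program of equivalent value;

- Full program and data, option 2: MATLAB implementation of VMD-SSA-KELM and VMD-KELM, variational mode decomposition with a sparrow-search-optimized kernel extreme learning machine for multi-input single-output time series forecasting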

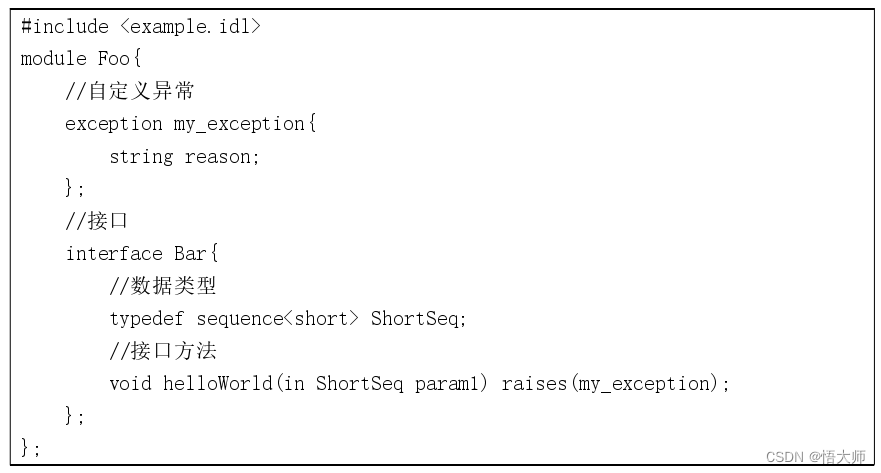

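```matlab
% YData: target series (defined in the full program, not shown here)
vmd(YData,'NumIMFs',3)                 % no output arguments: plot the decomposition
[imf,res] = vmd(YData,'NumIMFs',3);    % 3 IMFs (one per column) and the residual
Data = [imf res];                      % one column per component
input = xlsread('WTData.xlsx', 'Sheet1', 'B2:H3673'); % influencing factors
```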

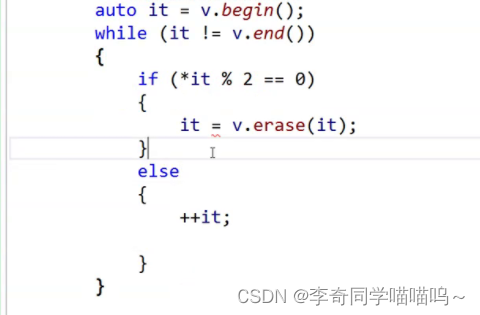

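```matlab
for k = 1 : size(Data, 2)
    disp(['Forecasting component ', num2str(k), ':'])
    data(:,k) = Data(:, k);

    delay = 1;  % time lag
    data1 = [];
    % Lag window: row i = [y(i), ..., y(i+delay)] of component k
    for i = 1:length(data(:,k))-delay
        data1(i,:) = data(i:i+delay,k)';
    end
    % Prepend the influencing factors at the target time step
    data1 = [input(delay+1:end,:), data1];
    P = data1(:,1:end-1);   % inputs: influencing factors + lagged component values
    T = data1(:,end);       % target: current component value

    % Split into training and test sets (chronological, last 30% for testing)
    Len = size(data1, 1);
    % testNum = 300;
    testNum = round(Len*0.3);
    trainNum = Len - testNum;

    % Training set (one sample per column)
    P_train = P(1:trainNum, :)';
    T_train = T(1:trainNum, :)';

    % Test set
    P_test = P(trainNum+1:trainNum+testNum,:)';
    T_test = T(trainNum+1:trainNum+testNum,:)';

    %% Normalization
    [Pn_train, ps] = mapminmax(P_train, 0, 1);
    [Tn_train, ts] = mapminmax(T_train, 0, 1);
    % Normalize the test set with the training-set settings
    Pn_test = mapminmax('apply', P_test, ps);
    Tn_test = mapminmax('apply', T_test, ts);
```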
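The loop body above turns one VMD component into a supervised dataset: a one-step lag window, the influencing factors aligned to the target time step, a chronological 70/30 split, and [0, 1] min-max scaling whose settings come from the training set only. The base-MATLAB sketch below repeats those steps on a small synthetic series to make the samples-as-columns convention explicit. `y` and `X` are hypothetical stand-ins for one column of `Data` and for `input`, and `mapminmax` is replaced by the row-wise formula it applies for non-constant rows, x_n = (x - xmin) / (xmax - xmin).

```matlab
% Hypothetical stand-ins: y ~ one column of Data, X ~ the influencing factors
N = 20;
y = sin((1:N)' / 3);                    % one component, column vector
X = [(1:N)', cos((1:N)' / 5)];          % two factors, one row per time step
delay = 1;                              % time lag, as in the post

% Lag window: row i = [y(i), ..., y(i+delay)]
lagged = zeros(N - delay, delay + 1);
for i = 1:N - delay
    lagged(i, :) = y(i:i+delay)';
end
data1 = [X(delay+1:end, :), lagged];    % factors at the target step + lag window
P = data1(:, 1:end-1);                  % inputs: factors and y(t-delay..t-1)
T = data1(:, end);                      % target: y(t)

% Chronological split: last 30% of the rows form the test set
Len = size(data1, 1);
testNum = round(Len * 0.3);
trainNum = Len - testNum;
P_train = P(1:trainNum, :)';            % samples as columns
T_train = T(1:trainNum)';
P_test = P(trainNum+1:end, :)';
T_test = T(trainNum+1:end)';

% Row-wise [0, 1] scaling with training-set settings (mapminmax(P_train, 0, 1) for non-constant rows)
xmin = min(P_train, [], 2);
xmax = max(P_train, [], 2);
scale = @(A) (A - xmin) ./ (xmax - xmin);    % implicit expansion across columns
unscale = @(B) B .* (xmax - xmin) + xmin;    % inverse, like mapminmax('reverse', ...)
Pn_train = scale(P_train);
Pn_test = scale(P_test);                     % test values may fall outside [0, 1]
P_back = unscale(Pn_train);                  % round trip recovers P_train
```

Scaling the test set with the training minima and maxima, as the post does with `mapminmax('apply', ...)`, keeps test-set information out of the scaling step; test values outside the training range simply map outside [0, 1].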

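```matlab
    %% Sparrow search algorithm (SSA) settings
    pop = 20;             % population size
    Max_iteration = 30;   % maximum number of iterations
    dim = 2;              % 2 parameters to optimize: regularization coefficient C and kernel parameter S
    lb = [0.001, 0.001];  % lower bounds
    ub = [50, 50];        % upper bounds
```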
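The `SSA` function called in the next step is not listed in the post. As a rough guide to what it does with `pop`, `Max_iteration`, `lb`, `ub` and `dim`, here is a simplified base-MATLAB sketch of the sparrow search algorithm. Producers explore (a multiplicative contraction when the alarm value R2 is below the safety threshold ST, a Gaussian random step otherwise); scroungers either follow the best producer or, in the worse-ranked half, fly off elsewhere; and a random subset of scouts reacts to danger. The producer and scout fractions (20% and 10%), ST = 0.8 and the toy objective `fobj` are assumptions for illustration. This is not the author's `SSA` function, and published SSA codes differ in details.

```matlab
% Simplified sparrow search algorithm (sketch, not the author's SSA function)
fobj = @(x) sum((x - [3, 7]).^2);   % hypothetical toy objective, minimum at [3, 7]
pop = 20; Max_iteration = 30; dim = 2;
lb = [0.001, 0.001]; ub = [50, 50];
ST = 0.8;                  % safety threshold (assumed)
nP = round(0.2 * pop);     % number of producers (assumed 20%)
nS = round(0.1 * pop);     % number of scouts (assumed 10%)
clip = @(x) min(max(x, lb), ub);

rng(1);                                   % reproducible run
X = lb + rand(pop, dim) .* (ub - lb);     % random initial positions
fit = zeros(pop, 1);
for i = 1:pop, fit(i) = fobj(X(i, :)); end
[Best_score, ib] = min(fit);
Best_pos = X(ib, :);
SSA_curve = zeros(1, Max_iteration);

for t = 1:Max_iteration
    [fit, order] = sort(fit);             % rank sparrows, best first
    X = X(order, :);
    worst = X(end, :); fworst = fit(end);
    R2 = rand;                            % alarm value for this iteration

    % Producers
    for i = 1:nP
        if R2 < ST
            X(i, :) = X(i, :) * exp(-i / (rand * Max_iteration));
        else
            X(i, :) = X(i, :) + randn * ones(1, dim);
        end
        X(i, :) = clip(X(i, :));
        fit(i) = fobj(X(i, :));
    end
    [~, ip] = min(fit(1:nP));
    XP = X(ip, :);                        % best producer position

    % Scroungers
    for i = nP+1:pop
        if i > pop / 2                    % worse-ranked half: fly elsewhere
            X(i, :) = randn * exp((worst - X(i, :)) / i^2);
        else                              % follow the best producer
            A = 2 * (rand(1, dim) > 0.5) - 1;          % random +/-1 entries
            X(i, :) = XP + abs(X(i, :) - XP) * (A' / (A * A')) * ones(1, dim);
        end
        X(i, :) = clip(X(i, :));
        fit(i) = fobj(X(i, :));
    end

    % Scouts: a random subset aware of danger
    [fg, ig] = min(fit);
    gbest = X(ig, :);
    for i = randperm(pop, nS)
        if fit(i) > fg                    % move toward the current best
            X(i, :) = gbest + randn * abs(X(i, :) - gbest);
        else                              % at the current best: random step scaled by distance to the worst
            X(i, :) = X(i, :) + (2 * rand - 1) * abs(X(i, :) - worst) / (fit(i) - fworst + 1e-50);
        end
        X(i, :) = clip(X(i, :));
        fit(i) = fobj(X(i, :));
    end

    [fmin, im] = min(fit);
    if fmin < Best_score
        Best_score = fmin;
        Best_pos = X(im, :);
    end
    SSA_curve(t) = Best_score;            % best-so-far curve, as plotted in the post
end
fprintf('Best_pos = [%.3f, %.3f], Best_score = %.3g\n', Best_pos, Best_score);
```

In the post, `fobj` would instead train a KELM with `x = [C, S]` and return its forecast error, so each fitness evaluation costs one KELM training run.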

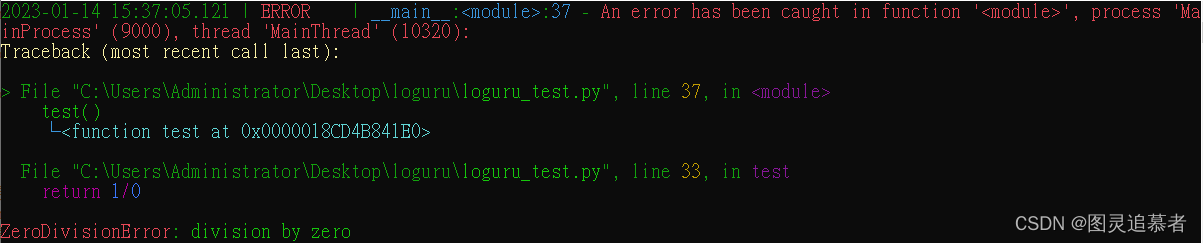

```matlab
    % Fitness of a candidate x = [C, S], computed by fun (in the full program)
    % from the training set and the test set
    fobj = @(x) fun(x,Pn_train,Tn_train,Pn_test,T_test,ts);
    [Best_pos,Best_score,SSA_curve] = SSA(pop,Max_iteration,lb,ub,dim,fobj);  % run the optimization

    figure
    plot(SSA_curve,'linewidth',1.5);
    grid on;
    xlabel('Number of iteration')
    ylabel('Objective function')
    title('SSA convergence curve')
    set(gca,'Fontname', 'Times New Roman');

    %% Optimal regularization coefficient C and kernel parameter S
    Regularization_coefficient = Best_pos(1);
    Kernel_para = Best_pos(2);
    Kernel_type = 'rbf';

    %% Training
    [~,OutputWeight] = kelmTrain(Pn_train,Tn_train,Regularization_coefficient,Kernel_type,Kernel_para);

    %% Testing
    InputWeight = OutputWeight;   % kelmPredict takes the trained output weights as its second argument
    [TestOutT] = kelmPredict(Pn_train,InputWeight,Kernel_type,Kernel_para,Pn_test);
    TestOut(k,:) = mapminmax('reverse',TestOutT,ts);   % back to the original scale

    %% Baseline KELM with fixed parameters
    Regularization_coefficient1 = 2;
    Kernel_para1 = [4];   % kernel parameter(s)
    Kernel_type = 'rbf';

    %% Training
    [~,OutputWeight1] = kelmTrain(Pn_train,Tn_train,Regularization_coefficient1,Kernel_type,Kernel_para1);

    %% Prediction
    InputWeight1 = OutputWeight1;
    [TestOutT1] = kelmPredict(Pn_train,InputWeight1,Kernel_type,Kernel_para1,Pn_test);
    TestOut1(k,:) = mapminmax('reverse',TestOutT1,ts);
end
```
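The loop stores one row of `TestOut` (SSA-KELM) and `TestOut1` (baseline KELM) per component, but the step that turns them into a forecast of the original series is not shown. Because the VMD IMFs plus the residual add up to the input signal, a natural approach is to sum the rows and compare the sums with the original series over the test window; with the indexing above, that window is `YData(trainNum+delay+1:end)`. The sketch below illustrates this with hypothetical component values and three common metrics (RMSE, MAE, R²). It is an assumption about how the full program evaluates the models, not a copy of it.

```matlab
% Hypothetical true component values over a 5-point test window
% (3 IMFs + residual, one row per component, laid out like TestOut in the post)
comp_true = [ 1.0  2.0  3.0  4.0  5.0;
              0.5  0.4  0.3  0.2  0.1;
             -1.0  0.0  1.0  0.0 -1.0;
             10.0 10.0 10.0 10.0 10.0];
TestOut  = comp_true + 0.1;   % hypothetical SSA-KELM component forecasts
TestOut1 = comp_true - 0.3;   % hypothetical baseline KELM component forecasts

y_true = sum(comp_true, 1);   % stands in for YData(trainNum+delay+1:end)'
y_ssa  = sum(TestOut, 1);     % forecast of the original series = sum over components
y_base = sum(TestOut1, 1);

rmse = @(y, yhat) sqrt(mean((y - yhat).^2));
mae  = @(y, yhat) mean(abs(y - yhat));
r2   = @(y, yhat) 1 - sum((y - yhat).^2) / sum((y - mean(y)).^2);

fprintf('VMD-SSA-KELM: RMSE %.4f  MAE %.4f  R2 %.4f\n', ...
    rmse(y_true, y_ssa), mae(y_true, y_ssa), r2(y_true, y_ssa));
fprintf('VMD-KELM:     RMSE %.4f  MAE %.4f  R2 %.4f\n', ...
    rmse(y_true, y_base), mae(y_true, y_base), r2(y_true, y_base));
```

Component errors accumulate in the sum: here a bias of 0.1 on each of the 4 components yields an error of 0.4 on the reconstructed series.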

Summary

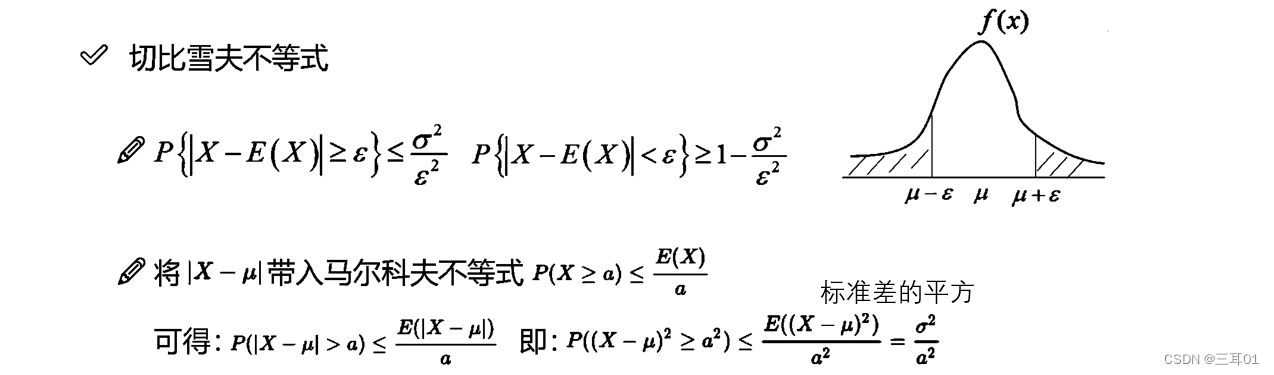

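Compared with ELM, KELM does not require choosing the number of hidden-layer nodes. When the ELM feature mapping is unknown, KELM replaces it with a kernel function and obtains the output weights in closed form from a regularized least-squares problem. As a result, KELM generally achieves better approximation accuracy and generalization than ELM.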
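The `kelmTrain` and `kelmPredict` functions are not listed in the post. The standard KELM solution described above replaces the unknown ELM feature mapping with a kernel matrix Ω, with Ω_ij = K(x_i, x_j) over the N training samples, and solves the regularized least-squares problem in closed form:

$$
\beta = \left(\frac{I}{C} + \Omega\right)^{-1} T, \qquad \hat{y}(x) = \begin{bmatrix} K(x, x_1) & \cdots & K(x, x_N) \end{bmatrix} \beta
$$

The sketch below implements this in base MATLAB on a toy 1-D regression problem. It assumes the RBF form K(x, x') = exp(-||x - x'||² / S), with S playing the role of `Kernel_para`; the post's functions may parameterize the kernel differently, and the data and the C and S values are hypothetical.

```matlab
% Toy regression data (hypothetical): one sample per column, like Pn_train
Xtr = linspace(0, 1, 30);             % 1 x 30 training inputs
Ttr = sin(2 * pi * Xtr);              % 1 x 30 training targets
Xte = linspace(0.05, 0.95, 10);       % 1 x 10 test inputs
C = 100;                              % regularization coefficient (assumed value)
S = 0.05;                             % RBF kernel parameter (assumed value)

% RBF kernel between column-sample matrices A (d x n) and B (d x m):
% K(i,j) = exp(-||A(:,i) - B(:,j)||^2 / S)
sqdist = @(A, B) sum(A.^2, 1)' + sum(B.^2, 1) - 2 * (A' * B);
rbf = @(A, B) exp(-max(sqdist(A, B), 0) / S);

% Training: output weights from regularized least squares
Omega = rbf(Xtr, Xtr);                                  % N x N kernel matrix
beta = (eye(size(Omega, 1)) / C + Omega) \ Ttr';        % N x 1 output weights

% Prediction: kernel between training and test samples times beta
Yte = (rbf(Xtr, Xte)' * beta)';                         % 1 x 10 predictions
err = max(abs(Yte - sin(2 * pi * Xte)));
fprintf('max abs test error: %.4f\n', err);
```

No hidden-layer size appears anywhere: the model size is fixed by the N training samples, and training costs one N × N linear solve.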

References

[1] https://blog.csdn.net/kjm13182345320/article/details/128577926?spm=1001.2014.3001.5501

[2] https://blog.csdn.net/kjm13182345320/article/details/128573597?spm=1001.2014.3001.5501