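To start, I followed the official tutorial and installed everything in a virtual machine as a learning exercise.

Before starting the tutorial below, make sure the following software is installed on your system:

- Docker, the container runtime.

- Kubectl, the command-line tool for Kubernetes.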

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client

- Helm, the package manager for Kubernetes.

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

helm version

- curl, used to interact with the tutorial applications over HTTP/HTTPS.

- Kind, Kubernetes in Docker. (It runs Kubernetes inside Docker containers and is mainly used for testing.)

#For AMD64 / x86_64

[ $(uname -m) = x86_64 ] && curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.22.0/kind-linux-amd64

#For ARM64

[ $(uname -m) = aarch64 ] && curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.22.0/kind-linux-arm64

chmod +x ./kind

sudo mv ./kind /usr/local/bin/kind

kind version

- liqoctl, the command-line tool for interacting with Liqo.

#AMD64:

curl --fail -LS "https://github.com/liqotech/liqo/releases/download/v0.10.2/liqoctl-linux-amd64.tar.gz" | tar -xz

sudo install -o root -g root -m 0755 liqoctl /usr/local/bin/liqoctl

#ARM64:

curl --fail -LS "https://github.com/liqotech/liqo/releases/download/v0.10.2/liqoctl-linux-arm64.tar.gz" | tar -xz

sudo install -o root -g root -m 0755 liqoctl /usr/local/bin/liqoctl
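Before moving on, it can help to confirm that every prerequisite listed above is actually on the PATH. The following is a minimal sketch; the function name missing_tools and the variable REQUIRED_TOOLS are made up for this example and are not part of any of the tools.

```shell
#!/bin/sh
# Print the name of every tool in the argument list that is not on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Tools this walkthrough relies on (taken from the list above).
REQUIRED_TOOLS="docker kubectl helm curl kind liqoctl"

# Report instead of exiting, so the snippet is safe to paste into a shell.
# $REQUIRED_TOOLS and $missing are intentionally unquoted so they split into words.
missing=$(missing_tools $REQUIRED_TOOLS)
if [ -n "$missing" ]; then
    echo "Missing tools:" $missing
else
    echo "All prerequisites found."
fi
```

This only checks that the binaries can be found; it does not check their versions.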

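Creating the virtual clusters

Next, open a terminal on your machine and run the following script, which uses Kind to create a pair of clusters. Each cluster consists of two nodes (one for the control plane and one acting as a simple worker):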

git clone https://github.com/liqotech/liqo.git

cd liqo

git checkout v0.10.2

cd examples/quick-start

./setup.sh

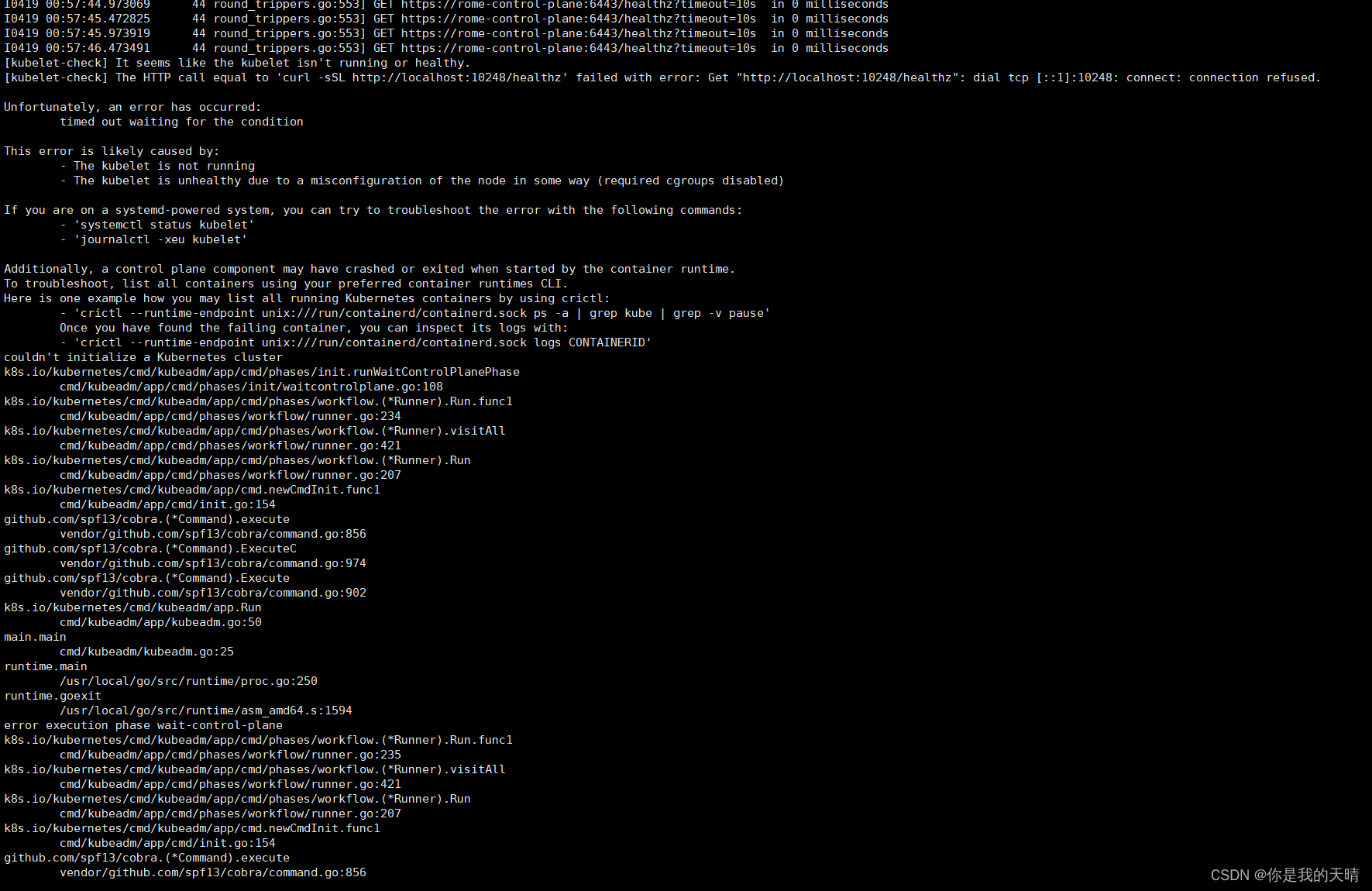

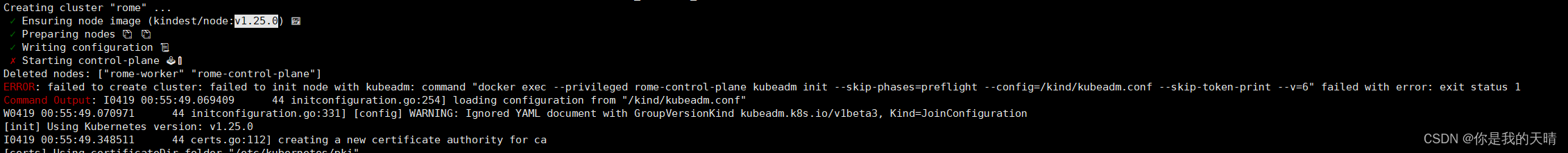

If this step fails with: failed to create cluster: failed to init node with kubeadm: command "docker exec --privileged rome-control-plane kubeadm init --skip-phases=preflight --config=/kind/kubeadm.conf --skip-token-print --v=6" failed with error: exit status 1

Command Output: I0419 00:55:49.069409 44 initconfiguration.go:254] loading configuration from "/kind/kubeadm.conf"

…

the HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

Troubleshooting:

Running kind create cluster --name rome directly succeeds, and its output shows Ensuring node image: kindest/node:v1.29.2,

whereas ./setup.sh uses v1.25.0.

Fix:

Edit /opt/liqo/examples/quick-start/manifests/cluster.yaml and change the kindest/node image tag to v1.29.2:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  serviceSubnet: "10.90.0.0/12"
  podSubnet: "10.200.0.0/16"
nodes:
  - role: control-plane
    image: kindest/node:v1.25.0 # change this to v1.29.2
  - role: worker
    image: kindest/node:v1.25.0 # change this to v1.29.2
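The same change can be scripted instead of edited by hand. This is a minimal sketch; set_node_image is a name invented for this example, and the sed expression assumes the manifest writes the image as "image: kindest/node:<tag>", as in the file shown above.

```shell
#!/bin/sh
# Replace the tag of every kindest/node image in a Kind cluster manifest.
# Usage: set_node_image <manifest> <tag>
set_node_image() {
    manifest=$1
    tag=$2
    # -i.bak edits in place and keeps a backup copy next to the file;
    # this form is accepted by both GNU and BSD sed.
    sed -i.bak "s|\(image: kindest/node:\)[^ ]*|\1${tag}|" "$manifest"
}

# Example (run from examples/quick-start):
#   set_node_image manifests/cluster.yaml v1.29.2
```

Anything after the tag on the same line, such as a trailing comment, is left untouched.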


Then run the script again:

root@main:~/liqo/examples/quick-start# ./setup.sh

SUCCESS No cluster "rome" is running.

SUCCESS No cluster "milan" is running.

SUCCESS Cluster "rome" has been created.

SUCCESS Cluster "milan" has been created.

root@main:~/liqo/examples/quick-start#

Testing the clusters

You can inspect the deployed clusters by typing:

root@main:~/liqo/examples/quick-start# kind get clusters

milan

rome

root@main:~/liqo/examples/quick-start#

This means that two Kind clusters are deployed and running on your host.

By default, the kubeconfigs of the two clusters are stored in the current directory (./liqo_kubeconf_rome, ./liqo_kubeconf_milan).

root@main:~/liqo/examples/quick-start# pwd

/root/liqo/examples/quick-start

root@main:~/liqo/examples/quick-start# ls

liqo_kubeconf_milan liqo_kubeconf_rome manifests setup.sh

root@main:~/liqo/examples/quick-start#

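You can export the environment variables used in the rest of this tutorial (i.e., KUBECONFIG and KUBECONFIG_MILAN), pointing them at the kubeconfig locations, as follows: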

export KUBECONFIG="$PWD/liqo_kubeconf_rome"

export KUBECONFIG_MILAN="$PWD/liqo_kubeconf_milan"

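It is recommended to export the kubeconfig of the first cluster as the default (i.e., KUBECONFIG), since it will be the entry point of the virtual cluster and the one you will mainly interact with.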
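Because the exports depend on the current directory, it is easy to point them at files that do not exist. The sketch below wraps the two exports in a small check; use_quickstart_kubeconfigs is a hypothetical helper name, and the file names are the ones listed above.

```shell
#!/bin/sh
# Export KUBECONFIG and KUBECONFIG_MILAN from a directory, but only if both files exist.
# Usage: use_quickstart_kubeconfigs [dir]   (dir defaults to the current directory)
use_quickstart_kubeconfigs() {
    dir=${1:-$PWD}
    for name in rome milan; do
        if [ ! -f "$dir/liqo_kubeconf_$name" ]; then
            echo "kubeconfig for $name not found in $dir" >&2
            return 1
        fi
    done
    export KUBECONFIG="$dir/liqo_kubeconf_rome"
    export KUBECONFIG_MILAN="$dir/liqo_kubeconf_milan"
}
```

The function has to be defined in (or sourced into) the current shell; running it from a separate script would export the variables only inside that script's process.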

On the first cluster, you can list the available pods by simply typing:

root@liqo:~/liqo/examples/quick-start# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-m5ppl 1/1 Running 0 110s

kube-system coredns-76f75df574-mljgc 1/1 Running 0 110s

kube-system etcd-rome-control-plane 1/1 Running 0 2m6s

kube-system kindnet-p9cjf 1/1 Running 0 107s

kube-system kindnet-pg7bt 1/1 Running 0 110s

kube-system kube-apiserver-rome-control-plane 1/1 Running 0 2m4s

kube-system kube-controller-manager-rome-control-plane 1/1 Running 0 2m4s

kube-system kube-proxy-gh5kr 1/1 Running 0 110s

kube-system kube-proxy-l67g5 1/1 Running 0 107s

kube-system kube-scheduler-rome-control-plane 1/1 Running 0 2m4s

local-path-storage local-path-provisioner-7577fdbbfb-ct9rz 1/1 Running 0 110s

Similarly, on the second cluster, you can observe the running pods:

kubectl get pods -A --kubeconfig "$KUBECONFIG_MILAN"

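Installing Liqo

Now you will install Liqo on both clusters, which are named as follows:

- rome: the local cluster, where you will deploy and control applications.

- milan: the remote cluster, to which part of the workload will be offloaded.

You can install Liqo on the rome cluster by running the following command: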

liqoctl install kind --cluster-name rome

This kept failing, and the installation did not succeed:

ERRO Error installing or upgrading Liqo: release liqo failed, and has been uninstalled due to atomic being set: timed out waiting for the condition

INFO Likely causes for the installation/upgrade timeout could include:

INFO * One or more pods failed to start (e.g., they are in the ImagePullBackOff status)

INFO * A service of type LoadBalancer has been configured, but no provider is available

INFO You can add the --verbose flag for debug information concerning the failing resources

INFO Additionally, if necessary, you can increase the timeout value with the --timeout flag

Following the hint, I ran liqoctl install kind --cluster-name rome --verbose to get the detailed output:

Starting delete for "liqo-webhook-certificate-patch" ServiceAccount

INFO beginning wait for 1 resources to be deleted with timeout of 10m0s

INFO creating 1 resource(s)

INFO Starting delete for "liqo-webhook-certificate-patch" Role

INFO beginning wait for 1 resources to be deleted with timeout of 10m0s

INFO creating 1 resource(s)

INFO Starting delete for "liqo-webhook-certificate-patch" RoleBinding

INFO beginning wait for 1 resources to be deleted with timeout of 10m0s

INFO creating 1 resource(s)

INFO Starting delete for "liqo-webhook-certificate-patch-pre" Job

INFO beginning wait for 1 resources to be deleted with timeout of 10m0s

INFO creating 1 resource(s)

INFO Watching for changes to Job liqo-webhook-certificate-patch-pre with timeout of 10m0s

INFO Add/Modify event for liqo-webhook-certificate-patch-pre: ADDED

INFO liqo-webhook-certificate-patch-pre: Jobs active: 0, jobs failed: 0, jobs succeeded: 0

INFO Add/Modify event for liqo-webhook-certificate-patch-pre: MODIFIED

INFO liqo-webhook-certificate-patch-pre: Jobs active: 1, jobs failed: 0, jobs succeeded: 0

INFO Install failed and atomic is set, uninstalling release

.....

Ignoring delete failure for "liqo-telemetry" /v1, Kind=ServiceAccount: serviceaccounts "liqo-telemetry" not found

INFO Ignoring delete failure for "liqo-crd-replicator" /v1, Kind=ServiceAccount: serviceaccounts "liqo-crd-replicator" not found

INFO Ignoring delete failure for "liqo-gateway" /v1, Kind=ServiceAccount: serviceaccounts "liqo-gateway" not found

INFO Ignoring delete failure for "liqo-auth" /v1, Kind=ServiceAccount: serviceaccounts "liqo-auth" not found

INFO Ignoring delete failure for "liqo-network-manager" /v1, Kind=ServiceAccount: serviceaccounts "liqo-network-manager" not found

INFO Ignoring delete failure for "liqo-route" /v1, Kind=ServiceAccount: serviceaccounts "liqo-route" not found

INFO Ignoring delete failure for "liqo-controller-manager" /v1, Kind=ServiceAccount: serviceaccounts "liqo-controller-manager" not found

INFO Ignoring delete failure for "liqo-metric-agent" /v1, Kind=ServiceAccount: serviceaccounts "liqo-metric-agent" not found

INFO Starting delete for "liqo-webhook" MutatingWebhookConfiguration

INFO Ignoring delete failure for "liqo-webhook" admissionregistration.k8s.io/v1, Kind=MutatingWebhookConfiguration: mutatingwebhookconfigurations.admissionregistration.k8s.io "liqo-webhook" not found

INFO Starting delete for "liqo-webhook" ValidatingWebhookConfiguration

INFO Ignoring delete failure for "liqo-webhook" admissionregistration.k8s.io/v1, Kind=ValidatingWebhookConfiguration: validatingwebhookconfigurations.admissionregistration.k8s.io "liqo-webhook" not found

INFO purge requested for liqo

ERRO Error installing or upgrading Liqo: release liqo failed, and has been uninstalled due to atomic being set: timed out waiting for the condition

INFO Likely causes for the installation/upgrade timeout could include:

INFO * One or more pods failed to start (e.g., they are in the ImagePullBackOff status)

INFO * A service of type LoadBalancer has been configured, but no provider is available

INFO You can add the --verbose flag for debug information concerning the failing resources

INFO Additionally, if necessary, you can increase the timeout value with the --timeout flag

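At this point I was not sure what to do. It looked as if something related to liqo-webhook was failing, and I also noticed the hint "One or more pods failed to start (e.g., they are in the ImagePullBackOff status)".

So I ran kubectl get pods -A and found that the image for liqo-webhook-certificate-patch-pre-dxqj9 had not been pulled: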

root@liqo:~/liqo/examples/quick-start# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-prq94 1/1 Running 0 63m

kube-system coredns-76f75df574-xljfr 1/1 Running 0 63m

kube-system etcd-rome-control-plane 1/1 Running 0 63m

kube-system kindnet-5g85h 1/1 Running 0 63m

kube-system kindnet-74l4j 1/1 Running 0 63m

kube-system kube-apiserver-rome-control-plane 1/1 Running 0 63m

kube-system kube-controller-manager-rome-control-plane 1/1 Running 0 63m

kube-system kube-proxy-86v24 1/1 Running 0 63m

kube-system kube-proxy-9c9df 1/1 Running 0 63m

kube-system kube-scheduler-rome-control-plane 1/1 Running 0 63m

liqo liqo-webhook-certificate-patch-pre-dxqj9 0/1 ImagePullBackOff 0 25m

local-path-storage local-path-provisioner-7577fdbbfb-7vxcq 1/1 Running 0 63m

root@liqo:~/liqo/examples/quick-start# kubectl logs -f -n liqo liqo-webhook-certificate-patch-pre-dxqj9

Error from server (BadRequest): container "create" in pod "liqo-webhook-certificate-patch-pre-dxqj9" is waiting to start: image can't be pulled
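When the pod list is long, the failing pods can be picked out mechanically. This is a minimal sketch that filters the text output of kubectl get pods -A by its STATUS column; pods_with_pull_errors is a name invented for this example, and it assumes the default column layout shown above (NAMESPACE, NAME, READY, STATUS, ...).

```shell
#!/bin/sh
# Read `kubectl get pods -A` output on stdin and print namespace/name for each
# pod whose STATUS column reports an image pull problem.
pods_with_pull_errors() {
    awk '$4 == "ImagePullBackOff" || $4 == "ErrImagePull" { print $1 "/" $2 }'
}

# Example:
#   kubectl get pods -A | pods_with_pull_errors
```

The header line is ignored automatically because its fourth field is the word STATUS.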

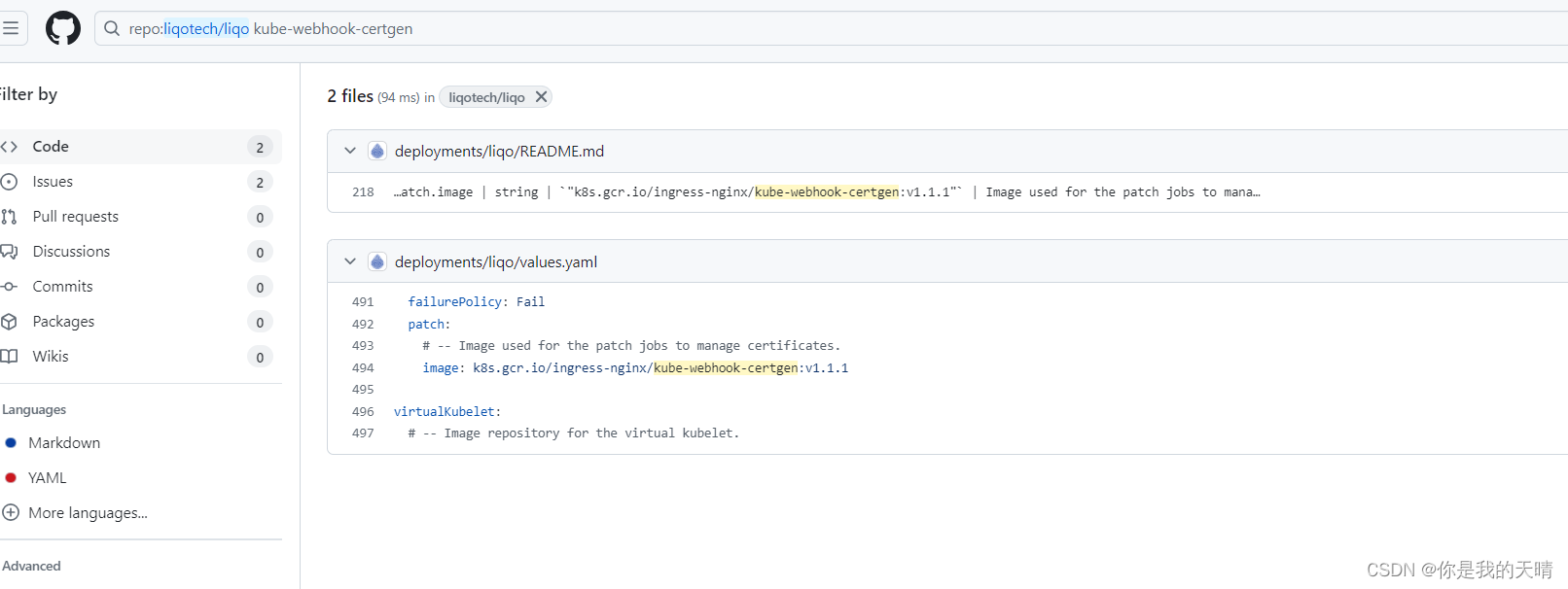

I then searched the official repository for webhook-certificate and found that the pod belongs to a Job.

To inspect it, run kubectl describe job liqo-webhook-certificate-patch --namespace=liqo:

root@liqo:~/liqo/examples/quick-start# kubectl describe job liqo-webhook-certificate-patch --namespace=liqo

Name: liqo-webhook-certificate-patch-pre

Namespace: liqo

Selector: batch.kubernetes.io/controller-uid=54b6ee31-c866-487f-a874-4c71ec3a872c

Labels: app.kubernetes.io/component=webhook-certificate-patch

app.kubernetes.io/instance=liqo-webhook-certificate-patch-pre

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=webhook-certificate-patch-pre

app.kubernetes.io/part-of=liqo

app.kubernetes.io/version=v0.10.2

helm.sh/chart=liqo-v0.10.2

Annotations: helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

Parallelism: 1

Completions: 1

Completion Mode: NonIndexed

Suspend: false

Backoff Limit: 6

TTL Seconds After Finished: 150

Start Time: Fri, 19 Apr 2024 07:04:18 +0000

Pods Statuses: 1 Active (0 Ready) / 0 Succeeded / 0 Failed

Pod Template:

Labels: app.kubernetes.io/component=webhook-certificate-patch

app.kubernetes.io/instance=liqo-webhook-certificate-patch-pre

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=webhook-certificate-patch-pre

app.kubernetes.io/part-of=liqo

app.kubernetes.io/version=v0.10.2

batch.kubernetes.io/controller-uid=54b6ee31-c866-487f-a874-4c71ec3a872c

batch.kubernetes.io/job-name=liqo-webhook-certificate-patch-pre

controller-uid=54b6ee31-c866-487f-a874-4c71ec3a872c

helm.sh/chart=liqo-v0.10.2

job-name=liqo-webhook-certificate-patch-pre

Service Account: liqo-webhook-certificate-patch

Containers:

create:

Image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1

Port: <none>

Host Port: <none>

Args:

create

--host=liqo-controller-manager,liqo-controller-manager.liqo,liqo-controller-manager.liqo.svc,liqo-controller-manager.liqo.svc.cluster.local

--namespace=liqo

--secret-name=liqo-webhook-certs

--cert-name=tls.crt

--key-name=tls.key

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 29m job-controller Created pod: liqo-webhook-certificate-patch-pre-dxqj9

The image turns out to be k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1.
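The image line can also be extracted from the describe output without reading through it by eye. The sketch below is one way to do that; images_from_describe and registry_of are hypothetical helper names, and registry_of assumes the image reference starts with a registry host.

```shell
#!/bin/sh
# Read `kubectl describe job ...` output on stdin and print each container image.
images_from_describe() {
    awk '$1 == "Image:" { print $2 }'
}

# Print the registry host of an image reference (everything before the first "/").
# Assumption: the reference includes a registry host, as k8s.gcr.io/... does.
registry_of() {
    printf '%s\n' "${1%%/*}"
}

# Example:
#   kubectl describe job liqo-webhook-certificate-patch-pre -n liqo | images_from_describe
```

An alternative that avoids text parsing is kubectl get job liqo-webhook-certificate-patch-pre -n liqo -o jsonpath='{.spec.template.spec.containers[*].image}'.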

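Anyone who has installed Kubernetes will recognize the problem: k8s.gcr.io cannot be reached.

I was stuck here for a long time. Kubernetes (kubeadm) lets you specify --image-repository, but liqoctl does not support that flag: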

liqoctl install kind --cluster-name rome --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --verbose

Error: unknown flag: --image-repository

root@liqo:~/liqo/examples/quick-start# kubectl get pods -A

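Next I pulled kube-webhook-certgen from the Aliyun registry, tagged it with the original image name, and deleted the pod so that it would be recreated. That did not work either: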

root@liqo:~/liqo/examples/quick-start# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

v1.1.1: Pulling from google_containers/kube-webhook-certgen

ec52731e9273: Pull complete

b90aa28117d4: Pull complete

Digest: sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

root@liqo:~/liqo/examples/quick-start# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1 k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1

root@liqo:~/liqo/examples/quick-start# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kindest/node v1.29.2 09c50567d34e 2 months ago 956MB

kindest/node v1.25.0 d3da246e125a 19 months ago 870MB

k8s.gcr.io/ingress-nginx/kube-webhook-certgen v1.1.1 c41e9fcadf5a 2 years ago 47.7MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.1.1 c41e9fcadf5a 2 years ago 47.7MB

root@liqo:~/liqo/examples/quick-start# kubectl delete pod liqo-webhook-certificate-patch-pre-fj72j -n liqo

pod "liqo-webhook-certificate-patch-pre-fj72j" deleted

root@liqo:~/liqo/examples/quick-start# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-prq94 1/1 Running 0 159m

kube-system coredns-76f75df574-xljfr 1/1 Running 0 159m

kube-system etcd-rome-control-plane 1/1 Running 0 159m

kube-system kindnet-5g85h 1/1 Running 0 159m

kube-system kindnet-74l4j 1/1 Running 0 159m

kube-system kube-apiserver-rome-control-plane 1/1 Running 0 159m

kube-system kube-controller-manager-rome-control-plane 1/1 Running 0 159m

kube-system kube-proxy-86v24 1/1 Running 0 159m

kube-system kube-proxy-9c9df 1/1 Running 0 159m

kube-system kube-scheduler-rome-control-plane 1/1 Running 0 159m

liqo liqo-webhook-certificate-patch-pre-jk979 0/1 ContainerCreating 0 3s

local-path-storage local-path-provisioner-7577fdbbfb-7vxcq 1/1 Running 0 159m

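In the end the pod still failed with ImagePullBackOff. I do not fully understand why: after tagging, the cluster did not use the image I had pulled and still tried to pull it again. When I installed Kubernetes myself I passed --image-repository directly, so I never had to pull and retag images. The online tutorials that suggest retagging are probably outdated, since Kubernetes now uses containerd directly. For the Kubernetes installation itself, see my other post, "k8s installation: detailed Kubernetes installation process on linux-ubuntu".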

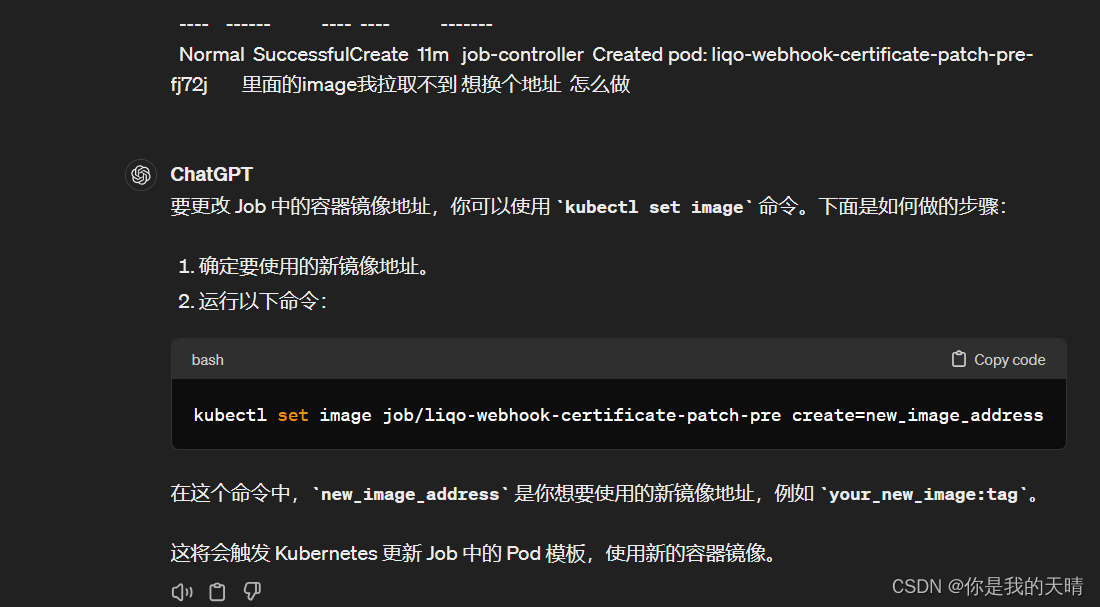

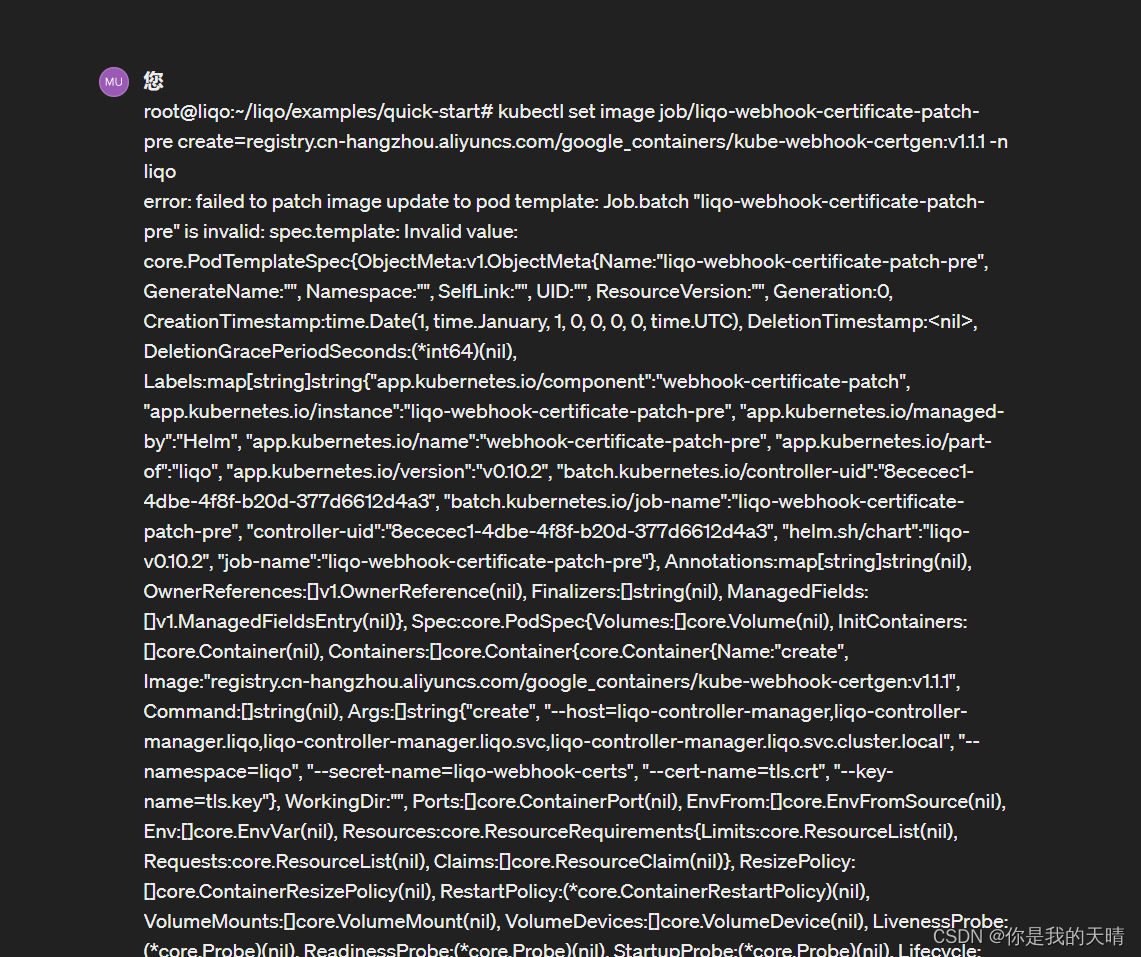

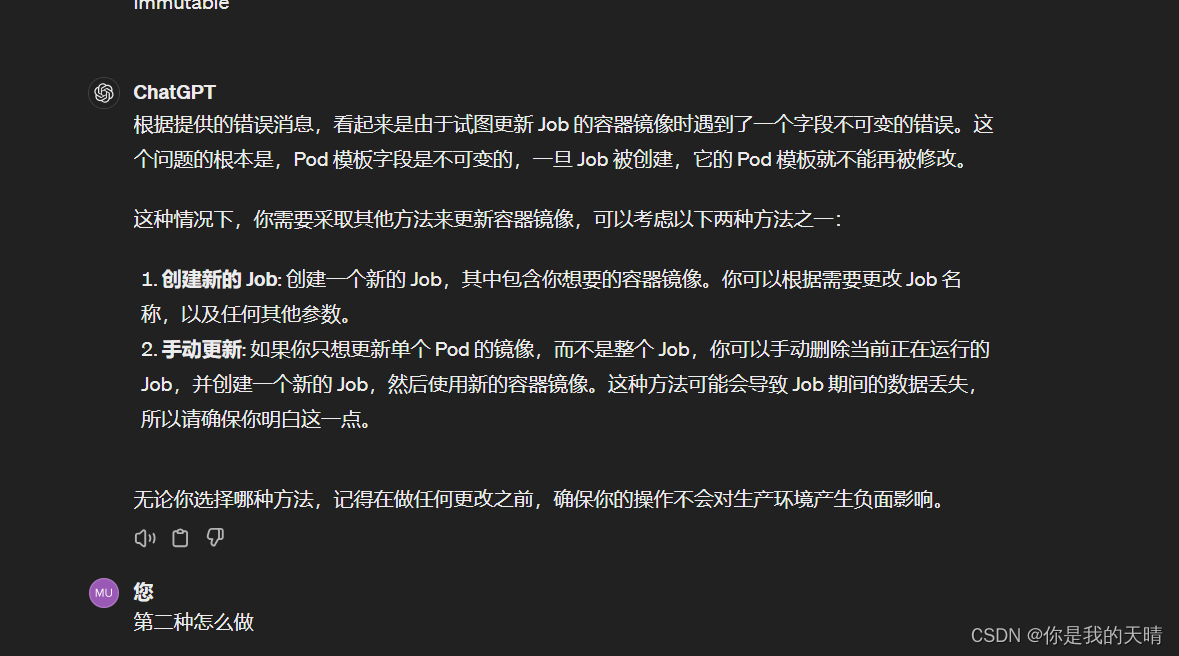

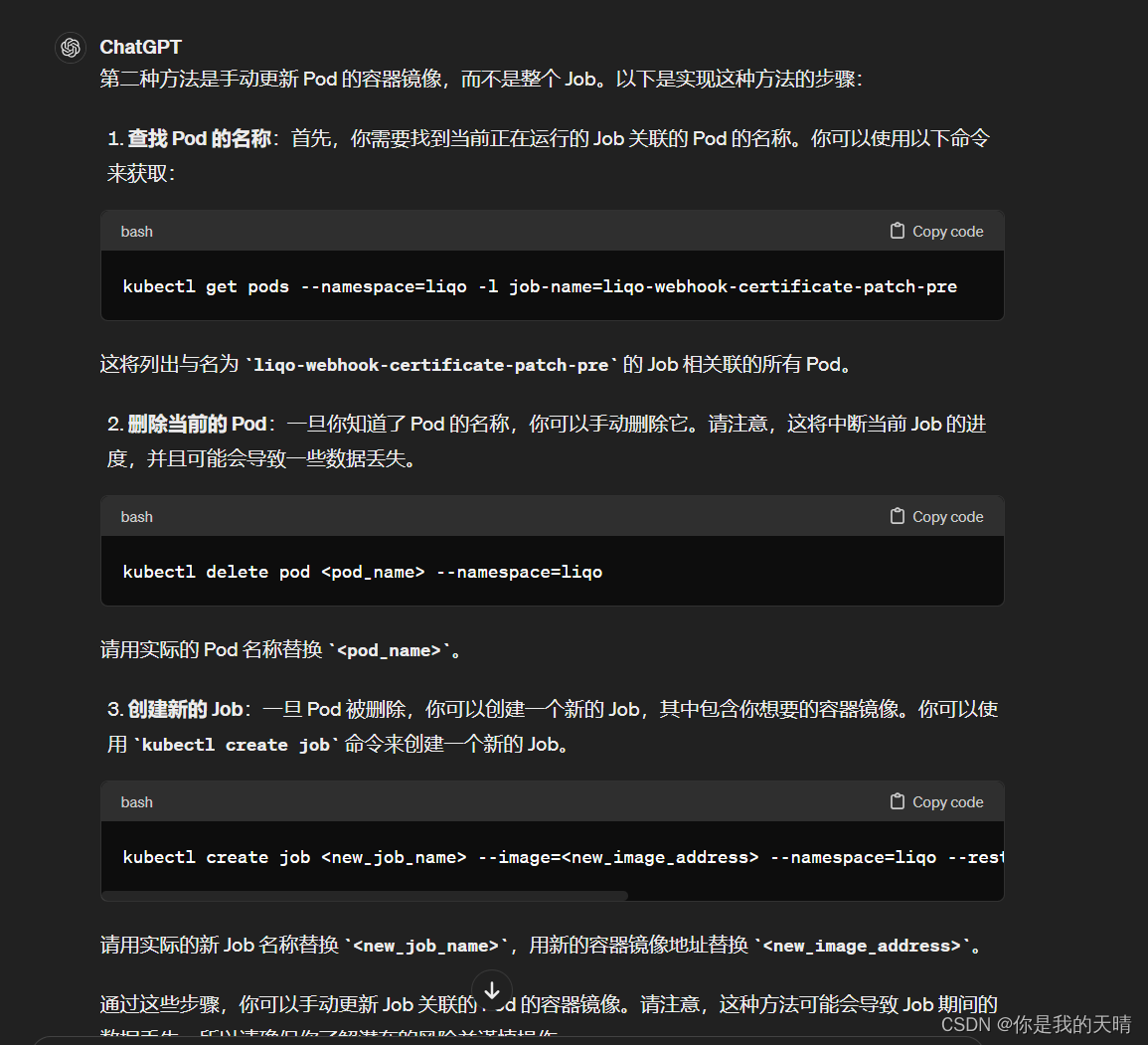
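One likely explanation for why retagging on the host did not help, offered as background rather than something verified in this setup: Kind nodes are themselves Docker containers, and each node runs its own containerd with its own image store, so an image that exists only in the host's Docker daemon is not visible to the kubelet inside the node. Kind ships a kind load docker-image command that copies an image from the host into the nodes. The sketch below wraps it for several clusters; load_image_into_clusters and the KIND_BIN override are invented for this example and are not part of kind.

```shell
#!/bin/sh
# Load an image from the host Docker daemon into the nodes of one or more Kind clusters.
# KIND_BIN is a hypothetical override used only to make dry runs easy (e.g. KIND_BIN=echo).
# Usage: load_image_into_clusters <image> <cluster>...
load_image_into_clusters() {
    image=$1
    shift
    for cluster in "$@"; do
        "${KIND_BIN:-kind}" load docker-image "$image" --name "$cluster" || return 1
    done
}

# Example:
#   load_image_into_clusters k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1 rome milan
```

Whether the pod then starts without pulling depends on its imagePullPolicy, and the image has to be loaded before the install times out, so treat this as something to try rather than a confirmed fix.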

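I then searched for how to change the image of a pod and tried all sorts of things, none of which worked. The pod belongs to a Job, and Kubernetes has so many concepts that I was not clear on this one.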

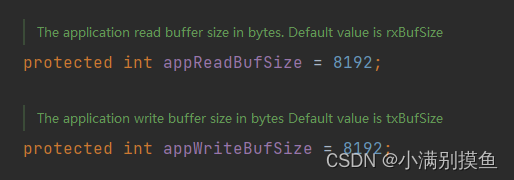

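That was another dead end, because I did not have the Job manifest file. Then it occurred to me that I had downloaded the Liqo source code earlier, so maybe I could find the relevant field in the source and change it there?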

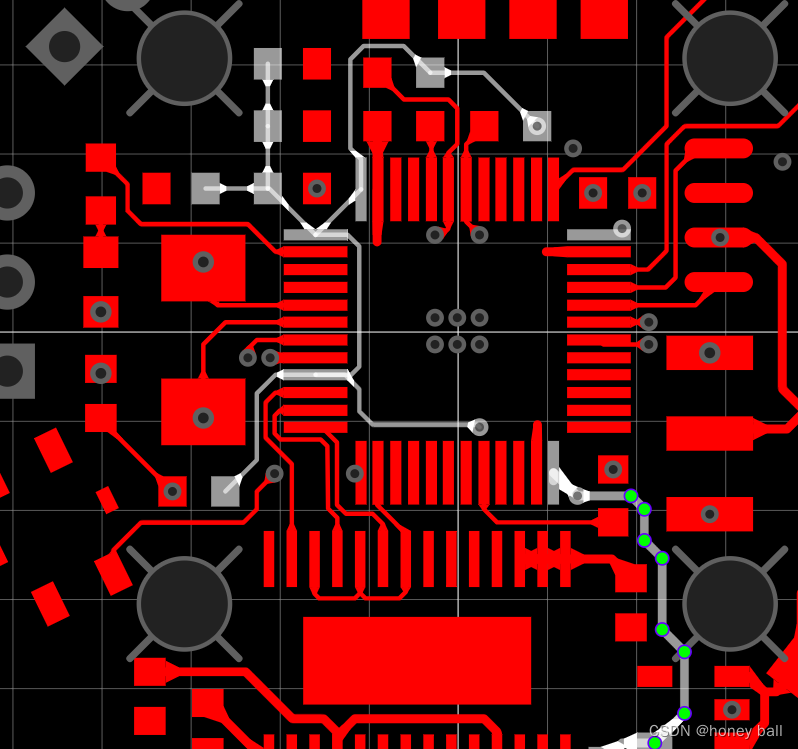

Then I found this:

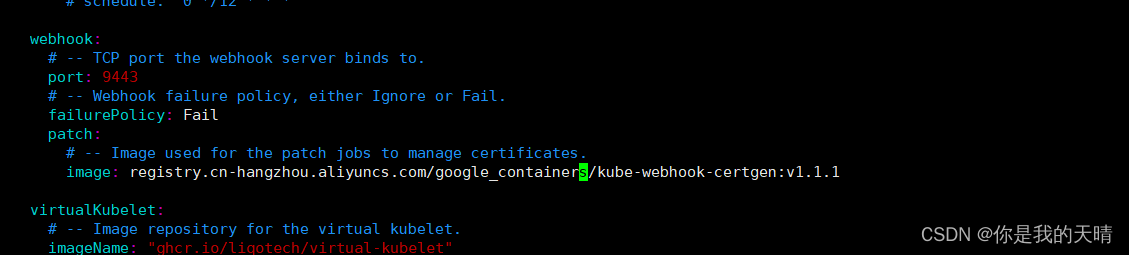

In the end I edited this file directly:

vim /liqo/deployments/liqo/values.yaml

and changed k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1 to registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1.
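Finding the file and making the replacement can both be done from the shell. This is a minimal sketch of the search and the edit only; files_referencing and replace_image are names made up for this example, and it does not show how liqoctl picks up the modified file.

```shell
#!/bin/sh
# List the files under a directory that mention a given image reference.
# Usage: files_referencing <dir> <image>
files_referencing() {
    grep -rlF -- "$2" "$1"
}

# Swap one image reference for another in a single file, keeping a .bak backup.
# Note: sed treats the dots in <old> as "any character", which is harmless here
# but means the match is slightly looser than a literal comparison.
# Usage: replace_image <file> <old> <new>
replace_image() {
    file=$1 old=$2 new=$3
    sed -i.bak "s|${old}|${new}|g" "$file"
}

# Example (run from the root of the cloned liqo repository):
#   files_referencing deployments/liqo k8s.gcr.io/ingress-nginx/kube-webhook-certgen
#   replace_image deployments/liqo/values.yaml \
#     k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1 \
#     registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
```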

Then I removed liqoctl and installed it again. I do not know whether it would have been enough to rerun sudo install -o root -g root -m 0755 liqoctl /usr/local/bin/liqoctl without removing it first.

root@liqo:~# sudo rm $(which liqoctl)

root@liqo:~# liqoctl

bash: /usr/local/bin/liqoctl: No such file or directory

root@liqo:~# rm -rf ~/.liqo

root@liqo:~# sudo install -o root -g root -m 0755 liqoctl /usr/local/bin/liqoctl

root@liqo:~# liqoctl

liqoctl is a CLI tool to install and manage Liqo.

Liqo is a platform to enable dynamic and decentralized resource sharing across

Kubernetes clusters, either on-prem or managed. Liqo allows to run pods on a

remote cluster seamlessly and without any modification of Kubernetes and the

applications. With Liqo it is possible to extend the control and data plane of a

Kubernetes cluster across the cluster's boundaries, making multi-cluster native

and transparent: collapse an entire remote cluster to a local virtual node,

enabling workloads offloading, resource management and cross-cluster communication

compliant with the standard Kubernetes approach.

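Next I recreated the clusters (./setup.sh). This was probably not necessary; I had run a wrong command earlier and broken the cluster, so I simply recreated everything.

Then I ran: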

root@liqo:~/liqo/examples/quick-start# liqoctl install kind --cluster-name rome

INFO Installer initialized

INFO Cluster configuration correctly retrieved

INFO Installation parameters correctly generated

INFO All Set! You can now proceed establishing a peering (liqoctl peer --help for more information)

INFO Make sure to use the same version of Liqo on all remote clusters

It finally worked. Oh My God!

I wanted to take another look at the job:

root@liqo:~/liqo/examples/quick-start# kubectl describe job liqo-webhook-certificate-patch --namespace=liqo

Error from server (NotFound): jobs.batch "liqo-webhook-certificate-patch" not found

root@liqo:~/liqo/examples/quick-start# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-7sgf9 1/1 Running 0 4m8s

kube-system coredns-76f75df574-x55fq 1/1 Running 0 4m8s

kube-system etcd-rome-control-plane 1/1 Running 0 4m24s

kube-system kindnet-b7c84 1/1 Running 0 4m9s

kube-system kindnet-s84l7 1/1 Running 0 4m7s

kube-system kube-apiserver-rome-control-plane 1/1 Running 0 4m24s

kube-system kube-controller-manager-rome-control-plane 1/1 Running 0 4m24s

kube-system kube-proxy-5ptwc 1/1 Running 0 4m9s

kube-system kube-proxy-gpznj 1/1 Running 0 4m7s

kube-system kube-scheduler-rome-control-plane 1/1 Running 0 4m26s

liqo liqo-auth-6bf849d75-ll25k 1/1 Running 0 91s

liqo liqo-controller-manager-7c586975f-d2k6c 1/1 Running 0 92s

liqo liqo-crd-replicator-679cfc85cd-g85nb 1/1 Running 0 91s

liqo liqo-gateway-759f8b7d4d-lsblm 1/1 Running 0 92s

liqo liqo-metric-agent-77d765d9df-v4tp2 1/1 Running 0 92s

liqo liqo-network-manager-dd886d8bc-9st79 1/1 Running 0 92s

liqo liqo-proxy-6d49f7789b-klcp4 1/1 Running 0 92s

liqo liqo-route-6nglb 1/1 Running 0 92s

liqo liqo-route-7z4vc 1/1 Running 0 92s

local-path-storage local-path-provisioner-7577fdbbfb-shcd8 1/1 Running 0 4m8s

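The job is gone, and so is the liqo-webhook-certificate-patch-pre-jk979 pod.

Is the job just an intermediate step that is not shown afterwards, or did my installation fail? The earlier describe output lists helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded, so Helm most likely deleted the job once it had succeeded.

I also do not know whether the Aliyun image is equivalent to the one that is actually required. In any case, registry.cn-hangzhou.aliyuncs.com/google_containers/ingress-nginx/kube-webhook-certgen:v1.1.1 (with ingress-nginx/ added) does not work; I could not pull it with docker either.

Let's keep going; maybe this really was a success.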

Install Liqo on the milan cluster as well:

root@liqo:~/liqo/examples/quick-start# liqoctl install kind --cluster-name milan --kubeconfig "$KUBECONFIG_MILAN"

INFO Installer initialized

INFO Cluster configuration correctly retrieved

INFO Installation parameters correctly generated

INFO All Set! You can now proceed establishing a peering (liqoctl peer --help for more information)

INFO Make sure to use the same version of Liqo on all remote clusters

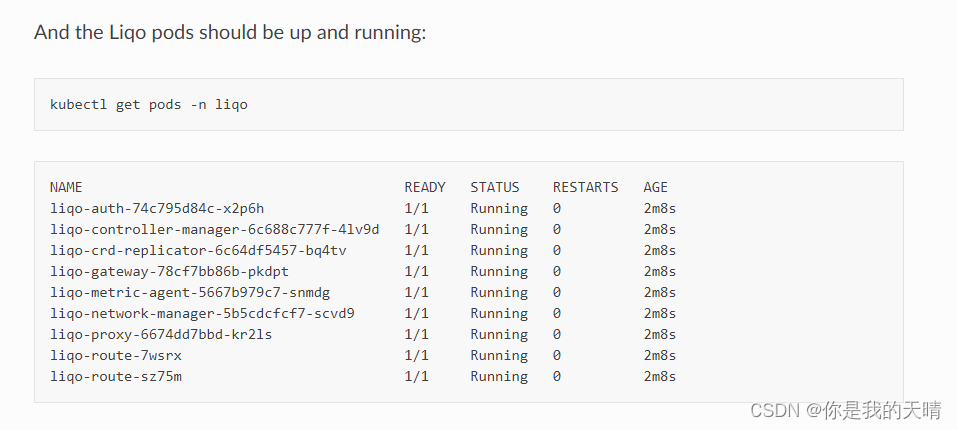

The Liqo pods are up and running:

root@liqo:~/liqo/examples/quick-start# kubectl get pods -n liqo

NAME READY STATUS RESTARTS AGE

liqo-auth-6bf849d75-ll25k 1/1 Running 0 53m

liqo-controller-manager-7c586975f-d2k6c 1/1 Running 0 53m

liqo-crd-replicator-679cfc85cd-g85nb 1/1 Running 0 53m

liqo-gateway-759f8b7d4d-lsblm 1/1 Running 0 53m

liqo-metric-agent-77d765d9df-v4tp2 1/1 Running 0 53m

liqo-network-manager-dd886d8bc-9st79 1/1 Running 0 53m

liqo-proxy-6d49f7789b-klcp4 1/1 Running 0 53m

liqo-route-6nglb 1/1 Running 0 53m

liqo-route-7z4vc 1/1 Running 0 53m

root@liqo:~/liqo/examples/quick-start#

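The official documentation does not show a liqo-webhook-certificate-patch pod either, so this should be fine.

In addition, you can check the installation status and the main Liqo configuration parameters with the following command: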

root@liqo:~/liqo/examples/quick-start# liqoctl status

┌─ Namespace existence check ──────────────────────────────────────────────────────┐

| INFO ✔ liqo control plane namespace liqo exists |

└──────────────────────────────────────────────────────────────────────────────────┘

┌─ Control plane check ────────────────────────────────────────────────────────────┐

| Deployment |

| liqo-controller-manager: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-crd-replicator: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-metric-agent: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-auth: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-proxy: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-network-manager: Desired: 1, Ready: 1/1, Available: 1/1 |

| liqo-gateway: Desired: 1, Ready: 1/1, Available: 1/1 |

| DaemonSet |

| liqo-route: Desired: 2, Ready: 2/2, Available: 2/2 |

└──────────────────────────────────────────────────────────────────────────────────┘

┌─ Local cluster information ──────────────────────────────────────────────────────┐

| Cluster identity |

| Cluster ID: 06b2ab0f-5dd0-42cb-aaca-73f92741b740 |

| Cluster name: rome |

| Cluster labels |

| liqo.io/provider: kind |

| Configuration |

| Version: v0.10.2 |

| Network |

| Pod CIDR: 10.200.0.0/16 |

| Service CIDR: 10.80.0.0/12 |

| External CIDR: 10.201.0.0/16 |

| Endpoints |

| Network gateway: udp://172.18.0.2:30620 |

| Authentication: https://172.18.0.3:32395 |

| Kubernetes API server: https://172.18.0.3:6443 |

└──────────────────────────────────────────────────────────────────────────────────┘

root@liqo:~/liqo/examples/quick-start#

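It is a joy to see output like this.

Peering the two clusters

Peering means establishing an interconnection between the two clusters. In this example, since the two API servers are mutually reachable (that is, the two Kubernetes clusters can reach each other), you will use the out-of-band peering method.

First, get the peer command from the milan cluster: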

root@liqo:~/liqo/examples/quick-start# liqoctl generate peer-command --kubeconfig "$KUBECONFIG_MILAN"

INFO Peering information correctly retrieved

Execute this command on a *different* cluster to enable an outgoing peering with the current cluster:

liqoctl peer out-of-band milan --auth-url https://172.18.0.4:31720 --cluster-id 0422b752-25e5-42d0-acbf-1d584b09d1a6 --auth-token dfd35fcb10d65c142738261c17d94724bd3bf2dd54e14ac344e22a0cee27b58a084f452ead3df1857e2bb9dd35d0d6ba5b03b7e507f220eff4c71785a42e7cae

root@liqo:~/liqo/examples/quick-start#

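rome: the local cluster, where you will deploy and control applications.

milan: the remote cluster, to which part of the workload will be offloaded.

So does that mean pod-related commands executed on the rome cluster end up running on milan?

Second, copy and paste the command into the rome cluster: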

root@liqo:~/liqo/examples/quick-start# liqoctl peer out-of-band milan --auth-url https://172.18.0.4:31720 --cluster-id 0422b752-25e5-42d0-acbf-1d584b09d1a6 --auth-token dfd35fcb10d65c142738261c17d94724bd3bf2dd54e14ac344e22a0cee27b58a084f452ead3df1857e2bb9dd35d0d6ba5b03b7e507f220eff4c71785a42e7cae

INFO Peering enabled

INFO Authenticated to cluster "milan"

INFO Outgoing peering activated to the remote cluster "milan"

INFO Network established to the remote cluster "milan"

INFO Node created for remote cluster "milan"

INFO Peering successfully established

root@liqo:~/liqo/examples/quick-start#

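Now the Liqo control plane in the rome cluster will contact the provided authentication endpoint, presenting the token to the milan cluster in order to obtain its Kubernetes identity.

You can check the peering status by running: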

root@liqo:~/liqo/examples/quick-start# kubectl get foreignclusters

NAME TYPE OUTGOING PEERING INCOMING PEERING NETWORKING AUTHENTICATION AGE

milan OutOfBand Established None Established Established 85s

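The output indicates that the cross-cluster network tunnel has been established and that an outgoing peering is currently active (i.e., the rome cluster can offload workloads to the milan cluster, but not vice versa).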
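The peering may take a moment to reach the Established state, so a script that continues straight away can race ahead of it. Below is a minimal sketch of a generic polling helper; wait_until is a name invented for this example and is not provided by kubectl or liqoctl.

```shell
#!/bin/sh
# Run a command roughly once per second until it succeeds or the attempts run out.
# Usage: wait_until <max_attempts> <command> [args...]
wait_until() {
    remaining=$1
    shift
    while ! "$@"; do
        remaining=$((remaining - 1))
        [ "$remaining" -gt 0 ] || return 1
        sleep 1
    done
}

# Example: wait up to about a minute for the milan peering to show Established.
#   wait_until 60 sh -c 'kubectl get foreignclusters milan --no-headers | grep -q Established'
```

The grep in the example matches any column that reads Established, so it is a coarse check; the table printed by kubectl get foreignclusters remains the more precise view.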

At the same time, in addition to the physical nodes, you should also see a virtual node (liqo-milan):

root@liqo:~/liqo/examples/quick-start# kubectl get nodes

NAME STATUS ROLES AGE VERSION

liqo-milan Ready agent 2m46s v1.29.2

rome-control-plane Ready control-plane 71m v1.29.2

rome-worker Ready <none> 71m v1.29.2

In addition, you can check the peering status and retrieve more advanced information using:

liqoctl status peer milan

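Deploying a service

Now you can deploy a standard Kubernetes application in the multi-cluster environment exactly as you would in a single-cluster scenario (i.e., without modifications).

See you in the next post.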
