Abstract

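This post introduces a downsampling module I developed myself. It is a general-purpose improvement: it can be used in the backbone of a classification network, and also in segmentation and super-resolution tasks. Some readers have already used it to improve ConvNeXt models with very good results, and in my view, combined with a few other improvements, it is strong enough for a paper at a top venue such as CVPR or ECCV.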

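In this post I use the module to improve YoloV9. On the test set reported below, precision rises from 0.878 to 0.952 and mAP50-95 from 0.732 to 0.738.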

The self-developed downsampling module and its variants

First improvement method

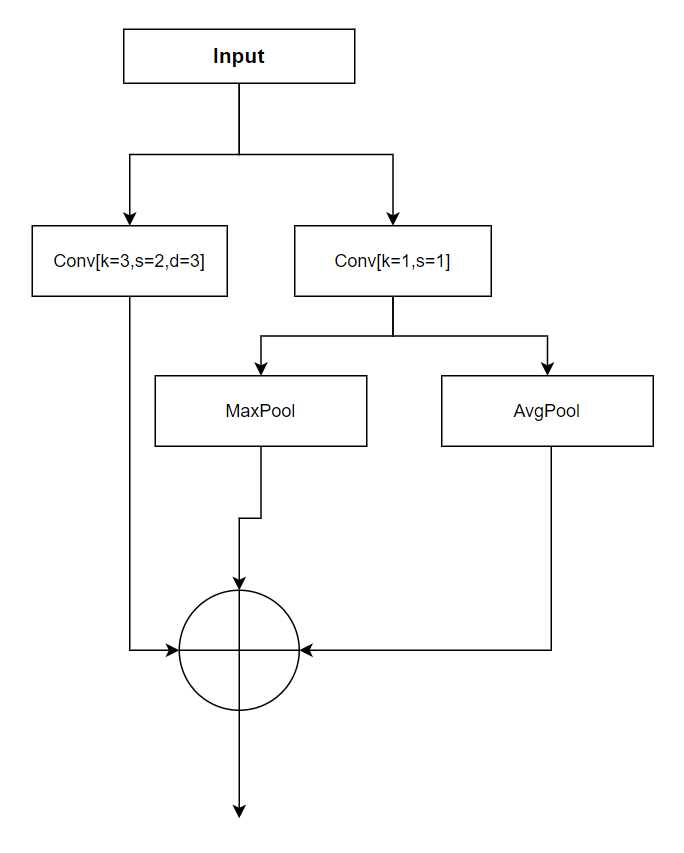

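The input is processed by two branches. One branch applies a convolution (3×3, stride 2, dilation 3). The other branch first applies a 1×1 convolution and then splits the result along the channel dimension into two halves: one half goes through MaxPool and the other through AvgPool (both with kernel 3, stride 2, padding 1). Finally, the three outputs are concatenated along the channel dimension. The code is as follows: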

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        # actual (effective) kernel size of a dilated kernel
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation (used after BatchNorm has been fused into the conv)."""
        return self.act(self.conv(x))


class DownSimper(nn.Module):
    """Downsampling block: dilated stride-2 conv branch + MaxPool/AvgPool branch, concatenated."""

    def __init__(self, c1, c2):
        super().__init__()
        self.c = c2 // 2
        # Branch 1: 3x3 conv, stride 2, dilation 3 (effective kernel 7, autopad gives padding 3)
        self.cv1 = Conv(c1, self.c, 3, 2, d=3)
        # Branch 2: 1x1 conv to c2 // 2 channels, later split between MaxPool and AvgPool
        self.cv2 = Conv(c1, self.c, 1, 1, 0)

    def forward(self, x):
        x1 = self.cv1(x)
        x = self.cv2(x)
        x2, x3 = x.chunk(2, 1)  # split channels into two halves
        x2 = F.max_pool2d(x2, 3, 2, 1)  # kernel 3, stride 2, padding 1
        x3 = F.avg_pool2d(x3, 3, 2, 1)
        # c2 // 2 (conv) + c2 // 2 (pools) = c2 channels for even c2
        return torch.cat((x1, x2, x3), 1)
```
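To check that the three branches line up before `torch.cat`, the sketch below reproduces their shape arithmetic with plain `torch.nn.functional` calls instead of the `Conv` class. It is an illustration under stated assumptions: `branch_shapes` is a hypothetical helper, the weights are random placeholders, and BatchNorm and SiLU are omitted because they do not change tensor shapes.

```python
import torch
import torch.nn.functional as F


def branch_shapes(h, w, c_in=16, c2=64):
    """Return the output shapes of the three DownSimper-style branches and of their concat.

    Hypothetical helper: raw functional ops with random weights, no BN/activation,
    since only shapes are being checked.
    """
    x = torch.randn(1, c_in, h, w)
    c = c2 // 2
    # Branch 1: 3x3 conv, stride 2, dilation 3, padding 3 (what autopad(3, None, 3) returns)
    w1 = torch.randn(c, c_in, 3, 3)
    x1 = F.conv2d(x, w1, stride=2, padding=3, dilation=3)
    # Branch 2: 1x1 conv to c channels, then split the channels into two halves
    w2 = torch.randn(c, c_in, 1, 1)
    x2, x3 = F.conv2d(x, w2).chunk(2, 1)
    x2 = F.max_pool2d(x2, 3, 2, 1)  # kernel 3, stride 2, padding 1
    x3 = F.avg_pool2d(x3, 3, 2, 1)
    out = torch.cat((x1, x2, x3), 1)
    return tuple(x1.shape), tuple(x2.shape), tuple(x3.shape), tuple(out.shape)


print(branch_shapes(80, 80))
# ((1, 32, 40, 40), (1, 16, 40, 40), (1, 16, 40, 40), (1, 64, 40, 40))
```

All three branches halve the spatial size, and the channel counts add back up to `c2`, which is what allows the concatenation.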

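Notes on the structure diagram:

In the convolution branch on the left, d=3 means a dilated (atrous) convolution is used, which gives a larger receptive field. With k=3 and d=3 the kernel spans a 7×7 window (d·(k−1)+1 = 3·2+1 = 7) while still having only 3×3 = 9 taps.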
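Working through the arithmetic for this branch: the effective kernel size of a dilated convolution, the padding that `autopad` derives from it, and the output-size formula from PyTorch's `nn.Conv2d` documentation give the following. The symbols $k_{\text{eff}}$, $p$, $s$ and $H$ (input height, stride $s = 2$) are introduced here for illustration.

$$
k_{\text{eff}} = d\,(k-1) + 1 = 3 \times (3-1) + 1 = 7, \qquad p = \left\lfloor \tfrac{k_{\text{eff}}}{2} \right\rfloor = 3
$$

$$
H_{\text{out}}^{\text{conv}} = \left\lfloor \frac{H + 2p - k_{\text{eff}}}{s} \right\rfloor + 1 = \left\lfloor \frac{H - 1}{2} \right\rfloor + 1,
\qquad
H_{\text{out}}^{\text{pool}} = \left\lfloor \frac{H + 2 \cdot 1 - 3}{2} \right\rfloor + 1 = \left\lfloor \frac{H - 1}{2} \right\rfloor + 1
$$

The two expressions are identical, so the convolution branch and the pooling branch always produce the same spatial size and can be concatenated along the channel axis; for $H = 640$ both give 320.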

Official YoloV9 test results (baseline)

yolov9 summary: 580 layers, 60567520 parameters, 0 gradients, 264.3 GFLOPs

| Class | Images | Instances | P (precision) | R (recall) | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| all | 230 | 1412 | 0.878 | 0.991 | 0.989 | 0.732 |
| c17 | 230 | 131 | 0.92 | 0.992 | 0.994 | 0.797 |
| c5 | 230 | 68 | 0.828 | 1 | 0.992 | 0.807 |
| helicopter | 230 | 43 | 0.895 | 0.977 | 0.969 | 0.634 |
| c130 | 230 | 85 | 0.955 | 0.999 | 0.994 | 0.684 |
| f16 | 230 | 57 | 0.839 | 0.965 | 0.966 | 0.689 |
| b2 | 230 | 2 | 1 | 0.978 | 0.995 | 0.647 |
| other | 230 | 86 | 0.91 | 0.942 | 0.957 | 0.525 |
| b52 | 230 | 70 | 0.917 | 0.971 | 0.979 | 0.806 |
| kc10 | 230 | 62 | 0.958 | 0.984 | 0.987 | 0.826 |
| command | 230 | 40 | 0.964 | 1 | 0.995 | 0.815 |
| f15 | 230 | 123 | 0.939 | 0.995 | 0.995 | 0.702 |
| kc135 | 230 | 91 | 0.949 | 0.989 | 0.978 | 0.691 |
| a10 | 230 | 27 | 0.863 | 0.963 | 0.982 | 0.458 |
| b1 | 230 | 20 | 0.926 | 1 | 0.995 | 0.712 |
| aew | 230 | 25 | 0.929 | 1 | 0.993 | 0.812 |
| f22 | 230 | 17 | 0.835 | 1 | 0.995 | 0.706 |
| p3 | 230 | 105 | 0.97 | 1 | 0.995 | 0.804 |
| p8 | 230 | 1 | 0.566 | 1 | 0.995 | 0.697 |
| f35 | 230 | 32 | 0.908 | 1 | 0.995 | 0.547 |
| f18 | 230 | 125 | 0.956 | 0.992 | 0.993 | 0.828 |
| v22 | 230 | 41 | 0.921 | 1 | 0.995 | 0.682 |
| su-27 | 230 | 31 | 0.925 | 1 | 0.994 | 0.832 |
| il-38 | 230 | 27 | 0.899 | 1 | 0.995 | 0.816 |
| tu-134 | 230 | 1 | 0.346 | 1 | 0.995 | 0.895 |
| su-33 | 230 | 2 | 0.96 | 1 | 0.995 | 0.747 |
| an-70 | 230 | 2 | 0.718 | 1 | 0.995 | 0.796 |
| tu-22 | 230 | 98 | 0.912 | 1 | 0.995 | 0.804 |

Improvement method

Test results

yolov9 summary: 595 layers, 58708576 parameters, 0 gradients, 274.0 GFLOPs

| Class | Images | Instances | P (precision) | R (recall) | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| all | 230 | 1412 | 0.952 | 0.974 | 0.99 | 0.738 |
| c17 | 230 | 131 | 0.981 | 0.992 | 0.995 | 0.832 |
| c5 | 230 | 68 | 0.963 | 0.985 | 0.995 | 0.847 |
| helicopter | 230 | 43 | 0.968 | 0.93 | 0.972 | 0.635 |
| c130 | 230 | 85 | 0.988 | 0.996 | 0.995 | 0.669 |
| f16 | 230 | 57 | 0.976 | 0.947 | 0.975 | 0.687 |
| b2 | 230 | 2 | 0.767 | 1 | 0.995 | 0.516 |
| other | 230 | 86 | 0.981 | 0.907 | 0.968 | 0.573 |
| b52 | 230 | 70 | 0.969 | 0.971 | 0.985 | 0.812 |
| kc10 | 230 | 62 | 0.986 | 0.984 | 0.989 | 0.835 |
| command | 230 | 40 | 0.988 | 1 | 0.995 | 0.82 |
| f15 | 230 | 123 | 0.965 | 0.992 | 0.989 | 0.697 |
| kc135 | 230 | 91 | 0.984 | 0.989 | 0.981 | 0.725 |
| a10 | 230 | 27 | 1 | 0.794 | 0.976 | 0.495 |
| b1 | 230 | 20 | 0.979 | 1 | 0.995 | 0.682 |
| aew | 230 | 25 | 0.944 | 1 | 0.995 | 0.802 |
| f22 | 230 | 17 | 1 | 0.881 | 0.992 | 0.717 |
| p3 | 230 | 105 | 0.981 | 0.992 | 0.995 | 0.81 |
| p8 | 230 | 1 | 0.756 | 1 | 0.995 | 0.697 |
| f35 | 230 | 32 | 0.99 | 0.938 | 0.982 | 0.55 |
| f18 | 230 | 125 | 0.981 | 0.992 | 0.991 | 0.829 |
| v22 | 230 | 41 | 0.99 | 1 | 0.995 | 0.684 |
| su-27 | 230 | 31 | 0.985 | 1 | 0.995 | 0.849 |
| il-38 | 230 | 27 | 0.987 | 1 | 0.995 | 0.84 |
| tu-134 | 230 | 1 | 0.756 | 1 | 0.995 | 0.895 |
| su-33 | 230 | 2 | 0.99 | 1 | 0.995 | 0.747 |
| an-70 | 230 | 2 | 0.838 | 1 | 0.995 | 0.848 |
| tu-22 | 230 | 98 | 1 | 1 | 0.995 | 0.832 |
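To put the two runs side by side, the short script below computes absolute and relative changes from the figures printed above (the two "yolov9 summary" lines and the "all" rows). The dictionary layout and the `compare` helper are illustrative choices, not part of YoloV9.

```python
# Figures copied from the two "yolov9 summary" lines and the "all" rows above.
baseline = {"layers": 580, "params": 60_567_520, "GFLOPs": 264.3,
            "P": 0.878, "R": 0.991, "mAP50": 0.989, "mAP50-95": 0.732}
improved = {"layers": 595, "params": 58_708_576, "GFLOPs": 274.0,
            "P": 0.952, "R": 0.974, "mAP50": 0.990, "mAP50-95": 0.738}


def compare(base, new):
    """Return {metric: (absolute change, relative change in %)} for every key in base."""
    result = {}
    for key, old in base.items():
        diff = new[key] - old
        result[key] = (diff, 100.0 * diff / old)
    return result


for key, (diff, pct) in compare(baseline, improved).items():
    print(f"{key:>9}: {diff:+.4f} ({pct:+.2f}%)")
```

On these figures, the parameter count falls by about 3.1% while GFLOPs rise by about 3.7%; on the "all" row, precision rises by 0.074 and mAP50-95 by 0.006, while recall falls by 0.017.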