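Llama is currently one of the most popular open-source large language models; its strong performance and wide range of applications have made it a focus of industry attention. Released by Meta AI as an open and efficient foundation language model, Llama 2 is available in three sizes, 7B, 13B, and 70B (70 billion) parameters, to suit different scenarios and needs.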

Professor Andrew Ng has launched a new Llama course that aims to help learners fully understand and master the Llama large language model.

Course link: DLAI - Prompt Engineering with Llama 2

Key notes

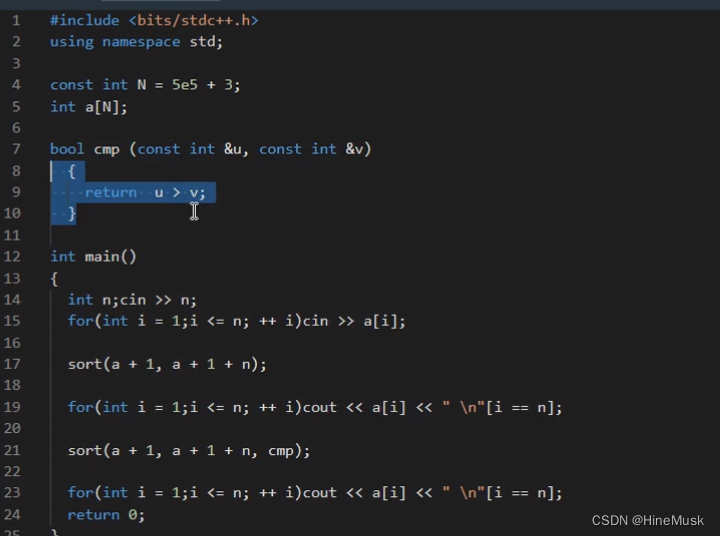

Chat vs. base models

    # llama() is the helper function provided by the course notebooks.

    # chat model
    prompt = "What is the capital of France?"
    response = llama(prompt,
                     verbose=True,
                     model="togethercomputer/llama-2-7b-chat")

    # base model
    prompt = "What is the capital of France?"
    response = llama(prompt,
                     verbose=True,
                     add_inst=False,
                     model="togethercomputer/llama-2-7b")

The prompt sent to the chat model is wrapped in [INST] [/INST] tags, whereas the prompt sent to the base model with add_inst=False carries no tags.

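So what is the difference in practice?

It turns out that the chat model returns a single answer. The base model, when the prompt includes the [INST] tags, returns the same answer repeated over and over; without the [INST] tags, it returns a list of similar questions instead.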
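To make the tagging step concrete, here is a minimal sketch of how a helper might wrap a prompt before sending it to the model. The function name `format_prompt` is hypothetical and is not the course's actual helper; the only thing taken from the notes above is that the tags are added unless `add_inst=False` is passed.

```python
def format_prompt(prompt: str, add_inst: bool = True) -> str:
    """Hypothetical helper: wrap the prompt in Llama 2 instruction tags.

    Chat models expect the [INST] ... [/INST] wrapper, so it is added
    by default; pass add_inst=False when targeting a base model.
    """
    if add_inst:
        return f"[INST]{prompt}[/INST]"
    return prompt


question = "What is the capital of France?"
print(format_prompt(question))                  # text sent to a chat model
print(format_prompt(question, add_inst=False))  # text sent to a base model
```

A base model only continues the text it is given, which is one plausible reason it produces similar questions rather than an answer when the tags are absent.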

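Shot prompting: prompting with examples

Zero-shot prompting, one-shot prompting, and few-shot prompting

As the names suggest, a zero-shot prompt contains no examples and simply asks the question, a one-shot prompt gives one example, and a few-shot prompt gives several. A key strength of large language models is that even few-shot prompting usually needs only a handful of examples, unlike traditional CV and NLP models, which need dozens or even hundreds of examples to reach good results.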
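The three variants differ only in how many labeled examples precede the question, which the sketch below tries to show. The helper `build_shot_prompt` is a hypothetical name introduced for illustration; the message texts are the ones used in the course examples.

```python
def build_shot_prompt(examples, message):
    """Hypothetical helper: build a zero-, one-, or few-shot sentiment prompt.

    examples: list of (message, sentiment) pairs. An empty list gives a
    zero-shot prompt, one pair a one-shot prompt, several a few-shot prompt.
    """
    lines = []
    for text, sentiment in examples:
        lines.append(f"Message: {text}")
        lines.append(f"Sentiment: {sentiment}")
    # The final message is left unlabeled for the model to complete.
    lines.append(f"Message: {message}")
    lines.append("Sentiment: ?")
    return "\n".join(lines)


examples = [
    ("Hi Dad, you're 20 minutes late to my piano recital!", "Negative"),
    ("Can't wait to order pizza for dinner tonight", "Positive"),
]
target = "Hi Amit, thanks for the thoughtful birthday card!"

zero_shot = build_shot_prompt([], target)
one_shot = build_shot_prompt(examples[:1], target)
few_shot = build_shot_prompt(examples, target)
print(few_shot)
```

Any of the three strings could then be passed to the model call in place of a hand-written prompt.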

Zero-shot prompting

    prompt = """
    Message: Hi Amit, thanks for the thoughtful birthday card!
    Sentiment: ?
    """
    response = llama(prompt)
    print(response)

Few-shot prompting

    prompt = """
    Message: Hi Dad, you're 20 minutes late to my piano recital!
    Sentiment: Negative
    Message: Can't wait to order pizza for dinner tonight
    Sentiment: Positive
    Message: Hi Amit, thanks for the thoughtful birthday card!
    Sentiment: ?
    """
    response = llama(prompt)
    print(response)

Role prompting

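A role gives the LLM context about the type of answer that is wanted.

Llama 2 usually gives more consistent responses when it is provided with a role.

Without a role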

    prompt = """
    How can I answer this question from my friend:
    What is the meaning of life?
    """
    response = llama(prompt)
    print(response)

With a role

    role = """
    Your role is a life coach \
    who gives advice to people about living a good life.\
    You attempt to provide unbiased advice.
    You respond in the tone of an English pirate.
    """
    prompt = f"""
    {role}
    How can I answer this question from my friend:
    What is the meaning of life?
    """
    response = llama(prompt)
    print(response)
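If the same role is reused across several questions, the composition step can be factored out. The following is only a sketch: `with_role` is a hypothetical helper name, and the role and question text are taken from the example above.

```python
def with_role(role: str, question: str) -> str:
    """Hypothetical helper: prepend a role description to a question."""
    # Strip stray whitespace so the two parts are separated by one blank line.
    return f"{role.strip()}\n\n{question.strip()}"


role = (
    "Your role is a life coach who gives advice to people about living a good life. "
    "You attempt to provide unbiased advice. "
    "You respond in the tone of an English pirate."
)
question = (
    "How can I answer this question from my friend:\n"
    "What is the meaning of life?"
)

prompt = with_role(role, question)
print(prompt)
```

Keeping the role in its own variable makes it easy to swap in a different persona and compare how the responses change.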