Follow my CSDN blog: https://spike.blog.csdn.net/

Article URL: https://blog.csdn.net/caroline_wendy/article/details/137569332

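Kubeflow's PyTorchJob is a Kubernetes custom resource for running PyTorch training jobs on Kubernetes. It is part of the Kubeflow components, has reached stable status, and is implemented in the training-operator. A PyTorchJob lets you describe, in a configuration file, how to launch PyTorch model training, either distributed or on a single machine.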

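Note that by default a PyTorchJob does not work in a user namespace because of Istio's automatic sidecar injection. To get it running, add the annotation sidecar.istio.io/inject: "false" to the PyTorchJob pods or to the namespace to disable injection.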
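Because a forgotten annotation only shows up as a job that never runs, it can be worth checking a manifest before submitting it. The sketch below is a minimal illustration, assuming the manifest has already been loaded into Python dicts (for example with a YAML parser); the function names are hypothetical. It only inspects the pod-level annotation, not the namespace-level alternative.

```python
from typing import Any, List, Mapping

ISTIO_INJECT = "sidecar.istio.io/inject"


def istio_injection_disabled(pod_template: Mapping[str, Any]) -> bool:
    """True if the pod template carries sidecar.istio.io/inject: "false".

    Only the pod-level annotation is checked; disabling injection on the
    namespace instead is not detected here.
    """
    annotations = pod_template.get("metadata", {}).get("annotations", {})
    # Kubernetes annotation values are strings, so compare with "false", not False.
    return annotations.get(ISTIO_INJECT) == "false"


def replicas_missing_annotation(pytorchjob: Mapping[str, Any]) -> List[str]:
    """Replica types (Master, Worker, ...) whose pods would still get a sidecar."""
    specs = pytorchjob["spec"]["pytorchReplicaSpecs"]
    return [role for role, spec in specs.items()
            if not istio_injection_disabled(spec["template"])]


if __name__ == "__main__":
    job = {"spec": {"pytorchReplicaSpecs": {
        "Master": {"template": {"metadata": {"annotations": {ISTIO_INJECT: "false"}}}},
        "Worker": {"template": {"metadata": {}}},
    }}}
    print(replicas_missing_annotation(job))  # ['Worker']
```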

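In the PyTorch Lightning framework, multi-node multi-GPU training is configured through the strategy, for example the DDP (Distributed Data Parallel) strategy:

- For multi-node multi-GPU training, set a fixed random seed.

- Set the training strategy to DDPStrategy.

- In pl.Trainer(), set strategy, num_nodes (number of nodes) and devices (number of GPUs per node).

That is: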

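```python
import pytorch_lightning as pl
from pytorch_lightning import seed_everything
from pytorch_lightning.strategies import DeepSpeedStrategy, DDPStrategy

# args is assumed to be the script's parsed command-line arguments (e.g. from argparse)

# Multi-node multi-GPU: set a fixed random seed
seed_everything(args.seed)

# DeepSpeed strategy
# strategy = DeepSpeedStrategy(config=args.deepspeed_config_path)

# DDP strategy
strategy = DDPStrategy(find_unused_parameters=False)

# num_nodes is the number of nodes; devices is the number of GPUs per node and can be "auto"
trainer = pl.Trainer(
    accelerator="gpu",
    # ...
    strategy=strategy,  # multi-node multi-GPU configuration
    num_nodes=args.num_nodes,  # number of nodes
    devices="auto",  # GPUs per node
)
```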
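DDP starts one process per GPU, so the world size is num_nodes times the GPUs per node, and each process's global rank follows from its node rank and local (per-node) rank. The sketch below shows that usual mapping with hypothetical helper names (they are not Lightning APIs); with devices="auto", the per-node count is whatever Lightning detects on each node, which is 1 in the n8g1 job configured later.

```python
def world_size(num_nodes: int, devices_per_node: int) -> int:
    """Total number of DDP processes: one per GPU on every node."""
    if num_nodes < 1 or devices_per_node < 1:
        raise ValueError("num_nodes and devices_per_node must be >= 1")
    return num_nodes * devices_per_node


def global_rank(node_rank: int, local_rank: int, devices_per_node: int) -> int:
    """Global rank of the process driving GPU local_rank on node node_rank."""
    if node_rank < 0 or not 0 <= local_rank < devices_per_node:
        raise ValueError("need node_rank >= 0 and 0 <= local_rank < devices_per_node")
    return node_rank * devices_per_node + local_rank


if __name__ == "__main__":
    print(world_size(8, 1))       # 8 nodes x 1 GPU ("n8g1") -> 8
    print(global_rank(6, 0, 1))   # only GPU on node 6 -> 6
    print(global_rank(1, 3, 4))   # 4 GPUs per node, GPU 3 on node 1 -> 7
```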

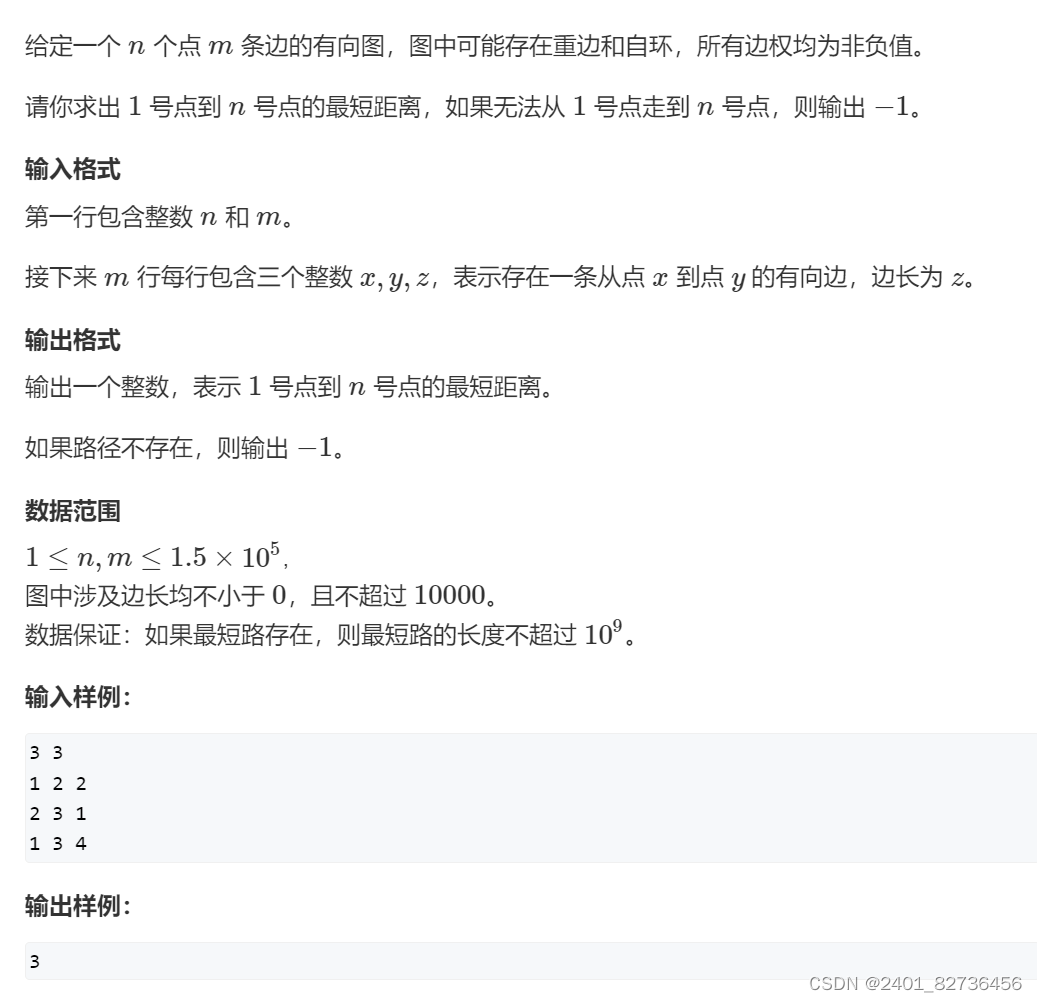

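Configure the PyTorchJob in Kubeflow as follows:

- The job type (kind) must be set to PyTorchJob, which supports DDP mode.

- The job contains a Master node and Worker nodes; the two can share the same configuration.

- Run command: command is the same for both, but the output can go to different nohup log files, e.g. suffixed with _master or _worker.

- resources configures the resources, i.e. the number of GPUs per node; tolerations selects the resource pool.

- The annotation sidecar.istio.io/inject: "false" must be added.

- replicas is the number of nodes; the Master and Worker replicas together add up to num_nodes.

That is: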

```yaml
apiVersion: "kubeflow.org/v1"
kind: PyTorchJob
metadata:
  name: "[your project]-trainer-n8g1-20240409"
spec:
  pytorchReplicaSpecs:
    Master:
      replicas: 1
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
          labels:
            file-mount: "true"
            user-mount: "true"
        spec:
          containers:
            - name: pytorch
              command:
                - /bin/sh
                - -cl
                - "bash run_train_n8g1.sh > nohup.run_train_n8g1_master.log 2>&1"
              image: "[docker image]"
              imagePullPolicy: Always
              securityContext: # New
                privileged: false
                capabilities:
                  add: ["IPC_LOCK"]
              resources:
                limits:
                  rdma/hca: 1
                  cpu: 12
                  memory: "100G"
                  nvidia.com/gpu: 1
              workingDir: "[project dir]"
              volumeMounts:
                - name: cache-volume # change the name to your volume on k8s
                  mountPath: /dev/shm
          nodeSelector:
            gpu.device: "a100" # support 'a10' or 'a100'
            group: "algo2"
          tolerations:
            - effect: NoSchedule
              key: role
              operator: Equal
              value: "algo2"
          volumes:
            - name: cache-volume # change the name to your volume on k8s
              emptyDir:
                medium: Memory
                sizeLimit: "960G"
    Worker:
      replicas: 7
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
          labels:
            file-mount: "true"
            user-mount: "true"
        spec:
          containers:
            - name: pytorch
              command:
                - /bin/sh
                - -cl
                - "bash run_train_n8g1.sh > nohup.run_train_n8g1_worker.log 2>&1"
              image: "[docker image]"
              imagePullPolicy: Always
              securityContext: # New
                privileged: false
                capabilities:
                  add: ["IPC_LOCK"]
              resources:
                limits:
                  rdma/hca: 1
                  cpu: 12
                  memory: "100G"
                  nvidia.com/gpu: 1
              workingDir: "[project dir]"
              volumeMounts:
                - name: cache-volume # change the name to your volume on k8s
                  mountPath: /dev/shm
          nodeSelector:
            gpu.device: "a100" # support 'a10' or 'a100'
            group: "algo2"
          tolerations:
            - effect: NoSchedule
              key: role
              operator: Equal
              value: "algo2"
          volumes:
            - name: cache-volume # change the name to your volume on k8s
              emptyDir:
                medium: Memory
                sizeLimit: "960G"
```
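Since Master and Worker differ only in replicas and in the log file name, the manifest could also be generated by a script instead of being edited by hand for each node count. The sketch below uses only the Python standard library and copies the field values from the YAML above. It assumes one Master plus num_nodes - 1 Workers, so the replicas always add up to num_nodes; the job name, image and directory in the example are hypothetical placeholders. It prints JSON, which kubectl apply -f also accepts.

```python
import json
from typing import Any, Dict

SCRIPT = "run_train_n8g1.sh"
LOG_STEM = "nohup.run_train_n8g1"


def replica_spec(replicas: int, role: str, image: str, workdir: str) -> Dict[str, Any]:
    """One entry of pytorchReplicaSpecs; only the log file name depends on the role."""
    container = {
        "name": "pytorch",
        "command": ["/bin/sh", "-cl", f"bash {SCRIPT} > {LOG_STEM}_{role}.log 2>&1"],
        "image": image,
        "imagePullPolicy": "Always",
        "securityContext": {"privileged": False, "capabilities": {"add": ["IPC_LOCK"]}},
        "resources": {"limits": {"rdma/hca": 1, "cpu": 12, "memory": "100G",
                                 "nvidia.com/gpu": 1}},
        "workingDir": workdir,
        "volumeMounts": [{"name": "cache-volume", "mountPath": "/dev/shm"}],
    }
    return {
        "replicas": replicas,
        "template": {
            "metadata": {
                "annotations": {"sidecar.istio.io/inject": "false"},
                "labels": {"file-mount": "true", "user-mount": "true"},
            },
            "spec": {
                "containers": [container],
                "nodeSelector": {"gpu.device": "a100", "group": "algo2"},
                "tolerations": [{"effect": "NoSchedule", "key": "role",
                                 "operator": "Equal", "value": "algo2"}],
                "volumes": [{"name": "cache-volume",
                             "emptyDir": {"medium": "Memory", "sizeLimit": "960G"}}],
            },
        },
    }


def pytorchjob(name: str, num_nodes: int, image: str, workdir: str) -> Dict[str, Any]:
    """1 Master + (num_nodes - 1) Workers, so the replicas sum to num_nodes."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be >= 1")
    specs = {"Master": replica_spec(1, "master", image, workdir)}
    if num_nodes > 1:
        specs["Worker"] = replica_spec(num_nodes - 1, "worker", image, workdir)
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name},
        "spec": {"pytorchReplicaSpecs": specs},
    }


if __name__ == "__main__":
    # Hypothetical placeholders: replace with your own job name, image and directory.
    job = pytorchjob("my-project-trainer-n8g1", 8, "<docker image>", "<project dir>")
    print(json.dumps(job, indent=2))
```

Keeping the node count as a single parameter avoids the Master and Worker replicas drifting out of sync with the num_nodes passed to pl.Trainer.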

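Set up the run script:

```bash
# Activate the environment
source /opt/conda/etc/profile.d/conda.sh  # required step
conda activate alphaflow

# DDP mode requires MASTER_PORT to be set, otherwise it fails
export MASTER_PORT=9800

# Print the environment variables
export
```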
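A bare export prints every environment variable, which makes the log long. If only the distributed settings matter, a short Python snippet (a hypothetical addition, e.g. at the top of the training code) could print just the four variables that torch.distributed's env:// initialization reads. The helper name is illustrative.

```python
import os
from typing import Dict, Mapping, Optional

# Variables read by torch.distributed's env:// initialization.
DIST_VARS = ("MASTER_ADDR", "MASTER_PORT", "WORLD_SIZE", "RANK")


def dist_env(env: Mapping[str, str] = os.environ) -> Dict[str, Optional[str]]:
    """Return the distributed-training variables, with None for any that are unset."""
    return {name: env.get(name) for name in DIST_VARS}


if __name__ == "__main__":
    for name, value in dist_env().items():
        print(f"{name}={value!r}")
```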

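Note: DDP mode requires MASTER_PORT to be set, otherwise it fails.

In the run logs, the main variables to check are RANK and WORLD_SIZE. For example, the Master pod reports RANK 0 and one of the Worker pods reports RANK 6:

```text
RANK="0"
WORLD_SIZE="8"

RANK="6"
WORLD_SIZE="8"
```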
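With one process per pod, as in this n8g1 job, the RANK values collected from all pod logs should be exactly 0 to WORLD_SIZE - 1, and every pod should report the same WORLD_SIZE. The sketch below performs that check. It assumes the log lines look like the ones above or like bash's export output (declare -x RANK="0"), and the function names are hypothetical.

```python
import re
from typing import Dict, Iterable, List

# Matches RANK="6" as shown above, with or without bash's "declare -x " prefix.
_VAR = re.compile(r'^(?:declare -x )?(RANK|WORLD_SIZE)="(\d+)"$')


def parse_log(lines: Iterable[str]) -> Dict[str, int]:
    """Extract RANK and WORLD_SIZE from one pod's log lines."""
    found: Dict[str, int] = {}
    for line in lines:
        match = _VAR.match(line.strip())
        if match:
            found[match.group(1)] = int(match.group(2))
    return found


def check_ranks(per_pod: List[Dict[str, int]]) -> int:
    """Return WORLD_SIZE if all pods agree on it and cover ranks 0..WORLD_SIZE-1."""
    if not per_pod:
        raise ValueError("no pod logs given")
    sizes = {pod["WORLD_SIZE"] for pod in per_pod}
    if len(sizes) != 1:
        raise ValueError(f"pods disagree on WORLD_SIZE: {sorted(sizes)}")
    world = sizes.pop()
    ranks = sorted(pod["RANK"] for pod in per_pod)
    if ranks != list(range(world)):
        raise ValueError(f"expected ranks 0..{world - 1}, got {ranks}")
    return world


if __name__ == "__main__":
    master = parse_log(['RANK="0"', 'WORLD_SIZE="8"'])
    worker6 = parse_log(['declare -x RANK="6"', 'declare -x WORLD_SIZE="8"'])
    print(master, worker6)  # {'RANK': 0, 'WORLD_SIZE': 8} {'RANK': 6, 'WORLD_SIZE': 8}
```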

Bug encountered: ncclInternalError: Internal check failed. The full error:

```text
RuntimeError: NCCL error in: ../torch/csrc/distributed/c10d/ProcessGroupNCCL.cpp:1269, internal error, NCCL version 2.14.3
ncclInternalError: Internal check failed.
Last error:
Net : Call to recv from [IP]<[Port]> failed : Connection refused
```

The cause is that the DDP strategy requires the MASTER_PORT variable to be set, for example:

```bash
export MASTER_PORT=9800
```
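Given the diagnosis above, a missing port only surfaces later as the NCCL "Connection refused" error, so it can help to fail fast. The sketch below is a hypothetical helper to call at the start of the training script, before pl.Trainer is created. It rejects a missing or malformed MASTER_PORT with a readable message, but it cannot tell whether the port is actually reachable between nodes.

```python
import os
from typing import Mapping


def require_master_port(env: Mapping[str, str] = os.environ) -> int:
    """Return MASTER_PORT as an int, or raise a readable RuntimeError."""
    raw = env.get("MASTER_PORT")
    if raw is None:
        raise RuntimeError(
            "MASTER_PORT is not set; export it in the run script, e.g. MASTER_PORT=9800")
    try:
        port = int(raw)
    except ValueError:
        raise RuntimeError(f"MASTER_PORT={raw!r} is not an integer") from None
    if not 1 <= port <= 65535:
        raise RuntimeError(f"MASTER_PORT={port} is outside the range 1-65535")
    return port


if __name__ == "__main__":
    print(require_master_port())
```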

Reference: GitHub - multi node training error: NCCL error
