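❤️ About the author: Top 5 in the cloud-native and cloud-computing track of the 2022 New Star Program (season 3) 🏅, Huawei Cloud Expert 🏅, rising star in the cloud-native field 🏅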


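💗 Author's goal: please point out any mistakes. These notes will keep being improved to help more Java enthusiasts get started, so that we can make progress together!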

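Contents

- Basic theory
  - What is cloud native
  - What is Kubernetes
  - Core Kubernetes features
- Service in detail
  - Overview
  - Service types
  - Enabling ipvs (the ipvs kernel modules must be installed, otherwise kube-proxy falls back to iptables)
  - Environment setup (creating the Deployment)
  - ClusterIP Service (the default type)
    - Overview
    - Creating the Service
    - Viewing the Service
    - Viewing the Service details
    - Viewing the ipvs mapping rules
    - Testing the Service
    - The Endpoints resource
    - Viewing the Endpoints
    - Deleting the Service
  - Headless Service
    - Overview
    - Creating the Service
    - Viewing the Service
    - Viewing the Service details
    - Checking DNS resolution
    - Querying by the Service's DNS name
    - Deleting the Service
  - NodePort Service (commonly used ⭐)
    - Overview
    - Creating the Service
    - Viewing the Service
    - Accessing the Service
    - Deleting the Service
  - LoadBalancer Service
    - Overview
  - ExternalName Service
    - Overview
    - Creating the Service
    - DNS resolution
    - Deleting the Service
- Ingress in detail
  - Overview
  - Installing Ingress 1.1.2 (run on the master node)
    - Creating and entering the working directory
    - Creating the configuration file Ingress needs ⭐
    - Installing Ingress
    - Checking the installation
  - Ingress in practice
    - Preparing the Service and Pods
    - HTTP proxy mode
    - Access test (from Windows)
    - Access test (from Linux)
    - Access test (from Windows)
    - Access test (from Linux)
  - Global nginx configuration in Ingress
  - Ingress rate limiting
    - Rate-limit configuration format (defined in the Ingress manifest)
    - Rate limiting in practice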

Basic Theory

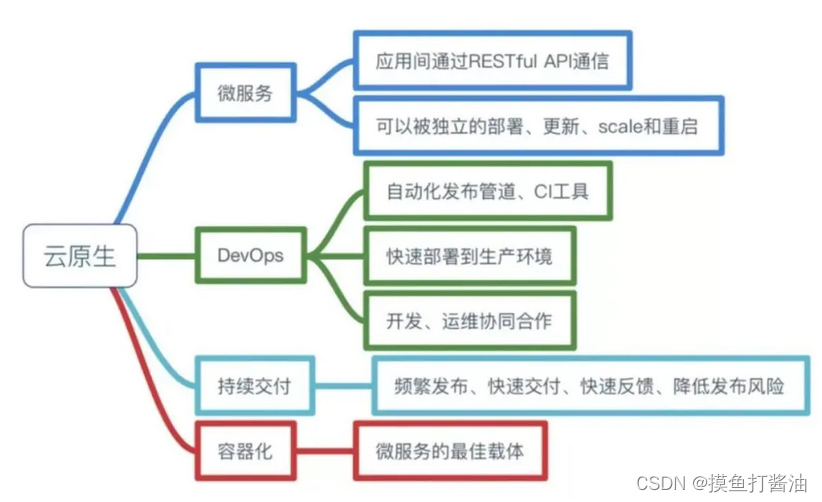

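What is cloud native

Matt Stine of Pivotal first proposed the concept of cloud native (Cloud-Native) in 2013. In 2015, when the idea was just starting to spread, he defined several characteristics of a cloud-native architecture in the book "Migrating to Cloud-Native Application Architectures": twelve-factor applications, microservices, self-service agile infrastructure, API-based collaboration, and antifragility. In a 2017 interview with InfoQ he revised this, summarizing cloud-native architecture as six qualities: modular, observable, deployable, testable, replaceable, and disposable. Pivotal's latest website sums up cloud native in four points: DevOps + continuous delivery + microservices + containers.

In short, an application that follows a cloud-native architecture should be containerized on an open-source stack (K8s + Docker), use a microservice architecture for flexibility and maintainability, rely on agile methods and DevOps for continuous iteration and automated operations, and use cloud platform facilities for elastic scaling, dynamic scheduling, and better resource utilization.

(Excerpted from the official Huawei Cloud account on Zhihu.)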

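What is Kubernetes

Kubernetes, abbreviated K8s (the 8 stands for the eight characters "ubernete"), is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and efficient, and it provides mechanisms for deploying, scheduling, updating, and maintaining applications.

The traditional way to deploy applications is to install them with plugins or scripts. The drawback is that the application's runtime, configuration, management, and whole life cycle are tied to the current operating system, which makes upgrades, updates, and rollbacks awkward. Some of this can be achieved with virtual machines, but virtual machines are heavy and hurt portability.

The new way is to deploy containers. Containers are isolated from one another, each has its own file system, processes in different containers do not affect each other, and computing resources can be partitioned. Compared with virtual machines, containers deploy quickly, and because they are decoupled from the underlying infrastructure and the host file system, they can be moved between clouds and between operating system versions.

Containers use few resources and deploy quickly. Each application can be packaged as a container image, and the one-to-one relationship between application and container is a further advantage: an image can be created at build or release time, because the application does not need to be combined with the rest of the application stack and does not depend on the production infrastructure. This gives a consistent environment from development through testing to production. Containers are also lighter and more "transparent" than virtual machines, which makes them easier to monitor and manage.

Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scaling, and management of containerized applications. When an application is deployed in production, several instances are usually deployed so that requests can be load-balanced across them.

In Kubernetes we can create multiple containers, each running one application instance, and then use the built-in load-balancing strategy to manage, discover, and access this group of instances, without operations staff having to configure any of these details by hand.

(Excerpted from Baidu Baike.)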

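Core Kubernetes features

- Storage mounting (volumes): containers in a Pod can share data through volumes.
- Application health checks: a service inside a container may hang and stop handling requests; health-check (probe) policies can be configured to keep the application robust.
- Application replication (Pod high availability): a Pod controller (Deployment) maintains the number of Pod replicas (configurable, default 1) so that a Pod or a group of identical Pods is always available. If the number of Pods it currently maintains drops below the configured count, the Deployment automatically creates new Pods until the count matches again.
- Elastic Pod scaling: automatically scales the number of Pod replicas according to a configured metric (for example, CPU utilization).
- Service discovery: environment variables or the DNS add-on let programs in containers discover the access address of a group of Pods.
- Load balancing: a group of Pod replicas is assigned a private cluster IP, and requests are load-balanced to the backend containers. Other Pods in the cluster can reach the application through this ClusterIP.
- Rolling updates: a service is updated without interruption by replacing Pods gradually (for example, one at a time) instead of deleting the whole service at once.
- Container orchestration: services are deployed from manifest files, which makes application deployment more efficient.
- Resource monitoring: the node components integrate the cAdvisor resource collector; Heapster aggregates resource data for all cluster nodes and stores it in the InfluxDB time-series database, and Grafana displays it. (Heapster has since been retired; metrics-server and Prometheus are the usual replacements today.)
- Authentication and authorization: supports policies such as role-based access control (RBAC).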
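The elastic Pod scaling item in the list above can be made concrete. The following is a minimal sketch, assuming the basic replica formula described in the Kubernetes Horizontal Pod Autoscaler documentation, desired = ceil(current × currentMetric / targetMetric). The function name and the sample numbers are illustrative only, and the real controller also applies a tolerance and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count suggested by the basic HPA formula (tolerance ignored)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # 3 replicas at 90% average CPU with a 60% target: scale out
    print(desired_replicas(3, 90, 60))
    # 4 replicas at 20% average CPU with a 60% target: scale in
    print(desired_replicas(4, 20, 60))
```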

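Service in Detail

Overview

Why was the Service resource introduced?

- Problem: in Kubernetes, a Pod is the carrier of an application, and the application can be reached through the Pod's IP. But a Pod's IP address is not fixed, so accessing a service directly through Pod IPs is inconvenient.
- Solution: Kubernetes provides the Service resource. A Service aggregates the Pods that provide the same service and exposes a single, stable entry address; accessing that address reaches the Pods behind it.

In many cases a Service is only a concept. What actually does the work is the kube-proxy process that runs on every Node. When a Service is created, its information is written to etcd through the API Server; kube-proxy watches for such changes and converts the latest Service information into the corresponding access rules.

kube-proxy has supported three working modes:

- 1: userspace mode: kube-proxy creates a listening port for each Service. Requests sent to the Cluster IP are redirected by iptables rules to that port; kube-proxy then picks a backend Pod according to its load-balancing (LB) algorithm, connects to it, and forwards the request. In this mode kube-proxy acts as a layer-4 load balancer. Because kube-proxy runs in user space, forwarding adds data copies between kernel and user space, so the mode is stable but very inefficient. (It was removed in Kubernetes 1.26.)
- 2: iptables mode: kube-proxy creates iptables rules for every backend Pod of a Service, and requests sent to the Cluster IP are redirected straight to a Pod IP. kube-proxy does not act as a layer-4 load balancer here; it only maintains the iptables rules. This is more efficient than userspace mode, but it offers no flexible LB policies and cannot retry when a backend Pod is unavailable.
- 3: ipvs mode: similar to iptables mode, kube-proxy watches Pod changes and creates the corresponding ipvs rules. ipvs forwards more efficiently than iptables and supports more LB algorithms. (This is the recommended mode.)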
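To see how iptables mode can spread traffic without a real load balancer, here is a small sketch. It assumes the commonly described behaviour of kube-proxy's iptables rules: the rules for n backends are tried in order, and rule i matches with probability 1/(n-i), so that every backend ends up equally likely. The function names are made up for illustration.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of each rule when n backends are tried in order."""
    if n < 1:
        raise ValueError("need at least one backend")
    return [Fraction(1, n - i) for i in range(n)]


def overall_probabilities(n: int) -> list[Fraction]:
    """Chance that a new connection ends up on each backend."""
    result = []
    remaining = Fraction(1)  # probability that no earlier rule matched
    for p in rule_probabilities(n):
        result.append(remaining * p)
        remaining *= 1 - p
    return result


if __name__ == "__main__":
    print(rule_probabilities(3))     # per-rule probabilities
    print(overall_probabilities(3))  # every backend gets the same share
```

The last rule always matches, which is why no connection is left without a backend.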

Service types

- The Service manifest:

    apiVersion: v1 # API version
    kind: Service # resource kind
    metadata: # metadata
      name: # resource name
      namespace: # namespace
    spec:
      selector: # label selector that determines which Pods this Service proxies
        app: nginx
      type: NodePort # Service type, i.e. how the Service is accessed
      clusterIP: # virtual IP address of the Service
      sessionAffinity: # session affinity: ClientIP or None (default None)
      ports: # port information
        - port: 8080 # Service port
          protocol: TCP # protocol
          targetPort: 9020 # Pod port
          nodePort: 31666 # node port. With type NodePort and this value set, the Service is exposed externally on 31666; if it is omitted, a port is allocated automatically.

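Notes on spec.type:

- ClusterIP: a virtual IP assigned automatically by Kubernetes. It is reachable only from inside the cluster, not from outside. (Default.)
- NodePort: exposes the Service on a port of the Nodes, so that Pods can be reached from outside the cluster through a node IP and the nodePort. (Commonly used.)
- LoadBalancer: uses an external load balancer to distribute traffic to the Service. This type needs support from an external cloud environment.
- ExternalName: brings a service outside the cluster into the cluster so that it can be used directly.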

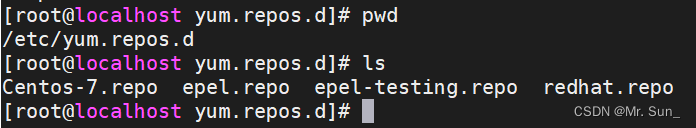

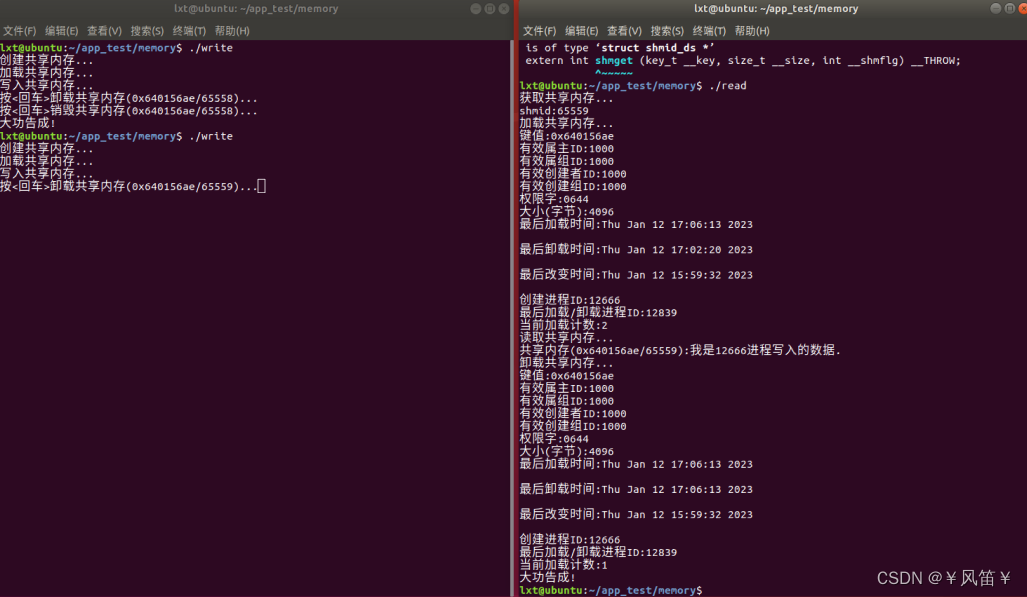
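A manifest like the one above can also be generated programmatically. The following is a minimal sketch using only the Python standard library; it emits JSON, which kubectl apply -f accepts as well as YAML. The helper name build_service and its parameters are hypothetical, while the field names come from the manifest above.

```python
import json


def build_service(name, namespace, selector, port, target_port,
                  svc_type="ClusterIP", node_port=None):
    """Build a Service manifest as a plain dict (hypothetical helper)."""
    port_entry = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        # nodePort is only meaningful for types that open a node port
        if svc_type not in ("NodePort", "LoadBalancer"):
            raise ValueError("nodePort requires type NodePort or LoadBalancer")
        port_entry["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": svc_type, "selector": selector, "ports": [port_entry]},
    }


if __name__ == "__main__":
    svc = build_service("demo", "test", {"app": "nginx"}, 8080, 9020,
                        svc_type="NodePort", node_port=31666)
    print(json.dumps(svc, indent=2))
```

Saving the printed JSON to a file and applying it should behave like the equivalent YAML; the Service name demo is only a placeholder.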

Enabling ipvs (the ipvs kernel modules must be installed, otherwise kube-proxy falls back to iptables):

- 1: Check whether ipvs is in use (an empty rule table like the one below means it is not):

    [root@k8s-master ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

- 2: Edit the kube-proxy ConfigMap:

    kubectl edit cm kube-proxy -n kube-system

- Type /mode to find the mode field and set its quoted value to ipvs:

    metricsBindAddress: ""
    mode: "ipvs" # change it to this, then save and exit
    .......

- 3: Delete the kube-proxy Pods so that they are recreated with the new setting:

    kubectl delete pod -l k8s-app=kube-proxy -n kube-system

- 4: Check again (ipvs is now enabled):

    [root@k8s-master ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.96.0.1:443 rr
      -> 192.168.184.100:6443         Masq    1      0          0
    TCP  10.96.0.10:53 rr
      -> 10.244.220.198:53            Masq    1      0          0
      -> 10.244.220.199:53            Masq    1      0          0
    TCP  10.96.0.10:9153 rr
      -> 10.244.220.198:9153          Masq    1      0          0
      -> 10.244.220.199:9153          Masq    1      0          0
    UDP  10.96.0.10:53 rr
      -> 10.244.220.198:53            Masq    1      0          0
      -> 10.244.220.199:53            Masq    1      0          0
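When the rule table grows, it can help to turn the ipvsadm -Ln listing into a mapping from virtual server to real servers. The parser below is a rough sketch that assumes the text layout shown above (a TCP/UDP line per virtual server, followed by "->" lines for its backends); parse_ipvsadm and SAMPLE are illustrative names, not part of any tool.

```python
SAMPLE = """\
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 rr
  -> 192.168.184.100:6443         Masq    1      0          0
TCP  10.96.0.10:53 rr
  -> 10.244.220.198:53            Masq    1      0          0
  -> 10.244.220.199:53            Masq    1      0          0
"""


def parse_ipvsadm(text: str) -> dict[str, list[str]]:
    """Map 'PROTO vip:port' to the real servers listed beneath it."""
    table: dict[str, list[str]] = {}
    current = None
    for raw in text.splitlines():
        parts = raw.split()
        if len(parts) >= 2 and parts[0] in ("TCP", "UDP", "SCTP"):
            current = f"{parts[0]} {parts[1]}"
            table[current] = []
        elif len(parts) >= 2 and parts[0] == "->" and current is not None:
            # the "->" header line is skipped because no virtual server precedes it
            table[current].append(parts[1])
    return table


if __name__ == "__main__":
    for virtual, reals in parse_ipvsadm(SAMPLE).items():
        print(virtual, "=>", ", ".join(reals))
```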

Environment setup (creating the Deployment)

- 1: Create the Deployment manifest:

    vim podcontroller-deployment.yaml

- With the following content:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: test
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: podcontroller-deployment
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: pod-nginx-label
      template:
        metadata:
          labels:
            app: pod-nginx-label
        spec:
          containers:
            - name: nginx
              image: nginx:1.19
              ports:
                - containerPort: 80

- 2: Apply the manifest:

    kubectl apply -f podcontroller-deployment.yaml

- 3: List the Pods (showing labels):

[root@k8s-master ~]# kubectl get pods -n test -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS

podcontroller-deployment-66f79cc9f5-l4m5z 1/1 Running 1 22h 10.244.232.76 k8s-slave01 <none> <none> app=pod-nginx-label,pod-template-hash=66f79cc9f5

podcontroller-deployment-66f79cc9f5-smp8j 1/1 Running 1 22h 10.244.232.77 k8s-slave01 <none> <none> app=pod-nginx-label,pod-template-hash=66f79cc9f5

podcontroller-deployment-66f79cc9f5-vvl9t 1/1 Running 1 22h 10.244.220.203 k8s-slave02 <none> <none> app=pod-nginx-label,pod-template-hash=66f79cc9f5

-

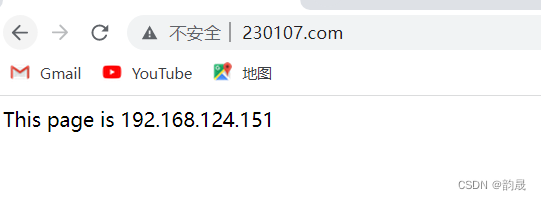

4:为了方便后面的测试,修改三个Nginx的Pod的index.html:(如果不修改看不出那么明显的效果,对我们后面了解Service资源不利)

- 1:修改第一个Nginx的Pod:(并且将nginx的首页内容换成各自Nginx的Pod的IP地址)

kubectl exec -it podcontroller-deployment-66f79cc9f5-l4m5z -c nginx -n test /bin/shecho "10.244.232.76" > /usr/share/nginx/html/index.htmlexit- 2:修改第二个Nginx的Pod:(并且将nginx的首页内容换成各自Nginx的Pod的IP地址)

kubectl exec -it podcontroller-deployment-66f79cc9f5-smp8j -c nginx -n test /bin/shecho "10.244.232.77" > /usr/share/nginx/html/index.htmlexit- 3:修改第三个Nginx的Pod:(并且将nginx的首页内容换成各自Nginx的Pod的IP地址)

kubectl exec -it podcontroller-deployment-66f79cc9f5-vvl9t -c nginx -n test /bin/shecho "10.244.220.203" > /usr/share/nginx/html/index.htmlexit -

5:修改完成上面的nginx的Pod的首页内容之后,我们进行测试一下每一个Pod:

[root@k8s-master ~]# curl 10.244.232.76

10.244.232.76

[root@k8s-master ~]# curl 10.244.232.77

10.244.232.77

[root@k8s-master ~]# curl 10.244.220.203

10.244.220.203

ClusterIP Service (the default type)

Overview

- A ClusterIP is a virtual IP assigned automatically by Kubernetes. It is reachable only from inside the cluster, not from outside, and it is the default Service type.

Creating the Service

- 1: Create the manifest:

    vim service-clusterip.yaml

- With the following content:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: test
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: service-clusterip
      namespace: test
    spec:
      selector: # labels of the target Pods
        app: pod-nginx-label
      clusterIP: 10.96.96.96 # cluster-internal IP of the Service (not reachable from outside); if omitted, one is allocated automatically
      type: ClusterIP
      ports:
        - port: 80 # Service port
          targetPort: 80 # Pod port

- 2: Apply the manifest:

    [root@k8s-master ~]# kubectl apply -f service-clusterip.yaml
    namespace/test unchanged
    service/service-clusterip created

Viewing the Service

    [root@k8s-master ~]# kubectl get service -n test -o wide
    NAME                TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    service-clusterip   ClusterIP   10.96.96.96   <none>        80/TCP    45s   app=pod-nginx-label

Viewing the Service details

- Use describe to view the Service details:
- The most important field below is Endpoints: it records the IP and port of every Pod that matches the Service's label selector, i.e. the Pods to which the Service load-balances requests.
- In the output below, the Endpoints 10.244.220.203, 10.244.232.76, and 10.244.232.77 are the IP addresses of the three Nginx Pods created by the Deployment above.
- When a request arrives, it is forwarded to one of the Pod IPs in the Endpoints using round-robin.

[root@k8s-master ~]# kubectl describe service service-clusterip -n test

Name: service-clusterip

Namespace: test

Labels: <none>

Annotations: <none>

Selector: app=pod-nginx-label

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.96.96.96

IPs: 10.96.96.96

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.220.203:80,10.244.232.76:80,10.244.232.77:80

Session Affinity: None

Events: <none>

Viewing the ipvs mapping rules

    [root@k8s-master ~]# ipvsadm -Ln
    ......
    TCP  10.96.96.96:80 rr
      -> 10.244.220.203:80            Masq    1      0          0
      -> 10.244.232.76:80             Masq    1      0          0
      -> 10.244.232.77:80             Masq    1      0          0
    ......

- rr means round-robin scheduling.
- The group above is the rule set that corresponds to our Service.
- For example, when five requests hit 10.96.96.96:80, traffic is forwarded to the backends in turn, along these lines (the starting point and order seen in practice can differ from the listing order, as the test in the next section shows):
  - request 1 goes to 10.244.220.203:80
  - request 2 goes to 10.244.232.76:80
  - request 3 goes to 10.244.232.77:80
  - request 4 goes to 10.244.220.203:80
  - request 5 goes to 10.244.232.76:80
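The rotation described above can be sketched in a few lines. This is only a model of round-robin selection, not the kernel's ipvs scheduler, which keeps its own state per virtual server and may start from a different backend; round_robin is an illustrative name.

```python
from itertools import cycle


def round_robin(backends, requests):
    """Return the backend chosen for each of `requests` consecutive requests."""
    if not backends:
        raise ValueError("no backends available")
    chooser = cycle(backends)
    return [next(chooser) for _ in range(requests)]


if __name__ == "__main__":
    pods = ["10.244.220.203:80", "10.244.232.76:80", "10.244.232.77:80"]
    for number, backend in enumerate(round_robin(pods, 5), start=1):
        print(f"request {number} -> {backend}")
```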

Testing the Service

- Access the Service (10.96.96.96:80) five times:

[root@k8s-master ~]# curl 10.96.96.96:80

10.244.220.203

[root@k8s-master ~]# curl 10.96.96.96:80

10.244.232.77

[root@k8s-master ~]# curl 10.96.96.96:80

10.244.232.76

[root@k8s-master ~]# curl 10.96.96.96:80

10.244.220.203

[root@k8s-master ~]# curl 10.96.96.96:80

10.244.232.77

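The Endpoints resource

- Endpoints is a Kubernetes resource object, stored in etcd, that records the access addresses of all Pods behind a Service (the IP and port of every Pod associated with it). It is generated from the selector in the Service manifest.
- A Service can be associated with a group of Pods, whose IPs and ports are exposed through Endpoints, the set of endpoints that actually serve the traffic. In other words, the link between a Service and its Pods is the Endpoints object.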
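How a selector turns into an Endpoints list can be modelled as a simple label match. The sketch below is a simplification: the real controller also considers Pod readiness and named ports, and the extra Pod with the label app=other is a made-up example to show that non-matching Pods are excluded. build_endpoints is a hypothetical name.

```python
def build_endpoints(selector, pods, target_port):
    """Return sorted 'ip:port' strings for Pods whose labels contain every selector pair."""
    if not selector:
        # a Service without a selector gets no automatically managed Endpoints
        return []
    return sorted(
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    pods = [
        {"ip": "10.244.232.76", "labels": {"app": "pod-nginx-label"}},
        {"ip": "10.244.232.77", "labels": {"app": "pod-nginx-label"}},
        {"ip": "10.244.220.203", "labels": {"app": "pod-nginx-label"}},
        {"ip": "10.244.220.250", "labels": {"app": "other"}},  # hypothetical extra Pod
    ]
    print(",".join(build_endpoints({"app": "pod-nginx-label"}, pods, 80)))
```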

Viewing the Endpoints

[root@k8s-master ~]# kubectl get endpoints -n test

NAME ENDPOINTS AGE

service-clusterip 10.244.220.203:80,10.244.232.76:80,10.244.232.77:80 17h

Deleting the Service

[root@k8s-master ~]# kubectl delete service service-clusterip -n test

service "service-clusterip" deleted

Headless Service

Overview

- In some scenarios developers do not want the load balancing that a Service provides and prefer to control the load-balancing strategy themselves. For this, Kubernetes offers the headless Service. It is not assigned a Cluster IP, and it can only be reached by looking up the Service's DNS name, which resolves directly to the Pod IPs.

Creating the Service

- 1: Create the manifest:

    vim service-headliness.yaml

- With the following content:

    apiVersion: v1
    kind: Service
    metadata:
      name: service-headliness
      namespace: test
    spec:
      selector:
        app: pod-nginx-label
      clusterIP: None # setting clusterIP to None creates a headless Service
      type: ClusterIP
      ports:
        - port: 80 # Service port
          targetPort: 80 # Pod port

- 2: Apply the manifest:

    [root@k8s-master ~]# kubectl apply -f service-headliness.yaml
    service/service-headliness created

Viewing the Service

    [root@k8s-master ~]# kubectl get svc service-headliness -n test -o wide
    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    service-headliness   ClusterIP   None         <none>        80/TCP    30s   app=pod-nginx-label

Viewing the Service details

[root@k8s-master ~]# kubectl describe service service-headliness -n test

Name: service-headliness

Namespace: test

Labels: <none>

Annotations: <none>

Selector: app=pod-nginx-label

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None

IPs: None

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.220.203:80,10.244.232.76:80,10.244.232.77:80

Session Affinity: None

Events: <none>

Checking DNS resolution

- 1: List the Pods:

    [root@k8s-master ~]# kubectl get pod -n test
    NAME                                        READY   STATUS    RESTARTS   AGE
    podcontroller-deployment-66f79cc9f5-l4m5z   1/1     Running   1          30h
    podcontroller-deployment-66f79cc9f5-smp8j   1/1     Running   1          30h
    podcontroller-deployment-66f79cc9f5-vvl9t   1/1     Running   1          30h

- 2: Enter one of the Pods:

    kubectl exec -it podcontroller-deployment-66f79cc9f5-l4m5z -n test -- /bin/sh

- 3: Run cat /etc/resolv.conf:

    cat /etc/resolv.conf
    # Output:
    nameserver 10.96.0.10
    search test.svc.cluster.local svc.cluster.local cluster.local
    options ndots:5

- 4: Exit:

    exit
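The search and ndots lines in resolv.conf explain why a short name such as service-headliness resolves inside a Pod. The sketch below models the usual resolver rule (names with fewer dots than ndots are tried with each search domain first); it is an approximation of that behaviour written for illustration, and candidate_names is a hypothetical helper.

```python
SEARCH = ["test.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_names(name, search, ndots=5):
    """Names a resolver would try, in order, for `name`."""
    if name.endswith("."):
        return [name]  # already fully qualified: used as-is
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = name + "."
    if name.count(".") >= ndots:
        return [absolute] + expanded  # enough dots: try the name itself first
    return expanded + [absolute]


if __name__ == "__main__":
    for candidate in candidate_names("service-headliness", SEARCH):
        print(candidate)
```

With the search list above, the first candidate for the short name is the Service's full name in the test namespace, which is why Pods in that namespace can use the short name directly.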

Querying by the Service's DNS name

[root@k8s-master ~]# dig @10.96.0.10 service-headliness.test.svc.cluster.local

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> @10.96.0.10 service-headliness.test.svc.cluster.local

; (1 server found)

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 9684

;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;service-headliness.test.svc.cluster.local. IN A

;; ANSWER SECTION:

service-headliness.test.svc.cluster.local. 30 IN A 10.244.232.77

service-headliness.test.svc.cluster.local. 30 IN A 10.244.232.76

service-headliness.test.svc.cluster.local. 30 IN A 10.244.220.203

;; Query time: 0 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: 日 6月 26 00:23:18 CST 2022

;; MSG SIZE rcvd: 241

Deleting the Service

[root@k8s-master ~]# kubectl delete service service-headliness -n test

service "service-headliness" deleted

NodePort Service (commonly used ⭐)

Overview

- The Services created in the examples above (ClusterIP and headless) can only be reached from inside the cluster. To expose a Service outside the cluster, use a NodePort Service. NodePort works by mapping the Service port to a port on the Nodes, so the Service can then be reached through a node IP plus the node port.

Creating the Service

- 1: Create the manifest:

    vim service-nodeport.yaml

- With the following content:

    apiVersion: v1
    kind: Service
    metadata:
      name: service-nodeport
      namespace: test
    spec:
      selector:
        app: pod-nginx-label
      type: NodePort # Service type NodePort
      ports:
        - port: 80 # Service port
          targetPort: 80 # Pod port
          nodePort: 30666 # node port to bind (default range 30000-32767); if omitted, one is allocated automatically

- 2: Apply the manifest:

    kubectl apply -f service-nodeport.yaml
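Before applying a manifest with a fixed nodePort, it can be worth checking that the value lies in the allowed range. The following sketch assumes the default range 30000-32767 mentioned in the manifest comment (a cluster can be configured with a different range); both helper names are illustrative.

```python
DEFAULT_NODE_PORT_RANGE = (30000, 32767)


def check_node_port(port, port_range=DEFAULT_NODE_PORT_RANGE):
    """True if `port` lies inside the inclusive node port range."""
    low, high = port_range
    return low <= port <= high


def node_urls(node_ips, port):
    """URLs at which a NodePort Service should answer, one per node IP."""
    return [f"http://{ip}:{port}/" for ip in node_ips]


if __name__ == "__main__":
    print(check_node_port(30666))   # inside the default range
    print(check_node_port(80))      # outside the default range
    print(node_urls(["192.168.184.100"], 30666))
```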

Viewing the Service

    [root@k8s-master ~]# kubectl get service -n test -o wide
    NAME               TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE   SELECTOR
    service-nodeport   NodePort   10.96.236.222   <none>        80:30666/TCP   76s   app=pod-nginx-label

Accessing the Service

- Open a browser and go to http://192.168.184.100:30666/

Deleting the Service

    kubectl delete service service-nodeport -n test

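LoadBalancer Service

Overview

- LoadBalancer is similar to NodePort: both expose the Service to the outside. The difference is that LoadBalancer adds a load-balancing device outside the cluster, which requires support from the external environment. Requests sent to that device are load-balanced and then forwarded into the cluster.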

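ExternalName Service

Overview

- An ExternalName Service brings a service outside the cluster into the cluster. Its externalName field names the address of the external service, and accessing the Service from inside the cluster then reaches that external service.

Creating the Service

- 1: Create the manifest:

    vim service-externalname.yaml

- With the following content:

    apiVersion: v1
    kind: Service
    metadata:
      name: service-externalname
      namespace: test
    spec:
      type: ExternalName # Service type ExternalName
      externalName: www.baidu.com # should be a DNS name; an IP address written here is treated as a DNS name made of digits, not as an IP

- 2: Apply the manifest:

    kubectl apply -f service-externalname.yaml

DNS resolution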

[root@k8s-master ~]# dig @10.96.0.10 service-externalname.test.svc.cluster.local

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> @10.96.0.10 service-externalname.test.svc.cluster.local

; (1 server found)

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 38181

;; flags: qr aa rd; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;service-externalname.test.svc.cluster.local. IN A

;; ANSWER SECTION:

service-externalname.test.svc.cluster.local. 30 IN CNAME www.baidu.com.

www.baidu.com. 30 IN CNAME www.a.shifen.com.

www.a.shifen.com. 30 IN A 183.232.231.172

www.a.shifen.com. 30 IN A 183.232.231.174

;; Query time: 30 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: 日 6月 26 00:52:09 CST 2022

;; MSG SIZE rcvd: 249
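The answer section above is a CNAME chain: the Service name points at www.baidu.com, which in turn points at another name that finally has A records. The sketch below replays that chain over a small in-memory record table copied from the dig output; it does not perform real DNS queries, and resolve is an illustrative helper.

```python
RECORDS = {
    "service-externalname.test.svc.cluster.local.": {"CNAME": "www.baidu.com."},
    "www.baidu.com.": {"CNAME": "www.a.shifen.com."},
    "www.a.shifen.com.": {"A": ["183.232.231.172", "183.232.231.174"]},
}


def resolve(name, records, max_depth=8):
    """Follow CNAME records until A records are found; returns (chain, addresses)."""
    chain = [name]
    for _ in range(max_depth):
        entry = records.get(chain[-1], {})
        if "A" in entry:
            return chain, entry["A"]
        if "CNAME" not in entry:
            return chain, []  # name unknown in this table
        chain.append(entry["CNAME"])
    raise RuntimeError("CNAME chain too long (possible loop)")


if __name__ == "__main__":
    chain, addresses = resolve("service-externalname.test.svc.cluster.local.", RECORDS)
    print(" -> ".join(chain))
    print(addresses)
```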

Deleting the Service

kubectl delete service service-externalname -n test

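Ingress in Detail

Overview

A Service can be exposed outside the cluster mainly in two ways, NodePort and LoadBalancer, and both have drawbacks:

- NodePort occupies ports on the cluster machines, which becomes more of a problem as the number of services grows.
- LoadBalancer needs one load balancer per Service, which is wasteful and cumbersome, and it needs support from devices outside Kubernetes.

To address this, Kubernetes provides the Ingress resource. An Ingress can publish many services while occupying only two node ports (HTTP and HTTPS), whereas NodePort Services occupy one node port per service, which adds up to a large number of ports when there are many services. (Put simply, Ingress exists to reduce the number of machine ports in use.)

In effect, Ingress is a layer-7 load balancer, Kubernetes' abstraction of a reverse proxy. It works much like Nginx: an Ingress holds a set of mapping rules, and the Ingress Controller watches these rules, turns them into Nginx reverse-proxy configuration, and serves traffic.

- Ingress: a Kubernetes object that defines the rules for forwarding requests to Services.
- Ingress Controller: the program that actually implements reverse proxying and load balancing. It parses the rules defined by Ingress objects and forwards requests accordingly. There are many implementations, such as Nginx, Contour, and HAProxy.

How Ingress works (taking Nginx as the example):

- 1: The user writes Ingress rules stating which domain name maps to which Service in the Kubernetes cluster.
- 2: The Ingress controller detects changes to the Ingress rules and generates the corresponding Nginx reverse-proxy configuration.
- 3: The Ingress controller writes the generated configuration into a running Nginx instance and updates it dynamically.
- 4: From then on, what actually handles traffic is an Nginx instance configured with the user-defined routing rules.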
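The rule-to-backend mapping in the steps above can be modelled as a lookup by host and path prefix. This is a simplified sketch of the idea only: a real controller renders Nginx configuration rather than running code like this, and the host nginx.example.com and the Service names are hypothetical.

```python
RULES = [
    {"host": "nginx.example.com", "path": "/", "service": "nginx-service", "port": 80},
    {"host": "nginx.example.com", "path": "/api", "service": "api-service", "port": 8080},
]


def route(rules, host, path):
    """Return (service, port) for the longest matching path prefix of `host`, or None."""
    best = None
    for rule in rules:
        if rule["host"] != host:
            continue
        prefix = rule["path"]
        # match the prefix exactly or on a path-segment boundary
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if best is None or len(prefix) > len(best["path"]):
                best = rule
    return (best["service"], best["port"]) if best else None


if __name__ == "__main__":
    print(route(RULES, "nginx.example.com", "/index.html"))
    print(route(RULES, "nginx.example.com", "/api/users"))
    print(route(RULES, "unknown.example.com", "/"))
```

Because every rule is keyed by host name, many services can share the same two node ports, which is the port-saving property described in the overview.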

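Installing Ingress 1.1.2 (run on the master node)

Creating and entering the working directory

    [root@k8s-master ~]# mkdir ingress && cd ingress

Creating the configuration file Ingress needs ⭐

- 1: Create deploy.yaml (we use v1.1.2):
- Use vi rather than vim here, because pasting the content below into vim can scramble it:

    vi deploy.yaml

- With the following content: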

    #GENERATED FOR K8S 1.20
    apiVersion: v1
    kind: Namespace
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      name: ingress-nginx
    ---
    apiVersion: v1
    automountServiceAccountToken: true
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx
      namespace: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission
      namespace: ingress-nginx
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx
      namespace: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - endpoints
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingresses/status
        verbs:
          - update
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingressclasses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resourceNames:
          - ingress-controller-leader
        resources:
          - configmaps
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission
      namespace: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - secrets
        verbs:
          - get
          - create
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
          - namespaces
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingresses/status
        verbs:
          - update
      - apiGroups:
          - networking.k8s.io
        resources:
          - ingressclasses
        verbs:
          - get
          - list
          - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission
    rules:
      - apiGroups:
          - admissionregistration.k8s.io
        resources:
          - validatingwebhookconfigurations
        verbs:
          - get
          - update
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx
      namespace: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: ingress-nginx
    subjects:
      - kind: ServiceAccount
        name: ingress-nginx
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission
      namespace: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: ingress-nginx-admission
    subjects:
      - kind: ServiceAccount
        name: ingress-nginx-admission
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: ingress-nginx
    subjects:
      - kind: ServiceAccount
        name: ingress-nginx
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: ingress-nginx-admission
    subjects:
      - kind: ServiceAccount
        name: ingress-nginx-admission
        namespace: ingress-nginx
    ---

    apiVersion: v1
    data:
      allow-snippet-annotations: "true"
    kind: ConfigMap
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-controller
      namespace: ingress-nginx
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
      ipFamilies:
        - IPv4
      ipFamilyPolicy: SingleStack
      ports:
        - appProtocol: http
          name: http
          port: 80
          protocol: TCP
          targetPort: http
        - appProtocol: https
          name: https
          port: 443
          protocol: TCP
          targetPort: https
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      type: ClusterIP # NodePort
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
    spec:
      ports:
        - appProtocol: https
          name: https-webhook
          port: 443
          targetPort: webhook
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      type: ClusterIP
    ---

    apiVersion: apps/v1
    kind: DaemonSet # Deployment
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
      minReadySeconds: 0
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app.kubernetes.io/component: controller
          app.kubernetes.io/instance: ingress-nginx
          app.kubernetes.io/name: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/component: controller
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/name: ingress-nginx
        spec:
          dnsPolicy: ClusterFirstWithHostNet # DNS policy adjusted for host networking (originally ClusterFirst)
          hostNetwork: true # use the host network so that nginx binds ports 80 and 443 of the node directly
          containers:
            - args:
                - /nginx-ingress-controller
                - --election-id=ingress-controller-leader
                - --controller-class=k8s.io/ingress-nginx
                - --ingress-class=nginx
                - --report-node-internal-ip-address=true
                - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
                - --validating-webhook=:8443
                - --validating-webhook-certificate=/usr/local/certificates/cert
                - --validating-webhook-key=/usr/local/certificates/key
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: LD_PRELOAD
                  value: /usr/local/lib/libmimalloc.so
              image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.2
              imagePullPolicy: IfNotPresent
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /wait-shutdown
              livenessProbe:
                failureThreshold: 5
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 1
              name: controller
              ports:
                - containerPort: 80
                  name: http
                  protocol: TCP
                - containerPort: 443
                  name: https
                  protocol: TCP
                - containerPort: 8443
                  name: webhook
                  protocol: TCP
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 1
              resources: # resource requests and limits
                requests:
                  cpu: 100m
                  memory: 90Mi
                limits:
                  cpu: 500m
                  memory: 500Mi
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  add:
                    - NET_BIND_SERVICE
                  drop:
                    - ALL
                runAsUser: 101
              volumeMounts:
                - mountPath: /usr/local/certificates/
                  name: webhook-cert
                  readOnly: true
          nodeSelector:
            node-role: ingress # from now on, labelling a node with this is enough to have ingress-nginx deployed on it
          serviceAccountName: ingress-nginx
          terminationGracePeriodSeconds: 300
          volumes:
            - name: webhook-cert
              secret:
                secretName: ingress-nginx-admission
    ---

    apiVersion: batch/v1
    kind: Job
    metadata:
      annotations:
        helm.sh/hook: pre-install,pre-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission-create
      namespace: ingress-nginx
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/component: admission-webhook
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/managed-by: Helm
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
            app.kubernetes.io/version: 1.1.2
            helm.sh/chart: ingress-nginx-4.0.18
          name: ingress-nginx-admission-create
        spec:
          containers:
            - args:
                - create
                - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
                - --namespace=$(POD_NAMESPACE)
                - --secret-name=ingress-nginx-admission
              env:
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
              imagePullPolicy: IfNotPresent
              name: create
              securityContext:
                allowPrivilegeEscalation: false
          nodeSelector:
            kubernetes.io/os: linux
          restartPolicy: OnFailure
          securityContext:
            fsGroup: 2000
            runAsNonRoot: true
            runAsUser: 2000
          serviceAccountName: ingress-nginx-admission
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      annotations:
        helm.sh/hook: post-install,post-upgrade
        helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.1.2
        helm.sh/chart: ingress-nginx-4.0.18
      name: ingress-nginx-admission-patch
      namespace: ingress-nginx
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/component: admission-webhook
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/managed-by: Helm
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
            app.kubernetes.io/version: 1.1.2
            helm.sh/chart: ingress-nginx-4.0.18
          name: ingress-nginx-admission-patch
        spec:
          containers:
            - args:
                - patch
                - --webhook-name=ingress-nginx-admission
                - --namespace=$(POD_NAMESPACE)
                - --patch-mutating=false
                - --secret-name=ingress-nginx-admission
                - --patch-failure-policy=Fail
              env:
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
              imagePullPolicy: IfNotPresent
              name: patch
              securityContext:
                allowPrivilegeEscalation: false
          nodeSelector:
            kubernetes.io/os: linux
          restartPolicy: OnFailure
          securityContext:
            fsGroup: 2000
            runAsNonRoot: true
            runAsUser: 2000
          serviceAccountName: ingress-nginx-admission
    ---

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.1.2
    helm.sh/chart: ingress-nginx-4.0.18
  name: nginx
spec:
  controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.1.2
    helm.sh/chart: ingress-nginx-4.0.18
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None

- 2: Label the worker nodes:

[root@k8s-master ingress]# kubectl label node k8s-slave01 node-role=ingress

node/k8s-slave01 labeled

[root@k8s-master ingress]# kubectl label node k8s-slave02 node-role=ingress

node/k8s-slave02 labeled

Install Ingress

- 1: Apply the deploy.yaml manifest. (Ports 80 and 443 on the worker nodes must be free; no other process may be using them.)

[root@k8s-master ingress]# kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

daemonset.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

Verify the installation

[root@k8s-master ingress]# kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller ClusterIP 10.96.70.216 <none> 80/TCP,443/TCP 2m4s

ingress-nginx-controller-admission ClusterIP 10.96.91.236 <none> 443/TCP 2m4s

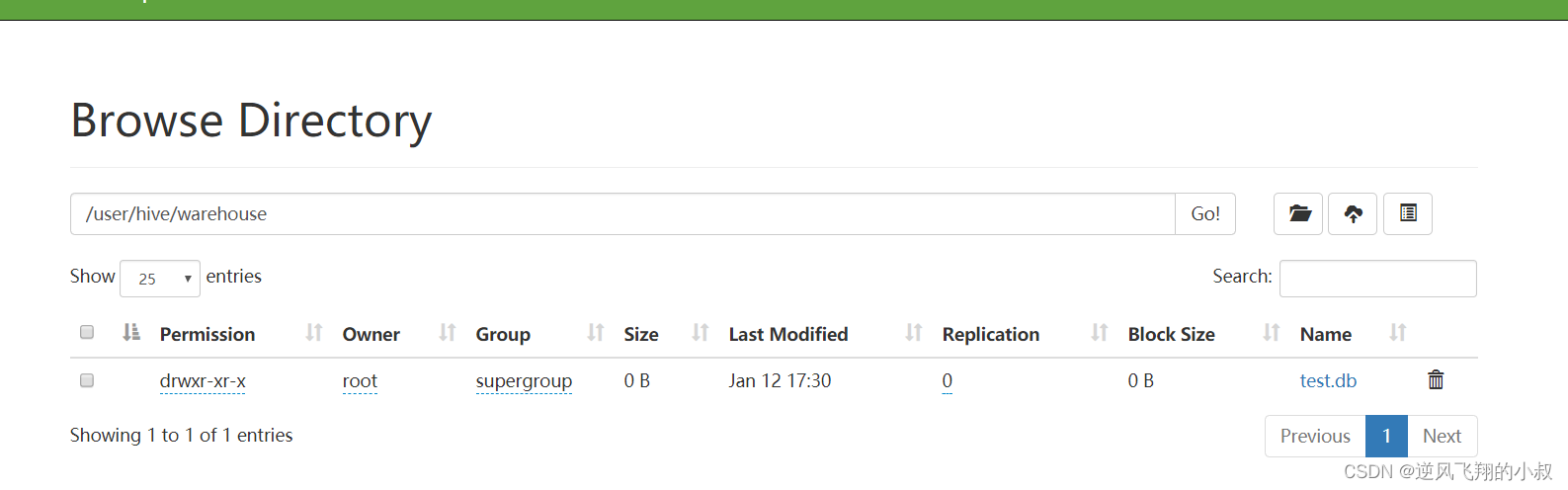

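Ingress in practice

Steps in this example:

1: Use Deployments to create one set of Nginx Pods and one set of Tomcat Pods (image tomcat:8.5-jre10-slim);

2: Create a Service for the Nginx Pods (type must be ClusterIP);

3: Create a Service for the Tomcat Pods (type must be ClusterIP);

4: Access Nginx and Tomcat through an HTTP proxy;

5: Access Nginx and Tomcat through an HTTPS proxy;

Prepare the Services and Pods

- 1: Create the manifest file:

vim nginx-tomcat.yaml

- Contents: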

apiVersion: v1
kind: Namespace
metadata:
  name: test
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-nginx
  namespace: test
spec:
  replicas: 3
  selector:
    matchLabels:
      app: pod-nginx-label
  template:
    metadata:
      labels:
        app: pod-nginx-label
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-tomcat
  namespace: test
spec:
  replicas: 3
  selector:
    matchLabels:
      app: pod-tomcat-label
  template:
    metadata:
      labels:
        app: pod-tomcat-label
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5-jre10-slim # use this version; other versions may not ship the Tomcat home page
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: service-nginx
  namespace: test
spec:
  selector:
    app: pod-nginx-label
  type: ClusterIP # Service type is ClusterIP
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: service-tomcat
  namespace: test
spec:
  selector:
    app: pod-tomcat-label
  type: ClusterIP # Service type is ClusterIP
  ports:
  - port: 8080
    targetPort: 8080

- 2: Apply the manifest:

[root@k8s-master ingress]# kubectl apply -f nginx-tomcat.yaml

namespace/test created

deployment.apps/deployment-nginx created

deployment.apps/deployment-tomcat created

service/service-nginx created

service/service-tomcat created

- 3: Check the Services and Pods:

[root@k8s-master ingress]# kubectl get service,pods -n test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/service-nginx ClusterIP None <none> 80/TCP 101m

service/service-tomcat ClusterIP None <none> 8080/TCP 101m

NAME READY STATUS RESTARTS AGE

pod/deployment-nginx-66f79cc9f5-4zdrm 1/1 Running 0 101m

pod/deployment-nginx-66f79cc9f5-lp5z7 1/1 Running 0 101m

pod/deployment-nginx-66f79cc9f5-n4h4w 1/1 Running 0 101m

pod/deployment-tomcat-66f674b9f6-7fzxd 1/1 Running 0 101m

pod/deployment-tomcat-66f674b9f6-d5jlq 1/1 Running 0 101m

pod/deployment-tomcat-66f674b9f6-f6vft 1/1 Running 0 101m

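- Summary: the nginx and Tomcat Services show no cluster IP (CLUSTER-IP is None) and expose no nodePort, so they occupy no port on the node machines. Note that a CLUSTER-IP of None means the Services were created as headless Services, that is, with clusterIP: None; the manifest as written above does not set that field, and without it Kubernetes allocates a cluster IP. Either way, a ClusterIP Service cannot be reached from outside the cluster. So how do we reach these Pods without a nodePort? This is where Ingress comes in. There are two approaches, HTTP and HTTPS.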

HTTP proxy mode

- 1: Create the ingress-http manifest file:

vim ingress-http.yaml

- Contents:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-http
  namespace: test
  annotations: # annotations read by the ingress controller
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTP"
spec:
  rules: # each rule matches http://<host> + <path>
  - host: my.nginx.com # this rule matches http://my.nginx.com/
    http:
      paths:
      - path: /
        pathType: Prefix # Prefix matching: every path under my.nginx.com/ matches
        backend: # the backend Service that requests are routed to
          service:
            name: service-nginx # name of the nginx Service (see the Service manifest above)
            port:
              number: 80 # port of the nginx Service (see the Service manifest above)
  - host: my.tomcat.com # this rule matches http://my.tomcat.com/abc
    http:
      paths:
      - path: /abc
        pathType: Prefix # Prefix matching
        backend: # the backend Service that requests are routed to
          service:
            name: service-tomcat # name of the Tomcat Service (see the Service manifest above)
            port:
              number: 8080 # port of the Tomcat Service (see the Service manifest above)

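- pathType values:

  - Prefix: matches on a URL path prefix split by /. Matching is case sensitive and is done element by element, where a path element is one of the labels obtained by splitting the path on the / separator. A request matches path p if p is an element-wise prefix of the request path.

  - Exact: matches the URL path exactly and is case sensitive.

  - ImplementationSpecific: with this path type, matching is up to the IngressClass. An implementation can treat it as a separate pathType or handle it the same way as Prefix or Exact.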
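The Prefix and Exact rules above can be sketched in a few lines of Python. This is only a simplified model of the Kubernetes API description, not the code that ingress-nginx actually runs; the function name `path_matches` is made up for illustration, and ImplementationSpecific is left out because its behaviour depends on the controller.

```python
def path_matches(path_type: str, rule_path: str, request_path: str) -> bool:
    """Return True if request_path matches rule_path under the given pathType.

    Simplified sketch: only "Exact" and "Prefix" are modelled.
    """
    if path_type == "Exact":
        # Exact: the whole path must be identical, including case.
        return request_path == rule_path
    if path_type == "Prefix":
        # Prefix: compare element by element, where elements are the
        # labels between "/" separators (empty elements are dropped,
        # so a trailing slash makes no difference).
        rule_elems = [e for e in rule_path.split("/") if e]
        req_elems = [e for e in request_path.split("/") if e]
        return req_elems[: len(rule_elems)] == rule_elems
    raise ValueError(f"unsupported pathType: {path_type}")


if __name__ == "__main__":
    print(path_matches("Prefix", "/abc", "/abc/index.jsp"))  # True
    print(path_matches("Prefix", "/abc", "/abcd"))           # False: "abcd" is not "abc"
    print(path_matches("Prefix", "/", "/anything/at/all"))   # True
    print(path_matches("Exact", "/abc", "/abc/"))            # False
```

The second call shows why Prefix is not a plain string prefix: /abcd starts with the characters /abc, but its first path element differs, so it does not match.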

- 2: Apply the manifest:

[root@k8s-master ingress]# kubectl apply -f ingress-http.yaml

ingress.networking.k8s.io/ingress-http created

- 3: Inspect ingress-http with describe:

[root@k8s-master ingress]# kubectl describe ingress ingress-http -n test

Name: ingress-http

Namespace: test

Address: 192.168.184.101

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

my.nginx.com

/ service-nginx:80 (10.244.232.71:80,10.244.232.73:80,10.244.232.74:80)

my.tomcat.com

/abc service-tomcat:8080 (10.244.232.72:8080,10.244.232.75:8080,10.244.232.76:8080)

Annotations: kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/backend-protocol: HTTP

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 8s (x2 over 13s) nginx-ingress-controller Scheduled for sync

- 4: Find the address that the ingress-http Ingress is published on and note that IP:

- Here ingress-http is reachable at 192.168.184.101.

[root@k8s-master ingress]# kubectl get ingress ingress-http -n test -o wide

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-http <none> my.nginx.com,my.tomcat.com 192.168.184.101 80 2m4s
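To make the rule table shown by describe more concrete, here is a small sketch of how a request's host and path select a backend. It is an illustrative model only: the names `RULES`, `split_path` and `resolve_backend` are hypothetical, the rules are copied from ingress-http.yaml, and the real controller does this through its generated nginx configuration. When nothing matches, the request goes to the controller's default backend.

```python
# Rules copied from ingress-http.yaml above: (host, path, backend).
RULES = [
    ("my.nginx.com", "/", "service-nginx:80"),
    ("my.tomcat.com", "/abc", "service-tomcat:8080"),
]


def split_path(path):
    """Split a URL path into its non-empty "/"-separated elements."""
    return [element for element in path.split("/") if element]


def resolve_backend(host, path, rules=RULES):
    """Return the backend chosen for a request, or None if no rule matches.

    All rules here use pathType Prefix; among the matching rules for
    the host, the one with the longest path wins.
    """
    request_elements = split_path(path)
    best_backend, best_length = None, -1
    for rule_host, rule_path, backend in rules:
        rule_elements = split_path(rule_path)
        is_prefix = request_elements[: len(rule_elements)] == rule_elements
        if host == rule_host and is_prefix and len(rule_elements) > best_length:
            best_backend, best_length = backend, len(rule_elements)
    return best_backend


if __name__ == "__main__":
    print(resolve_backend("my.nginx.com", "/index.html"))  # service-nginx:80
    print(resolve_backend("my.tomcat.com", "/abc/docs"))   # service-tomcat:8080
    print(resolve_backend("my.tomcat.com", "/"))           # None
```

The last call is worth noting: with these rules, http://my.tomcat.com/ without the /abc prefix matches no rule at all.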

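Access test (from Windows)

- 1: Add the following entries to the Windows hosts file (C:\Windows\System32\drivers\etc\hosts):

- The Ingress address is 192.168.184.101; it can be found with the command kubectl get ingress ingress-http -n test -o wide.

# Simulates DNS: 192.168.184.101 is the machine where the ingress is deployed (the IP found in step 4 above); my.nginx.com and my.tomcat.com are the host names the Ingress rules listen for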

192.168.184.101 my.nginx.com

192.168.184.101 my.tomcat.com

- 2: Open the URLs in Firefox, Chrome, or another browser (here we use curl from the Windows command line instead; the result is the same):

curl http://my.nginx.com/

curl http://my.tomcat.com/abc
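As an alternative to editing the hosts file, a client can connect to the ingress IP directly and send the host name in the Host header, which is what the Ingress rules match on. The sketch below uses only the Python standard library; the helper names `build_ingress_request` and `fetch_status` are hypothetical, and the IP and host name are the ones used in this walkthrough.

```python
import urllib.error
import urllib.request


def build_ingress_request(ingress_ip, host, path="/"):
    """Build a GET request for http://<ingress_ip><path> with an explicit Host header."""
    return urllib.request.Request(f"http://{ingress_ip}{path}", headers={"Host": host})


def fetch_status(request, timeout=5):
    """Send the request and return the HTTP status code (needs access to the node)."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses still carry a status code


if __name__ == "__main__":
    request = build_ingress_request("192.168.184.101", "my.nginx.com")
    # Only builds and prints the request; call fetch_status(request) to send it.
    print(request.full_url, request.get_header("Host"))
```

Calling fetch_status(request) from a machine that can reach 192.168.184.101 should then behave like the curl call against my.nginx.com, without any hosts entry.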

Access test (from Linux)

- Without any setup, direct access fails by default:

[root@k8s-master ingress]# curl http://my.nginx.com/

curl: (6) Could not resolve host: my.nginx.com; Unknown error

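- Set it up:

- 1: Open the hosts file:

vim /etc/hosts

- Append the following entries (append only; do not change anything else in the file), then save and exit:

- The Ingress address is 192.168.184.101; it can be found with the command kubectl get ingress ingress-http -n test -o wide.

# Simulates DNS: 192.168.184.101 is the machine where the ingress is deployed (the IP found in step 4 above); my.nginx.com and my.tomcat.com are the host names the Ingress rules listen for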

192.168.184.101 my.nginx.com

192.168.184.101 my.tomcat.com

- 2: Access it again:

[root@k8s-master ingress]# curl http://my.nginx.com/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>


Global nginx configuration for Ingress

- 1: Edit the ConfigMap:

kubectl edit cm ingress-nginx-controller -n ingress-nginx

- Add the following entries:

data:
  map-hash-bucket-size: "128" # global nginx setting
  ssl-protocols: "TLSv1.2 TLSv1.3" # SSLv2 is obsolete and insecure; these are the ingress-nginx defaults

Ingress rate limiting

Rate limit configuration format (set in the Ingress manifest):

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTP"
    # Rate limit: number of requests accepted per second from a given IP.
    # The burst limit is this value multiplied by the burst multiplier (default 5).
    # When a client exceeds the limit, limit-req-status-code is returned (default: 503).
    nginx.ingress.kubernetes.io/limit-rps: "2"
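To see what a limit of 2 requests per second with a burst of 2 x 5 = 10 means in practice, the following sketch simulates the accounting for a single client. It is a simplified model and rests on several assumptions: all requests come from one IP, they hit a single controller instance, and requests inside the burst are served immediately rather than delayed. The function name `simulate_limit_req` is hypothetical.

```python
def simulate_limit_req(arrival_times, rate_per_sec, burst):
    """Return one status code (200 or 503) per request arrival time (seconds).

    Simplified leaky-bucket model: each accepted request adds 1 to an
    "excess" counter that drains at rate_per_sec; a request is rejected
    when accepting it would push the excess above burst.
    """
    statuses = []
    excess = 0.0
    last_accepted = None
    for now in arrival_times:
        if last_accepted is None:
            candidate = 0.0  # the first request starts with an empty bucket
        else:
            drained = rate_per_sec * (now - last_accepted)
            candidate = max(0.0, excess - drained + 1.0)
        if candidate > burst:
            statuses.append(503)  # rejected: bucket state is left unchanged
        else:
            statuses.append(200)
            excess, last_accepted = candidate, now
    return statuses


if __name__ == "__main__":
    limit_rps = 2         # value of the limit-rps annotation
    burst_multiplier = 5  # default multiplier
    burst = limit_rps * burst_multiplier
    # 20 requests arriving at the same instant from one client.
    result = simulate_limit_req([0.0] * 20, limit_rps, burst)
    print(result.count(200), result.count(503))  # 11 9
```

In this model, a client that stays at or below 2 requests per second is never rejected, while a sudden flood is cut off once the burst allowance is used up.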

Rate limiting in practice

- 1: Create the manifest file:

vim ingress-rate.yaml

- Contents:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-rate
  namespace: test
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTP"
    nginx.ingress.kubernetes.io/limit-rps: "2" # rate limit
spec:
  rules:
  - host: my.nginx.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service-nginx
            port:
              number: 80

- 2: Apply the manifest:

[root@k8s-master ingress]# kubectl apply -f ingress-rate.yaml

ingress.networking.k8s.io/ingress-rate created

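- 3: Access my.nginx.com/ repeatedly in quick succession. Once the request rate exceeds the limit, the response becomes 503 Service Temporarily Unavailable, which shows that rate limiting is in effect.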
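Instead of refreshing by hand, the rapid requests can be scripted. The sketch below sends a number of requests back to back and tallies the status codes. The helper names `http_status` and `count_statuses` are hypothetical, and it assumes the hosts entry for my.nginx.com from the access test is in place on the machine running it.

```python
import collections
import urllib.error
import urllib.request


def http_status(url, timeout=5):
    """Return the HTTP status code for a GET request, including 4xx/5xx codes."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def count_statuses(url, attempts, fetch=http_status):
    """Send `attempts` requests back to back and tally the status codes."""
    return collections.Counter(fetch(url) for _ in range(attempts))
```

For example, print(count_statuses("http://my.nginx.com/", 30)) should show a mix of 200 and 503 once the limit is exceeded; the exact split depends on timing. The fetch parameter exists so the tally logic can be tried without a cluster by passing in a stand-in function.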

❤️💛🧡 That's the end of this chapter. See you in the next one! ❤️💛🧡
