Introduction to ZLMediaKit

ZLMediaKit is a high-performance, production-grade streaming media server framework written in C++11, similar to SRS. It supports many protocols (RTSP/RTMP/HLS/HTTP-FLV/WebSocket-FLV/GB28181/HTTP-TS/WebSocket-TS/HTTP-fMP4/WebSocket-fMP4/MP4/WebRTC) and can convert between them.

Requirements

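ZLMediaKit ships with its own player, but it is awkward to use on Windows and keeps reporting "overload" during playback. My needs are simple: I want to measure the playback latency of various streams, and with a third-party player such as VLC the latency is hard to pin down. So I decided to use the C API that ZLMediaKit provides and do the decoding and display myself. FFmpeg software decoding and OpenGL display of yuv420p/nv12/nv21 both have mature, ready-made building blocks (thanks to Lei Xiaohua, whose code was the first building block I used when I entered this field), so I set up a project. The implementation is fairly simple; these are my notes.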

Project setup

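- OpenSSL

ZLMediaKit depends on OpenSSL. Download the installer from the download site and run it; I installed Win64 OpenSSL v1.1.1w.

- FFmpeg

Decoding requires the FFmpeg libraries. Download a prebuilt archive from the GitHub builds page; after extraction it contains the headers and dynamic libraries. I downloaded ffmpeg-master-latest-win64-gpl.zip.

- freeglut and GLEW

Displaying YUV with OpenGL needs these two libraries. Both have to be built from source: use CMake to generate a Visual Studio project and compile the library files (see the referenced article). The process is simple: download and extract the source, create a build folder inside it, open cmake-gui, set the source directory and the build directory, generate the project files, and build them with Visual Studio.

- ZLMediaKit C API and the mk_api library

As above, configure the project with cmake-gui. After the build, mk_api.lib (the import library) and mk_api.dll are generated in release\windows\Debug\Debug, and the headers are in the api\include directory of the source tree.

Note: install OpenSSL first, then build ZLMediaKit.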

Implementation

FFmpeg software decoding

For hardware decoding, see FFmpeg's official hw_decode.c example.

VideoDecoder.h

#pragma once

#include <iostream>

#include <cstdint>

extern "C" {

#include <libavcodec/avcodec.h>

#include <libavutil/imgutils.h>

#include <libavutil/opt.h>

}

typedef enum DecPixelFormat_ {

DEC_FMT_NONE = -1,

DEC_FMT_YUV420P, ///< planar YUV 4:2:0, 12bpp, (1 Cr & Cb sample per

///< 2x2 Y samples)

DEC_FMT_NV12, ///< planar YUV 4:2:0, 12bpp, 1 plane for Y and 1 plane

///< for the UV components, which are interleaved (first

///< byte U and the following byte V)

DEC_FMT_NV21, ///< as above, but U and V bytes are swapped

} DecPixelFormat;

class VideoDecoder {

public:

VideoDecoder(int32_t codec_id);// 0 : h264, 1 : hevc

~VideoDecoder();

uint8_t* decode(const uint8_t* src, uint32_t len, int32_t& pix_w, int32_t& pix_h, int32_t& format);

private:

int32_t AVPixelFormat2Format(int32_t av_fmt);

private:

AVCodecContext* dec_context = nullptr;

};

VideoDecoder.cpp

#include <cstdio>

#include <cstdlib>

#include <iostream>

#include "videoDecoder.h"

#pragma comment(lib, "avcodec.lib")

#pragma comment(lib, "avutil.lib")

#pragma comment(lib, "swresample.lib")

VideoDecoder::VideoDecoder(int32_t codec_id)

{

// Select the decoder according to the input parameter

const AVCodec* codec = nullptr;

if (codec_id == 0) {

codec = avcodec_find_decoder(AV_CODEC_ID_H264);

}

else if (codec_id == 1) {

codec = avcodec_find_decoder(AV_CODEC_ID_HEVC);

}

else {

std::cerr << "unknown codec_id" << std::endl;

}

if (!codec) {

std::cerr << "Codec [" << codec_id << "] not found\n";

return;

}

dec_context = avcodec_alloc_context3(codec);

if (!dec_context) {

std::cerr << "Could not allocate video codec context\n";

return;

}

// Open the decoder

if (avcodec_open2(dec_context, codec, NULL) < 0) {

std::cerr << "Could not open codec\n";

return;

}

std::cout << "open codec success" << std::endl;

}

VideoDecoder::~VideoDecoder()

{

std::cout << "VideoDecoder::~VideoDecoder()" << std::endl;

if (dec_context) {

avcodec_free_context(&dec_context);

dec_context = nullptr;

}

}

uint8_t* VideoDecoder::decode(const uint8_t* src, uint32_t len, int32_t& pix_w, int32_t& pix_h, int32_t& format)

{

AVPacket* pkt = nullptr;

AVFrame* frame = NULL;

AVFrame* tmp_frame = NULL;

uint8_t* buffer = NULL;

AVPixelFormat tmp_pixFmt = AV_PIX_FMT_NONE;

int size = 0;

pix_w = 0;

pix_h = 0;

format = DEC_FMT_NONE;

if (dec_context == nullptr) {

std::cerr << "dec_context is nullptr\n";

return NULL;

}

if (src == nullptr || len == 0) {

std::cerr << "src or len is null" << std::endl;

return nullptr;

}

pkt = av_packet_alloc();

if (pkt == nullptr) {

std::cerr << "Could not allocate packet\n";

return NULL;

}

pkt->data = const_cast<uint8_t*>(src); // FFmpeg expects non-const data pointer

pkt->size = len;

int ret = avcodec_send_packet(dec_context, pkt);

if (ret < 0) {

av_packet_free(&pkt);

pkt = nullptr;

fprintf(stderr, "Error during decoding\n");

return buffer;

}

while (1) {

if (!(frame = av_frame_alloc())) {

fprintf(stderr, "Can not alloc frame\n");

ret = AVERROR(ENOMEM);

goto fail;

}

ret = avcodec_receive_frame(dec_context, frame);

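if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

// No frame is available yet; release the packet as well so it does not leak

av_packet_free(&pkt);

av_frame_free(&frame);

return nullptr;

}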

else if (ret < 0) {

fprintf(stderr, "Error while decoding\n");

goto fail;

}

tmp_frame = frame;

tmp_pixFmt = static_cast<AVPixelFormat>(tmp_frame->format);

size = av_image_get_buffer_size(tmp_pixFmt, tmp_frame->width, tmp_frame->height, 1);

buffer = (uint8_t*)malloc(sizeof(uint8_t) * size);

if (!buffer) {

fprintf(stderr, "Can not alloc buffer\n");

ret = AVERROR(ENOMEM);

goto fail;

}

ret = av_image_copy_to_buffer(buffer, size,

(const uint8_t* const*)tmp_frame->data,

(const int*)tmp_frame->linesize, tmp_pixFmt,

tmp_frame->width, tmp_frame->height, 1);

if (ret < 0) {

fprintf(stderr, "Can not copy image to buffer\n");

goto fail;

}

pix_w = tmp_frame->width;

pix_h = tmp_frame->height;

format = AVPixelFormat2Format(tmp_pixFmt);

fail:

av_packet_free(&pkt);

pkt = nullptr;

av_frame_free(&frame);

frame = NULL;

if (ret < 0 && buffer != NULL) {

free(buffer); // allocated with malloc above, so release it with free, not av_free

buffer = NULL;

}

return buffer;

}

return buffer;

}

int32_t VideoDecoder::AVPixelFormat2Format(int32_t pix_fmt)

{

switch (pix_fmt) {

case AV_PIX_FMT_YUV420P:

case AV_PIX_FMT_YUVJ420P:

return DEC_FMT_YUV420P;

case AV_PIX_FMT_NV12:

return DEC_FMT_NV12;

case AV_PIX_FMT_NV21:

return DEC_FMT_NV21;

default:

return DEC_FMT_NONE;

}

return DEC_FMT_NONE;

}
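The buffer returned by decode() is tightly packed (the last argument to av_image_copy_to_buffer is an alignment of 1), which is what lets the display code locate each plane with plain pointer arithmetic. As a rough sketch of that layout, assuming even width and height, the offsets can be computed as follows. PackedLayout and packedLayout420 are hypothetical names of my own, not part of FFmpeg.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not an FFmpeg API): where each plane starts inside a
// tightly packed 4:2:0 buffer with alignment 1.
struct PackedLayout {
    std::size_t y_offset;      // start of the Y plane
    std::size_t chroma_offset; // start of U (YUV420P) or of the interleaved UV/VU plane (NV12/NV21)
    std::size_t v_offset;      // start of V for YUV420P; equals total for NV12/NV21
    std::size_t total;         // total buffer size in bytes
};

// planar == true describes YUV420P, planar == false describes NV12/NV21.
// Assumption: width and height are positive and even.
inline PackedLayout packedLayout420(int width, int height, bool planar) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const std::size_t luma = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    const std::size_t chroma = luma / 4; // size of one U or V plane
    PackedLayout layout{};
    layout.y_offset = 0;
    layout.chroma_offset = luma;
    layout.total = luma + 2 * chroma;
    layout.v_offset = planar ? luma + chroma : layout.total;
    return layout;
}
```

For a 1920x1080 YUV420P frame this gives a Y plane of 2073600 bytes followed by two chroma planes of 518400 bytes each, matching the y / u / v pointers computed in display_yv12.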

Displaying YUV with OpenGL

yuvDisplayer.h

#pragma once

#include <cstdint>

#include <iostream>

#include <string>

#define FREEGLUT_STATIC

#include "GL/glew.h"

#include "GL/glut.h"

#define ATTRIB_VERTEX 3

#define ATTRIB_TEXTURE 4

// Rotate the texture

#define TEXTURE_ROTATE 0

// Show half of the Texture

#define TEXTURE_HALF 0

typedef enum DisPixelFormat_ {

DIS_FMT_NONE = -1,

DIS_FMT_YUV420P, ///< planar YUV 4:2:0, 12bpp, (1 Cr & Cb sample per

///< 2x2 Y samples)

DIS_FMT_NV12, ///< planar YUV 4:2:0, 12bpp, 1 plane for Y and 1 plane

///< for the UV components, which are interleaved (first

///< byte U and the following byte V)

DIS_FMT_NV21, ///< as above, but U and V bytes are swapped

} DisPixelFormat;

class YUVDisplayer {

public:

YUVDisplayer(const std::string& title = "YUV Renderer", const int32_t x = -1, const int32_t y = -1);

~YUVDisplayer();

void display(const uint8_t* pData, int width, int height, int32_t format = DIS_FMT_NV12);

static bool getWindowSize(int32_t& width, int32_t& height);

private:

bool bInit;

int32_t gl_format;

std::string gl_title;

int32_t gl_x;

int32_t gl_y;

GLuint program;

GLuint id_y, id_u, id_v, id_uv; // Texture id

GLuint textureY, textureU, textureV, textureUV;

void initGL(int width, int height, int32_t format);

bool InitShaders(int32_t format);

void initTextures(int32_t format);

void initYV12Textures();

void initNV12Textures();

void display_yv12(const uint8_t* pData, int width, int height);

void display_nv12(const uint8_t* pData, int width, int height);

};

yuvDisplayer.cpp

#include <string.h>

#include <chrono>

#include <iostream>

#include <vector>

#include "yuvDisplayer.h"

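// Link against the GLEW import library only; glew32s.lib is the static variant and is not needed when the GLEW DLL is used

#pragma comment(lib, "glew32.lib")

// Shader sources: one vertex shader and one fragment shader per pixel format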

static const char* vs = "attribute vec4 vertexIn;\n"

"attribute vec2 textureIn;\n"

"varying vec2 textureOut;\n"

"void main(void){\n"

" gl_Position = vertexIn;\n"

" textureOut = textureIn;\n"

"}\n";

static const char* fs_yuv420p = "varying vec2 textureOut;\n"

"uniform sampler2D tex_y;\n"

"uniform sampler2D tex_u;\n"

"uniform sampler2D tex_v;\n"

"void main(void){\n"

" vec3 yuv;\n"

" vec3 rgb;\n"

" yuv.x = texture2D(tex_y, textureOut).r;\n"

" yuv.y = texture2D(tex_u, textureOut).r - 0.5;\n"

" yuv.z = texture2D(tex_v, textureOut).r - 0.5;\n"

" rgb = mat3( 1, 1, 1,\n"

" 0, -0.39465, 2.03211,\n"

" 1.13983, -0.58060, 0) * yuv;\n"

" gl_FragColor = vec4(rgb, 1);\n"

"}\n";

static const char* fs_nv12 = "varying vec2 textureOut;\n"

"uniform sampler2D tex_y;\n"

"uniform sampler2D tex_uv;\n"

"void main(void) {\n"

" vec3 yuv;\n"

" vec3 rgb;\n"

" yuv.x = texture2D(tex_y, textureOut).r - 0.0625;\n"

" vec2 uv = texture2D(tex_uv, textureOut).rg - vec2(0.5, 0.5);\n"

" yuv.y = uv.x;\n"

" yuv.z = uv.y;\n"

" rgb = mat3(1.164, 1.164, 1.164,\n"

" 0.0, -0.391, 2.018,\n"

" 1.596, -0.813, 0.0) * yuv;\n"

" gl_FragColor = vec4(rgb, 1.0);\n"

"}\n";

static const char* fs_nv21 = "varying vec2 textureOut;\n"

"uniform sampler2D tex_y;\n"

"uniform sampler2D tex_uv;\n"

"void main(void) {\n"

" vec3 yuv;\n"

" vec3 rgb;\n"

" yuv.x = texture2D(tex_y, textureOut).r;\n"

" vec2 uv = texture2D(tex_uv, textureOut).rg - vec2(0.5, 0.5);\n"

" yuv.y = uv.y;\n"

" yuv.z = uv.x;\n"

" rgb = mat3(1, 1, 1,\n"

" 0, -0.39465, 2.03211,\n"

" 1.13983, -0.58060, 0) * yuv;\n"

" gl_FragColor = vec4(rgb, 1);\n"

"}\n";

#if TEXTURE_ROTATE

static const GLfloat vertexVertices[] = {

-1.0f, -0.5f,

0.5f, -1.0f,

-0.5f, 1.0f,

1.0f, 0.5f,

};

#else

static const GLfloat vertexVertices[] = {

-1.0f, -1.0f,

1.0f, -1.0f,

-1.0f, 1.0f,

1.0f, 1.0f,

};

#endif

#if TEXTURE_HALF

static const GLfloat textureVertices[] = {

0.0f, 1.0f,

0.5f, 1.0f,

0.0f, 0.0f,

0.5f, 0.0f,

};

#else

static const GLfloat textureVertices[] = {

0.0f, 1.0f,

1.0f, 1.0f,

0.0f, 0.0f,

1.0f, 0.0f,

};

#endif

YUVDisplayer::YUVDisplayer(const std::string& title, const int32_t x, const int32_t y)

: bInit(false), gl_x(x), gl_y(y), gl_format(-1),

textureY(0), textureU(0), textureV(0), textureUV(0),

id_y(0), id_u(0), id_v(0), id_uv(0), program(0)

{

if (title.empty())

gl_title = "opengl yuv display";

else

gl_title = title;

}

YUVDisplayer::~YUVDisplayer() {

if (!bInit) return;

// The texture objects are id_y/id_u/id_v/id_uv; textureY/U/V/UV only hold uniform locations

if (id_y) glDeleteTextures(1, &id_y);

if (id_u) glDeleteTextures(1, &id_u);

if (id_v) glDeleteTextures(1, &id_v);

if (id_uv) glDeleteTextures(1, &id_uv);

glDeleteProgram(program);

bInit = false;

}

void YUVDisplayer::initGL(int32_t width, int32_t height, int32_t format)

{

int xpos = 0, ypos = 0;

int32_t screen_w = 0;

int32_t screen_h = 0;

int32_t width_win = width;

int32_t height_win = height;

if (!getWindowSize(screen_w, screen_h)) {

screen_w = 1920;

screen_h = 1080;

std::cerr << "getWindowSize failed!" << std::endl;

}

if (width > (screen_w * 2 / 3) || height > (screen_h * 2 / 3)) {

width_win = (width * 2 / 3);

height_win = (height * 2 / 3);

}

else {

width_win = width;

height_win = height;

}

if (gl_x < 0 || gl_y < 0) {

xpos = (screen_w - width_win) / 2;

ypos = (screen_h - height_win) / 2;

}

else {

xpos = gl_x;

ypos = gl_y;

}

int argc = 1;

char argv[1][16] = { 0 };

strcpy_s(argv[0], sizeof(argv[0]), "YUV Renderer");

char* pargv[1] = { argv[0] };

glutInit(&argc, pargv);

// GLUT_DOUBLE

glutInitDisplayMode(GLUT_DOUBLE |

GLUT_RGBA /*| GLUT_STENCIL | GLUT_DEPTH*/);

glutInitWindowPosition(xpos, ypos);

glutInitWindowSize(width_win, height_win);

glutCreateWindow(gl_title.c_str());

GLenum glewState = glewInit();

if (GLEW_OK != glewState) {

std::cerr << "glewInit failed!" << std::endl;

return;

}

if (!InitShaders(format)) {

std::cerr << "InitShaders failed!" << std::endl;

return;

}

initTextures(format);

gl_format = format;

const uint8_t* glVersion = glGetString(GL_VERSION);

bInit = true;

std::cout << "opengl(" << glVersion << ") init success!" << std::endl;

}

bool YUVDisplayer::getWindowSize(int32_t& width, int32_t& height) {

#if 0

Display* display = XOpenDisplay(NULL);

if (!display) {

std::cerr << "Unable to open X display" << std::endl;

return false;

}

int screen_num = DefaultScreen(display);

width = DisplayWidth(display, screen_num);

height = DisplayHeight(display, screen_num);

XCloseDisplay(display);

#endif

width = 1920;

height = 1080;

return true;

}

// Init Shader

bool YUVDisplayer::InitShaders(int32_t format) {

GLint vertCompiled, fragCompiled, linked;

GLuint v, f; // glCreateShader returns GLuint

// Shader: step1

v = glCreateShader(GL_VERTEX_SHADER);

f = glCreateShader(GL_FRAGMENT_SHADER);

// Get source code

// Shader: step2

glShaderSource(v, 1, &vs, NULL);

if (format == DIS_FMT_YUV420P)

glShaderSource(f, 1, &fs_yuv420p, NULL);

else if (format == DIS_FMT_NV12)

glShaderSource(f, 1, &fs_nv12, NULL);

else if (format == DIS_FMT_NV21)

glShaderSource(f, 1, &fs_nv21, NULL);

else {

std::cerr << "InitShaders Invalid format [" << format << "]" << std::endl;

return false;

}

// Shader: step3

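glCompileShader(v);

// Debug

glGetShaderiv(v, GL_COMPILE_STATUS, &vertCompiled);

glCompileShader(f);

glGetShaderiv(f, GL_COMPILE_STATUS, &fragCompiled);

if (!vertCompiled || !fragCompiled) {

std::cerr << "InitShaders: shader compilation failed" << std::endl;

return false;

}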

// Program: Step1

program = glCreateProgram();

// Program: Step2

glAttachShader(program, v);

glAttachShader(program, f);

glBindAttribLocation(program, ATTRIB_VERTEX, "vertexIn");

glBindAttribLocation(program, ATTRIB_TEXTURE, "textureIn");

// Program: Step3

glLinkProgram(program);

// Debug

glGetProgramiv(program, GL_LINK_STATUS, &linked);

if (!linked) {

std::cerr << "InitShaders: program link failed" << std::endl;

return false;

}

// Program: Step4

glUseProgram(program);

return true;

}

void YUVDisplayer::initYV12Textures()

{

textureY = glGetUniformLocation(program, "tex_y");

textureU = glGetUniformLocation(program, "tex_u");

textureV = glGetUniformLocation(program, "tex_v");

// Set Arrays

glVertexAttribPointer(ATTRIB_VERTEX, 2, GL_FLOAT, 0, 0, vertexVertices);

// Enable it

glEnableVertexAttribArray(ATTRIB_VERTEX);

glVertexAttribPointer(ATTRIB_TEXTURE, 2, GL_FLOAT, 0, 0, textureVertices);

glEnableVertexAttribArray(ATTRIB_TEXTURE);

// Init Texture

glGenTextures(1, &id_y);

glBindTexture(GL_TEXTURE_2D, id_y);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glGenTextures(1, &id_u);

glBindTexture(GL_TEXTURE_2D, id_u);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glGenTextures(1, &id_v);

glBindTexture(GL_TEXTURE_2D, id_v);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

}

void YUVDisplayer::initNV12Textures()

{

textureY = glGetUniformLocation(program, "tex_y");

textureUV = glGetUniformLocation(program, "tex_uv");

// Set Arrays

glVertexAttribPointer(ATTRIB_VERTEX, 2, GL_FLOAT, 0, 0, vertexVertices);

// Enable it

glEnableVertexAttribArray(ATTRIB_VERTEX);

glVertexAttribPointer(ATTRIB_TEXTURE, 2, GL_FLOAT, 0, 0, textureVertices);

glEnableVertexAttribArray(ATTRIB_TEXTURE);

// Init Texture

glGenTextures(1, &id_y);

glBindTexture(GL_TEXTURE_2D, id_y);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glGenTextures(1, &id_uv); // only one texture is needed for the interleaved UV components

glBindTexture(GL_TEXTURE_2D, id_uv);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

}

void YUVDisplayer::initTextures(int32_t format)

{

// Get Uniform Variables Location

if (format == DIS_FMT_YUV420P)

initYV12Textures();

else if (format == DIS_FMT_NV12 || format == DIS_FMT_NV21)

initNV12Textures();

else {

std::cerr << "initTextures error Invalid format [" << format << "]" << std::endl;

return;

}

}

void YUVDisplayer::display(const uint8_t* yuv, int32_t pixel_w, int32_t pixel_h, int32_t format)

{

if (yuv == nullptr) {

std::cerr << "yuv nullptr error!" << std::endl;

return;

}

if (pixel_w <= 0 || pixel_h <= 0) {

std::cerr << "Invalid width or height!" << std::endl;

return;

}

if (format != DIS_FMT_YUV420P && format != DIS_FMT_NV12 && format != DIS_FMT_NV21) {

std::cerr << "displayYUVData Invalid format error " << format << std::endl;

return;

}

if (!bInit) {

// The first frame only creates the window and the GL objects; drawing starts with the next frame

initGL(pixel_w, pixel_h, format);

return;

}

if (gl_format == DIS_FMT_YUV420P)

display_yv12(yuv, pixel_w, pixel_h);

else if (gl_format == DIS_FMT_NV12 || gl_format == DIS_FMT_NV21)

display_nv12(yuv, pixel_w, pixel_h);

else {

std::cerr << "displayYUVData Invalid format error " << gl_format << std::endl;

return;

}

}

void YUVDisplayer::display_yv12(const uint8_t* yuv, int pixel_w, int pixel_h)

{

const unsigned char* y = yuv;

const unsigned char* u = y + pixel_w * pixel_h;

const unsigned char* v = u + pixel_w * pixel_h / 4;

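// The planes are tightly packed (alignment 1), so rows must not be assumed to be 4-byte aligned

glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

//Clear

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);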

//Y

//

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, id_y);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, pixel_w, pixel_h, 0, GL_RED, GL_UNSIGNED_BYTE, y);

glUniform1i(textureY, 0);

//U

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, id_u);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, pixel_w / 2, pixel_h / 2, 0, GL_RED, GL_UNSIGNED_BYTE, u);

glUniform1i(textureU, 1);

//V

glActiveTexture(GL_TEXTURE2);

glBindTexture(GL_TEXTURE_2D, id_v);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, pixel_w / 2, pixel_h / 2, 0, GL_RED, GL_UNSIGNED_BYTE, v);

glUniform1i(textureV, 2);

// Draw

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

// Show

//Double

glutSwapBuffers();

//Single

//glFlush();

}

void YUVDisplayer::display_nv12(const uint8_t* yuv, int pixel_w, int pixel_h)

{

const unsigned char* y = yuv;

const unsigned char* uv = y + pixel_w * pixel_h;

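// The planes are tightly packed (alignment 1), so rows must not be assumed to be 4-byte aligned

glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

//Clear

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);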

//Y

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, id_y);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RED, pixel_w, pixel_h, 0, GL_RED, GL_UNSIGNED_BYTE, y);

glUniform1i(textureY, 0);

//UV

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, id_uv);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RG, pixel_w / 2, pixel_h / 2, 0, GL_RG, GL_UNSIGNED_BYTE, uv);

glUniform1i(textureUV, 1); // a single UV texture and uniform replace the separate U and V ones

// Draw

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

// Show

glutSwapBuffers();

}
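To sanity-check the colours on screen, it helps to have a CPU reference for what the fragment shader computes. The sketch below mirrors the arithmetic of fs_yuv420p only (the full-range matrix with 1.13983 / 0.39465 / 0.58060 / 2.03211); fs_nv12 uses different, limited-range coefficients and is not covered here. The function names are my own and hypothetical. Since GLSL's mat3 is column-major, the matrix in the shader expands to the three lines for r, g and b below.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstdint>

// Clamp a normalised colour component to [0, 1] and convert it to 8 bits.
inline std::uint8_t toByte(float c) {
    const float clamped = std::min(1.0f, std::max(0.0f, c));
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
}

// CPU reference for fs_yuv420p. Inputs are 8-bit samples, normalised to
// [0, 1] the same way a GL_UNSIGNED_BYTE texture is when sampled.
inline std::array<std::uint8_t, 3> yuvToRgbFullRange(std::uint8_t y8, std::uint8_t u8, std::uint8_t v8) {
    const float y = y8 / 255.0f;
    const float u = u8 / 255.0f - 0.5f;
    const float v = v8 / 255.0f - 0.5f;
    const float r = y + 1.13983f * v;
    const float g = y - 0.39465f * u - 0.58060f * v;
    const float b = y + 2.03211f * u;
    return {{toByte(r), toByte(g), toByte(b)}};
}
```

A mid-grey sample (128, 128, 128) should come out close to (128, 128, 128); a large deviation on real frames usually points to swapped U/V planes or a wrong plane offset.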

Frame assembly

Convert the frames delivered by ZLMediaKit into a form that is convenient to decode.

pullFramer.h

#pragma once

#include <cstddef>

#include <cstdint>

#include <iostream>

#include <memory>

#include <functional>

#include "mk_frame.h"

class FrameData {

public:

using Ptr = std::shared_ptr<FrameData>;

static Ptr CreateShared(const mk_frame frame) {

return Ptr(new FrameData(frame));

}

static Ptr CreateShared(uint8_t* data_, size_t size_, const mk_frame frame) {

return Ptr(new FrameData(data_, size_, frame));

}

~FrameData();

uint64_t dts() const { return dts_; }

uint64_t pts() const { return pts_; }

size_t prefixSize() const { return prefixSize_; }

bool keyFrame() const { return keyFrame_; }

bool dropAble() const { return dropAble_; }

bool configFrame() const { return configFrame_; }

bool decodeAble() const { return decodeAble_; }

uint8_t* data() const { return data_; }

size_t size() const { return size_; }

friend std::ostream& operator<<(std::ostream& os, const FrameData::Ptr& Frame);

private:

FrameData(const mk_frame frame);

FrameData(uint8_t *data_, size_t size_, const mk_frame frame);

FrameData(const FrameData& other) = delete;

private:

uint64_t dts_;

uint64_t pts_;

size_t prefixSize_;

bool keyFrame_;

bool dropAble_;

bool configFrame_;

bool decodeAble_;

uint8_t* data_;

size_t size_;

};

class PullFramer {

public:

using Ptr = std::shared_ptr<PullFramer>;

using onGetFrame = std::function<void(const FrameData::Ptr&, void* decoder, void* displayer)>;

// using onConver = std::function<void(const FFmpegFrame::Ptr &)>;

static PullFramer::Ptr CreateShared() {

return std::make_shared<PullFramer>();

}

void setOnGetFrame(const onGetFrame& onGetFrame, void* decoder = nullptr, void* displayer = nullptr);

PullFramer();

~PullFramer();

bool onFrame(const mk_frame frame);

private:

onGetFrame cb_;

void* decoder_;

void* displayer_;

unsigned char* configFrames;

size_t configFramesSize;

void clearConfigFrames();

};
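FrameData stores prefixSize(), which comes from mk_frame_get_data_prefix_size; as far as I understand, for H.264/H.265 this is the length of the Annex-B start code in front of the NAL unit. When debugging the frame dump it is handy to check this by hand, so here is a small sketch. Both function names are hypothetical helpers of mine, not ZLMediaKit APIs, and the NAL type extraction applies to H.264 only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Returns the length of the Annex-B start code at the beginning of `data`
// (4 for 00 00 00 01, 3 for 00 00 01), or 0 when there is none.
inline std::size_t annexBPrefixSize(const std::uint8_t* data, std::size_t size) {
    assert(data != nullptr || size == 0);
    if (size >= 4 && data[0] == 0 && data[1] == 0 && data[2] == 0 && data[3] == 1) return 4;
    if (size >= 3 && data[0] == 0 && data[1] == 0 && data[2] == 1) return 3;
    return 0;
}

// H.264 only: the NAL unit type is the low 5 bits of the byte that follows
// the start code. Returns -1 when there is no start code or no header byte.
inline int h264NalType(const std::uint8_t* data, std::size_t size) {
    const std::size_t prefix = annexBPrefixSize(data, size);
    if (prefix == 0 || size <= prefix) return -1;
    return data[prefix] & 0x1F;
}
```

With this, a dump that starts with 00 00 00 01 67 is an SPS (type 7), 68 is a PPS (type 8), and 65 is an IDR slice (type 5).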

pullFramer.cpp

#include <cstdio>

#include <cstdlib>

#include <cstring>

#include <iomanip>

#include <iostream>

#include <string>

#include "pullFramer.h"

static std::string dump(const void* buf, size_t size)

{

int len = 0;

char* data = (char*)buf;

std::string result = "NULL";

if (data == NULL || size <= 0)return result;

size_t total = size * 3 + 1;

char* buff = new char[size * 3 + 1];

memset(buff, 0, size * 3 + 1);

for (size_t i = 0; i < size; i++) {

len += sprintf_s(buff + len, total-len, "%.2x ", (data[i] & 0xff));

}

result = std::string(buff);

delete[] buff;

buff = NULL;

return result;

}

FrameData::FrameData(const mk_frame frame)

: data_(nullptr), size_(0), dts_(0), pts_(0), prefixSize_(0),

keyFrame_(false), configFrame_(false), dropAble_(true), decodeAble_(false)

{

if (frame == NULL) {

std::cerr << "frame is NULL" << std::endl;

return;

}

auto data = mk_frame_get_data(frame);

auto size = mk_frame_get_data_size(frame);

if (data == NULL || size == 0) {

std::cerr << "data is NULL or size is 0" << std::endl;

return;

}

data_ = new uint8_t[size + 1];

if (data_ == nullptr) {

std::cout << "new failed" << std::endl;

return;

}

size_ = size;

memcpy(data_, data, size_);

data_[size_] = 0;

dts_ = mk_frame_get_dts(frame);

pts_ = mk_frame_get_pts(frame);

prefixSize_ = mk_frame_get_data_prefix_size(frame);

auto flag = mk_frame_get_flags(frame);

keyFrame_ = (flag & MK_FRAME_FLAG_IS_KEY) != 0;

configFrame_ = (flag & MK_FRAME_FLAG_IS_CONFIG) != 0;

dropAble_ = (flag & MK_FRAME_FLAG_DROP_ABLE) != 0;

// The flag means "not decodable", so invert it

decodeAble_ = (flag & MK_FRAME_FLAG_NOT_DECODE_ABLE) == 0;

}

FrameData::FrameData(uint8_t *data, size_t size, const mk_frame frame)

: data_(nullptr), size_(0), dts_(0), pts_(0), prefixSize_(0),

keyFrame_(false), configFrame_(false), dropAble_(true), decodeAble_(false)

{

if (frame == NULL) {

std::cerr << "frame is NULL" << std::endl;

return;

}

if (data == NULL || size == 0) {

std::cerr << "data is NULL or size is 0" << std::endl;

return;

}

data_ = new uint8_t[size + 1];

if (data_ == nullptr) {

std::cout << "new failed" << std::endl;

return;

}

size_ = size;

memcpy(data_, data, size_);

data_[size_] = 0;

dts_ = mk_frame_get_dts(frame);

pts_ = mk_frame_get_pts(frame);

prefixSize_ = mk_frame_get_data_prefix_size(frame);

auto flag = mk_frame_get_flags(frame);

keyFrame_ = (flag & MK_FRAME_FLAG_IS_KEY) != 0;

configFrame_ = (flag & MK_FRAME_FLAG_IS_CONFIG) != 0;

dropAble_ = (flag & MK_FRAME_FLAG_DROP_ABLE) != 0;

// The flag means "not decodable", so invert it

decodeAble_ = (flag & MK_FRAME_FLAG_NOT_DECODE_ABLE) == 0;

}

FrameData::~FrameData()

{

if (data_ != nullptr) {

delete[] data_;

data_ = nullptr;

}

size_ = 0;

}

std::ostream& operator<<(std::ostream& os, const FrameData::Ptr& Frame)

{

size_t len = 10;

if (Frame.get() == nullptr || Frame->data() == nullptr || Frame->size() == 0) {

os << "NULL";

return os;

}

if (Frame->size() < 10) {

len = Frame->size();

}

os << "[" << Frame->pts() << ", drop:" << Frame->dropAble() << ", key:" << Frame->keyFrame() << ", "

<< std::setw(6) << std::right << Frame->size() << "] : "

<< dump(Frame->data(), len);

return os;

}

PullFramer::PullFramer()

{

configFrames = NULL;

configFramesSize = 0;

cb_ = NULL;

decoder_ = NULL;

displayer_ = NULL;

}

PullFramer::~PullFramer()

{

clearConfigFrames();

}

void PullFramer::setOnGetFrame(const onGetFrame& onGetFrame, void* decoder, void* displayer)

{

cb_ = onGetFrame;

decoder_ = decoder;

displayer_ = displayer;

}

void PullFramer::clearConfigFrames()

{

if (configFrames != NULL) {

free(configFrames);

configFrames = nullptr;

}

configFramesSize = 0;

}

bool PullFramer::onFrame(const mk_frame frame_)

{

if (frame_ == NULL) {

return false;

}

auto frame = FrameData::CreateShared(frame_);

if (frame.get() == nullptr || frame->data() == nullptr || frame->size() == 0) {

return false;

}

auto data = frame->data();

auto size = frame->size();

if (frame->configFrame()) {

size_t newSize = configFramesSize + size;

unsigned char* newConfigFrames = (unsigned char*)realloc(configFrames, newSize);

if (newConfigFrames == NULL) {

std::cout << "realloc failed" << std::endl;

clearConfigFrames();

return false;

}

configFrames = newConfigFrames;

memcpy(configFrames + configFramesSize, data, size);

configFramesSize = newSize;

}

else {

if (configFrames != NULL && configFramesSize != 0) {

if (frame->dropAble()) {

size_t newSize = configFramesSize + size;

unsigned char* newConfigFrames = (unsigned char*)realloc(configFrames, newSize);

if (newConfigFrames == NULL) {

std::cout << "realloc failed" << std::endl;

clearConfigFrames();

return false;

}

configFrames = newConfigFrames;

memcpy(configFrames + configFramesSize, data, size);

configFramesSize = newSize;

return true;

}

size_t totalSize = configFramesSize + size;

unsigned char* mergedData = (unsigned char*)malloc(totalSize);

if (mergedData == NULL) {

std::cout << "malloc failed" << std::endl;

clearConfigFrames();

return false;

}

memcpy(mergedData, configFrames, configFramesSize);

memcpy(mergedData + configFramesSize, data, size);

clearConfigFrames();

if (cb_) {

cb_(FrameData::CreateShared(mergedData, totalSize, frame_), decoder_, displayer_);

}

else {

printf("on Frame %zu [%.2x %.2x %.2x %.2x %.2x %.2x]\n", totalSize, mergedData[0], mergedData[1], mergedData[2], mergedData[3], (mergedData[4] & 0xff), (mergedData[5] & 0xff));

}

free(mergedData);

mergedData = NULL;

return true;

}

else {

if (cb_) {

cb_(FrameData::CreateShared(frame_), decoder_, displayer_);

}

else {

printf("on Frame %zu [%.2x %.2x %.2x %.2x %.2x %.2x]\n", size, data[0], data[1], data[2], data[3], (data[4] & 0xff), (data[5] & 0xff));

}

return true;

}

}

return true;

}
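The core of onFrame is a small state machine: config frames (SPS/PPS and the like) are cached, droppable frames that arrive while the cache is non-empty are appended to it, and the first ordinary frame flushes the cache together with itself as one buffer for the decoder. A stripped-down model of that logic, without any ZLMediaKit types, might look like the following. ConfigMerger and its push() signature are hypothetical; the flags are passed in directly instead of being read from mk_frame.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical stand-in for PullFramer::onFrame.
class ConfigMerger {
public:
    using Callback = std::function<void(const Bytes&)>;

    explicit ConfigMerger(Callback cb) : cb_(std::move(cb)) { assert(cb_); }

    void push(const Bytes& frame, bool isConfig, bool droppable) {
        if (frame.empty()) return;
        if (isConfig || (!pending_.empty() && droppable)) {
            // Cache parameter sets, and droppable units that follow them.
            pending_.insert(pending_.end(), frame.begin(), frame.end());
            return;
        }
        if (pending_.empty()) {
            // Nothing cached: hand the frame over unchanged.
            cb_(frame);
            return;
        }
        // Flush the cache plus this frame as a single buffer.
        pending_.insert(pending_.end(), frame.begin(), frame.end());
        Bytes merged;
        merged.swap(pending_); // leaves pending_ empty for the next group
        cb_(merged);
    }

private:
    Callback cb_;
    Bytes pending_;
};
```

Using std::vector here avoids the manual realloc/malloc/free bookkeeping of the original, at the cost of one extra copy per merged frame.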

Main function

Implements the pull, decode, and display pipeline.

// zlmplayer.cpp : contains the "main" function, where program execution begins and ends.

//

#include <iostream>

#include <memory>

#include <string>

#include <string.h>

#include <stdlib.h>

#include <stdio.h>

#include <chrono>

#include "yuvDisplayer.h"

#include "videoDecoder.h"

#include "pullFramer.h"

#include "mk_mediakit.h"

#pragma comment(lib, "mk_api.lib")

typedef struct {

void* puller;

void* decoder;

} Context;

void API_CALL on_track_frame_out(void* user_data, mk_frame frame) {

Context* ctx = (Context*)user_data;

auto pullerPtr = static_cast<std::shared_ptr<PullFramer>*>(ctx->puller);

std::shared_ptr<PullFramer> puller = *pullerPtr;

if (puller) {

puller->onFrame(frame);

}

}

void API_CALL on_mk_play_event_func(void* user_data, int err_code, const char* err_msg, mk_track tracks[],

int track_count) {

// Context* ctx = (Context*)user_data;

if (err_code == 0) {

//success

log_debug("play success!");

int i;

for (i = 0; i < track_count; ++i) {

if (mk_track_is_video(tracks[i])) {

Context* ctx = (Context*)user_data;

auto decoderPtr = static_cast<std::shared_ptr<VideoDecoder>*>(ctx->decoder);

std::shared_ptr<VideoDecoder> decoder = *decoderPtr;

if (decoder == nullptr) {

decoder = std::make_shared<VideoDecoder>(mk_track_codec_id(tracks[i]));

*decoderPtr = decoder;

}

log_info("got video track: %s", mk_track_codec_name(tracks[i]));

//ctx->video_decoder = mk_decoder_create(tracks[i], 0);

//mk_decoder_set_cb(ctx->video_decoder, on_frame_decode, user_data);

// Listen for frame callbacks on this track

mk_track_add_delegate(tracks[i], on_track_frame_out, user_data);

}

}

}

else {

log_warn("play failed: %d %s", err_code, err_msg);

}

}

void API_CALL on_mk_shutdown_func(void* user_data, int err_code, const char* err_msg, mk_track tracks[], int track_count) {

log_warn("play interrupted: %d %s", err_code, err_msg);

}

void onGetFrame(const FrameData::Ptr& frame, void* userData1, void* userData2)

{

// std::cout <<frame<<std::endl;

auto decoderPtr = static_cast<std::shared_ptr<VideoDecoder>*>(userData1);

std::shared_ptr<VideoDecoder> decoder = *decoderPtr;

auto displayerPtr = static_cast<std::shared_ptr<YUVDisplayer>*>(userData2);

std::shared_ptr<YUVDisplayer> displayer = *displayerPtr;

if (decoder) {

int32_t pixel_width = 0;

int32_t pixel_height = 0;

int32_t pixel_format = 0;

auto start = std::chrono::steady_clock::now();

auto dstYUV = decoder->decode(frame->data(), frame->size(), pixel_width, pixel_height, pixel_format);

if (dstYUV == nullptr) {

std::cerr << "no frame decoded (error, or the decoder needs more data)" << std::endl;

return;

}

auto end = std::chrono::steady_clock::now();

auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();

// std::cout<<"width:"<<pixel_width<<" height:"<<pixel_height<<" format:"<<pixel_format<<std::endl;

std::cout << "decode cost " << duration << " ms" << std::endl;

#if 1

if (displayer) {

start = end;

displayer->display(dstYUV, pixel_width, pixel_height, pixel_format);

end = std::chrono::steady_clock::now();

duration = std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();

std::cout << "display cost " << duration << " ms" << std::endl;

}

#endif

free(dstYUV);

dstYUV = nullptr;

}

}

int main(int argc, char *argv[])

{

mk_config config = {}; // zero-initialize so that any field not set below is not left indeterminate

config.ini = NULL;

config.ini_is_path = 0;

config.log_level = 0;

config.log_mask = LOG_CONSOLE;

config.ssl = NULL;

config.ssl_is_path = 1;

config.ssl_pwd = NULL;

config.thread_num = 0;

std::string url = "";

printf("%d %s\n", argc, argv[0]);

if (argc == 1) {

url = "rtsp://admin:zmj123456@192.168.119.39";

}

else {

url = argv[1];

}

mk_env_init(&config);

auto player = mk_player_create();

auto puller = PullFramer::CreateShared();

auto displayer = std::make_shared<YUVDisplayer>(url);

std::shared_ptr<VideoDecoder> decoder = nullptr;

puller->setOnGetFrame(onGetFrame, static_cast<void*>(&decoder), static_cast<void*>(&displayer));

Context ctx;

memset(&ctx, 0, sizeof(Context));

ctx.puller = static_cast<void*>(&puller);

ctx.decoder = static_cast<void*>(&decoder);

mk_player_set_on_result(player, on_mk_play_event_func, &ctx);

mk_player_set_on_shutdown(player, on_mk_shutdown_func, NULL);

mk_player_set_option(player, "rtp_type", "0");

mk_player_set_option(player, "protocol_timeout_ms", "5000");

mk_player_play(player, url.c_str());

std::cout << "Hello World!\n";

log_info("enter any key to exit");

getchar();

if (player) {

mk_player_release(player);

}

return 0;

}
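One pattern in the code above is worth spelling out: the C callbacks only carry a void* user_data, so main passes the address of a std::shared_ptr and the callback casts it back and copies it. This is also why the decoder can be created later, inside the play-result callback, and still be seen by onGetFrame. Below is a minimal sketch of the same idea with a made-up callback type; Sink, frame_cb, onValue and fireCallback are all hypothetical names, not ZLMediaKit APIs.

```cpp
#include <cassert>
#include <memory>

// Hypothetical C-style callback type, standing in for a ZLMediaKit delegate.
typedef void (*frame_cb)(void* user_data, int value);

struct Sink {
    int total = 0;
};

// user_data points at a std::shared_ptr<Sink> owned by the caller. Copying it
// keeps the object alive for the duration of the call, and a still-empty
// pointer is simply skipped.
void onValue(void* user_data, int value) {
    auto* holder = static_cast<std::shared_ptr<Sink>*>(user_data);
    assert(holder != nullptr);
    std::shared_ptr<Sink> sink = *holder;
    if (sink) {
        sink->total += value;
    }
}

// Stand-in for the library invoking the registered callback.
void fireCallback(frame_cb cb, void* user_data, int value) {
    cb(user_data, value);
}
```

The caveat is that the shared_ptr variable itself must outlive every callback, and in the real program the callbacks run on ZLMediaKit's own threads, so reads and writes of that variable are not synchronized; for a test tool like this one I accepted that.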

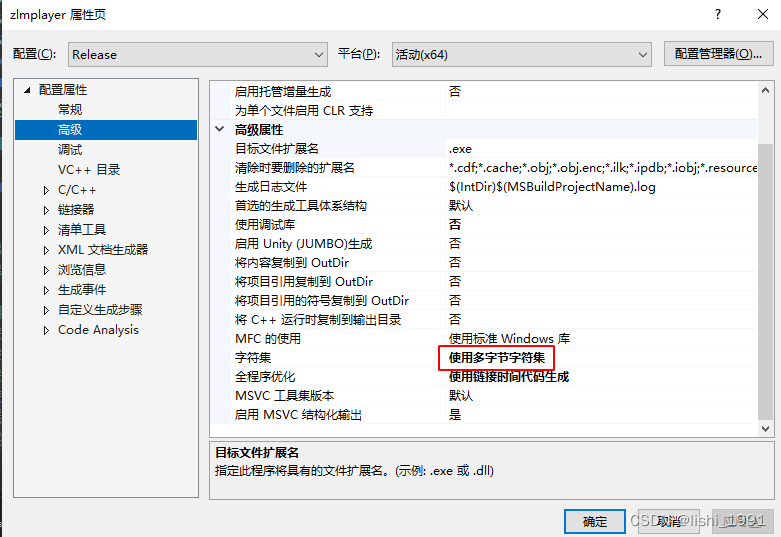

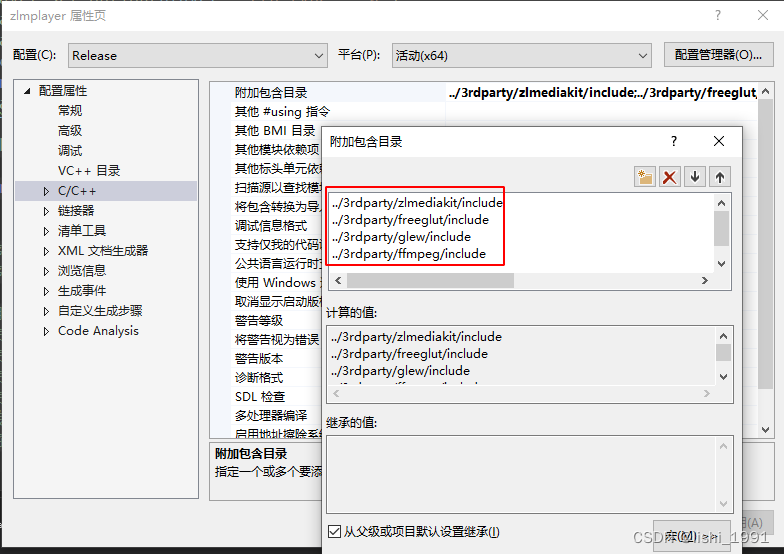

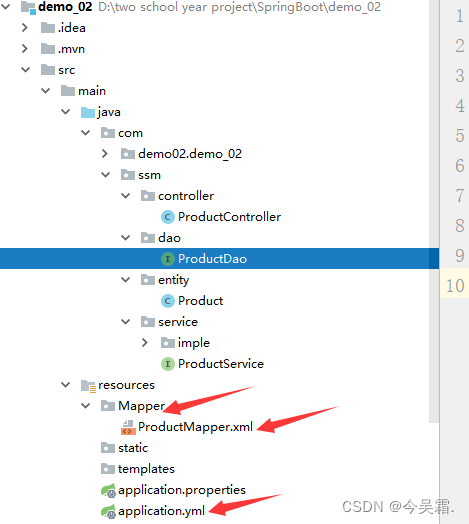

Other Visual Studio project settings

Use the multi-byte character set to avoid the tedium of handling wide characters.

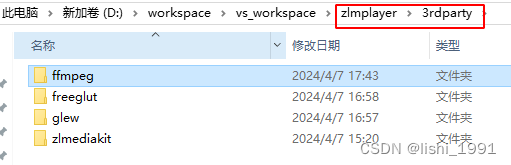

The header and lib directories of the third-party libraries need to be configured, as shown below:

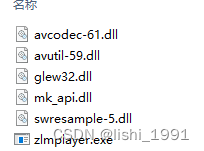

The run directory needs the FFmpeg, GLEW, and mk_api third-party libraries (I built freeglut as a static library).

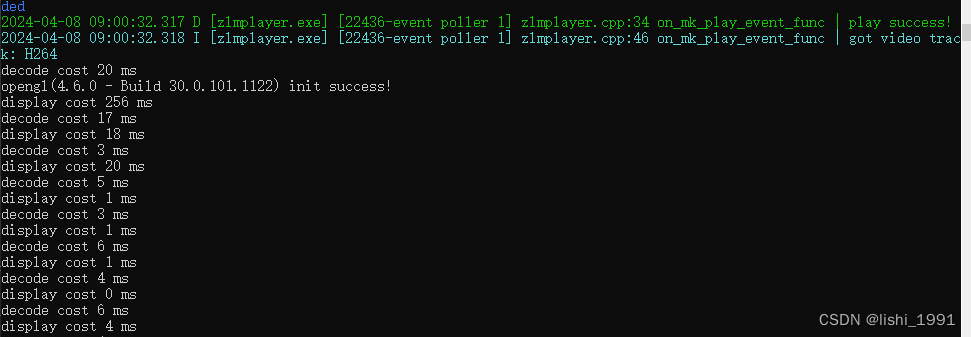

Result
