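Contents

I. Apache HBase

1. HBase Shell Operations

1.1 DDL: Creating and Modifying Tables

1. Creating a Namespace and Tables

2. Viewing a Table

3. Modifying a Table

4. Dropping a Table

1.2 DML: Writing and Reading Data

1. Writing Data

2. Reading Data

3. Deleting Data

2. Starting the Big Data Software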

I. Apache HBase

1. HBase Shell Operations

Start HBase first, then run the shell commands below.

1. Enter the HBase client shell

[root@node1 hbase-3.0.0]# bin/hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hbase-3.0.0/lib/client-facing-thirdparty/log4j-slf4j-impl-2.17.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/book.html#shell

Version 3.0.0-beta-1, r119d11c808aefa82d22fef6cd265981506b9dc09, Tue Dec 26 07:40:00 UTC 2023

Took 0.0014 seconds

hbase:001:0>

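2. View the help command

The help command lists every command available in the HBase shell. The groups used most often are namespace (namespace management), ddl (creating and modifying tables), and dml (writing and reading data).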

hbase:001:0> help

HBase Shell, version 3.0.0-beta-1, r119d11c808aefa82d22fef6cd265981506b9dc09, Tue Dec 26 07:40:00 UTC 2023

Type 'help "COMMAND"', (e.g. 'help "get"' -- the quotes are necessary) for help on a specific command.

Commands are grouped. Type 'help "COMMAND_GROUP"', (e.g. 'help "general"') for help on a command group.

COMMAND GROUPS:

Group name: general

Commands: processlist, status, table_help, version, whoami

Group name: ddl

Commands: alter, alter_async, alter_status, clone_table_schema, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, list_disabled_tables, list_enabled_tables, list_regions, locate_region, show_filters

Group name: namespace

Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

Group name: dml

Commands: append, count, delete, deleteall, get, get_counter, get_splits, incr, put, scan, truncate, truncate_preserve

Group name: tools

Commands: assign, balance_switch, balancer, balancer_enabled, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, cleaner_chore_enabled, cleaner_chore_run, cleaner_chore_switch, clear_block_cache, clear_compaction_queues, clear_deadservers, clear_slowlog_responses, close_region, compact, compact_rs, compaction_state, compaction_switch, decommission_regionservers, flush, flush_master_store, get_balancer_decisions, get_balancer_rejections, get_largelog_responses, get_slowlog_responses, hbck_chore_run, is_in_maintenance_mode, list_deadservers, list_decommissioned_regionservers, list_liveservers, list_unknownservers, major_compact, merge_region, move, normalize, normalizer_enabled, normalizer_switch, recommission_regionserver, regioninfo, rit, snapshot_cleanup_enabled, snapshot_cleanup_switch, split, splitormerge_enabled, splitormerge_switch, stop_master, stop_regionserver, trace, truncate_region, unassign, wal_roll, zk_dump

Group name: replication

Commands: add_peer, append_peer_exclude_namespaces, append_peer_exclude_tableCFs, append_peer_namespaces, append_peer_tableCFs, disable_peer, disable_table_replication, enable_peer, enable_table_replication, get_peer_config, list_peer_configs, list_peers, list_replicated_tables, peer_modification_enabled, peer_modification_switch, remove_peer, remove_peer_exclude_namespaces, remove_peer_exclude_tableCFs, remove_peer_namespaces, remove_peer_tableCFs, set_peer_bandwidth, set_peer_exclude_namespaces, set_peer_exclude_tableCFs, set_peer_namespaces, set_peer_replicate_all, set_peer_serial, set_peer_tableCFs, show_peer_tableCFs, transit_peer_sync_replication_state, update_peer_config

Group name: snapshots

Commands: clone_snapshot, delete_all_snapshot, delete_snapshot, delete_table_snapshots, list_snapshots, list_table_snapshots, restore_snapshot, snapshot

Group name: configuration

Commands: update_all_config, update_config, update_rsgroup_config

Group name: quotas

Commands: disable_exceed_throttle_quota, disable_rpc_throttle, enable_exceed_throttle_quota, enable_rpc_throttle, list_quota_snapshots, list_quota_table_sizes, list_quotas, list_snapshot_sizes, set_quota

Group name: security

Commands: grant, list_security_capabilities, revoke, user_permission

Group name: procedures

Commands: list_locks, list_procedures

Group name: visibility labels

Commands: add_labels, clear_auths, get_auths, list_labels, set_auths, set_visibility

Group name: rsgroup

Commands: add_rsgroup, alter_rsgroup_config, balance_rsgroup, get_namespace_rsgroup, get_rsgroup, get_server_rsgroup, get_table_rsgroup, list_rsgroups, move_namespaces_rsgroup, move_servers_namespaces_rsgroup, move_servers_rsgroup, move_servers_tables_rsgroup, move_tables_rsgroup, remove_rsgroup, remove_servers_rsgroup, rename_rsgroup, show_rsgroup_config

Group name: storefiletracker

Commands: change_sft, change_sft_all

SHELL USAGE:

Quote all names in HBase Shell such as table and column names. Commas delimit

command parameters. Type <RETURN> after entering a command to run it.

Dictionaries of configuration used in the creation and alteration of tables are

Ruby Hashes. They look like this:

{'key1' => 'value1', 'key2' => 'value2', ...}

and are opened and closed with curley-braces. Key/values are delimited by the

'=>' character combination. Usually keys are predefined constants such as

NAME, VERSIONS, COMPRESSION, etc. Constants do not need to be quoted. Type

'Object.constants' to see a (messy) list of all constants in the environment.

If you are using binary keys or values and need to enter them in the shell, use

double-quote'd hexadecimal representation. For example:

hbase> get 't1', "key\x03\x3f\xcd"

hbase> get 't1', "key\003\023\011"

hbase> put 't1', "test\xef\xff", 'f1:', "\x01\x33\x40"

The HBase shell is the (J)Ruby IRB with the above HBase-specific commands added.

For more on the HBase Shell, see http://hbase.apache.org/book.html

hbase:002:0>
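The quoting rule for binary keys in the help text above comes from ordinary Ruby string semantics, since the shell is JRuby IRB. The following is a minimal sketch in plain Ruby (it does not need HBase; the variable names are only illustrative) showing that escapes such as \x03 turn into raw bytes only inside double quotes:

```ruby
# Plain Ruby; runs without HBase. Shows why binary row keys must be
# double-quoted when typed into the HBase shell.
double_quoted = "key\x03\x3f\xcd"   # each \xNN escape becomes one raw byte
single_quoted = 'key\x03\x3f\xcd'   # backslashes stay as literal characters

puts double_quoted.bytes.inspect    # byte values of the actual binary key
puts double_quoted.bytesize         # 3 letters + 3 raw bytes
puts single_quoted.bytesize         # 3 letters + 12 literal characters

# Octal escapes are expanded the same way inside double quotes.
octal_key = "key\003\023\011"
puts octal_key.bytes.inspect
```

With single quotes the shell would look up a 15-byte key made of printable characters, which is almost certainly not the row that was stored.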

To see how a specific command is used, pass its name to help. For example, for create_namespace:

hbase:002:0> help 'create_namespace'

Create namespace; pass namespace name,

and optionally a dictionary of namespace configuration.

Examples:

hbase> create_namespace 'ns1'

hbase> create_namespace 'ns1', {'PROPERTY_NAME'=>'PROPERTY_VALUE'}

hbase:003:0>

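Among the commands listed above, list plays the role of the show command in a relational database: it lists the existing tables.

Problem: creating a namespace fails with an error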

hbase:002:0> create_namespace 'lwztest'

2024-03-24 23:23:21,813 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

2024-03-24 23:23:24,628 WARN [RPCClient-NioEventLoopGroup-1-2] client.AsyncRpcRetryingCaller (AsyncRpcRetryingCaller.java:onError(177)) - Call to master failed, tries = 6, maxAttempts = 7, timeout = 1200000 ms, time elapsed = 2692 ms

org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)

at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)

at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)

at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)

at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

(client-side frames omitted; each retry below printed the same nine server-side frames)

2024-03-24 23:23:26,673 WARN [RPCClient-NioEventLoopGroup-1-2] client.AsyncRpcRetryingCaller (AsyncRpcRetryingCaller.java:onError(177)) - Call to master failed, tries = 7, maxAttempts = 7, timeout = 1200000 ms, time elapsed = 4739 ms

org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24 23:23:26,693 INFO [RPCClient-NioEventLoopGroup-1-2] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onError(2730)) - Operation: CREATE_NAMESPACE, Namespace: lwztest failed with Failed after attempts=7, exceptions:

2024-03-24T15:23:22.437Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:22.561Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:22.777Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:23.084Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:23.599Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:24.637Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

2024-03-24T15:23:26.676Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

ERROR: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

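For usage try 'help "create_namespace"'

Solution:

1. Stop the HBase cluster.

2. Add the following property to hbase-site.xml, then distribute the file to the other machines in the cluster.

<property>

<name>hbase.wal.provider</name>

<value>filesystem</value>

</property>

3. Restart the HBase cluster.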

1.1 DDL: Creating and Modifying Tables

1. Creating a Namespace and Tables

With the fix applied, the same command now succeeds:

hbase:001:0> create_namespace 'lwztest'

2024-03-24 23:33:51,354 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

2024-03-24 23:33:51,653 INFO [RPCClient-NioEventLoopGroup-1-2] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2725)) - Operation: CREATE_NAMESPACE, Namespace: lwztest completed

Took 1.3931 seconds

hbase:002:0> list

TABLE

0 row(s)

Took 0.0251 seconds

=> []

hbase:003:0> list_namespace

NAMESPACE

default

hbase

lwztest

3 row(s)

Took 0.0534 seconds

hbase:004:0> help 'create'

Creates a table. Pass a table name, and a set of column family

specifications (at least one), and, optionally, table configuration.

Column specification can be a simple string (name), or a dictionary

(dictionaries are described below in main help output), necessarily

including NAME attribute.

Examples:

Create a table with namespace=ns1 and table qualifier=t1

hbase> create 'ns1:t1', {NAME => 'f1', VERSIONS => 5}

Create a table with namespace=default and table qualifier=t1

hbase> create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}

hbase> # The above in shorthand would be the following:

hbase> create 't1', 'f1', 'f2', 'f3'

hbase> create 't1', {NAME => 'f1', VERSIONS => 1, TTL => 2592000, BLOCKCACHE => true}

hbase> create 't1', {NAME => 'f1', CONFIGURATION => {'hbase.hstore.blockingStoreFiles' => '10'}}

hbase> create 't1', {NAME => 'f1', IS_MOB => true, MOB_THRESHOLD => 1000000, MOB_COMPACT_PARTITION_POLICY => 'weekly'}

Table configuration options can be put at the end.

Examples:

hbase> create 'ns1:t1', 'f1', SPLITS => ['10', '20', '30', '40']

hbase> create 't1', 'f1', SPLITS => ['10', '20', '30', '40']

hbase> create 't1', 'f1', SPLITS_FILE => 'splits.txt'

hbase> create 't1', {NAME => 'f1', VERSIONS => 5}, METADATA => { 'mykey' => 'myvalue' }

hbase> # Optionally pre-split the table into NUMREGIONS, using

hbase> # SPLITALGO ("HexStringSplit", "UniformSplit" or classname)

hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit'}

hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit', REGION_REPLICATION => 2, CONFIGURATION => {'hbase.hregion.scan.loadColumnFamiliesOnDemand' => 'true'}}

hbase> create 't1', 'f1', {SPLIT_ENABLED => false, MERGE_ENABLED => false}

hbase> create 't1', {NAME => 'f1', DFS_REPLICATION => 1}

You can also keep around a reference to the created table:

hbase> t1 = create 't1', 'f1'

Which gives you a reference to the table named 't1', on which you can then

call methods.
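The help text above says a column family may be given either as a plain string or as a dictionary that includes NAME, and that create 't1', 'f1', 'f2', 'f3' is shorthand for the dictionary form. The sketch below models that equivalence in plain Ruby. It is an illustration only, not the shell's actual implementation. The normalize lambda and the name_key variable are hypothetical, and the string 'NAME' stands in for the shell's predefined NAME constant, which is assumed here to evaluate to that string.

```ruby
# Plain Ruby illustration; NOT the HBase shell's real code.
# 'NAME' stands in for the shell's predefined NAME constant (assumption).
name_key = 'NAME'

# Hypothetical helper: accept either form and return the dictionary form.
normalize = lambda do |spec|
  case spec
  when String
    { name_key => spec }
  when Hash
    raise ArgumentError, 'dictionary form must include NAME' unless spec.key?(name_key)
    spec
  else
    raise ArgumentError, "unsupported column family spec: #{spec.inspect}"
  end
end

# create 't1', 'f1', 'f2', 'f3'
shorthand = %w[f1 f2 f3].map { |spec| normalize.call(spec) }

# create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}
explicit = [{ name_key => 'f1' }, { name_key => 'f2' }, { name_key => 'f3' }]

puts shorthand == explicit

# The dictionary form is what lets you attach options such as VERSIONS.
with_options = normalize.call({ name_key => 'f1', 'VERSIONS' => 5 })
puts with_options.inspect
```

The practical point is that the shorthand is enough when the defaults are acceptable, and the dictionary form is needed as soon as a column family carries its own settings.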

# Create a table in the default namespace with two column families, info and msg

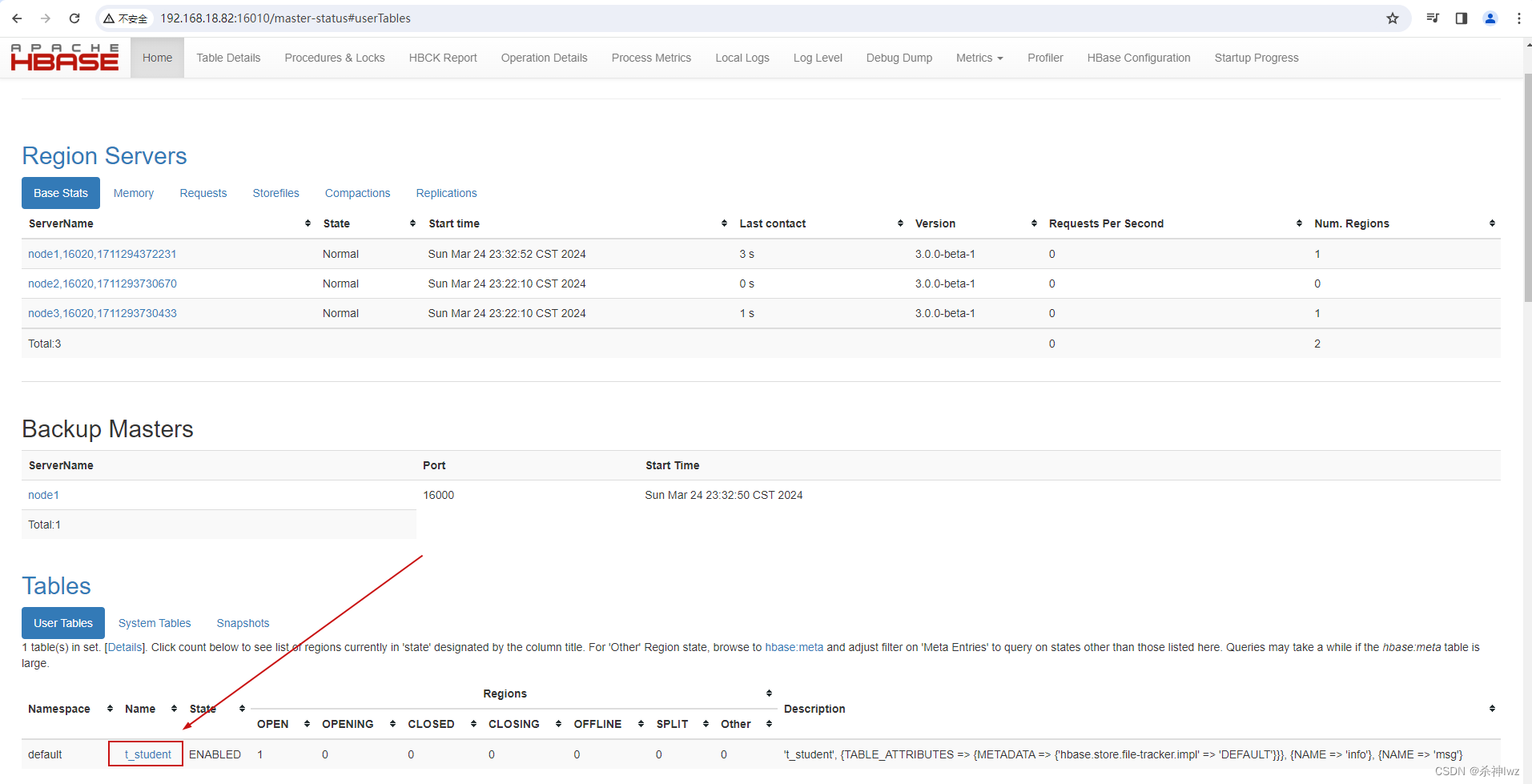

hbase:005:0> create 't_student','info','msg'

Created table t_student

2024-03-25 00:01:27,754 INFO [RPCClient-NioEventLoopGroup-1-3] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: CREATE, Table Name: default:t_student completed

Took 1.2346 seconds

=> Hbase::Table - t_student

hbase:006:0> list

TABLE

t_student

1 row(s)

Took 0.0084 seconds

=> ["t_student"]
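The log line above reports the table as `default:t_student` even though it was created as `t_student`. A minimal sketch of that naming rule, assuming a table name without a `namespace:` prefix belongs to the `default` namespace (the helper `split_table_name` is hypothetical, for illustration only):

```python
def split_table_name(name: str) -> tuple:
    """Split 'namespace:qualifier' into its two parts.

    A name without a colon is treated as living in the 'default' namespace.
    """
    namespace, sep, qualifier = name.partition(":")
    if not sep:
        # No colon found: the whole string is the table qualifier.
        return ("default", name)
    return (namespace, qualifier)


print(split_table_name("t_student"))
print(split_table_name("lwztest:t_person"))
```

The first call yields `('default', 't_student')` and the second `('lwztest', 't_person')`.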

2. View a table

# Create the table

hbase:003:0> create 'lwztest:t_person', {NAME => 'info', VERSIONS => 5}, {NAME => 'msg', VERSIONS => 5}

Created table lwztest:t_person

2024-03-27 23:38:15,261 INFO [RPCClient-NioEventLoopGroup-1-3] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: CREATE, Table Name: lwztest:t_person completed

Took 1.2760 seconds

=> Hbase::Table - lwztest:t_person

# View the table description

hbase:005:0> describe 'lwztest:t_person'

Table lwztest:t_person is ENABLED

lwztest:t_person, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '5', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

{NAME => 'msg', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '5', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

2 row(s)

Quota is disabled

Took 0.1988 seconds

hbase:006:0>

3. Alter a table

Use the alter command to modify an existing table.

# Alter the table

hbase:006:0> alter 't_student',{NAME =>'info',VERSIONS => 3}

Updating all regions with the new schema...

2024-03-27 23:45:24,377 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: MODIFY, Table Name: default:t_student completed

Took 0.9728 seconds

hbase:008:0> describe 't_student'

Table t_student is ENABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

{NAME => 'msg', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

2 row(s)

Quota is disabled

Took 0.0436 seconds

# Deleting a column family uses a special syntax

hbase:009:0> alter 't_student',NAME => 'msg',METHOD => 'delete'

Updating all regions with the new schema...

2024-03-27 23:47:58,313 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: MODIFY, Table Name: default:t_student completed

Took 0.8596 seconds

hbase:010:0> describe 't_student'

Table t_student is ENABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

1 row(s)

Quota is disabled

Took 0.0453 seconds

# Deletion, method 2

hbase:011:0> alter 't_student', 'delete' => 'msg'

4. Drop a table

To drop a table from the shell, you must first disable it.

# Disable the table

hbase:011:0> disable 't_student'

2024-03-27 23:51:11,305 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: DISABLE, Table Name: default:t_student completed

Took 0.6848 seconds

hbase:012:0> describe 't_student'

Table t_student is DISABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

1 row(s)

Quota is disabled

Took 0.0353 seconds

# Drop the table

hbase:013:0> drop 't_student'

2024-03-27 23:51:58,684 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: DELETE, Table Name: default:t_student completed

Took 0.3649 seconds

hbase:014:0>

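1.2. DML: writing and reading data

1. Write data

In HBase, data can only be written at the lowest level of the structure, the cell. You can supply a timestamp manually to set the cell's version, but it is recommended to omit it so that the current system time is used by default.

# Write data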

hbase:001:0> put 'lwztest:t_person','1001','info:name','zhangsan'

2024-03-28 23:11:11,460 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

Took 0.2648 seconds

hbase:002:0> put 'lwztest:t_person','1001','info:name','zhangsan'

Took 0.0190 seconds

hbase:003:0> put 'lwztest:t_person','1001','info:name','lisi'

Took 0.0212 seconds

hbase:004:0> put 'lwztest:t_person','1001','info:age','20'

Took 0.0139 seconds
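The puts above write `info:name` three times, and each write becomes a new version of the same cell rather than an overwrite. The toy model below sketches that behaviour. It is not HBase's implementation: the class `VersionedTable` is hypothetical, a counter stands in for the system clock, and excess versions are pruned eagerly at write time for simplicity.

```python
import itertools


class VersionedTable:
    """Toy in-memory model: (row, column) -> {timestamp: value}."""

    def __init__(self, max_versions=1):
        self.max_versions = max_versions      # plays the role of VERSIONS => N
        self.cells = {}
        self._clock = itertools.count(1)      # stand-in for the system time

    def put(self, row, column, value, ts=None):
        """Store a value; without ts, the next clock tick is the version."""
        ts = next(self._clock) if ts is None else ts
        versions = self.cells.setdefault((row, column), {})
        versions[ts] = value                  # same timestamp replaces that version
        # Keep only the newest max_versions timestamps.
        for old in sorted(versions, reverse=True)[self.max_versions:]:
            del versions[old]

    def get(self, row, column, versions=1):
        """Return up to `versions` (timestamp, value) pairs, newest first."""
        stored = self.cells.get((row, column), {})
        return sorted(stored.items(), reverse=True)[:versions]


t = VersionedTable(max_versions=5)            # like {NAME => 'info', VERSIONS => 5}
t.put("1001", "info:name", "zhangsan")
t.put("1001", "info:name", "zhangsan")
t.put("1001", "info:name", "lisi")
t.put("1001", "info:age", "20")

print(t.get("1001", "info:name"))              # [(3, 'lisi')]
print(t.get("1001", "info:name", versions=5))  # [(3, 'lisi'), (2, 'zhangsan'), (1, 'zhangsan')]
```

In the real shell, older versions can be requested in a similar spirit with something like `get 'lwztest:t_person','1001',{COLUMN => 'info:name', VERSIONS => 5}`.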

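2. Read data

There are two ways to read data: get and scan.

get reads at most one row and can also filter by column; the result is printed as one line per cell.

# Read data with get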

hbase:006:0> get 'lwztest:t_person','1001'

COLUMN CELL

info:age timestamp=2024-03-28T23:13:30.749, value=20

info:name timestamp=2024-03-28T23:13:11.586, value=lisi

1 row(s)

Took 0.1166 seconds

# Read data with scan

hbase:007:0> scan 'lwztest:t_person'

ROW COLUMN+CELL

1001 column=info:age, timestamp=2024-03-28T23:13:30.749, value=20

1001 column=info:name, timestamp=2024-03-28T23:13:11.586, value=lisi

1 row(s)

Took 0.0394 seconds

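3. Delete data

There are two ways to delete data: delete and deleteall.

delete removes one version of the data, that is, a single cell; if no version is given, the newest version is deleted.

deleteall removes all versions of the data, that is, every cell in the given row and column. (The command only marks the data for deletion; it is not erased immediately. The data is physically removed later, when the store files are cleaned up during a major compaction.)

# Delete data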

hbase:008:0> delete 'lwztest:t_person','1001','info:name'

Took 0.0254 seconds

hbase:009:0> scan 'lwztest:t_person'

ROW COLUMN+CELL

1001 column=info:age, timestamp=2024-03-28T23:13:30.749, value=20

1001 column=info:name, timestamp=2024-03-28T23:12:52.937, value=zhangsan

1 row(s)

Took 0.0130 seconds

# Delete all versions

hbase:010:0> deleteall 'lwztest:t_person','1001','info:name'

Took 0.0107 seconds

hbase:011:0> scan 'lwztest:t_person'

ROW COLUMN+CELL

1001 column=info:age, timestamp=2024-03-28T23:13:30.749, value=20

1 row(s)

Took 0.0083 seconds

hbase:012:0>
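The session above shows `delete` exposing the previous version (`zhangsan`) and `deleteall` hiding the column entirely. The sketch below is a simplified model of that marker-based behaviour for a single row and column. The class `Cell` and its methods are hypothetical, and real HBase delete markers are more varied than the per-timestamp set used here.

```python
class Cell:
    """Toy model of one (row, column): stored versions plus delete markers."""

    def __init__(self):
        self.versions = {}     # timestamp -> value
        self.deleted = set()   # timestamps hidden by delete markers

    def put(self, ts, value):
        self.versions[ts] = value

    def visible(self):
        """Versions a reader would see, newest first."""
        live = [(ts, v) for ts, v in self.versions.items() if ts not in self.deleted]
        return sorted(live, reverse=True)

    def delete(self, ts=None):
        """Mark one version as deleted; defaults to the newest visible one."""
        if ts is None:
            live = self.visible()
            if not live:
                return
            ts = live[0][0]
        self.deleted.add(ts)

    def deleteall(self):
        """Mark every stored version as deleted."""
        self.deleted.update(self.versions)

    def compact(self):
        """Physically drop marked versions, as a later cleanup pass would."""
        for ts in self.deleted:
            self.versions.pop(ts, None)
        self.deleted.clear()


name = Cell()
name.put(1, "zhangsan")
name.put(2, "zhangsan")
name.put(3, "lisi")

name.delete()
print(name.visible()[0])    # (2, 'zhangsan')

name.deleteall()
print(name.visible())       # []
print(len(name.versions))   # 3, still stored until cleanup

name.compact()
print(len(name.versions))   # 0
```

Keeping markers separate from the stored versions is what lets the write path stay append-only: a delete is just another small write, and the expensive removal is deferred.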

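2. Starting the big data software

A big data stack involves many pieces of software, and starting and stopping them one by one is tedious. A simple remedy is to write a script that brings everything up in one go. Adapt it to your own environment.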
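When writing such scripts, the order matters: a service should start after the services it relies on and stop before them. The sketch below derives both orders from a dependency table. The table itself is an assumption about a typical setup (HBase relying on HDFS and ZooKeeper, Hive relying on HDFS); the function names are hypothetical, and cycles are not detected.

```python
# Assumed dependencies for illustration; adjust to the actual cluster.
DEPENDS_ON = {
    "hadoop": [],
    "zookeeper": [],
    "hive": ["hadoop"],
    "hbase": ["hadoop", "zookeeper"],
}


def start_order(depends_on):
    """Return services ordered so that dependencies come first."""
    order, seen = [], set()

    def visit(name):
        if name in seen:
            return
        seen.add(name)
        for dep in depends_on[name]:
            visit(dep)          # start what this service needs first
        order.append(name)

    for name in depends_on:
        visit(name)
    return order


def stop_order(depends_on):
    """Stop in the reverse of the start order."""
    return list(reversed(start_order(depends_on)))


print(start_order(DEPENDS_ON))  # ['hadoop', 'zookeeper', 'hive', 'hbase']
print(stop_order(DEPENDS_ON))   # ['hbase', 'hive', 'zookeeper', 'hadoop']
```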

lwz-start.sh

#!/bin/bash

echo "hadoop startup..."

start-all.sh

echo "zookeeper startup..."

zkServer.sh start

ssh root@node2 << eeooff

zkServer.sh start

exit

eeooff

ssh root@node3 << eeooff

zkServer.sh start

exit

eeooff

echo "hive startup..."

nohup /export/server/hive-3.1.3/bin/hive --service metastore &

nohup /export/server/hive-3.1.3/bin/hive --service hiveserver2 &

echo "HBase startup..."

start-hbase.sh

echo done!

lwz-stop.sh

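#!/bin/bash

# Stop HBase first: it needs ZooKeeper and HDFS to shut down cleanly.

echo "HBase stop..."

hbase-daemon.sh stop master

hbase-daemon.sh stop regionserver

echo "hive stop..."

# No stop command is given here in the original script; the metastore and hiveserver2 processes started with nohup have to be stopped separately.

echo "zookeeper stop..."

zkServer.sh stop

ssh root@node2 << eeooff

zkServer.sh stop

exit

eeooff

ssh root@node3 << eeooff

zkServer.sh stop

exit

eeooff

echo "hadoop stop..."

stop-all.sh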

echo done!

3. HBase API

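Create a Maven project and add the following dependencies to pom.xml.

Note: an error is reported saying that the javax.el package does not exist. It is a test-only dependency and does not affect normal use.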

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>3.0.0-beta-1</version>

<exclusions>

<exclusion>

<groupId>org.glassfish</groupId>

<artifactId>javax.el</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>3.0.0-beta-1</version>

</dependency>

<dependency>

<groupId>org.glassfish</groupId>

<artifactId>javax.el</artifactId>

<version>3.0.1-b12</version>

</dependency>

</dependencies>
