1. The dataset-splitting function train_test_split and data loading:

Python machine learning: train_test_split() usage explained with examples, splitting training and test sets, using the iris data, beginner-level walkthrough (侯小啾's blog, CSDN)

This article also explains it clearly:

https://community.modelscope.cn/635e56aed3efff3090b5f62c.html?spm=1001.2101.3001.6650.7&utm_medium=distribute.pc_relevant.none-task-blog-2%7Edefault%7EESLANDING%7Eactivity-7-117196625-blog-120677767.pc_relevant_landingrelevant&depth_1-utm_source=distribute.pc_relevant.none-task-blog-2%7Edefault%7EESLANDING%7Eactivity-7-117196625-blog-120677767.pc_relevant_landingrelevant&utm_relevant_index=14
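As a minimal sketch of what the linked articles walk through, the following splits the iris data bundled with scikit-learn into training and test sets. The stratify argument is an addition made here for illustration and is not taken from the linked posts.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Iris ships with scikit-learn: 150 samples, 4 features, 3 classes
X, y = load_iris(return_X_y=True)

# Hold out 20% of the samples for testing; random_state makes the split repeatable,
# stratify=y keeps the class proportions the same in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=22, stratify=y
)

print(X_train.shape, X_test.shape)  # (120, 4) (30, 4)
```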

总之,就是

dtrain = xgb.DMatrix(data,label)

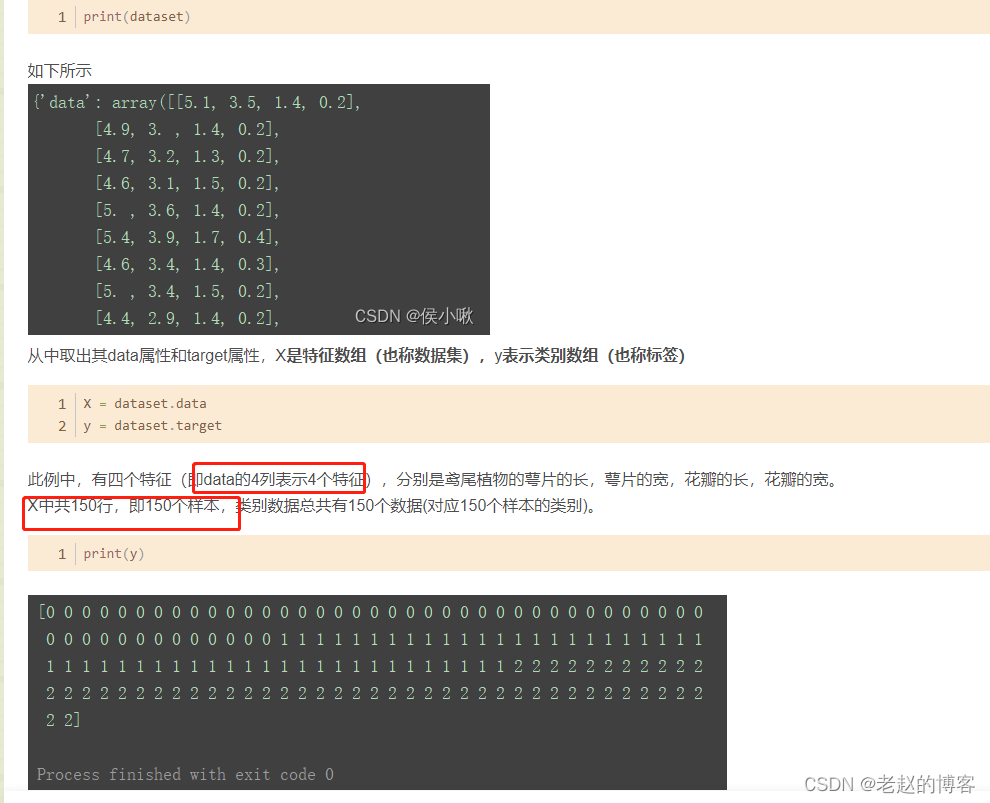

中的label,摘抄第一个链接为例:原始二维numpy数据,列表示有多少特征,行表示有多少样本

2. Principles and code implementation:

Tree-based algorithms: XGBoost principles and hands-on code (小小的天和蜗牛's blog, CSDN)

3. Two implementations of the linear regression model:

XGBoost linear regression: an industrial-control data analysis case study (native API part) (肖永威's blog, CSDN)

XGBoost linear regression: an industrial-control data analysis case study (sklearn interface part) (肖永威's blog, CSDN)

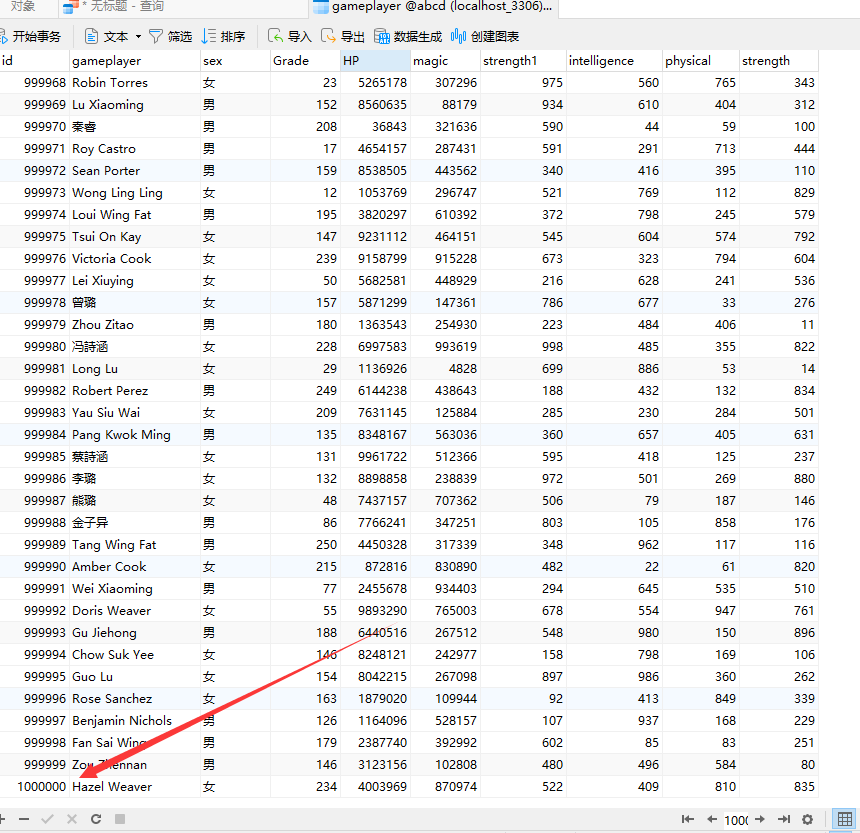

XGBoost regression prediction model: XGBoost model (3), player market value prediction (weixin_39628180's blog, CSDN)

4. sklearn performance evaluation:

Regressor performance evaluation methods in sklearn - nolonely - cnblogs (博客园)

https://haosen.blog.csdn.net/article/details/105930868?spm=1001.2101.3001.6661.1&utm_medium=distribute.pc_relevant_t0.none-task-blog-2%7Edefault%7ECTRLIST%7ERate-1-105930868-blog-108106208.pc_relevant_aa2&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-2%7Edefault%7ECTRLIST%7ERate-1-105930868-blog-108106208.pc_relevant_aa2&utm_relevant_index=1

Metrics for evaluating regression models: MSE, RMSE, MAE, R2, bias and variance (悦光阴's blog, CSDN)
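The metrics listed in these articles can be computed with sklearn.metrics. The sketch below uses four hand-picked values (an assumption for illustration, not data from the linked posts) so the results are easy to verify by hand.

```python
import math

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]

mse = mean_squared_error(y_true, y_pred)   # mean of squared errors
rmse = math.sqrt(mse)                      # same unit as the target
mae = mean_absolute_error(y_true, y_pred)  # mean of absolute errors
r2 = r2_score(y_true, y_pred)              # 1 - SS_res / SS_tot

print(mse, mae)                      # 0.375 0.5
print(round(rmse, 4), round(r2, 4))  # 0.6124 0.9486
```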

5. Example

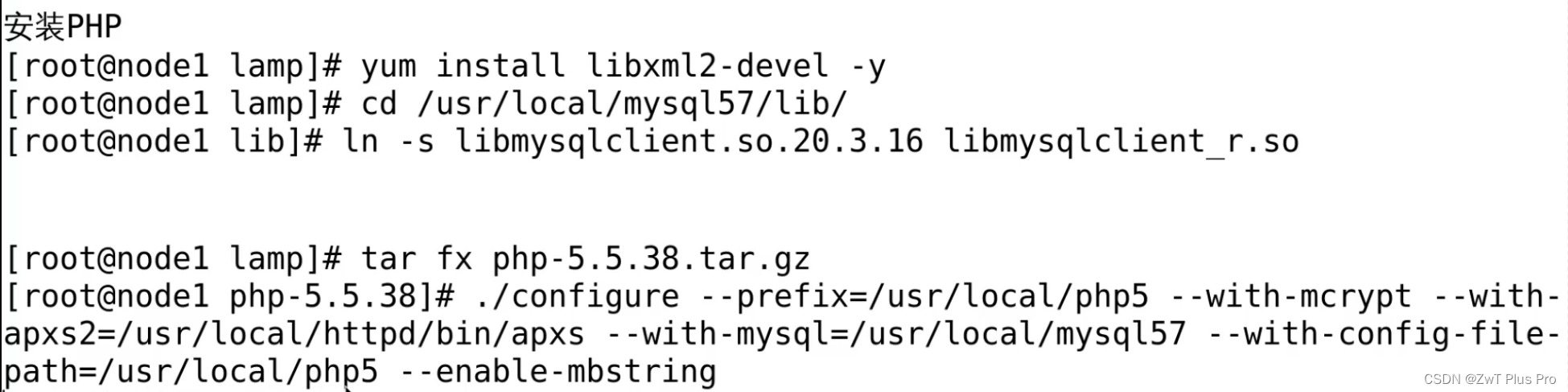

import xgboost as xgb

from xgboost import plot_importance

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

import pandas as pd

import numpy as np

import os

# Workaround for the duplicate OpenMP runtime error (OMP: Error #15) seen on some installs

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

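# load_boston was removed in scikit-learn 1.2, so the data is read from the original source

# Load the dataset; this one is for regression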

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

X, y = data, target

# XGBoost training: hold out 20% of the samples as the test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=22)

# Algorithm parameters

params = {

'booster': 'gbtree',

'objective': 'reg:gamma',  # gamma regression with a log link; requires strictly positive targets

'gamma': 0.01,

'max_depth': 6,

'verbosity': 0,  # replaces the deprecated 'silent' flag

'lambda': 3,

'subsample': 0.8,

'colsample_bytree': 0.8,

'min_child_weight': 3,

'eta': 0.1,

'seed': 1000,

'nthread': 4,

}

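# Wrap the training data in a DMatrix: features first, targets as label

dtrain = xgb.DMatrix(X_train, label=y_train)

num_rounds = 800

# xgb.train accepts the parameter dict directly

model = xgb.train(params, dtrain, num_boost_round=num_rounds)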

# Predict on the test set

dtest = xgb.DMatrix(X_test)

y_pred = model.predict(dtest)

# Compute the MSE

mse = mean_squared_error(y_true=y_test, y_pred=y_pred)

print('mse:', mse)

# Plot feature importance

plot_importance(model)

plt.show()