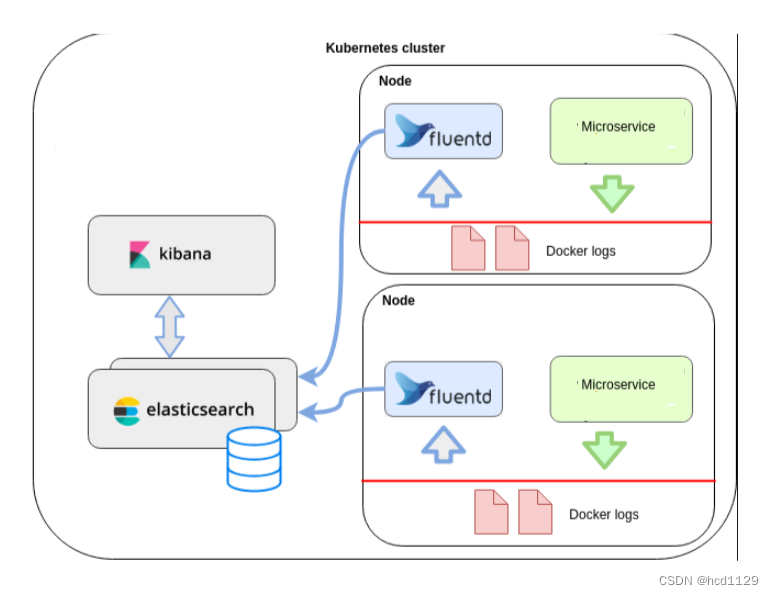

EFK architecture workflow

Deployment notes

ECK (Elastic Cloud on Kubernetes): 2.7

Kubernetes: 1.23.0

Preparing the files

crds.yaml

Download URL: https://download.elastic.co/downloads/eck/2.7.0/crds.yaml

operator.yaml

Download URL: https://download.elastic.co/downloads/eck/2.7.0/operator.yaml
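Both manifests share the same URL layout, so they can be fetched in one step. The following is a minimal sketch rather than part of the original instructions: the `ECK_VERSION` variable and the `eck_manifest_url` helper are hypothetical names introduced here, and only the two URLs above are taken from the source.

```shell
#!/bin/sh
# Hypothetical helper: builds the download URL for one ECK manifest.
ECK_VERSION="2.7.0"

eck_manifest_url() {
  # $1 is the manifest file name, e.g. crds.yaml or operator.yaml
  printf 'https://download.elastic.co/downloads/eck/%s/%s\n' "$ECK_VERSION" "$1"
}

# Print both URLs; the actual download is left to the caller.
for manifest in crds.yaml operator.yaml; do
  eck_manifest_url "$manifest"
done
```

With network access and `curl` installed, each file could then be saved with something like `curl -fsSLO "$(eck_manifest_url crds.yaml)"`.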

elastic.yaml

apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: efk-elastic
  namespace: elastic-system
spec:
  version: 8.12.2
  nodeSets:
  - name: default
    count: 3
    config:
      node.store.allow_mmap: false
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: openebs-hostpath
    podTemplate:
      spec:
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms2g -Xmx2g
          resources:
            requests:
              memory: 2Gi
              cpu: 2
            limits:
              memory: 4Gi
              cpu: 2
        # https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-virtual-memory.html
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
          command: ["sh", "-c", "sysctl -w vm.max_map_count=262144"]
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic-system
  labels:
    app: efk-elastic-nodeport
  name: efk-elastic-nodeport
spec:
  sessionAffinity: None
  selector:
    common.k8s.elastic.co/type: elasticsearch
    elasticsearch.k8s.elastic.co/cluster-name: efk-elastic
  ports:
  - name: http-9200
    protocol: TCP
    targetPort: 9200
    port: 9200
    nodePort: 30920
  - name: http-9300
    protocol: TCP
    targetPort: 9300
    port: 9300
    nodePort: 30921
  type: NodePort
---
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: efk-kibana
  namespace: elastic-system
spec:
  version: 8.12.2
  count: 1
  elasticsearchRef:
    name: efk-elastic
    namespace: elastic-system
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic-system
  labels:
    app: efk-kibana-nodeport
  name: efk-kibana-nodeport
spec:
  sessionAffinity: None
  selector:
    common.k8s.elastic.co/type: kibana
    kibana.k8s.elastic.co/name: efk-kibana
  ports:
  - name: http-5601
    protocol: TCP
    targetPort: 5601
    port: 5601
    nodePort: 30922
  type: NodePort
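The Elasticsearch manifest above touches virtual memory in two places: it sets `node.store.allow_mmap: false`, and it runs a privileged init container that raises `vm.max_map_count` to 262144. To see whether a node already meets that value, a check along these lines may help. It is only a sketch: `map_count_ok` and `REQUIRED_MAP_COUNT` are hypothetical names, and the sample inputs are illustrative.

```shell
#!/bin/sh
# Value written by the sysctl init container in elastic.yaml.
REQUIRED_MAP_COUNT=262144

# Hypothetical helper: succeeds when the given value is high enough.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# On a Linux node the live value could be passed in like this:
#   map_count_ok "$(sysctl -n vm.max_map_count)"

# Illustrative inputs: a low value and the required value.
for value in 65530 262144; do
  if map_count_ok "$value"; then
    echo "$value: ok"
  else
    echo "$value: too low"
  fi
done
```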

fluentd-es-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-es-config
  namespace: elastic-system
data:
  fluent.conf: |-
    # https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/docker-image/v1.11/debian-elasticsearch7/conf/fluent.conf
    @include "#{ENV['FLUENTD_SYSTEMD_CONF'] || 'systemd'}.conf"
    @include "#{ENV['FLUENTD_PROMETHEUS_CONF'] || 'prometheus'}.conf"
    @include kubernetes.conf
    @include conf.d/*.conf
    <match kubernetes.**>
      # https://github.com/kubernetes/kubernetes/issues/23001
      @type elasticsearch_dynamic
      @id kubernetes_elasticsearch
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix logstash-${record['kubernetes']['namespace_name']}
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      target_index_key "#{ENV['FLUENT_ELASTICSEARCH_TARGET_INDEX_KEY'] || use_nil}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      suppress_type_name "#{ENV['FLUENT_ELASTICSEARCH_SUPPRESS_TYPE_NAME'] || 'true'}"
      enable_ilm "#{ENV['FLUENT_ELASTICSEARCH_ENABLE_ILM'] || 'false'}"
      ilm_policy_id "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_ID'] || use_default}"
      ilm_policy "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY'] || use_default}"
      ilm_policy_overwrite "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_OVERWRITE'] || 'false'}"
      <buffer>
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>
    <match **>
      @type elasticsearch
      @id out_es
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      target_index_key "#{ENV['FLUENT_ELASTICSEARCH_TARGET_INDEX_KEY'] || use_nil}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      suppress_type_name "#{ENV['FLUENT_ELASTICSEARCH_SUPPRESS_TYPE_NAME'] || 'true'}"
      enable_ilm "#{ENV['FLUENT_ELASTICSEARCH_ENABLE_ILM'] || 'false'}"
      ilm_policy_id "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_ID'] || use_default}"
      ilm_policy "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY'] || use_default}"
      ilm_policy_overwrite "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_OVERWRITE'] || 'false'}"
      <buffer>
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>

  kubernetes.conf: |-
    # https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/docker-image/v1.11/debian-elasticsearch7/conf/kubernetes.conf
    <label @FLUENT_LOG>
      <match fluent.**>
        @type null
        @id ignore_fluent_logs
      </match>
    </label>
    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      tag raw.kubernetes.*
      read_from_head true
      <parse>
        @type multi_format
        <pattern>
          format json
          time_key time
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    # Detect exceptions in the log output and forward them as one log entry.
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
    # Concatenate multi-line logs
    <filter **>
      @id filter_concat
      @type concat
      key message
      multiline_end_regexp /\n$/
      separator ""
    </filter>
    # Enriches records with Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Fixes json fields in Elasticsearch
    <filter kubernetes.**>
      @id filter_parser
      @type parser
      key_name log
      reserve_data true
      remove_key_name_field true
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>

    <source>
      @type tail
      @id in_tail_minion
      path /var/log/salt/minion
      pos_file /var/log/fluentd-salt.pos
      tag salt
      <parse>
        @type regexp
        expression /^(?<time>[^ ]* [^ ,]*)[^\[]*\[[^\]]*\]\[(?<severity>[^ \]]*) *\] (?<message>.*)$/
        time_format %Y-%m-%d %H:%M:%S
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_startupscript
      path /var/log/startupscript.log
      pos_file /var/log/fluentd-startupscript.log.pos
      tag startupscript
      <parse>
        @type syslog
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_docker
      path /var/log/docker.log
      pos_file /var/log/fluentd-docker.log.pos
      tag docker
      <parse>
        @type regexp
        expression /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_etcd
      path /var/log/etcd.log
      pos_file /var/log/fluentd-etcd.log.pos
      tag etcd
      <parse>
        @type none
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kubelet
      multiline_flush_interval 5s
      path /var/log/kubelet.log
      pos_file /var/log/fluentd-kubelet.log.pos
      tag kubelet
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_proxy
      multiline_flush_interval 5s
      path /var/log/kube-proxy.log
      pos_file /var/log/fluentd-kube-proxy.log.pos
      tag kube-proxy
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_apiserver
      multiline_flush_interval 5s
      path /var/log/kube-apiserver.log
      pos_file /var/log/fluentd-kube-apiserver.log.pos
      tag kube-apiserver
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_controller_manager
      multiline_flush_interval 5s
      path /var/log/kube-controller-manager.log
      pos_file /var/log/fluentd-kube-controller-manager.log.pos
      tag kube-controller-manager
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_scheduler
      multiline_flush_interval 5s
      path /var/log/kube-scheduler.log
      pos_file /var/log/fluentd-kube-scheduler.log.pos
      tag kube-scheduler
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_rescheduler
      multiline_flush_interval 5s
      path /var/log/rescheduler.log
      pos_file /var/log/fluentd-rescheduler.log.pos
      tag rescheduler
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_glbc
      multiline_flush_interval 5s
      path /var/log/glbc.log
      pos_file /var/log/fluentd-glbc.log.pos
      tag glbc
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_cluster_autoscaler
      multiline_flush_interval 5s
      path /var/log/cluster-autoscaler.log
      pos_file /var/log/fluentd-cluster-autoscaler.log.pos
      tag cluster-autoscaler
      <parse>
        @type kubernetes
      </parse>
    </source>
    # Example:
    # 2017-02-09T00:15:57.992775796Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" ip="104.132.1.72" method="GET" user="kubecfg" as="<self>" asgroups="<lookup>" namespace="default" uri="/api/v1/namespaces/default/pods"
    # 2017-02-09T00:15:57.993528822Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" response="200"
    <source>
      @type tail
      @id in_tail_kube_apiserver_audit
      multiline_flush_interval 5s
      path /var/log/kubernetes/kube-apiserver-audit.log
      pos_file /var/log/kube-apiserver-audit.log.pos
      tag kube-apiserver-audit
      <parse>
        @type multiline
        format_firstline /^\S+\s+AUDIT:/
        # Fields must be explicitly captured by name to be parsed into the record.
        # Fields may not always be present, and order may change, so this just looks
        # for a list of key="\"quoted\" value" pairs separated by spaces.
        # Unknown fields are ignored.
        # Note: We can't separate query/response lines as format1/format2 because
        # they don't always come one after the other for a given query.
        format1 /^(?<time>\S+) AUDIT:(?: (?:id="(?<id>(?:[^"\\]|\\.)*)"|ip="(?<ip>(?:[^"\\]|\\.)*)"|method="(?<method>(?:[^"\\]|\\.)*)"|user="(?<user>(?:[^"\\]|\\.)*)"|groups="(?<groups>(?:[^"\\]|\\.)*)"|as="(?<as>(?:[^"\\]|\\.)*)"|asgroups="(?<asgroups>(?:[^"\\]|\\.)*)"|namespace="(?<namespace>(?:[^"\\]|\\.)*)"|uri="(?<uri>(?:[^"\\]|\\.)*)"|response="(?<response>(?:[^"\\]|\\.)*)"|\w+="(?:[^"\\]|\\.)*"))*/
        time_format %Y-%m-%dT%T.%L%Z
      </parse>
    </source>
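The container log `<source>` in `kubernetes.conf` tries two parse patterns in turn: JSON, which matches the Docker `json-file` log layout, and a regular expression for the CRI layout of `<time> <stream> <flag> <log>`. The sketch below applies that same expression with `sed -E` to show how a line is split. The named groups are replaced by numbered ones because POSIX extended regex has no named captures; `parse_cri_line` is a hypothetical helper and the sample line is invented.

```shell
#!/bin/sh
# Hypothetical helper: splits one CRI-format log line using the expression
# from the second <pattern>, with numbered groups instead of named ones.
parse_cri_line() {
  printf '%s\n' "$1" |
    sed -E 's/^(.+) (stdout|stderr) [^ ]* (.*)$/time=\1 stream=\2 log=\3/'
}

# Invented sample line in the CRI log layout.
parse_cri_line '2024-03-01T12:00:00.123456789+08:00 stdout F hello world'
# prints: time=2024-03-01T12:00:00.123456789+08:00 stream=stdout log=hello world
```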

fluentd-es-ds.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: elastic-system
  labels:
    app: fluentd-es
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    app: fluentd-es
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    app: fluentd-es
subjects:
- kind: ServiceAccount
  name: fluentd-es
  namespace: elastic-system
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es
  namespace: elastic-system
  labels:
    app: fluentd-es
spec:
  selector:
    matchLabels:
      app: fluentd-es
  template:
    metadata:
      labels:
        app: fluentd-es
    spec:
      serviceAccount: fluentd-es
      serviceAccountName: fluentd-es
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd-es
        image: fluent/fluentd-kubernetes-daemonset:v1.16-debian-elasticsearch8-2
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: efk-elastic-es-http
        # default user
        - name: FLUENT_ELASTICSEARCH_USER
          value: elastic
        # this secret is already created by the Elasticsearch deployment
        - name: FLUENT_ELASTICSEARCH_PASSWORD
          valueFrom:
            secretKeyRef:
              name: efk-elastic-es-elastic-user
              key: elastic
        # Elasticsearch standard port
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        # the Elastic operator uses HTTPS by default
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "https"
        # systemd logs are not needed for now
        - name: FLUENTD_SYSTEMD_CONF
          value: disable
        # the certificates are self-signed, so verification must be disabled
        - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
          value: "false"
        # to avoid issue https://github.com/uken/fluent-plugin-elasticsearch/issues/525
        - name: FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS
          value: "false"
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: config-volume
          mountPath: /fluentd/etc
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config-volume
        configMap:
          name: fluentd-es-config

index-cleaner.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: index-cleaner
  namespace: elastic-system
spec:
  schedule: "0 0 * * *"
  jobTemplate:
    spec:
      backoffLimit: 2
      template:
        spec:
          containers:
          - name: index-cleaner
            image: hcd1129/es-index-cleaner:latest
            env:
            - name: DAYS
              value: "2"
            - name: PREFIX
              value: logstash-*
            - name: ES_HOST
              value: https://efk-elastic-es-http:9200
            - name: ES_USER
              value: elastic
            - name: ES_PASSWORD
              valueFrom:
                secretKeyRef:
                  key: elastic
                  name: efk-elastic-es-elastic-user
          restartPolicy: Never
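The CronJob above hands `DAYS=2` and `PREFIX=logstash-*` to the cleaner image. How the image works internally is not shown in this guide, so the following only illustrates the retention rule those variables suggest. It assumes indices are named `logstash-<namespace>-YYYY.MM.DD`, as the `logstash_prefix` and `logstash_dateformat` settings in `fluent.conf` imply. `index_expired` and `CUTOFF` are hypothetical names, and the cutoff date is passed in explicitly instead of being computed.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the date suffix of an index name is
# strictly older than the cutoff date (both in YYYY.MM.DD form).
index_expired() {
  index_date=$(printf '%s' "${1##*-}" | tr -d '.')   # 2024.03.01 -> 20240301
  cutoff_date=$(printf '%s' "$2" | tr -d '.')
  [ "$index_date" -lt "$cutoff_date" ]
}

# Assumed cutoff, i.e. "today minus DAYS" as worked out by the caller.
CUTOFF="2024.03.03"

for index in logstash-default-2024.03.01 logstash-kube-system-2024.03.03; do
  if index_expired "$index" "$CUTOFF"; then
    echo "$index: expired"
  else
    echo "$index: kept"
  fi
done
```

With this cutoff the first index is reported as expired and the second as kept, because an index dated exactly on the cutoff is not older than it.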

es-index-cleaner image

Running the deployment

# Run the following in order
# Install the operator
kubectl create -f crds.yaml
kubectl apply -f operator.yaml
# Install Elasticsearch and Kibana
kubectl apply -f elastic.yaml
# Install Fluentd
kubectl apply -f fluentd-es-configmap.yaml
kubectl apply -f fluentd-es-ds.yaml
# Deploy the scheduled job that cleans up old logs
kubectl apply -f index-cleaner.yaml

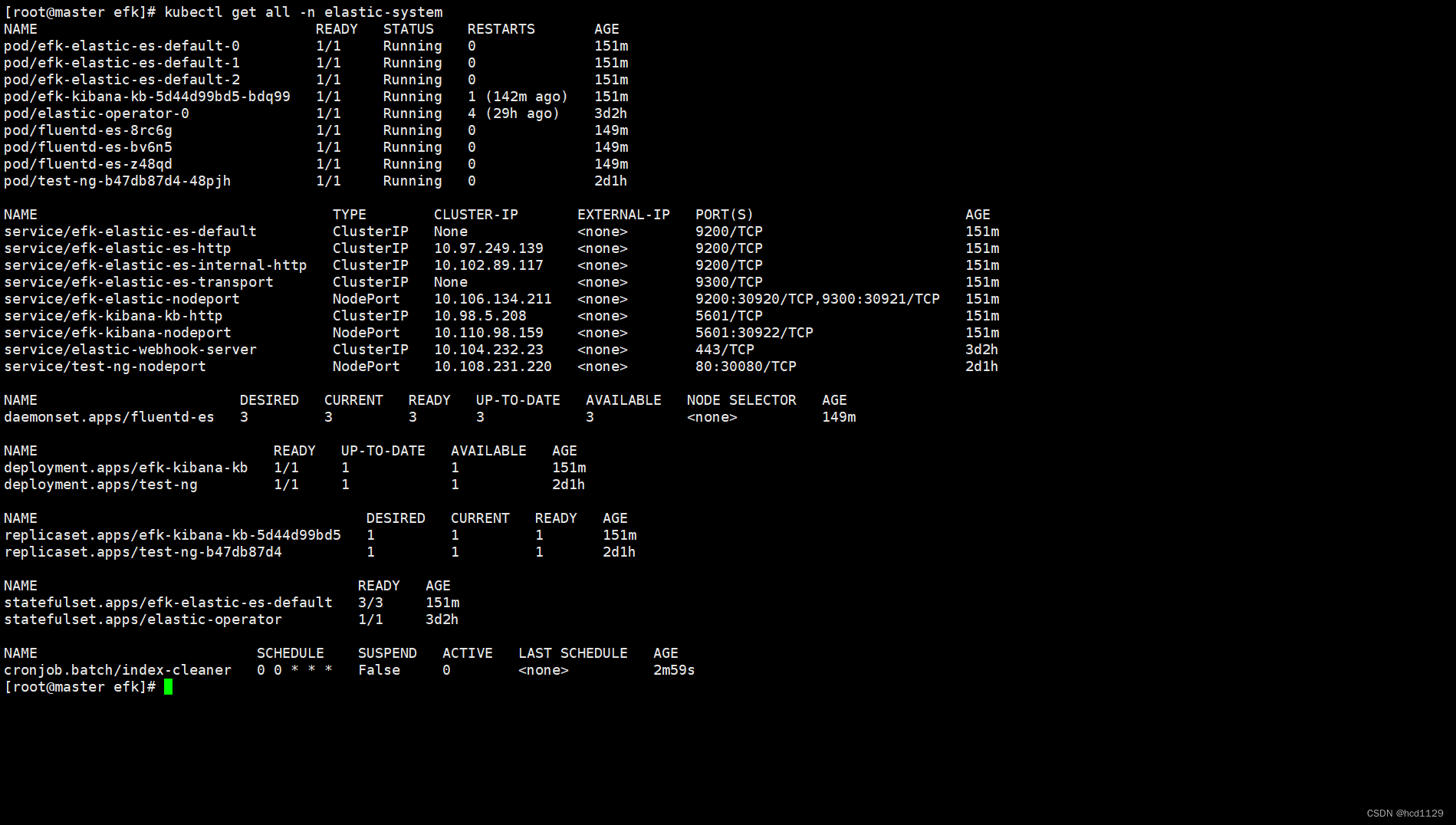

Deployment result

kubectl get all -n elastic-system

Account and password

# Elasticsearch account: elastic
# Show the Elasticsearch password

kubectl get secret efk-elastic-es-elastic-user -n elastic-system -o=jsonpath='{.data.elastic}' | base64 --decode; echo
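Kubernetes stores Secret values base64-encoded, which is why the command above pipes the field through `base64 --decode`. The decoding step on its own can be sketched as follows; `decode_secret_value` is a hypothetical helper, and the encoded string is a made-up value, not a real password.

```shell
#!/bin/sh
# Hypothetical helper: decodes one base64-encoded Secret field.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# "czNjcjN0" is a made-up encoded value used only for illustration.
decode_secret_value 'czNjcjN0'; echo
# prints: s3cr3t
```

The decoded password can then be used with the `elastic` account, for example `curl -k -u "elastic:<password>" https://192.168.1.180:30920/`, where `-k` skips certificate verification because the certificates are self-signed.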

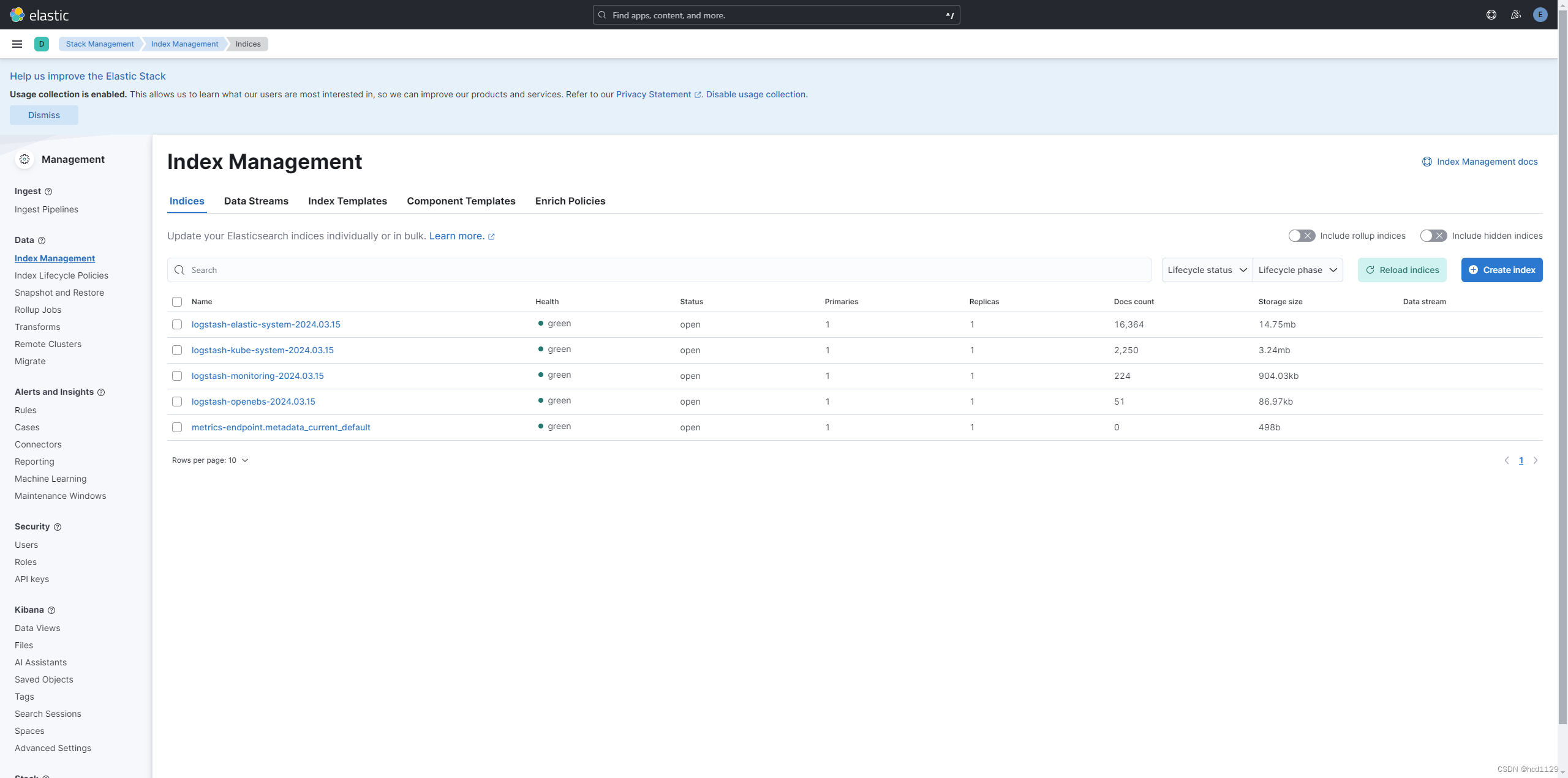

Result

Elasticsearch: https://192.168.1.180:30920/

Kibana: https://192.168.1.180:30922/