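The code, images, and ideas below come from:

Peking University TensorFlow notes

So, I recently spent some time learning neural networks. It was not very hard: mostly it came down to memorizing the code and understanding how TensorFlow assembles a network. I find TensorFlow easier to use than PyTorch. In the PyTorch code I copied, I had to write the training loop myself, whereas in TensorFlow I can just call the `fit` function.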

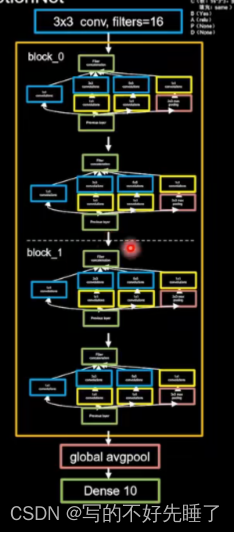

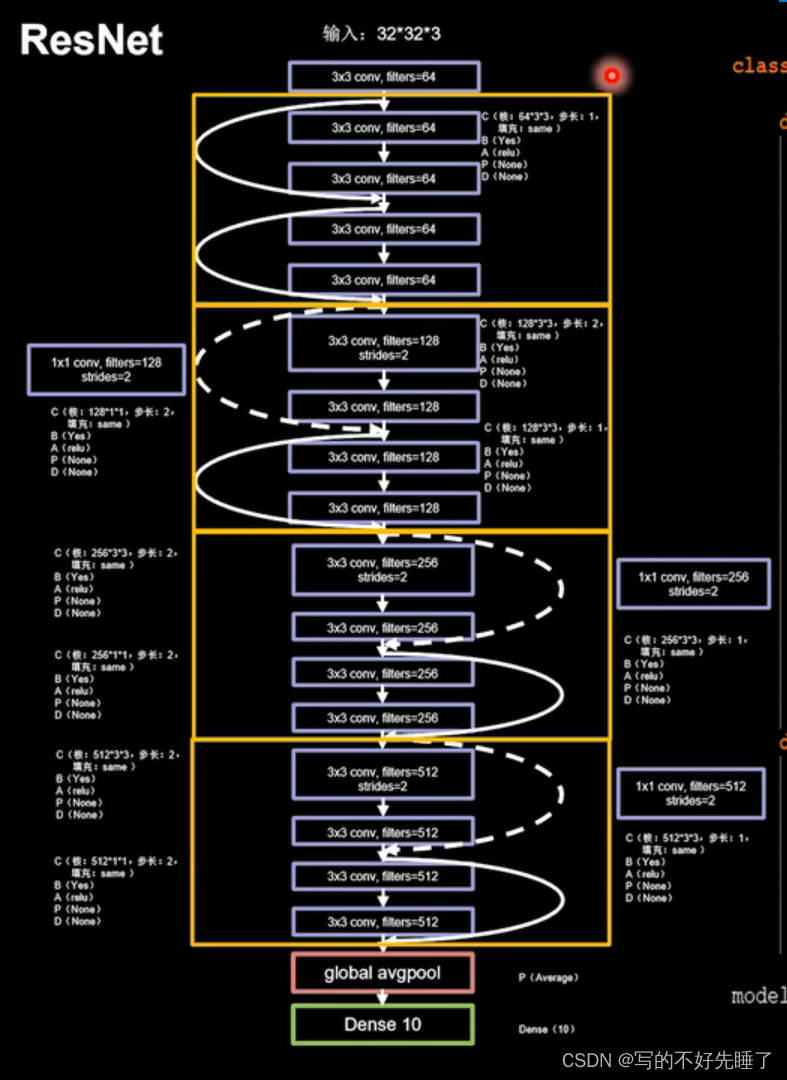

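Together with the teacher I built InceptionNet and ResNet. The key part of ResNet is its extra shortcut path. When the shortcut and the main path have different dimensions, a 1*1 convolution on the shortcut, with a matching stride and number of filters, reduces the input feature map to the same shape as the main path.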
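To make the dimension matching concrete, here is a small pure-Python sketch of the shape arithmetic. It assumes `padding='same'` (so each spatial size becomes `ceil(input / stride)`) and a channels-last layout. The helper name `conv_same_shape` is made up for this illustration and is not part of TensorFlow.

```python
import math


def conv_same_shape(shape, filters, strides):
    """Output (height, width, channels) of a Conv2D with padding='same'.

    With 'same' padding the kernel size does not change the spatial size:
    each spatial dimension becomes ceil(input / strides).
    """
    h, w, _ = shape
    return (math.ceil(h / strides), math.ceil(w / strides), filters)


x = (28, 28, 64)                                       # input to a downsampling block
main = conv_same_shape(x, filters=128, strides=2)      # first 3*3 conv, stride 2
main = conv_same_shape(main, filters=128, strides=1)   # second 3*3 conv, stride 1
shortcut = conv_same_shape(x, filters=128, strides=2)  # 1*1 conv on the shortcut

# Both shapes must be equal, otherwise the two paths cannot be added.
print(main, shortcut)
```

Without the 1*1 convolution the shortcut would still be `(28, 28, 64)` and could not be added to the main path.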

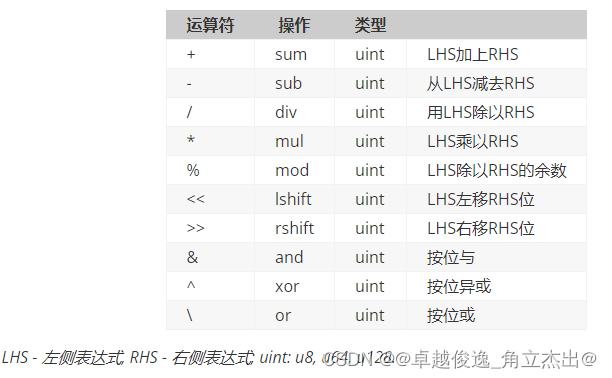

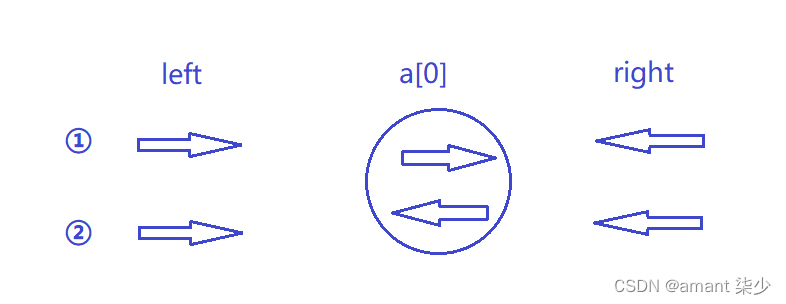

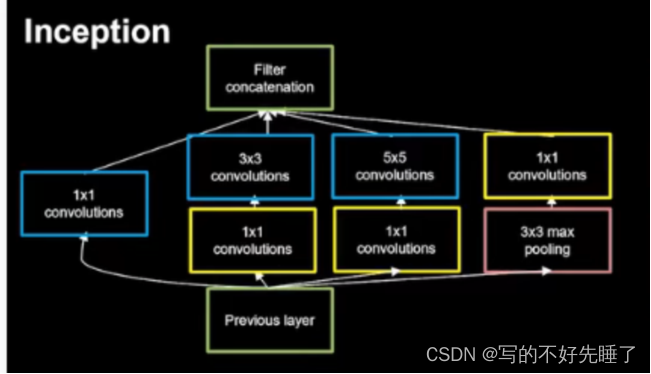

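Inception structure:

An Inception block looks like this. It is still just stacking building blocks on top of each other. If you want to change the route the input takes through the block, you only need to edit the `call` function: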

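    def call(self, x):
        print("input_shape:", x.shape)
        # Branch 1: 1*1 conv
        x1 = self.c1(x)
        print("x1:", x1.shape)
        # Branch 2: 1*1 conv, then 3*3 conv
        x2_1 = self.c2_1(x)
        print("x2_1:", x2_1.shape)
        x2 = self.c2_2(x2_1)
        print("x2:", x2.shape)
        # Branch 3: 1*1 conv, then 5*5 conv
        x3_1 = self.c3_1(x)
        print("x3_1:", x3_1.shape)
        x3 = self.c3_2(x3_1)
        print("x3:", x3.shape)
        # Branch 4: max pooling, then 1*1 conv
        x4_1 = self.p4_1(x)
        print("x4_1:", x4_1.shape)
        x4 = self.c4_2(x4_1)
        print("x4:", x4.shape)
        # Concatenate the four branches along the channel axis
        y = tf.concat([x1, x2, x3, x4], axis=3)
        return y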

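Then create the building blocks in `__init__`. You can even create them in a different order from the one `call` uses, because only `call` decides how the data flows.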
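As a rough check of what the concatenation does to the shapes, the sketch below traces a feature map through stacked Inception blocks in plain Python. It assumes the branch settings used in the full InceptionNet listing further down (every branch uses `padding='same'`, emits the same number of filters, and downsamples by the block's stride). The helper names are invented for this example.

```python
import math


def same_hw(size, strides):
    """Spatial size after a layer with padding='same'."""
    return math.ceil(size / strides)


def inception_output_shape(shape, filters, strides):
    """Shape after one Inception block with four equally wide branches."""
    h, w, _ = shape
    # Each branch downsamples exactly once, in one of its 1*1 convolutions,
    # so all four branches end up with the same height and width.
    branch = (same_hw(h, strides), same_hw(w, strides), filters)
    branches = [branch] * 4
    # tf.concat(..., axis=3) keeps height and width and sums the channels.
    return (branch[0], branch[1], sum(b[2] for b in branches))


shape = (28, 28, 16)  # MNIST after the first 3*3 conv with 16 filters
trace = []
for filters, strides in [(20, 2), (20, 1), (40, 2), (40, 1)]:
    shape = inception_output_shape(shape, filters, strides)
    trace.append(shape)
    print(shape)
```

The channel count after each block is four times the per-branch filter count, which is why the network widens quickly even with small `filters` values.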

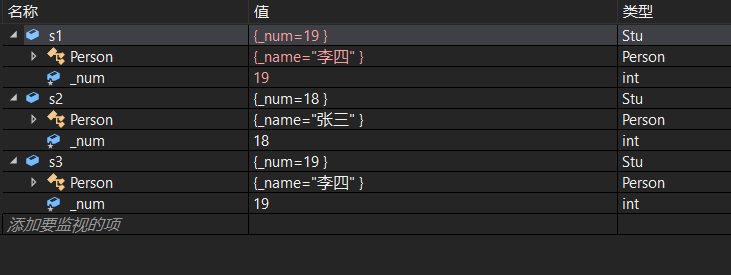

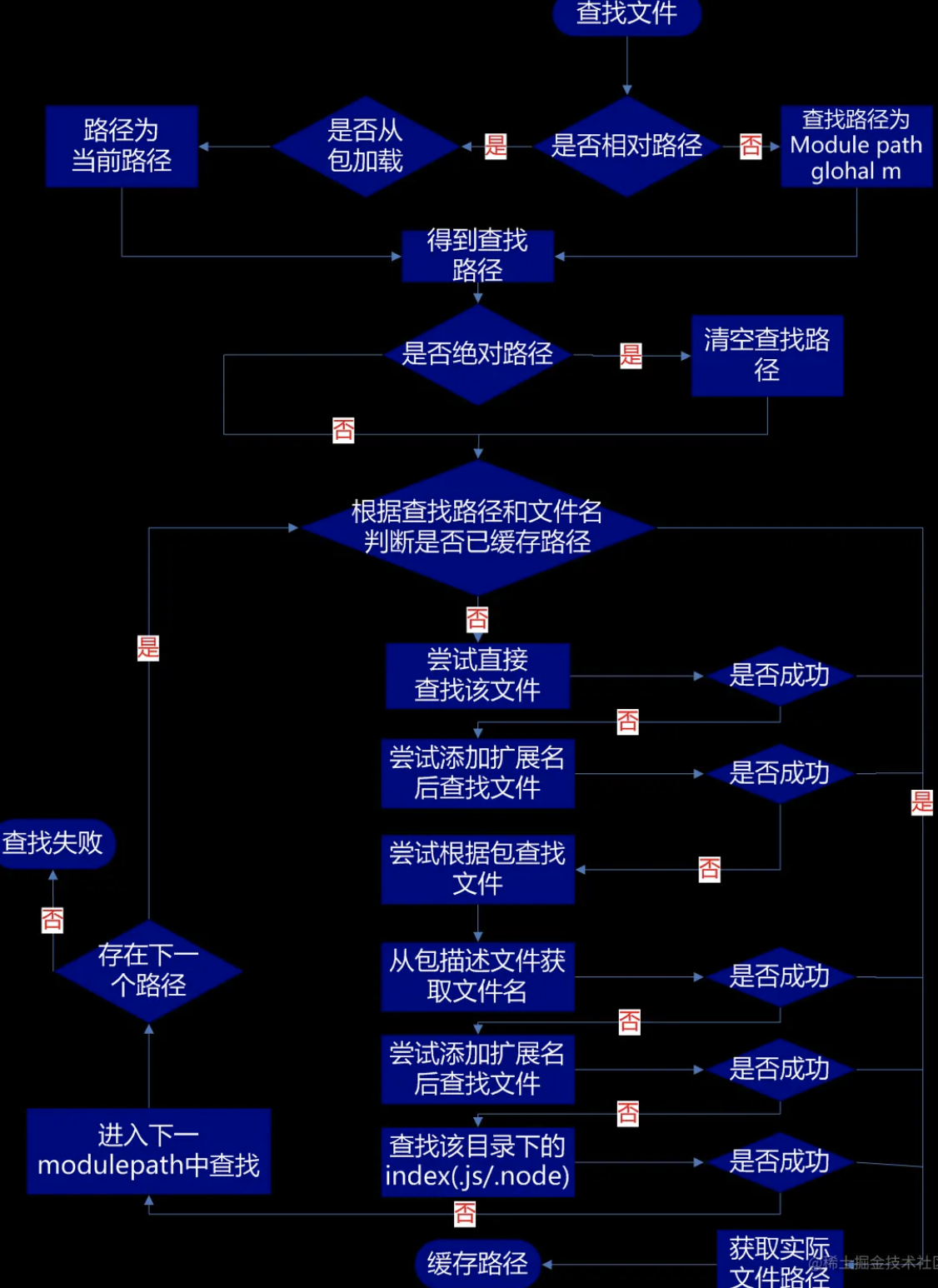

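Now for ResNet. ResNet guards against losing the features extracted by earlier layers, so it routes the earlier result along a separate line to a later point in the network:

A dashed line means the shortcut needs a downsampling path; a solid line means no downsampling is needed.

In the `call` function it can be written like this: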

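    def call(self, x):
        y = self.c1(x)
        y = self.c2(y)
        a = x
        if self.path:
            # Downsample the shortcut so that its shape matches y
            a = self.c3(a)
        return y + a  # a residual block must add the shortcut to the main path at the output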

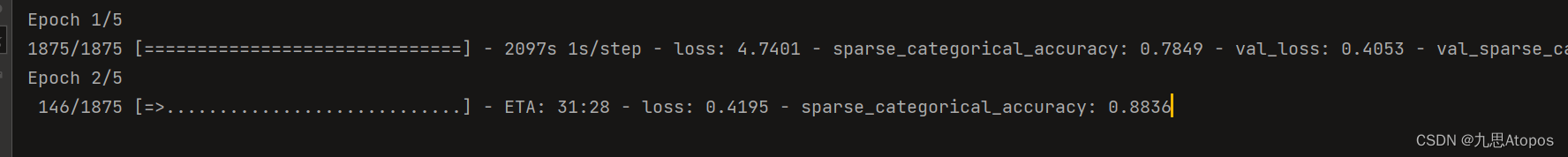

Ugh. I only have a CPU here, so it took half an hour of training to finish a single epoch. Lovely.
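One way to see why a CPU epoch is that slow is to count the trainable weights. The sketch below does this in plain Python for the ResNet listing that follows, assuming its layout (a 3*3 stem conv, four stages of two blocks, filters doubling from 64, a bias-free 1*1 shortcut conv in each downsampling block, and a 10-way Dense layer). The function names are made up for this estimate.

```python
def conv_params(kernel_size, in_channels, out_channels, use_bias=True):
    """Number of trainable weights in one Conv2D layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def count_resnet_params(in_channels=1, first_filters=64, classes=10):
    total = conv_params(3, in_channels, first_filters)    # stem 3*3 conv
    channels, filters = first_filters, first_filters
    for i in range(4):                                    # four stages
        for j in range(2):                                # two blocks per stage
            total += conv_params(3, channels, filters)    # c1
            total += conv_params(3, filters, filters)     # c2
            if i != 0 and j == 0:
                # c3: 1*1 shortcut conv, created with use_bias=False
                total += conv_params(1, channels, filters, use_bias=False)
            channels = filters
        filters *= 2
    total += channels * classes + classes                 # final Dense layer
    return total


print(count_resnet_params())
```

The total comes out at roughly 11 million weights, which is a lot to push 60,000 images through on a CPU.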

Full code:

ResNet

    # Trains a convolutional neural network on MNIST and saves checkpoints for resuming training
    import tensorflow as tf
    from tensorflow.keras import Model
    from tensorflow.keras.layers import Conv2D, Dense, GlobalAveragePooling2D

    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0
    # Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
    x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)
    x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)


    class resnet18(Model):
        # One residual block: two convolutions plus a shortcut
        def __init__(self, path, filters, kernel_size, strides):
            super(resnet18, self).__init__()
            self.path = path  # truthy when the shortcut needs downsampling
            self.c1 = Conv2D(kernel_size=kernel_size, strides=strides, filters=filters, padding='same')
            self.c2 = Conv2D(kernel_size=kernel_size, strides=1, filters=filters, padding='same')
            if path:
                # 1*1 conv that brings the shortcut to the same shape as the main path
                self.c3 = Conv2D(kernel_size=1, strides=strides, filters=filters, use_bias=False, padding='same')

        def call(self, x):
            y = self.c1(x)
            y = self.c2(y)
            a = x
            if self.path:
                a = self.c3(a)
            return y + a  # a residual block must add the shortcut to the main path at the output


    class resnet(Model):
        # Four stages of two residual blocks each
        def __init__(self):
            super(resnet, self).__init__()
            self.block = tf.keras.models.Sequential()
            begin = 64
            for i in range(4):
                for j in range(2):
                    if i != 0 and j == 0:
                        # First block of every stage except the first one downsamples
                        self.block.add(resnet18(1, begin, 3, 2))
                    else:
                        self.block.add(resnet18(0, begin, 3, 1))
                begin *= 2  # double the filters for the next stage

        def call(self, x):
            y = self.block(x)
            return y


    class simple(Model):
        def __init__(self):
            super(simple, self).__init__()
            self.c1 = Conv2D(kernel_size=3, filters=64, padding='same')
            self.b1 = resnet()
            self.p1 = GlobalAveragePooling2D()
            self.d1 = Dense(10, activation='softmax')

        def call(self, x):
            y = self.c1(x)
            y = self.b1(y)
            y = self.p1(y)
            y = self.d1(y)
            return y

    model = simple()
    model.compile(optimizer='adam',
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                  metrics=['sparse_categorical_accuracy'])

    model_save_path = './conv_weights/weight.ckpt'
    # Save only the weights, and only when the validation loss improves
    bp = tf.keras.callbacks.ModelCheckpoint(filepath=model_save_path, save_best_only=True, save_weights_only=True)
    model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test),
              validation_freq=1, callbacks=[bp])
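To see which blocks get the downsampling shortcut (the dashed lines) and which keep the identity shortcut (the solid lines), the nested loops can be replayed in plain Python without building any layers. This is only a sketch: it assumes a 28*28 MNIST input and `padding='same'`, and `resnet_block_plan` is a name invented here.

```python
import math


def resnet_block_plan(input_hw=28, first_filters=64, stages=4, blocks_per_stage=2):
    """List (downsample, filters, strides, output_size) for every residual block."""
    plan = []
    hw, filters = input_hw, first_filters
    for i in range(stages):
        for j in range(blocks_per_stage):
            # Same condition as in the resnet class above
            downsample = (i != 0 and j == 0)
            strides = 2 if downsample else 1
            hw = math.ceil(hw / strides)  # 'same' padding: ceil(size / strides)
            plan.append((downsample, filters, strides, hw))
        filters *= 2  # next stage uses twice as many filters
    return plan


for downsample, filters, strides, hw in resnet_block_plan():
    kind = "1*1 conv shortcut" if downsample else "identity shortcut"
    print(f"filters={filters:3d} strides={strides} output={hw}x{hw}  {kind}")
```

Only three of the eight blocks need the extra 1*1 convolution; the rest add the input back unchanged.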

InceptionNet code:

    # Trains a convolutional neural network on MNIST and saves checkpoints for resuming training
    import tensorflow as tf
    from tensorflow.keras import Model
    from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                                         GlobalAveragePooling2D, MaxPool2D)

    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0
    # Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
    x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)
    x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)


    class conv(Model):
        # Conv2D -> BatchNormalization -> ReLU; output_shape is the number of filters
        def __init__(self, output_shape, stride, size):
            super(conv, self).__init__()
            self.c1 = Conv2D(output_shape, strides=stride, kernel_size=size, padding='same')
            self.b1 = BatchNormalization()
            self.a1 = Activation('relu')

        def call(self, x):
            y = self.c1(x)
            y = self.b1(y)
            y = self.a1(y)
            return y


    # Build the Inception block
    class Inception(Model):
        def __init__(self, output_shape, strides):
            super(Inception, self).__init__()
            self.c1 = conv(output_shape=output_shape, stride=strides, size=1)
            self.c2_1 = conv(output_shape=output_shape, stride=strides, size=1)
            self.c2_2 = conv(output_shape=output_shape, stride=1, size=3)
            self.c3_1 = conv(output_shape=output_shape, stride=strides, size=1)
            self.c3_2 = conv(output_shape=output_shape, stride=1, size=5)
            self.p4_1 = MaxPool2D(pool_size=3, strides=1, padding='same')
            self.c4_2 = conv(output_shape=output_shape, stride=strides, size=1)

        def call(self, x):
            print("input_shape:", x.shape)
            x1 = self.c1(x)
            print("x1:", x1.shape)
            x2_1 = self.c2_1(x)
            print("x2_1:", x2_1.shape)
            x2 = self.c2_2(x2_1)
            print("x2:", x2.shape)
            x3_1 = self.c3_1(x)
            print("x3_1:", x3_1.shape)
            x3 = self.c3_2(x3_1)
            print("x3:", x3.shape)
            x4_1 = self.p4_1(x)
            print("x4_1:", x4_1.shape)
            x4 = self.c4_2(x4_1)
            print("x4:", x4.shape)
            # Concatenate the four branches along the channel axis
            y = tf.concat([x1, x2, x3, x4], axis=3)
            return y


    class simple(Model):
        def __init__(self, num, classes, conv_channel):
            super(simple, self).__init__()
            self.c1 = Conv2D(filters=16, kernel_size=3, padding='same')  # start with one 3*3 convolution
            self.blocks = tf.keras.models.Sequential()
            for i in range(num):
                for j in range(2):
                    if j == 0:
                        # First block of each pair downsamples with stride 2
                        self.blocks.add(Inception(conv_channel, 2))
                    else:
                        self.blocks.add(Inception(conv_channel, 1))
                conv_channel *= 2  # double the filters for the next pair
            self.p1 = GlobalAveragePooling2D()
            self.d1 = Dense(classes, activation='softmax')

        def call(self, x):
            y = self.c1(x)
            y = self.blocks(y)
            y = self.p1(y)
            y = self.d1(y)
            return y

    model = simple(2, 10, 20)
    model.compile(optimizer='adam',
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                  metrics=['sparse_categorical_accuracy'])

    model_save_path = './conv_weights/weight.ckpt'
    # Save only the weights, and only when the validation loss improves
    bp = tf.keras.callbacks.ModelCheckpoint(filepath=model_save_path, save_best_only=True, save_weights_only=True)
    model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test),
              validation_freq=1, callbacks=[bp])
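The header comment mentions resuming training, but both scripts only save checkpoints and never load them. A minimal sketch of the missing step is below. It assumes TensorFlow 2.x `tf.keras`, where saving weights to a path like `weight.ckpt` writes a TensorFlow-format checkpoint that includes a `weight.ckpt.index` file. Newer Keras versions expect weight files named `*.weights.h5` instead, in which case you would check the path itself. `load_checkpoint_if_present` is a helper invented for this example.

```python
import os


def load_checkpoint_if_present(model, checkpoint_path):
    """Load saved weights into `model` if a TF-format checkpoint exists.

    Returns True when weights were loaded, False otherwise.
    """
    # Assumption: a TF-format checkpoint stores '<path>.index' next to its data shards.
    if os.path.exists(checkpoint_path + ".index"):
        model.load_weights(checkpoint_path)
        return True
    return False
```

Calling `load_checkpoint_if_present(model, model_save_path)` after `model.compile(...)` and before `model.fit(...)` would let a second run continue from the saved weights instead of starting over.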