I. Background:

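The core of Hadoop's Kerberos authentication is the org.apache.hadoop.security.UserGroupInformation class.

UserGroupInformation generally comes in two variants: (1) the native Apache one, and (2) the one modified in CDH/HDP, i.e. modified by Cloudera.

These give rise to two ways of authenticating.

Kerberos was developed at MIT, and MIT also provides a Kerberos client.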

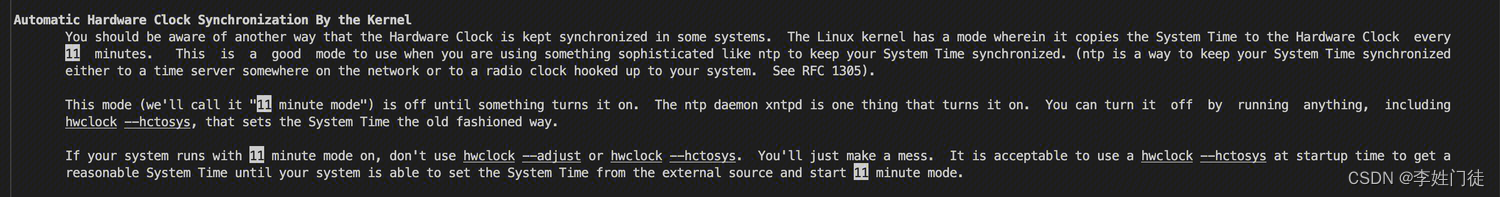

The MIT documentation defines the following commonly used environment variables for Kerberos:

KRB5CCNAME

Default name for the credentials cache file, in the form TYPE:residual. The type of the default cache may determine the availability of a cache collection. FILE is not a collection type; KEYRING, DIR, and KCM are.

If not set, the value of default_ccache_name from configuration files (see KRB5_CONFIG) will be used. If that is also not set, the default type is FILE, and the residual is the path /tmp/krb5cc_uid, where uid is the decimal user ID of the user.

KRB5_KTNAME

Specifies the location of the default keytab file, in the form TYPE:residual. If no type is present, the FILE type is assumed and residual is the pathname of the keytab file. If unset, DEFKTNAME will be used.

KRB5_CONFIG

Specifies the location of the Kerberos configuration file. The default is SYSCONFDIR/krb5.conf. Multiple filenames can be specified, separated by a colon; all files which are present will be read.

KRB5_KDC_PROFILE

Specifies the location of the KDC configuration file, which contains additional configuration directives for the Key Distribution Center daemon and associated programs. The default is LOCALSTATEDIR/krb5kdc/kdc.conf.

KRB5RCACHENAME

(New in release 1.18) Specifies the location of the default replay cache, in the form type:residual. The file2 type with a pathname residual specifies a replay cache file in the version-2 format in the specified location. The none type (residual is ignored) disables the replay cache. The dfl type (residual is ignored) indicates the default, which uses a file2 replay cache in a temporary directory. The default is dfl:.

KRB5RCACHETYPE

Specifies the type of the default replay cache, if KRB5RCACHENAME is unspecified. No residual can be specified, so none and dfl are the only useful types.

KRB5RCACHEDIR

Specifies the directory used by the dfl replay cache type. The default is the value of the TMPDIR environment variable, or /var/tmp if TMPDIR is not set.

KRB5_TRACE

Specifies a filename to write trace log output to. Trace logs can help illuminate decisions made internally by the Kerberos libraries. For example, env KRB5_TRACE=/dev/stderr kinit would send tracing information for kinit to /dev/stderr. The default is not to write trace log output anywhere.

KRB5_CLIENT_KTNAME

Default client keytab file name. If unset, DEFCKTNAME will be used.

KPROP_PORT

kprop port to use. Defaults to 754.

GSS_MECH_CONFIG

Specifies a filename containing GSSAPI mechanism module configuration. The default is to read SYSCONFDIR/gss/mech and files with a .conf suffix within the directory SYSCONFDIR/gss/mech.d.

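Of the environment variables above, the ones used most often are:

KRB5_CONFIG: path to the krb5.conf or krb5.ini file

KRB5CCNAME: path to the Kerberos credentials cache file (note: this file can be generated by the MIT Kerberos client)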
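The TYPE:residual form described above for KRB5CCNAME can be illustrated with a small JDK-only sketch. `CcacheName` is a hypothetical helper written for this post, not part of Hadoop or MIT Kerberos. Treating a value without a type prefix (or with a one-letter prefix such as a Windows drive letter) as a FILE cache is an assumption made here for illustration.

```java
/** Hypothetical helper for illustration; not part of Hadoop or MIT Kerberos. */
final class CcacheName {
    final String type;
    final String residual;

    CcacheName(String type, String residual) {
        this.type = type;
        this.residual = residual;
    }

    /** Splits a KRB5CCNAME-style value into its TYPE and residual parts. */
    static CcacheName parse(String value) {
        int colon = value.indexOf(':');
        // Assumption: no colon, or a colon right after a single letter (a Windows
        // drive such as "D:/..."), means a plain path of the default FILE type.
        if (colon <= 1) {
            return new CcacheName("FILE", value);
        }
        return new CcacheName(value.substring(0, colon), value.substring(colon + 1));
    }

    public static void main(String[] args) {
        String raw = System.getenv("KRB5CCNAME");
        if (raw == null) {
            System.out.println("KRB5CCNAME is not set");
            return;
        }
        CcacheName name = parse(raw);
        System.out.println("type=" + name.type + ", residual=" + name.residual);
    }
}
```

Only the first colon separates the type from the residual, so a value such as KEYRING:persistent:1000 keeps "persistent:1000" as its residual.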

II. Authentication steps

1. Configuring krb5.conf

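Note: setting KRB5_CONFIG has no effect on UserGroupInformation. The Java system property java.security.krb5.conf must be set instead.

Either of the following works:

(1) Pass a JVM option when starting the project: -Djava.security.krb5.conf=D:/xxx/xxx/krb5.conf

(2) Set it in code, before the first Kerberos login: System.setProperty("java.security.krb5.conf", "D:/xxx/xxx/krb5.conf")

If it is not set, the program cannot find the KDC and fails with an exception like the following: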

org.apache.hadoop.security.KerberosAuthException: failure to login: for principal: xxx@HADOOP.COM from keytab D:\xxx\xxx\xxx.keytab javax.security.auth.login.LoginException: null (68)

Caused by: javax.security.auth.login.LoginException: null (68)

Caused by: KrbException: null (68)

Caused by: KrbException: Identifier doesn't match expected value (906)
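To avoid running into this failure at login time, the in-code option can be wrapped in a check that fails fast when the file is missing. This is a minimal sketch using only the JDK. The class and method names (`Krb5ConfSetup`, `useKrb5Conf`) are made up for this example, and it should run before any Kerberos login is attempted.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper for illustration; not part of Hadoop. */
final class Krb5ConfSetup {
    /** Points the JVM at the given krb5.conf, rejecting paths that cannot be read. */
    static void useKrb5Conf(String location) {
        Path path = Paths.get(location);
        if (!Files.isRegularFile(path) || !Files.isReadable(path)) {
            throw new IllegalArgumentException("krb5.conf not readable: " + path);
        }
        // Same property the -Djava.security.krb5.conf JVM option would set.
        System.setProperty("java.security.krb5.conf", path.toAbsolutePath().toString());
    }

    public static void main(String[] args) {
        // The path is a placeholder taken from the command line.
        useKrb5Conf(args[0]);
        System.out.println(System.getProperty("java.security.krb5.conf"));
    }
}
```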

2. Configuring the Hadoop conf

Hadoop configuration (class org.apache.hadoop.conf.Configuration)

The documentation states explicitly that the contents of core-default.xml on the classpath are loaded by default.

Unless explicitly turned off, Hadoop by default specifies two resources, loaded in-order from the classpath:

core-default.xml: Read-only defaults for hadoop.

core-site.xml: Site-specific configuration for a given hadoop installation.

core-default.xml contains the Hadoop security setting hadoop.security.authentication, and UserGroupInformation uses this setting to determine whether Kerberos is enabled on the cluster.

The HADOOP_SECURITY_AUTHENTICATION constant is defined at:

org.apache.hadoop.fs.CommonConfigurationKeysPublic#HADOOP_SECURITY_AUTHENTICATION

    public static AuthenticationMethod getAuthenticationMethod(Configuration conf) {
        String value = conf.get(HADOOP_SECURITY_AUTHENTICATION, "simple");
        try {
            return Enum.valueOf(AuthenticationMethod.class,
                StringUtils.toUpperCase(value));
        } catch (IllegalArgumentException iae) {
            throw new IllegalArgumentException("Invalid attribute value for " +
                HADOOP_SECURITY_AUTHENTICATION + " of " + value);
        }
    }
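The practical consequence of the "simple" default is easier to see in isolation. Below is a JDK-only imitation of the lookup above. `AuthMethod` is a stand-in enum that models only two values and is not Hadoop's real AuthenticationMethod, and `resolve` is a hypothetical name.

```java
import java.util.Locale;

/** Illustrative imitation of the lookup; not Hadoop code. */
final class AuthMethodLookup {
    /** Stand-in for Hadoop's AuthenticationMethod; only two values are modelled. */
    enum AuthMethod { SIMPLE, KERBEROS }

    /** Maps the configured string to an enum value, defaulting to SIMPLE when unset. */
    static AuthMethod resolve(String configured) {
        String value = (configured == null) ? "simple" : configured;
        try {
            return Enum.valueOf(AuthMethod.class, value.toUpperCase(Locale.ENGLISH));
        } catch (IllegalArgumentException iae) {
            throw new IllegalArgumentException(
                "Invalid attribute value for hadoop.security.authentication of " + value);
        }
    }

    public static void main(String[] args) {
        System.out.println(resolve(null));       // unset: falls back to the default
        System.out.println(resolve("kerberos")); // value from a loaded core-site.xml
    }
}
```

If core-site.xml is never loaded, the value stays unset and the lookup falls back to SIMPLE, which is why Kerberos is silently skipped in that case.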

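So setting HADOOP_HOME or HADOOP_CONF_DIR as environment variables has no effect on UserGroupInformation.

Either core-site.xml must be placed on the classpath, or org.apache.hadoop.security.UserGroupInformation#setConfiguration must be called directly with a conf object that has already loaded core-site.xml.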
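A quick way to confirm the first option is to ask the class loader whether it can see core-site.xml. The sketch below uses only the JDK. `ClasspathCheck` and `locate` are hypothetical names, and using the thread context class loader is an assumption meant to approximate how Hadoop's Configuration resolves its default resources.

```java
import java.net.URL;

/** Hypothetical diagnostic for illustration; not part of Hadoop. */
final class ClasspathCheck {
    /** Returns the URL of a classpath resource, or null when it is not visible. */
    static URL locate(String resourceName) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            // Fall back to the loader of this class if no context loader is set.
            loader = ClasspathCheck.class.getClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        URL url = locate("core-site.xml");
        System.out.println(url == null
            ? "core-site.xml is NOT on the classpath"
            : "core-site.xml found at " + url);
    }
}
```

If this reports that the file is missing, the remaining choice is the explicit setConfiguration call described above.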

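3. UserGroupInformation authentication

3.1. Authentication with the native Apache UserGroupInformation:

UserGroupInformation keeps the Hadoop conf and the authenticated user in static fields, so the program only needs to authenticate once. Different classes do not have to pass the authenticated user around; each of them can simply fetch it from UserGroupInformation.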

    private static Configuration conf;
    private static UserGroupInformation loginUser = null;
    private static String keytabPrincipal = null;
    private static String keytabFile = null;

Calling org.apache.hadoop.security.UserGroupInformation#loginUserFromKeytab with the principal and the keytab path completes the authentication.
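For readers curious what a principal + keytab login looks like at the JAAS level, here is a rough JDK-only approximation. It is not Hadoop's actual implementation. `KeytabJaasConfig` is a hypothetical class, and the option set is simply the usual one for the JDK's Krb5LoginModule in keytab mode.

```java
import java.util.HashMap;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
import javax.security.auth.login.Configuration;

/** Hypothetical in-memory JAAS configuration for a keytab login; not Hadoop code. */
final class KeytabJaasConfig extends Configuration {
    private final String principal;
    private final String keytab;

    KeytabJaasConfig(String principal, String keytab) {
        this.principal = principal;
        this.keytab = keytab;
    }

    @Override
    public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
        Map<String, String> options = new HashMap<>();
        options.put("useKeyTab", "true");          // authenticate from a keytab
        options.put("keyTab", keytab);
        options.put("principal", principal);
        options.put("storeKey", "true");
        options.put("doNotPrompt", "true");        // never ask for a password
        options.put("refreshKrb5Config", "true");  // re-read krb5.conf before login
        return new AppConfigurationEntry[] {
            new AppConfigurationEntry(
                "com.sun.security.auth.module.Krb5LoginModule",
                LoginModuleControlFlag.REQUIRED,
                options)
        };
    }
}
```

A login attempt against a real KDC would then be `new javax.security.auth.login.LoginContext("sketch", null, null, new KeytabJaasConfig(principal, keytab)).login()`, where "sketch" is an arbitrary application name. In a Hadoop program the single loginUserFromKeytab call replaces all of this.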

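3.2. Authentication with the Cloudera-modified UserGroupInformation:

The native loginUserFromKeytab can of course still be called here as well.

The modification lets the Kerberos login happen implicitly through environment variables: no explicit UserGroupInformation login call is needed, and the login is performed automatically when getLoginUser is called.

The process is as follows:

The user is obtained through org.apache.hadoop.security.UserGroupInformation#getLoginUser: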

    public static UserGroupInformation getLoginUser() throws IOException {
        ...
        if (loginUser == null) {
            UserGroupInformation newLoginUser = createLoginUser(null);
            ...
        }
    }

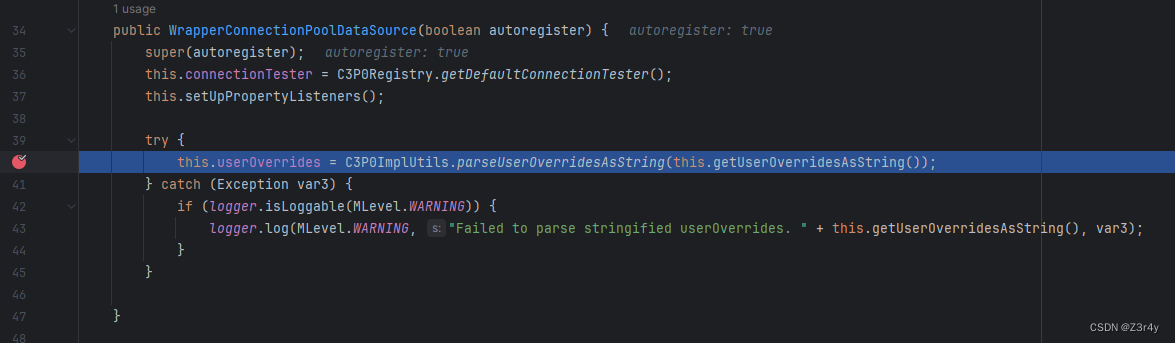

This actually ends up calling doSubjectLogin(null, null):

    UserGroupInformation createLoginUser(Subject subject) throws IOException {
        UserGroupInformation realUser = doSubjectLogin(subject, null);
        ...
    }

In the code below, the check subject == null && params == null evaluates to true:

    private static UserGroupInformation doSubjectLogin(
        Subject subject, LoginParams params) throws IOException {
        ensureInitialized();
        // initial default login.
        if (subject == null && params == null) {
            params = LoginParams.getDefaults();
        }
        HadoopConfiguration loginConf = new HadoopConfiguration(params);
        try {
            HadoopLoginContext login = newLoginContext(
                authenticationMethod.getLoginAppName(), subject, loginConf);
            login.login();
            ...
    }

The environment variables KRB5PRINCIPAL, KRB5KEYTAB and KRB5CCNAME are then read:

    private static class LoginParams extends EnumMap<LoginParam,String>
        implements Parameters {
        ...
        static LoginParams getDefaults() {
            LoginParams params = new LoginParams();
            params.put(LoginParam.PRINCIPAL, System.getenv("KRB5PRINCIPAL"));
            params.put(LoginParam.KEYTAB, System.getenv("KRB5KEYTAB"));
            params.put(LoginParam.CCACHE, System.getenv("KRB5CCNAME"));
            return params;
        }
    }

As a result, the login uses either the principal + keytab or the credentials cache specified through the environment variables.
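The choice between the two login styles can be sketched without Hadoop on the classpath. The helper below is hypothetical. Preferring the keytab pair over the cache when both are set is an assumption made for illustration, not something taken from the Hadoop source shown above.

```java
import java.util.Map;

/** Hypothetical illustration of choosing a login style from environment variables. */
final class DefaultLoginChoice {
    enum Mode { KEYTAB, TICKET_CACHE, NONE }

    /** Decides which credentials the given environment describes. */
    static Mode choose(Map<String, String> env) {
        String principal = env.get("KRB5PRINCIPAL");
        String keytab = env.get("KRB5KEYTAB");
        String ccache = env.get("KRB5CCNAME");
        // Assumption: a complete principal + keytab pair wins over a cache.
        if (principal != null && keytab != null) {
            return Mode.KEYTAB;
        }
        if (ccache != null) {
            return Mode.TICKET_CACHE;
        }
        return Mode.NONE;
    }

    public static void main(String[] args) {
        // Reports what the current process environment would select.
        System.out.println(choose(System.getenv()));
    }
}
```

Running it in the same shell or run configuration as the real program is a cheap way to check that the variables actually reach the JVM.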

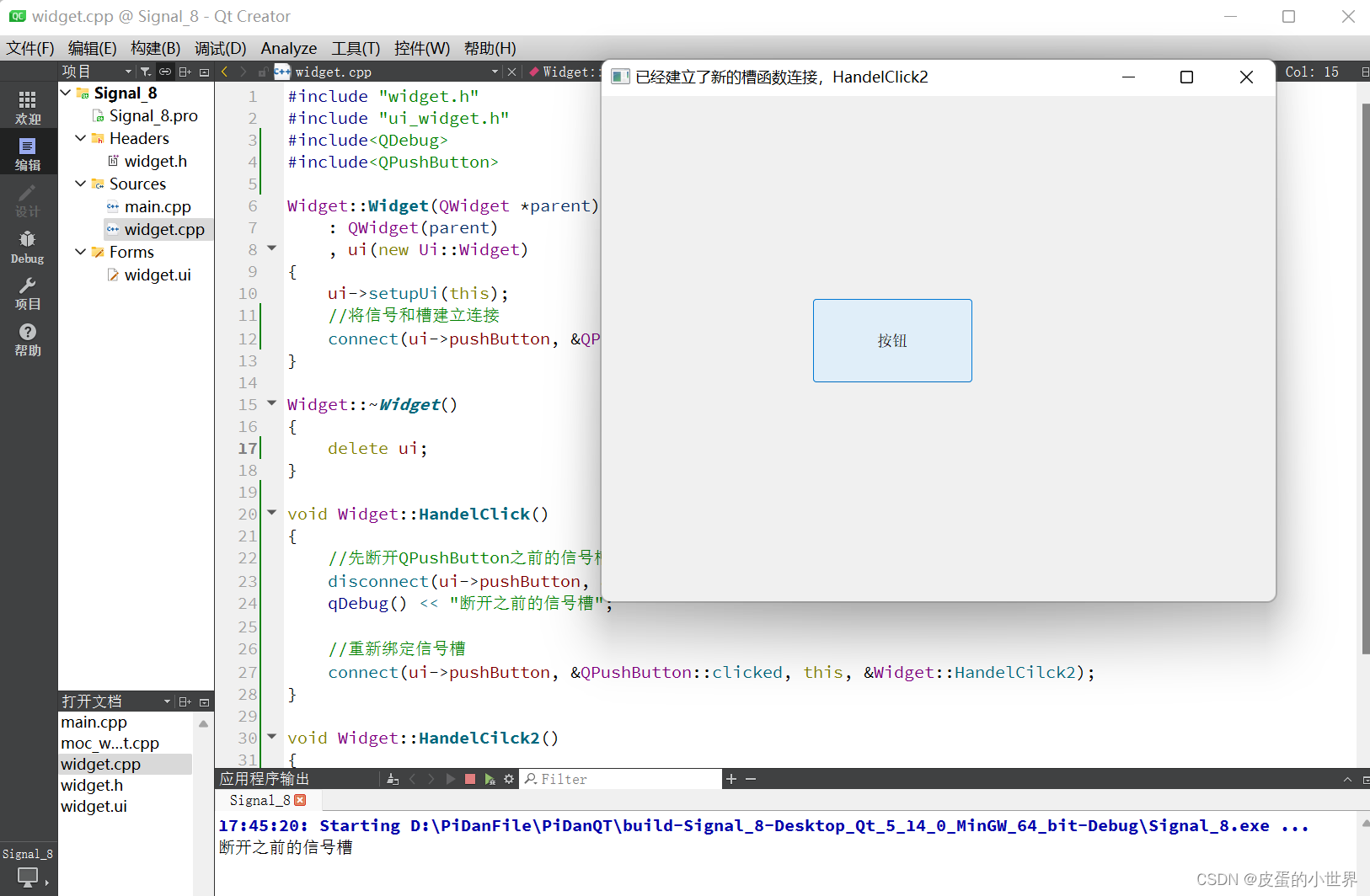

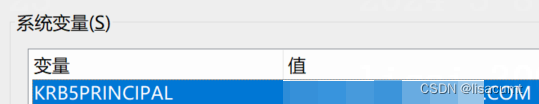

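Configuring the environment variables:

(1) Per program:

Set KRB5PRINCIPAL=xxx and KRB5KEYTAB=xxx, or KRB5CCNAME=xxx, in the environment of that program's process (for example in its launch script or IDE run configuration). Because getDefaults() reads them with System.getenv, passing them as JVM -D options does not work: -D sets system properties, not environment variables.

(2) System-wide defaults:

On Windows, add them as system environment variables.

On Linux, add the following to /etc/profile:

export KRB5PRINCIPAL=xxx KRB5KEYTAB=xxx, or export KRB5CCNAME=xxx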
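When one program launches another, the per-program variant can also be done from Java, since a child process gets its own environment. This is a small sketch under stated assumptions: `KerberosEnvLauncher` is a hypothetical class, the child is started with a plain `java` command found on the PATH, and the main class name is supplied by the caller.

```java
import java.util.Map;

/** Hypothetical launcher for illustration; not part of Hadoop. */
final class KerberosEnvLauncher {
    /** Builds, but does not start, a child JVM whose environment carries the login variables. */
    static ProcessBuilder withKeytabLogin(String principal, String keytab, String mainClass) {
        ProcessBuilder builder = new ProcessBuilder(
            "java", "-cp", System.getProperty("java.class.path"), mainClass);
        // environment() is a mutable copy of this process's environment.
        Map<String, String> env = builder.environment();
        env.put("KRB5PRINCIPAL", principal);
        env.put("KRB5KEYTAB", keytab);
        return builder.inheritIO();
    }
}
```

Calling `start()` on the returned builder runs the child, in which System.getenv("KRB5PRINCIPAL") and System.getenv("KRB5KEYTAB") return the values set here.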

With the configuration above in place, authentication succeeds, as the following INFO log line shows:

2024-03-08 17:28:11 [org.apache.hadoop.security.UserGroupInformation] INFO : Login successful for user xxx@HADOOP.COM using keytab file D:\xxx\xxx.keytab