1. Package References

Microsoft.ML.OnnxRuntime

OpenCvSharp

OpenCvSharp.Extensions

2. Face Detection

using System;

using System.Collections.Generic;

using System.Linq;

using Microsoft.ML.OnnxRuntime;

using Microsoft.ML.OnnxRuntime.Tensors;

using OpenCvSharp;

namespace FaceRecognition

{

class SCRFD

{

private readonly int[] feat_stride_fpn = new int[] { 8, 16, 32 };

private readonly float nms_threshold = 0.4f;

private Dictionary<string, List<Point>> anchor_centers;

private InferenceSession _onnxSession;

private readonly string input_name;

private readonly int[] input_dimensions;

public SCRFD(string model_path, SessionOptions options)

{

anchor_centers = new Dictionary<string, List<Point>>();

_onnxSession = new InferenceSession(model_path, options);

IReadOnlyDictionary<string, NodeMetadata> _onnx_inputs = _onnxSession.InputMetadata;

input_name = _onnx_inputs.Keys.ToList()[0];

input_dimensions = _onnx_inputs.Values.ToList()[0].Dimensions;

}

public List<PredictionBox> Detect(Mat image, float dete_threshold = 0.5f)

{

int iWidth = image.Width;

int iHeight = image.Height;

int height = input_dimensions[2];

int width = input_dimensions[3];

float rate = width / (float)height;

float iRate = iWidth / (float)iHeight;

if (rate > iRate)

{

iWidth = (int)(height * iRate);

iHeight = height;

}

else

{

iWidth = width;

iHeight = (int)(width / iRate);

}

float resize_Rate = image.Size().Height / (float)iHeight;

Tensor<float> input_tensor = new DenseTensor<float>(new[] { input_dimensions[0], input_dimensions[1], height, width });

OpenCvSharp.Mat dst = new Mat();

Cv2.Resize(image, dst, new OpenCvSharp.Size(iWidth, iHeight));

for (int y = 0; y < height; y++)

for (int x = 0; x < width; x++)

if (y < dst.Height && x < dst.Width)

{

Vec3b color = dst.Get<Vec3b>(y, x);

input_tensor[0, 0, y, x] = (color.Item2 - 127.5f) / 128f;

input_tensor[0, 1, y, x] = (color.Item1 - 127.5f) / 128f;

input_tensor[0, 2, y, x] = (color.Item0 - 127.5f) / 128f;

}

else

{

input_tensor[0, 0, y, x] = (0 - 127.5f) / 128f;

input_tensor[0, 1, y, x] = (0 - 127.5f) / 128f;

input_tensor[0, 2, y, x] = (0 - 127.5f) / 128f;

}

var container = new List<NamedOnnxValue>();

// Put input_tensor into the input container under the model's input name

container.Add(NamedOnnxValue.CreateFromTensor(input_name, input_tensor));

// Run inference and fetch the results

IDisposableReadOnlyCollection<DisposableNamedOnnxValue> results = _onnxSession.Run(container);

var resultsArray = results.ToArray();

List<PredictionBox> preds = new List<PredictionBox>();

for (int idx = 0; idx < feat_stride_fpn.Length; idx++)

{

int stride = feat_stride_fpn[idx];

DisposableNamedOnnxValue scores_nov = resultsArray[idx];

Tensor<float> scores_tensor = (Tensor<float>)scores_nov.Value;

Tensor<float> bboxs_tensor = (Tensor<float>)resultsArray[idx + 3 * 1].Value;

Tensor<float> kpss_tensor = (Tensor<float>)resultsArray[idx + 3 * 2].Value;

int sHeight = height / stride, sWidth = width / stride;

for (int i = 0; i < scores_tensor.Dimensions[1]; i++)

{

float score = scores_tensor[0, i, 0];

if (score <= dete_threshold)

continue;

// Anchor centers are cached per (height, width, stride) feature map

string key_anchor_center = String.Format("{0}-{1}-{2}", sHeight, sWidth, stride);

List<Point> anchor_center;

if (!anchor_centers.TryGetValue(key_anchor_center, out anchor_center))

{

anchor_center = new List<Point>();

for (int h = 0; h < sHeight; h++)

for (int w = 0; w < sWidth; w++)

{

// Each location is added twice on purpose: one entry per anchor at that location

anchor_center.Add(new Point(w * stride, h * stride));

anchor_center.Add(new Point(w * stride, h * stride));

}

anchor_centers.Add(key_anchor_center, anchor_center);

}

float[] box = new float[bboxs_tensor.Dimensions[2]];

for (int b = 0; b < bboxs_tensor.Dimensions[2]; b++)

box[b] = bboxs_tensor[0, i, b] * stride;

float[] kps = new float[kpss_tensor.Dimensions[2]];

for (int k = 0; k < kpss_tensor.Dimensions[2]; k++)

kps[k] = kpss_tensor[0, i, k] * stride;

box = Distance2Box(box, anchor_center[i], resize_Rate);

kps = Distance2Point(kps, anchor_center[i], resize_Rate);

preds.Add(new PredictionBox(score, box[0], box[1], box[2], box[3], kps));

}

}

if (preds.Count == 0)

return preds;

preds = preds.OrderByDescending(a => a.Score).ToList();

return NMS(preds, nms_threshold);

}

public static List<PredictionBox> NMS(List<PredictionBox> predictions, float nms_threshold)

{

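// Greedy NMS: expects predictions sorted by descending score, and empties the list it is given

List<PredictionBox> final_predictions = new List<PredictionBox>();

while (predictions.Count > 0)

{

// Keep the highest-scoring remaining box

PredictionBox pb = predictions[0];

predictions.RemoveAt(0);

final_predictions.Add(pb);

int idx = 0;

// Drop every remaining box that overlaps the kept one by more than the threshold

while (idx < predictions.Count)

{

if (ComputeIOU(pb, predictions[idx]) > nms_threshold)

predictions.RemoveAt(idx);

else

idx++;

}

}

return final_predictions;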

}

private static float ComputeIOU(PredictionBox PB1, PredictionBox PB2)

{

float ay1 = PB1.BoxTop;

float ax1 = PB1.BoxLeft;

float ay2 = PB1.BoxBottom;

float ax2 = PB1.BoxRight;

float by1 = PB2.BoxTop;

float bx1 = PB2.BoxLeft;

float by2 = PB2.BoxBottom;

float bx2 = PB2.BoxRight;

float x_left = Math.Max(ax1, bx1);

float y_top = Math.Max(ay1, by1);

float x_right = Math.Min(ax2, bx2);

float y_bottom = Math.Min(ay2, by2);

if (x_right < x_left || y_bottom < y_top)

return 0;

float intersection_area = (x_right - x_left) * (y_bottom - y_top);

float bb1_area = (ax2 - ax1) * (ay2 - ay1);

float bb2_area = (bx2 - bx1) * (by2 - by1);

float iou = intersection_area / (bb1_area + bb2_area - intersection_area);

return iou;

}

private static float[] Distance2Box(float[] distance, Point anchor_center, float rate)

{

distance[0] = -distance[0];

distance[1] = -distance[1];

return Distance2Point(distance, anchor_center, rate);

}

private static float[] Distance2Point(float[] distance, Point anchor_center, float rate)

{

for (int i = 0; i < distance.Length; i = i + 2)

{

distance[i] = (anchor_center.X + distance[i]) * rate;

distance[i + 1] = (anchor_center.Y + distance[i + 1]) * rate;

}

return distance;

}

}

class PredictionBox

{

private readonly float score;

private readonly float boxLeft;

private readonly float boxRight;

private readonly float boxBottom;

private readonly float boxTop;

private readonly float[] landmark;

public PredictionBox(float score, float boxLeft, float boxTop, float boxRight, float boxBottom, float[] landmark)

{

this.score = score;

this.boxLeft = boxLeft;

this.boxRight = boxRight;

this.boxBottom = boxBottom;

this.boxTop = boxTop;

this.landmark = landmark;

}

public float Score => score;

public float BoxLeft => boxLeft;

public float BoxRight => boxRight;

public float BoxBottom => boxBottom;

public float BoxTop => boxTop;

public float[] Landmark => landmark;

}

}
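The decoding done by Distance2Box and Distance2Point, and the overlap measure used by NMS, can be written out as equations. This is only a restatement of the listing above. The symbols are chosen here for illustration: $(c_x, c_y)$ is the anchor center, $s$ the stride, $d_0,\dots,d_3$ the raw box outputs, $k_{2j}, k_{2j+1}$ the raw outputs for keypoint $j$, and $r$ is resize_Rate (original height divided by resized height).

```latex
\begin{aligned}
x_1 &= r\,(c_x - s\,d_0), & y_1 &= r\,(c_y - s\,d_1),\\
x_2 &= r\,(c_x + s\,d_2), & y_2 &= r\,(c_y + s\,d_3),\\
p^{x}_{j} &= r\,(c_x + s\,k_{2j}), & p^{y}_{j} &= r\,(c_y + s\,k_{2j+1}),\\[4pt]
\mathrm{IoU}(A,B) &= \frac{|A \cap B|}{|A| + |B| - |A \cap B|}.
\end{aligned}
```

A box is discarded by NMS when its IoU with an already kept, higher-scoring box exceeds nms_threshold (0.4 in the listing).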

3. Face Alignment

using OpenCvSharp;

namespace FaceRecognition

{

class FaceAlign

{

static public Mat Align(Mat image, float[] landmarks, int width, int height)

{

// Keypoints of the standard (reference) face

float[,] std = { { 38.2946f, 51.6963f }, { 73.5318f, 51.5014f }, { 56.0252f, 71.7366f }, { 41.5493f, 92.3655f }, { 70.7299f, 92.2041f } };

Mat S = new Mat(5, 2, MatType.CV_32FC1, std);

Mat Q = Mat.Zeros(10, 4, MatType.CV_32FC1);

Mat S1 = S.Reshape(0, 10);

for (int i = 0; i < 5; i++)

{

Q.At<float>(i * 2 + 0, 0) = landmarks[i * 2];

Q.At<float>(i * 2 + 0, 1) = landmarks[i * 2 + 1];

Q.At<float>(i * 2 + 0, 2) = 1;

Q.At<float>(i * 2 + 0, 3) = 0;

Q.At<float>(i * 2 + 1, 0) = landmarks[i * 2 + 1];

Q.At<float>(i * 2 + 1, 1) = -landmarks[i * 2];

Q.At<float>(i * 2 + 1, 2) = 0;

Q.At<float>(i * 2 + 1, 3) = 1;

}

Mat MM = (Q.T() * Q).Inv() * Q.T() * S1;

// Assemble the 2x3 affine matrix [a, b, tx; -b, a, ty] from the solved parameters

Mat M = new Mat(2, 3, MatType.CV_32FC1, new float[,] { { MM.At<float>(0, 0), MM.At<float>(1, 0), MM.At<float>(2, 0) }, { -MM.At<float>(1, 0), MM.At<float>(0, 0), MM.At<float>(3, 0) } });

OpenCvSharp.Mat dst = new Mat();

Cv2.WarpAffine(image, dst, M, new OpenCvSharp.Size(width, height));

return dst;

}

}

}
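The matrix algebra in Align is a least-squares fit of a similarity transform (rotation, uniform scale, translation). The derivation below is reconstructed from the listing and is not taken from the original post. The symbols are assumptions made for illustration: $(x_i, y_i)$ are the detected landmarks, $(u_i, v_i)$ the standard-face keypoints, and $p = (a, b, t_x, t_y)^{\top}$ the unknown parameters.

```latex
\begin{aligned}
u_i &= a\,x_i + b\,y_i + t_x,\\
v_i &= -b\,x_i + a\,y_i + t_y,
\end{aligned}
\qquad
\underbrace{\begin{bmatrix}
x_i & y_i & 1 & 0\\
y_i & -x_i & 0 & 1
\end{bmatrix}}_{\text{two rows of } Q}
\begin{bmatrix} a\\ b\\ t_x\\ t_y \end{bmatrix}
=
\begin{bmatrix} u_i\\ v_i \end{bmatrix},
\qquad
p = (Q^{\top}Q)^{-1}Q^{\top}S_1,
\qquad
M = \begin{bmatrix} a & b & t_x\\ -b & a & t_y \end{bmatrix}.
```

Stacking the two rows for all five landmarks gives the 10x4 matrix Q and the 10x1 vector S1 from the code, MM holds the solved $p$, and M is the matrix passed to Cv2.WarpAffine.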

4. Feature Extraction

using System;

using System.Collections.Generic;

using System.Linq;

using Microsoft.ML.OnnxRuntime;

using Microsoft.ML.OnnxRuntime.Tensors;

using OpenCvSharp;

namespace FaceRecognition

{

class ArcFace

{

private InferenceSession _onnxSession;

private readonly string input_name;

private readonly int[] input_dimensions;

public ArcFace(string model_path, SessionOptions options)

{

_onnxSession = new InferenceSession(model_path, options);

IReadOnlyDictionary<string, NodeMetadata> _onnx_inputs = _onnxSession.InputMetadata;

input_name = _onnx_inputs.Keys.ToList()[0];

input_dimensions = _onnx_inputs.Values.ToList()[0].Dimensions;

}

public float[] Extract(Mat image, float[] landmarks)

{

image = FaceAlign.Align(image, landmarks, 112, 112);

Tensor<float> input_tensor = new DenseTensor<float>(new[] { 1, input_dimensions[1], input_dimensions[2], input_dimensions[3] });

for (int y = 0; y < image.Height; y++)

{

for (int x = 0; x < image.Width; x++)

{

Vec3b color = image.Get<Vec3b>(y, x);

input_tensor[0, 0, y, x] = (color.Item2 - 127.5f) / 127.5f;

input_tensor[0, 1, y, x] = (color.Item1 - 127.5f) / 127.5f;

input_tensor[0, 2, y, x] = (color.Item0 - 127.5f) / 127.5f;

}

}

var container = new List<NamedOnnxValue>();

// Put input_tensor into the input container under the model's input name

container.Add(NamedOnnxValue.CreateFromTensor(input_name, input_tensor));

// Run inference and fetch the results

IDisposableReadOnlyCollection<DisposableNamedOnnxValue> results = _onnxSession.Run(container);

var resultsArray = results.ToArray();

DisposableNamedOnnxValue nov = resultsArray[0];

Tensor<float> tensor = (Tensor<float>)nov.Value;

float[] embedding = new float[tensor.Length];

double l2 = 0;

for (int i = 0; i < tensor.Length; i++)

{

embedding[i] = tensor[0, i];

l2 = l2 + Math.Pow((double)tensor[0, i], 2);

}

l2 = Math.Sqrt(l2);

for (int i = 0; i < embedding.Length; i++)

embedding[i] = embedding[i] / (float)l2;

return embedding;

}

}

}

5. Face Recognition

using System;

using System.Collections.Generic;

using System.IO;

using Microsoft.ML.OnnxRuntime;

using OpenCvSharp;

namespace FaceRecognition

{

class FaceRecognize

{

private SCRFD detector;

private ArcFace recognizer;

private readonly float reco_threshold;

private readonly float dete_threshold;

private Dictionary<string, float[]> faces_embedding;

public FaceRecognize(string dete_model, string reco_model, int ctx_id = 0, float dete_threshold = 0.50f, float reco_threshold = 1.24f)

{

SessionOptions options = new SessionOptions();

options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;

if (ctx_id >= 0)

options.AppendExecutionProvider_DML(ctx_id); // Use DirectML acceleration (needs a DirectML-enabled ONNX Runtime build)

else

options.AppendExecutionProvider_CPU(0); // Run on the CPU

detector = new SCRFD(dete_model, options);

recognizer = new ArcFace(reco_model, options);

faces_embedding = new Dictionary<string, float[]>();

this.reco_threshold = reco_threshold;

this.dete_threshold = dete_threshold;

}

public void LoadFaces(string face_db_path)

{

if (!Directory.Exists(face_db_path))

Directory.CreateDirectory(face_db_path);

foreach (string fileName in Directory.GetFiles(face_db_path))

{

Mat image = Cv2.ImRead(fileName);

// Skip files that OpenCV cannot decode as an image

if (image.Empty())

continue;

Register(image, System.IO.Path.GetFileNameWithoutExtension(fileName));

}

}

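// Returns 0 on success, 1 if no face is detected, 2 if more than one face is detected, 3 if the face matches one that is already registered

public int Register(Mat image, string user_id)

{

List<PredictionBox> pbs = detector.Detect(image, dete_threshold);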

if (pbs.Count == 0)

return 1;

if (pbs.Count > 1)

return 2;

float[] embedding = recognizer.Extract(image, pbs[0].Landmark);

foreach(float[] face in faces_embedding.Values)

if (Compare(embedding, face) < reco_threshold)

return 3;

faces_embedding.Add(user_id, embedding);

return 0;

}

public Dictionary<string, PredictionBox> Recognize(System.Drawing.Bitmap bitmap)

{

Mat image = OpenCvSharp.Extensions.BitmapConverter.ToMat(bitmap);

Dictionary<string, PredictionBox> results = new Dictionary<string, PredictionBox>();

List<PredictionBox> pbs = detector.Detect(image, dete_threshold);

foreach (PredictionBox pb in pbs)

{

float[] embedding = recognizer.Extract(image, pb.Landmark);

foreach(string user_id in faces_embedding.Keys)

{

float[] face = faces_embedding[user_id];

if (Compare(embedding, face) < reco_threshold)

results[user_id] = pb; // The indexer avoids an ArgumentException when two detected faces match the same user

}

}

return results;

}

// Squared Euclidean distance between two embeddings; smaller means more similar

public static double Compare(float[] faceA, float[] faceB)

{

double result = 0;

for (int i = 0; i < faceA.Length; i++)

result = result + Math.Pow(faceA[i] - faceB[i], 2);

return result;

}

}

}
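Compare returns a squared Euclidean distance, and ArcFace.Extract L2-normalizes every embedding. For unit vectors the squared distance equals 2 - 2cos(theta), so the default reco_threshold of 1.24 is equivalent to requiring a cosine similarity above 0.38. The sketch below checks that relation on toy vectors. The class DistanceDemo and all of its members are hypothetical names introduced for illustration and are not part of the original code.

```csharp
using System;

public static class DistanceDemo
{
    // Squared Euclidean distance, the same quantity FaceRecognize.Compare returns
    public static double SquaredDistance(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    // Dot product; for unit vectors this is the cosine similarity
    public static double Dot(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
            sum += (double)a[i] * b[i];
        return sum;
    }

    // Scale a vector to unit length, as ArcFace.Extract does with the embedding
    public static float[] Normalize(float[] v)
    {
        double norm = Math.Sqrt(Dot(v, v));
        float[] result = new float[v.Length];
        for (int i = 0; i < v.Length; i++)
            result[i] = (float)(v[i] / norm);
        return result;
    }

    // Cosine similarity equivalent to a squared-distance threshold (valid only for unit vectors)
    public static double ToCosineThreshold(double squaredDistanceThreshold)
    {
        return 1.0 - squaredDistanceThreshold / 2.0;
    }

    public static void Main()
    {
        // Toy 3-dimensional vectors standing in for real embeddings
        float[] a = Normalize(new float[] { 1f, 2f, 2f });
        float[] b = Normalize(new float[] { 2f, 1f, 2f });

        Console.WriteLine(SquaredDistance(a, b)); // squared distance
        Console.WriteLine(2 - 2 * Dot(a, b));     // 2 - 2 * cosine similarity
        Console.WriteLine(ToCosineThreshold(1.24));
    }
}
```

The first two printed values should agree up to floating-point rounding. The conversion holds only because both embeddings have unit length, so it does not apply to raw, unnormalized model outputs.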

6. Example

using System.Collections.Generic;

using System.Drawing;

namespace FaceRecognition

{

static class Program

{

static void Main()

{

string dete_model = @"X:\xxx\scrfd_10g_bnkps_shape640x640.onnx";

string reco_model = @"X:\xxx\w600k_r50.onnx";

string face_db_path = @"X:\xxx\face_db";

FaceRecognize face_reco = new FaceRecognize(dete_model, reco_model, -1);

face_reco.LoadFaces(face_db_path);

string image_name = @"X:\xxx\test.jpg";

System.Drawing.Bitmap bitmap = new System.Drawing.Bitmap(image_name);

Dictionary<string, PredictionBox> results = face_reco.Recognize(bitmap);

foreach (string user_id in results.Keys)

{

PredictionBox pb = results[user_id];

float w = 2f; // Border width

using (Graphics g = Graphics.FromImage(bitmap))

{

// Label the face with the matched user ID just above the box

using (Font font = new Font("Arial", 10))

using (SolidBrush brush = new SolidBrush(System.Drawing.Color.Blue))

{

g.DrawString(user_id, font, brush, (int)pb.BoxLeft, (int)(pb.BoxTop - 16));

}

// Draw the bounding box

using (Pen pen = new Pen(System.Drawing.Color.Red, w))

{

g.DrawRectangle(pen, new System.Drawing.Rectangle((int)pb.BoxLeft, (int)pb.BoxTop, (int)(pb.BoxRight - pb.BoxLeft), (int)(pb.BoxBottom - pb.BoxTop)));

}

// Mark each landmark with a small circle

using (Pen pen = new Pen(System.Drawing.Color.Tomato, 2f))

{

for (int i = 0; i < pb.Landmark.Length; i = i + 2)

g.DrawEllipse(pen, new System.Drawing.Rectangle((int)pb.Landmark[i] - 1, (int)pb.Landmark[i + 1] - 1, 2, 2));

}

}

}

bitmap.Save(@"X:\xxx\result.bmp");

bitmap.Dispose();

}

}

}

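7. Reflections

The SCRFD and ArcFace models used in this article were trained by the insightface project. Initially, SCRFD.Detect resized the image with the Resize method from SixLabors.ImageSharp, and face alignment computed the keypoint positions and then processed the image with SixLabors.ImageSharp as well (the code is shown below).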

using SixLabors.ImageSharp;

using SixLabors.ImageSharp.PixelFormats;

using SixLabors.ImageSharp.Processing;

namespace FaceRecognition

{

class SCRFD

{

private static Image<Rgb24> Resize(Image<Rgb24> image, SixLabors.ImageSharp.Size size)

{

image.Mutate(x => x

.AutoOrient()

.Resize(new ResizeOptions

{

Mode = ResizeMode.BoxPad,

Position = AnchorPositionMode.TopLeft,

Size = size

})

.BackgroundColor(Color.Black));

return image;

}

}

}

using System;

using SixLabors.ImageSharp;

using SixLabors.ImageSharp.PixelFormats;

using SixLabors.ImageSharp.Processing;

namespace FaceRecognition

{

class FaceAlign

{

static public Image<Rgb24> Align(Image<Rgb24> image, float[] landmarks, int width, int height)

{

float dy = landmarks[3] - landmarks[1], dx = landmarks[2] - landmarks[0];

double angle = Math.Atan2(dy, dx) * 180f / Math.PI;

float[] eye_center = new float[] { (landmarks[0] + landmarks[2]) / 2, (landmarks[1] + landmarks[3]) / 2 };

float[] lip_center = new float[] { (landmarks[6] + landmarks[8]) / 2, (landmarks[7] + landmarks[9]) / 2 };

float dis = (float)Math.Sqrt(Math.Pow(eye_center[0] - lip_center[0], 2) + Math.Pow(eye_center[1] - lip_center[1], 2)) / 0.35f;

int bottom = (int)Math.Round(dis * 0.65, MidpointRounding.AwayFromZero);

int top = (int)Math.Round(dis - bottom, MidpointRounding.AwayFromZero);

int left = (int)Math.Round(width * dis / height / 2, MidpointRounding.AwayFromZero);

int[] center = new int[] { (int)Math.Round(eye_center[0], MidpointRounding.AwayFromZero), (int)Math.Round(eye_center[1], MidpointRounding.AwayFromZero) };

int x1 = Math.Max(center[0] - 2 * bottom, 0), y1 = Math.Max(center[1] - 2 * bottom, 0);

int x2 = Math.Min(center[0] + 2 * bottom, image.Width - 1), y2 = Math.Min(center[1] + 2 * bottom, image.Height - 1);

image.Mutate(img => img.Crop(new Rectangle(x1, y1, x2 - x1 + 1, y2 - y1 + 1)));

int i_size = 4 * bottom + 1;

var newImage = new Image<Rgb24>(i_size, i_size, backgroundColor: Color.White);

newImage.Mutate(img =>

{

img.DrawImage(image, location: new SixLabors.ImageSharp.Point(2 * bottom - (center[0] - x1), 2 * bottom - (center[1] - y1)), opacity: 1);

img.Rotate((float)(0 - angle));

img.Crop(new Rectangle(newImage.Width / 2 - left, newImage.Height / 2 - top, left * 2, top + bottom));

img.Resize(width, height);

});

return newImage;

}

}

}

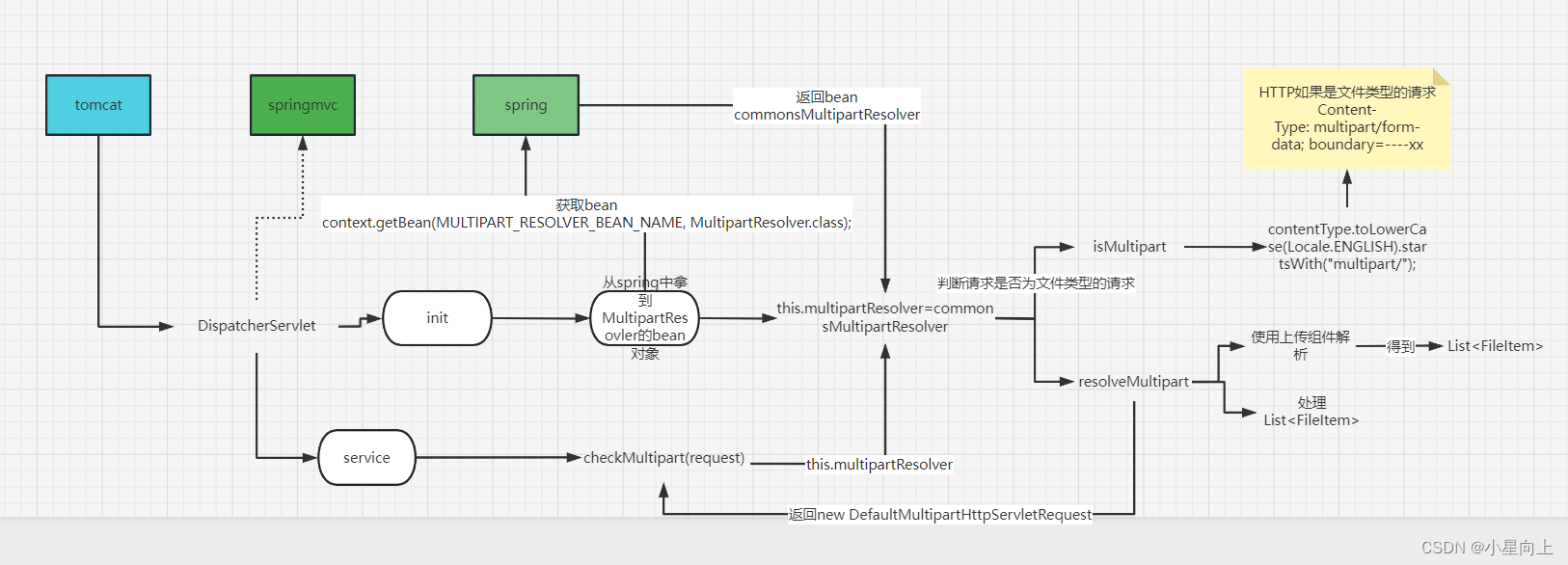

这样提取出来的人脸特征比对结果没有insightface原Python版的效果好,表现是同一个人的两张不同图片FaceRecognize.Compare值较大。如下图两张图片:

后面把图片Resize改用OpenCvSharp,人脸对齐也用OpenCvSharp处理,比对结果就和原版相差无几。造成这种原因可能是原版用OpenCv处理图片的,所以原版训练出来的模型和OpenCv处理图片更适合。

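Notes:

- SCRFD model download: https://onedrive.live.com/redir?resid=4A83B6B633B029CC!5543&authkey=!ACwXX1RtoJZotbE&e=F6i5Vm (the original post also links an alternative download).

- ArcFace model download: https://drive.google.com/file/d/1qXsQJ8ZT42_xSmWIYy85IcidpiZudOCB/view?usp=sharing (the original post also links an alternative download).

- Face alignment algorithm reference: https://zhuanlan.zhihu.com/p/393037076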